A Deep Learning-Based Preprocessing Method for Single Interferometric Fringe Patterns

Abstract

1. Introduction

2. Method

2.1. Interference Fringe Model

2.2. The DN-U-NET Network Model

2.3. Dataset and Environment Configuration

2.4. Evaluation Indicators

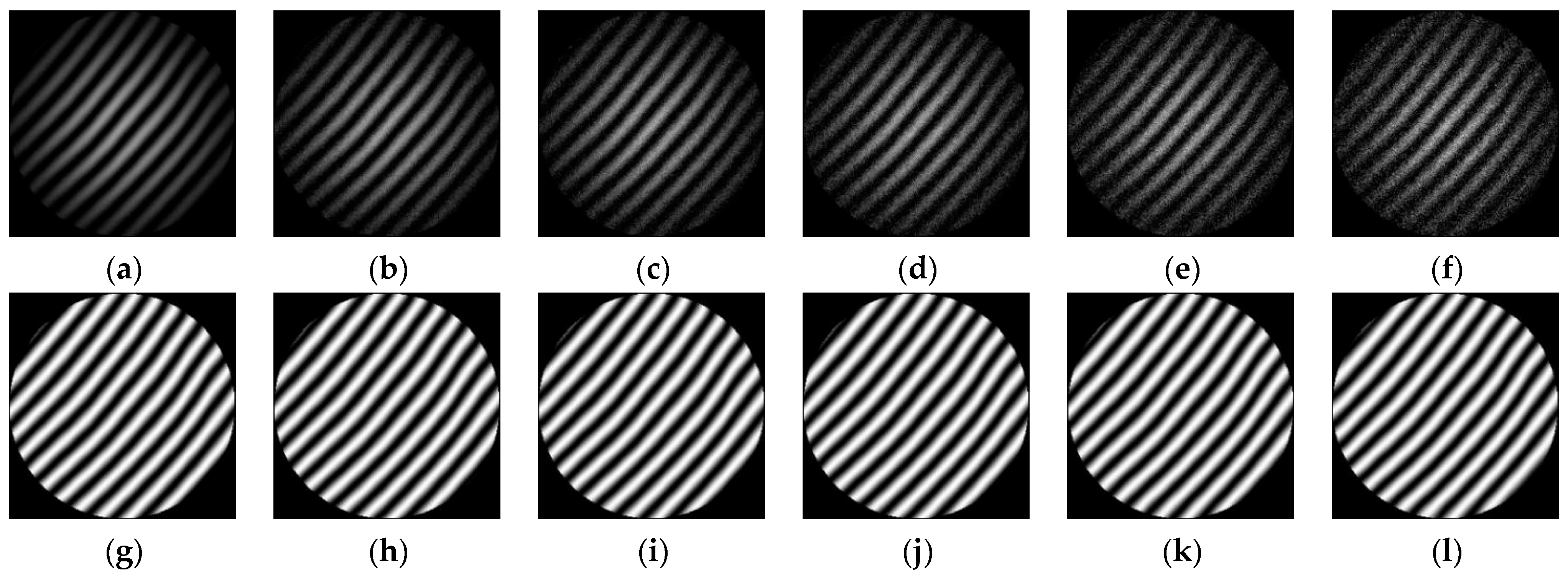

3. Simulation and Analysis

4. Experimental Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Zernike coefficient | a1 | a2–a3 | a4–a6 | a7–a36 |
|---|---|---|---|---|
| Value range (λ) | 0 | [−3, 3] | [−0.1, 0.1] | [−0.01, 0.01] |
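As a minimal sketch of how the coefficient ranges in the table above could be turned into random wavefront parameters, the snippet below draws one set of 36 Zernike coefficients. The uniform distribution, the function name `sample_zernike_coefficients`, and the seed are assumptions made for illustration; the table only gives the ranges, not the sampling scheme.

```python
import random

# Coefficient ranges in units of the wavelength, copied from the table above.
# Keys are 1-based (first, last) index ranges covering a1..a36.
ZERNIKE_RANGES = {
    (1, 1): (0.0, 0.0),
    (2, 3): (-3.0, 3.0),
    (4, 6): (-0.1, 0.1),
    (7, 36): (-0.01, 0.01),
}


def sample_zernike_coefficients(rng):
    """Draw 36 coefficients, one per Zernike term.

    Assumption: each coefficient is uniform within its range. The source
    table does not state the distribution.
    """
    coeffs = []
    for (first, last), (low, high) in ZERNIKE_RANGES.items():
        for _ in range(first, last + 1):
            coeffs.append(rng.uniform(low, high))
    return coeffs


rng = random.Random(0)  # hypothetical seed, only for reproducibility
coeffs = sample_zernike_coefficients(rng)
print(len(coeffs), coeffs[0])
```

Keeping the ranges in a single dictionary makes it straightforward to widen or narrow one group of terms without touching the sampling loop.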

| Method | RMSE (Before) | RMSE (After) | PSNR (Before) | PSNR (After) | SSIM (Before) | SSIM (After) | ENL (Label) | ENL (Before) | ENL (After) |
|---|---|---|---|---|---|---|---|---|---|
| U-NET | 96.0986 | 4.6914 | 8.4765 | 34.7048 | 0.3857 | 0.9888 | 1.0845 | 0.7979 | 1.0728 |
| U-NET++ | 96.0986 | 5.0276 | 8.4765 | 34.1035 | 0.3857 | 0.9869 | 1.0845 | 0.7979 | 1.0783 |
| R2_U-NET | 96.0986 | 4.5779 | 8.4765 | 34.9174 | 0.3857 | 0.9895 | 1.0845 | 0.7979 | 1.0780 |
| Attention_U-NET | 96.0986 | 4.5777 | 8.4765 | 34.9178 | 0.3857 | 0.9895 | 1.0845 | 0.7979 | 1.0779 |
| U-NET3+ | 96.0986 | 4.9441 | 8.4765 | 34.2490 | 0.3857 | 0.9873 | 1.0845 | 0.7979 | 1.0789 |
| DN-U-NET | 96.0986 | 4.3928 | 8.4765 | 35.2760 | 0.3857 | 0.9897 | 1.0845 | 0.7979 | 1.0799 |
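The RMSE and PSNR columns in the table above appear to be linked by the usual relation PSNR = 20·log10(peak/RMSE) with a peak grey level of 255. This is an observation from the tabulated numbers, not a statement of the authors' definition. A minimal sketch, with illustrative function names, that reproduces two of the listed PSNR values from their RMSE values:

```python
import math


def rmse(reference, estimate):
    """Root-mean-square error between two equally sized grey-level sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    return math.sqrt(squared / len(reference))


def psnr_from_rmse(rmse_value, peak=255.0):
    """PSNR in dB. Assumption: 8-bit images, so the peak value is 255."""
    return 20.0 * math.log10(peak / rmse_value)


# RMSE values taken from the table (U-NET before and after processing).
psnr_before = psnr_from_rmse(96.0986)
psnr_after = psnr_from_rmse(4.6914)
print(round(psnr_before, 4), round(psnr_after, 4))
```

Because PSNR is a monotonic function of RMSE under this relation, the two columns always rank the methods in the same order; SSIM and ENL carry the independent information.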

| Noise level | RMSE (Before) | RMSE (After) | PSNR (Before) | PSNR (After) | SSIM (Before) | SSIM (After) | ENL (Label) | ENL (Before) | ENL (After) |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 94.6761 | 3.7286 | 8.6060 | 36.6999 | 0.4665 | 0.9880 | 1.0845 | 0.8186 | 1.0670 |
| 0.05 | 95.1219 | 3.7145 | 8.5652 | 36.7329 | 0.4459 | 0.9907 | 1.0845 | 0.8361 | 1.0701 |
| 0.07 | 95.4267 | 3.0072 | 8.5374 | 38.5675 | 0.4210 | 0.9935 | 1.0845 | 0.8209 | 1.0735 |
| 0.09 | 95.8054 | 4.1439 | 8.5030 | 35.7826 | 0.3968 | 0.9895 | 1.0845 | 0.8059 | 1.0785 |
| 0.12 | 96.4116 | 3.8796 | 8.4482 | 36.3551 | 0.3678 | 0.9910 | 1.0845 | 0.7723 | 1.0866 |
| 0.15 | 96.9670 | 6.2108 | 8.3983 | 32.2678 | 0.3428 | 0.9800 | 1.0845 | 0.7351 | 1.1135 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, X.; Zhang, D.; Hao, Y.; Liu, B.; Wang, H.; Tian, A. A Deep Learning-Based Preprocessing Method for Single Interferometric Fringe Patterns. Photonics 2024, 11, 226. https://doi.org/10.3390/photonics11030226
