A Segment-Based Algorithm for Grid Junction Corner Detection Used in Stereo Microscope Camera Calibration

Abstract

1. Introduction

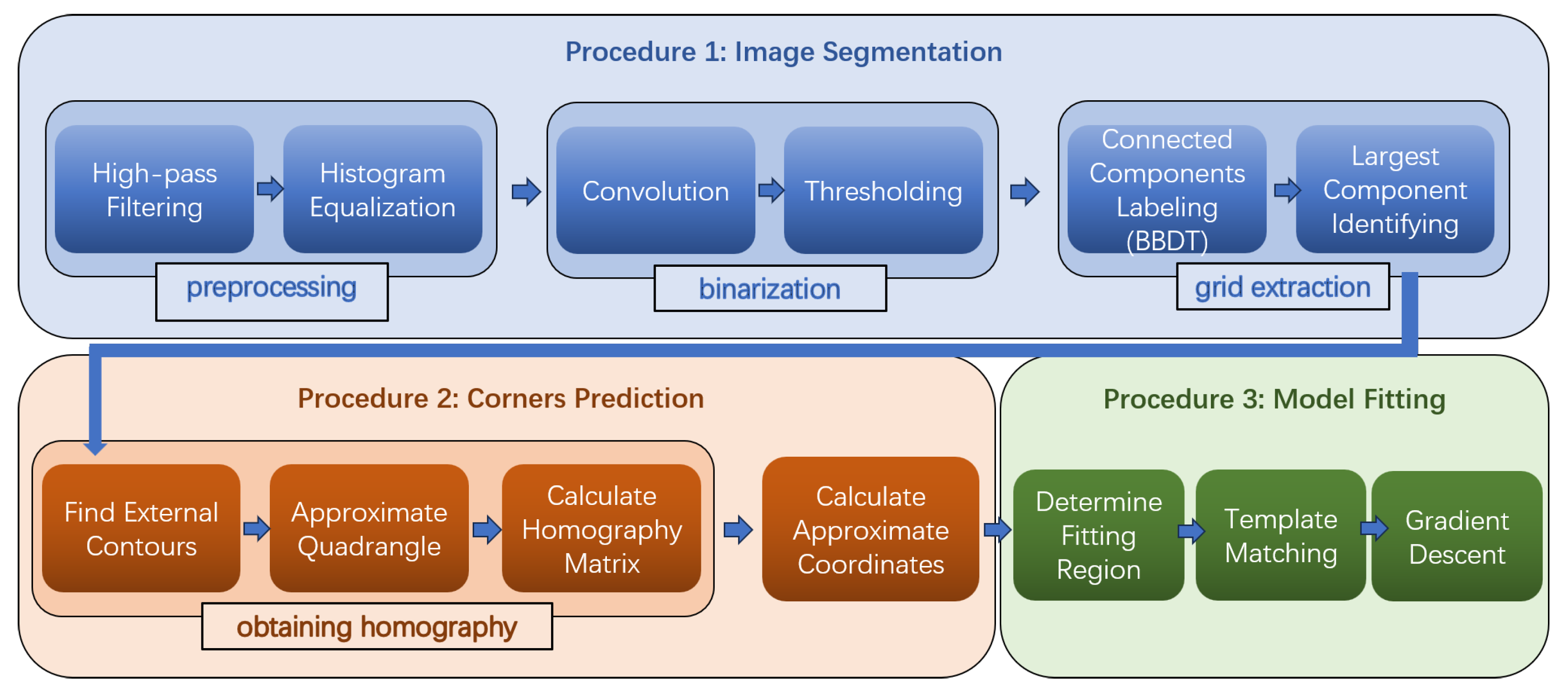

2. Methodology

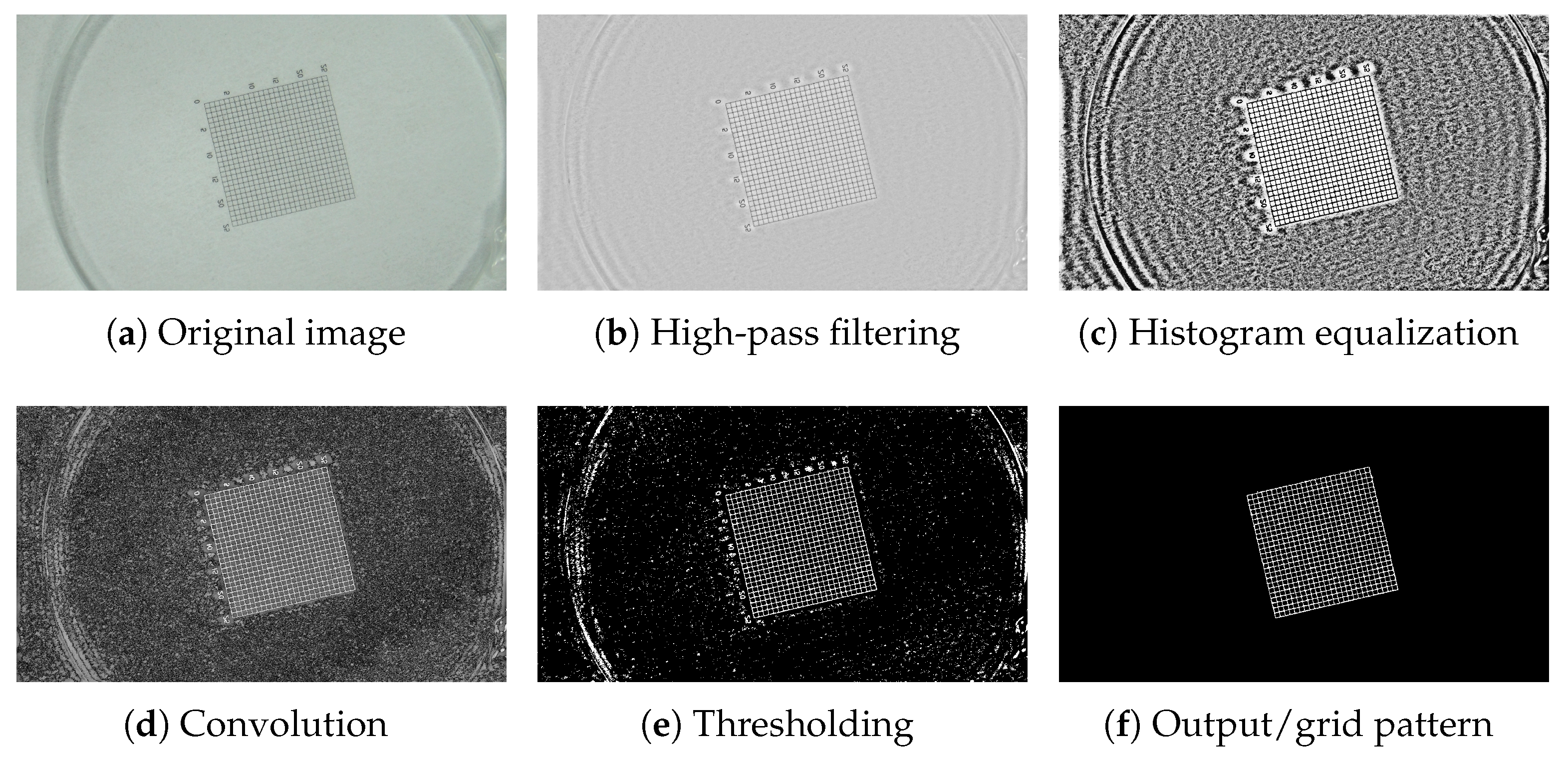

2.1. Segmentation Procedure

2.2. Corner Prediction

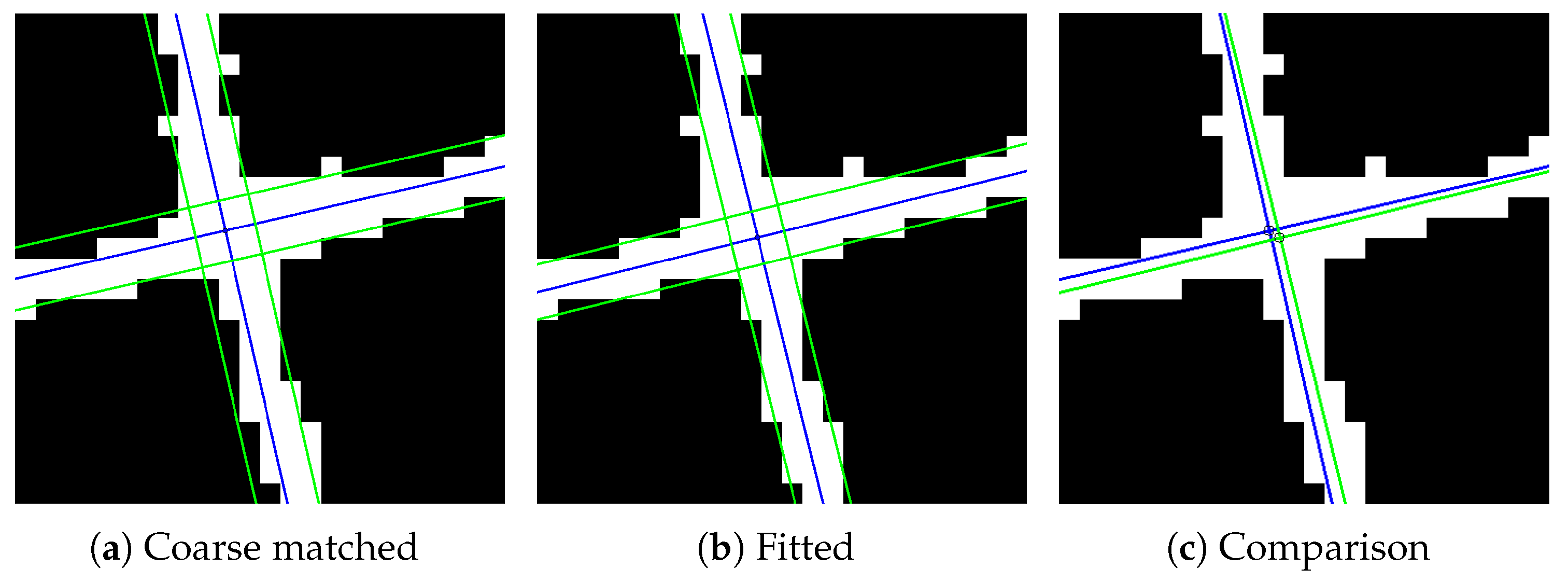

2.3. Model Fitting

2.3.1. Mathematical Model of Grid Junction

2.3.2. Fitting Process

3. Experimental Results

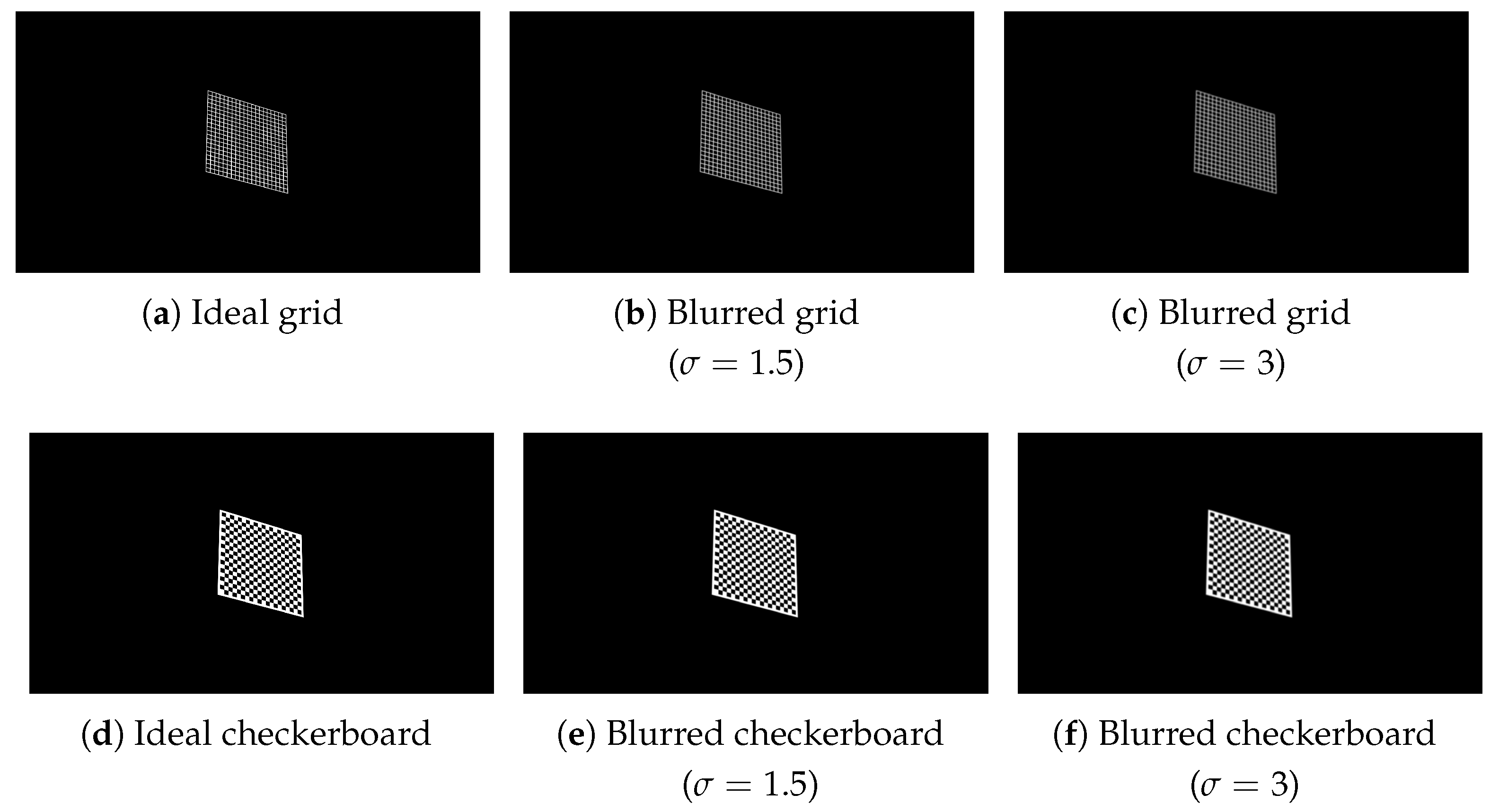

3.1. Detection Precision and Robustness

3.2. Reprojection Error in Calibration and 3D Reconstruction

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tserevelakis, G.J.; Tekonaki, E.; Kalogeridi, M.; Liaskas, I.; Pavlopoulos, A.; Zacharakis, G. Hybrid Fluorescence and Frequency-Domain Photoacoustic Microscopy for Imaging Development of Parhyale hawaiensis Embryos. Photonics 2023, 10, 264.

2. Wu, J.; Cai, X.; Wei, J.; Wang, C.; Zhou, Y.; Sun, K. A Measurement System with High Precision and Large Range for Structured Surface Metrology Based on Atomic Force Microscope. Photonics 2023, 10, 289.

3. Zhu, W.; Ma, C.; Xia, L.; Li, X. A Fast and Accurate Algorithm for Chessboard Corner Detection. In Proceedings of the 2009 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009.

4. Juan, H.; Junying, X.; Xiaoquan, X.; Qi, Z. Automatic corner detection and localization for camera calibration. In Proceedings of the IEEE 2011 10th International Conference on Electronic Measurement & Instruments, Chengdu, China, 16–19 August 2011.

5. Guan, X.; Jian, S.; Hongda, P.; Zhiguo, Z.; Haibin, G. A Novel Corner Point Detector for Calibration Target Images Based on Grayscale Symmetry. In Proceedings of the 2009 Second International Symposium on Computational Intelligence and Design, Changsha, China, 12–14 December 2009.

6. Zhang, Y.; Li, G.; Xie, X.; Wang, Z. A new algorithm for accurate and automatic chessboard corner detection. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017.

7. Wang, Z.; Wang, Z.; Wu, Y. Recognition of corners of planar pattern image. In Proceedings of the 2010 8th World Congress on Intelligent Control and Automation, Jinan, China, 7–9 July 2010.

8. Shi, D.; Huang, F.; Yang, J.; Jia, L.; Niu, Y.; Liu, L. Improved Shi–Tomasi sub-pixel corner detection based on super-wide field of view infrared images. Appl. Opt. 2024, 63, 831–837.

9. Zhang, S.; Guo, C. A Novel Algorithm for Detecting both the Internal and External Corners of Checkerboard Image. In Proceedings of the 2009 First International Workshop on Education Technology and Computer Science, Wuhan, China, 7–8 March 2009.

10. Bennett, S.; Lasenby, J. ChESS—Quick and Robust Detection of Chess-board Features. Comput. Vis. Image Underst. 2014, 118, 197–210.

11. Huang, L.; He, L.; Li, J.; Yu, L. A Checkerboard Corner Detection Method Using Circular Samplers. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications (ICCC), Chengdu, China, 7–10 December 2018.

12. Sang, Q.; Huang, T.; Wang, H. An improved checkerboard detection algorithm based on adaptive filters. Pattern Recognit. Lett. 2023, 172, 22–28.

13. Yimin, L.; Naiguang, L.; Xiaoping, L.; Peng, S. A novel approach to sub-pixel corner detection of the grid in camera calibration. In Proceedings of the 2010 International Conference on Computer Application and System Modeling (ICCASM 2010), Taiyuan, China, 22–24 October 2010.

14. Placht, S.; Fürsattel, P.; Mengue, E.A.; Hofmann, H.; Schaller, C.; Balda, M.; Angelopoulou, E. ROCHADE: Robust Checkerboard Advanced Detection for Camera Calibration. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 766–779.

15. Feng, W.; Wang, H.; Fan, J.; Xie, B.; Wang, X. Geometric Parameters Calibration of Focused Light Field Camera Based on Edge Spread Information Fitting. Photonics 2023, 10, 187.

16. Du, X.; Jiang, B.; Wu, L.; Xiao, M. Checkerboard corner detection method based on neighborhood linear fitting. Appl. Opt. 2023, 62, 7736–7743.

17. Donné, S.; De Vylder, J.; Goossens, B.; Philips, W. MATE: Machine Learning for Adaptive Calibration Template Detection. Sensors 2016, 16, 1858.

18. Wu, H.; Wan, Y. A highly accurate and robust deep checkerboard corner detector. Electron. Lett. 2021, 57, 317–320.

19. Zhu, H.; Zhou, Z.; Liang, B.; Han, X.; Tao, Y. Sub-Pixel Checkerboard Corner Localization for Robust Vision Measurement. IEEE Signal Process. Lett. 2024, 31, 21–25.

20. Grana, C.; Borghesani, D.; Cucchiara, R. Optimized Block-Based Connected Components Labeling With Decision Trees. IEEE Trans. Image Process. 2010, 19, 1596–1609.

21. Suzuki, S. Topological structural analysis of digitized binary images by border following. Comput. Vision Graph. Image Process. 1985, 30, 32–46.

22. Duda, A.; Frese, U. Accurate Detection and Localization of Checkerboard Corners for Calibration. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018.

23. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

| Dataset | Standard Deviation | Method | Total Number of Corners | Average Error (Pixels) | Biggest Error (Pixels) |
|---|---|---|---|---|---|
| Ideal images | 0 | OpenCV | 27,075 | 0.0802 | 0.2846 |
| Ideal images | 0 | Method in [22] | 27,075 | 0.0621 | 0.4185 |
| Ideal images | 0 | GESeF | 27,075 | 0.1193 | 0.4819 |
| Blurred images | 1.5 | OpenCV | 24,909 | 0.3595 | 4.5769 |
| Blurred images | 1.5 | Method in [22] | 18,050 | 0.1688 | 1.0679 |
| Blurred images | 1.5 | GESeF | 27,075 | 0.0930 | 0.4377 |
| Blurred images | 3 | OpenCV | 22,742 | 0.4978 | 2.9159 |
| Blurred images | 3 | Method in [22] | 6,859 | 0.2898 | 1.2968 |
| Blurred images | 3 | GESeF | 27,075 | 0.1498 | 0.6747 |

| Method | Pattern | Number of Images | Reprojection Error |
|---|---|---|---|
| Method in [22] | Checkerboard | 40 | 0.332409 |
| GESeF | Grid | 40 | 0.172571 |

| Pattern | Actual Interval | Mean Interval | Interval Error | Actual Diagonal | Mean Diagonal | Diagonal Error | Plane MSE |
|---|---|---|---|---|---|---|---|
| Checkerboard | 0.250000 | 0.250837 | 0.000837 | 5.700877 | 5.706677 | 0.005800 | 0.000123 |
| Grid | 0.200000 | 0.200275 | 0.000275 | 6.505382 | 6.509250 | 0.003868 | 0.000063 |
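
The error columns in the table above are consistent with a simple difference between the mean measured value and the actual value. The following minimal sketch recomputes them from the tabulated numbers. The relative error in percent is not reported in the source; it is added here only as an illustrative normalization, and the names `measurements` and `errors` are hypothetical.

```python
# Values copied from the table above as (actual, mean measured) pairs.
# The units are whatever the table uses; the source does not state them here.
measurements = {
    "Checkerboard": {
        "interval": (0.250000, 0.250837),
        "diagonal": (5.700877, 5.706677),
    },
    "Grid": {
        "interval": (0.200000, 0.200275),
        "diagonal": (6.505382, 6.509250),
    },
}


def errors(actual, mean):
    """Return (absolute error, relative error in percent).

    The absolute error reproduces the table's error columns.
    The relative error is an assumption added for comparison only.
    """
    abs_err = mean - actual
    rel_err = 100.0 * abs_err / actual
    return abs_err, rel_err


for pattern, rows in measurements.items():
    for quantity, (actual, mean) in rows.items():
        abs_err, rel_err = errors(actual, mean)
        print(f"{pattern:12s} {quantity:8s} abs={abs_err:.6f} rel={rel_err:.4f}%")
```

Normalizing by the actual length is one way to compare the two patterns, since their intervals and diagonals differ in size.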

| Corner Detector Used in Calibration | Mean Interval | Interval Error | Mean Diagonal | Diagonal Error | Plane MSE |
|---|---|---|---|---|---|
| Method in [22] | 0.501287 | 0.001287 | 5.670797 | 0.013943 | 0.000048 |
| GESeF | 0.500351 | 0.000351 | 5.659878 | 0.003024 | 0.000038 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, J.; Zhao, W.; Li, K.; Wang, J.; Yi, S.; Jiang, H.; Zhang, H. A Segment-Based Algorithm for Grid Junction Corner Detection Used in Stereo Microscope Camera Calibration. Photonics 2024, 11, 688. https://doi.org/10.3390/photonics11080688
