First Demonstration of Calibrated Color Imaging by the CAOS Camera

Abstract

1. Introduction

2. CMOS and CAOS Camera Color Imaging Testing Methods

- (1) Use the camera under test to image the LG3 lightbox illuminated test area without the transmissive color chart target placed in the lightbox. Confirm that the camera output has ≥95% spatial response uniformity over the white light screen test area, given that the LG3 lightbox is specified with ≥95% spatial response uniformity over its illumination area. Camera spatial response uniformity is important for color imaging camera testing [30].

- (2) With the TE188 color checker chart placed at the LG3 lightbox illuminated test area, use the camera under test to take the red (R), green (G), and blue (B) primary color images in time sequence using the Thorlabs (Newton, NJ, USA) R, G, and B color filter models FD1R, FD1G, and FD1B, respectively. The raw RGB pixel data are then averaged over each test patch area to give the averaged raw RGB values $R_{raw}$, $G_{raw}$, and $B_{raw}$ for each of the 24 test patches.

- (3) The test chart must include a white patch, which is needed for white balancing the raw RGB image data to introduce color constancy [31]. The averaged raw RGB data for the white patch are used to compute the camera raw RGB white balancing factors $w_R = 1/R_{raw}(\text{white})$, $w_G = 1/G_{raw}(\text{white})$, and $w_B = 1/B_{raw}(\text{white})$. For example, with a camera-provided white patch raw vector [R, G, B] = [5, 7, 9], the white balancing weights are $w_R = 1/5$, $w_G = 1/7$, and $w_B = 1/9$. The white balancing operation is applied to the raw tristimulus RGB data using $R_{WB} = w_R R_{raw}$, $G_{WB} = w_G G_{raw}$, and $B_{WB} = w_B B_{raw}$.

- (4) Using the camera-acquired RGB image data together with the pre-calibrated CIE XYZ 3-D color space data provided by Image Engineering for the 24 patches of the TE188 chart under LG3 illumination, compute the camera color correction matrix via an irradiance-independent least squares regression optimization technique [32]. This matrix maps the camera white balanced RGB values to the CIE XYZ color space standard. The basics of the computation are as follows:

  - Let A represent the 3 × N matrix of experimentally acquired white balanced RGB ($R_{WB}$, $G_{WB}$, $B_{WB}$) camera outputs for N known color patches. Let B represent the 3 × N matrix of the corresponding known tristimulus X, Y, Z values, provided by the commercial vendor using the measured spectral power distribution of the illuminant, the spectral function of each patch, and the CIE color matching functions. Using the known A and B matrices, compute the 3 × 3 color correction matrix as the least squares solution of $\Phi A \approx B$, namely $\Phi = B A^{T} (A A^{T})^{-1}$.

  - Find the XYZ values for unknown (or new) color patches, using the same illumination and deployed color filters for the test camera, by applying the computed 3 × 3 color correction matrix Φ: $[X, Y, Z]^{T} = \Phi\,[R_{WB}, G_{WB}, B_{WB}]^{T}$.

- (5) Both CIE XYZ and CIE L*a*b* are used for color camera performance analysis. First, to assess the calibration error, the Image Engineering-provided ground truth XYZ values are compared with the test camera measured XYZ values, called X′, Y′, Z′, using root mean square (RMS) error and percentage error metrics [34] defined in terms of ΔX = X − X′, ΔY = Y − Y′, and ΔZ = Z − Z′.

- (6) In addition, to calculate Delta E (CIE 2000), which is often used to assess the calibration error in terms of visual performance, we convert the XYZ values to L*a*b* values [35]. This conversion requires a reference white ($X_r$, $Y_r$, $Z_r$), which in our case is $X_r = 95.047$, $Y_r = 100$, $Z_r = 108.833$.

- (7) To visually compare the Image Engineering-provided TE188 chart XYZ values and the test camera captured 24 TE188 color patches on a standard 8-bit computer display, the XYZ values are first converted to linear RGB values [38].

- (8) Gamma encoding and 8-bit scale conversion are then applied to the computed linear RGB values so that they can be shown via the sRGB standard on a commercial 8-bit color computer display. This operation uses a 2.4 gamma rating for the display [39].

- (9) As the final step in the test camera pre-calibrated color imaging evaluation, the final 8-bit sRGB data for the two comparative images are fed to a display so that the ground truth and test camera 24 color patches can be visually compared on a standard 8-bit computer display. Note that a comparative color checking operation with a known color checker uses leave-one-out cross-validation (LOOCV). In contrast, regular camera color imaging of unknown color scenes deploys a single color correction matrix derived from a large set (e.g., 140) of known colors, such as the Calibrite Digital ColorChecker SG [40], which can enable accurate and robust recovery of unknown colors on a per pixel basis [41].
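As an illustration of steps (3) and (4), the sketch below white balances raw RGB values and fits a 3 × 3 color correction matrix. It assumes the ordinary least squares solution Φ = B Aᵀ (A Aᵀ)⁻¹ of Φ A ≈ B, which is simpler than the irradiance-independent optimization of [32]. The function names, the matrix `phi_true`, and the four synthetic patches are hypothetical and serve only as a self-check; they are not measured data.

```python
# Sketch of steps (3) and (4): white balancing, then an ordinary least-squares
# fit of the 3x3 color correction matrix Phi such that Phi A ~= B.
# All names and the synthetic data are illustrative assumptions.

def white_balance(raw_rgb, white_rgb):
    """Scale each raw channel by 1 / (raw value of the white patch)."""
    return [c / w for c, w in zip(raw_rgb, white_rgb)]

def transpose(m):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(p, q):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*q)] for row in p]

def inverse_3x3(m):
    """Invert a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("A A^T is singular; use more varied patches")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]

def fit_ccm(rgb_wb, xyz):
    """Return Phi = B A^T (A A^T)^-1 for 3xN matrices A (RGB) and B (XYZ)."""
    a_t = transpose(rgb_wb)
    return matmul(matmul(xyz, a_t), inverse_3x3(matmul(rgb_wb, a_t)))

def apply_ccm(phi, rgb_wb):
    """Map one white balanced RGB triple to an XYZ estimate."""
    return [sum(p * c for p, c in zip(row, rgb_wb)) for row in phi]

# Worked example from step (3): white patch raw vector [5, 7, 9].
white_raw = [5.0, 7.0, 9.0]
white_wb = white_balance(white_raw, white_raw)  # white maps to [1, 1, 1]

# Synthetic self-check: build B from a made-up matrix, then recover it.
phi_true = [[0.4, 0.3, 0.2], [0.2, 0.7, 0.1], [0.0, 0.1, 0.9]]
A = [[1.0, 0.0, 0.0, 1.0],   # R_WB of 4 hypothetical patches
     [0.0, 1.0, 0.0, 1.0],   # G_WB
     [0.0, 0.0, 1.0, 1.0]]   # B_WB
B = matmul(phi_true, A)      # "known" XYZ values, 3 x 4
phi = fit_ccm(A, B)
xyz_white = apply_ccm(phi, white_wb)
```

With real data, A would hold the 24 white balanced patch averages and B the vendor-supplied XYZ values, so the fit is overdetermined and Φ only approximates the mapping.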

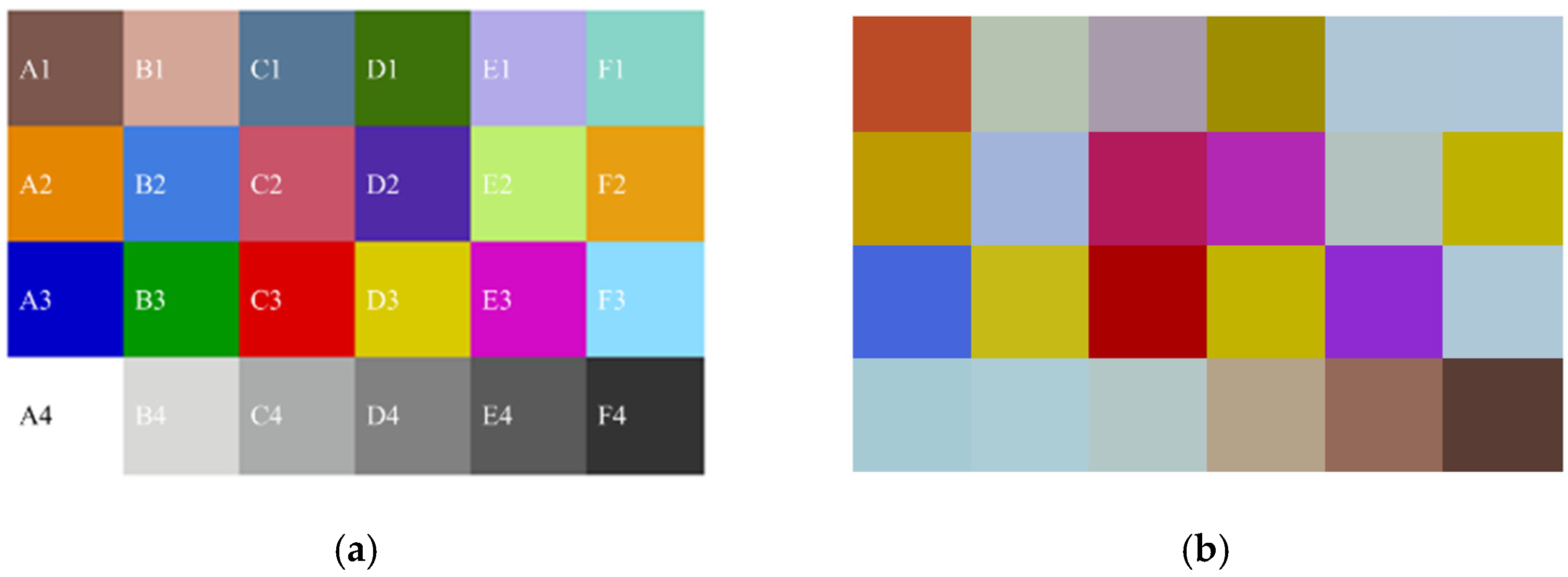

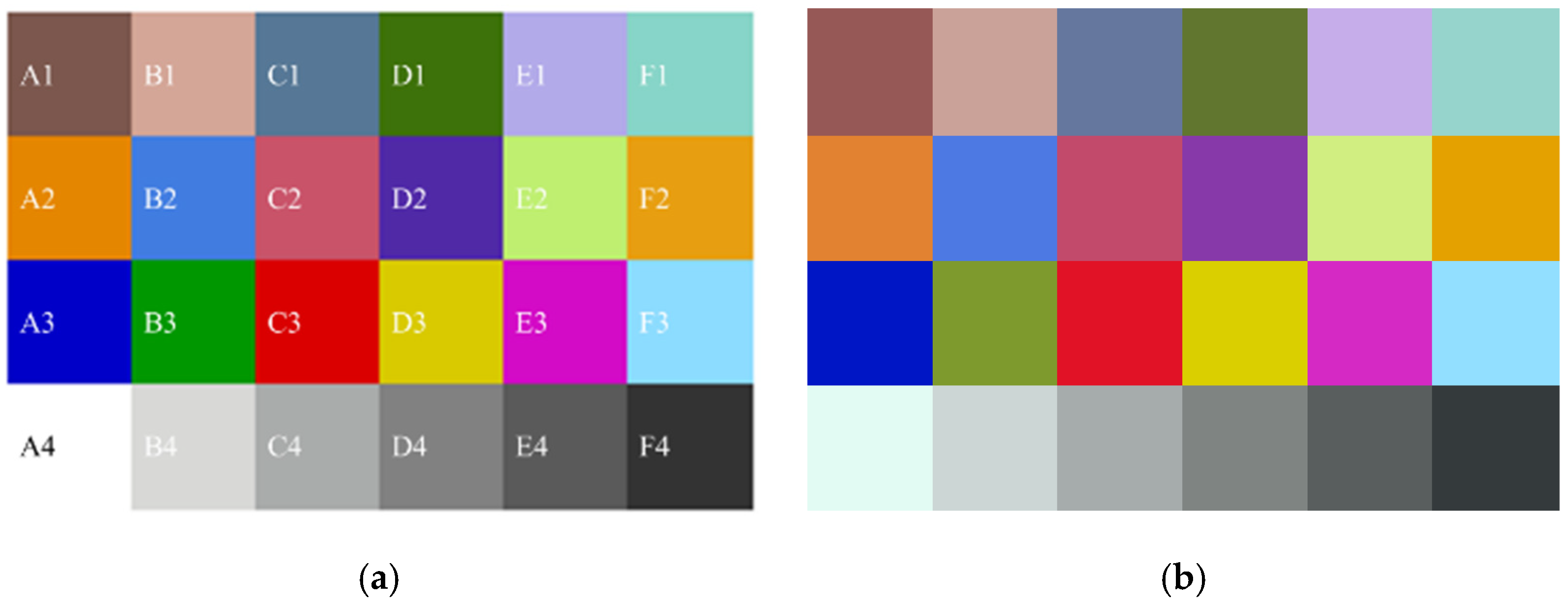
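Steps (6) to (8) of the procedure above can be sketched as follows. The code assumes the standard CIE XYZ to L*a*b* conversion and the standard sRGB (IEC 61966-2-1) encoding with D65 primaries; the exact equations used by the authors are not reproduced in this text, and all function names are hypothetical. The default reference white is the one quoted in step (6), so XYZ inputs to `xyz_to_lab` must be on the same 0 to 100 scale, whereas `xyz_to_srgb8` expects XYZ with Y normalized to 1.

```python
# Sketch of steps (6) to (8): XYZ -> L*a*b*, and XYZ -> 8-bit sRGB for display.
# Constants are the widely published CIE and sRGB values (an assumption about
# what the authors used); function names are illustrative.

EPSILON = 216 / 24389   # threshold between cube-root and linear branches
KAPPA = 24389 / 27      # slope constant of the linear branch

def xyz_to_lab(xyz, white=(95.047, 100.0, 108.833)):
    """Convert XYZ to L*a*b*; xyz and white must share the same scale."""
    def f(t):
        return t ** (1 / 3) if t > EPSILON else (KAPPA * t + 16) / 116
    fx, fy, fz = (f(c / w) for c, w in zip(xyz, white))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))

# XYZ (Y normalized to 1) to linear sRGB for a D65 white point.
XYZ_TO_SRGB = (
    (3.2404542, -1.5371385, -0.4985314),
    (-0.9692660, 1.8760108, 0.0415560),
    (0.0556434, -0.2040259, 1.0572252),
)

def srgb_encode(c):
    """Gamma encode one linear channel and scale it to an 8-bit integer."""
    c = min(max(c, 0.0), 1.0)  # clip out-of-gamut values to [0, 1]
    v = 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055
    return round(255 * v)

def xyz_to_srgb8(xyz):
    """Convert one XYZ triple to an 8-bit sRGB triple for display."""
    linear = [sum(m * c for m, c in zip(row, xyz)) for row in XYZ_TO_SRGB]
    return tuple(srgb_encode(c) for c in linear)

lab_white = xyz_to_lab((95.047, 100.0, 108.833))   # reference white itself
rgb_white = xyz_to_srgb8((0.95047, 1.0, 1.08883))  # D65 white, Y = 1
```

The clipping in `srgb_encode` is a design choice of this sketch: estimated XYZ values can fall slightly outside the display gamut, and clipping keeps the 8-bit output valid.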

3. CMOS Camera Color Imaging Experiment

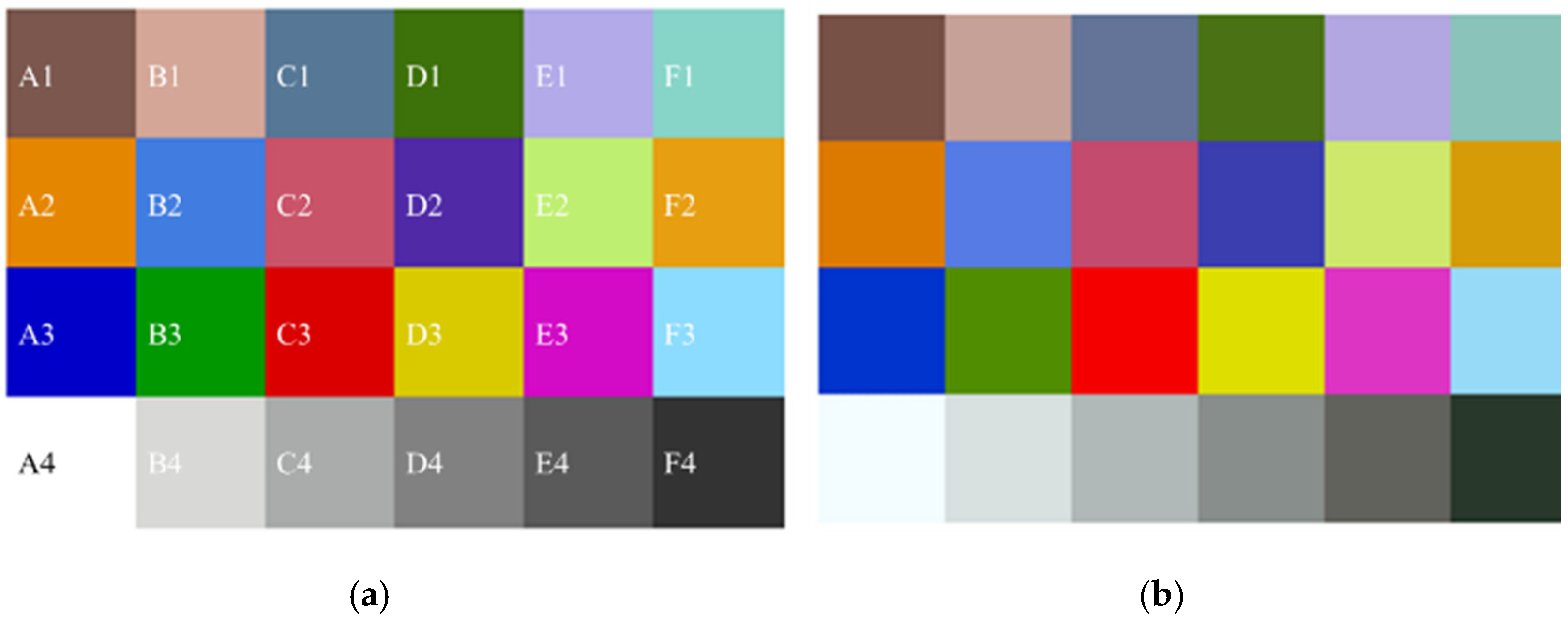

4. CAOS Camera Color Imaging Experiment

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- McCann, J.J.; Rizzi, A. The Art and Science of HDR Imaging; John Wiley & Sons: Hoboken, NJ, USA, 2012.

- Reinhard, E.; Ward, G.; Pattanaik, S.; Debevec, P. High Dynamic Range Imaging: Acquisition, Display, and Image-Based Lighting; Elsevier Morgan Kaufmann Publisher: Burlington, MA, USA, 2005.

- Banterle, F.; Artusi, A.; Debattista, K.; Chalmers, A. Advanced High Dynamic Range Imaging: Theory and Practice; CRC Press, Taylor & Francis Group: Oxfordshire, UK, 2011.

- Gouveia, L.C.P.; Choubey, B. Advances on CMOS image sensors. Sens. Rev. 2016, 36, 231–239.

- Riza, N.A.; Mazhar, M.A. Robust Low Contrast Image Recovery over 90 dB Scene Linear Dynamic Range using CAOS Camera. In Proceedings of the IS&T Electronic Imaging; Society for Imaging Science and Technology: Burlingame, CA, USA, 2020; pp. 1–10.

- Academy of Motion Picture Arts and Sciences (AMPAS). S-2008–001. Academy Color Encoding Specification (ACES) Version 1.0; Academy of Motion Picture Arts and Sciences: Los Angeles, CA, USA, 2008.

- Apple. Pro Display XDR. Available online: https://www.apple.com/ie/pro-display-xdr (accessed on 20 February 2021).

- Hoffmann, G. CIE Color Space; Technical Report; University of Applied Sciences: Emden, Germany, 2000.

- Vargas, A.; Johnson, P.; Kim, J.; Hoffman, D. A perceptually uniform tone curve for OLED and other high dynamic range displays. J. Vis. 2014, 14, 83.

- Golay, M.J.E. Multi-Slit Spectrometry. J. Opt. Soc. Am. 1949, 39, 437–444.

- Fellgett, P. I. Les principes généraux des méthodes nouvelles en spectroscopie interférentielle: A propos de la théorie du spectromètre interférentiel multiplex. J. Phys. Radium 1958, 19, 187–191.

- Gottlieb, P. A television scanning scheme for a detector-noise limited system. IEEE Trans. Inform. Theory 1968, 14, 428–433.

- Ibbett, R.N.; Aspinall, D.; Grainger, J.F. Real-Time Multiplexing of Dispersed Spectra in Any Wavelength Region. Appl. Opt. 1968, 7, 1089–1093.

- Decker, J.A., Jr.; Harwit, M.O. Sequential Encoding with Multislit Spectrometers. Appl. Opt. 1968, 7, 2205–2209.

- Decker, J.A., Jr. Hadamard-Transform Image Scanning. Appl. Opt. 1970, 9, 1392–1395.

- Kearney, K.; Ninkov, Z. Characterization of a digital micro-mirror device for use as an optical mask in imaging and spectroscopy. Proc. SPIE 1998, 3292, 81–92.

- Castracane, J.; Gutin, M. DMD-based bloom control for intensified imaging systems. Proc. SPIE 1999, 3633, 234–242.

- Nayar, S.; Branzoi, V.; Boult, T. Programmable imaging using a digital micro-mirror array. Proc. IEEE Conf. Comput. Vis. Pattern Recognit. 2004, 1, 436–443.

- Sumriddetchkajorn, S.; Riza, N.A. Micro-electro-mechanical system-based digitally controlled optical beam profiler. Appl. Opt. 2002, 41, 3506–3510.

- Riza, N.A.; Mughal, M.J. Optical power independent optical beam profiler. Opt. Eng. 2004, 43, 793–797.

- Takhar, D.; Laska, J.N.; Wakin, M.; Duarte, M.; Baron, D.; Sarvotham, S.; Kelly, K.; Baraniuk, R.G. A new compressive imaging camera architecture using optical-domain compression. Proc. SPIE 2006, 6065, 606509.

- Durán, V.; Clemente, P.; Fernández-Alonso, M.; Tajahuerce, E.; Lancis, J. Single-pixel polarimetric imaging. Opt. Lett. 2012, 37, 824–826.

- Shin, J.; Bosworth, B.T.; Foster, M.A. Single-pixel imaging using compressed sensing and wavelength-dependent scattering. Opt. Lett. 2016, 41, 886–889.

- Zhang, Z.; Liu, S.; Peng, J.; Yao, M.; Zheng, G.; Zhong, J. Simultaneous spatial, spectral, and 3D compressive imaging via efficient Fourier single-pixel measurements. Optica 2018, 5, 315–319.

- Riza, N.A.; Mazhar, M.A. 177 dB Linear Dynamic Range Pixels of Interest DSLR CAOS Camera. IEEE Photonics J. 2019, 11, 1–10.

- Riza, N.A.; La Torre, J.P.; Amin, M.J. CAOS-CMOS camera. Opt. Express 2016, 24, 13444–13458.

- Riza, N.A.; Mazhar, M.A. Demonstration of CAOS Smart Camera Imaging for Color and Super Blue Moon Targets. In Proceedings of the OSA Advanced Photonics Congress on Sensors, Zurich, Switzerland, 2–5 July 2018. Paper SeW2E.3.

- Riza, N.A.; Mazhar, M.A.; Ashraf, N. Solar Limb Darkening Color Imaging of the Sun with the Extreme Brightness Capability CAOS Camera. In London Imaging Meeting; Society for Imaging Science and Technology: London, UK, 2020; Volume 2020, pp. 69–73.

- Ramanath, R.; Snyder, W.E.; Yoo, Y.; Drew, M.S. Color Image Processing Pipeline: A general survey of digital still cameras. IEEE Signal Process. Mag. 2005, 22, 34–43.

- Pointer, M.R.; Attridge, G.G.; Jacobson, R.E. Practical camera characterization for colour measurement. Imaging Sci. J. 2001, 49, 63–80.

- Ebner, M. Color Constancy; Kriss, M.A., Ed.; Wiley-IS&T Series in IS&T; Wiley: Hoboken, NJ, USA, 2007.

- Bastani, P.; Funt, B. Simplifying irradiance independent color calibration. Proc. SPIE 2014, 9015, 90150N.

- Celisse, A. Optimal cross-validation in density estimation with the L2-loss. Ann. Stat. 2014, 42, 1879–1910.

- Hyndman, R.; Koehler, A.B. Another look at measures of forecast accuracy. Int. J. Forecast. 2006, 22, 679–688.

- Lindbloom, B. XYZ to Lab. Available online: http://www.brucelindbloom.com/index.html?Eqn_XYZ_to_Lab.html (accessed on 15 January 2021).

- Fraser, B.; Murphy, C.; Bunting, F. Real World Color Management; Peachpit Press: San Francisco, CA, USA, 2004.

- Lindbloom, B. Delta E (CIE 2000 B). Available online: http://www.brucelindbloom.com/index.html?Eqn_DeltaE_CIE2000.htm (accessed on 15 January 2021).

- Lindbloom, B. RGB/XYZ Matrices. Available online: http://www.brucelindbloom.com/Eqn_RGB_XYZ_Matrix.html (accessed on 15 January 2021).

- IEC 61966-2-1:1999. IEC Webstore; International Electrotechnical Commission: Geneva, Switzerland, 1999.

- Imatest LLC. Calibrite Digital ColorChecker SG. Available online: https://store.imatest.com/test-charts/color-charts/colorchecker-sg.html (accessed on 15 January 2021).

- Imatest LLC. Color Correction Matrix. Available online: http://www.imatest.com/docs/colormatrix (accessed on 15 January 2021).

- Riza, N.A.; Ashraf, N. Calibration Empowered Minimalistic MultiExposure Image Processing Technique for Camera Linear Dynamic Range Extension. In Proceedings of the IS&T Electronic Imaging Conference; Society for Imaging Science and Technology: Burlingame, CA, USA, 2020; pp. 2131–2136.

- Riza, N.A.; Mazhar, M.A. Robust Testing of Displays using the Extreme Linear Dynamic Range CAOS Camera. In Proceedings of the 2019 IEEE 2nd British and Irish Conference on Optics and Photonics (BICOP), London, UK, 11–13 December 2019.

| Patch | Color | R (raw) | G (raw) | B (raw) | X (estimated) | Y (estimated) | Z (estimated) | X (real) | Y (real) | Z (real) | RMS | % |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | dark skin | 1665.06 | 3690.63 | 1485.27 | 0.14 | 0.12 | 0.09 | 0.14 | 0.12 | 0.09 | 0.0022 | 1.91 |
| B1 | light skin | 4498.57 | 14,979.84 | 5808.80 | 0.44 | 0.43 | 0.36 | 0.49 | 0.45 | 0.36 | 0.03 | 6.17 |
| C1 | blue sky | 1208.35 | 6426.06 | 4931.85 | 0.17 | 0.17 | 0.29 | 0.15 | 0.17 | 0.30 | 0.01 | 6.38 |
| D1 | foliage | 580.55 | 6437.22 | 367.16 | 0.10 | 0.14 | 0.04 | 0.07 | 0.12 | 0.04 | 0.02 | 20.69 |
| E1 | blue flower | 4106.65 | 15,479.12 | 13,703.65 | 0.49 | 0.46 | 0.79 | 0.47 | 0.44 | 0.79 | 0.02 | 3.50 |
| F1 | bluish green | 2074.91 | 23,971.33 | 10,455.57 | 0.43 | 0.56 | 0.64 | 0.41 | 0.55 | 0.64 | 0.01 | 2.72 |
| A2 | orange | 5998.90 | 9353.95 | 833.50 | 0.44 | 0.34 | 0.06 | 0.46 | 0.36 | 0.07 | 0.02 | 4.54 |
| B2 | purplish blue | 904.73 | 6560.23 | 11,425.79 | 0.20 | 0.19 | 0.64 | 0.21 | 0.20 | 0.69 | 0.03 | 7.22 |
| C2 | moderate red | 4471.01 | 3337.52 | 2797.74 | 0.31 | 0.19 | 0.16 | 0.34 | 0.21 | 0.16 | 0.02 | 8.61 |
| D2 | purple | 667.83 | 1459.02 | 6238.31 | 0.10 | 0.07 | 0.34 | 0.11 | 0.06 | 0.34 | 0.01 | 3.11 |
| E2 | yellow green | 4142.87 | 30,837.50 | 3639.99 | 0.57 | 0.72 | 0.27 | 0.54 | 0.73 | 0.33 | 0.04 | 6.34 |
| F2 | orange yellow | 6024.13 | 14,545.68 | 1355.44 | 0.49 | 0.45 | 0.11 | 0.50 | 0.44 | 0.10 | 0.01 | 3.03 |
| A3 | blue | 151.93 | 518.05 | 9545.91 | 0.09 | 0.05 | 0.53 | 0.09 | 0.04 | 0.50 | 0.02 | 6.20 |
| B3 | green | 556.26 | 9678.52 | 297.83 | 0.13 | 0.20 | 0.05 | 0.09 | 0.21 | 0.06 | 0.02 | 16.20 |
| C3 | red | 6436.24 | 520.18 | 513.38 | 0.39 | 0.18 | 0.02 | 0.35 | 0.17 | 0.02 | 0.03 | 12.22 |
| D3 | yellow | 4884.49 | 24,368.69 | 321.45 | 0.52 | 0.62 | 0.10 | 0.50 | 0.57 | 0.05 | 0.04 | 8.71 |
| E3 | magenta | 6392.00 | 1251.79 | 9251.63 | 0.47 | 0.24 | 0.50 | 0.41 | 0.20 | 0.50 | 0.04 | 10.45 |
| F3 | cyan | 2358.29 | 26,752.05 | 17,481.68 | 0.53 | 0.66 | 1.04 | 0.50 | 0.62 | 1.01 | 0.03 | 4.22 |
| A4 | white | 7373.14 | 34,331.59 | 18,062.25 | 0.87 | 0.91 | 1.08 | 0.95 | 1.00 | 1.09 | 0.07 | 6.85 |
| B4 | neutral 65 | 5103.04 | 26,305.31 | 12,254.68 | 0.64 | 0.69 | 0.74 | 0.65 | 0.69 | 0.74 | 0.01 | 1.26 |
| C4 | neutral 39 | 3189.23 | 16,336.23 | 7453.54 | 0.40 | 0.43 | 0.45 | 0.38 | 0.41 | 0.44 | 0.02 | 4.28 |
| D4 | neutral 21 | 1817.73 | 9042.79 | 4101.63 | 0.22 | 0.24 | 0.25 | 0.21 | 0.22 | 0.24 | 0.01 | 6.65 |
| E4 | neutral 10 | 992.74 | 4331.11 | 2038.16 | 0.11 | 0.12 | 0.12 | 0.10 | 0.10 | 0.11 | 0.01 | 14.29 |
| F4 | neutral 3 | 401.19 | 1495.90 | 815.89 | 0.04 | 0.04 | 0.05 | 0.03 | 0.03 | 0.04 | 0.01 | 33.74 |

| | Mean | Median | 95% | Max |
|---|---|---|---|---|
| RMSE | 0.022 | 0.018 | 0.041 | 0.069 |
| Percentage difference | 8.30 | 6.36 | 20.02 | 20.69 |
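The per-patch RMS and percentage columns above can be approximated with the sketch below. The exact expressions from [34] are not reproduced in this text, so the code assumes that the RMS error is taken over the three XYZ components and that the percentage error is the Euclidean norm of the difference relative to the norm of the reference XYZ vector. The function name `xyz_errors` is hypothetical. Applied to the rounded white patch (A4) entries, these assumed forms give roughly 0.07 and 6.9%, close to the tabulated 0.07 and 6.85.

```python
import math

def xyz_errors(xyz_ref, xyz_meas):
    """Return (rms_error, percentage_error) for one color patch.

    Assumed definitions (not confirmed by the source text):
      rms     = sqrt((dX^2 + dY^2 + dZ^2) / 3)
      percent = 100 * sqrt(dX^2 + dY^2 + dZ^2) / sqrt(X^2 + Y^2 + Z^2)
    """
    deltas = [r - m for r, m in zip(xyz_ref, xyz_meas)]
    sum_sq = sum(d * d for d in deltas)
    rms = math.sqrt(sum_sq / len(deltas))
    ref_norm = math.sqrt(sum(r * r for r in xyz_ref))
    percent = 100.0 * math.sqrt(sum_sq) / ref_norm
    return rms, percent

# White patch (A4) values as tabulated, rounded to two decimals.
white_ref = (0.95, 1.00, 1.09)    # real (theoretical) XYZ
white_meas = (0.87, 0.91, 1.08)   # estimated XYZ
rms_white, pct_white = xyz_errors(white_ref, white_meas)
```

Because the tabulated XYZ values are rounded to two decimals, dark patches such as F4 cannot be reproduced this closely from the table alone.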

| Patch | R (measured) | G (measured) | B (measured) | L (measured) | a (measured) | b (measured) | L (reference) | a (reference) | b (reference) | ∆E00 |
|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 1665.06 | 3690.63 | 1485.27 | 41.43 | 17.94 | 11.92 | 41.23 | 16.55 | 11.79 | 0.96 |
| B1 | 4498.57 | 14,979.84 | 5808.80 | 71.64 | 10.04 | 13.25 | 72.58 | 18.37 | 14.17 | 6.49 |
| C1 | 1208.35 | 6426.06 | 4931.85 | 48.87 | 1.66 | −16.45 | 48.39 | −7.26 | −19.36 | 8.36 |
| D1 | 580.55 | 6437.22 | 367.16 | 44.63 | −27.03 | 38.38 | 41.93 | −37.07 | 35.23 | 5.71 |
| E1 | 4106.65 | 15,479.12 | 13,703.65 | 73.69 | 15.19 | −25.10 | 72.11 | 14.68 | −27.82 | 1.90 |
| F1 | 2074.91 | 23,971.33 | 10,455.57 | 79.69 | −28.11 | −2.94 | 79.21 | −32.76 | −2.98 | 2.04 |
| A2 | 5998.90 | 9353.95 | 833.50 | 65.08 | 36.26 | 62.15 | 66.36 | 36.53 | 61.82 | 1.07 |
| B2 | 904.73 | 6560.23 | 11,425.79 | 50.72 | 11.18 | −52.14 | 52.18 | 6.59 | −54.09 | 3.55 |
| C2 | 4471.01 | 3337.52 | 2797.74 | 50.77 | 55.33 | 10.54 | 53.38 | 54.42 | 14.97 | 3.49 |
| D2 | 667.83 | 1459.02 | 6238.31 | 31.43 | 31.93 | −54.49 | 29.46 | 45.42 | −57.34 | 6.15 |
| E2 | 4142.87 | 30,837.50 | 3639.99 | 88.09 | −27.94 | 53.33 | 88.56 | −35.82 | 46.06 | 5.27 |
| F2 | 6024.13 | 14,545.68 | 1355.44 | 72.93 | 18.74 | 59.67 | 71.97 | 23.75 | 62.02 | 2.81 |
| A3 | 151.93 | 518.05 | 9545.91 | 26.47 | 42.63 | −83.80 | 22.95 | 61.04 | −86.92 | 8.20 |
| B3 | 556.26 | 9678.52 | 297.83 | 52.36 | −38.49 | 48.66 | 53.39 | −67.75 | 42.32 | 10.73 |
| C3 | 6436.24 | 520.18 | 513.38 | 50.09 | 87.03 | 62.97 | 48.76 | 77.63 | 58.60 | 2.45 |
| D3 | 4884.49 | 24,368.69 | 321.45 | 82.84 | −17.45 | 81.20 | 80.20 | −10.32 | 92.36 | 5.28 |
| E3 | 6392.00 | 1251.79 | 9251.63 | 55.90 | 85.78 | −29.91 | 51.94 | 85.25 | −37.50 | 4.56 |
| F3 | 2358.29 | 26,752.05 | 17,481.68 | 84.75 | −21.67 | −23.46 | 83.03 | −22.34 | −24.48 | 1.25 |
| A4 | 7373.14 | 34,331.59 | 18,062.25 | 96.35 | 1.75 | −5.78 | 100.00 | 0.00 | 0.00 | 5.94 |
| B4 | 5103.04 | 26,305.31 | 12,254.68 | 86.64 | −4.87 | 0.82 | 86.33 | −0.41 | 0.43 | 5.70 |
| C4 | 3189.23 | 16,336.23 | 7453.54 | 71.62 | −3.88 | 1.68 | 69.85 | −0.29 | −0.13 | 5.15 |
| D4 | 1817.73 | 9042.79 | 4101.63 | 56.03 | −2.34 | 1.79 | 54.04 | −0.28 | 0.00 | 3.76 |
| E4 | 992.74 | 4331.11 | 2038.16 | 40.91 | 1.35 | 1.35 | 38.27 | −0.22 | −0.15 | 3.53 |
| F4 | 401.19 | 1495.90 | 815.89 | 24.55 | 4.24 | −1.04 | 21.14 | 0.22 | −0.61 | 5.79 |

| | Mean | Median | 95% | Max |
|---|---|---|---|---|
| ∆E | 4.59 | 4.85 | 8.34 | 10.73 |
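The summary row above can be recomputed from the 24 per-patch ∆E00 values, as in the sketch below. It assumes that the 95% entry is a 95th percentile obtained by linear interpolation between sorted samples, which is an assumption about the authors' method that happens to match the tabulated 8.34. The helper `percentile` is hypothetical.

```python
import statistics

def percentile(values, q):
    """q-th percentile (0-100), linear interpolation between sorted samples."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lower = int(pos)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (pos - lower) * (ordered[upper] - ordered[lower])

# Per-patch Delta E (CIE 2000) values copied from the table, patches A1 to F4.
delta_e = [
    0.96, 6.49, 8.36, 5.71, 1.90, 2.04,    # A1-F1
    1.07, 3.55, 3.49, 6.15, 5.27, 2.81,    # A2-F2
    8.20, 10.73, 2.45, 5.28, 4.56, 1.25,   # A3-F3
    5.94, 5.70, 5.15, 3.76, 3.53, 5.79,    # A4-F4
]

summary = {
    "mean": statistics.mean(delta_e),
    "median": statistics.median(delta_e),
    "p95": percentile(delta_e, 95),
    "max": max(delta_e),
}
```

The same helper can be applied to the RMS and percentage columns of the XYZ tables to check their summary rows.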

| Patch | R (raw) | G (raw) | B (raw) | X (estimated) | Y (estimated) | Z (estimated) | X (real) | Y (real) | Z (real) | RMS | % |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.173 | 0.078 | 0.064 | 0.12 | 0.11 | 0.08 | 0.14 | 0.12 | 0.09 | 0.01 | 11.82 |
| B1 | 0.519 | 0.327 | 0.298 | 0.43 | 0.40 | 0.35 | 0.49 | 0.45 | 0.36 | 0.04 | 9.83 |
| C1 | 0.114 | 0.149 | 0.270 | 0.16 | 0.17 | 0.31 | 0.15 | 0.17 | 0.30 | 0.01 | 3.79 |
| D1 | 0.041 | 0.151 | 0.016 | 0.09 | 0.13 | 0.04 | 0.07 | 0.12 | 0.04 | 0.01 | 10.93 |
| E1 | 0.428 | 0.339 | 0.660 | 0.46 | 0.43 | 0.73 | 0.47 | 0.44 | 0.79 | 0.03 | 5.76 |
| F1 | 0.191 | 0.485 | 0.444 | 0.37 | 0.47 | 0.54 | 0.41 | 0.55 | 0.64 | 0.08 | 14.54 |
| A2 | 0.693 | 0.206 | 0.039 | 0.41 | 0.31 | 0.05 | 0.46 | 0.36 | 0.07 | 0.04 | 12.13 |
| B2 | 0.100 | 0.157 | 0.649 | 0.23 | 0.21 | 0.71 | 0.21 | 0.20 | 0.69 | 0.02 | 4.84 |
| C2 | 0.545 | 0.080 | 0.161 | 0.31 | 0.19 | 0.16 | 0.34 | 0.21 | 0.16 | 0.02 | 7.52 |
| D2 | 0.062 | 0.031 | 0.352 | 0.10 | 0.07 | 0.38 | 0.11 | 0.06 | 0.34 | 0.02 | 10.83 |
| E2 | 0.463 | 0.742 | 0.190 | 0.57 | 0.72 | 0.31 | 0.54 | 0.73 | 0.33 | 0.02 | 3.88 |
| F2 | 0.630 | 0.318 | 0.054 | 0.43 | 0.39 | 0.09 | 0.50 | 0.44 | 0.10 | 0.05 | 12.17 |
| A3 | 0.014 | 0.010 | 0.492 | 0.10 | 0.06 | 0.52 | 0.09 | 0.04 | 0.50 | 0.02 | 6.69 |
| B3 | 0.033 | 0.238 | 0.011 | 0.12 | 0.20 | 0.05 | 0.09 | 0.21 | 0.06 | 0.02 | 12.41 |
| C3 | 0.845 | 0.008 | 0.021 | 0.43 | 0.19 | −0.02 | 0.35 | 0.17 | 0.02 | 0.06 | 24.69 |
| D3 | 0.598 | 0.638 | 0.009 | 0.57 | 0.68 | 0.12 | 0.50 | 0.57 | 0.05 | 0.08 | 18.65 |
| E3 | 0.735 | 0.034 | 0.495 | 0.46 | 0.24 | 0.50 | 0.41 | 0.20 | 0.50 | 0.04 | 8.99 |
| F3 | 0.237 | 0.606 | 0.823 | 0.51 | 0.62 | 0.95 | 0.50 | 0.62 | 1.01 | 0.03 | 4.60 |
| A4 | 0.817 | 0.870 | 0.910 | 0.89 | 0.96 | 1.08 | 0.95 | 1.00 | 1.09 | 0.04 | 4.15 |
| B4 | 0.603 | 0.654 | 0.671 | 0.68 | 0.73 | 0.81 | 0.65 | 0.69 | 0.74 | 0.05 | 7.09 |
| C4 | 0.380 | 0.428 | 0.430 | 0.44 | 0.47 | 0.52 | 0.38 | 0.41 | 0.44 | 0.07 | 16.07 |
| D4 | 0.214 | 0.241 | 0.234 | 0.24 | 0.26 | 0.28 | 0.21 | 0.22 | 0.24 | 0.04 | 18.12 |
| E4 | 0.107 | 0.108 | 0.101 | 0.11 | 0.12 | 0.12 | 0.10 | 0.10 | 0.11 | 0.02 | 14.46 |
| F4 | 0.016 | 0.035 | 0.022 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.04 | 0.01 | 17.28 |

| | Mean | Median | 95% | Max |
|---|---|---|---|---|
| RMSE | 0.034 | 0.034 | 0.077 | 0.082 |
| Percentage difference | 10.88 | 10.88 | 18.57 | 24.69 |

| Patch | R (measured) | G (measured) | B (measured) | L (measured) | a (measured) | b (measured) | L (reference) | a (reference) | b (reference) | ∆E00 |
|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.173 | 0.078 | 0.064 | 38.95 | 17.14 | 12.65 | 41.23 | 16.55 | 11.79 | 2.09 |
| B1 | 0.519 | 0.327 | 0.298 | 69.59 | 14.44 | 10.27 | 72.58 | 18.37 | 14.17 | 3.84 |
| C1 | 0.114 | 0.149 | 0.270 | 48.34 | 0.60 | −20.29 | 48.39 | −7.26 | −19.36 | 7.27 |
| D1 | 0.041 | 0.151 | 0.016 | 43.29 | −31.34 | 34.64 | 41.93 | −37.07 | 35.23 | 2.63 |
| E1 | 0.428 | 0.339 | 0.660 | 71.27 | 15.12 | −24.92 | 72.11 | 14.68 | −27.82 | 1.66 |
| F1 | 0.191 | 0.485 | 0.444 | 74.10 | −24.40 | −2.71 | 79.21 | −32.76 | −2.98 | 5.26 |
| A2 | 0.693 | 0.206 | 0.039 | 62.54 | 38.88 | 64.91 | 66.36 | 36.53 | 61.82 | 3.30 |
| B2 | 0.100 | 0.157 | 0.649 | 52.96 | 14.20 | −54.92 | 52.18 | 6.59 | −54.09 | 4.88 |
| C2 | 0.545 | 0.080 | 0.161 | 51.04 | 56.33 | 9.02 | 53.38 | 54.42 | 14.97 | 3.96 |
| D2 | 0.062 | 0.031 | 0.352 | 32.12 | 30.87 | −57.53 | 29.46 | 45.42 | −57.34 | 7.91 |
| E2 | 0.463 | 0.742 | 0.190 | 87.86 | −26.02 | 47.85 | 88.56 | −35.82 | 46.06 | 4.80 |
| F2 | 0.630 | 0.318 | 0.054 | 68.96 | 17.36 | 59.98 | 71.97 | 23.75 | 62.02 | 4.28 |
| A3 | 0.014 | 0.010 | 0.492 | 29.03 | 39.16 | −79.11 | 22.95 | 61.04 | −86.92 | 9.37 |
| B3 | 0.033 | 0.238 | 0.011 | 52.06 | −43.03 | 44.93 | 53.39 | −67.75 | 42.32 | 8.33 |
| C3 | 0.845 | 0.008 | 0.021 | 51.06 | 94.49 | 118.79 | 48.76 | 77.63 | 58.60 | 15.94 |
| D3 | 0.598 | 0.638 | 0.009 | 85.98 | −18.20 | 80.88 | 80.20 | −10.32 | 92.36 | 6.62 |
| E3 | 0.735 | 0.034 | 0.495 | 56.19 | 80.58 | −30.03 | 51.94 | 85.25 | −37.50 | 4.68 |
| F3 | 0.237 | 0.606 | 0.823 | 82.88 | −19.65 | −20.89 | 83.03 | −22.34 | −24.48 | 1.90 |
| A4 | 0.817 | 0.870 | 0.910 | 98.28 | −2.97 | −2.58 | 100.00 | 0.00 | 0.00 | 4.72 |
| B4 | 0.603 | 0.654 | 0.671 | 88.40 | −3.29 | −1.26 | 86.33 | −0.41 | 0.43 | 4.36 |
| C4 | 0.380 | 0.428 | 0.430 | 74.39 | −4.13 | −0.43 | 69.85 | −0.29 | −0.13 | 6.08 |
| D4 | 0.214 | 0.241 | 0.234 | 58.43 | −3.54 | 0.83 | 54.04 | −0.28 | 0.00 | 5.99 |
| E4 | 0.107 | 0.108 | 0.101 | 41.28 | −0.89 | 2.39 | 38.27 | −0.22 | −0.15 | 3.69 |
| F4 | 0.016 | 0.035 | 0.022 | 21.62 | −11.48 | 5.61 | 21.14 | 0.22 | −0.61 | 13.35 |

| | Mean | Median | 95% | Max |
|---|---|---|---|---|
| ∆E | 5.70 | 4.76 | 12.75 | 15.94 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Riza, N.A.; Ashraf, N. First Demonstration of Calibrated Color Imaging by the CAOS Camera. Photonics 2021, 8, 538. https://doi.org/10.3390/photonics8120538
