Three-Dimensional Laser Imaging with a Variable Scanning Spot and Scanning Trajectory

Abstract

1. Introduction

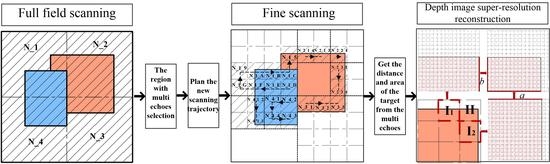

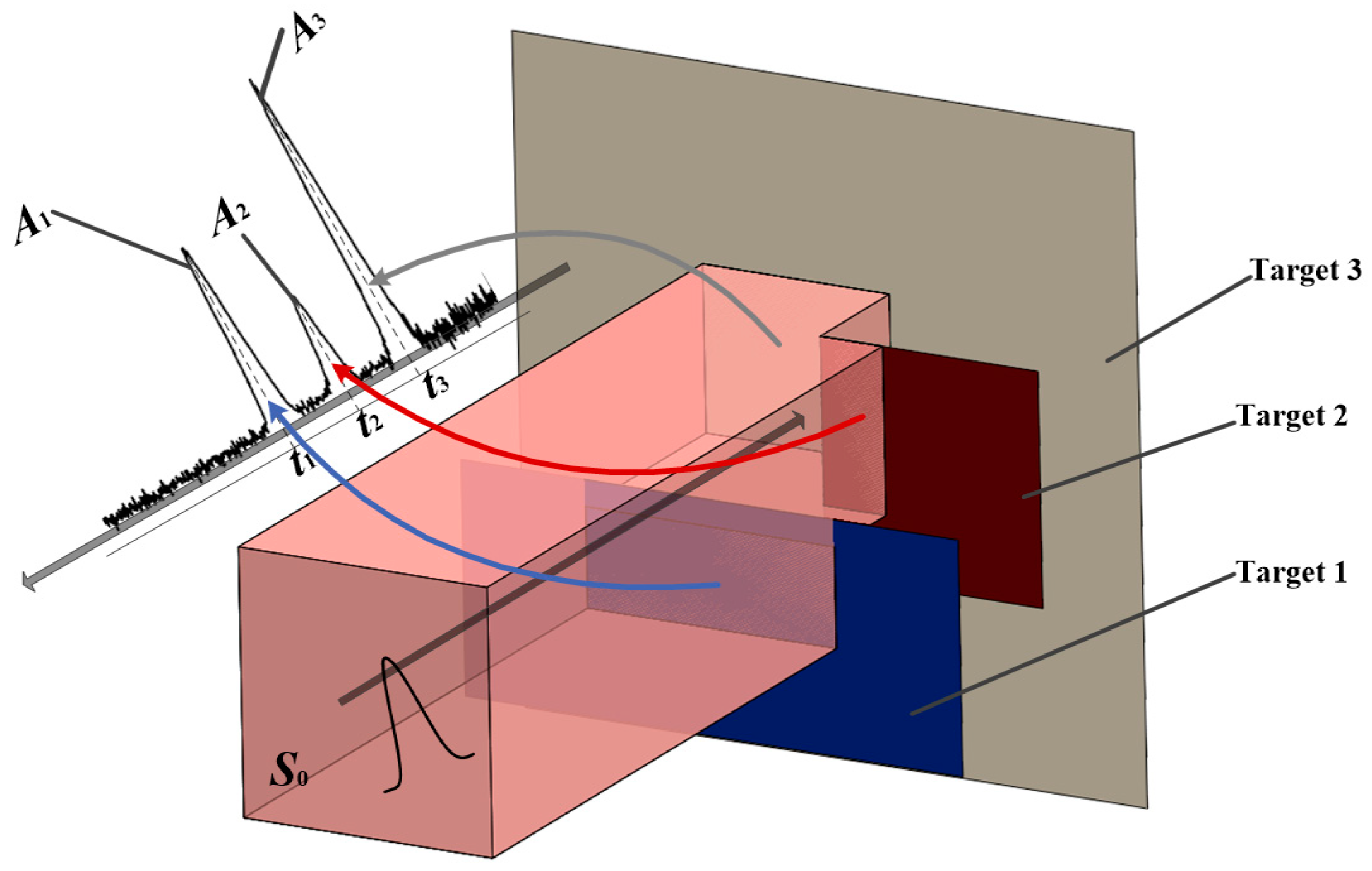

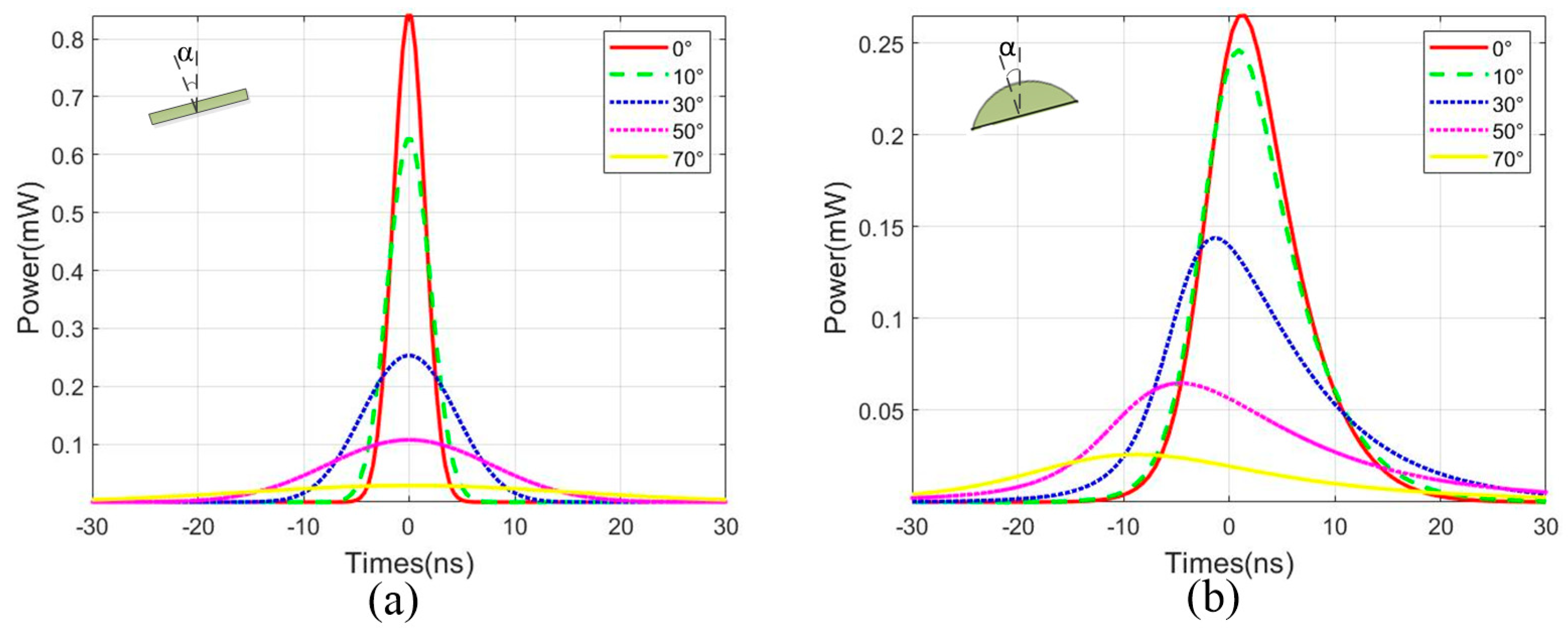

2. Multi Echoes in 3D Laser Imaging

3. Scanning Strategy and Depth Image Reconstruction

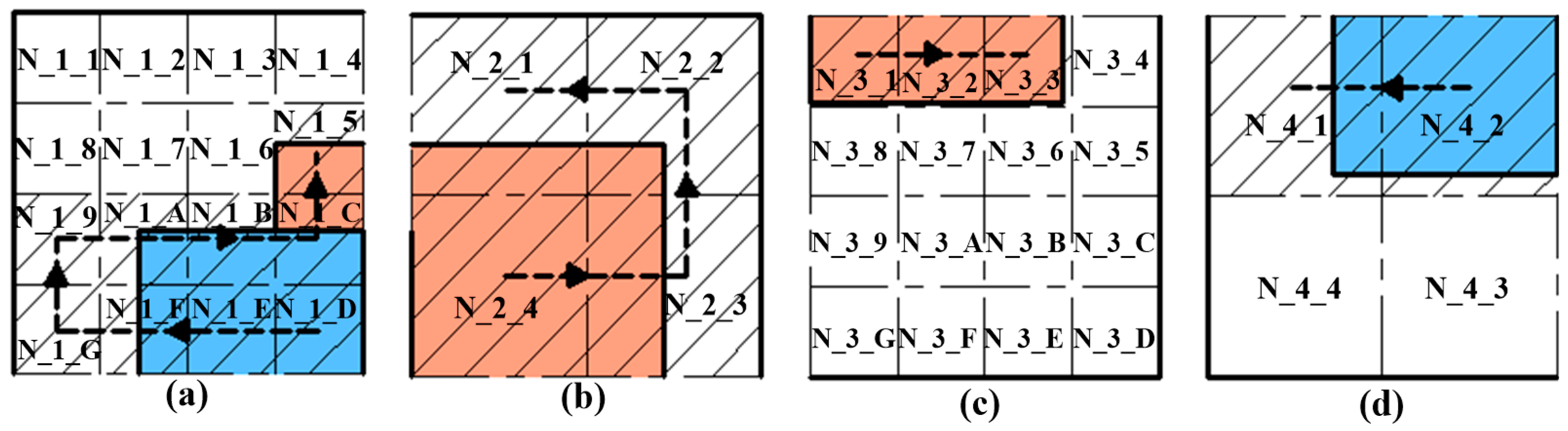

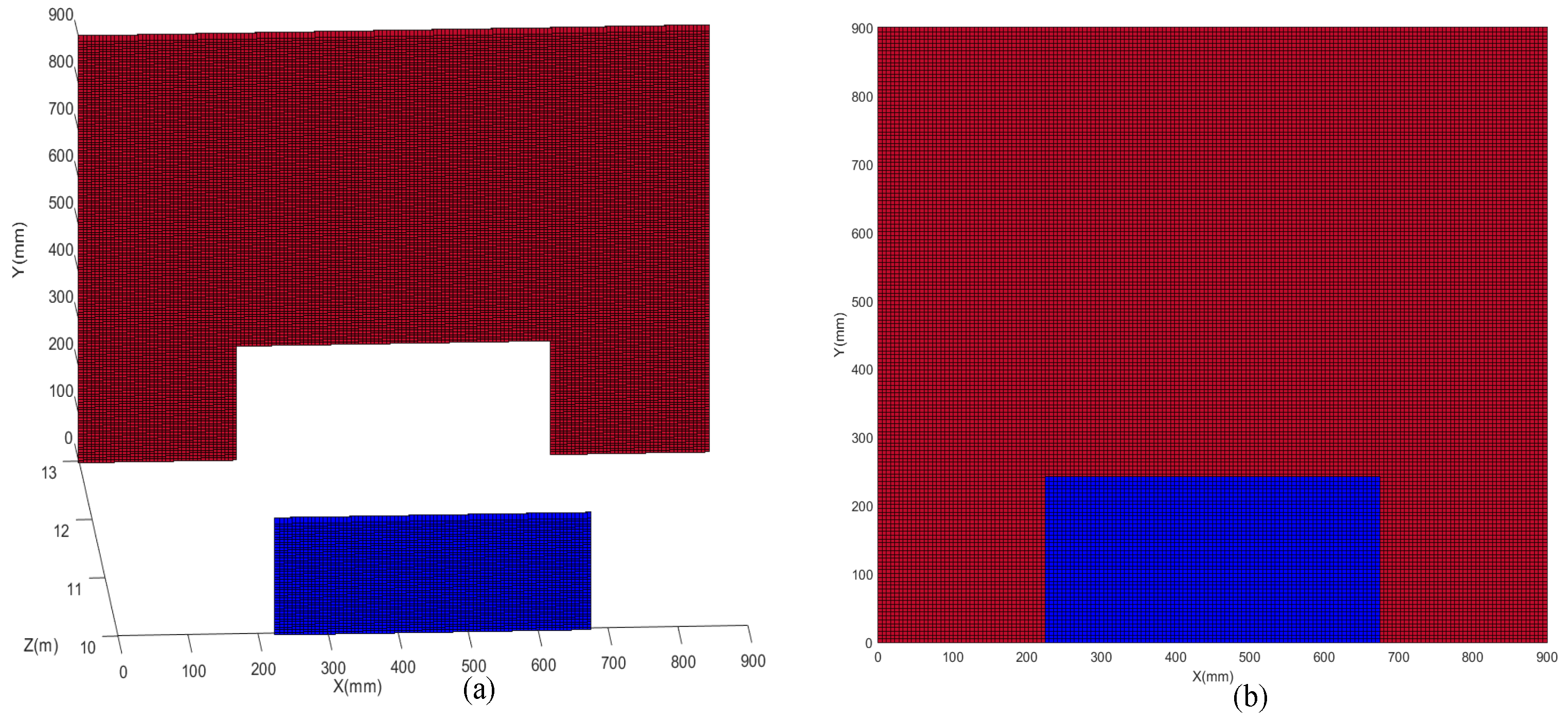

3.1. Scanning Strategy of Variable Scanning Spot and Scanning Trajectory

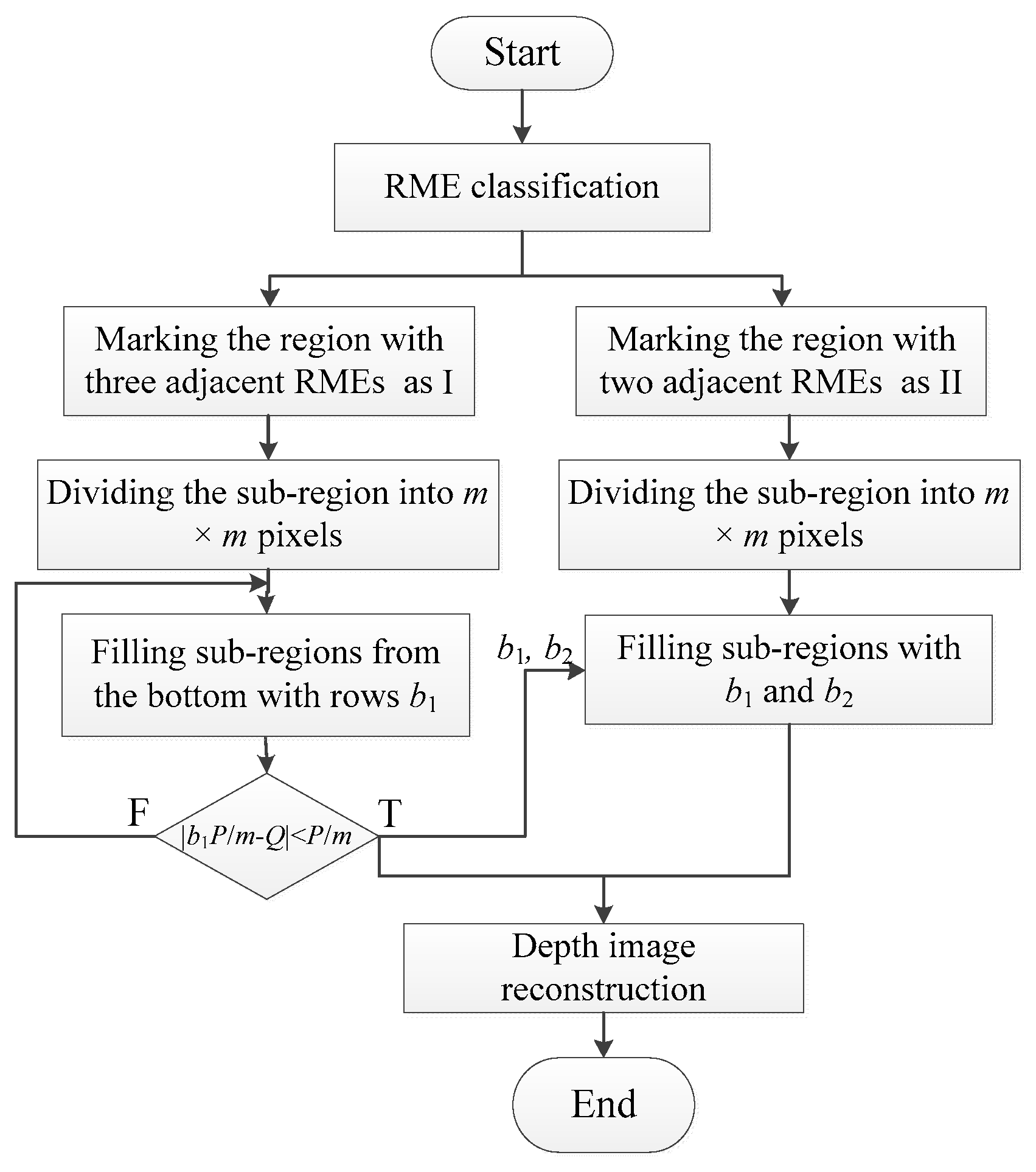

3.2. Super-Resolution Reconstruction of Depth Image

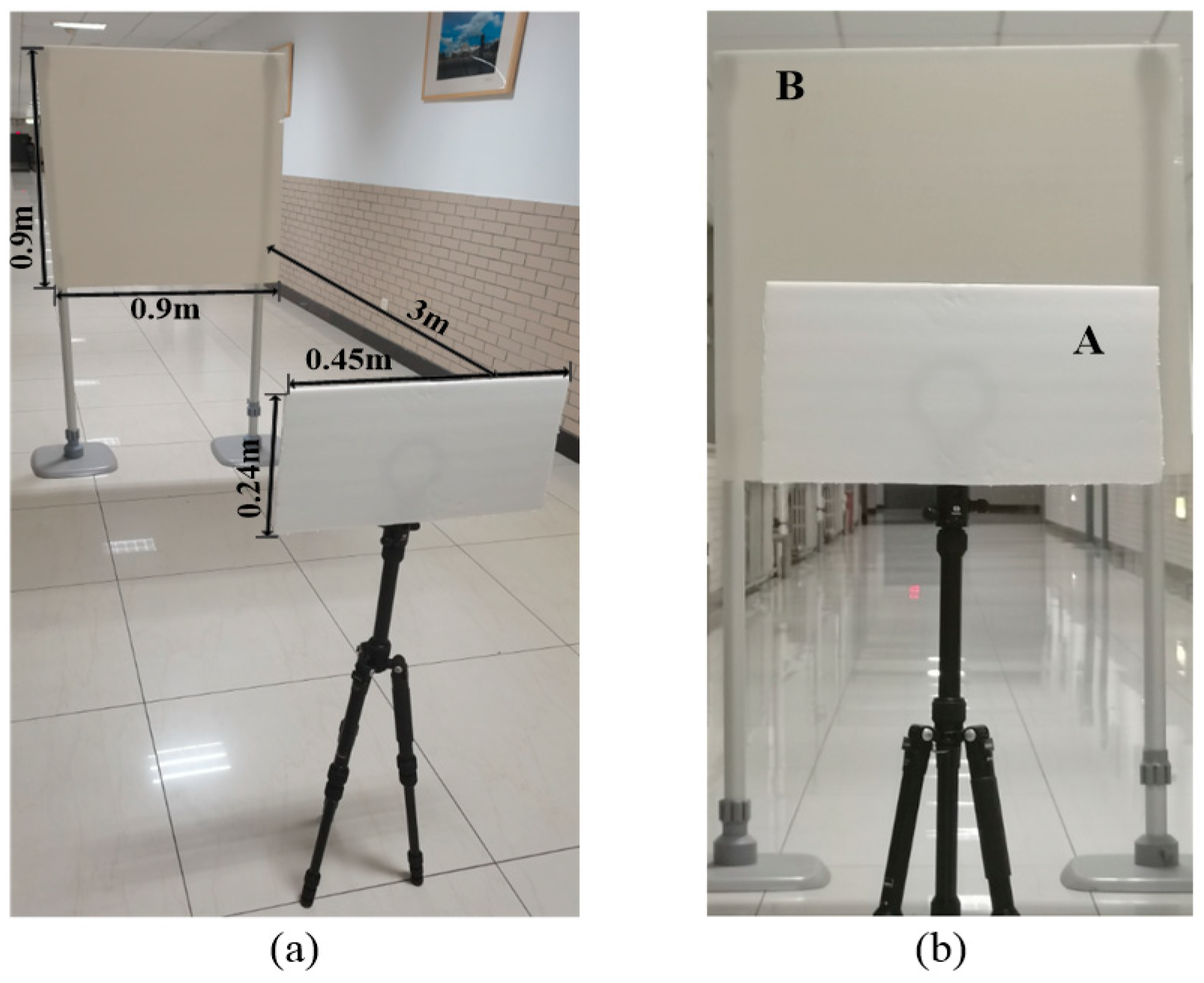

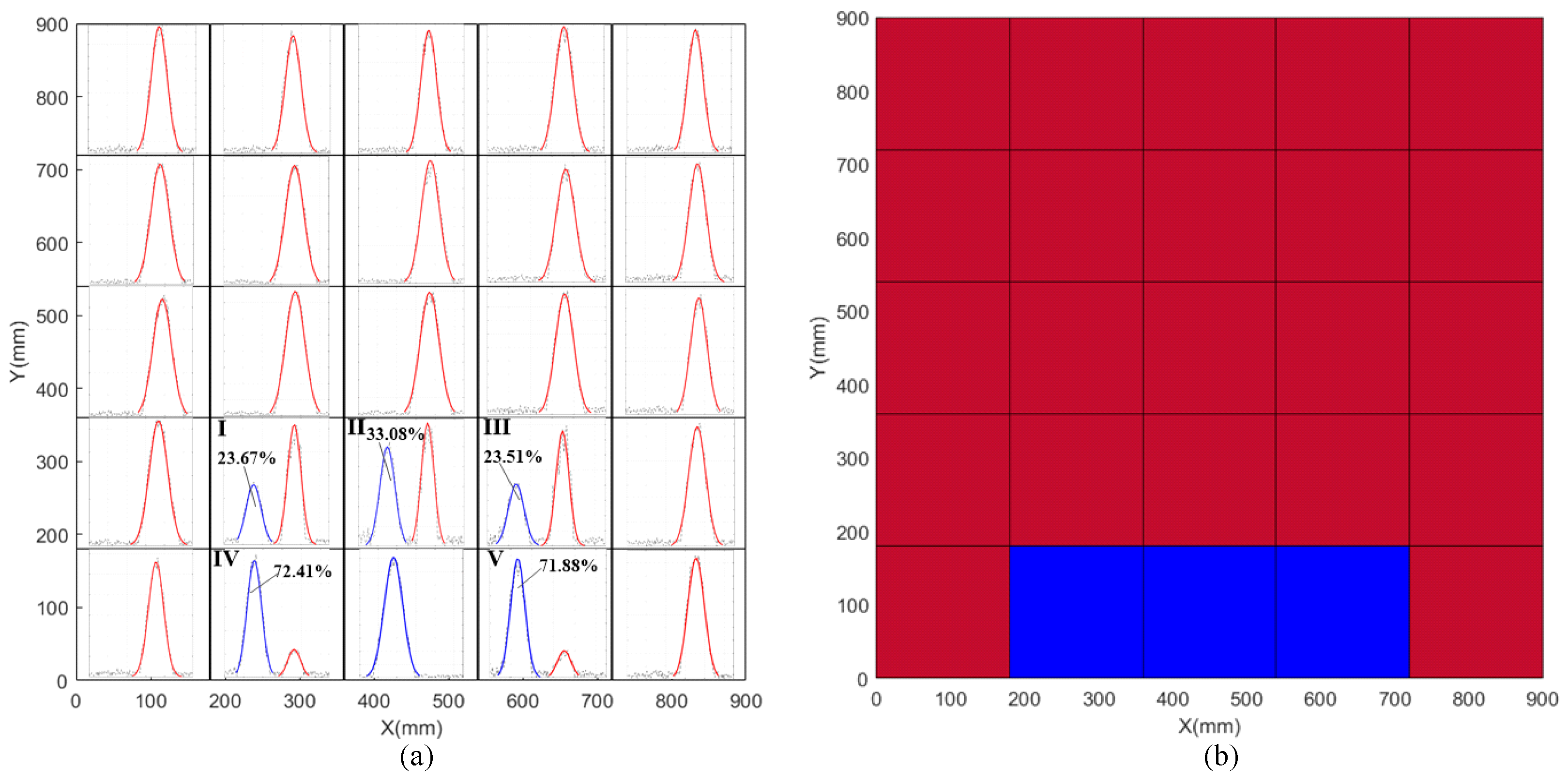

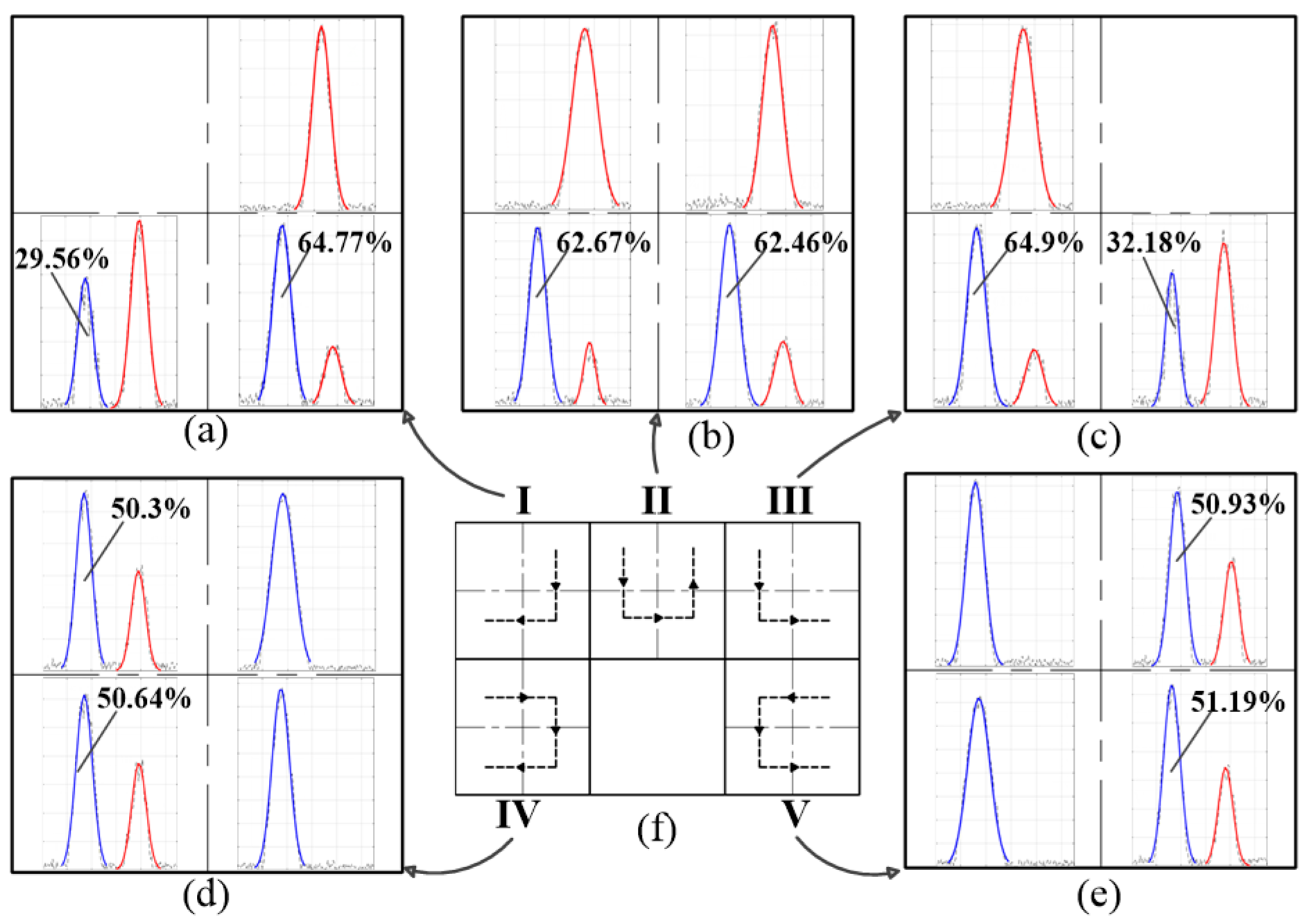

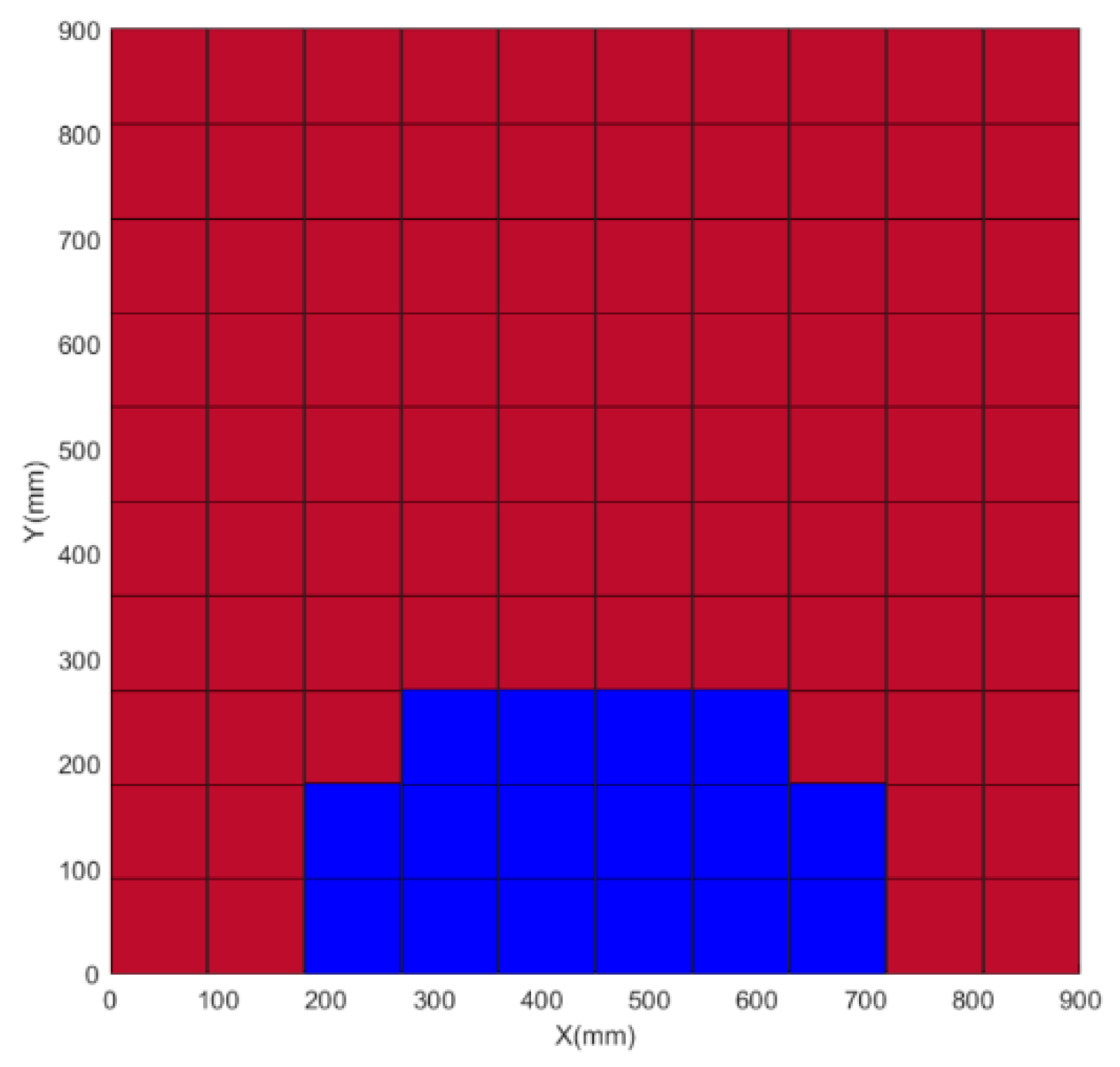

4. Experiment and Results

5. Discussion

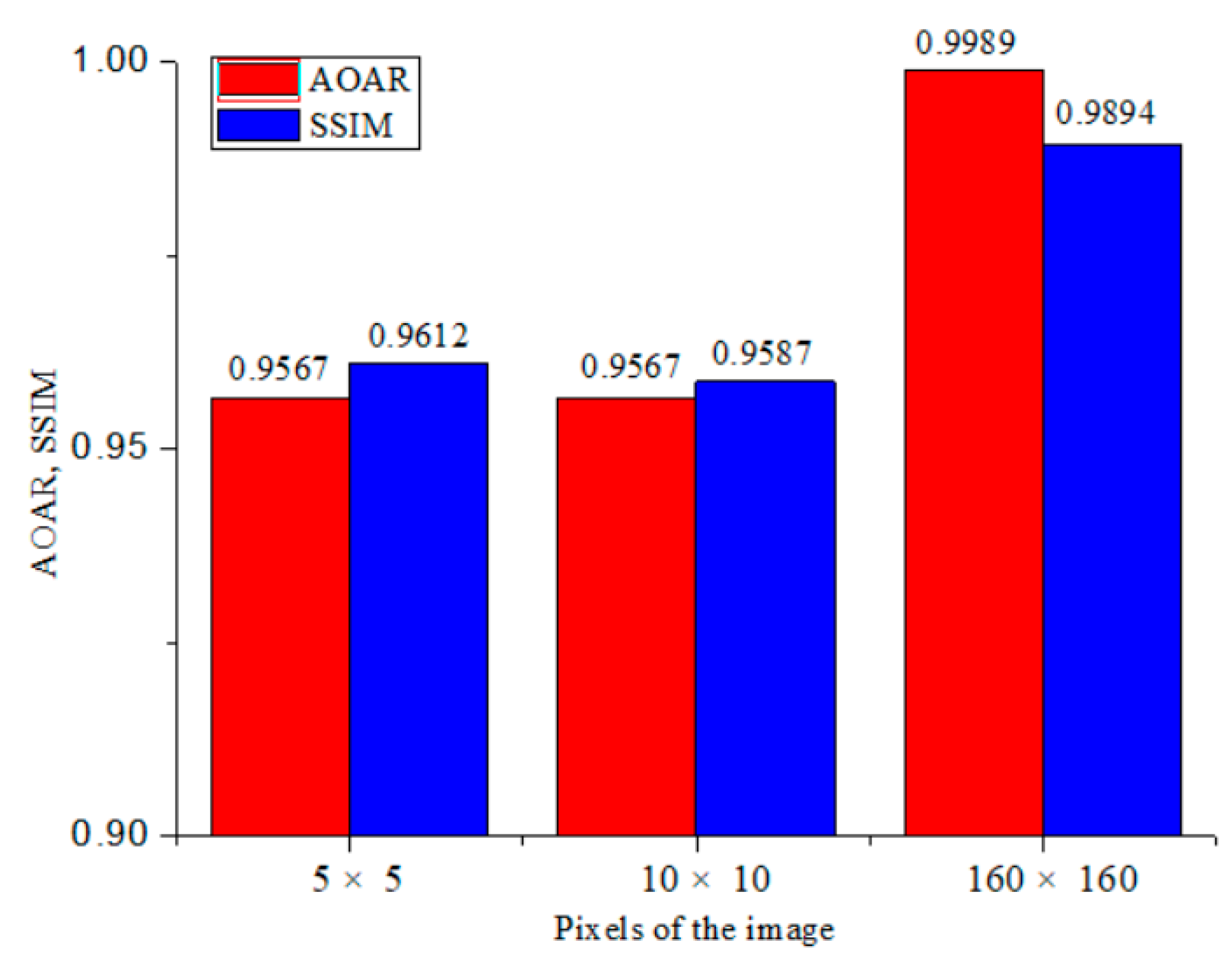

- When the target is scanned with a large scanning spot, each spot covers a large area of the target, which results in many RMEs. Because each RME is then represented by only one pixel, it cannot be represented accurately. Therefore, the AOAR and SSIM of the reconstructed depth image with 5 × 5 pixels are relatively low.

- When the scanning spot is reduced in size and the RMEs are scanned more precisely with the new scanning spot, the number of RMEs decreases. However, because of the smaller scanning spot and the new scanning trajectory, the PAET at the edges changes, so the RMEs at the edges cannot be reconstructed accurately. As shown in Figure 12, the edge of the blue region is missing, which lowers the SSIM of the reconstructed depth image with 10 × 10 pixels.

- When super-resolution reconstruction is applied to the depth image, each RME is reconstructed more accurately by segmenting it and filling the resulting sub-regions. Therefore, the reconstructed depth image with 160 × 160 pixels is consistent with the real depth image in terms of AOAR and SSIM.
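The bullets above compare depth images of different sizes (5 × 5, 10 × 10 and 160 × 160 pixels) with the real depth image using SSIM. The following is a minimal pure-Python sketch of such a comparison under stated assumptions: the coarse image is brought onto the reference grid by nearest-neighbour upsampling (a hypothetical choice; the comparison procedure is not described in this section), and SSIM (Wang et al., 2004) is averaged over uniform 7 × 7 windows with population statistics and the usual constants K1 = 0.01, K2 = 0.03, instead of the 11 × 11 Gaussian window of the original SSIM paper. AOAR is not sketched because its definition does not appear here. The names `upsample_nearest` and `ssim`, and the synthetic depth maps, are illustrative only.

```python
"""Minimal SSIM comparison of depth images on a common grid (illustrative sketch)."""


def upsample_nearest(img, factor):
    """Hypothetical helper: repeat each pixel into a factor x factor block."""
    return [[row[c // factor] for c in range(len(row) * factor)]
            for row in img
            for _ in range(factor)]


def _window_stats(a, b):
    """Means, population variances and covariance of two equal-length lists."""
    n = len(a)
    mu_a = sum(a) / n
    mu_b = sum(b) / n
    var_a = sum((u - mu_a) * (u - mu_a) for u in a) / n
    var_b = sum((v - mu_b) * (v - mu_b) for v in b) / n
    cov = sum((u - mu_a) * (v - mu_b) for u, v in zip(a, b)) / n
    return mu_a, mu_b, var_a, var_b, cov


def ssim(x, y, data_range, win=7, k1=0.01, k2=0.03):
    """Mean SSIM over all win x win windows (uniform weights, not Gaussian)."""
    rows, cols = len(x), len(x[0])
    if rows != len(y) or cols != len(y[0]):
        raise ValueError("images must share the same grid")
    win = min(win, rows, cols)
    c1 = (k1 * data_range) ** 2  # stabilises the luminance term
    c2 = (k2 * data_range) ** 2  # stabilises the contrast/structure term
    scores = []
    for r in range(rows - win + 1):
        for c in range(cols - win + 1):
            a = [x[i][j] for i in range(r, r + win) for j in range(c, c + win)]
            b = [y[i][j] for i in range(r, r + win) for j in range(c, c + win)]
            mu_a, mu_b, var_a, var_b, cov = _window_stats(a, b)
            num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
            den = (mu_a * mu_a + mu_b * mu_b + c1) * (var_a + var_b + c2)
            scores.append(num / den)
    return sum(scores) / len(scores)


# Hypothetical 20 x 20 depth map (metres): a 10 m plane with a square region at 12 m.
truth = [[12.0 if 5 <= i < 15 and 5 <= j < 15 else 10.0 for j in range(20)]
         for i in range(20)]
# Hypothetical 5 x 5 coarse scan: one depth sample per 4 x 4 block.
coarse = [[truth[4 * i][4 * j] for j in range(5)] for i in range(5)]

print(ssim(truth, truth, data_range=2.0))                        # 1.0
print(ssim(truth, upsample_nearest(coarse, 4), data_range=2.0))  # below 1.0
```

The coarse image places the square's edges on the wrong block boundaries, so its SSIM falls below 1, loosely mirroring the edge losses discussed above. For full-size 160 × 160 images, a vectorised implementation such as scikit-image's `skimage.metrics.structural_similarity` is the practical choice; the loops here only make the formula explicit.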

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| System Parameters | Values |
|---|---|
| Wavelength | 532 nm |
| Laser Repetition Rate | 10 kHz |
| Laser Pulse Width | 1.5 ns |
| Average Output Power | 500 mW |
| Receiving Lens | f = 50 mm |
| Emission Lens | f = 35 mm / f = 45 mm |
| Photosensitive Surface of APD | 3 × 3 mm² |
| Scanning Speed | 1 s/point |
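The table is enough to check a few derived quantities relevant to multiple echoes and scan time. The sketch below uses textbook relations rather than anything stated in the paper: range resolution ≈ cτ/2 (echoes closer than this in range overlap in time for a pulse width τ), pulse energy = average power / repetition rate, peak power ≈ energy / pulse width (assuming a rectangular pulse), and raster time = N² × dwell time (assuming a uniform N × N raster at the tabulated 1 s/point, for comparison only). All function names are hypothetical.

```python
"""Back-of-envelope quantities derived from the system-parameter table (sketch)."""

C = 299_792_458.0  # speed of light in vacuum, m/s

# Values from the system-parameter table.
PULSE_WIDTH_S = 1.5e-9   # laser pulse width, 1.5 ns
REP_RATE_HZ = 10e3       # laser repetition rate, 10 kHz
AVG_POWER_W = 0.5        # average output power, 500 mW
DWELL_S_PER_POINT = 1.0  # scanning speed, 1 s/point


def range_resolution_m(pulse_width_s: float) -> float:
    """Rule of thumb: echoes closer than c*tau/2 in range overlap in time."""
    return C * pulse_width_s / 2


def pulse_energy_j(avg_power_w: float, rep_rate_hz: float) -> float:
    """Energy per pulse = average power / repetition rate."""
    return avg_power_w / rep_rate_hz


def peak_power_w(energy_j: float, pulse_width_s: float) -> float:
    """Assumes a rectangular pulse of the stated width (an approximation)."""
    return energy_j / pulse_width_s


def raster_time_s(n: int, dwell_s: float) -> float:
    """Time for a uniform n x n raster at a fixed dwell time per point (assumption)."""
    return n * n * dwell_s


if __name__ == "__main__":
    energy = pulse_energy_j(AVG_POWER_W, REP_RATE_HZ)
    print(f"range resolution ~ {range_resolution_m(PULSE_WIDTH_S):.3f} m")  # 0.225 m
    print(f"pulse energy ~ {energy * 1e6:.1f} uJ")                          # 50.0 uJ
    print(f"peak power ~ {peak_power_w(energy, PULSE_WIDTH_S) / 1e3:.1f} kW")  # 33.3 kW
    for n in (5, 10, 160):
        print(f"{n} x {n} raster: {raster_time_s(n, DWELL_S_PER_POINT):.0f} s")
```

With the tabulated values this gives roughly 0.225 m range resolution, 50 µJ per pulse and about 33 kW peak power. A uniform raster at 1 s/point takes 25 s for 5 × 5 and 100 s for 10 × 10 points, while a direct 160 × 160 raster would take 25,600 s (about 7.1 h).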

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, A.; Cao, J.; Cheng, Y.; Chen, C.; Hao, Q. Three-Dimensional Laser Imaging with a Variable Scanning Spot and Scanning Trajectory. Photonics 2021, 8, 173. https://doi.org/10.3390/photonics8060173

Chicago/Turabian Style: Yang, Ao, Jie Cao, Yang Cheng, Chuanxun Chen, and Qun Hao. 2021. "Three-Dimensional Laser Imaging with a Variable Scanning Spot and Scanning Trajectory." Photonics 8, no. 6: 173. https://doi.org/10.3390/photonics8060173