Preliminary Characterization of Robust Detection Method of Solar Cell Array for Optical Wireless Power Transmission with Differential Absorption Image Sensing

Abstract

1. Introduction

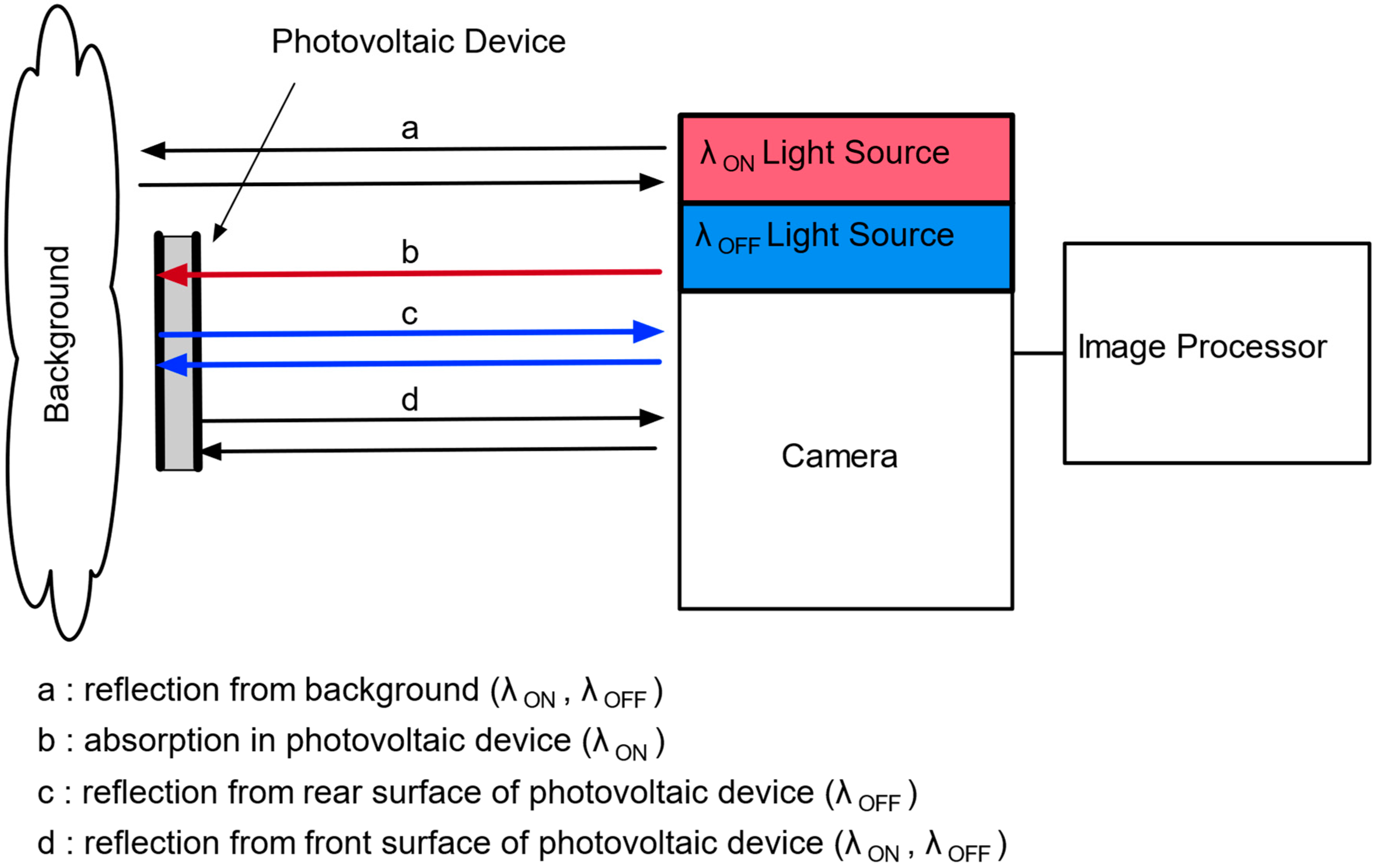

2. Principle of Differential Absorption Image Sensor

3. Detection Experiments of Simulated PV

3.1. Design of Experiments

3.2. Acquired Raw Data in the Experiment

3.3. Data Processing and Software Tools

3.4. Calibration of Experiment System

3.5. Data Processing of Acquired Image

1. First, 100 raw 640 × 480 px images of the frost glass target and 100 same-size images of the Si target are taken for each combination of the parameter set (exposure time Exp = 39, 78, …, 10,000 μsec; number of filter papers P = 0, 1, …, 20; image index i = 1, 2, …, 100).

2. These images are trimmed to each of the image sizes (48 × 49, 72 × 71, 95 × 95, 143 × 143, 237 × 237, 331 × 331, 384 × 448, 384 × 480, 640 × 480 px) and converted to grayscale.

3. For each exposure time and image index, the P = 20 (P20) data are regarded as background data, and their grayscale images are subtracted from the frost glass and Si images. This yields 100 background-free frost glass images and 100 background-free Si images.

4. One hundred differential images are generated for each combination of exposure time and number of filter papers by taking the difference in grayscale level between the background-free frost glass image and the background-free Si image.

5. The differential images are accumulated n times (n = 1, 2, …, 100).

6. The accumulated differential image is binarized by Equation (6).

7. From the image binarized by Equation (6), the connected component with the maximum area is extracted and regarded as the Si image.

8. The center coordinates are calculated as the center of the bounding rectangle of the extracted Si image.

9. The area is calculated as the area of the extracted Si image.

10. For the signal intensity, the Si portion is extracted by pixel-wise multiplication of the image and the binarized Si image, and the signal intensity is calculated as the mean intensity of the extracted Si portion. The noise intensity is calculated as the mean intensity of the P20 image of the frost glass target with the light source off. The SNR is calculated as the ratio of the signal intensity to the noise intensity. To avoid instability such as that which occurred in the estimation of the intensity reduction factor, described in Appendix A.2, the definition of SNR uses raw image data.
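
The image arithmetic in the first part of this procedure (trimming, background subtraction, differencing, and accumulation) can be sketched as follows. This is a minimal illustration in plain Python, not the authors' implementation: the helper names, the center-based trimming rule, the per-frame pairing of background images, the unclipped (signed) subtraction, and the sign convention "frost glass minus Si" are all assumptions made for the example.

```python
from typing import List

Image = List[List[float]]  # grayscale image stored as rows of pixel values


def crop_center(img: Image, height: int, width: int) -> Image:
    """Trim an image to height x width around its center (assumed trimming rule)."""
    top = (len(img) - height) // 2
    left = (len(img[0]) - width) // 2
    return [row[left:left + width] for row in img[top:top + height]]


def subtract(a: Image, b: Image) -> Image:
    """Pixel-wise a - b, kept signed (no clipping; an assumption)."""
    return [[pa - pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def differential_images(fg: List[Image], si: List[Image],
                        fg_bg: List[Image], si_bg: List[Image]) -> List[Image]:
    """Remove the P20 background from each frame, then take frost glass minus Si."""
    return [subtract(subtract(f, fb), subtract(s, sb))
            for f, s, fb, sb in zip(fg, si, fg_bg, si_bg)]


def accumulate(images: List[Image], n: int) -> Image:
    """Pixel-wise sum of the first n images."""
    acc = [[0.0] * len(images[0][0]) for _ in images[0]]
    for img in images[:n]:
        acc = [[pa + pb for pa, pb in zip(ra, rb)] for ra, rb in zip(acc, img)]
    return acc


# Synthetic 4 x 4 demo data (hypothetical values): three frames per target.
def uniform(value: float) -> Image:
    return [[value] * 4 for _ in range(4)]


si_frame = uniform(12.0)
for y in (1, 2):
    for x in (1, 2):
        si_frame[y][x] = 5.0  # the absorbing Si region appears darker

fg_frames = [uniform(12.0) for _ in range(3)]
si_frames = [[row[:] for row in si_frame] for _ in range(3)]
background = [uniform(2.0) for _ in range(3)]

diffs = differential_images(fg_frames, si_frames, background, background)
accumulated = accumulate(diffs, n=3)
trimmed = crop_center(accumulated, 2, 2)
```

In this toy data the accumulated differential image is zero outside the Si region and grows linearly with n inside it, which is the contrast that the subsequent binarization relies on.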

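The later part of the procedure (binarization, extraction of the largest connected component, and calculation of the center coordinates, area, and SNR) can be sketched in the same way. Equation (6) is not reproduced in this sketch, so Otsu's method is used here as a stand-in threshold rule, and it may differ from the actual equation. The function names, the 8-connectivity, and the synthetic pixel values are likewise assumptions for illustration only.

```python
from collections import deque


def otsu_threshold(img):
    """Threshold maximizing between-class variance (stand-in for Equation (6))."""
    values = sorted(p for row in img for p in row)
    n, total = len(values), sum(values)
    best_t, best_var, cum = values[0], -1.0, 0.0
    for k in range(1, n):
        cum += values[k - 1]              # sum of the k smallest values
        if values[k] == values[k - 1]:
            continue                      # not a boundary between gray levels
        w0, w1 = k / n, (n - k) / n
        m0, m1 = cum / k, (total - cum) / (n - k)
        var = w0 * w1 * (m0 - m1) ** 2
        if var > best_var:
            best_var, best_t = var, values[k - 1]
    return best_t


def largest_component(mask):
    """Pixels (y, x) of the largest 8-connected foreground region."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue, comp = deque([(y, x)]), []
            while queue:
                cy, cx = queue.popleft()
                comp.append((cy, cx))
                for ny in (cy - 1, cy, cy + 1):
                    for nx in (cx - 1, cx, cx + 1):
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if len(comp) > len(best):
                best = comp
    return best


# Synthetic 6 x 6 accumulated differential image (hypothetical values).
acc = [[0.0] * 6 for _ in range(6)]
for y in (2, 3):
    for x in (1, 2, 3):
        acc[y][x] = 21.0                  # Si region
acc[0][5] = 20.0                          # isolated bright pixel (spurious)

t = otsu_threshold(acc)
mask = [[p > t for p in row] for row in acc]
si_pixels = largest_component(mask)

ys = [y for y, _ in si_pixels]
xs = [x for _, x in si_pixels]
center = ((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2)  # bounding-rectangle center (x, y)
area = len(si_pixels)

# Signal: mean of a raw image over the Si pixels; noise: mean of the P20 dark image.
raw = [[50.0 if (y, x) in si_pixels else 10.0 for x in range(6)] for y in range(6)]
dark = [[2.0] * 6 for _ in range(6)]
signal = sum(raw[y][x] for y, x in si_pixels) / area
noise = sum(p for row in dark for p in row) / 36
snr = signal / noise
```

Averaging the raw image over the pixels of the extracted component is equivalent to multiplying the image by the binary mask and taking the mean over the nonzero mask area. Keeping only the largest component discards the isolated bright pixel, so it affects neither the center nor the area.
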
4. Discussion

4.1. Detectability Criteria

4.1.1. Cooperative OWPT Utilizing Fly Eye Module

4.1.2. Cooperative/Non-Cooperative OWPT without Fly Eye Module

4.2. Assessment of Detectable Range

4.3. Improvement of Detectable Range

4.3.1. Increase of Effective Diameter of Camera Optics

4.3.2. Beam Size (Beam Divergence) Control

4.4. Effect of Background

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A. Estimation of Calibration Parameters

Appendix A.1. Estimation of Parameter

Appendix A.2. Estimation of Intensity Reduction Factor

- Estimation of

- Estimation of

Appendix B. Differential Images and Binarized Images of Trimming Size 48 × 49 px, Exposure Time 10,000 μsec

Appendix C. Determination Error of X, Y Center Coordinates and Area (48 × 49 px Trimming Size)

Appendix D. Derivation of Equation (10)

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Asaba, K.; Moriyama, K.; Miyamoto, T. Preliminary Characterization of Robust Detection Method of Solar Cell Array for Optical Wireless Power Transmission with Differential Absorption Image Sensing. Photonics 2022, 9, 861. https://doi.org/10.3390/photonics9110861
