An Unknown Hidden Target Localization Method Based on Data Decoupling in Complex Scattering Media

Abstract

1. Introduction

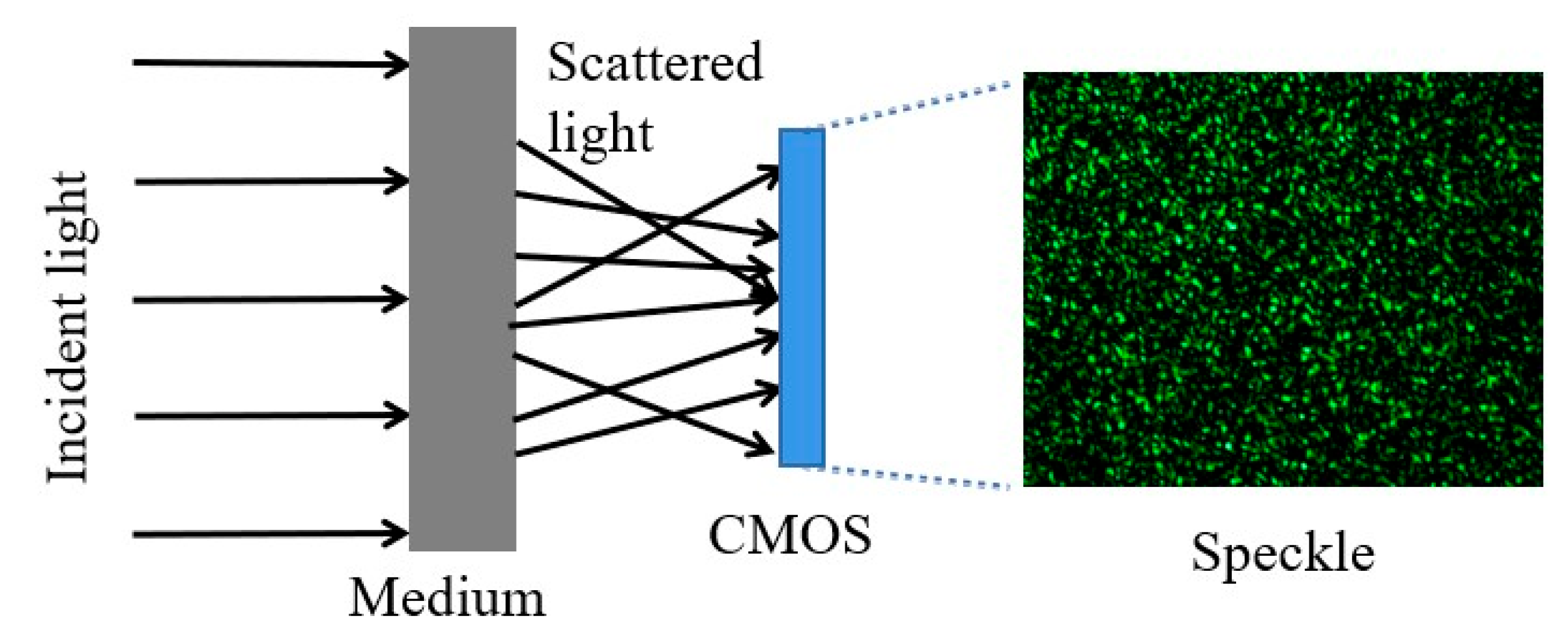

2. Principle

2.1. Theory

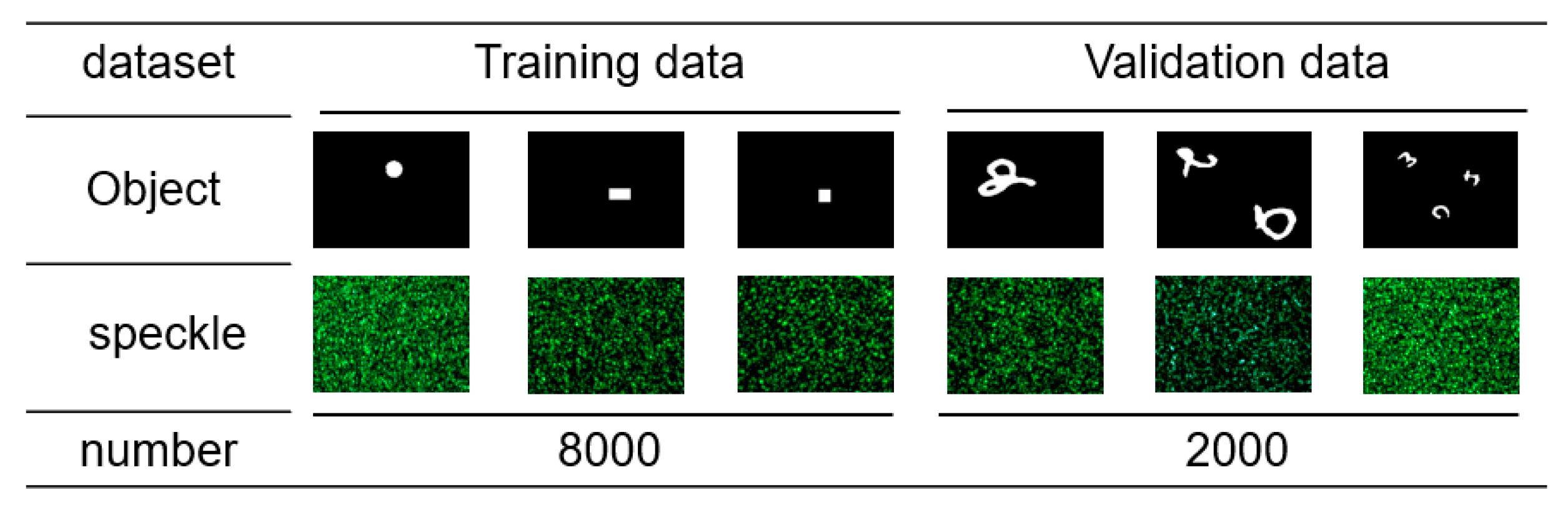

2.2. Data Setup

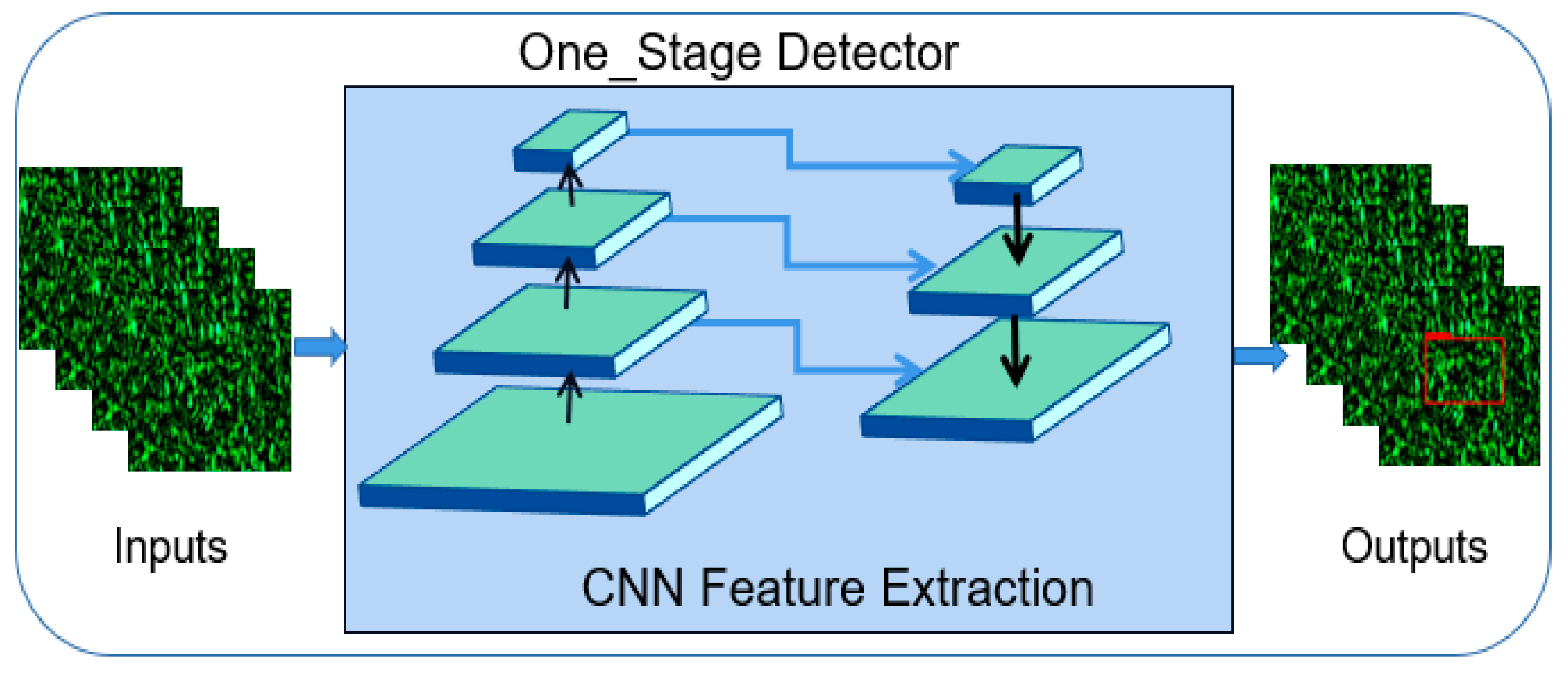

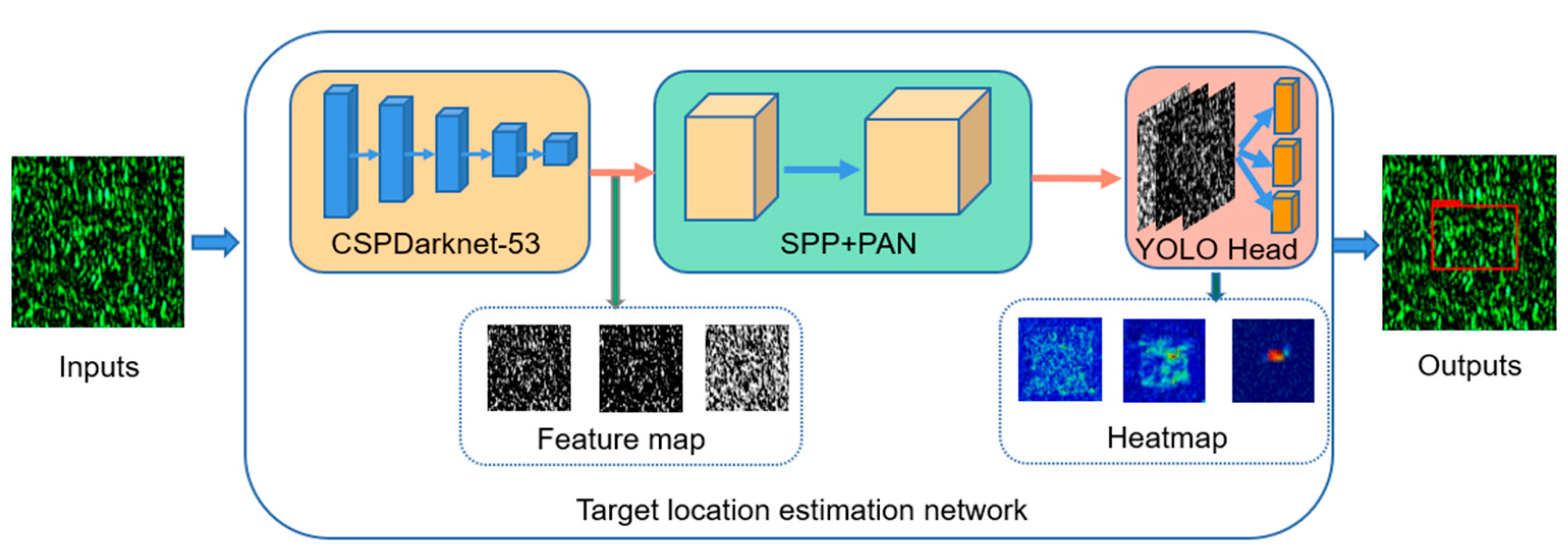

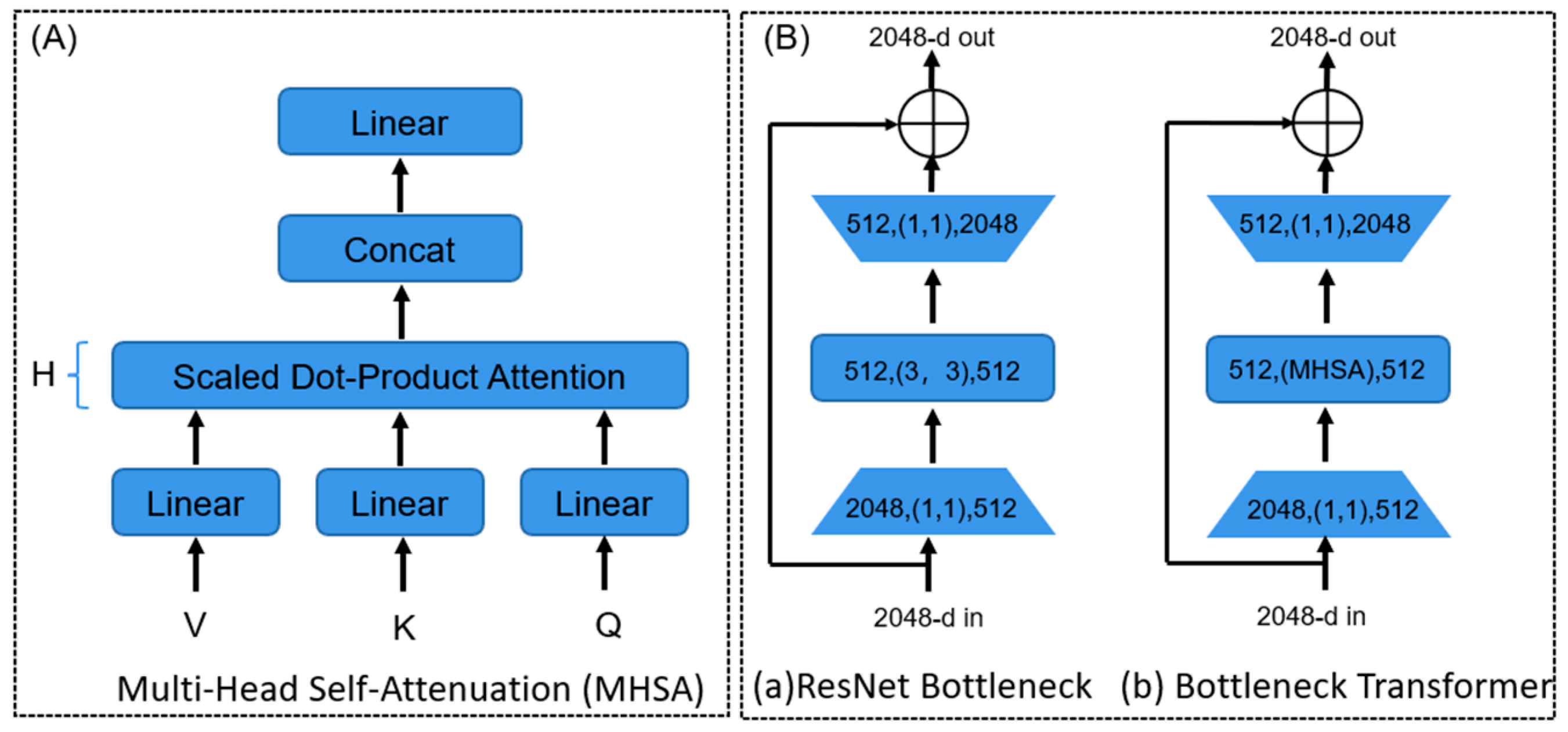

2.3. Model Design

3. Results and Analysis

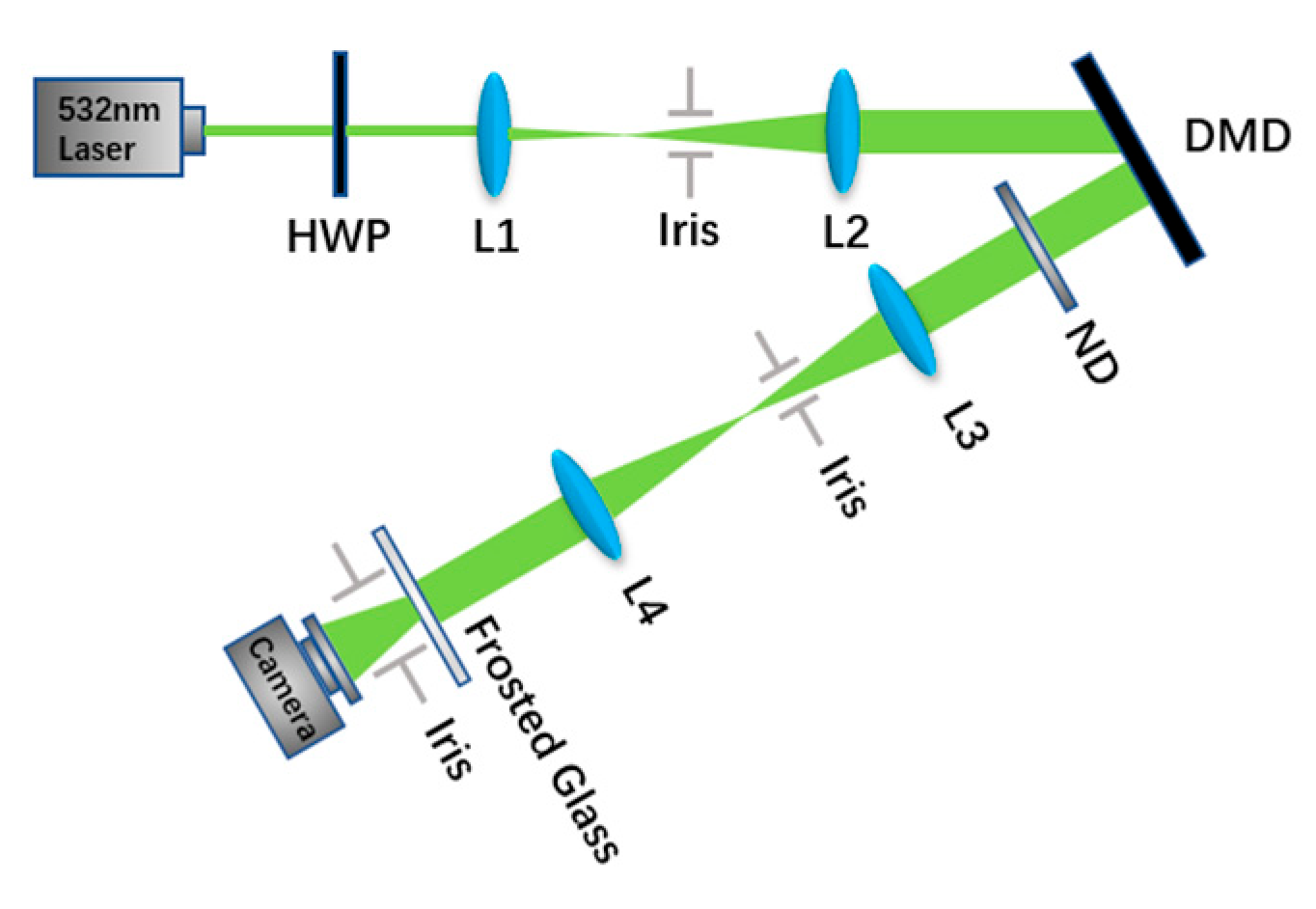

3.1. Optical Imaging System

3.2. Data Preprocessing and Model Training

3.3. Evaluation Indices

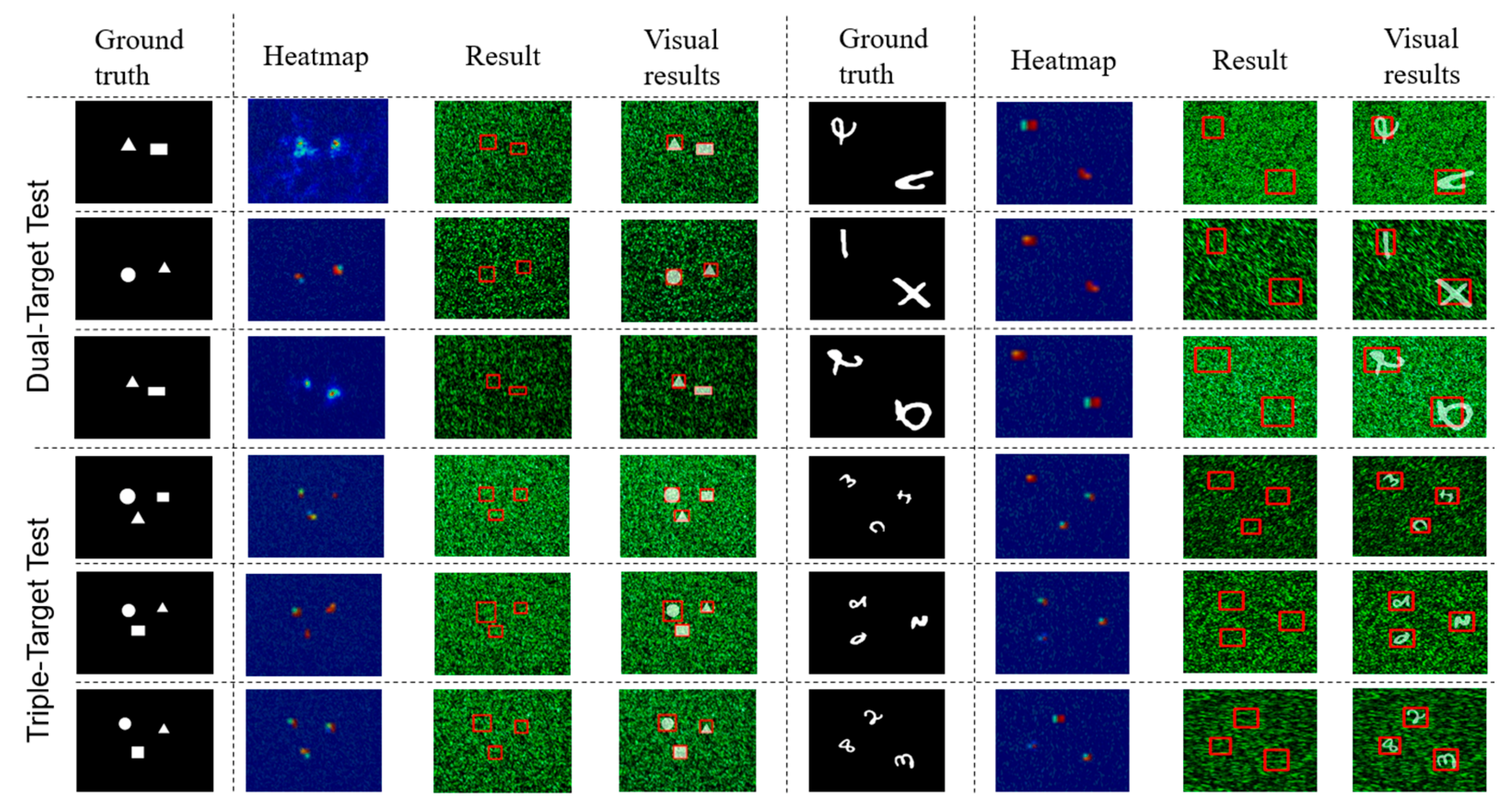

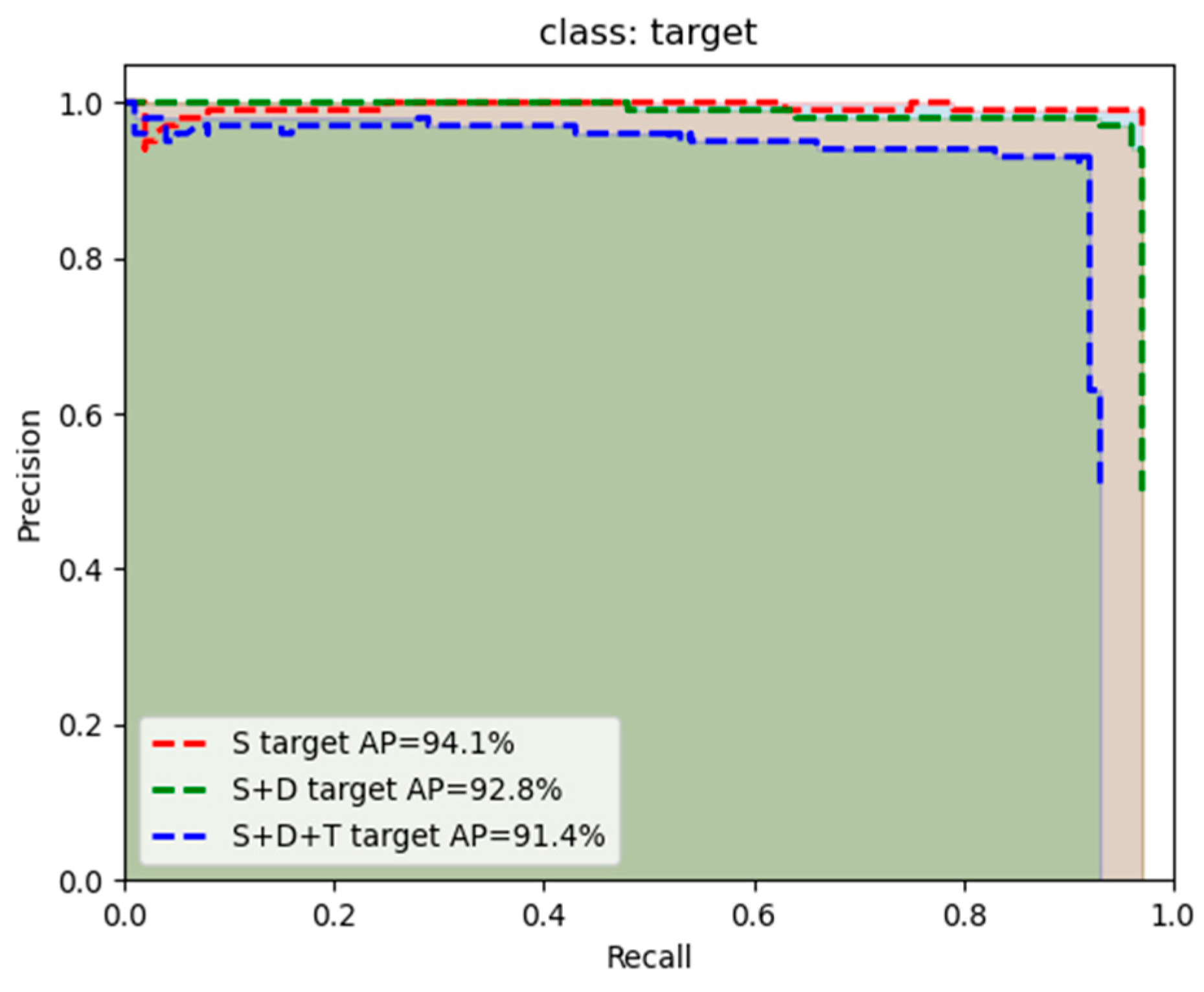

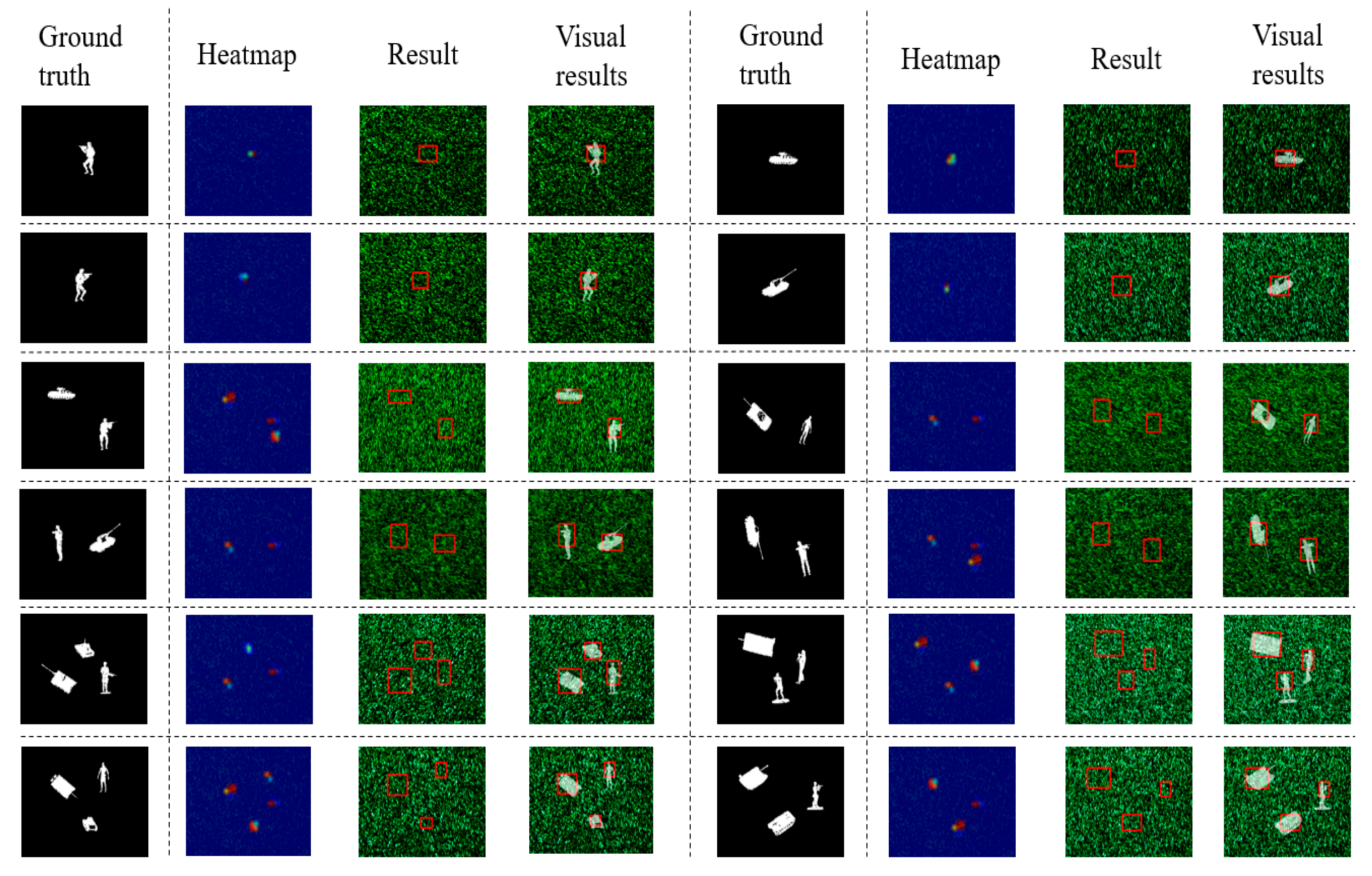
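This copy does not preserve how the F1, AP and mAP values in the result tables are defined. The sketch below assumes the standard object-detection definitions:

- precision = TP/(TP + FP)
- recall = TP/(TP + FN)
- F1 is the harmonic mean of precision and recall
- AP is the area under the all-point-interpolated precision-recall curve
- mAP is the mean of per-class AP

The function names are hypothetical. The authors' IoU matching threshold and interpolation scheme are not stated in this text, so detections are assumed to be already labelled as true or false positives.

```python
"""Hedged sketch of standard detection metrics (precision, recall, F1, AP, mAP).

Assumption: each detection has already been matched to ground truth (e.g. by an
IoU threshold) and flagged as a true or false positive. The paper's exact
matching rule and AP interpolation are not given in this copy.
"""
from typing import Dict, List, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from raw counts; 0.0 where undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP (area under the precision-recall curve).

    scores: confidence of each detection; is_tp: whether it matched a target;
    num_gt: number of ground-truth targets of this class.
    """
    if num_gt == 0:
        return 0.0
    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    recalls: List[float] = []
    precisions: List[float] = []
    tp_cum = fp_cum = 0
    for i in order:
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / num_gt)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Pad the curve, then make precision non-increasing from right to left.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas wherever recall increases.
    return sum(
        (mrec[k] - mrec[k - 1]) * mpre[k]
        for k in range(1, len(mrec))
        if mrec[k] != mrec[k - 1]
    )


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP: the mean of per-class AP values (0.0 if there are no classes)."""
    return sum(ap_per_class.values()) / len(ap_per_class) if ap_per_class else 0.0
```

For example, `average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)` gives 5/6 ≈ 0.833. The false positive at rank 2 lowers precision before the second target is recalled. Multiplying these fractions by 100 gives percentages in the form used by the tables.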

3.4. Experimental Results and Analysis

3.5. Ablation Experiments

3.6. Supplementary Experiments

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Imaging Domain | Main Dataset Name | Quantity |
|---|---|---|
| Object Detection | MS COCO | 300,000+ |
| Image Classification | Fashion-MNIST | 70,000+ |
| Image Segmentation | PASCAL VOC | 33,043+ |

| Test Set | F1/% | AP/% | mAP/% |
|---|---|---|---|
| S Target Test | 92.3 | 94.1 | 94.1 |
| S + D Target Test | 91.0 | 92.8 | 92.8 |
| S + D + T Target Test | 90.2 | 91.4 | 91.4 |

| Model | Params [M] | F1 [%] | AP [%] | mAP [%] |
|---|---|---|---|---|
| YOLO V4 | 64.36 | 92.3 | 94.1 | 94.1 |
| MHSA + YOLO V4 | 66.12 | 95.2 | 97.3 | 97.3 |

| Test Set | F1/% | AP/% | mAP/% |
|---|---|---|---|
| S Target Test | 91.2 | 92.6 | 92.6 |
| S + D Target Test | 90.1 | 91.3 | 91.3 |
| S + D + T Target Test | 89.8 | 90.2 | 90.2 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, C.; Zhuang, J.; Ye, S.; Liu, W.; Yuan, Y.; Zhang, H.; Xiao, J. An Unknown Hidden Target Localization Method Based on Data Decoupling in Complex Scattering Media. Photonics 2022, 9, 956. https://doi.org/10.3390/photonics9120956

Wang C, Zhuang J, Ye S, Liu W, Yuan Y, Zhang H, Xiao J. An Unknown Hidden Target Localization Method Based on Data Decoupling in Complex Scattering Media. Photonics. 2022; 9(12):956. https://doi.org/10.3390/photonics9120956

Chicago/Turabian Style: Wang, Chen, Jiayan Zhuang, Sichao Ye, Wei Liu, Yaoyao Yuan, Hongman Zhang, and Jiangjian Xiao. 2022. "An Unknown Hidden Target Localization Method Based on Data Decoupling in Complex Scattering Media." Photonics 9, no. 12: 956. https://doi.org/10.3390/photonics9120956

APA Style: Wang, C., Zhuang, J., Ye, S., Liu, W., Yuan, Y., Zhang, H., & Xiao, J. (2022). An Unknown Hidden Target Localization Method Based on Data Decoupling in Complex Scattering Media. Photonics, 9(12), 956. https://doi.org/10.3390/photonics9120956