Abstract

Photonic neural network chips have been widely studied because of their low power consumption, high speed and large bandwidth. By encoding data in both the amplitude and the phase of light, photonic chips can accelerate complex-valued neural network computations. In this article, a photonic complex-valued neural network (PCNN) chip is designed. Because optical losses limit the scale of a single-core PCNN chip, a multicore architecture is used to improve computing capability. Furthermore, to improve the performance of the PCNN, we propose a transformation layer, which can be implemented by the designed photonic chip and converts real-valued encoding into complex-valued encoding, which carries richer information. Compared with real-valued input, the transformation layer improves the classification accuracy for a 64-dimensional input on the MNIST test set from 93.14% to 97.51%. Finally, we analyze multicore computation of the PCNN. Compared with the single-core architecture, the multicore architecture achieves higher classification accuracy by implementing larger neural networks and is more robust to phase noise. The proposed architecture and algorithms help promote photonic acceleration of complex-valued neural networks and are promising for many applications, such as image recognition and signal processing.

1. Introduction

In recent years, deep learning [1] has developed rapidly and is widely used in many fields, such as the optimization of organic solar cells [2] and the design of subwavelength photonic devices [3]. The development of deep learning is inseparable from the support of computing chips, such as the central processing unit (CPU), graphics processing unit (GPU) and tensor processing unit (TPU). As Moore’s Law slows down, electronic chips face increasingly serious problems, such as high power consumption. Compared with electronic chips, photonic neural network chips offer low power consumption, high computation speed, large bandwidth and high parallelism, and they have been widely studied in recent years. Many different schemes for photonic neural network chips have been reported. Shen et al. demonstrated a Mach–Zehnder interferometer (MZI)-based photonic processor and used it for vowel recognition [4]. Tait et al. reported silicon photonic neural networks based on microring weight banks [5]. Xu et al. demonstrated an optical coherent dot-product chip and used it for an image reconstruction task [6]. On et al. proposed a photonic matrix multiplication accelerator based on wavelength division multiplexing and coherent detection [7].

Unlike real-valued neural networks, complex-valued neural networks [8] use complex numbers for data processing and have many applications in image processing, audio signal processing and radar signal processing. In electronic chips, each complex number must be encoded with two real numbers, which increases the number of parameters and the computational complexity. Light has multiple dimensions, such as wavelength, amplitude, phase and polarization, and complex-valued neural networks can be computed efficiently by encoding signals in the amplitude and phase of light [9]. Compared with real-valued neural networks, complex-valued neural networks can achieve higher classification accuracies in image recognition tasks [10]. Images are usually encoded with real values; however, complex-valued images obtained by the Fourier transform can effectively improve the classification accuracy of complex-valued neural networks [11]. Fourier transform processing has also been used for photonic neural networks [12]. Lenses can realize the Fourier transform efficiently, but integrating lenses with photonic chips is very complex [13]. Moreover, chip-level Fourier transform structures usually differ from those of photonic neural network chips [14].
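The overhead of real-valued encoding can be made concrete with a short NumPy sketch (our illustration, not code from this work). A complex matrix W = A + iB acting on x = a + ib is rewritten as a real 2n × 2n block matrix [[A, −B], [B, A]] acting on the stacked vector [a; b]. The result matches the complex product, but the real matrix has four times as many entries, and every complex multiplication costs four real ones.

```python
import numpy as np

def complex_to_real_block(w: np.ndarray) -> np.ndarray:
    """Embed an n x m complex matrix as a 2n x 2m real matrix [[Re, -Im], [Im, Re]]."""
    return np.block([[w.real, -w.imag], [w.imag, w.real]])

def complex_matvec_real(w: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Compute w @ x with real arithmetic only, as a real-valued processor would."""
    x_stacked = np.concatenate([x.real, x.imag])       # 2m real inputs
    y_stacked = complex_to_real_block(w) @ x_stacked   # 2n real outputs
    n = w.shape[0]
    return y_stacked[:n] + 1j * y_stacked[n:]          # reassemble the complex result

rng = np.random.default_rng(0)
n = 4
w = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
x = rng.normal(size=n) + 1j * rng.normal(size=n)
print(np.allclose(w @ x, complex_matvec_real(w, x)))  # True
print(complex_to_real_block(w).shape)                 # (8, 8)
```

A coherent photonic chip avoids this doubling because amplitude and phase of the optical field carry the real and imaginary parts natively.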

In this article, we design a photonic complex-valued neural network (PCNN) chip comprising input, weights and output parts. The photonic chip performs complex-valued computations by changing the phase and amplitude of light. Although photonic integration continues to advance, the scale of photonic integrated chips remains relatively small because of optical losses and device noise, especially compared with neural networks, which have millions of parameters. Therefore, a multicore PCNN architecture is used to improve the computing parallelism and computing capability of photonic chips. To improve the classification accuracy of the PCNN, we propose a transformation layer that converts real-valued images into complex-valued images. On the MNIST test set, the PCNN with a 64-dimensional transformation layer achieves a classification accuracy of 97.51%, which is almost the same as that obtained with Fourier transform-based complex-valued inputs and 4.37 percentage points higher than that obtained with real-valued inputs. The PCNN chip contains many phase shifters, and phase noise degrades the performance of PCNNs. We analyze the effect of phase noise on both the single-core and the multicore architectures. A larger single-core PCNN is less robust to phase noise, whereas a multicore PCNN with more cores and a smaller single core is more robust. The main contributions of this article are as follows:

- Using the multicore PCNN architecture to improve computing capability;

- Proposing the transformation layer, which can be implemented by the designed PCNN chip for improving performance of the PCNN;

- Analyzing the effect of phase noise on the multicore PCNN.

The remainder of this article is organized as follows: In Section 2, we introduce architectures of the PCNN chip and the multicore PCNN. In Section 3, we describe the transformation layer, calculate optical losses of the single-core PCNN chip and analyze phase noise robustness of the PCNN. Section 4 compares the multicore PCNN with other photonic neural networks, and Section 5 concludes the article.

2. Multicore Photonic Complex-Valued Neural Network

2.1. Photonic Complex-Valued Neural Network Chip

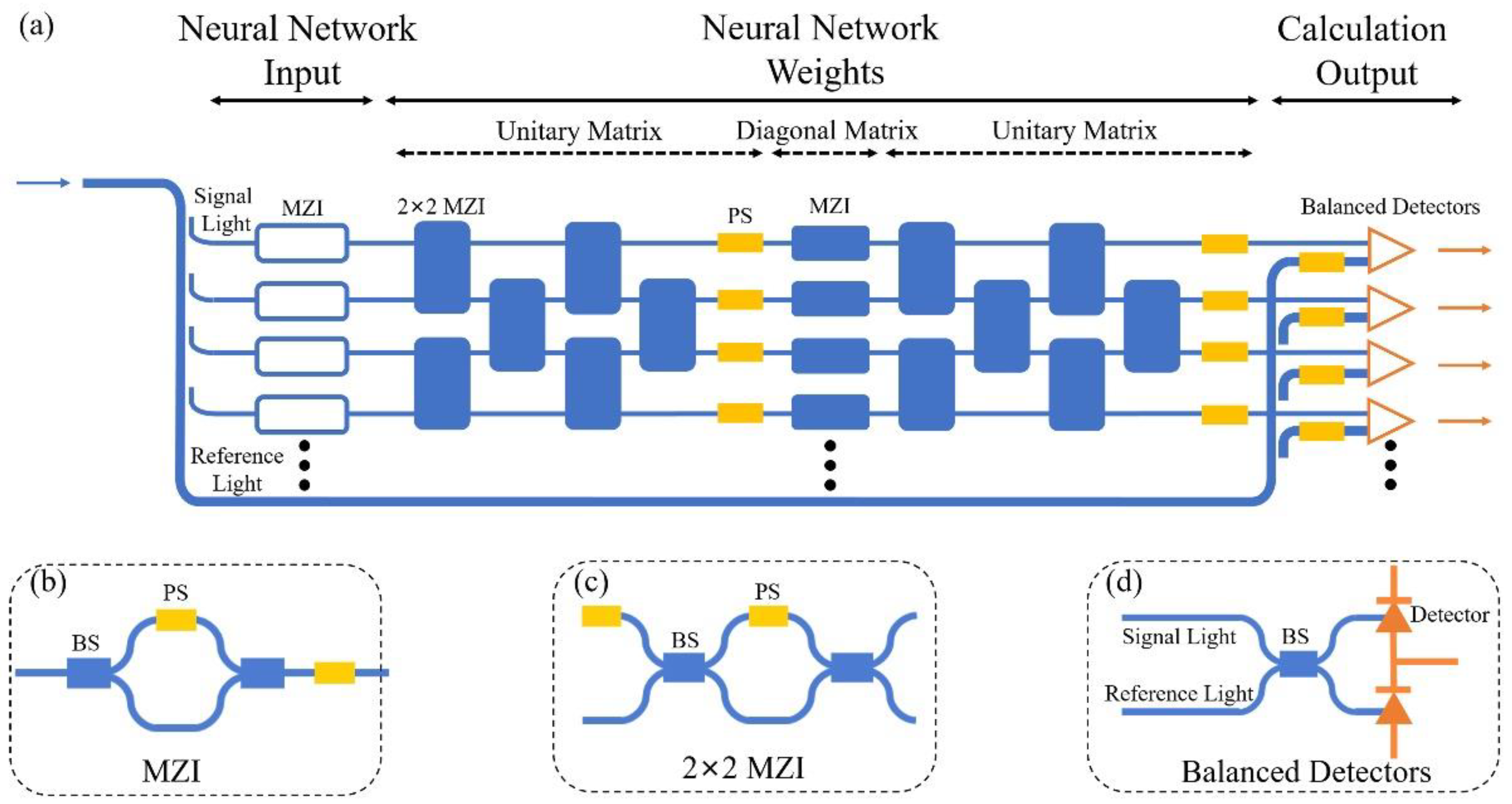

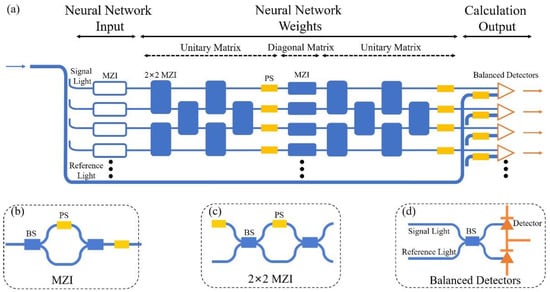

The architecture of the PCNN chip is shown in Figure 1a. The chip is composed of three parts: the neural network input, the neural network weights and the calculation output. The MZI, the 2 × 2 MZI and the balanced detector are the basic units of the PCNN chip. The variables of the different parts are listed in Table 1.

Figure 1.

(a) The photonic complex-valued neural network (PCNN) chip. (b) The MZI, (c) the 2 × 2 MZI and (d) the balanced detectors are the basic units of the neural network input part, the neural network weights part and the calculation output part, respectively. MZI: Mach–Zehnder interferometer; PS: phase shifter; BS: beam splitter.

Table 1.

Variables of the PCNN chip.

The input light is split into reference light and multiple signal beams. The signal light and the reference light are sent to the input part and the output part, respectively. The input part uses MZIs and phase shifters to encode the neural network input in both amplitude and phase, as shown in Figure 1b. The weights part can realize an arbitrary complex-valued matrix $W$ through singular value decomposition,

$$W = U \Sigma V^{\dagger}.$$

The complex-valued matrix $W$ is decomposed into the product of two unitary matrices, $U$ and $V^{\dagger}$, and a diagonal matrix $\Sigma$. Each unitary matrix can be realized by a cascaded array of 2 × 2 MZIs and phase shifters. Figure 1a shows a rectangular mesh [15] of 2 × 2 MZIs. Figure 1c shows a 2 × 2 MZI containing two phase shifters. The inner phase shifter (phase shift $\theta$) changes the amplitude of the light, and the outer phase shifter (phase shift $\phi$) changes its phase. The 2 × 2 MZI realizes the unitary transformation

$$T(\theta, \phi) = i e^{i\theta/2} \begin{pmatrix} e^{i\phi}\sin(\theta/2) & \cos(\theta/2) \\ e^{i\phi}\cos(\theta/2) & -\sin(\theta/2) \end{pmatrix}.$$
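The decomposition can be checked numerically. The NumPy sketch below is illustrative only: it factorizes a random complex matrix with `np.linalg.svd`, verifies that W = UΣV† and that U is unitary, and uses an MZI transfer matrix in one common convention (two 50:50 couplers, inner phase θ and outer phase φ on the same arm), which is our assumption rather than a confirmed detail of the chip. Because a passive MZI can only attenuate, the sketch also normalizes the singular values to [0, 1] and maps each one to an inner phase θ = 2 arcsin(σ_k / σ_max); keeping the scale factor σ_max in the electronic domain is likewise an assumption.

```python
import numpy as np

def mzi(theta, phi):
    """2x2 MZI transfer matrix: two 50:50 couplers, inner phase theta, outer phase phi
    (assumed convention with both phase shifters on the upper arm)."""
    return 1j * np.exp(1j * theta / 2) * np.array(
        [[np.exp(1j * phi) * np.sin(theta / 2), np.cos(theta / 2)],
         [np.exp(1j * phi) * np.cos(theta / 2), -np.sin(theta / 2)]])

rng = np.random.default_rng(1)
w = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))  # arbitrary complex weights

u, s, vh = np.linalg.svd(w)                       # W = U diag(s) V^dagger, s >= 0
print(np.allclose(u @ np.diag(s) @ vh, w))        # True
print(np.allclose(u.conj().T @ u, np.eye(4)))     # True: U is unitary

# A passive MZI can only attenuate: normalise singular values to [0, 1]
# and keep s.max() as an electronic scale factor (assumption of this sketch).
attenuation = s / s.max()
thetas = 2 * np.arcsin(attenuation)               # |T[0, 0]| = sin(theta / 2)
print(np.allclose([abs(mzi(th, 0.0)[0, 0]) for th in thetas], attenuation))  # True

t = mzi(0.7, 1.3)
print(np.allclose(t.conj().T @ t, np.eye(2)))     # True: the MZI itself is lossless
```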

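The diagonal matrix $\Sigma$ can be realized by a column of MZIs. The balanced detectors in Figure 1d detect the amplitude and phase of the light. The signal light from the weights part is

$$E_s = A_s e^{i(\omega t + \phi_s)},$$

and the reference light from the beam splitters is

$$E_r = A_r e^{i(\omega t + \phi_r)},$$

where $A_s$ and $A_r$ are the amplitudes of the light, $\phi_s$ and $\phi_r$ are the phases of the light, and $\omega$ is the angular frequency of the light. The output photocurrent of the balanced detectors is

$$I_1 \propto A_s A_r \cos(\phi_s - \phi_r).$$

After a π/2 phase shift is added to the reference light, the output photocurrent becomes

$$I_2 \propto A_s A_r \sin(\phi_s - \phi_r).$$

The phase and amplitude of the signal light can then be calculated from $I_1$ and $I_2$ [9]: $\phi_s - \phi_r = \operatorname{atan2}(I_2, I_1)$ and $A_s \propto \sqrt{I_1^2 + I_2^2}/A_r$. An array of balanced detectors realizes the output of complex numbers, so the designed PCNN chip can process multichannel data in parallel.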
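The amplitude and phase recovery can be sketched in a few lines. This is a minimal model, assuming an ideal 50:50 coupler in front of the two photodiodes, a placeholder responsivity R = 1 A/W and noise-free detection; `balanced_current` and `recover_field` are hypothetical helper names. The two measurements give 2RA_sA_r cos(φ_s − φ_r) and 2RA_sA_r sin(φ_s − φ_r), from which `arctan2` returns the relative phase and the root-sum-square returns the amplitude.

```python
import numpy as np

RESPONSIVITY = 1.0  # A/W, placeholder value (assumption)

def balanced_current(e_sig, e_ref):
    """Difference photocurrent behind an ideal 50:50 coupler.
    Complex envelopes are used, so the common exp(i*omega*t) factor cancels."""
    p_plus = abs(e_sig + e_ref) ** 2 / 2      # power at one photodiode
    p_minus = abs(e_sig - e_ref) ** 2 / 2     # power at the other photodiode
    return RESPONSIVITY * (p_plus - p_minus)  # = 2 R A_s A_r cos(phi_s - phi_r)

def recover_field(e_sig, ref_amp, ref_phase):
    """Estimate the complex signal field from two balanced measurements."""
    e_ref = ref_amp * np.exp(1j * ref_phase)
    i1 = balanced_current(e_sig, e_ref)                           # in-phase current
    i2 = balanced_current(e_sig, e_ref * np.exp(1j * np.pi / 2))  # reference shifted by pi/2
    amp = np.hypot(i1, i2) / (2 * RESPONSIVITY * ref_amp)
    phase = ref_phase + np.arctan2(i2, i1)
    return amp * np.exp(1j * phase)

e_sig = 0.3 * np.exp(2.0j)
print(np.isclose(recover_field(e_sig, ref_amp=1.0, ref_phase=0.4), e_sig))  # True
```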

2.2. Multicore Architecture of Photonic Chip

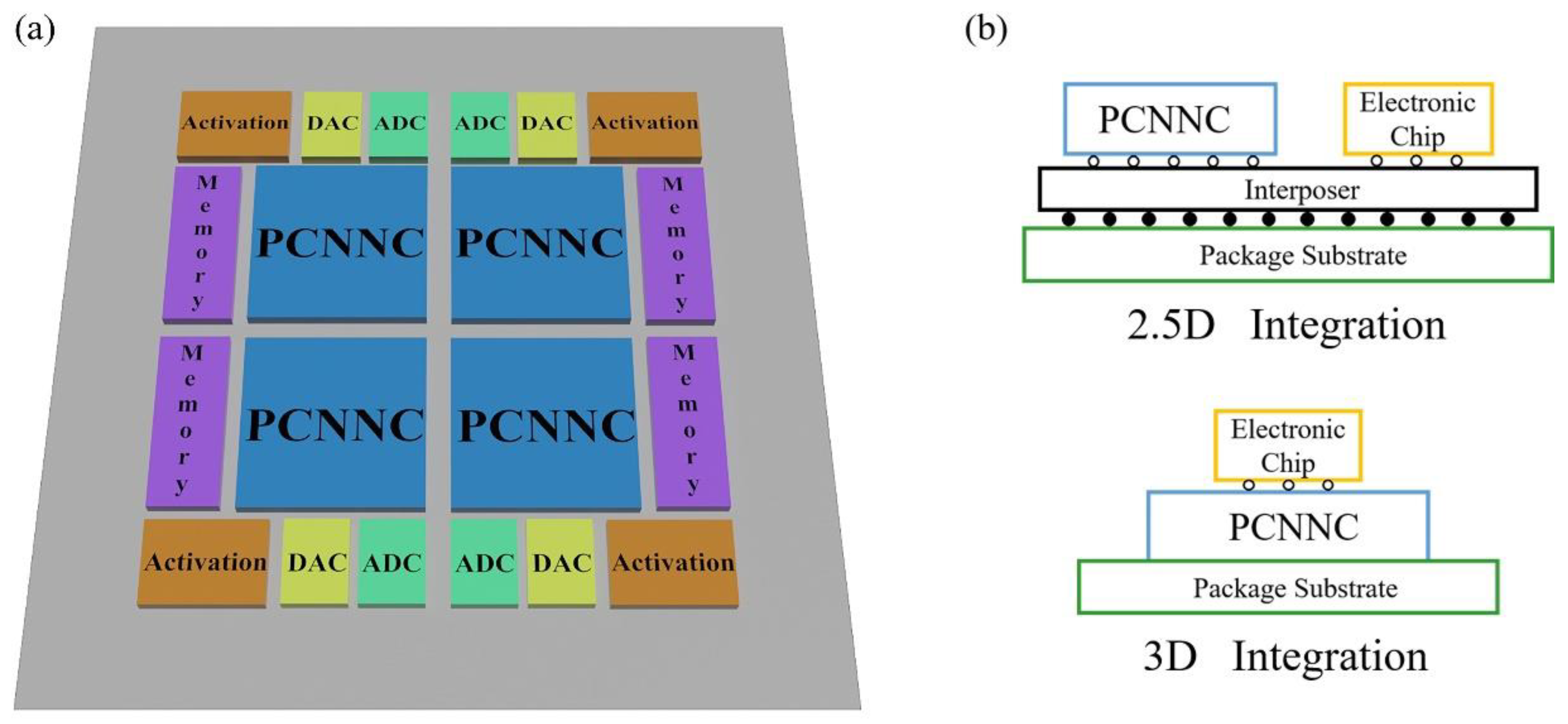

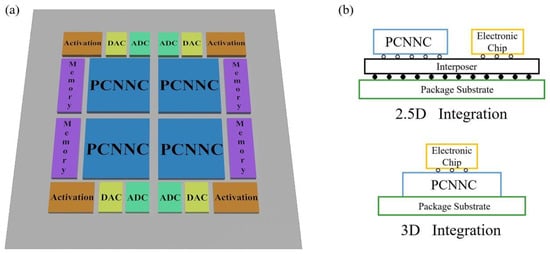

In recent years, the scale of photonic chips has continued to increase. Photonic neural network chips based on MZIs have grown from 4 × 4 [4] to 64 × 64 [16], the latter containing thousands of photonic devices. However, because of factors such as optical losses and device noise, the scale of photonic chips remains relatively small, and the computing capability of single-core photonic neural network chips is limited. A multicore architecture can be used to improve computing capability. As shown in Figure 2a, the multicore architecture consists of multiple photonic and electronic chips. The PCNN chips accelerate the matrix computations of complex-valued neural networks. Each PCNN chip is connected to a digital-to-analog converter (DAC) and an analog-to-digital converter (ADC), which convert between the digital signals of the electronic chips and the analog electrical signals that drive the photonic chip and are read out from it. The activation units compute the nonlinear functions in the electrical domain, and the memories store input data, weights data and output data. The PCNN chips and electronic chips can be fabricated in different complementary metal-oxide-semiconductor (CMOS) process nodes and combined by 2.5D or 3D integration, as shown in Figure 2b. In 2.5D integration, the PCNN chips and electronic chips are packaged side by side on an interposer, whereas in 3D integration they are stacked vertically [17].

Figure 2.

(a) The multicore architecture of the PCNN chip. (b) 2.5D integration and 3D integration of the PCNN chip and the electronic chip. PCNNC: photonic complex-valued neural network chip; DAC: digital-to-analog converter; ADC: analog-to-digital converter.

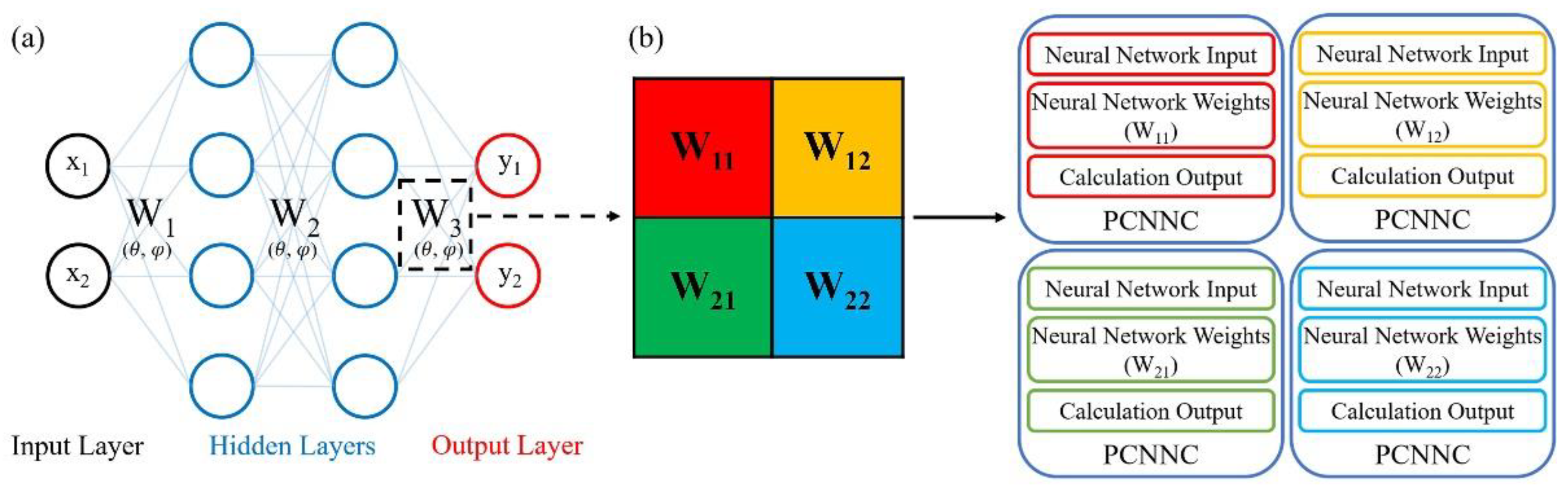

2.3. Multicore Photonic Complex-Valued Neural Network

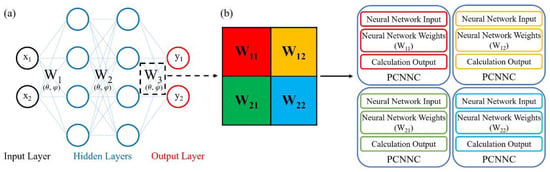

As shown in Figure 3a, the PCNN includes an input layer, one or more hidden layers and an output layer. For the i-th hidden layer,

$$\mathbf{y}_i = f_i(W_i \mathbf{x}_i),$$

where $\mathbf{x}_i$, $\mathbf{y}_i$, $W_i$ and $f_i$ are the input, output, weights and nonlinear activation function of the hidden layer, respectively. Unlike conventional neural networks running on electronic hardware, the matrix computation of the PCNN is realized by adjusting the phase shifters in Figure 1a. The schematic diagram of the multicore matrix calculation is shown in Figure 3b. The weights matrix of the neural network can be split into four smaller sub-matrices, which are computed by different PCNN chips. Correspondingly, the input vector is split into matching sub-vectors, each chip multiplies its sub-matrix by the corresponding sub-vector, and the partial outputs that belong to the same output rows are summed to obtain the full output.
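The block-wise splitting can be illustrated with a NumPy sketch (our illustration; `multicore_matvec` is a hypothetical name). Each core_size × core_size tile of W stands for the matrix programmed on one PCNN core, each core multiplies its tile by the matching slice of the input, and partial outputs that share output rows are summed electronically before the nonlinear activation is applied. Tiling a 128 × 128 matrix with 64 × 64 or 32 × 32 tiles corresponds to the 4-core and 16-core configurations discussed in Section 3.2.

```python
import numpy as np

def multicore_matvec(w, x, core_size):
    """Compute w @ x by tiling w into core_size x core_size blocks, one block per core,
    and summing the partial outputs of cores that share the same output rows."""
    n_out, n_in = w.shape
    if n_out % core_size or n_in % core_size:
        raise ValueError("matrix dimensions must be multiples of core_size")
    y = np.zeros(n_out, dtype=complex)
    for r in range(0, n_out, core_size):
        for c in range(0, n_in, core_size):
            block = w[r:r + core_size, c:c + core_size]       # weights programmed on one core
            y[r:r + core_size] += block @ x[c:c + core_size]  # that core's partial output
    return y

rng = np.random.default_rng(0)
w = rng.normal(size=(128, 128)) + 1j * rng.normal(size=(128, 128))
x = rng.normal(size=128) + 1j * rng.normal(size=128)
print(np.allclose(multicore_matvec(w, x, 64), w @ x))  # True: 4 cores of 64 x 64
print(np.allclose(multicore_matvec(w, x, 32), w @ x))  # True: 16 cores of 32 x 32
```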

Figure 3.

(a) The PCNN with an input layer, multiple hidden layers and an output layer. (b) Schematic diagram of multicore matrix calculation. PCNNC: photonic complex-valued neural network chip.

3. Results

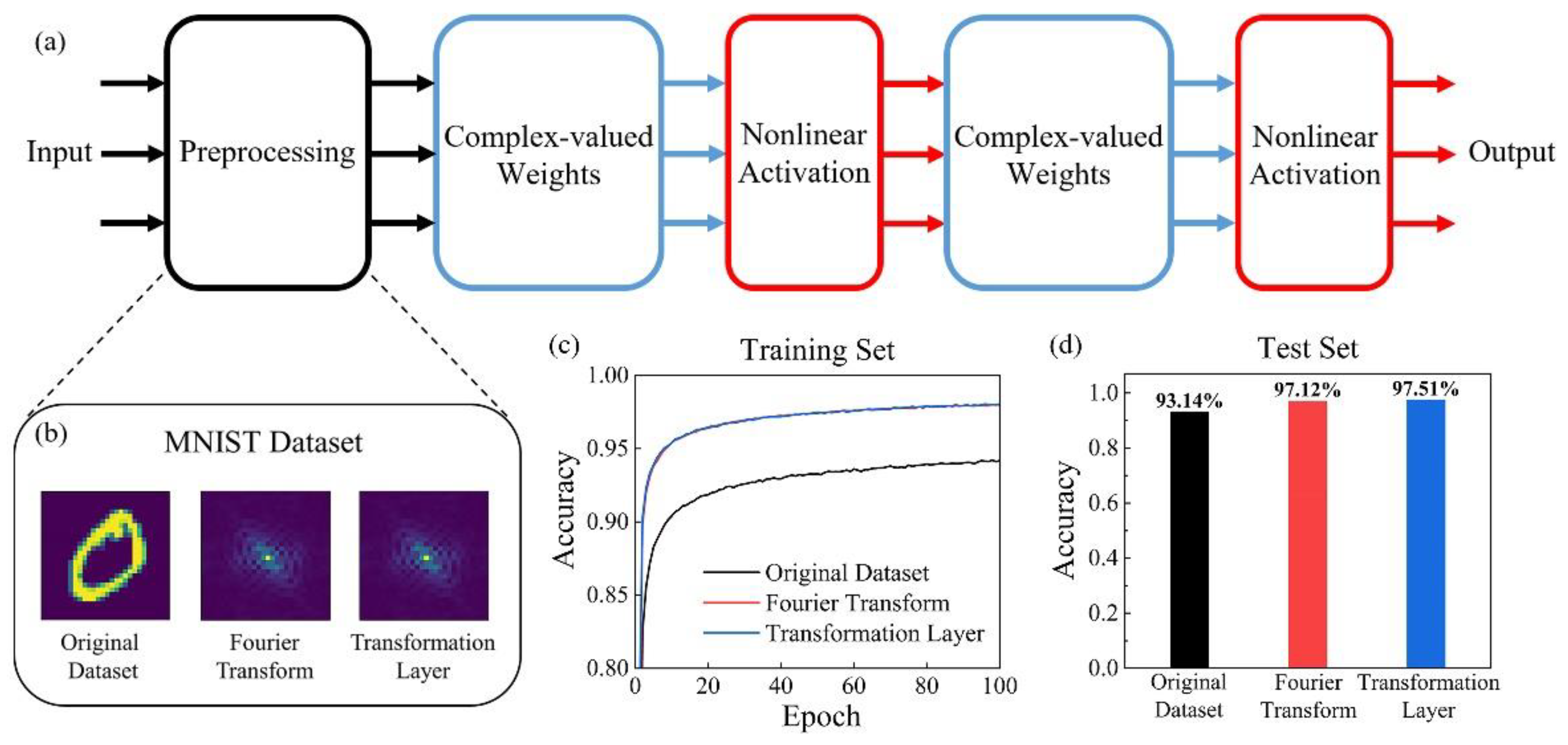

3.1. Photonic Complex-Valued Neural Network with Transformation Layer

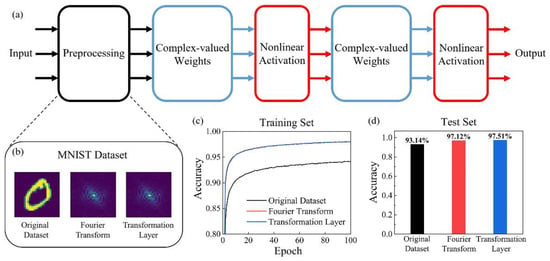

We used TensorFlow and Neurophox [18] to simulate the PCNN. In Figure 4a, a two-layer PCNN is constructed. The nonlinear activation function is CReLU, the loss function is the mean square error, and the optimizer is Adam [19]. The neural network is trained on the MNIST dataset, which contains images of the ten handwritten digits from 0 to 9 and is split into a training set of 60,000 images and a test set of 10,000 images. Dataset preprocessing has an important influence on the performance of neural networks. Recently, the Fourier transform-based image preprocessing shown in Figure 4b has been used for photonic neural networks. Figure 4c shows the classification accuracy of a PCNN with a 64-dimensional input on the MNIST training set. After 100 epochs of training, the PCNN with Fourier transform preprocessing achieves 98.01% accuracy, whereas the PCNN trained on the original MNIST dataset achieves only 94.35%. The classification accuracy on the MNIST test set is shown in Figure 4d. The test accuracy of the PCNN with Fourier transform preprocessing is 4.37 percentage points higher than that of the PCNN tested on the original MNIST test set.
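The text does not specify how the 64 Fourier coefficients are selected from a 28 × 28 image. As a sketch under an explicit assumption, one common choice is to take the 2D FFT, shift the zero frequency to the centre and keep the central 8 × 8 low-frequency window; the function name `fourier_features` and this window choice are ours, not the authors'.

```python
import numpy as np

def fourier_features(image, k=8):
    """Return the k*k lowest-frequency 2D Fourier coefficients of a real image as a
    complex vector (k = 8 gives a 64-dimensional input). Window choice is assumed."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))  # zero frequency moved to the centre
    h, w = spectrum.shape
    top, left = h // 2 - k // 2, w // 2 - k // 2
    return spectrum[top:top + k, left:left + k].reshape(-1)

rng = np.random.default_rng(0)
image = rng.random((28, 28))  # stand-in for one 28 x 28 MNIST image
z = fourier_features(image)
print(z.shape, z.dtype)       # (64,) complex128
```

The resulting complex vector would then be fed to the PCNN in place of the real-valued pixels.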

Figure 4.

(a) A two-layer PCNN with preprocessing. (b) Different preprocessing of the MNIST dataset. Classification accuracies of the PCNN on the MNIST (c) training set and (d) the test set with different preprocessing, which are original dataset (black), Fourier transform (red) and the transformation layer (blue).

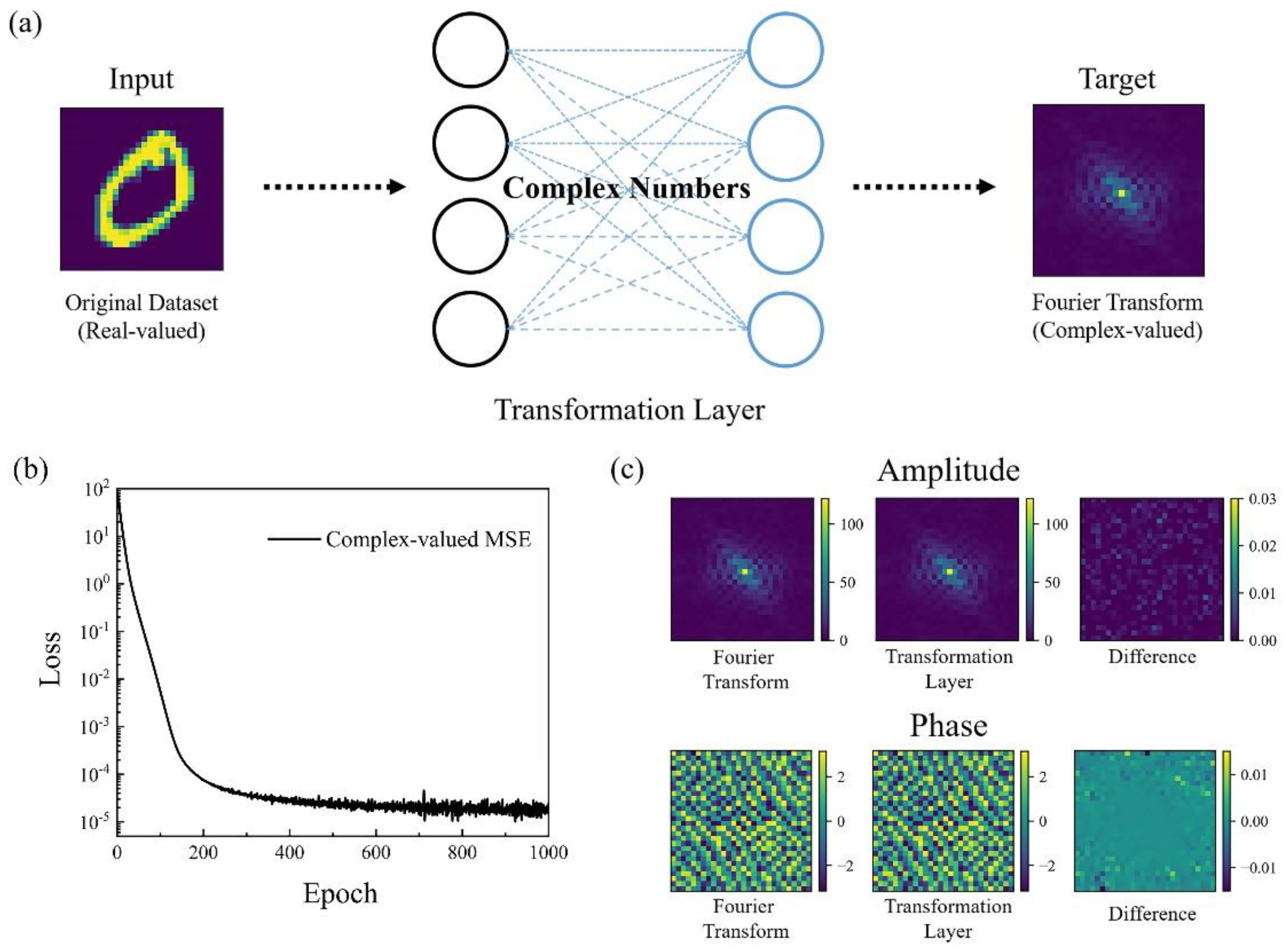

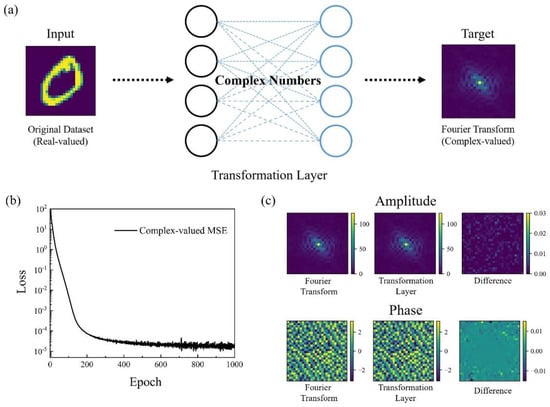

Implementing the Fourier transform with photonic devices is fast and very energy efficient. However, the architecture of a photonic Fourier transform is quite different from that of the designed PCNN chip. We therefore propose a transformation layer that converts real-valued encoding into complex-valued encoding and that can be implemented by the designed PCNN chip through singular value decomposition. Figure 5a shows the schematic diagram of the transformation layer. It is a single-layer neural network without activation functions. The input is the real-valued images of the MNIST dataset, and the target is the Fourier transform-based complex-valued images of the MNIST dataset. The loss function of the single-layer neural network is the complex-valued mean square error, and the optimizer is Adam.
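Because the Fourier transform is itself linear, a single complex linear layer without activation can represent it exactly, which is consistent with the very small loss reported in Figure 5b. The sketch below illustrates this under stated assumptions: random 8 × 8 real arrays stand in for 64-dimensional MNIST inputs, the target is their unshifted 2D FFT, and the complex mean square error is minimized in closed form with `np.linalg.lstsq` rather than with Adam as in this work. The fitted 64 × 64 matrix coincides with the 2D DFT matrix (the Kronecker product of two 1D DFT matrices) and, like any complex matrix, could be mapped onto the chip through singular value decomposition.

```python
import numpy as np

rng = np.random.default_rng(2)
side = 8                                   # 8 x 8 = 64-dimensional real input (assumed)
x = rng.random((500, side * side))         # stand-in real-valued images, one per row
y = np.fft.fft2(x.reshape(-1, side, side)).reshape(-1, side * side)  # complex targets

# Single linear layer without activation: minimise sum |x @ w - y|^2 (complex MSE)
w, *_ = np.linalg.lstsq(x.astype(complex), y, rcond=None)

dft = np.fft.fft(np.eye(side))             # 1D DFT matrix
print(np.allclose(w, np.kron(dft, dft)))   # True: the fitted layer is the 2D DFT
print(np.abs(x @ w - y).max() < 1e-8)      # True: near-zero fitting error
```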

Figure 5.

(a) Schematic diagram of the transformation layer. (b) The loss function curve of the transformation layer on the MNIST training set. (c) The amplitude and phase images of the MNIST dataset after Fourier transform and the transformation layer. Difference images are the difference between them.

Figure 5b shows the loss function curve of the transformation layer on the MNIST training set. After 200 epochs, the training loss is less than 10⁻⁴, and the loss on the test set is 1.45 × 10⁻⁵. Figure 5c shows the amplitude and phase images obtained by the Fourier transform and by the transformation layer, together with the difference between them. The maximum value of the amplitude difference image is less than 0.01% of the amplitude image, and the maximum value of the phase difference image is less than 0.4% of the phase image, so the transformation layer fits the Fourier transform precisely. The classification accuracies of the PCNN with a transformation layer on the MNIST training and test sets are shown in Figure 4c,d. The PCNN with a transformation layer achieves 97.51% test accuracy, which is almost equivalent to that obtained with the Fourier transform and much higher than that obtained with the original MNIST dataset. Therefore, the optical-domain transformation layer can replace the Fourier transform in PCNN applications.

3.2. Multicore Architecture Analysis

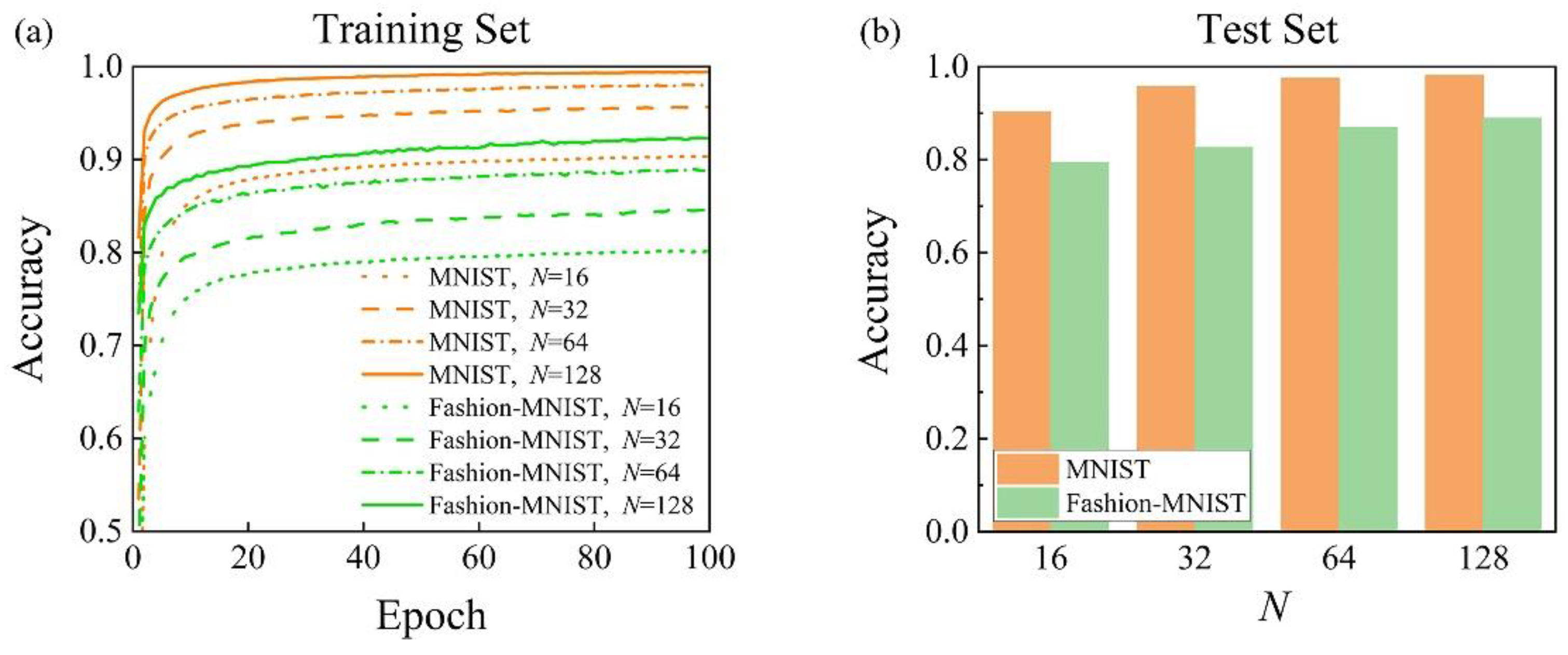

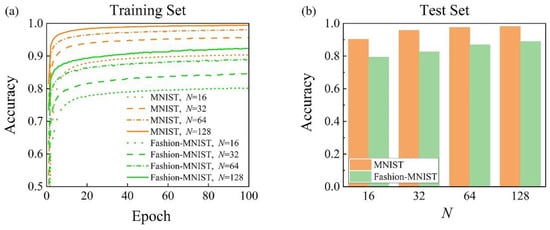

The N × N PCNN with the transformation layer, which has an N × N complex-valued weights matrix, is trained on the MNIST and Fashion-MNIST datasets. The Fashion-MNIST dataset has the same format as MNIST but contains images of ten types of clothing. The classification accuracies of single-core N × N PCNNs of different sizes are shown in Figure 6a,b. Larger PCNNs have more parameters and achieve higher classification accuracies. The accuracies of the 128 × 128 PCNN on the MNIST and Fashion-MNIST test sets are 98.12% and 88.95%, respectively, much higher than the 90.33% and 79.45% of the 16 × 16 PCNN.

Figure 6.

Classification accuracies of single-core N × N PCNNs of different sizes on the MNIST and Fashion-MNIST (a) training sets and (b) test sets.

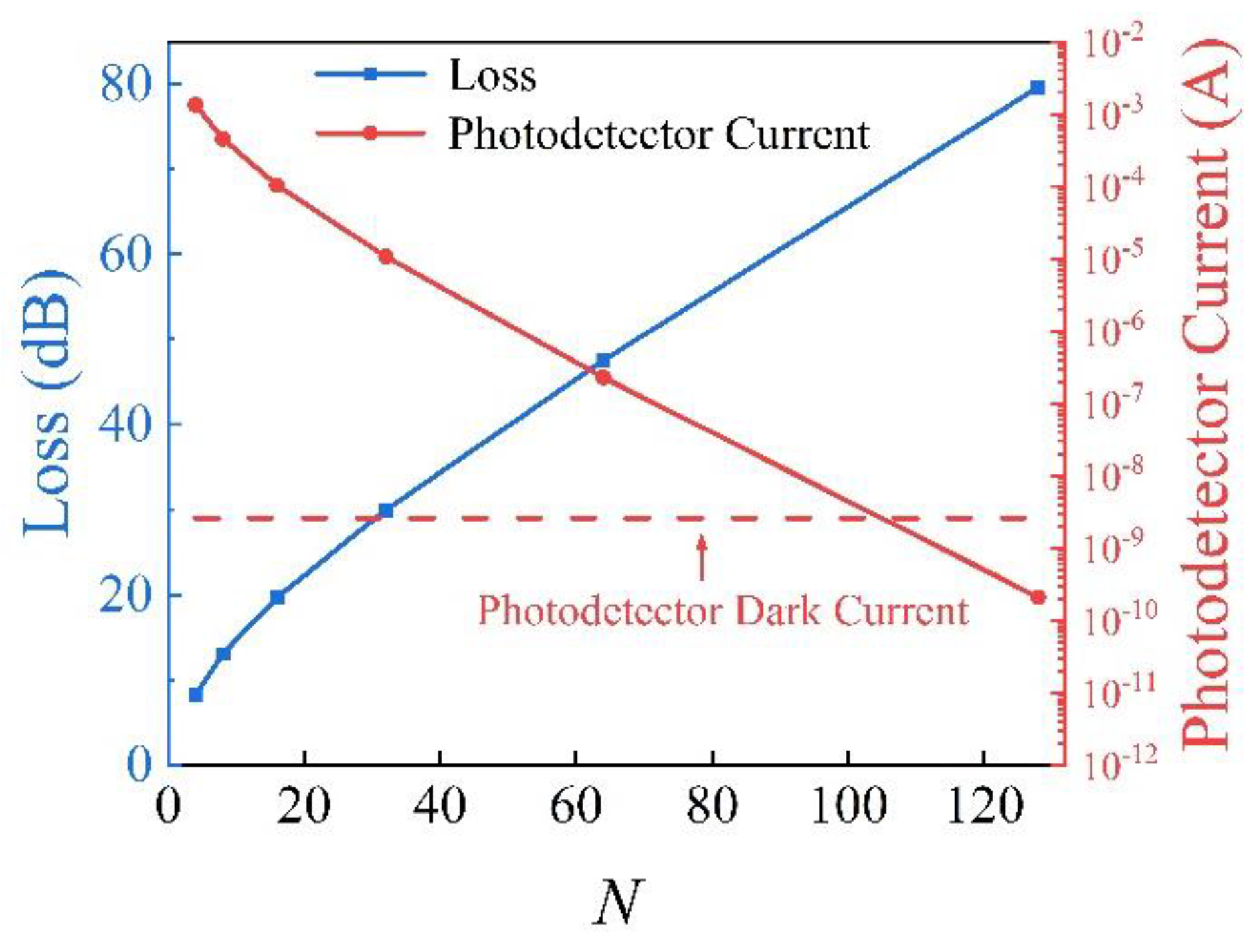

However, the integration scale of photonic circuits is limited by the optical losses of photonic devices. For the PCNN chip shown in Figure 1a, the losses of the input-part splitters and of the MZI arrays in the weights part are the most significant [20], and they are analyzed below.

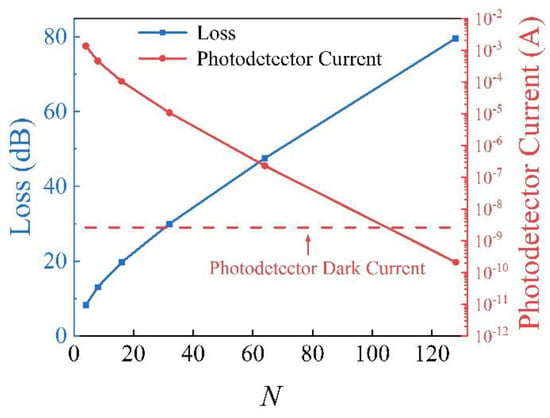

Here, $L_s$ is the loss of the input-part splitters, including the insertion loss $L_{in}$ and the excess loss $L_{ex}$. For a PCNN chip with an N-dimensional input, $L_{in} = 10\log_{10}N$ dB, because the signal light is split equally into N channels. The loss of the MZI arrays in the weights part, $L_w$, depends on the number of MZIs through which the light travels and on the loss of a single MZI, $L_{MZI}$. The weights part of an N × N PCNN chip has two rectangular meshes of MZIs and a column of MZIs, whose optical depths [15] are N, N and 1, respectively, so the light passes through 2N + 1 MZIs and $L_w = (2N + 1)L_{MZI}$. Every 2 × 2 MZI in the weights part consists of two thermo-optic phase shifters and two splitters. The loss of the phase shifters is negligible [21], so only the losses of the 2 × 2 splitters and the waveguide are considered. For simplicity, the length of a 2 × 2 MZI is taken to be the sum of the lengths of its two phase shifters. The device parameters are given in Table 2, and the loss of the PCNN chip is shown in Figure 7. Furthermore, the photodetector (PD) current is calculated for a laser power of 10 dBm; the PD parameters are also listed in Table 2. Figure 7 shows that the photocurrent of a 128 × 128 PCNN chip is lower than the dark current of the PD. Once the chip scale exceeds the limit set by optical losses, a single-core PCNN chip can no longer detect signals or complete neural network calculations.
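The loss budget above can be written as a small calculator. This is a sketch under assumptions: the excess loss is modelled as a fixed value per stage of a binary 1 × 2 splitter tree, the photodiode sees the optical power remaining after the total loss, and every device value in the usage lines is a placeholder chosen for illustration, not a Table 2 parameter. The structure follows the text: insertion loss 10 log10 N, 2N + 1 MZIs in the weights part, and an MZI loss made of two couplers plus a waveguide as long as two phase shifters.

```python
import math

def mzi_loss_db(coupler_loss_db, waveguide_loss_db_per_cm, phase_shifter_length_cm):
    """Loss of one 2x2 MZI: two couplers plus a waveguide as long as two phase shifters.
    The phase-shifter loss itself is neglected, as in the text."""
    return 2 * coupler_loss_db + waveguide_loss_db_per_cm * 2 * phase_shifter_length_cm

def chip_loss_db(n, mzi_db, excess_db_per_stage):
    """Total loss along one signal path of an n x n single-core chip."""
    insertion_db = 10 * math.log10(n)               # equal 1-to-n power split
    excess_db = math.log2(n) * excess_db_per_stage  # assumed binary tree of 1x2 splitters
    weights_db = (2 * n + 1) * mzi_db               # optical depth n + n + 1
    return insertion_db + excess_db + weights_db

def photocurrent_a(laser_dbm, loss_db, responsivity_a_per_w):
    """Photocurrent produced by the optical power left after loss_db."""
    power_w = 1e-3 * 10 ** ((laser_dbm - loss_db) / 10)
    return responsivity_a_per_w * power_w

# Placeholder device values for illustration only; these are NOT the Table 2 parameters.
mzi_db = mzi_loss_db(coupler_loss_db=0.1, waveguide_loss_db_per_cm=0.43,
                     phase_shifter_length_cm=0.01)
for n in (16, 32, 64, 128):
    loss = chip_loss_db(n, mzi_db, excess_db_per_stage=0.1)
    print(f"N={n:4d}  loss={loss:6.1f} dB  I_PD={photocurrent_a(10, loss, 1.0):.2e} A")
```

Comparing the printed current with a PD dark current gives the largest usable single-core size for a given parameter set; because the weights-part term grows linearly in N (in dB), it dominates for large chips.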

Table 2.

Device parameters used for loss analysis of the PCNN chip.

Figure 7.

Loss (blue) and detector current (red) of the single-core N × N PCNN chip.

Because the scale of a single-core PCNN chip is limited, a multicore architecture is a more effective way to improve computing capability and classification accuracy. Table 3 shows the classification accuracies of single-core and multicore PCNNs of different sizes with the transformation layer on the MNIST and Fashion-MNIST test sets. The classification accuracy of a multicore PCNN with N × N cores is higher than that of a single-core N × N PCNN. For example, a 4-core PCNN with 64 × 64 cores achieves 88.96% on the Fashion-MNIST test set, whereas a single-core 64 × 64 PCNN achieves 87.01%. PCNNs of similar overall size, such as the single-core 128 × 128, 4-core 64 × 64 and 16-core 32 × 32 PCNNs, achieve similar accuracies, but the 4-core 64 × 64 and 16-core 32 × 32 PCNNs are more practical because each of their cores is smaller than the 128 × 128 scale at which the photocurrent falls below the PD dark current (Figure 7).

Table 3.

Classification accuracies of different sizes of single-core and multicore PCNNs on the MNIST and Fashion-MNIST test sets.

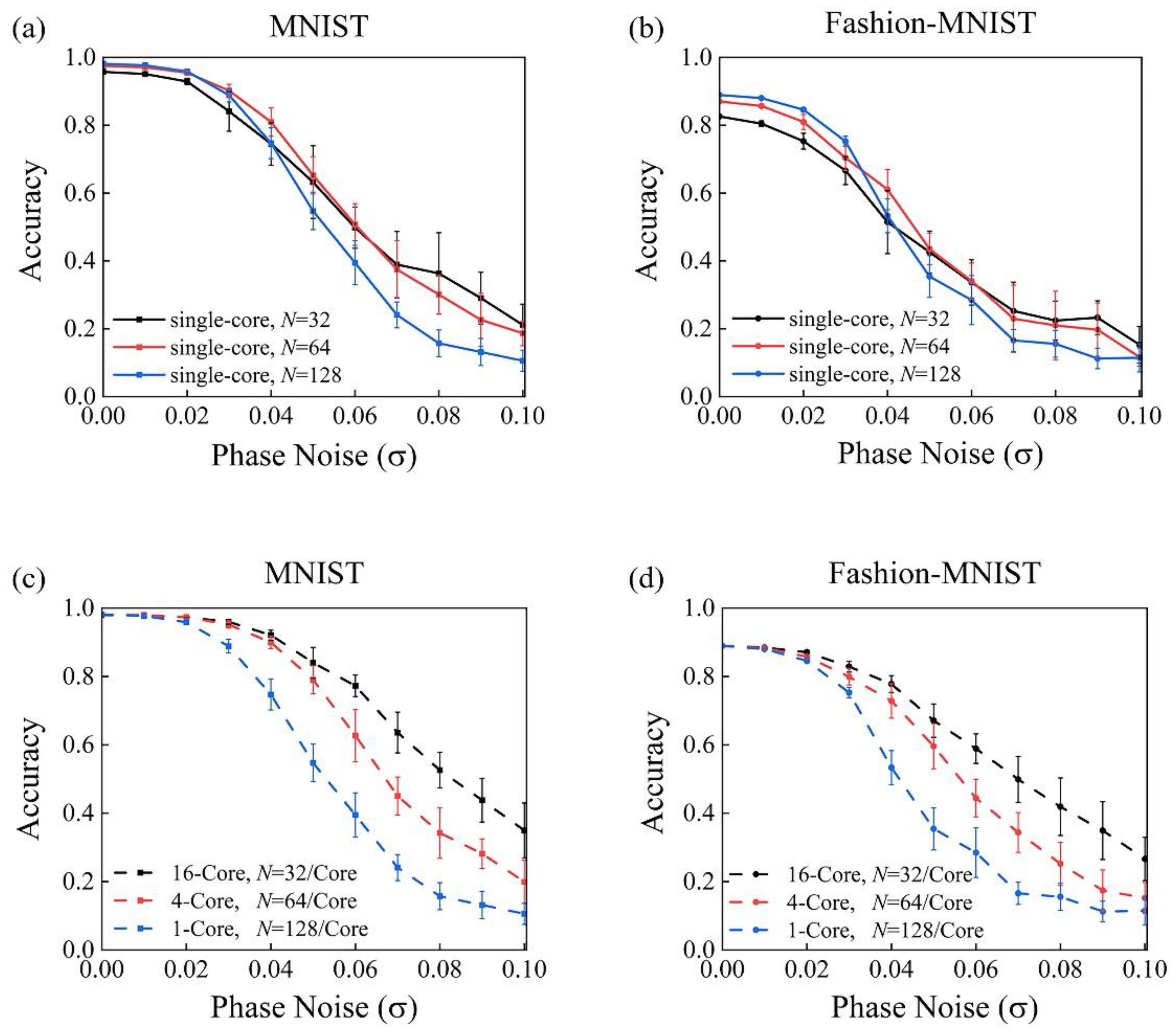

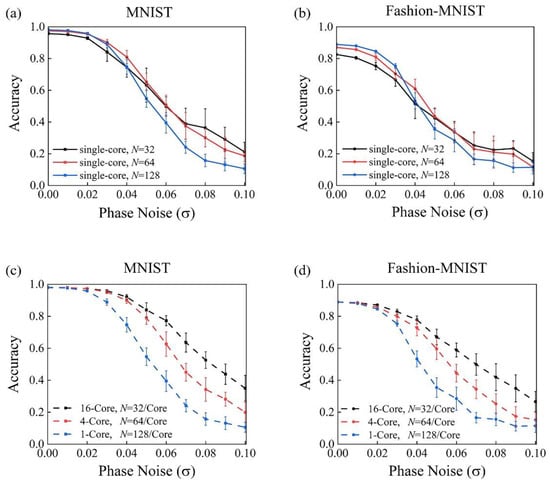

Noise in photonic chips degrades the performance of photonic neural networks [25]. The designed PCNN chip, especially its weights part, contains many phase shifters, which are susceptible to thermal noise. The phase noise is modelled as Gaussian noise with zero mean and standard deviation σ [26]. Figure 8a,b show the classification accuracies of single-core PCNNs on the MNIST and Fashion-MNIST test sets, respectively. The standard deviation σ of the phase noise ranges from 0 to 0.1 rad. For each value of σ, the phase noise was sampled ten times, and the mean and standard deviation of the classification accuracy were calculated. Figure 8a,b show that larger single-core PCNNs are more seriously affected by phase noise. When σ is 0.08 rad, the 32 × 32 PCNN has the highest mean accuracy among the single-core PCNNs on the MNIST test set (36.33%), whereas that of the 128 × 128 PCNN is only 15.73%. We further analyzed the effect of phase noise on multicore PCNNs. Figure 8c,d show the accuracies of multicore PCNNs with phase noise on the MNIST and Fashion-MNIST test sets. Compared with single-core PCNNs, multicore PCNNs are more robust to phase noise. Under the same phase noise, a multicore PCNN with more cores and a smaller single core performs better. For example, when σ is 0.06 rad, the mean classification accuracies of the 1-core, 4-core and 16-core PCNNs on the MNIST test set are 39.48%, 62.71% and 77.26%, respectively.
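The trend that larger meshes accumulate more phase error can be reproduced with a minimal sketch. Under our assumptions, a single-core unitary is modelled as a rectangular mesh of N(N − 1)/2 MZIs using one common MZI transfer-matrix convention, every θ and φ is perturbed by independent Gaussian noise with zero mean and standard deviation σ, and the relative Frobenius-norm error of the resulting matrix serves as a proxy for degradation. This does not simulate classification accuracy, the diagonal column or the full PCNN; `mesh_pairs`, `build_mesh` and `mean_relative_error` are hypothetical helper names.

```python
import numpy as np

def mzi(theta, phi):
    """2x2 MZI transfer matrix (assumed convention: inner phase theta, outer phase phi)."""
    return 1j * np.exp(1j * theta / 2) * np.array(
        [[np.exp(1j * phi) * np.sin(theta / 2), np.cos(theta / 2)],
         [np.exp(1j * phi) * np.cos(theta / 2), -np.sin(theta / 2)]])

def mesh_pairs(n):
    """Rectangular mesh: n columns; column c couples modes (i, i+1) for i = c%2, c%2+2, ..."""
    return [i for c in range(n) for i in range(c % 2, n - 1, 2)]

def build_mesh(n, thetas, phis):
    u = np.eye(n, dtype=complex)
    for i, th, ph in zip(mesh_pairs(n), thetas, phis):
        u[i:i + 2, :] = mzi(th, ph) @ u[i:i + 2, :]  # apply one MZI to modes i and i+1
    return u

def mean_relative_error(n, sigma, rng, trials=20):
    """Mean ||U_noisy - U||_F / ||U||_F when every phase receives N(0, sigma^2) noise."""
    m = len(mesh_pairs(n))
    errors = []
    for _ in range(trials):
        thetas = rng.uniform(0, np.pi, m)
        phis = rng.uniform(0, 2 * np.pi, m)
        ideal = build_mesh(n, thetas, phis)
        noisy = build_mesh(n, thetas + rng.normal(0, sigma, m),
                           phis + rng.normal(0, sigma, m))
        errors.append(np.linalg.norm(noisy - ideal) / np.linalg.norm(ideal))
    return float(np.mean(errors))

rng = np.random.default_rng(3)
for n in (4, 16, 64):
    print(n, mean_relative_error(n, sigma=0.05, rng=rng))
```

In this model the error grows with N because each light path crosses more noisy phase shifters, which is in line with the single-core trend in Figure 8a,b; splitting the matrix across smaller cores shortens each path.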

Figure 8.

Phase noise robustness analysis of (a,b) single-core PCNNs and (c,d) multicore PCNNs on the MNIST and Fashion-MNIST test sets. The mean (squares or circles) and standard deviation (short lines) of the classification accuracy are plotted as functions of the phase noise.

4. Discussion

Table 4 compares the multicore PCNN with other photonic neural networks in terms of neural network type, size, dataset, preprocessing and classification accuracy. The weights matrices of the PCNN are arbitrary complex-valued matrices, whereas those of the photonic unitary neural network (PUNN) are unitary matrices. A unitary matrix is a complex-valued square matrix whose inverse equals its conjugate transpose. All photonic neural networks are tested on the MNIST test set.

Table 4.

Comparison of the multicore PCNN with photonic neural networks in the literature. PUNN: photonic unitary neural network.

Compared with the real-valued input of the original MNIST dataset, complex-valued input carries richer information and effectively improves the classification accuracy of the PCNN. Complex-valued images based on the Fourier transform are used in [9] and [18]. The Fourier transform can be implemented with Fourier optics, but the components of Fourier optics differ from those of PCNNs. The transformation layer proposed in this work also improves the performance of the PCNN and is more compatible with the designed PCNN chip.

Larger photonic neural networks can achieve higher classification accuracies. The accuracies of the 8 × 8 PCNN, the 36 × 36 PUNN and the 64 × 64 PCNN are 93.50%, 96.60% and 97.51%, respectively. However, larger photonic neural networks are less robust to phase noise, as reported in [18] and shown in Figure 8. The photonic chips used to compute the photonic neural networks in Table 4 contain many MZIs arranged in rectangular or triangular meshes, so the noise of the phase shifters accumulates along the cascade. A larger single-core photonic neural network requires more photonic devices to perform its calculations and is therefore less robust to phase noise. Unlike in the single-core architecture analyzed above, the phase noise of the multicore architecture consists of the cascaded noise within each single core and the accumulated noise of multiple cores. As shown in Figure 8, the multicore PCNN is more robust to phase noise. Moreover, the optical losses of each photonic core in the multicore architecture are relatively low. The multicore architecture can therefore be used for more complex calculations, and the multicore PCNNs achieve the best performance (98.07% accuracy) among the photonic neural networks in Table 4.

5. Conclusions

In summary, a PCNN chip was designed, and a PCNN with a transformation layer, which effectively improves image classification accuracy, was proposed. A single-core PCNN chip of 128 × 128 or larger cannot operate because, owing to optical losses, its photocurrent falls below the dark current of the photodetector. Therefore, a multicore architecture is used to obtain more powerful computing capability. PCNNs were tested on the MNIST and Fashion-MNIST test sets, and the effect of phase noise on PCNNs was analyzed. The multicore PCNN achieves higher classification accuracy and better phase noise robustness than the single-core PCNN, and it is promising for image processing, signal processing and other applications.

Author Contributions

Conceptualization and methodology, R.W.; software, P.W.; validation, C.L.; investigation, G.L., H.Y. and X.Z.; writing—original draft preparation, R.W.; writing—review and editing, Y.Z. and J.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Natural Science Foundation of China (61934007, 62090053), the Beijing Natural Science Foundation (4222078, Z200006) and the Frontier Science Research Project of CAS (QYZDY-SSW-JSC021).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

2. Sciuto, G.L.; Capizzi, G.; Coco, S.; Shikler, R. Geometric shape optimization of organic solar cells for efficiency enhancement by neural networks. In Advances on Mechanics, Design Engineering and Manufacturing; Springer: Berlin/Heidelberg, Germany, 2017; pp. 789–796.

3. Gostimirovic, D.; Ye, W.N. An open-source artificial neural network model for polarization-insensitive silicon-on-insulator subwavelength grating couplers. IEEE J. Sel. Top. Quantum Electron. 2018, 25, 8200205.

4. Shen, Y.; Harris, N.C.; Skirlo, S.; Prabhu, M.; Baehr-Jones, T.; Hochberg, M.; Sun, X.; Zhao, S.; Larochelle, H.; Englund, D. Deep learning with coherent nanophotonic circuits. Nat. Photonics 2017, 11, 441–446.

5. Tait, A.N.; De Lima, T.F.; Zhou, E.; Wu, A.X.; Nahmias, M.A.; Shastri, B.J.; Prucnal, P.R. Neuromorphic photonic networks using silicon photonic weight banks. Sci. Rep. 2017, 7, 7430.

6. Xu, S.; Wang, J.; Shu, H.; Zhang, Z.; Yi, S.; Bai, B.; Wang, X.; Liu, J.; Zou, W. Optical coherent dot-product chip for sophisticated deep learning regression. arXiv 2021, arXiv:2105.12122.

7. On, M.B.; Lu, H.; Chen, H.; Proietti, R.; Yoo, S.B. Wavelength-space domain high-throughput artificial neural networks by parallel photoelectric matrix multiplier. In Proceedings of the 2020 Optical Fiber Communications Conference and Exhibition (OFC), San Diego, CA, USA, 8–12 March 2020; pp. 1–3.

8. Trabelsi, C.; Bilaniuk, O.; Zhang, Y.; Serdyuk, D.; Subramanian, S.; Santos, J.F.; Mehri, S.; Rostamzadeh, N.; Bengio, Y.; Pal, C.J. Deep complex networks. arXiv 2017, arXiv:1705.09792.

9. Zhang, H.; Gu, M.; Jiang, X.; Thompson, J.; Cai, H.; Paesani, S.; Santagati, R.; Laing, A.; Zhang, Y.; Yung, M. An optical neural chip for implementing complex-valued neural network. Nat. Commun. 2021, 12, 457.

10. Popa, C.-A. Complex-valued convolutional neural networks for real-valued image classification. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 816–822.

11. Popa, C.-A.; Cernăzanu-Glăvan, C. Fourier transform-based image classification using complex-valued convolutional neural networks. In Proceedings of the International Symposium on Neural Networks, Minsk, Belarus, 25–28 June 2018; pp. 300–309.

12. Williamson, I.A.; Hughes, T.W.; Minkov, M.; Bartlett, B.; Pai, S.; Fan, S. Reprogrammable electro-optic nonlinear activation functions for optical neural networks. IEEE J. Sel. Top. Quantum Electron. 2019, 26, 7700412.

13. Hu, Z.; Miscuglio, M.; George, J.; Alkabani, Y.; El Gazhawi, T.; Sorger, V.J. Highly-parallel optical fourier intensity convolution filter for image classification. In Proceedings of the Frontiers in Optics, Washington, DC, USA, 15–19 September 2019; p. JW4A.101.

14. Ahmed, M.; Al-Hadeethi, Y.; Bakry, A.; Dalir, H.; Sorger, V.J. Integrated photonic FFT for photonic tensor operations towards efficient and high-speed neural networks. Nanophotonics 2020, 9, 4097–4108.

15. Clements, W.R.; Humphreys, P.C.; Metcalf, B.J.; Kolthammer, W.S.; Walmsley, I.A. Optimal design for universal multiport interferometers. Optica 2016, 3, 1460–1465.

16. Ramey, C. Silicon photonics for artificial intelligence acceleration: HotChips 32. In Proceedings of the 2020 IEEE Hot Chips 32 Symposium (HCS), Palo Alto, CA, USA, 16–18 August 2020; pp. 1–26.

17. Abrams, N.C.; Cheng, Q.; Glick, M.; Jezzini, M.; Morrissey, P.; O’Brien, P.; Bergman, K. Silicon photonic 2.5D multi-chip module transceiver for high-performance data centers. J. Lightwave Technol. 2020, 38, 3346–3357.

18. Pai, S.; Williamson, I.A.; Hughes, T.W.; Minkov, M.; Solgaard, O.; Fan, S.; Miller, D.A. Parallel programming of an arbitrary feedforward photonic network. IEEE J. Sel. Top. Quantum Electron. 2020, 26, 6100813.

19. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

20. Al-Qadasi, M.; Chrostowski, L.; Shastri, B.; Shekhar, S. Scaling up silicon photonic-based accelerators: Challenges and opportunities. APL Photonics 2022, 7, 020902.

21. Jacques, M.; Samani, A.; El-Fiky, E.; Patel, D.; Xing, Z.; Plant, D.V. Optimization of thermo-optic phase-shifter design and mitigation of thermal crosstalk on the SOI platform. Opt. Express 2019, 27, 10456–10471.

22. Chrostowski, L.; Shoman, H.; Hammood, M.; Yun, H.; Jhoja, J.; Luan, E.; Lin, S.; Mistry, A.; Witt, D.; Jaeger, N.A. Silicon photonic circuit design using rapid prototyping foundry process design kits. IEEE J. Sel. Top. Quantum Electron. 2019, 25, 8201326.

23. Sia, J.X.B.; Li, X.; Wang, J.; Wang, W.; Qiao, Z.; Guo, X.; Lee, C.W.; Sasidharan, A.; Gunasagar, S.; Littlejohns, C.G. Wafer-Scale Demonstration of Low-Loss (~0.43 dB/cm), High-Bandwidth (>38 GHz), Silicon Photonics Platform Operating at the C-Band. IEEE Photonics J. 2022, 14, 6628609.

24. Dumais, P.; Wei, Y.; Li, M.; Zhao, F.; Tu, X.; Jiang, J.; Celo, D.; Goodwill, D.J.; Fu, H.; Geng, D. 2 × 2 multimode interference coupler with low loss using 248 nm photolithography. In Proceedings of the Optical Fiber Communication Conference, Anaheim, CA, USA, 20–24 March 2016; p. W2A.19.

25. Zhang, D.; Zhang, Y.; Zhang, Y.; Su, Y.; Yi, J.; Wang, P.; Wang, R.; Luo, G.; Zhou, X.; Pan, J. Training and Inference of Optical Neural Networks with Noise and Low-Bits Control. Appl. Sci. 2021, 11, 3692.

26. Pai, S.; Bartlett, B.; Solgaard, O.; Miller, D.A. Matrix optimization on universal unitary photonic devices. Phys. Rev. Appl. 2019, 11, 064044.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).