Abstract

Background: The aim of this study was to examine publication bias associated with a failure to report research results of studies that were initially posted on the ClinicalTrials.gov registry and to examine factors associated with this phenomenon. Methods: A search was conducted in the ClinicalTrials.gov registry using six dental-related topics. Corresponding publications for trials completed between 2016 and 2019 were then searched using PUBMED, EMBASE and Google Scholar. For studies lacking matching publications, we emailed the primary investigator and received some additional data. For included studies, we recorded additional variables: industry funding, site setting (academic, private research facilities or private practice), design (single or multi-center), geographical location and commencement date relative to registration and publication dates. Results: A total of 744 entries were found, of which 7 duplicates were removed; an additional 67 entries just recently completed were removed. An additional 7 studies were in different fields and thus removed. Thus, 663 trials were included; of these, only 337 studies (50.8%) were published. The mean registration to publication interval was 29.01 ± 25.7 months, ranging from +142 to −34 months (post factum registration). Less than 1/3 of the studies were posted prior to commencement, and only 37.3% of these were published. Studies that were posted after commencement (n = 462) had a much higher publication rate (56.7%), p < 0.001. Multi-center studies and those conducted in commercial facilities had higher, though non-significant, publication rates (56.5% and 58.3%, respectively). Conclusions: With only half of the registered studies being published, a major source of publication bias is evident.

1. Introduction

Poor quality of research design has long been associated with publication bias. However, other factors might have an equal or even greater effect. These include under-reporting resulting from failure to submit manuscripts or editors’ inclination to reject unfavorable research findings while favoring positive results. Another source of bias involves failures to report external sources of funding [1]. A recent investigation of more than 4600 scientific publications concluded that publication bias has increased and continues to increase over time. When referring to clinical trials, withholding negative results from publication not only distorts the literature but may even potentially have major public health implications [2,3]. On the other hand, academic careers and marketing promotions are significantly favored by the publication of positive results. From the journals’ perspective, positive results are more likely associated with higher citation rates, leading to an increased impact factor [2]. Thus, the incentives to publish positive results and withhold negative or unfavorable findings are clear. In addition to the complexities of getting their results published, investigators also need to wrestle with the dilemma of acquiring funding for their projects. Hence, upholding high ethical standards while maintaining long-term relationships with industry sponsors is a challenging task [4].

The US government created a clinical trials registry, in part to help address the above concerns. In it, investigators can disclose information that is intended for a wide audience, including members of the public, health care providers, and researchers alike. Since its inception in early 2000, ClinicalTrials.gov has grown into the largest trial registry database in the world, with each clinical trial having a unique 8-digit identifier known as its “NCT” number.

In its early years, only NIH-funded trials were posted on the website. Over time, the popularity of the database grew, as it eventually became a standard prerequisite for the publication of clinical trial manuscripts by many peer-reviewed journals. The International Committee of Medical Journal Editors (ICMJE) recommends that all medical journal editors require the registration of clinical trials in a public trials registry at or before the time of first patient enrollment as a condition of consideration for publication [5]. The US Food and Drug Administration (FDA) Amendments Act (FDAAA) of 2007 requires that all registered US clinical trials report results to www.ClinicalTrials.gov within a year of study completion. Although ClinicalTrials.gov was launched in 2000, legislation mandating reporting was not enacted until 2007, and additional details of the law went into effect in 2017. Nonetheless, these recommendations are not being followed, which leads to continuous publication bias associated with under-reporting.

In some but not all medical journals, a lack of published data on ClinicalTrials.gov does not necessarily prevent publication, which has resulted in a lackadaisical approach in following the FDA mandate. Although the FDA has the authority to fine non-compliant investigators (in excess of $10,000 per day) for being in violation, this is not readily enforced. DeVito and coworkers examined 4209 trials on ClinicalTrials.gov from March 2018 to September 2019 and noted that only 40.9% published results within the 1-year time period. They also noted compliance had not improved since July 2018, and the median delay from study completion to submission was 424 days [6]. Over time, the proportion of registered trials increased from 38.5% in 2005 to 78.6% in 2014 [7].

Another caveat is that, even when a study was registered, authors publishing in many dental journals sometimes fail to disclose the associated NCT number in the publication itself.

An additional layer of complexity in clinical trial reporting is the source of funding. Although ClinicalTrials.gov requires the source of funding to be disclosed, many reported trials choose to label their source of funding as unspecified “other” even if the trials were in fact industry-funded. This loophole may increase the possibility of erroneous reporting and selective bias when these trials ultimately reach publication [8,9]. It has been established that funding by industry sponsors can lead to a potential bias of efficacy results and more favorable conclusions [10,11,12]. Likewise, Popelut et al. [13] examined the relationship between sponsorship and the reported failure rate of dental implants. Industry-associated studies (OR = 0.21; 95% CI [0.12–0.38]) and studies with unknown funding sources (OR = 0.33; 95% CI [0.21–0.51]) had lower annual failure rates.

Therefore, the aim of this study was to examine publication bias associated with a failure to report clinical trial results of studies in the dental field that were initially posted on the ClinicalTrials.gov registry and further explore variables that might have affected these publication rates, the hypothesis being that a substantial portion of these studies were never published.

2. Materials and Methods

A manual search was conducted in the ClinicalTrials.gov registry by entering individual search terms (keywords) under the ‘condition or disease’ entry on the main website. Because we could not cover the entire dental field, we limited our search to periodontal and implant-related topics: toothbrushes, dental lasers, dental implants, peri-implantitis, regenerative procedures (GTR, GBR, bone grafts), and root coverage.

2.1. Study Selection and Data Collection

Data extraction was jointly performed by the two primary authors (JT and KM) for entries updated until 26 May 2021. In those few cases when inconclusive information was encountered, the other two co-authors (EM and CC) joined the discussion, and a joint decision was made.

Inclusion criteria: Clinical trials listed on ClinicalTrials.gov associated with the above keywords and listed as completed on or before 31 December 2019. Studies were grouped according to these keywords. If more than one keyword was associated with a trial, the first one listed served to designate the study. Additionally, “overdue” trials were identified and included: these were trials listed on or before 31 December 2016 with no reference to results or completion, after manual searching on ClinicalTrials.gov (however, they were never marked withdrawn or terminated). These clinical trials were assumed to be terminated.

Exclusion criteria: Studies listed as not completed or completed after 31 December 2019.

The search results matching our criteria for completed studies were then cross-referenced with PUBMED, EMBASE, and Google Scholar to confirm how many of the clinical trials had corresponding published papers. Our protocol for finding the published papers matching the clinical trials registered on ClinicalTrials.gov was as follows:

- First, the NCT # and/or Official Title (listed under “Study Design”) was manually searched on a search engine database like PUBMED, EMBASE, and Google Scholar.

- Occasionally, the ClinicalTrials.gov page of a completed study includes a link to an accompanying publication. This was found in the ‘more information: results’ section of the clinical trial in question (if available).

- If the NCT # search, Official Title search, and manual search of each trial page revealed no positive matching entries, then the name of the PI listed on ClinicalTrials.gov was individually searched on PUBMED, EMBASE, and Google Scholar. The resulting publications list was manually searched for words similar to the titles listed on the clinical trial webpage. The data in the paper (location, sponsor, sample size, endpoints, etc.) were compared with those in the ClinicalTrials.gov website (if available), and if it matched, the study was deemed ‘published.’
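The matching cascade above can be sketched in code. This is only an illustration and not the authors' tooling (the search described here was manual): the record fields, the `match_publication` helper, the sample NCT numbers, and the title-similarity threshold of 0.8 are all assumptions introduced for this example. Only the NCT format (the letters NCT followed by eight digits) comes from the registry itself.

```python
import re
from difflib import SequenceMatcher

# An NCT number is the prefix "NCT" followed by exactly eight digits.
NCT_PATTERN = re.compile(r"\bNCT\d{8}\b", re.IGNORECASE)


def extract_nct_ids(text):
    """Return the set of NCT numbers mentioned in a block of text."""
    return {m.upper() for m in NCT_PATTERN.findall(text)}


def title_similarity(a, b):
    """Case-insensitive similarity ratio between two titles (0.0 to 1.0)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def match_publication(trial, paper, title_threshold=0.8):
    """Return the step at which a paper matches a trial, or None.

    `trial` and `paper` are plain dicts with hypothetical keys; the
    threshold is an illustrative assumption, not a value from the study.
    """
    # Step 1: the NCT number appears in the paper's text.
    if trial["nct_id"].upper() in extract_nct_ids(paper.get("text", "")):
        return "nct"
    # Step 2: the paper title closely matches the official title.
    if title_similarity(trial["official_title"], paper["title"]) >= title_threshold:
        return "title"
    # Step 3: same PI, then compare study details (sponsor, sample size).
    authors = [a.lower() for a in paper.get("authors", [])]
    same_pi = trial["pi"].lower() in authors
    same_details = (trial.get("sponsor") == paper.get("sponsor")
                    and trial.get("sample_size") == paper.get("sample_size"))
    if same_pi and same_details:
        return "pi"
    return None
```

In practice the third step required human judgment (comparing location, sponsor, sample size, and endpoints), so an automated version like this could at most shortlist candidates for manual review.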

For the studies for which we could not find a positive matching publication, we attempted to contact (by email) the primary investigator listed on the site, with a 4-week response time. One hundred and eighty-eight emails were sent, 60 of which returned un-delivered. We received responses from forty-eight PIs and, thus, were able to locate 15 additional publications through this channel, and the database was further updated (Table 1). In cases where publication appeared to be absent, and the PI did not respond or was nowhere to be found, the study was deemed unpublished.

Table 1.

Direct attempt to contact the principal investigators of missing publications.

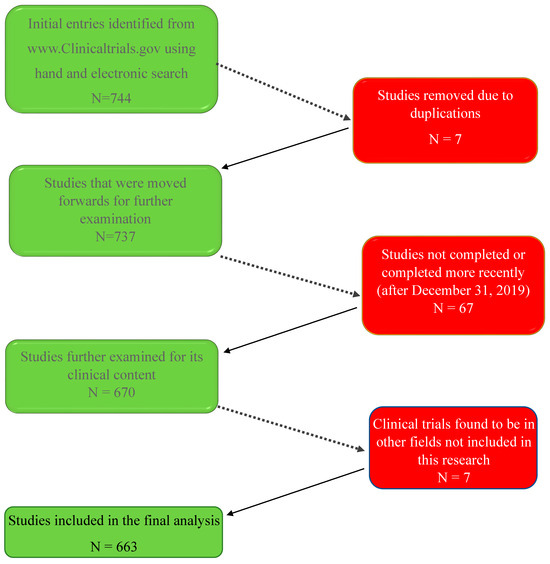

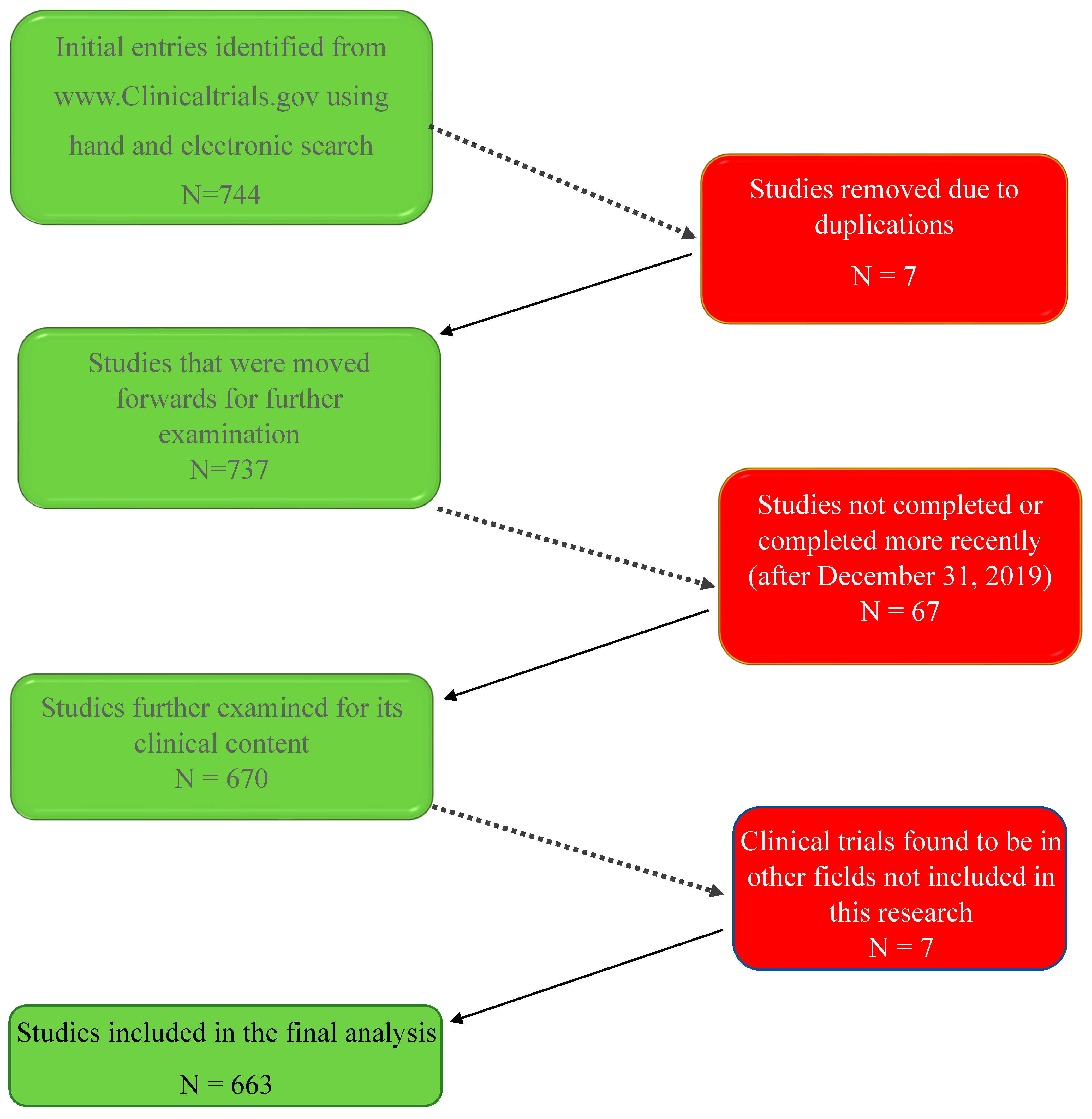

The following flowchart diagram (Figure 1) summarizes the process:

- Seven hundred forty-four clinical trial entries were initially included.

- Seven clinical trials were removed as duplicates, as they appeared multiple times under different keyword searches.

- An additional 67 clinical trial entries that did not fit the inclusion criteria (trials not listed as completed on or before 31 December 2019) were removed.

- Seven more studies were found to be beyond the scope (clinical entities) defined for this research and were therefore removed.

Thus, a total of 663 clinical trial entries were included in the final database for analysis.
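As a quick consistency check, the screening counts can be tallied in a few lines. The category labels below are paraphrased from the abstract and the Figure 1 caption and are not the authors' own variable names.

```python
# Counts reported in the text for each screening step.
initial_entries = 744
removed = {
    "duplicates": 7,
    "recently completed or not completed": 67,
    "outside the defined clinical scope": 7,
}

# Entries remaining after all exclusions.
included = initial_entries - sum(removed.values())
print(included)
```

The result agrees with the 663 trials carried forward into the analysis.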

Figure 1.

Seven hundred forty-four studies were initially identified. After the removal of duplicates, un-completed studies, or studies not pertaining to our research topics, 663 clinical trials were included in the present study.

For each study that was included, we recorded some additional variables that might have affected the outcome; these included industry funding (yes/no), study site setting (academic, private research facilities, or private practice), study design (single or multi-center), study geographical location (US and non-US) and, finally, the timing of commencement date vis a vis registration and publication dates.

2.2. Statistical Analysis

In an initial descriptive analysis, we reported the absolute counts and percentages of clinical trials within each category of the variables considered and whether they were ultimately published. We then used univariate logistic regression models to report the unadjusted odds ratio of publication for each variable, along with 95% confidence intervals and p-values to identify any marginally significant associations at the 0.05 level. Since each variable is categorical, the odds ratios are measured with respect to a given reference category. For variables with more than two categories (i.e., research setting and topic), we further performed analysis of variance (ANOVA) to test the overall association with publication success across all categories.

These univariate analyses provide some intuition about which variables are most influential on publication, but they may ignore important interactions with other variables that can modify effect sizes and significance. Thus, we also used multivariate logistic regression to estimate the joint effects of all variables together. We also fit a “reduced” model including only the variables that were statistically significant in the full model, accounting for multiple hypothesis testing.

3. Results

Of the 663 studies that were posted during this time frame, with the designated keywords, only 337 studies (50.8%) were published, while an almost equal number of studies (326) were never published (Table 2).

Table 2.

Publication rate of studies posted on ClinicalTrials.gov 2015–2019.

The mean interval from registration to publication was 29.01 ± 25.7 months, ranging from +142 to −34 months (post factum registration). Of the 663 included studies, only 201 (30.3%) were posted on ClinicalTrials.gov prior to commencement as suggested in the Food and Drug Administration Amendments Act (FDAAA 801). Of these, only 37.3% were eventually published. In contrast, studies that were posted after commencement (n = 462) had a much higher publication rate (56.7%), p < 0.001.
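The comparison above can be checked approximately from the reported figures alone. The sketch below back-calculates the number of published trials in each group from the stated percentages (these counts are derived here, not reported in the text) and applies a pooled two-proportion z-test. That test is a simpler stand-in for the logistic regression the study used, so the resulting p-value is only expected to agree in order of magnitude.

```python
import math

# Group sizes and publication rates as reported in the text.
groups = {
    "before commencement": (201, 0.373),
    "after commencement": (462, 0.567),
}

# Back-calculate integer counts of published trials (not reported directly).
published = {name: round(n * rate) for name, (n, rate) in groups.items()}
assert sum(published.values()) == 337  # matches the reported overall total

n1, n2 = groups["before commencement"][0], groups["after commencement"][0]
x1, x2 = published["before commencement"], published["after commencement"]

# Two-proportion z-test with a pooled standard error.
pooled = (x1 + x2) / (n1 + n2)
se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
z = (x2 / n2 - x1 / n1) / se
p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value

print(published)
print(f"z = {z:.2f}, p = {p_value:.2g}")
```

Under these assumptions the p-value falls well below 0.001, consistent with the reported result.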

When comparing industry-supported studies to non-industry-supported research (188 and 475 studies, respectively), similar rates of publication were observed: 48.4% (91/188) for the former and 51.8% (246/475) for the latter (p = 0.430). Among single-center studies (n = 594), a similar proportion was published (50.2%), while among multi-center studies (n = 69), a somewhat higher percentage was published (56.5%); however, this difference was not statistically significant (p = 0.320). Likewise, neither the geographical location (US- or non-US-based studies) nor the research setting (academia, private practice, or commercial research facility) had an effect on the publication rates.
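For a single binary variable, the unadjusted odds ratio from a univariate logistic regression coincides with the odds ratio of the corresponding 2×2 table, and its Wald standard error is the square root of the summed reciprocal cell counts. The sketch below applies this to the figures quoted above. The function name `odds_ratio_wald` is introduced here for illustration, and the multi-center counts (39/69 and 298/594) are back-calculated from the reported percentages rather than taken from the text.

```python
import math


def odds_ratio_wald(pub_a, n_a, pub_b, n_b, z_crit=1.96):
    """Unadjusted odds ratio of publication for group A vs. reference group B,
    with a Wald 95% confidence interval and a two-sided p-value."""
    a, b = pub_a, n_a - pub_a      # group A: published, unpublished
    c, d = pub_b, n_b - pub_b      # group B (reference): published, unpublished
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    ci = (math.exp(log_or - z_crit * se), math.exp(log_or + z_crit * se))
    p_value = math.erfc(abs(log_or / se) / math.sqrt(2))
    return math.exp(log_or), ci, p_value


# Industry-supported (91/188) vs. non-industry-supported (246/475), from the text.
or_ind, ci_ind, p_ind = odds_ratio_wald(91, 188, 246, 475)

# Multi-center (39/69) vs. single-center (298/594); counts derived from percentages.
or_mc, ci_mc, p_mc = odds_ratio_wald(39, 69, 298, 594)

for label, (o, ci, p) in {"industry": (or_ind, ci_ind, p_ind),
                          "multi-center": (or_mc, ci_mc, p_mc)}.items():
    print(f"{label}: OR={o:.2f}, 95% CI=({ci[0]:.2f}, {ci[1]:.2f}), p={p:.2f}")
```

Both comparisons give p-values close to the reported 0.430 and 0.320, and the multi-center odds ratio is close to the unadjusted value of 1.29 quoted later in the Results; small differences can arise from rounding in the reported percentages.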

Next, we looked at the publication rate for each research field (Table 3). Studies of dental implants (n = 304) had the lowest rate of publication (44.7%). When comparing the other research topics, studies on peri-implantitis had significantly higher publication rates (61.5%, p = 0.015). Similar publication rates were recorded for studies on toothbrushing (62.2%, p = 0.006), root coverage (66.0%, p = 0.008), and dental lasers (62.1%, p = 0.078).

Table 3.

Publication rates sorted by area of research.

We further looked into a sub-set of this database (Table 4) pertaining to studies of dental implants, n = 304. Here, too, studies that were posted on the website after commencement had a much higher publication rate (49.5%), compared to studies that were posted prior to the commencement of the study (34.0%, p = 0.012). Multi-center studies had higher publication rates (58.1%) compared to single-center studies (43.2%); however, these differences did not reach statistical significance (p = 0.120).

Table 4.

Publication rate of studies of dental implants posted on ClinicalTrials.gov.

Finally, we used those variables that were found to be statistically significant in the previous univariable model in multiple logistic regression models to help us understand whether there are any “joint” effects among these variables (Table 5). The magnitude and significance of the adjusted odds ratios in this multivariate analysis are generally the same as reported in the univariate analyses. However, the adjusted odds ratio for multi-center studies is higher, though not statistically significant (1.67, p = 0.060), compared to the unadjusted (1.29, p = 0.320). Then, as shown in Table 6, we evaluated a multivariate model with only variables exhibiting adjusted p-values near or below the 5% significance level: single versus multi-center, commencement prior to versus after registration, and research topic. The results in this reduced model are essentially unchanged compared to the full model, which suggests that there was no major confounding from the discarded variables, and hence, the joint effects are sufficiently captured by the three variables.

Table 5.

Multiple logistic regression of all variables.

Table 6.

Multiple logistic regression of variables found to be significant at about the 5% level in the full model.

Finally, we evaluated a reduced multivariate model (Table 6) restricted to variables with adjusted p-values below 0.05/6, a threshold that accounts for multiple hypothesis testing across six different variables. Under this threshold, commencement prior to vs. after registration (p < 0.001) and research topic (p = 0.004) were statistically significant.
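Dividing the significance level by the number of variables tested is commonly known as a Bonferroni correction (the text does not name it). A minimal sketch of how the 0.05/6 threshold separates the variables, using only p-values quoted in this section, with "p < 0.001" entered as its upper bound:

```python
alpha = 0.05
n_variables = 6
threshold = alpha / n_variables  # adjusted significance level, about 0.0083

# Adjusted p-values quoted in the text; "p < 0.001" is entered as 0.001.
reported_p = {
    "commencement prior to vs. after registration": 0.001,
    "research topic": 0.004,
    "single vs. multi-center": 0.060,
}

# Variables that stay significant after the correction.
retained = [name for name, p in reported_p.items() if p < threshold]

for name, p in reported_p.items():
    verdict = "retained" if name in retained else "dropped"
    print(f"{name}: p={p:.3f} vs. threshold {threshold:.4f} -> {verdict}")
```

This makes explicit why the multi-center variable (p = 0.060), which sits near the conventional 5% level, does not meet the stricter criterion.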

4. Discussion

Approximately fifty percent of the clinical trials related to dentistry that were posted on ClinicalTrials.gov have never been published. Staggering as the numbers are, they may even underestimate this disturbing phenomenon. It is very likely that quite a few clinical trials were never registered and, therefore, went unnoticed when they failed to be published. To the best of our knowledge, this is the first paper in dentistry that examines the publication rate of registered trials and the timing between registration, commencement, and publication dates. Our results are in line with similar figures found in other fields of medicine. For example, DePasse et al. [14] examined factors predicting publication of spinal cord injury trials registered on ClinicalTrials.gov. Of the 250 studies identified regarding the treatment of spinal cord injuries and reported to be completed, only 119 (47.6%) were published. Another example of this striking phenomenon was published more recently by Tan et al. [15] in a study looking at the characteristics and publication status of gastrointestinal endoscopy clinical trials registered on the same ClinicalTrials.gov database. The authors analyzed a total of 1338 trials, of which 501 were completed, and only 281 (56.1%) of those were published. Interestingly, van Heteren et al. confirmed this pattern once again in otology studies, where only 225 (53.7%) of 419 trials posted on ClinicalTrials.gov had corresponding publications [16].

Fewer than one-third (30.3%) of the 663 studies included in this database were posted appropriately (i.e., prior to the study commencement). Furthermore, those studies that were registered post factum (i.e., after the study was already ongoing and, at times, even after it was completed) had a significantly greater publication rate (56.7%) compared to only 37.3% in the ‘posted on time’ group (p < 0.001). This might disclose a potentially dangerous practice, in which researchers withhold registration until they obtain initial results and register the study only if those results are positive. This disturbing phenomenon has not yet been reported in the dental literature. Boccia et al. [17] analyzed registration practices for observational studies posted on ClinicalTrials.gov specifically pertaining to cancer research. Out of a total of 1109 studies, only 13.8% were registered prior to commencement, with 19.5% coinciding with the date of commencement; both would be considered registered “on time” (33.3% combined), which is comparable to the on-time proportion in our study (30.3%). Furthermore, Smaïl-Faugeron et al. [18] found an increasing trend towards retrospective registration (91%) among randomized clinical trials published in 15 selected oral health journals in 2013. More alarmingly, 32% did not report trial registration in the published article. This confirms our finding that the commencement of studies prior to, or even without, registration is a common practice.

Studies on dental implants had a lower publication rate (44.7%) compared to most other topics in this study (Table 3). It is generally agreed that studies of dental implants are often supported by the implant manufacturers; this collaboration seems to have increased over the past decades [19]. Lee et al., in a study whose purpose was to identify factors associated with the completion and publication of dental implant trials, reported that industry-funded trials had lower rates of completion but not publication [20]. Hence, the abortion of studies (possibly with unfavorable results) might be the avenue taken by some researchers in industry-supported dental implant studies.

Study limitations: There are several limitations that must be mentioned when interpreting the results of this study, the primary limitation being that our scope of analysis is restricted to trials registered only under the U.S. database, ClinicalTrials.gov. There are other similar databases around the world, such as www.ClinicalTrialsregister.eu and www.ema.europa.eu, which may provide more global insight pertaining to the publication of clinical trials outside the U.S. On a similar note, only clinical trials registered and published in the English-language literature were examined. This linguistic limitation may account for some skewing towards the unpublished group. Finally, an extended grace period might result in an increase in the publication rate, as some studies may encounter recruitment or technical problems that require a much longer time from commencement to publication.

Studies conducted in private practices had much lower publication rates (41.9%) compared to academic (51.0%) and commercial research centers (58.3%), yet the very small number of studies in the private practice group (n = 31) might account for the lack of statistical significance. Magnani et al. assessed 8636 clinical trials from 2007 to 2019; they noted that only 3541 (41%) were completed, and of these completed trials, only 21.6% were published [21]. Among these, academic-sponsored trials were more likely to be published, with an adjusted OR of 1.72 (95% CI 1.25–2.37), p < 0.001. These higher publication rates of studies performed in academia may indicate the commitment by academicians to make their results public as is the standard in academia, while the vested interest of commercial research centers is to meet the needs of their clients for a sustainable enterprise.

In Conclusion: Only half of the studies that were registered initially were eventually published. This under-reporting is probably of greater proportions, as some studies may fail to register a priori. Thus, our ‘best evidence data’ might only represent a sub-set of results, which might not reflect the accurate effect. Moreover, it is most likely that those aborted and non-published studies had unfavorable results. Thus, our meta-analyses, on which we base our treatment protocols, are based on data that are likely skewed to a favorable territory.

Author Contributions

Conceptualization, E.E.M.; Methodology, X.L., T.C. and E.E.M.; Software, X.L. and T.C.; Investigation, J.T. and K.M.; Writing—review & editing, D.M.K.; Supervision, C.-Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was self-funded by authors and their respective academic institutions.

Institutional Review Board Statement

The data for this study are in the public domain; hence, an IRB waiver was granted by Harvard medical center.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Scholey, J.M.; Harrison, J.E. Publication bias: Raising awareness of a potential problem in dental research. Br. Dent. J. 2003, 194, 235–237.

2. Joober, R.; Schmitz, N.; Annable, L.; Boksa, P. Publication bias: What are the challenges and can they be overcome? J. Psychiatry Neurosci. 2012, 37, 149–152.

3. Lin, L.; Chu, H. Quantifying publication bias in meta-analysis. Biometrics 2018, 74, 785–794.

4. Buchkowsky, S.S.; Jewesson, P.J. Industry sponsorship and authorship of clinical trials over 20 years. Ann. Pharmacother. 2004, 38, 579–585.

5. International Committee of Medical Journal Editors. [Homepage on the Internet]. Recommendations for the Conduct, Reporting, Editing and Publication of Scholarly Work in Medical Journals. Available online: http://www.ICMJE.org (accessed on 23 July 2024).

6. DeVito, N.J.; Bacon, S.; Goldacre, B. Compliance with legal requirement to report clinical trial results on ClinicalTrials.gov: A cohort study. The Lancet 2020, 395, 361–369.

7. Hunter, K.E.; Seidler, A.L.; Askie, L.M. Prospective registration trends, reasons for retrospective registration and mechanisms to increase prospective registration compliance: Descriptive analysis and survey. BMJ Open 2018, 8, e019983.

8. Tan, A.C.; Jiang, I.; Askie, L.; Hunter, K.; Simes, R.J.; Seidler, A.L. Prevalence of trial registration varies by study characteristics and risk of bias. J. Clin. Epidemiol. 2019, 113, 64–74.

9. Faggion, C.M., Jr.; Atieh, M.; Zanicotti, D.G. Reporting of sources of funding in systematic reviews in periodontology and implant dentistry. Br. Dent. J. 2014, 216, 109–112.

10. Saric, F.; Barcot, O.; Puljak, L. Risk of bias assessments for selective reporting were inadequate in the majority of Cochrane reviews. J. Clin. Epidemiol. 2019, 112, 53–58.

11. Lexchin, J. Sponsorship bias in clinical research. Int. J. Risk Saf. Med. 2012, 24, 233–242.

12. Gadde, P.; Penmetsa, G.S.; Rayalla, K. Do dental research journals publish only positive results? A retrospective assessment of publication bias. J. Indian Soc. Periodontol. 2018, 22, 294–297.

13. Popelut, A.; Valet, F.; Fromentin, O.; Thomas, A.; Bouchard, P. Relationship between sponsorship and failure rate of dental implants: A systematic approach. PLoS ONE 2010, 5, e10274.

14. DePasse, J.M.; Park, S.; Eltorai, A.E.M.; Daniels, A.H. Factors predicting publication of spinal cord injury trials registered on www.ClinicalTrials.gov. J. Back Musculoskelet. Rehabil. 2018, 31, 45–48.

15. Tan, S.; Chen, Y.; Dai, L.; Zhong, C.; Chai, N.; Luo, X.; Xu, J.; Fu, X.; Peng, Y.; Linghu, E.; et al. Characteristics and publication status of gastrointestinal endoscopy clinical trials registered in ClinicalTrials.gov. Surg. Endosc. 2021, 35, 3421–3429.

16. Van Heteren, J.A.A.; van Beurden, I.; Peters, J.P.M.; Smit, A.L.; Stegeman, I. Trial registration, publication rate and characteristics in the research field of otology: A cross-sectional study. PLoS ONE 2019, 14, e0219458.

17. Boccia, S.; Rothman, K.J.; Panic, N.; Flacco, M.E.; Rosso, A.; Pastorino, R.; Manzoli, L.; La Vecchia, C.; Villari, P.; Boffetta, P.; et al. Registration practices for observational studies on ClinicalTrials.gov indicated low adherence. J. Clin. Epidemiol. 2016, 70, 176–182.

18. Smaïl-Faugeron, V.; Fron-Chabouis, H.; Durieux, P. Clinical trial registration in oral health journals. J. Dent. Res. 2015, 94 (Suppl. S3), 8S–13S.

19. Pereira, M.M.A.; Dini, C.; Souza, J.G.S.; Barão, V.A.R.; de Avila, E.D. Industry support for dental implant research: A metatrend study of industry partnership in the development of new technologies. J. Prosthet. Dent. 2024, 132, 72–80.

20. Lee, K.C.; Wu, B.W.; Chuang, S.K. Which Factors Affect the Completion and Publication of Dental Implant Trials? J. Oral Maxillofac. Surg. 2020, 78, 1726–1735.

21. Magnani, C.J.; Steinberg, J.R.; Harmange, C.I.; Zhang, X.; Driscoll, C.; Bell, A.; Larson, J.; You, J.G.; Weeks, B.T.; Hernandez-Boussard, T.; et al. Clinical Trial Outcomes in Urology: Assessing Early Discontinuation, Results Reporting and Publication in ClinicalTrials.Gov Registrations 2007–2019. J. Urol. 2021, 205, 1159–1168.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).