Quality Issues of CRIS Data: An Exploratory Investigation with Universities from Twelve Countries

Abstract

1. Introduction

2. Literature Overview

2.1. The Concept of Data Quality

- the data must be accessible to the user,

- the user must be able to interpret the data, and

- the data must be relevant to the user.

2.2. Current Research Information Systems

2.3. Data Quality Issues in CRIS

- a highly structured data model (cf. CERIF),

- the comprehensiveness of the data model,

- definition and standardization of a core dataset,

- definition and standardization of terminology and dictionaries,

- mapping of data from different sources,

- a batch-oriented data collection system with controls applied before data are entered into the CRIS, which helps to improve data completeness in particular,

- generation of quality assurance reports on different levels,

- usage of unique identifiers for persons and organizations,

- semantic enrichment of data by automatic indexing and classification of objects,

- an iterative process for quality enhancement,

- availability of data through an open web interface so that researchers can correct errors involving their own data,

- allowing dynamic links to be added from local web pages to the CRIS, thus encouraging researchers to report more errors and to assist in improving the quality of the data,

- crowdsourcing to improve linkages between publications and CRIS records,

- text mining to support the attribution of articles to authors or their linking to projects, and to enhance the completeness of data.
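Several of the measures listed above (controls before data enter the CRIS, unique identifiers for persons and organizations, and quality assurance reports) can be illustrated with a short sketch. The following Python example is only a minimal illustration under stated assumptions: the field names in `REQUIRED_FIELDS`, the `BatchReport` class, and the duplicate rule (same normalized title and year) are hypothetical and are not taken from CERIF or from any particular CRIS product.

```python
from dataclasses import dataclass, field

# Hypothetical core dataset; the field names are illustrative assumptions,
# not part of CERIF or of any specific CRIS.
REQUIRED_FIELDS = ("title", "year", "author_id", "organisation_id")


@dataclass
class BatchReport:
    """A very small quality assurance report for one import batch."""
    accepted: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # pairs of (record, problems)

    @property
    def acceptance_rate(self) -> float:
        total = len(self.accepted) + len(self.rejected)
        return len(self.accepted) / total if total else 1.0


def validate_batch(records) -> BatchReport:
    """Check each record before it would be entered into the CRIS."""
    report = BatchReport()
    seen = set()
    for record in records:
        # Completeness control: every required field must be non-empty,
        # including the unique identifiers for person and organization.
        problems = [f"missing {name}" for name in REQUIRED_FIELDS
                    if not record.get(name)]
        title = (record.get("title") or "").strip().lower()
        if title:
            # Assumed duplicate rule: same normalized title and year.
            key = (title, record.get("year"))
            if key in seen:
                problems.append("duplicate of an earlier record")
            seen.add(key)
        if problems:
            report.rejected.append((record, problems))
        else:
            report.accepted.append(record)
    return report


batch = [
    {"title": "A", "year": 2018, "author_id": "p1", "organisation_id": "o1"},
    {"title": "B", "year": 2018, "author_id": "", "organisation_id": "o1"},
    {"title": " a ", "year": 2018, "author_id": "p2", "organisation_id": "o1"},
]
report = validate_batch(batch)
for record, problems in report.rejected:
    print(record["title"].strip(), "->", ", ".join(problems))
print(f"accepted {len(report.accepted)} of {len(batch)} records")
```

Rejected records are kept together with their list of problems, so the same structure could feed a report at a different level (per researcher or per department) or be handed back to researchers for correction, in line with the iterative process mentioned above.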

3. Methodology

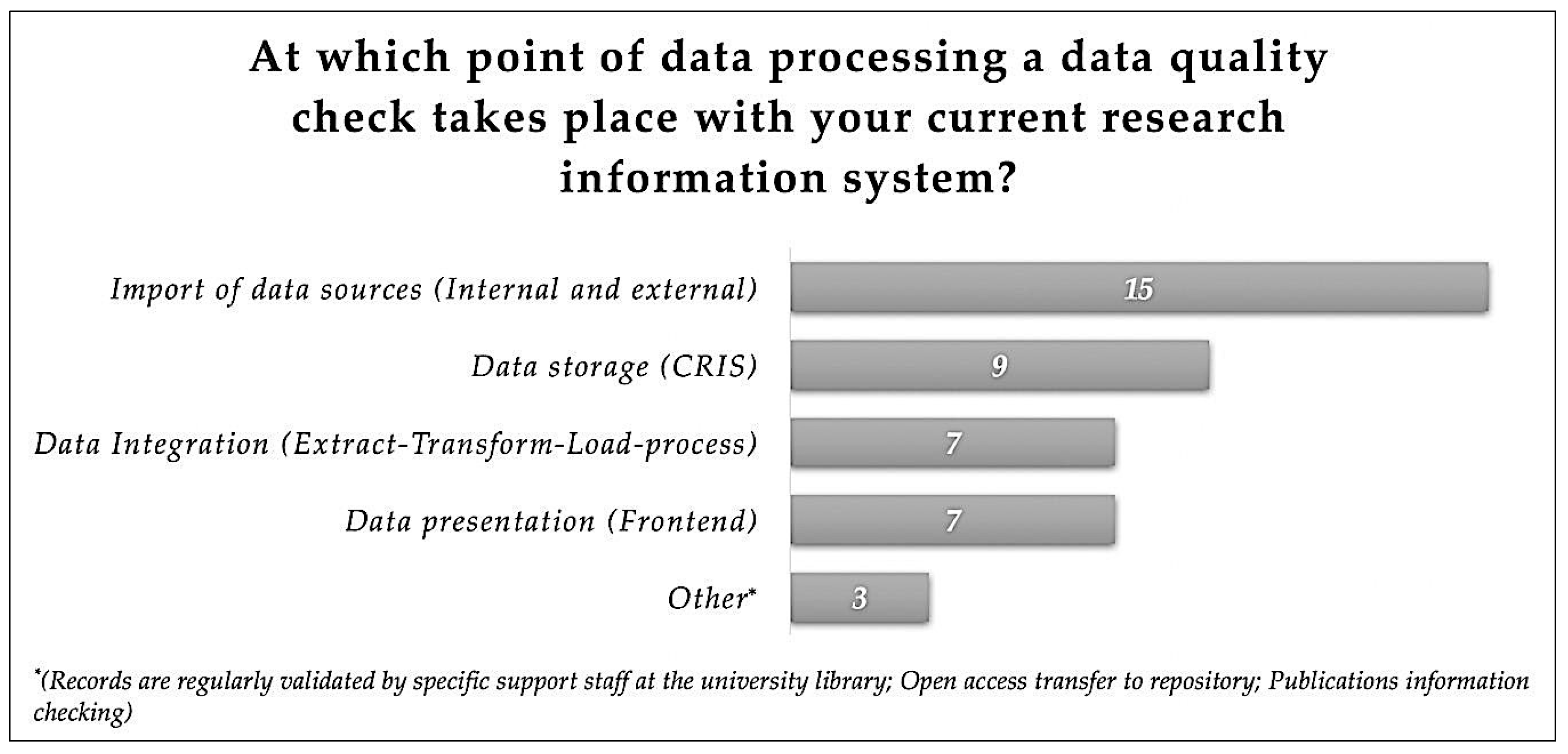

4. Results

5. Discussion

5.1. Data Cleansing

5.2. Data Monitoring

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Stempfhuber, M. Information quality in the context of CRIS and CERIF. In Proceedings of the 9th International Conference on Current Research Information Systems (CRIS2008), Maribor, Slovenia, 5–7 June 2008.

- Wang, R.Y.; Strong, D.M. Beyond accuracy: What data quality means to data consumers. J. Manag. Inf. Syst. 1996, 12, 5–33.

- Miller, H. The multiple dimensions of information quality. Inf. Syst. Manag. 1996, 13, 79–82.

- English, L.P. Information Quality Applied: Best Practices for Improving Business Information, Processes, and Systems; Wiley: Hoboken, NJ, USA, 2009.

- English, L.P. Seven Deadly Misconceptions about Information Quality; Information Impact International, Inc.: Brentwood, TN, USA, 1999.

- Evans, B.; Druken, K.; Wang, J.; Yang, R.; Richards, C.; Wyborn, L. A data quality strategy to enable FAIR, programmatic access across large, diverse data collections for high performance data analysis. Informatics 2017, 4, 45.

- Schöpfel, J.; Prost, H.; Rebouillat, V. Research data in current research information systems. In Proceedings of the 13th International Conference on Current Research Information Systems (CRIS2016), St Andrews, Scotland, 9–11 June 2016.

- Hahnen, H.; Güdler, J. Quality is the product is quality—Information management as a closed-loop process. In Proceedings of the 9th International Conference on Current Research Information Systems (CRIS2008), Maribor, Slovenia, 5–7 June 2008.

- Azeroual, O.; Saake, G.; Abuosba, M. Data quality measures and data cleansing for research information systems. J. Digit. Inf. Manag. 2018, 16, 12–21.

- Azeroual, O.; Saake, G.; Wastl, J. Data measurement in research information systems: Metrics for the evaluation of the data quality. Scientometrics 2018, 115, 1271–1290.

- Azeroual, O.; Saake, G.; Abuosba, M. Investigations of concept development to improve data quality in research information systems. In Proceedings of the 30th GI-Workshop on Foundations of Databases (Grundlagen von Datenbanken), Wuppertal, Germany, 22–25 May 2018; Volume 2126, pp. 29–34.

- Azeroual, O.; Abuosba, M. Improving the data quality in the research information systems. Int. J. Comput. Sci. Inf. Secur. 2017, 15, 82–86.

- Azeroual, O.; Saake, G.; Schallehn, E. Analyzing data quality issues in research information systems via data profiling. Int. J. Inf. Manag. 2018, 41, 50–56.

- Mugabushaka, A.M.; Papazoglou, T. Information systems of research funding agencies in the “era of the big data”. The case study of the research information system of the European Research Council. In Proceedings of the 11th International Conference on Current Research Information Systems (CRIS2012), Prague, Czech Republic, 6–9 June 2012.

- Ilva, J. Towards reliable data—Counting the Finnish open access publications. In Proceedings of the 13th International Conference on Current Research Information Systems (CRIS2016), St Andrews, Scotland, 9–11 June 2016.

- Krause, J. Current research information as part of digital libraries and the heterogeneity problem. In Proceedings of the 6th International Conference on Current Research Information Systems (CRIS2002), Kassel, Germany, 29–31 August 2002.

- Lopatenko, A.; Asserson, A.; Jeffery, K.G. CERIF—Information retrieval of research information in a distributed heterogeneous environment. In Proceedings of the 6th International Conference on Current Research Information Systems (CRIS2002), Kassel, Germany, 29–31 August 2002.

- Chudlarský, T.; Dvořák, J. A national CRIS infrastructure as the cornerstone of transparency in the research domain. In Proceedings of the 11th International Conference on Current Research Information Systems (CRIS2012), Prague, Czech Republic, 6–9 June 2012.

- Lingjærde, G.C.; Sjøgren, A. Quality assurance in the research documentation system frida. In Proceedings of the 9th International Conference on Current Research Information Systems (CRIS2008), Maribor, Slovenia, 5–7 June 2008.

- Johansson, A.; Ottosson, M.O. A national current research information system for Sweden. In Proceedings of the 11th International Conference on Current Research Information Systems (CRIS2012), Prague, Czech Republic, 6–9 June 2012.

- Rybiński, H.; Koperwas, J.; Skonieczny, A. OMEGA-PSIR—A solution for implementing university research knowledge base. In Proceedings of the 21st EUNIS Annual Congress, Dundee, Scotland, 10–12 June 2015.

- McCutcheon, V.; Kerridge, S.; Grout, C.; Clements, A.; Baker, D.; Newnham, H. CASRAI-UK: Using the CASRAI approach to develop standards for communicating and sharing research information in the UK. In Proceedings of the 13th International Conference on Current Research Information Systems (CRIS2016), St Andrews, Scotland, 9–11 June 2016.

- Jörg, B.; Höllrigl, T.; Sicilia, M.A. Entities and identities in research information systems. In Proceedings of the 11th International Conference on Current Research Information Systems (CRIS2012), Prague, Czech Republic, 6–9 June 2012.

- Ribeiro, L.; de Castro, P.; Mennielli, M. EUNIS—EUROCRIS Joint Survey on CRIS and IR; Final Report; ERAI EUNIS Research and Analysis Initiative: Paris, France, 2016.

- Biesenbender, S.; Hornbostel, S. The research core dataset for the German science system: Challenges, processes and principles of a contested standardization project. Scientometrics 2016, 106, 837–847.

- Van den Berghe, S.; Van Gaeveren, K. Data quality assessment and improvement: A Vrije Universiteit Brussel case study. In Proceedings of the 13th International Conference on Current Research Information Systems (CRIS2016), St Andrews, Scotland, 9–11 June 2016.

- Berkhoff, K.; Ebeling, B.; Lübbe, S. Integrating research information into a software for higher education administration—Benefits for data quality and accessibility. In Proceedings of the 11th International Conference on Current Research Information Systems (CRIS2012), Prague, Czech Republic, 6–9 June 2012.

- Nevolin, I. Crowdsourcing opportunities for research information systems. In Proceedings of the 13th International Conference on Current Research Information Systems (CRIS2016), St Andrews, Scotland, 9–11 June 2016.

- Azeroual, O.; Schöpfel, J.; Saake, G. Implementation and user acceptance of research information systems. An empirical survey of German universities and research organisations. Data Technol. Appl. submitted.

- Apel, D.; Behme, W.; Eberlein, R.; Merighi, C. Datenqualität Erfolgreich Steuern. Praxislösungen für Business Intelligence-Projekte, 3rd ed.; Dpunkt Verlag: Heidelberg, Germany, 2015.

- Hildebrand, K.; Gebauer, M.; Hinrichs, H.; Mielke, M. Daten- und Informationsqualität, Auf dem Weg zur Information Excellence; Springer Vieweg: Wiesbaden, Germany, 2015.

- Haak, L.; Baker, D.; Probus, M.A. Creating a data infrastructure for tracking knowledge flow. In Proceedings of the 11th International Conference on Current Research Information Systems (CRIS2012), Prague, Czech Republic, 6–9 June 2012.

| 1 | The nomenclature for research information systems is more or less unstandardized, including RIMS (Research Information Management System), RIS (Research Information System), RNS (Research Networking System), RPS (Research Profiling System) or FAR (Faculty Activity Reporting). In this paper, the preferred term is CRIS (Current Research Information System), because it is widely used in European countries, i.e., the main context of the survey. |

| 2 | Mainly based on the Dublin Core and bibliographic standards. |

| 3 | See, for instance, the work of CASRAI and euroCRIS. |

| 4 | euroCRIS, founded in 2002, is an international not-for-profit association that brings together experts on research information in general and research information systems (CRIS) in particular; see https://www.eurocris.org/. |

| Country | Number of Respondents |
|---|---|
| Netherlands | 3 |
| United Kingdom | 3 |
| Finland | 2 |
| Austria | 1 |
| Belgium | 1 |
| Norway | 1 |
| Russia | 1 |
| Serbia | 1 |
| Singapore | 1 |
| Slovakia | 1 |
| Sweden | 1 |
| Switzerland | 1 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Azeroual, O.; Schöpfel, J. Quality Issues of CRIS Data: An Exploratory Investigation with Universities from Twelve Countries. Publications 2019, 7, 14. https://doi.org/10.3390/publications7010014