Ten Hot Topics around Scholarly Publishing

Abstract

1. Introduction

2. Ten Hot Topics to Address

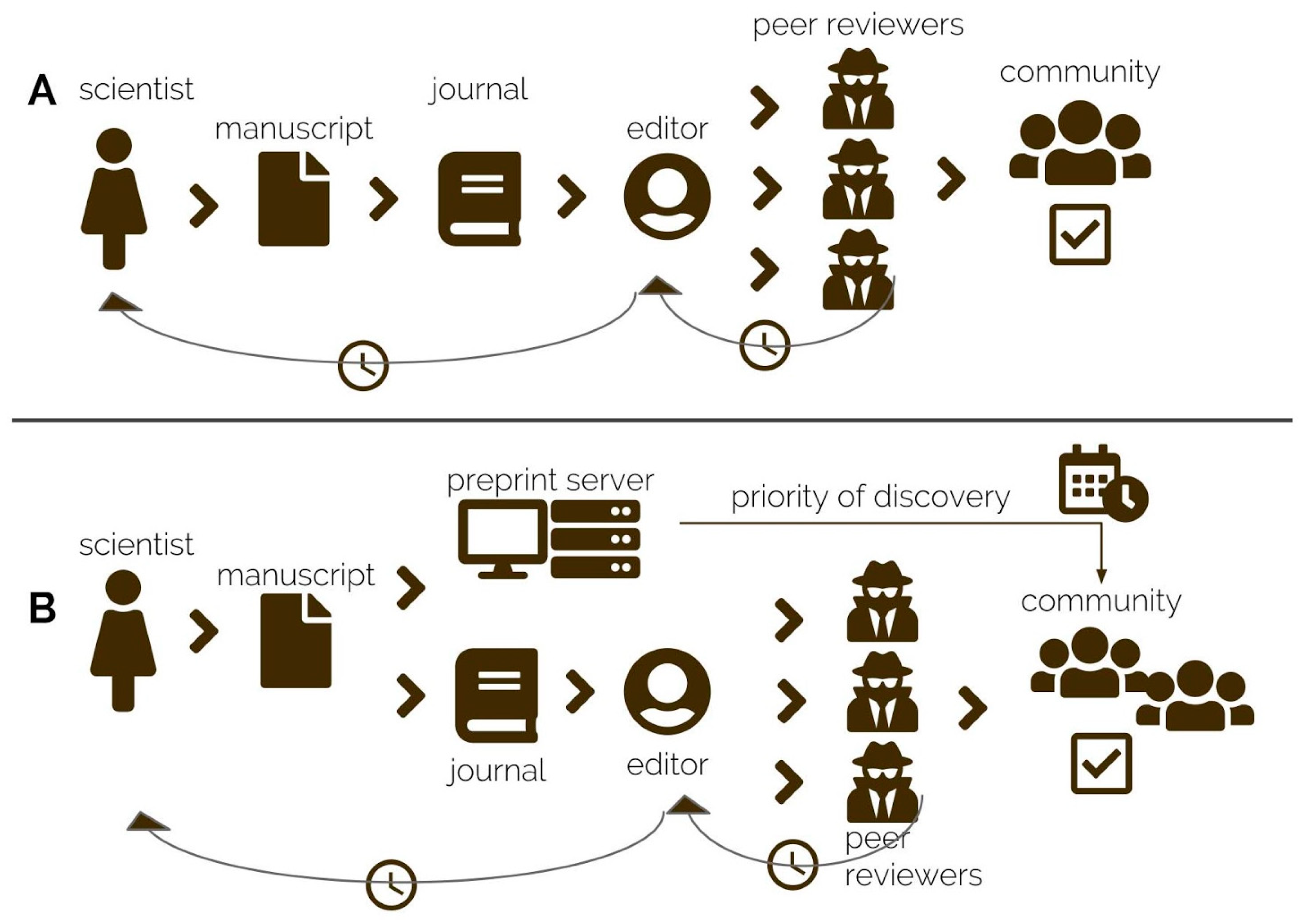

2.1. Topic 1: Will preprints get your research ‘scooped’?

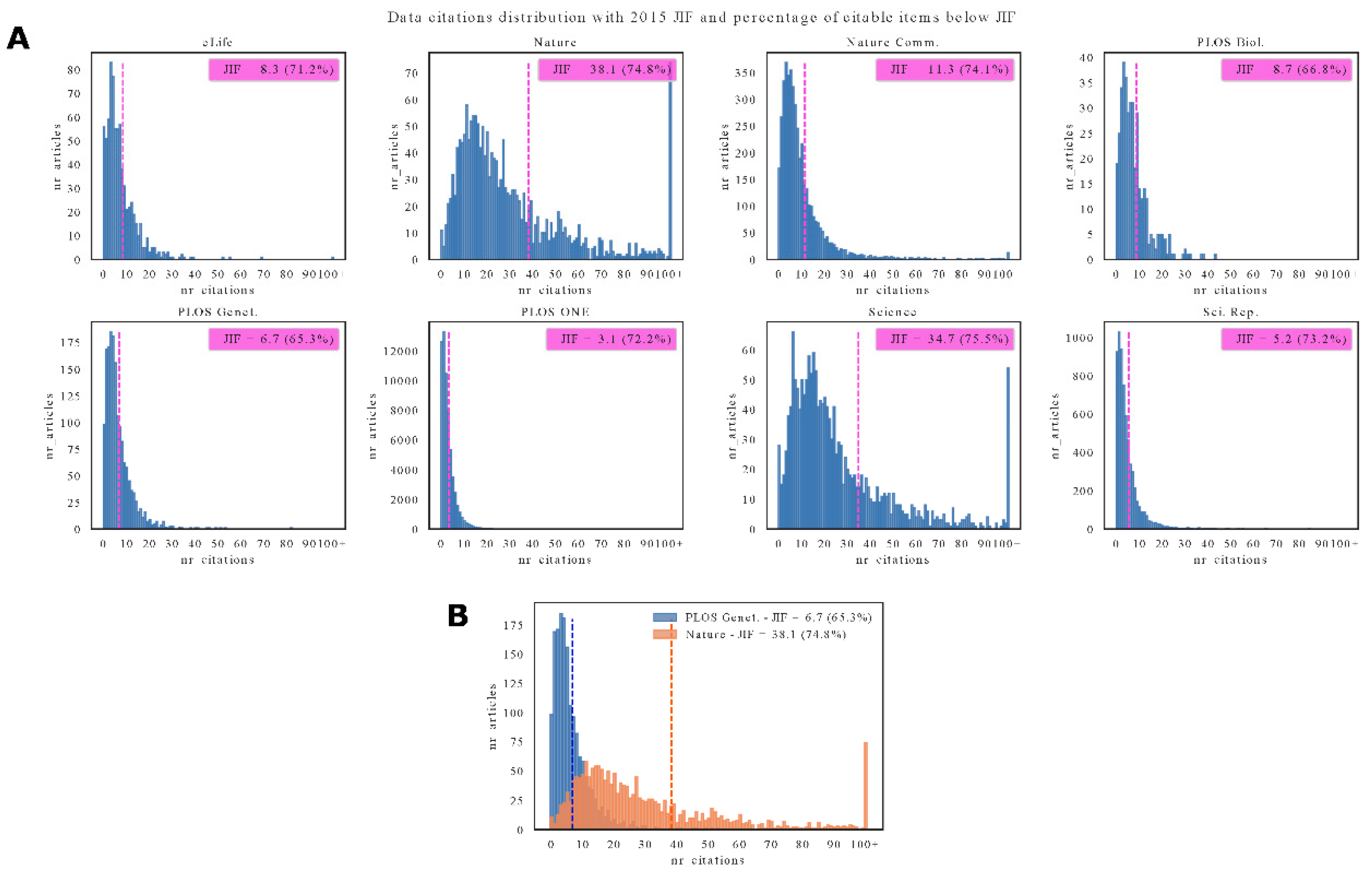

2.2. Topic 2: Do the Journal Impact Factor and journal brand measure the quality of authors and their research?

2.3. Topic 3: Does approval by peer review prove that you can trust a research paper, its data and the reported conclusions?

2.4. Topic 4: Will the quality of the scientific literature suffer without journal-imposed peer review?

2.5. Topic 5: Is Open Access responsible for creating predatory publishers?

2.6. Topic 6: Is copyright transfer required to publish and protect authors?

2.7. Topic 7: Does gold Open Access have to cost a lot of money for authors, and is it synonymous with the APC business model?

2.8. Topic 8: Are embargo periods on ‘green’ OA needed to sustain publishers?

2.9. Topic 9: Are Web of Science and Scopus global platforms of knowledge?

2.10. Topic 10: Do publishers add value to the scholarly communication process?

3. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Glossary

| Term | Definition |
| --- | --- |
| Altmetrics | Altmetrics are metrics and qualitative data that are complementary to traditional, citation-based metrics. |
| Article-Processing Charge (APC) | An APC is a fee that is sometimes charged to authors to make a work available Open Access in either a fully OA journal or a hybrid journal. |
| Gold Open Access | Immediate access to an article at the point of journal publication. |
| Green Open Access | Where an author self-archives a copy of their article in a freely accessible subject-specific, universal, or institutional repository. |
| Directory of Open Access Journals (DOAJ) | An online directory that indexes and provides access to quality Open Access, peer-reviewed journals. |
| Journal Impact Factor (JIF) | A measure of the yearly average number of citations to recent articles published in a particular journal. |
| Postprint | Version of a research paper subsequent to peer review (and acceptance), but before any typesetting or copy-editing by the publisher. Also sometimes called a ‘peer reviewed accepted manuscript’. |
| Preprint | Version of a research paper, typically prior to peer review and publication in a journal. |
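As a rough illustration of the Journal Impact Factor entry in the glossary, the widely used two-year form can be sketched as below. The symbols are introduced here for illustration only and do not appear in the original glossary: $C_y(t)$ is assumed to denote the citations received in year $y$ by items the journal published in year $t$, and $N_t$ the number of citable items the journal published in year $t$.

$$
\mathrm{JIF}_y = \frac{C_y(y-1) + C_y(y-2)}{N_{y-1} + N_{y-2}}
$$

For example, under these assumptions a journal that published 100 citable items across the two preceding years, and whose items from those years were cited 250 times in year $y$, would have $\mathrm{JIF}_y = 250 / 100 = 2.5$. Because this is a mean over a typically skewed citation distribution, it says little about any individual article.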


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tennant, J.P.; Crane, H.; Crick, T.; Davila, J.; Enkhbayar, A.; Havemann, J.; Kramer, B.; Martin, R.; Masuzzo, P.; Nobes, A.; et al. Ten Hot Topics around Scholarly Publishing. Publications 2019, 7, 34. https://doi.org/10.3390/publications7020034


Tennant, J. P., Crane, H., Crick, T., Davila, J., Enkhbayar, A., Havemann, J., Kramer, B., Martin, R., Masuzzo, P., Nobes, A., Rice, C., Rivera-López, B., Ross-Hellauer, T., Sattler, S., Thacker, P. D., & Vanholsbeeck, M. (2019). Ten Hot Topics around Scholarly Publishing. Publications, 7(2), 34. https://doi.org/10.3390/publications7020034