1. Introduction

Reservoir simulations play critical roles in reservoir management, as simulators provide one way to validate production plans in the early stages and to predict future oil and gas production. Many simulators have been developed and applied over the past decades, such as CMG STARS and Eclipse. Those simulators have been widely used in reservoir management. For example, CMG STARS is the most popular thermal reservoir simulator, which has been developed for around 40 year. It has been applied worldwide in various thermal recovery processes, such as in situ combustion, CSS (Cyclic Steam Stimulation) and SAGD (Steam-Assisted Gravity Drainage). When multiple chemicals are considered in a model, more physics features are applied, or if the geological model is complex, a simulator may take very long to complete one simulation. A model may be run dozens of times to match history and to optimize oil production so the long running time reduces the productivity of reservoir engineers. Acceleration of simulations is important to oil and gas industry.

Reservoir simulation is an interdisciplinary research topic, which involves petroleum modeling, applied mathematics, computational methods, and computer sciences. It has been studied for decades, and various models and methods have been proposed [

1]. Crookston et al. [

2] proposed a simple two-dimensional model, which handled three-phase flow and vaporization–condensation effects. Grabowski and his collaborators developed a sequential implicit method for thermal reservoir model [

3]. Coats was a pioneer in reservoir simulations, and he developed models and numerical methods for black oil model, compositional model and thermal model, including in situ combustion mode [

4]. In [

4], a general four-phase multicomponent in situ combustion model was proposed by Coats, which was improved later by Rubin [

5]. Zhu et al. proposed new upscaling methods for their simulators [

6,

7]. There are a few popular numerical schemes for thermal and compositional models, such as variable substitution [

4] methods and pseudo-equilibrium ratio (PER) methods [

2]. The variable substitution method changes variables, such as temperature and saturation, depending on the phase status, while the PER method assumes phases do not disappear and modifies the

K-value calculation formula. Both methods have been employed in simulator development. Another scheme was also proposed by Mifflin et al. [

8], which utilized global variables, such as pressure, moles, and energy as unknowns. For the global variable scheme, phase status is computed after obtaining the global variables. Numerical methods and algorithms were proposed by Barua [

9] to improve the nonlinear system and linear system. A key to reservoir simulations is to develop effective solution techniques, especially preconditioners. Many preconditioners have been proposed to accelerate the solution of linear systems, such as constrained pressure residual (CPR) methods [

10,

11]; multiple level preconditioners [

12]; multi-stage methods [

13]; CPR-FP, CPR-FPF, and CPR-FFPF methods [

14]; and FASP (fast auxiliary space preconditioners) [

15,

16]. Parallel computers have more memory and better performance, which provide excellent approaches to accelerate reservoir simulations [

17,

18,

19,

20,

21,

22,

23,

24,

25,

26,

27,

28]. Wang [

29,

30] implemented a fully implicit equation-of-state compositional simulator for distributed-memory parallel computers, and large-scale reservoir models were simulated [

31]. Reservoir models with millions of grid blocks on parallel computers were reported [

32]. Killough [

33] reviewed the parallel reservoir models and parallel computing technologies. Saudi Aramco developed new-generation massively-parallel reservoir simulator [

34,

35,

36,

37]. Zhang et al. developed a scalable general-purpose platform for parallel adaptive finite element methods and adaptive finite volume methods [

38,

39], which was applied to reservoir simulations [

40].

This paper introduces our work on developing a parallel thermal simulator, including a mathematical model and numerical methods. The simulator works for three-dimensional domain and has three phases: water, oil, and gas. The model has one water component, arbitrary oil components, and arbitrary non-condensable gas components. Here, water is assumed to stay in the water and gas phases. Oil components have two types: heavy oil components and light components. The heavy oil components stay in oil phase only but the light oil components exist in oil phase and gas phase. The distribution of water and light oil in gas phase is determined by pressure, temperature, K-values and composition of water, and light oil and non-condensable gas components. This paper gives full mathematical details of a thermal model, including mass conservation law, energy conservation law, phase equilibrium calculation, density, viscosity, enthalpy, relative permeability, and well modeling. Numerical methods are introduced, especially physics-based preconditioning methods, including decoupling methods and preconditioners. In the numerical experiment section, our simulator is compared with CMG STARS (a popular commercial reservoir simulator), and results show that they match very well, from which we can conclude our implementations are correct. Furthermore, numerical testings show that our numerical methods are effective. From the scalability testings, we can see that the thermal simulator has good scalability, and it can compute large-scale thermal reservoir models. A scalable simulator enables us to run large models and to calculate a model in shorter simulation time. Furthermore, as we can use hundreds of computation nodes and lots of memory, we can model physical problems in a much smaller scale, such as nanoscale.

The structure of the paper is as follows. In

Section 2, the thermal reservoir model is introduced and the equations for various properties are presented. In

Section 3, numerical methods and parallel computing approaches are proposed. In

Section 4, numerical experiments are carried out to validate our results against commercial simulator, CMG STARS, and to show the scalability of the parallel thermal simulator.

2. Mathematical Model

Most simulators share the same theory framework [

1,

41,

42,

43,

44]. For the sake of completeness, the mathematical model of the thermal simulator is introduced here, and the models are almost the same as in [

1,

41,

42,

43,

44,

45]. Some content of this section is borrowed from our previous paper [

1,

44], CMG STARS [

41], and the textbook in [

45]. In [

1], the following assumptions were made: water component exists in water and gas phases; all oil components exist in oil and gas phases, which means all oil components are light oil; non-condensable gas components exist in gas phase only; and all three phases co-exist during the entire simulation. Furthermore, the PER method is applied to one light oil component only, in which the light oil is the one has the largest molecular weight. In this paper, different assumptions are made: the water component exists in water and gas phases, heavy oil components exist in oil phase only, light oil components exist in both oil and gas phases, and non-condensable gas components exist in gas phase. Gas phase appearance and disappearance are allowed. Depending on the input, arbitrary oil components and non-condensable gas components are allowed.

2.1. Darcy’s Law

The fluid is assumed to be Darcy flow, and the Darcy’s law is applied to model the velocity of a fluid phase, which defines the relation among permeability, viscosity, saturation, pressure difference, gravity, and temperature. The thermal model has three phases, water phase (

w), oil phase (

o), and gas phase (

g) [

1,

45]:

Here, is velocity, is relative permeability, is viscosity, is permeability, is pressure, and z is depth.

2.2. Mass Conservation Equations

For a multi-phase multicomponent reservoir model, we use

,

, and

to denote the mole fraction of a component in the

-phase, the mole number of a component in the phase, and the total mole number of the phase, respectively [

1,

45]. Then, the mole fraction of a component in a phase is defined as

If only water exists in the water phase,

. If the gas phase exists, it may contains water, light oil, and non-condensable gas components. Here,

y is employed to note gas mole fraction,

where

is total chemicals in gas phase. Moreover, if the oil phase exists,

x is employed to note oil mole fraction,

where

is total chemicals in oil phase.

A component

c may exist in several phases, so its mass at a reservoir is summation of masses in all phases, which is written as below [

45]:

where

is density and

is well rate.

The water, oil, and gas saturation have the following constraint:

2.3. Energy Conservation Equation

The energy conservation equation for a thermal reservoir model [

45] is described as

where

U denotes the internal energy and

H is enthalpy. This equation considers fluid energy, rock heat energy, heat conduct, heat loss, and energy changes through wells. The heat conduction is noted by

, the bulk thermal conductivity, which is a combination of liquid and rock, where a linear mixing rule is applied [

41],

In the equation,

denote thermal conductivities for water phase, oil phase, gas phase, and rock, respectively. This rule is also called simple mixing rule (CMG STARS) [

41]. A more complicated model is also available [

41].

Note that there are different ways to model rock internal energy:

. In the above equation, the porosity,

, is a function of pressure and temperature, and

is a function of temperature, so the rock internal energy is a function of pressure and temperature. This method assumes the volume of a grid block does not change but

changes depending on pressure and temperature. Another way is to assume the rock volume,

, does not change, even if

changes during the simulation. This method preserves the rock energy and mass. The second method is applied as the default method by us and CMG STARS [

41]. A heat loss term is modeled by the semi-analytical method developed by Vinsome et al. [

46].

2.4. Capillary Pressure

Capillary pressure

is the pressure difference between two phases, which are usually functions of saturation [

45]:

from which we can see if we know the oil phase pressure, water saturation, and gas saturation, the water phase pressure and the gas phase pressure can be computed.

2.5. Phase Equilibrium Constraints

The

K-value (or an equilibrium ratio) is defined as the ratio of the mole fractions of a component in two phases (gas phase vs. water phase and gas phase vs. oil phase in this paper):

Here, a

K-value of water component and light oil component is a function of pressure and temperature, which is calculated using the following method:

The K-value is valid only if the gas phase exists. Furthermore, each chemical has different parameters. The five parameters are user input.

In our thermal model, the calculations of

K-values are modified, where the PER (Pseudo-Equilibrium Ratios) method [

2,

47] is applied for water and light oil,

In calculations of pseudo K-values, is a small number of the order of . The water phase and oil phase exist through the entire simulation. However, the gas phase is allowed to disappear. The gas phase mole fraction for the oil components and water component are functions of .

2.6. Phase Changes

Gas phase is allowed to reappear and disappear, which has to be checked and determined in each Newton iteration. The K-value only validates when gas phase exists.

When gas phase exists, its saturation, , is positive. If a non-positive gas saturation is detected, then gas phase disappears. is set to 0.

When gas phase does not exist,

is 0. If the following relationship is detected:

then gas phase reappears. A small gas saturation is set, such as

. A cell type boolean variable is applied to store the gas phase status.

2.7. Compressibility Factor of Real Gas

The gas phase mole density is calculated as

where

Z is calculated by EOS equation.

In the thermal model, the Redlich–Kwong EOS [

48] is used to calculate the Z factor.

where

p is pressure,

T is temperature,

is critial pressure, and

is critical temperature. These represent the user input for a gas component, which is determined by a lab.

In addition, the following mixing method is applied:

Then, after we have the coefficients A and B, the compressibility factor of real gas satisfies the equation [

41]

This cubic equation has three roots, in which the biggest real root is chosen [

45]. The

Z factor is a function of

p,

T,

,

,

, and

.

2.8. Density

The water component and oil components have the same equation:

where

is the reference density of a component at the reference temperature,

, and pressure, and

,

,

,

, and

are parameters. The density of oil phase,

, which is mixture of multiple oil components, is calculated as

2.9. Viscosity

There are a few ways to calculate viscosity, such as table input and analytical correlations. For the table input method, interpolations are required to calculate the viscosity of a component or a phase at a given temperature. In the following, an analytical method is introduced for oil, water, and gas.

The viscosity of oil component in oil phase and water component in water phase has the same formula, which is a function of temperature,

where

and

are parameters. The viscosity of oil phase is computed by a mixing rule,

The viscosity of a component in gas phase is calculated as

where

and

are parameters. The gas phase viscosity is calculated as

where

is molecular weight of

i-th component.

2.10. Porosity

Porosity is the ratio of the pore volume to the bulk volume, which changes as pressure and temperature change. A total compressibility of porosity [

45] is defined as

which defines a function of pressure and temperature. There are two ways to model porosity, linear, and exponential. Linear model is described as

and exponential model is described as

The linear model is applied by default.

2.11. Relative Permeabilities

There are two ways for calculating relative permeabilities: The first one is to use analytical correlations, and the second one is to use input tables. The water phase relative permeability,

, is a function of

(and/or temperature):

The gas phase relative permeability,

, is a function of

(and/or temperature):

Sometime temperature is considered, a set of relative permeabilities are provided for each temperature. Furthermore, a reservoir model may have several rock types, and each rock type has one set of relative permeabilities.

Several models are available [

49,

50,

51,

52] to calculate oil phase relative permeability,

. In this paper, the Stone’s model II method [

53] is applied, which is defined as

where

is the oil–water relative permeability to oil at connate water saturation,

is the oil–gas relative permeability to oil, and

is the oil–water relative permeability to oil.

2.12. Energy

There are a few ways to model enthalpy (energy), such as a gas-based model and a liquid-based model. Here, the gas-based model is introduced. The enthalpy of a gas component is calculated as follows [

41]:

,

,

,

, and

are constants for component

i. The gas phase enthalpy can be calculated by a weighted mean with gas mole fractions

:

For the oil and water components, vaporization is considered, which can be calculated by

However, if an oil component is heavy oil, which stays as liquid (oil phase), the vaporization energy is zero. The enthalpy of a liquid component is calculated as

where

is the enthalpy of component

i in the gas phase. The water phase enthalpy is

The enthalpy of oil phase is

The internal energy for oil, gas, and water phases [

41] are calculated as

For rock, a similar formula is used:

The internal energy for rock has a unit of energy per unit volume, while others have energy per mole. As mentioned above, there are two ways to calculate the volume of rock. The following equation defines relationship among bulk volume

, rock volume

, and pore volume

,

where

is calculated by porosity correlations and

is used when calculating the internal energy of rock. The first one assumes the volume of rock (non-null) does not change, which is noted as constant rock. It assumes that

is constant, which preserves rock mass and heat, and

changes as

changes. The second one assumes that the volume of the grid block does not change, which is noted as constant bulk. It means

is constant and

(

) changes as

changes. The default method is the first one, rock constant.

2.13. Well Modeling

Peaceman’s model is adopted for well modeling. A well may have different directions, such as vertical and horizontal, and it may have many perforations, each of which belongs to a grid cell (block). Each perforation has its own properties, such as well index, rates, and mobility. Each well has its own properties, such as well rates and bottom hole pressure. For a perforation, its well rate for phase

,

, is calculated by the following formula [

54]:

where

WI is the well index and mobility,

, is explicit or implicit. Explicit means its value is from the last time step, while implicit means its value is from the last Newton iteration. Well rate can also be calculated using a third method, unweighted method,

where

is user input value. A well index defines the relationship among a well bottom hole pressure, a flow rate, and a grid block pressure.

is the bottom hole pressure defined at the reference depth z,

is the depth of the perforation in grid cell, and

is the phase pressure in grid block

m. Well index can be read from modeling file and it can also be calculated using analytical method. For a vertical well, it can be defined as

where

is equivalent radius,

is well fraction, and

is completion factor. Horizontal well is defined similarly. We should mention that well modeling is the most complicated part in reservoir simulations and various operation constraints can be defined, such as fixed bottom hole pressure, fix liquid, and gas rate constraints and thermal constraints.

2.13.1. Fixed Bottom Hole Pressure

When the fixed bottom hole pressure condition is applied to a well, the well equation is written as

where

c is pressure and is a constant. The bottom hole pressure is defined at a reference depth or a grid block. If neither is provided, the grid block containing the first perforation serves as the reference grid block.

2.13.2. Fixed Rates

Fixed rate constraints are commonly used, including fixed oil rate, fixed water rate, fixed gas rate, and fixed liquid rate (oil and water). The rate can be reservoir rate or surface rate. The volume of a fluid in reservoir condition can be obtained easily. However, the volume of a fluid in surface condition requires a flash calculation to determine the distribution in oil, water, and gas phases. There are two ways to separate phase: segregated method and PT-flash method. The segregated method is easy but the PT-flash is tricky. The segregated method is the default. For phase

, its fixed rate constraint is described by the following equation:

where

c is a constant rate and known. The fixed liquid rate is written as

The fixed total fluid rate is written as

2.14. Boundary Conditions

A no-flow boundary condition is applied to fluid, which is coupled with each mass conservation equation. For the energy conservation equation, heat loss to underburden and overburden is considered, which is modeled by a semi-analytical method [

46]. Each well may have multiple constraints, which is user input. They are determined and switched dynamically during the simulations.

2.15. Initial Conditions

A few initial methods are supported. The easiest one is to use explicit initial conditions, such as pressure, temperature, mole fraction, and saturation. Another one uses the gravity average, in which the pressure is calculated by depth difference to reference depth (grid block). The saturation, mole fractions, and temperature can be computed or be given by user input.

3. Numerical Methods

In our previous previous work, a few reservoir simulators and their numerical methods have been reported [

14,

55,

56]. The simulators share similar methods, such as time discretization scheme, spatial discretization scheme, decoupling method, linear solver, and preconditioners [

14]. For the sake of completeness, the numerical methods are introduced in this section. There are two main sets of unknowns: natural variables and overall variables. The natural variables use pressure, temperature, saturation, and mole fractions. The overall variables use pressure, temperature, and overall mole fractions. Phase changes have to be checked in each nonlinear iteration and time step if we use natural variables. When liquid and gas phases co-exist, temperature and pressure are not independent, and only one variable is required, such as pressure or temperature. Some researchers applied the variable substitution trick to switch unknowns and to save computation. To overall variables, phase status is determined after obtaining solutions. In this paper, a fully implicit method is employed, which is friendly to large time step and to accuracy. However, it is possible to apply some techniques to speed simulation, such as adaptive methods.

In this paper, one additional equation is adopted, when enables us to treat pressure and temperature as independent variables through the entire simulation, which only introduces a little more computation but simplifies the numerical treatment and linear systems.

3.1. Spatial Discretization

The natural variables are applied as unknowns, which are also called Type A variables, including pressure, temperature, saturations, and mole fractions (oil components in oil phase and non-condensable gas in gas phase). The variables do not change during the simulation. However, depending on the gas phase status, one constrained equation is switched. If gas phase exist, the following equation is applied:

If gas phase does not exist, the following equation is switched:

The status of gas phase has to be checked block by block in each Newton iteration.

When fluids move in a reservoir, there may be fluid exchange in two neighboring grid blocks, which is described by transmissibility. Assuming

is a space direction and

A be the area of a face in the

d direction, the transmissibility

for phase

is defined as

where

is the grid block length in the

d direction,

K is the absolute permeability,

is the relative permeability of phase

,

is the viscosity of phase

, and

is the mole density of phase

. The transmissibility is defined on each face of a grid block. If a face is an internal face shared shared by two grid blocks, its value is the same for these two blocks. If the face is a boundary face, the transmissibility is zero, as the no-flow boundary condition is applied. Different weighting schemes must be applied to average different properties at an interface. The left part,

, is geometric properties, and the harmonic averaging method is applied. The right part,

, relies on fluid properties, and the upstream averaging method is applied [

45]. The upstream finite difference method is employed to discretize the model.

3.2. Linear Solver

The Jacobian matrix from Newton method is highly ill-conditioned, and the Krylov subspace solvers are applied to solve the linear system

. The key to an effective solution method is to choose a proper preconditioner

M, which should be easy to setup and effective. In our previous work, a family of scalable CPR-type methods [

14] has been developed for reservoir simulations, which have been applied to black oil model, compositional, in situ combustion, and the general thermal model in this paper. The unknowns are numbered grid block by grid block and the resulted matrix in each iteration is block-wise,

where each sub-matrix

is a square matrix. In-house distributed-memory matrix, vector, and their operations have been developed, such as adding entries, assembling, getting sub-matrix (for CPR-type preconditioners), factorization, sparse BLAS, and point-wise and block-wise matrices. Base on these operations, internal parallel solvers, such as GMRES, LGMRES, CG, and BICGSTAB, and preconditioners, such as RAS, AMG, CPR-FP, CPR-PF, CPR-FPF, ILU(k), and ILUT, have been implemented.

3.3. Decoupling Methods

A proper decoupling method is critical to the success of the CPR-type preconditioners. In general, the decoupling method is applied before applying the CPR-type preconditioners, which converts the original linear system to an equivalent linear system,

Several decoupling methods have been proposed, such as Quasi-IMPES, True-IMPES [

57], Alternate Block Factorization (ABF) [

58], full row sum (FRS), and dynamic row sum (DRS) [

59] methods. The idea of ABF method is simple, which is defined as

It converts the block diagonal part to identity matrix. This method requires to calculate the inverse of each diagonal part, and the matrix–matrix multiplications are performed for each sub-matrix. The FRS decoupling method is described as

where,

The diagonal part and the first row are 1 and all other locations are 0, which means to add the all rows to the first row. The DFS decoupling method is a simplified version of the FRS method, and details can be read in [

59].

The Guass–Jordan elimination (Gauss elimination; GJE) method [

1] has been used to solve linear systems. Its idea is to convert

to an equivalent linear system

by Gauss–Jordan elimination method, and the

is the final solution. In this paper, it is adopted as a decoupling method and is applied grid block by grid block to turn the diagonal matrices to identity matrix. Pivoting technique is used and only row reordering is involved. Since the decoupling is processed block by block, no communication is required, which is friendly to parallel computing. The GJE decoupling is more efficient than the ABF method. These decoupling methods are naturally parallel, and they have ideal scalability for parallel computing.

When the CPR-type preconditioners are applied to reservoir simulations, it is important to keep the pressure matrix positive definite. The FRS method helps to enhance this property, from which the CPR-type preconditioners can benefit. In the first stage, FRS or DRS methods are applied; then, ABF or GJE methods are used as the second stage. In this case, two-stage decoupling methods are developed, which are noted as FRS + ABF, FRS + GJE, DRS + ABF, and DRS + GJE.

3.4. Preconditioners

Several scalable CPR-type preconditioners have been proposed [

14], such as CPR-FP, CPR-PF, CPR-FPF, and CPR-FFPF methods. According to our practices, the CPF-FPF method, which is a three-stage preconditioner, is effective for black oil model and thermal model. The first step is to solve an approximate solution using restricted additive Schwarz (RAS) method, the third step is to solve the sub-problem by algebraic multi-grid method (AMG), the fifth step is to get an approximate solution again using restricted additive Schwarz method, and the second step and the forth step are to calculate residual.

It is well-known that the RAS method is scalable for parallel computing. Parallel AMG method is also scalable. The default overlap of the RAS method is 1. If it’s 0, then it is equivalent to block Jacobi method. When there are too many MPI processes, we may increase the overlap to maintain convergence of the preconditioner, such as 2 and 3. However, more communications are introduced in the setup phase of the RAS method, which needs to construct a local sub-problem by requesting more entries of the distributed matrix from other MPI processes. The sub-problem in each MPI process (CPU core) from RAS method is solved by ILUT by default, which can also be solved by ILU(k) or block ILU(k) [

14]. The size of the lower triangular matrix and the upper triangular matrix can be reduced by dropping small entries or using smaller

p for ILUT and

k for ILUK, and the convergence of ILU methods should be well balanced. The recommended level (

k) for ILUK is 1. The AMG is from Hypre [

60].

We should mention here that the RAS method requires very few communications. In each solution phase, it requires some off-process component information of the right-hand side. After this, no communication is required. In parallel computing, the RAS method has ideal scalability. If overlap 0 is applied, there is no communication during the solution phase. The AMG method also has good scalability. In addition, the decoupling methods are local, which means there is no communication. We can see that the solution methods here are designed for parallel computing and good scalability is ensured.

3.5. Parallel Computing

The key to scalability is to partition the given grid in a proper way. The partitioning method should minimize the total communications and maximal communication per MPI. Furthermore, each sub-grid should be connected with a few other sub-grids. In our simulator, two methods are supported. The first one is a graph partitioning method, which sees each grid as a dual graph. The dual graph models communication patterns. When the grid is partitioned, we make sure the cut edges are minimized, which makes sure communications are minimized. The package ParMETIS provides excellent partitioning speed and quality. The second method is to use geometry-based method. In our simulator, an in-house Hilbert space-filling curve method is implemented, which maps all grid blocks to . Then, the interval is partitioned into m sub-intervals, and each interval is assigned to one MPI process. Grid blocks belong to the same interval belong to the same MPI process.

The grid partition determines the grid distribution and communication pattern, which is the key to scalability. Furthermore, each MPI forms its own part of the Jacobian system. The grid partition also determines the scalability of RAS method and its convergence. Assuming that non-overlapped RAS is chosen, then each MPI extracts a diagonal matrix from its local Jacobian system. If overlapped RAS is applied, then neighbors of each local diagonal matrix is determined by the matrix structure, which is from the grid partition. We can see that the grid partition is the key to simulator scalability and solver scalability.

If we keep the grid unchanged, then each sub-grid keeps the same, which means the communication pattern does not change. The pattern can be saved and attached to the grid. When communications are required, each sub-grid knows where to send data, where to receive data, and how much data should be exchanged. By storing the communication pattern, the communication operations are easy to perform. When we compute sparse matrix vector multiplication, a similar communication pattern can be computed and attached to a matrix.

In parallel simulation, a large data file may be read and written to initialize the reservoir and to save results. In our simulator, the MPI-IO is applied to read and write in parallel. The simplest way to do this is to save a grid data in one file, such as pressure and saturation. The read and write operations are easy to implement. Another way is to save data in multiple column format. For example, the first column is pressure, the second one is density, and the third one is temperature. It is easy for MPI-IO to handle the formatted data file. In our implementation, we assume each column has a length of 20 letters, so we can compute the offset in relative easy way, where the offset is required by MPI-IO.

4. Numerical Studies

Numerical experiments are presented here, which occupy a few sections. The first section validates our results against CMG STARS, which is the most widely applied thermal simulator. The purpose is to prove the correctness of our numerical methods, models, and implementation. The second section studies numerical performance of our methods. The third section tests the scalability of our thermal simulator using some giant models. In the following sections, each MPI runs on one core, and the number of MPI processes is the same as the number of CPU cores. A better way is each MPI runs on one CPU and multiple threads run on the multi-cores. In our simulator, the OpenMP is disabled by default. The reason we disable OpenMP is that efficient usage of OpenMP requires us to change a lot of codes, especially that the solver needs major re-factorization, which is our future work.

4.1. Validation

This section has two validation models. The first one has water heavy oil. The second one has water, heavy oil, light oil, and non-condensable gas (NCG). They are created to validate if our results match commercial simulator when equivalent models are used, where CMG STARS is employed. The CMG STARS is the most popular thermal simulator.

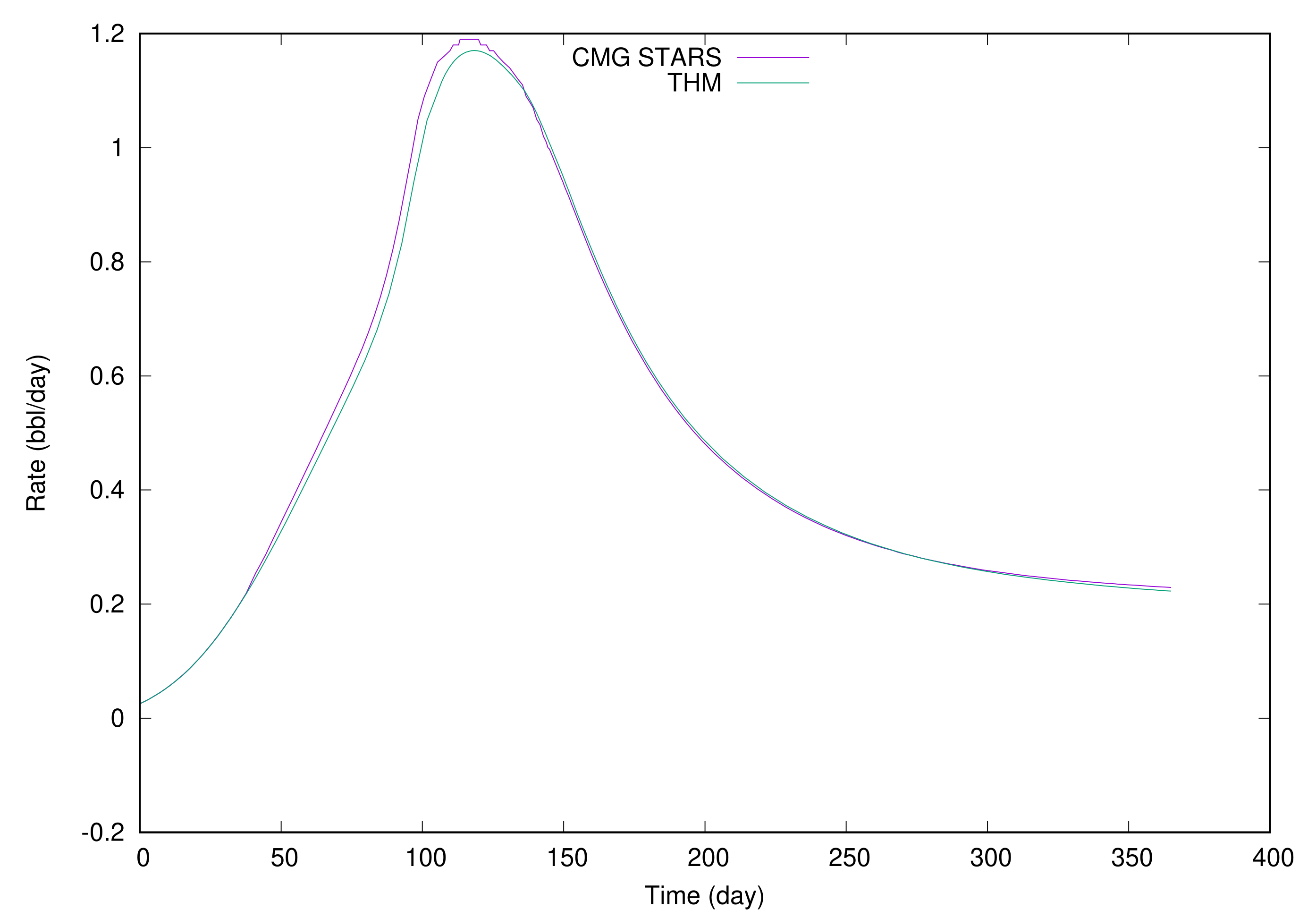

4.1.1. Heavy Oil

Example 1. This model has water and one heavy oil component. The heavy oil component stays in oil phase only. It has three vertical wells: one injection well at (1,1) and two production wells at (9, 1) and (5, 5). Table 1 and Table 2 show the oil–water relative permeability curve and liquid–gas relative permeability curve. Here, the capillary pressures are ignored. Table 3 presents chemical properties. Table 4 shows well data, including well index and operations. Table 5 gives initial conditions. The simulation period is 365 days and the initial time step is day. The model is small, and its grid dimension is . The grid size is . The standard Newton method is applied to solve the nonlinear system, in which the termination tolerance is and the maximal iterations are 10. The linear solver is BICGSTAB and the preconditioner is CPR-FPF, in which the tolerance is 0.0001 and the maximal iterations are 100. The GJE decoupling method is applied to the linear systems. All wells apply the implicit modeling. Each perforation of the production wells is heated at Btu/day. Figure 1, Figure 2, Figure 3, Figure 4, Figure 5, Figure 6, Figure 7 and Figure 8 show bottom hole pressure and liquid rates, which are compared with CMG STARS. Figure 1 is the bottom hole pressure of the injection well. In this figure, the results from our simulator are marked as “THM” and the results from CMG STARS are marked as “CMG STARS”. From now on, all results are marked the same way. From

Figure 1, we can see that the bottom hole pressures from two simulator match exactly. In the beginning, the bottom hole pressures change dramatically.

Figure 2 is the water rate of the injection well. The model has an injection rate of 100 bbl/day.

Figure 2 shows that both simulators have the correct injection rates and the error is small.

Figure 3,

Figure 4 and

Figure 5 show the water production rate of the first production well, the water production rate of the second production well, and the total water production rate of all production wells. All three figures show that our simulator has the same results as CMG STARS in the early stage. However, as each simulator has its own internal numerical tunings, the curves have slight differences, which are totally acceptable in real production.

Figure 6,

Figure 7 and

Figure 8 are the oil production rate of the first production well, the oil production rate of the second production well, and the total oil production rate of all production wells. Again, we can see that our results match CMG STARS. In this example, we can conclude that our results match CMG STARS very well, which confirms our methods and implementation are correct.

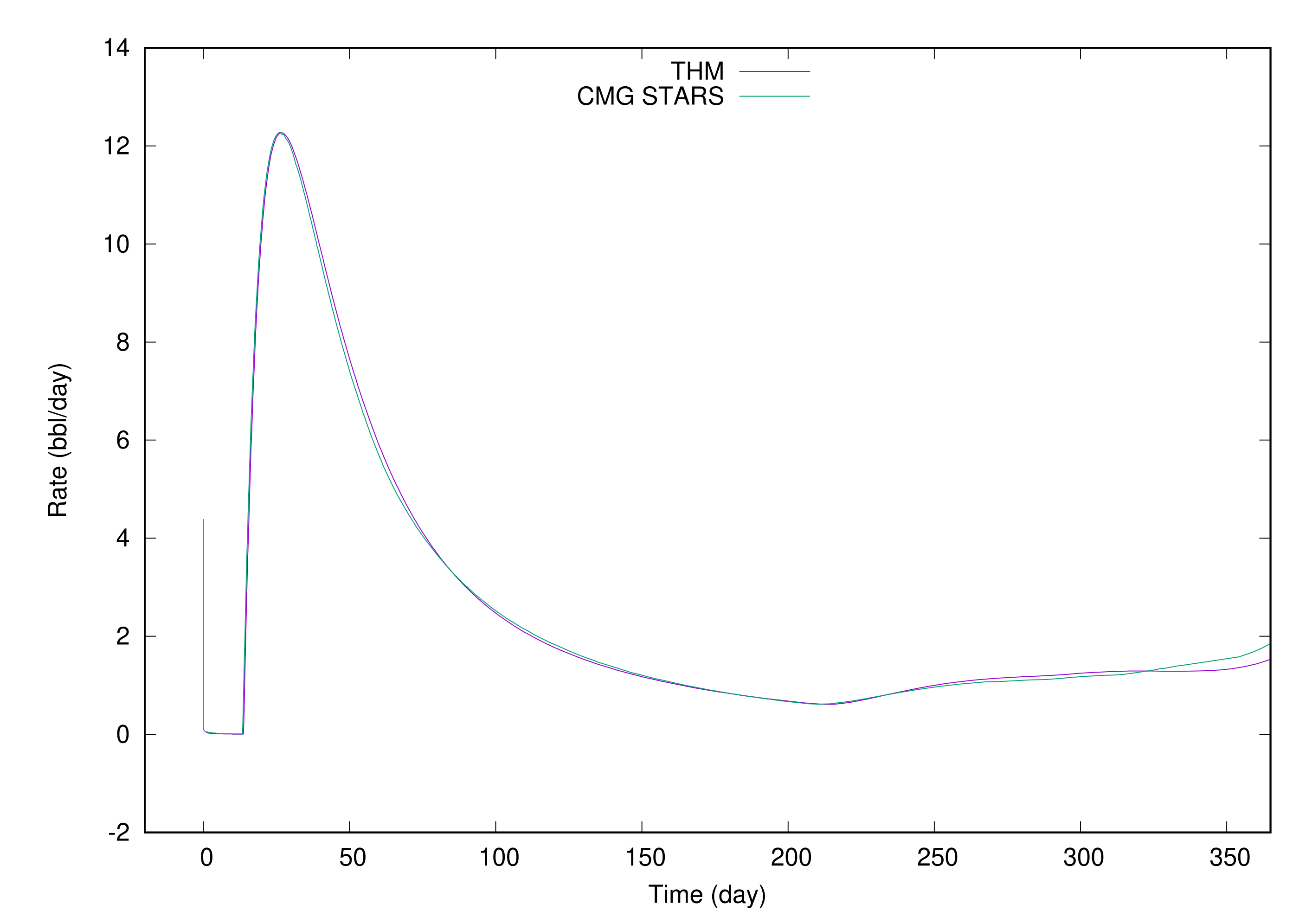

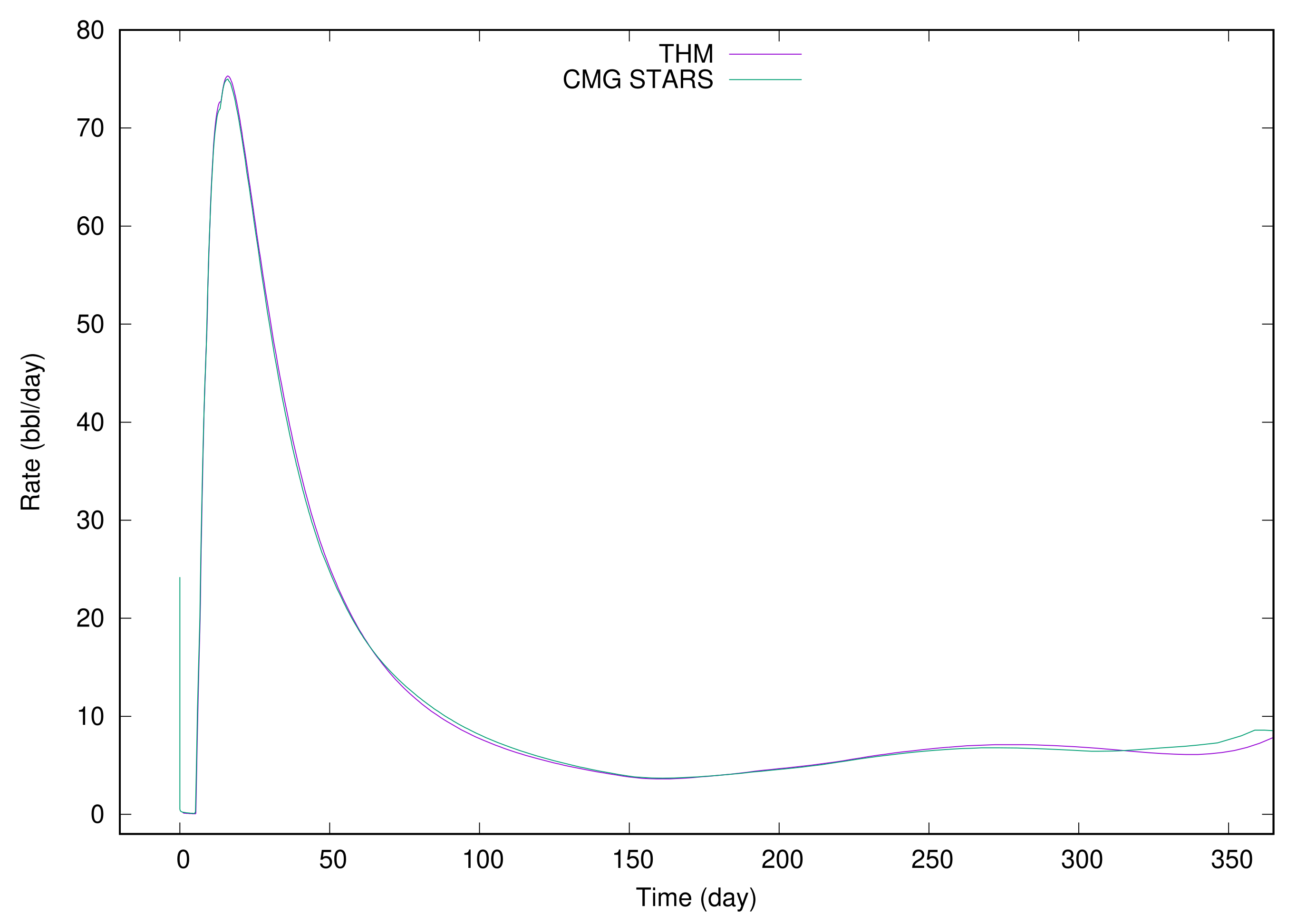

4.1.2. Light Oil and Non-Condensable Gas

Example 2. This model has water, one heavy oil component, one light oil component, and two non-condensable gas (NCG) components. The water and heavy oil component have the same properties as Example 1. Table 6 and Table 7 provide data for light oil component and NCG. Table 8 and Table 9 present well data and initial conditions. It has five vertical wells: one injection well in the center (5, 5), and four production wells in four corners, (1, 1), (1, 9), (9, 1), and (9, 9). The grid dimension is , and the grid size is . The standard Newton method is applied to solve the nonlinear system, in which the termination tolerance is 0.0001 and the maximal iterations are 10. The linear solver is BICGSTAB and the preconditioner is CPR-FPF, in which the tolerance is 0.0001 and the maximal iterations are 100. The GJE decoupling method is applied to the linear systems. All wells apply the implicit modeling. Figure 9, Figure 10, Figure 11, Figure 12, Figure 13, Figure 14 and Figure 15 present bottom hole pressure for injection well and fluid rates, including water rate, oil rate, and gas rate. As each production well has similar results, only results from the first production well are reported. All results are compared with CMG STARS. Example 1 has only one oil component, which stays in oil phase only, while this example has light oil component and non-condensable gas components. Example 1 has no gas production. Furthermore, under reservoir conditions, gas may or may not appear. The physics of this example is more complicated.

Figure 9 is the bottom hole pressure of the injection well, and from the curves, we can see that our results match the CMG STARS simulator.

Figure 10 and

Figure 11 are water production rate of the first production well and the total water production rate of all production wells. Again, a good match can be observed.

Figure 12 and

Figure 13 are the oil production rate of the first production well and the total oil production rate of all production wells.

Figure 14 and

Figure 15 are the gas production rate of the first production well and the total gas production rate of all production wells. These figures show that our results match CMG STARS very well, which confirms our methods and implementation are correct.

4.2. Numerical Performance

Example 3. This example tests a SAGD model with 25 well pairs, which includes one water component, one heavy component, one light component, and two inert gase components, and their properties are the same as Example 2. The grid dimension is and gird size is . The simulation time is 200 days and the maximal time step is 10 days. The Newton tolerance is and its maximal iterations are 15. The linear solver is BICGSTAB, its tolerance is also and its maximal iterations are 60. GJE is the decoupling method. All injectors operate at 3 bbl/day water injection with steam quality of 0.2 and temperature of 450 F . All producers operate at bottom hole pressure of 2000 psi and steam trap temperature difference of 20 F. summaries of our simulator are shown in Table 10. As the model is small, only one computing node is employed. Table 10 provides numerical summaries for time steps (and time cut), total Newton iterations, total linear solver iterations, average linear iterations per Newton iteration, and total simulation time at different CPU cores (MPIs). The RAS method solves a smaller local problem on each CPU core, and the parallel AMG method balances scalability and convergence rate. As expected, when more CPU cores (MPIs) are used, time steps and linear iterations increase. Regardless, the results show that our numerical methods are effective, which can solve a time step in less than 4 Newton iterations and solve a linear system in less than 20 iterations. When more CPU cores are used, the simulation time is cut, which shows that parallel computing is a powerful tool for reservoir simulation.

Example 4. This example tests one water component, one heavy component, and one light component. SAGD process with 756 well pairs is simulated. The grid has a dimension of and size of . All wells are horizontal wells along x direction, if the index of y direction of a grid block equals to 3, 7, 11, 15, 19, 23, 27, 31, 35, 39, 43, 47, 51, 55, 59, 63, 67, 71, 75, 79, 83, 87, 91, 95, 99, 103, 107, 111, 115, 119, 123, 127, 131, 135, 139, 143, 147, 151, 155, 159, 163, 167, 171, 175, 179, 183, 187, 191, 195, 199, 203, 207, 211, or 215, and the index of z direction equals to 4, 10, 16, 22, 28, 34, 40, 46, 52, 58, 64, 70, 76, or 82, then an injection well is defined. For example, (1:60, 3, 10) defines an injection well at (3,10) of -plane, and its perforations are from 1 to 60. This defines 756 injection wells. A production well is defined two blocks under an injection well. Therefore, 756 well pairs and total 1512 wells are defined in the model. All injection wells operate at 10 bbl/day water injection, with a steam quality of 0.2 and temperature of 450 F. All production wells operate at fixed bottom hole pressure of 100 psi. Each perforation of an injection well is heated at rate of btu/day. The simulation time is 100 days. Eight CPU cores (8 MPIs) are employed. The Newton tolerance is 0.0001 and its maximal iterations are 15. The linear solver is BICGSTAB, its tolerance is also 0.0001, and its maximal iterations are 100. Different preconditioners and decoupling methods are employed. Table 11 shows numerical summaries. Table 11 presents preconditioners, decoupling methods, total time steps and time cuts, total Newton iterations, and total linear iteration. Here,

NA means the combination fails to simulate the model. The CPR-FP and the CPR-PF are two-stage CPR preconditioner and the CPR-FPF is a three-stage CPR preconditioner. From the table, we can see the simulations fail if no decoupling method is used. Furthermore, FRS or DRS does not work for thermal reservoir simulations. The ABF decoupling method works very well for black oil simulation, where temperature does not change. Here, the ABF method fails two cases out of three cases while the GJE method works for all cases. Even when the ABF method works with the CPR-PF preconditioner, the GJE decoupling method has much less time steps, Newton iterations, and linear iterations. It is clear that the GJE decoupling works much better than the ABF method. For CPR-FP and CPR-FPF preconditioners, two stage decoupling methods improve the efficiency of Newton method and linear solver. The results clearly show that a proper decoupling method is critical to the success of linear solver and CPR-type preconditioners. The GJE decoupling and the FRS+GJE decoupling work better than the ABF decoupling.

Example 5. This example tests one water component, one heavy component and one light component. SAGD process with 7406 well pairs (14,812 wells, 7406 injectors, and 7406 producers) is simulated. The grid has a dimension of , 11 million grid blocks, and size of . All wells are horizontal wells along x direction. All injection wells operate at 5 bbl/day water injection, with a steam quality of 0.2 and temperature of 450 F. All production wells operate at fixed bottom hole pressure of 300 psi. All wells are modeled by implicit method. The model file has around 185,000 lines. The simulation time is 100 time steps due to system running time limit. The initial time step is days. 10 nodes and 200 CPU cores (200 MPIs) are employed on Niagara, Compute Canada. The Newton tolerance is and its maximal iterations are 15. The linear solver is BICGSTAB, its tolerance is also 0.0001 and its maximal iterations are 100. The maximal changes in a time step for pressure, saturation, mole fraction and temperature are 500 psi, 0.1, 0.1, and 15 F. Numerical summaries are shown by Table 12. Table 12 shows that all tests pass. The Newton method converges in around three iterations, while linear solver converges in four to five iterations in average. For a specific preconditioner, the FRS + GJE decoupling method is always better than the GJE method. When two-stage decoupling method FRS + GJE is applied, less Newton iterations and linear iterations are required.

4.3. Scalability

The parallel computers from Compute Canada are employed. The Niagara supercomputer consists of 1500 nodes, and each node has 40 Intel Skylake cores at 2.4 GHz, for a total of 60,000 cores. Each node has 202 GB (188 GiB) RAM, and EDR Infiniband network is used to communicate. The Cedar supercomputer has a hybrid architecture, which uses Intel E5-2683 v4 “Broadwell” at 2.1 Ghz, E5-2650 v4 at 2.2 GHz, Intel E7-4809 v4 “Broadwell” at 2.1 Ghz, and Intel Platinum 8160F “Skylake” at 2.1 Ghz. It has a total of 58,416 CPU cores for computation, and 584 GPU devices.

A Cray XC30 supercomputer is also employed. Each computation node contains two 2.7 GHz, 12-core Intel E5-2697 v2 CPUs, and 64 GB of memory is shared between the two processors. The memory bandwidth is ~103 Gb/s. The memory access is nonuniform access (NUMA): each processor owns a single NUMA region of 32 GB. Accessing its own region has a lower latency than accessing the other NUMA region. Also, these 24 cores compete the memory channels. The Aries interconnect connects all computation nodes in a Dragonfly topology.

Example 6. This example studies a large thermal model with a grid dimension of , 230 million grid blocks. Twelve nodes and up to 192 CPU cores are employed using the Niagara supercomputer. The Newton method is applied with a tolerance of and maximal iterations of 10. The linear solver is BICGSTAB with a tolerance of and maximal iterations of 100. The preconditioner is the CPR-FPF method. Table 13 presents running time and memory used. Figure 16 shows the scalability. Table 13 shows total time, linear solver time, speedup and total memory, from which we can see that huge amount of memory is required. The simulation is not possible for desktop computers. The running time and

Figure 16 show the simulator, linear solver, and preconditioner have good scalability.

Example 7. This example studies a large thermal model with a grid dimension of , 3.46 billion grid blocks and the resulted linear systems have 17.3 billion unknowns. 360 nodes are employed using the Cedar supercomputer. The Newton method is applied with a tolerance of and maximal iterations of 10. The linear solver is BICGSTAB with a tolerance of and maximal iterations of 100. The preconditioner is the CPR-FPF method with GJE decoupling. Table 14 presents running time and memory used. Table 14 shows that the simulator, linear solver, and preconditioner have excellent scalability. This example proves that our thermal simulator can handle extreme large-scale models. If more computing resources are available, a larger model can be simulated. Our linear solver and preconditioner can solve linear systems with dozens of billions of unknowns. In an ideal case, when the size of MPIs doubles, the simulation time should be cut by half and the ideal speedup should be 2. This example shows a speedup of 1.65 and an efficiency of 82.5%, and we believe the reason is that when more CPU cores are used in one node, these processors compete memory and computing, which reduces the effective memory communication bandwidth. Therefore, the speedup is reduced.

Example 8. This example studies a large thermal model with a grid dimension of , 4.6 billion grid blocks and the resulted linear systems have 23 billion unknowns; 1024 nodes are employed. The Newton method is applied with a tolerance of 0.0001 and maximal iterations of 10. The linear solver is BICGSTAB with a tolerance of and maximal iterations of 100. The preconditioner is the CPR-FPF method with GJE decoupling. Table 15 presents running time and memory used and Figure 17 shows the scalability. Table 15 shows overall time, linear solver time, linear solver speedup, overall speedup, and total memory. When 4096 and 6144 cores are used, the scalabilities are 1.89 and 2.7, respectively, while the best scalabilities should be 2 and 3. In this case, the parallel efficiencies are 94% and 90%, which are good for parallel numerical simulations. However, this example shows linear solver has better speedup and parallel efficiency. If special optimization techniques are applied, such as multi-level load balancing that considers the architecture of the system and multi-layer communications, the communication volume and latency will be reduced and scalability can be improved. When 12,288 cores are employed, each node runs 12 cores and 12 MPIs, and each processor uses its 6 cores. In this case, memory access may be an important issue, which may reduce the effective memory bandwidth of each MPI and increase computation time.

Example 9. This example studies a simplified problem with a grid dimension of 216 billion grid blocks. The linear solver is BICGSTAB and the preconditioner is the RAS method. Table 16 presents running time and memory used for 4096 computation nodes. Table 17 presents running time and memory used for 4200 computation nodes. Each node uses 2, 4, 6, 12, and 24 cores. Figure 18 is the speedup curve. Table 16 and

Table 17 show numerical summaries, including CPU cores, total simulation time, solver time, speedup, and memory usage. The first table uses 4024 computation nodes, and the second table uses 4200 computation nodes. The tables and curves show our parallel simulator has good scalability and efficiency. To the best of our knowledge, this is the largest thermal model, and it uses the most CPU cores for thermal reservoir simulation.