In-Orchard Sizing of Mango Fruit: 1. Comparison of Machine Vision Based Methods for On-The-Go Estimation

Abstract

1. Introduction

1.1. Context

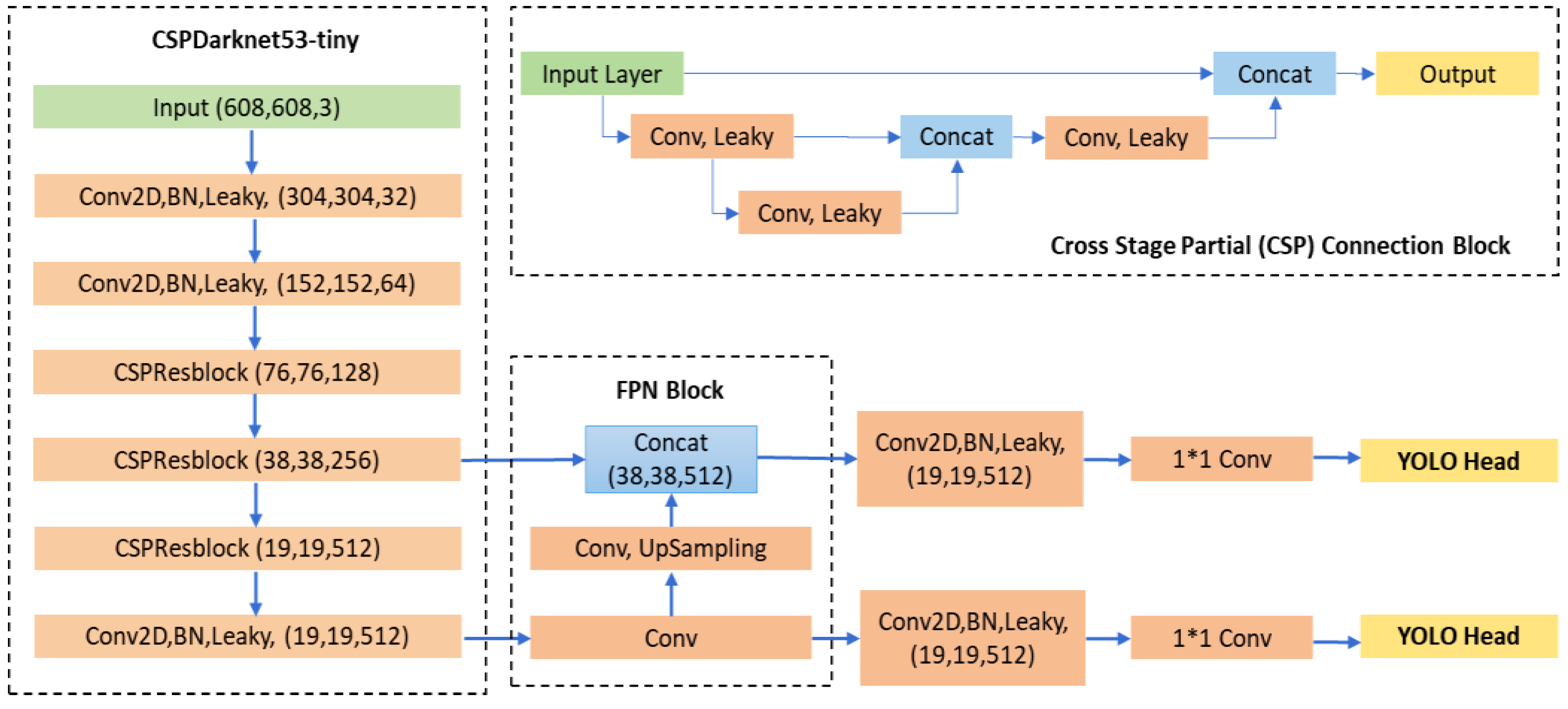

1.2. Object Detectors

1.3. Segmentation

1.4. Objectives

2. Materials and Methods

2.1. Image Sets

2.2. Training of YOLO and Mask R-CNN Models

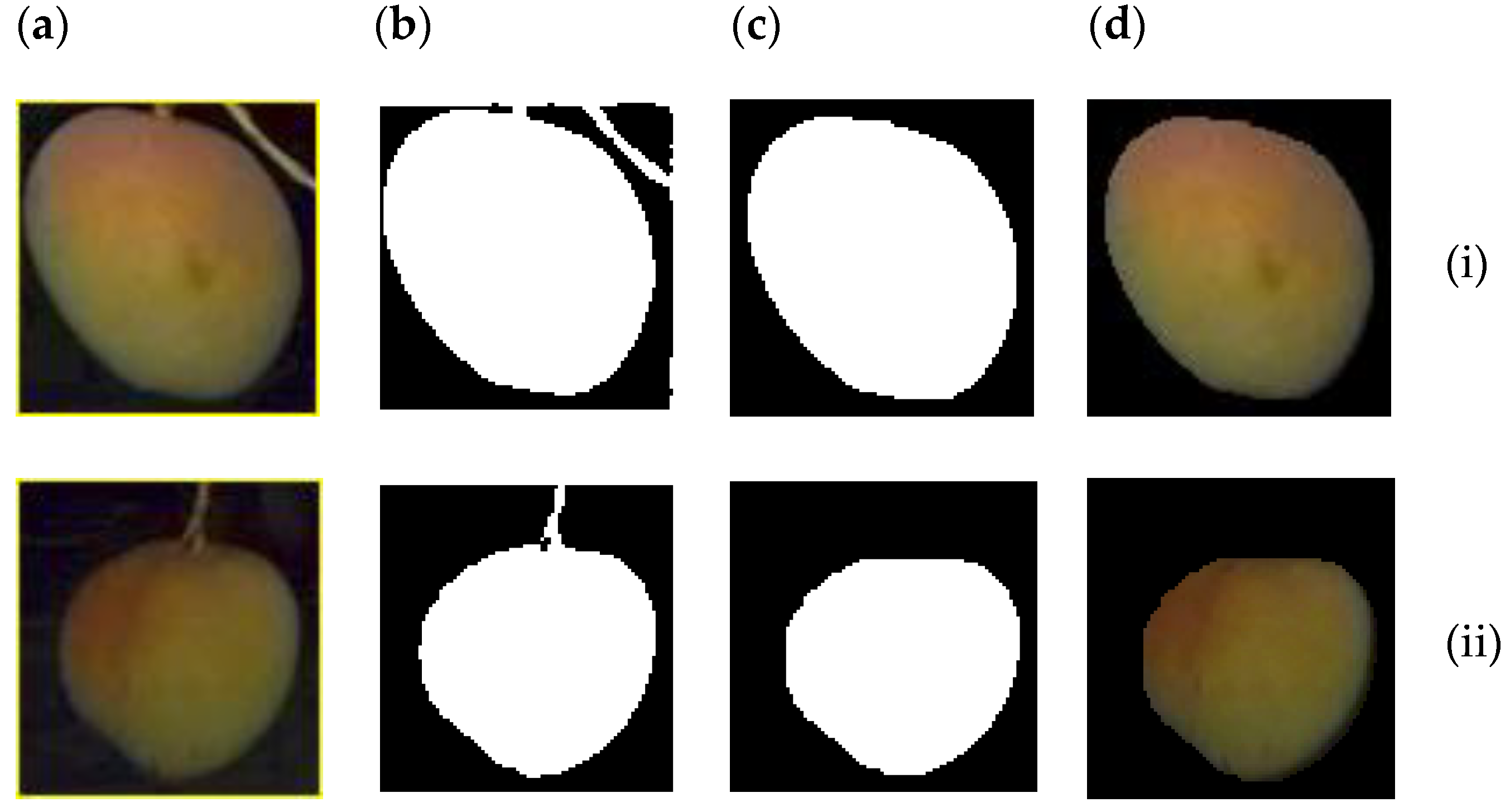

2.3. Fruit Sizing Methods

2.4. Estimation of Fruit Mass

2.5. Exclusion Criteria

2.6. Fruit Sizing Exercises

2.7. Statistics

3. Results and Discussion

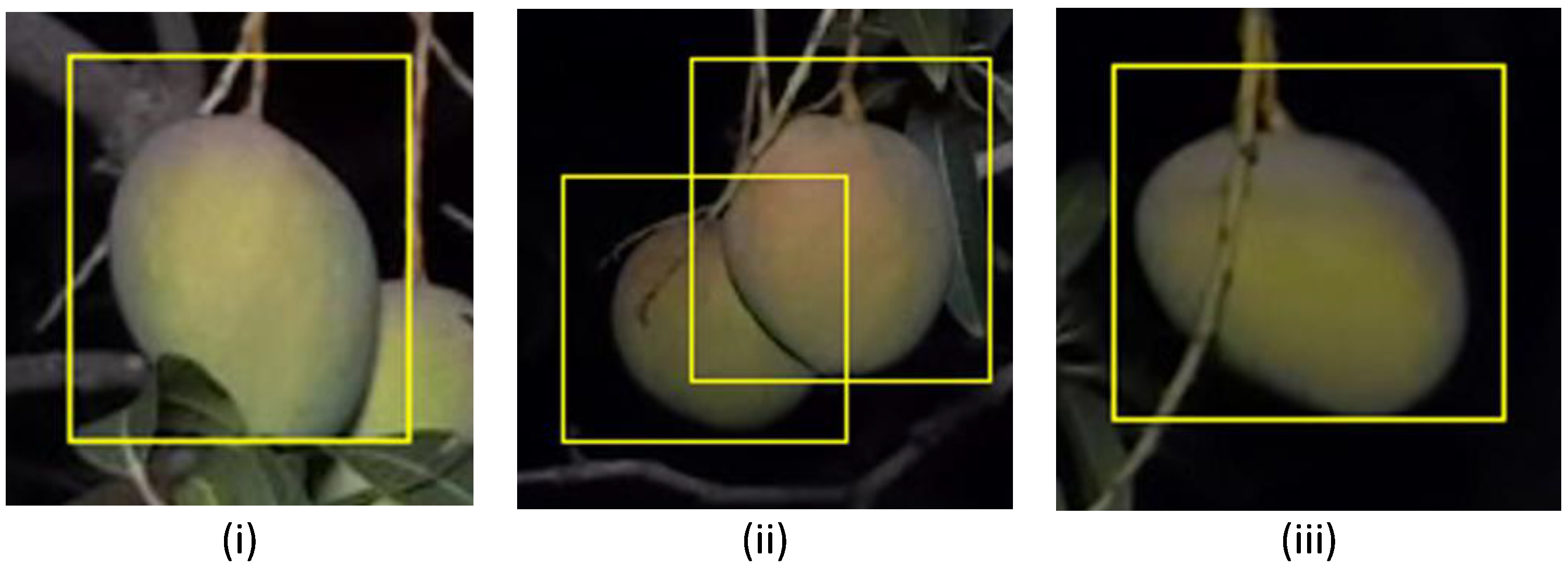

3.1. Model Performance for Fruit Detection

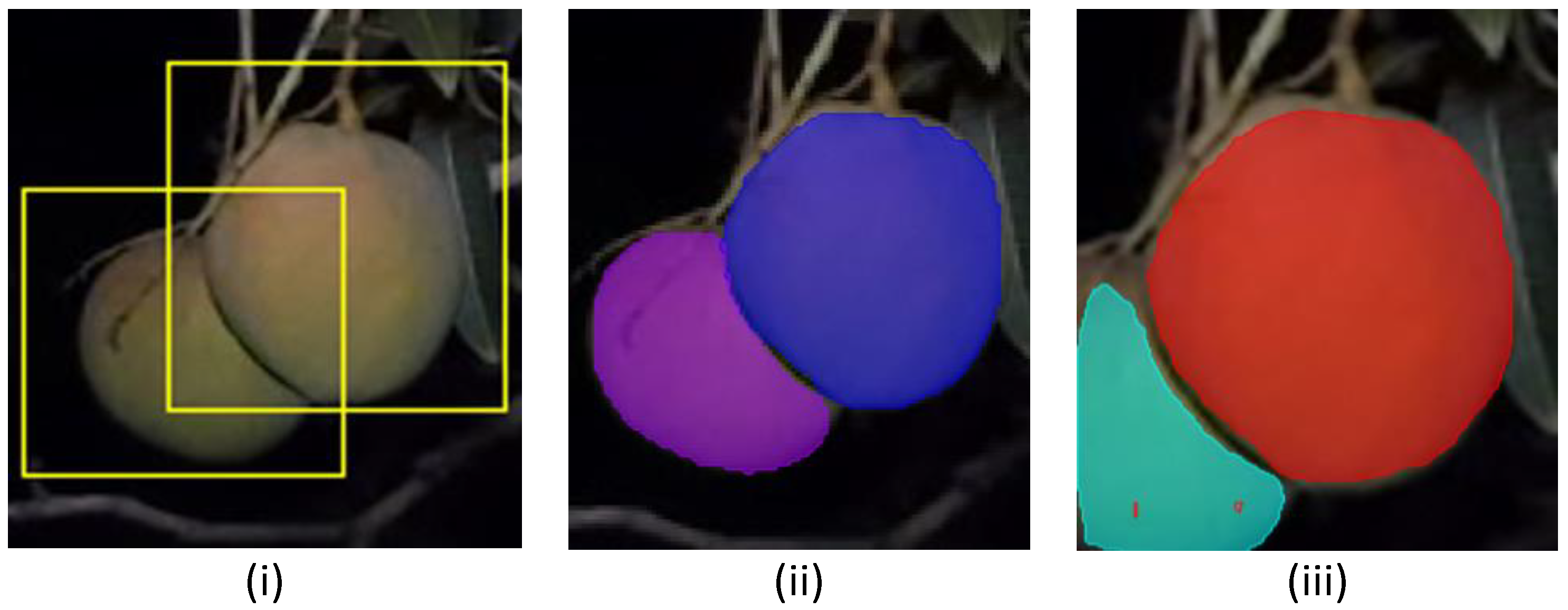

3.2. Exclusion of Occluded Fruit

3.3. Fruit Sizing Method Evaluation

3.4. Fruit Weight Estimation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Anderson, N.T.; Walsh, K.B.; Koirala, A.; Wang, Z.; Amaral, M.H.; Dickinson, G.R.; Sinha, P.; Robson, A.J. Estimation of Fruit Load in Australian Mango Orchards Using Machine Vision. Agronomy 2021, 11, 1711.

2. Moreda, G.; Ortiz-Cañavate, J.; García-Ramos, F.J.; Ruiz-Altisent, M. Non-destructive technologies for fruit and vegetable size determination – a review. J. Food Eng. 2009, 92, 119–136.

3. Utai, K.; Nagle, M.; Hämmerle, S.; Spreer, W.; Mahayothee, B.; Müller, J. Mass estimation of mango fruits (Mangifera indica L., cv. ‘Nam Dokmai’) by linking image processing and artificial neural network. Eng. Agric. Environ. Food 2019, 12, 103–110.

4. Apolo-Apolo, O.E.; Martínez-Guanter, J.; Egea, G.; Raja, P.; Pérez-Ruiz, M. Deep learning techniques for estimation of the yield and size of citrus fruits using a UAV. Eur. J. Agron. 2020, 115, 126030.

5. Kurtser, P.; Ringdahl, O.; Rotstein, N.; Berenstein, R.; Edan, Y. In-Field Grape Cluster Size Assessment for Vine Yield Estimation Using a Mobile Robot and a Consumer Level RGB-D Camera. IEEE Robot. Autom. Lett. 2020, 5, 2031–2038.

6. Gené-Mola, J.; Sanz-Cortiella, R.; Rosell-Polo, J.R.; Escolà, A.; Gregorio, E. In-field apple size estimation using photogrammetry-derived 3D point clouds: Comparison of 4 different methods considering fruit occlusions. Comput. Electron. Agric. 2021, 188, 106343.

7. Lin, G.; Tang, Y.; Zou, X.; Li, J.; Xiong, J. In-field citrus detection and localisation based on RGB-D image analysis. Biosyst. Eng. 2019, 186, 34–44.

8. Zheng, B.; Sun, G.; Meng, Z.; Nan, R. Vegetable Size Measurement Based on Stereo Camera and Keypoints Detection. Sensors 2022, 22, 1617.

9. Wang, Z.; Walsh, K.B.; Verma, B. On-tree mango fruit size estimation using RGB-D images. Sensors 2017, 17, 2738.

10. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

11. Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning – Method overview and review of use for fruit detection and yield estimation. Comput. Electron. Agric. 2019, 162, 219–234.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

13. Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning for real-time fruit detection and orchard fruit load estimation: Benchmarking of ‘MangoYOLO’. Precis. Agric. 2019, 20, 1107–1135.

14. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020.

15. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022.

16. Shi, R.; Li, T.; Yamaguchi, Y. An attribution-based pruning method for real-time mango detection with YOLO network. Comput. Electron. Agric. 2020, 169, 105214.

17. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

18. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

19. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

20. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

21. Sabzi, S.; Abbaspour-Gilandeh, Y.; Hernandez-Hernandez, J.L.; Azadshahraki, F.; Karimzadeh, R. The use of the combination of texture, color and intensity transformation features for segmentation in the outdoors with emphasis on video processing. Agriculture 2019, 9, 104.

22. Zu, L.; Zhao, Y.; Liu, J.; Su, F.; Zhang, Y.; Liu, P. Detection and Segmentation of Mature Green Tomatoes Based on Mask R-CNN with Automatic Image Acquisition Approach. Sensors 2021, 21, 7842.

23. Lee, J.; Nazki, H.; Baek, J.; Hong, Y.; Lee, M. Artificial intelligence approach for tomato detection and mass estimation in precision agriculture. Sustainability 2020, 12, 9138.

24. Hsieh, K.-W.; Huang, B.-Y.; Hsiao, K.-Z.; Tuan, Y.-H.; Shih, F.-P.; Hsieh, L.-C.; Chen, S.; Yang, I.C. Fruit maturity and location identification of beef tomato using R-CNN and binocular imaging technology. J. Food Meas. Charact. 2021, 15, 5170–5180.

25. Neupane, C.; Koirala, A.; Wang, Z.; Walsh, K.B. Evaluation of depth cameras for use in fruit localization and sizing: Finding a successor to Kinect v2. Agronomy 2021, 11, 1780.

26. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

27. Walsh, K.B.; McGlone, V.A.; Wohlers, M. Sampling and statistics in assessment of fresh produce. In Developing Smart Agri-Food Supply Chains: Using Technology to Improve Safety and Quality; Burleigh Dodds Science Publishing: Cambridge, UK, 2021.

28. Spreer, W.; Müller, J. Estimating the mass of mango fruit (Mangifera indica, cv. Chok Anan) from its geometric dimensions by optical measurement. Comput. Electron. Agric. 2011, 75, 125–131.

29. Anderson, N.T.; Subedi, P.P.; Walsh, K.B. Manipulation of mango fruit dry matter content to improve eating quality. Sci. Hortic. 2017, 226, 316–321.

30. Gonzalez, J.P.B.; Ortiz, F.A.P.; Rodriguez, C.P.P. Mass and volume estimation of passion fruit using digital images. IEEE Lat. Am. Trans. 2017, 15, 275–282.

31. Huynh, T.; Tran, L.; Dao, S. Real-time size and mass estimation of slender axi-symmetric fruit/vegetable using a single top view image. Sensors 2020, 20, 5406.

| Dataset | Description |
|---|---|
| Dataset-A | Training set of 1300 tiles and test set of 130 tiles of 512 × 612 pixel RGB images of cultivar Calypso fruit at Childers, QLD, Australia. The images were captured using a 5 MP (megapixel) ace 1300ac camera (Basler, Ahrensburg, Germany) operated at night under 400 W LED flood lighting, with a GNSS system for geolocation, on an imaging rig mounted on a farm vehicle, as described in [1]. Dataset obtained from [13]. |
| Dataset-B | Training set of 454 tiles and test set of 92 images of 540 × 640 pixels. Images were of cultivars Honey Gold and Keitt at Bungundara, QLD, Australia, captured at night with an Azure Kinect RGB-D camera mounted on the imaging rig described in [1]. |
| Dataset-C | Training set of 1080 and test set of 120 bounding box snips obtained using YOLOv4-tiny detection on Dataset-B images. Image size is variable, with width and height < 256 pixels. |

| Criterion | Criteria Set-B | Criteria Set-A |
|---|---|---|
| Ellipse area (pixels) | 500 to 12,000 | 500 to 12,000 |
| Ratio of area inside contour to ellipse area | >0.97 | >0.90 |
| Eccentricity of fitted ellipse (lower values are closer to a circle) | <0.75 | <0.8 |
| Absolute difference between refined bounding box height and ellipse major axis length (pixels) | <5 | <8 |
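The exclusion criteria above can be read as a per-fruit filter applied to a fitted ellipse. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the ellipse is described by its full major and minor axis lengths in pixels (the form returned, for example, by OpenCV's `cv2.fitEllipse`), that the area ratio is contour area divided by ellipse area, that the area range is inclusive, and that `box_height` is the refined bounding box height in pixels. The names `Thresholds`, `accept_fruit`, `CRITERIA_SET_A` and `CRITERIA_SET_B` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical container for one set of exclusion thresholds."""
    min_area: float          # minimum ellipse area (pixels)
    max_area: float          # maximum ellipse area (pixels)
    min_area_ratio: float    # contour area / ellipse area must exceed this
    max_eccentricity: float  # ellipse eccentricity must be below this
    max_height_diff: float   # |box height - major axis| must be below this (pixels)


# Threshold values transcribed from the table above.
CRITERIA_SET_A = Thresholds(500, 12_000, 0.90, 0.80, 8)
CRITERIA_SET_B = Thresholds(500, 12_000, 0.97, 0.75, 5)


def ellipse_area(major: float, minor: float) -> float:
    """Ellipse area from full axis lengths: pi * (major/2) * (minor/2)."""
    return math.pi * (major / 2) * (minor / 2)


def eccentricity(major: float, minor: float) -> float:
    """sqrt(1 - (b/a)^2): 0 for a circle, approaching 1 as the ellipse elongates."""
    a, b = max(major, minor), min(major, minor)
    return math.sqrt(1.0 - (b / a) ** 2)


def accept_fruit(contour_area: float, major: float, minor: float,
                 box_height: float, t: Thresholds) -> bool:
    """Return True if a segmented fruit passes every exclusion criterion."""
    area = ellipse_area(major, minor)
    if not (t.min_area <= area <= t.max_area):
        return False  # too small or too large for a plausible whole fruit
    if contour_area / area <= t.min_area_ratio:
        return False  # contour fills too little of the ellipse (e.g. occlusion)
    if eccentricity(major, minor) >= t.max_eccentricity:
        return False  # ellipse too elongated
    if abs(box_height - max(major, minor)) >= t.max_height_diff:
        return False  # box height disagrees with the fitted major axis
    return True


# A fruit whose contour fills ~93% of its ellipse passes Set-A but not Set-B.
print(accept_fruit(6200, 100, 85, 102, CRITERIA_SET_A))
print(accept_fruit(6200, 100, 85, 102, CRITERIA_SET_B))
```

Because Set-B tightens the area-ratio, eccentricity and height-difference limits while keeping the same area range, any fruit accepted under Set-B in this sketch is also accepted under Set-A, which is consistent with the lower acceptance rates reported for Criteria Set-B.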

| Cultivar | Length MAX (mm) | Length MIN (mm) | Length AVG (mm) | Length SD (mm) | Width MAX (mm) | Width MIN (mm) | Width AVG (mm) | Width SD (mm) | Weight MAX (g) | Weight MIN (g) | Weight AVG (g) | Weight SD (g) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HG (n = 60) | 128.2 | 82.7 | 107.5 | 9.4 | 110.3 | 65.1 | 91.2 | 10.1 | - | - | - | - |
| Keitt (n = 44) | 160.9 | 75.5 | 110.1 | 17.0 | 122.8 | 66 | 88.6 | 12.6 | 1430 | 180 | 499 | 247 |

| Model | mAP at IoU = 0.5 | F1 (confidence threshold 0.80) | FP (confidence threshold 0.80) | Avg. IoU (%) (confidence threshold 0.80) | Speed (ms) | BFLOP | Model Weights (MB) |
|---|---|---|---|---|---|---|---|
| MangoYOLO | 98.55 | 0.95 | 22 | 79.1 | 5.71 | 15.6 | 52.5 |
| YOLOv3 | 98.96 | 0.94 | 26 | 82.6 | 28.9 | 139.5 | 234.9 |
| YOLOv3-tiny | 98.06 | 0.93 | 23 | 80.7 | 5.2 | 11.6 | 33.1 |
| YOLOv4 | 99.2 | 0.97 | 26 | 82.9 | 32.2 | 127.3 | 244.2 |
| YOLOv4-tiny | 98.63 | 0.94 | 22 | 82.4 | 5.5 | 14.5 | 22.4 |
| YOLOv7 | 99.11 | 0.89 | 1 | 86.8 | 27.6 | 103.2 | 139.4 |
| YOLOv7-tiny | 99.02 | 0.91 | 3 | 86 | 7.7 | 11.8 | 23 |
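The F1, FP and Avg. IoU columns are reported at a confidence threshold of 0.80. The exact matching procedure is not reproduced here, so the code below is only a sketch of one common approach: detections below the confidence threshold are discarded, each remaining prediction (highest confidence first) is greedily matched to an unmatched ground-truth box with IoU ≥ 0.5, and F1 is computed from the resulting true positive, false positive and false negative counts. The 0.5 matching threshold mirrors the mAP column and is an assumption for the F1 calculation; `iou`, `count_matches` and `f1_score` are hypothetical names.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Detection = Tuple[Box, float]             # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: Sequence[Detection], gts: Sequence[Box],
                  conf_thr: float = 0.80, iou_thr: float = 0.5) -> Tuple[int, int, int]:
    """Return (TP, FP, FN) using greedy, confidence-ordered matching (assumed procedure)."""
    kept = sorted((p for p in preds if p[1] >= conf_thr), key=lambda p: p[1], reverse=True)
    matched = set()
    tp = fp = 0
    for box, _score in kept:
        best_idx, best_iou = None, iou_thr
        for i, gt in enumerate(gts):
            if i in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_idx, best_iou = i, overlap
        if best_idx is None:
            fp += 1  # no unmatched ground-truth box overlaps enough
        else:
            matched.add(best_idx)
            tp += 1
    fn = len(gts) - len(matched)  # ground-truth fruit never matched
    return tp, fp, fn


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Raising the confidence threshold removes low-scoring detections before matching, which tends to lower FP at the cost of more FN; the sketch makes that trade-off explicit through the `conf_thr` parameter.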

| Model | mAP (Tiled Images) | mAP (Bounding Box Snips) |
|---|---|---|
| Mask R-CNN (ResNet101) | 95.6 | 85.28 |
| Mask R-CNN (ResNet50) | 88.35 | 78.75 |

| Exclusion Criteria | Method | HG Acceptance (%) | Keitt Acceptance (%) | Total Acceptance (%) |
|---|---|---|---|---|
| Criteria Set-A | Method 1 | 80 | 68 | 75 |
|  | Method 2 | 80 | 77 | 79 |
|  | Method 3 | 67 | 68 | 67 |
|  | Average |  |  | 74 |
| Criteria Set-B | Method 1 | 45 | 52 | 48 |
|  | Method 2 | 71 | 68 | 70 |
|  | Method 3 | 58 | 50 | 55 |
|  | Average |  |  | 58 |
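The Total column appears to be a pooled acceptance rate. Assuming the per-cultivar counts are the 60 Honey Gold and 44 Keitt fruit listed in the fruit dimension table, weighting each cultivar's rate by its count reproduces every Total value after rounding, and the Average row matches the mean of the three method totals. The arithmetic check below is a sketch under that assumption; the names `COUNTS`, `ACCEPTANCE` and `weighted_total` are illustrative.

```python
from statistics import mean

# Per-cultivar fruit counts, assumed to be the n values from the fruit dimension table.
COUNTS = {"HG": 60, "Keitt": 44}

# Acceptance (%) per (criteria set, method): (HG, Keitt), transcribed from the table above.
ACCEPTANCE = {
    ("A", 1): (80, 68), ("A", 2): (80, 77), ("A", 3): (67, 68),
    ("B", 1): (45, 52), ("B", 2): (71, 68), ("B", 3): (58, 50),
}


def weighted_total(hg_pct: float, keitt_pct: float) -> float:
    """Pooled acceptance: each cultivar's rate weighted by its fruit count."""
    n_hg, n_keitt = COUNTS["HG"], COUNTS["Keitt"]
    return (hg_pct * n_hg + keitt_pct * n_keitt) / (n_hg + n_keitt)


totals = {key: round(weighted_total(*pct)) for key, pct in ACCEPTANCE.items()}
averages = {s: round(mean(totals[(s, m)] for m in (1, 2, 3))) for s in ("A", "B")}

for key in sorted(totals):
    print(key, totals[key])
print(averages)
```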

| Criteria | Dimension | Retained HG (mm) | Excluded HG (mm) | Retained Keitt (mm) | Excluded Keitt (mm) |
|---|---|---|---|---|---|
| Criteria Set-A | Length | 107.3 ± 10.0 | 108.0 ± 6.0 | 113.3 ± 17.3 | 99.1 ± 9.9 |
|  | Width | 90.9 ± 10.6 | 91.6 ± 8.2 | 91.17 ± 12.4 | 79.8 ± 8.8 |
| Criteria Set-B | Length | 107.4 ± 9.7 | 107.7 ± 8.4 | 110.6 ± 15.8 | 108.9 ± 18.5 |
|  | Width | 92.2 ± 9.9 | 88.8 ± 10.3 | 89.13 ± 11.3 | 87.4 ± 14.5 |

| Criteria | Metric | Honey Gold M1 | Honey Gold M2 | Honey Gold M3 | Keitt M1 | Keitt M2 | Keitt M3 |
|---|---|---|---|---|---|---|---|
| Criteria Set-A | RMSE | 6 | 5.2 | 6 | 7.6 | 7.8 | 6.5 |
|  | R² | 0.7 | 0.8 | 0.7 | 0.9 | 0.9 | 0.9 |
|  | Bias | 0.7 | 2 | 1.5 | −1.3 | −3.8 | −4.2 |
| Criteria Set-B | RMSE | 5.9 | 4.7 | 5.4 | 6.8 | 5.1 | 5.6 |
|  | R² | 0.8 | 0.9 | 0.8 | 0.9 | 0.9 | 0.9 |
|  | Bias | 3 | 3.1 | 1 | −2.1 | −2.4 | −3.8 |
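RMSE, R² and bias compare machine vision estimates against reference measurements. The exact formulas are not reproduced here, so the sketch below uses common textbook definitions as assumptions: bias is the mean of (estimate − reference), RMSE is not bias-corrected, and R² is the coefficient of determination 1 − SS_res/SS_tot with the reference values as the target. Some studies instead report R² as the squared Pearson correlation, which can differ when estimates are biased. The example measurements are hypothetical.

```python
import math
from statistics import mean
from typing import Sequence


def bias(est: Sequence[float], ref: Sequence[float]) -> float:
    """Mean signed error, estimate minus reference (assumed sign convention)."""
    return mean(e - r for e, r in zip(est, ref))


def rmse(est: Sequence[float], ref: Sequence[float]) -> float:
    """Root mean squared error, not corrected for bias."""
    return math.sqrt(mean((e - r) ** 2 for e, r in zip(est, ref)))


def r_squared(est: Sequence[float], ref: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot, reference values as target."""
    ref_mean = mean(ref)
    ss_res = sum((r - e) ** 2 for e, r in zip(est, ref))
    ss_tot = sum((r - ref_mean) ** 2 for r in ref)
    return 1.0 - ss_res / ss_tot


# Hypothetical fruit length measurements (mm), for illustration only.
reference = [100.0, 110.0, 120.0]
estimated = [102.0, 108.0, 124.0]
print(rmse(estimated, reference), r_squared(estimated, reference), bias(estimated, reference))
```

Under this sign convention, the negative bias values for Keitt would indicate underestimation relative to the reference measurements, and the positive values for Honey Gold would indicate overestimation.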

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Neupane, C.; Koirala, A.; Walsh, K.B. In-Orchard Sizing of Mango Fruit: 1. Comparison of Machine Vision Based Methods for On-The-Go Estimation. Horticulturae 2022, 8, 1223. https://doi.org/10.3390/horticulturae8121223

Neupane C, Koirala A, Walsh KB. In-Orchard Sizing of Mango Fruit: 1. Comparison of Machine Vision Based Methods for On-The-Go Estimation. Horticulturae. 2022; 8(12):1223. https://doi.org/10.3390/horticulturae8121223

Chicago/Turabian Style: Neupane, Chiranjivi, Anand Koirala, and Kerry B. Walsh. 2022. "In-Orchard Sizing of Mango Fruit: 1. Comparison of Machine Vision Based Methods for On-The-Go Estimation" Horticulturae 8, no. 12: 1223. https://doi.org/10.3390/horticulturae8121223

APA Style: Neupane, C., Koirala, A., & Walsh, K. B. (2022). In-Orchard Sizing of Mango Fruit: 1. Comparison of Machine Vision Based Methods for On-The-Go Estimation. Horticulturae, 8(12), 1223. https://doi.org/10.3390/horticulturae8121223