1. Introduction

In this section, we first discuss the basic concepts of social robots and proactivity and their relevance in our daily lives. We then converge these concepts to form the primary objective of creating additional value by adding proactivity in robot operating system (ROS) powered indoor social robots by using a belief–desire–intention (BDI) model.

1.1. Social Robots

The word robot evokes human-like machines created primarily to serve their creators. The ways in which robots serve humans are broad: robots can be used for anything from daily household chores to space exploration missions, depending on how they are built and how much intelligence they are given. Over time, the definition of a robot has changed from “a term for mechanical men that were built to work for assembling products in a factory” to “an entity which humans can have social interactions with”. The inception of social robots was biologically inspired, and social robots were initially used to study swarms or the behavior of insects [1]. Later, social robots were used for interaction with humans.

According to social scientist Kate Darling, “A social robot is a physically embodied, autonomous agent that communicates and interacts with humans on an emotional level” [2]. For this article, it is important to distinguish social robots from inanimate computers and from industrial or service robots, which are not designed to elicit human feelings or mimic social cues. Social robots also follow social behavior patterns, have various “states of mind”, and adapt to what they learn through their interactions. Because form and shape strongly influence emotional connection, social robots are usually built in humanoid or animaloid form. The social interaction is expected to resemble verbal communication supported by visual and tactile perception. Based on their interactions, social robots can be classified into the following categories [3].

Socially evocative. These robots rely on human action to generate a particular set of feelings [4].

Socially situated. These robots react to the perceptions derived from the social environment in which they are situated. The robots can distinguish the social agents and objects in the environment [1].

Sociable. These robots have models for social cognition and proactively engage with the human for some social aims [4].

Socially intelligent. These robots try to replicate the social intelligence of humans based on the model of cognition and social competence [5].

Rising demand for personal care among ageing people, together with technological advances, has led to the extensive use of social robots in elderly care. Assistive technologies in the form of social robots help older people live independently in their homes. Social robots can provide a wide range of interactive services such as telecare and robot-assisted therapy. Such robots can also be used to care for older patients with mental and cognitive impairments [6]. Some social robots can monitor movement, blood pressure, breathing or heart problems and warn a designated person in case of danger or risk [7]. Social robots have been used for a wide range of interactions with children as well. A social companion robot called ‘Arash’ was built to provide therapeutic intervention in pediatric hospitals [8]. The robot was also used to assist children with cancer [8]. Social robots have recently been reported in the education and care of children with developmental disabilities [9]. Proactive robots have been used as tutors and peer learners to deliver education, as presented in [10]. Social robots are thus currently used in hospitals, homes, shopping malls, and convention centers to welcome, converse with, or take care of people [11]. Social robots have taken on the challenges of interacting with, assisting, serving and exploring with humans in order to help them in different ways. For these applications, proactive behaviors are necessary for social robots.

1.2. Proactivity

Social robots are autonomous robots that communicate with humans following a set of social rules defined for them [12]. Three control architectures are used in these robots to decide the actions required to respond to the environment: deliberative, reactive and hybrid. In deliberative control, the robot reasons about past or future states, beyond the present sensor inputs and stimuli, before taking a relevant action. Reactive control resembles a ‘stimulus-response’ mechanism in which the robot responds very quickly to changing and unstructured environments by tightly coupling sensory inputs to effector outputs. In hybrid control, one component plans the actions while the other handles immediate reactions that do not usually require learning abilities. Coupling the two mechanisms in a hybrid architecture can be difficult because they have to communicate with each other continuously.

Based on these control architectures, a social robot can interact with humans in two ways. When a robot does things only when asked, it is reactive. When a robot helps the user automatically without being asked, it is proactive. Proactivity is a useful capability because social robots are primarily meant to interact with humans in a more human-like way. An example (adapted from [13]) illustrating the difference between reactive and proactive behavior in social robots is included in Appendix A.

1.3. Robot Operating System (ROS)

ROS is an open-source, C++-based, general-purpose software framework for robot software development that provides operating-system-like functionality [14]. These functionalities include hardware abstraction, low-level device control, implementation of commonly used features, message passing between processes, and package management. It is based on a graph architecture in which each node receives and processes messages from sensors and sends messages to actuators about their state. ROS runs on top of Linux (Ubuntu) and can be used on Windows with some reduced features. ROS creates an ecosystem in which different components, called nodes, are interconnected through a message-passing system. ROS is one of the key components that power commercial indoor robots.

1.4. BDI

BDI is one of the major approaches to building multi-agent systems by programming intelligent agents. The model is inspired by human reasoning and is based on three entities, namely beliefs, desires, and intentions [15]. It provides a mechanism for separating the activity of choosing an action from the execution of currently active plans. The agents in a system are defined in terms of these entities. The model also takes resource bounds into account, so that the agent’s reasoning ends in an intention. The BDI model expects the agent to act in a dynamic environment, so the agent’s reasoning should take changes in the environment into account when selecting an action. The three entities of a BDI model are explained as follows:

Beliefs. Beliefs are the entities that represent the informational state of the agent. Beliefs reflect the knowledge of the robot. Beliefs are stored in belief sets.

Desires. Desires are the entities that represent the motivational state of an agent or the goals, objectives or situation which the agent would like to achieve. Desires are what the agent wants to accomplish.

Intentions. Intentions are the entities that represent the deliberative state of an agent, that is, what the agent has chosen to do. Intentions are realized through plans, which are sequences of actions taken by an agent to accomplish the goal.

For social robots, the sensor outputs build the belief sets, which describe the environment around the robot as values of different parameters. A particular belief set describes the specific situation in which the robot finds itself at a given instant. Based on this situation, a goal is defined for the robot, which can be referred to as a desire. A BDI interpreter or engine then selects a particular action (intention) from a plan library, which is a collection of intentions, based on the situation.
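The sense, deliberate, act cycle described above can be illustrated with a minimal sketch. It is not taken from any particular BDI framework; the names `Plan`, `deliberate`, `select_intention`, `PLAN_LIBRARY` and the belief label `person_present` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

Beliefs = Set[str]


@dataclass
class Plan:
    """One plan-library entry: a goal, an applicability test, and a sequence of actions."""
    goal: str
    context: Callable[[Beliefs], bool]
    actions: List[str]


def deliberate(beliefs: Beliefs) -> str:
    """Pick a desire (goal) from the current beliefs; the mapping is illustrative."""
    return "GreetPeople" if "person_present" in beliefs else "DoNothing"


def select_intention(goal: str, beliefs: Beliefs, library: List[Plan]) -> List[str]:
    """Return the actions of the first plan that serves the goal and whose context holds."""
    for plan in library:
        if plan.goal == goal and plan.context(beliefs):
            return plan.actions
    return []


# Hypothetical plan library with one plan per goal.
PLAN_LIBRARY = [
    Plan("GreetPeople", lambda b: "person_present" in b, ["speak:Hello!"]),
    Plan("DoNothing", lambda b: True, ["stay_idle"]),
]


def bdi_step(sensor_reading: Dict[str, bool]) -> List[str]:
    """One cycle: sensor outputs -> beliefs -> desire -> intention (actions)."""
    beliefs = {name for name, value in sensor_reading.items() if value}
    goal = deliberate(beliefs)
    return select_intention(goal, beliefs, PLAN_LIBRARY)
```

Calling `bdi_step({"person_present": True})` yields the greeting plan, while a reading with no person falls through to the idle plan. Keeping goal selection separate from plan selection mirrors the separation between choosing an action and executing active plans mentioned earlier.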

1.5. Rationale

Social robots have become an integral part of our lives. From hospitals and convention centers to homes, social robots are being extensively used to interact with humans in one way or another. Social robots are now considered a substitute for humans wherever and whenever a human cannot be present for a specific task. The robots are therefore expected to act more like humans, and proactivity is a feature that can enable this capability. Many studies have tried to develop proactive behaviors in robots. However, for commercial indoor social robots powered by ROS, there are no well-defined system architectures and implementations. Given the influence of such robots in commercial indoor environments, this study explores the possibilities of enhancing proactivity in them. This work defines a clear method to implement different activities of a social robot as proactive behaviors by integrating a human-reasoning-based BDI framework into the system architecture. The work validates the implementation by developing a vision-based proactive behavior, enabled by the PROFETA (BDI) framework, in an indoor social robot, Waldo, operated by ROS. The specific contributions of the study are listed below:

A validated modular system architecture, with features such as modularity, flexibility, and rational work distribution, that facilitates different logical blocks to be integrated with robots controlled by ROS for proactive behaviors.

A foundation for the development of human-like behaviors in ROS-controlled robots for daily-life applications.

The rest of the paper is organized as follows: Section 2 discusses the related work, while Section 3 explains the case study setup along with the system overview. Section 4 discusses the results, while Section 5 concludes the paper.

2. Related Works

In this section, we describe the background of different BDI models and different frameworks built to integrate behavior models in robots. Furthermore, we present different works where researchers have tried to incorporate such models in robots for various daily life applications.

2.1. BDI Model

Whenever a cognition model for software agents is being designed, the BDI model is one of the most popular architectural choices. BDI models provide an explicit and declarative representation of informational attitudes, motivational attitudes, and deliberative commitments. Myers et al. [16] divided the BDI models into two broad categories: B-DOING and delegative models. In the B-DOING model, motivational attitudes are highly adapted, and desires correspond to what the agent wishes. Furthermore, obligations correspond to the responsibilities of other agents, and norms correspond to conventions derived from the agent’s role in the environment. The goal created for the agent needs to be consistent and achievable [17]. Intentions are then planned for execution according to the definition of the goal. In the delegative model, goals are divided into candidate goals and adopted goals [18]. Candidate goals may be internally inconsistent, while adopted goals are consistent and coherent. This model can even incorporate user-specified guidance and preferences in the form of advice. The B-DOING framework lacks distinctions between types of goals for proactive assistance, while the delegative BDI framework lacks distinctions between types of motivational attitudes [16].

2.2. BDI Frameworks

Russel et al. [19] developed the Agent Factory framework as an open-source collection of tools, platforms and languages that facilitate the development and deployment of multi-agent systems. Winikoff [20] built a highly portable, robust and cross-platform environment called JACK for building, running and integrating commercial-grade multi-agent systems. In the BDI framework called JADE [21], the agent platform can be distributed among different independent machines and controlled remotely. The configuration can even be changed at run-time by moving agents from one machine to another. Braubach and Pokahr [22] developed a framework called JADEX, based on XML and Java, that follows the BDI model and eases the construction of intelligent agent systems from an engineering perspective. JASON is a highly flexible platform developed as an extension of AgentSpeak [23] by [24]; it implements the semantics of the language and provides a good platform for the development of multi-agent systems with many customizable features. A comparison of the different behavior model platforms is given in Table 1.

ROS supports the C++ and Python programming languages for communication between the distributed nodes in its ecosystem. Because several BDI frameworks are available in Python, a Python-based framework is used in this study.

2.3. Application of BDI Models in Robots

The behavior model was adapted to study the natural engagement of robots with humans so that robots exhibit proactive behaviors. Proactive behaviors were expected to increase human-robot interaction and the utility of robots. Work on proactive behavior in robots began with mixed-initiative approaches. Finzi and Orlandini [25] developed an architecture based on a planner mixed-initiative approach for robots used in search and rescue operations. The study used model-based execution monitoring and a reactive planner for the execution of tasks. Adams et al. [26] proposed an affect-based mixed-initiative interaction approach for human-robot interaction. The robot took the initiative upon changes in human emotions, for example when drowsiness or inattentiveness was detected. The robot developed by Acosta et al. [27] showed some proactive behaviors by monitoring activities and defining tasks as a schedule. Satake et al. [28] proposed a behavior model to initiate a conversation with pedestrians walking on the streets. The appropriate instant to start a conversation or interaction with people was studied by Shi et al. [29]. Moreover, Garrel et al. [30] proposed a proactive behavior model that tries to convince people to initiate a conversation using different behaviors and emotions. Araiza-Illan et al. [31] proposed the use of the BDI model to increase the level of realism and human-likeness in robot simulation. An automated testbench was implemented in ROS and Gazebo for simulating a cooperative assembly task between a humanoid robot and people. Gottifredi et al. [32] developed a soccer-playing robot based on BDI architecture, which allowed the specification of declarative goal-driven behavior based on high-level reasoning, with reactivity when required. Duffy et al. [33] developed a multi-layered BDI architecture with an egocentric robot control strategy to make robots capable of explicit social behavior. Pereira et al. [34] proposed an extension to the BDI architecture to support artificial emotions, in the form of an emotional-BDI architecture.

Given the current state of proactivity in social robots, this study tries to extend the capabilities of such robots to include vision-based activity in a social robot. The integration is based on a modular architecture onto which other logical blocks can easily be integrated for more advanced proactive behaviors in a think-like-human fashion.

3. Case Study Setup

In this section, we explain the use case scenario created to develop a proactive behavior in a social robot Waldo. Furthermore, we define a system framework based on existing technologies to integrate modular blocks of OpenCV and BDI reasoning with the ROS ecosystem with a detailed explanation of each step as follows.

3.1. Use Case Scenario

For this study, we consider a vision-based activity for adding proactive behavior to the social robot. The indoor robot Waldo has cameras installed in its eyes, which gather image feeds from the environment. These image feeds help to set up a belief about the situation. The camera feeds are processed using an OpenCV module for person detection. This module establishes a belief for the robot concerning the presence of a person in the environment. If a person is detected by the OpenCV module, Waldo sets up a goal of greeting the person without any explicit command from the person. In this experiment, the robot can perform two specific actions. Upon detecting a person, the robot uses the BDI framework to greet the detected person with a sentence. Upon continuous detection of the person for a fixed time, the robot changes its belief and offers additional help to the person by speaking a different sentence. In this work, the actions Waldo can perform are limited to speech, but more advanced services can easily replace these actions. This provision is made in the experiment to show how beliefs can change according to the environment so that the actions taken by the robot remain relevant and more interactive.

3.2. Social Indoor Robot—Waldo

The robot under consideration for this study is Waldo, a multi-service robot manufactured by Immersive Robotics [35]. Waldo is a telepresence service robot with advanced vision capabilities. The robot has an Arduino board for basic control functions and a Linux board with ROS installed for more advanced and elaborate functionalities. Waldo has an adjustable height of 130 cm to 170 cm. The robot is autonomous and equipped with LIDARs, sonars, microphones, and cameras. Waldo is built in a humanoid shape as an indoor social robot for welcoming, talking to, understanding and communicating with people. The movement of Waldo can be controlled remotely using a mouse, keyboard, joysticks, pads, smartphones, tablets or any other desired peripherals. Waldo is shown in Figure 1.

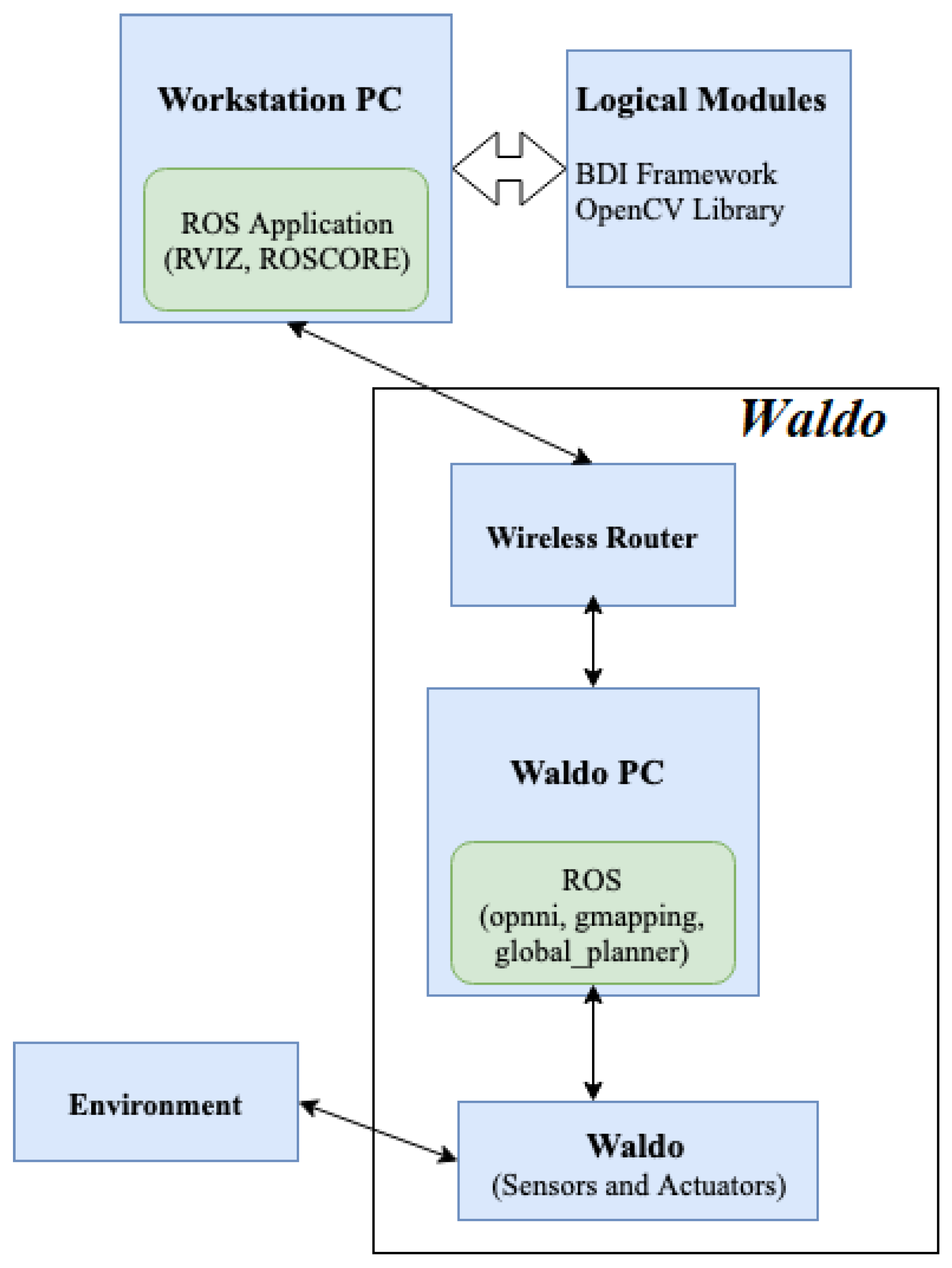

3.3. System Overview

The main goal of this study is to develop a flexible and modular framework, using existing technologies, that facilitates the integration of different blocks as modules and ultimately contributes to the development of proactive behaviors in a social robot controlled by ROS. The overall system for achieving the behavior model in indoor robots is shown in Figure 2. Different sensors installed in the indoor robot Waldo, namely the cameras, LiDAR and Kinect sensor, collect information about the environment at a given instant. The information is relayed to the Waldo PC, which is connected to the monitoring PC through a wireless connection. Because of the limited capabilities of the Waldo PC, the compute-intensive logical modules can be run on a more powerful monitoring PC. The logical modules are responsible for deriving knowledge from the environmental data collected by the robot’s sensors. Based on this knowledge base, the BDI framework establishes the beliefs and sets up goals for any given instant. The framework also chooses a set of actions from the predefined plan list to accomplish the goal. The actions are relayed to the Waldo PC through a wireless connection, and the Waldo PC directs different actuators in Waldo to perform a wide range of actions. The internal mechanisms in ROS manage the communication between the nodes and the Waldo PC. Several logical modules can be installed on the Waldo PC, which is equipped with Linux and ROS, or on the monitoring PC to establish one or more beliefs about the environment. The modules in the logical layer can be the BDI framework, OpenCV for vision processing, and other intelligent bricks for establishing important beliefs about the environment. The BDI framework responds to the established beliefs and sets up goals for any instant of time. The engine in the framework chooses a plan of actions from the predefined libraries for goal accomplishment. The plan execution is relayed to the Waldo PC, which generates actual actions in the robot to respond to the environment. The flow of operations can be monitored using a monitoring PC connected to the Waldo PC over a wireless network.

3.4. BDI Modeling

The BDI modeling of the problem should be able to answer the following questions effectively and efficiently.

When to act? The robot works whenever it detects a person inside the room. So, an efficient mechanism for the detection of a person should be integrated into the robot. An effective block to trigger actions has to be adopted.

What actions to take? The actions which are expected to be made by robots hugely depend upon the detection of the people. The action can be a simple greeting message delivered to the person or message delivered to the person offering some help or no action at all.

How to perform the actions? Based on the set of beliefs about the environment, the robot can decide to take action. Whenever a person is detected, the robot uses its text-to-speech node to speak sentences that either greet or offer help to the person. When no person is detected, the robot can decide to stay idle or go to sleep mode.

Knowledge about the surroundings is collected by the cameras installed in the eyes of the robot. For the experiment, only the camera feeds are used. The person detection block from OpenCV is used to explicitly define the belief for the system design. The goals and actions are defined accordingly to realize a use case of proactive behavior. The possible actions of the robot are limited to speech. The set of beliefs, desires, and intentions as per the BDI model for the case study are defined as follows:

Belief: personDetected(“Yes”), personDetected(“No”) and personDetected(“Next”)

Desire: DoNothing(), GreetPeople() and OfferHelp()

Intentions: stayIdle(), speak()

The PROFETA framework can be implemented for behavior modeling using the following steps:

| Algorithm 1 Implementation of PROFETA framework. |
| --- |
| 1: Import necessary PROFETA libraries |
| 2: Define beliefs and goals as classes in the script |
| 3: Define user actions by creating classes and overriding the method execute() |
| 4: Start PROFETA engine |
| 5: Define rules by using declarative syntax |
| 6: Run the engine |

Moreover, the PROFETA framework also supports the definition of a sensor class that can itself add or remove beliefs depending on the environment. This is done in PROFETA by declaring a subclass of Sensor, overriding the sense() method and informing the PROFETA engine about the new sensor. PROFETA uses a declarative language to express the behaviors of agents. The declarative syntax for the behavior of an agent is described below:

“Event”/“Condition” >> “setofActions”

In this declarative syntax, an event can be the assertion or retraction of a belief, the accomplishment or request of a goal, or even a goal failure. The condition refers to a particular state of the knowledge base, while actions can be goal accomplishment requests, user-defined actions, or the addition or removal of beliefs. This syntax can be exemplified as:

+objectAt(“A”, “B”)/objectGot(“no”) >> [moveTo(“A”,“B”), pickObject()]
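To make the reading of this rule concrete, the following is a toy sketch of how an `event / condition >> actions` notation can be expressed in plain Python through operator overloading. It is not PROFETA's implementation or API: the classes `Term` and `Rule`, the `RULES` list and the `assert_belief` function are hypothetical, and matching is by exact equality rather than by variable binding.

```python
class Term:
    """A named term with arguments, e.g. objectAt("A", "B")."""

    def __init__(self, *args):
        self.args = args

    def key(self):
        return (type(self).__name__, self.args)

    def __pos__(self):
        # "+belief" denotes the event "this belief was asserted".
        return Rule(event=self)


class Rule:
    def __init__(self, event, condition=None, actions=None):
        self.event, self.condition, self.actions = event, condition, actions or []

    def __truediv__(self, condition):
        # "event / condition" attaches a guard on the knowledge base.
        return Rule(self.event, condition, self.actions)

    def __rshift__(self, actions):
        # "... >> [actions]" completes the rule and registers it.
        rule = Rule(self.event, self.condition, list(actions))
        RULES.append(rule)
        return rule


RULES = []


# Terms named after the example in the text.
class objectAt(Term): pass
class objectGot(Term): pass
class moveTo(Term): pass
class pickObject(Term): pass


def assert_belief(belief, knowledge_base):
    """Add a belief and return the actions of every rule it triggers."""
    knowledge_base.add(belief.key())
    fired = []
    for rule in RULES:
        guard_ok = rule.condition is None or rule.condition.key() in knowledge_base
        if rule.event.key() == belief.key() and guard_ok:
            fired.extend(action.key() for action in rule.actions)
    return fired


# The rule from the text, written with the toy operators defined above.
+objectAt("A", "B") / objectGot("no") >> [moveTo("A", "B"), pickObject()]
```

The notation works because unary `+` binds more tightly than `/`, which in turn binds more tightly than `>>`, so the expression groups as (event with guard) followed by the action list. Asserting `objectAt("A", "B")` into a knowledge base that already contains `objectGot("no")` returns the `moveTo` and `pickObject` actions in order.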

3.5. Person Detection Using OpenCV

For person detection, the Open Source Computer Vision Library (OpenCV), a freely available library for computer vision and machine learning, is used. The libraries and algorithms in OpenCV are used directly in the experiments to detect a person based on histogram of oriented gradients (HOG) features and a support vector machine (SVM) classifier. Improving the performance of the OpenCV algorithms for person detection is beyond the scope of this work. ROS has its own image format, used for communication between nodes through subscription and publishing. This image format has to be converted into the OpenCV format to use the OpenCV libraries for person detection. CvBridge, a library in ROS, facilitates the conversion of ROS images to the OpenCV image format and vice versa. The CvBridge interface is represented in Figure 3.
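A HOG plus SVM person detector of the kind described above can be sketched with OpenCV's built-in people detector. This is an assumption about how such a block might look, not the code used in the study: the function names `detect_people` and `person_belief` are hypothetical, and the `winStride`, `padding` and `scale` values are common illustrative settings rather than the study's parameters. In a ROS node, the input frame would typically come from CvBridge (for example via its `imgmsg_to_cv2` method).

```python
def detect_people(frame):
    """Return bounding boxes (x, y, w, h) of people found in a BGR image array."""
    import cv2  # imported lazily so the helper below works without OpenCV installed

    hog = cv2.HOGDescriptor()
    # Load the pre-trained linear SVM for pedestrian detection shipped with OpenCV.
    hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
    boxes, _weights = hog.detectMultiScale(
        frame, winStride=(8, 8), padding=(8, 8), scale=1.05
    )
    return [tuple(int(v) for v in box) for box in boxes]


def person_belief(boxes):
    """Map detector output to the belief values used in the case study."""
    return 'personDetected("Yes")' if len(boxes) > 0 else 'personDetected("No")'
```

Constructing the descriptor on every call keeps the sketch short; a long-running node would normally create it once and reuse it for each frame.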

3.6. Experimental Setup

The test use case is implemented in Waldo with the work distributed over two PCs. The workstation PC runs Ubuntu 16.04 with ROS Kinetic on an Intel i5-5300U processor, whereas the Waldo PC runs the same operating system on an Intel(R) Atom(TM) processor. The proactive behavior of Waldo is implemented using the PROFETA BDI framework.

4. Results and Discussion

In this section, we present the validation of the proposed system design for the integration of various logical modules, such as the BDI model and OpenCV, into ROS for proactive behaviors. The capabilities of the proposed framework are validated using a qualitative approach, in which features such as modularity, flexibility, and rational work distribution are investigated. Furthermore, we explain the associated findings in detail and include a quantitative analysis of the results for the OpenCV logical module.

4.1. Work Distribution in Proposed System Architecture

One of the key features expected from the proposed framework is the rational distribution of the work required to develop proactive behaviors in the robot. The study implements the test use case in ROS with an overlaid layer of the PROFETA BDI framework. A module in the OpenCV library does the image processing. The proposed system design distributes the operations over two PCs: the Waldo PC, installed in the robot, and the Workstation PC. The test experiments use the distributed architecture of ROS, as the design relieves the Waldo PC of the heavy processing of image feeds collected from the cameras. The bulky and more compute-intensive modules of person detection and practical reasoning (BDI framework) are installed on the comparatively powerful Workstation PC. The actual actions and the management of the different ROS nodes are handled on the Waldo PC. These tasks are not compute-intensive. As such, the proposed architecture supports a reasonable work distribution in the development of proactive behaviors. Consequently, more advanced activities can be added as extensions, since more compute-intensive modular blocks can be easily integrated into the robot. More powerful machines can assume the role of the Workstation PC for such capabilities, while the Waldo PC retains the light roles of information collection and actuation. Moreover, the framework allows additional computational devices to be added to handle the different workloads required for various proactive behaviors. Such capabilities make the proposed framework flexible as well.

4.2. Validation of Proposed System Design with Test Use Case

The validation of the proposed system design focused on investigating whether flexibility, modularity, and rational work distribution are achieved while Waldo exhibits proactive behavior. To validate the design, we created several distributed nodes within and outside the ROS ecosystem. There is one ROS node each for the camera feed, the person detection block and the speech block. The ROS nodes communicate with each other through topic messages. Roscore provides the name registration and lookup that lets the nodes find each other and communicate. Nodes can be created and removed as required to add or remove functionalities. The nodes can be created on any of the computing devices available within the proposed framework, providing flexibility and modularity. Initially, the camera node publishes the image feed collected by the eyes of Waldo. The converter node, with the CvBridge interface, subscribes to the topic messages of the camera node and converts the ROS images to OpenCV images. The node then publishes the converted images. A node called person detector, running on the Workstation PC, subscribes to the converted image messages. This node executes the person detection module of the OpenCV library. An additional node on the Workstation PC, called BDI engine, subscribes to the messages about person detection published by the person detector. The BDI engine executes all the necessary behavior modeling and finally publishes the action to be performed by the robot. Another node, listener, subscribes to the BDI engine node and publishes messages for the actuators in the robot to undertake the actions. The speech node, which subscribes to listener, makes the robot speak the sentences to achieve the goal. The entire interaction between the different nodes in a widely distributed ROS ecosystem is shown in Figure 4. As can be seen in the figure, the different workloads required for the proactive behaviors are distributed over the Waldo PC and the Workstation PC. Such rational work distribution is one of the strengths of the proposed framework, where computing devices can be easily added or removed based on the requirements. Furthermore, additional logical blocks/modules can be created as new nodes within the ROS ecosystem in the proposed framework to develop additional functionality in the social robot. In our study, we created a node for the movement of the head of the robot during person detection to demonstrate the modularity of the proposed framework. The newly created node communicates with the person detector node to create any movement.
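The chain of nodes described above (camera, converter, person detector, BDI engine, listener, speech) can be mimicked with a small in-process publish/subscribe sketch. This is a toy model rather than the ROS API: `ToyBus`, `build_pipeline`, the topic names and the stand-in detector are all hypothetical and serve only to show how each stage subscribes to the previous one.

```python
from collections import defaultdict


class ToyBus:
    """In-process stand-in for topic-based messaging; not the ROS API."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        for callback in list(self._subscribers[topic]):
            callback(message)


def build_pipeline(bus, detect, spoken):
    """Wire converter -> person detector -> BDI engine -> listener -> speech."""
    # Converter: republishes camera images in the format the detector expects.
    bus.subscribe("camera/image", lambda img: bus.publish("image/converted", img))
    # Person detector: publishes whether a person was found.
    bus.subscribe("image/converted", lambda img: bus.publish("person/detected", detect(img)))
    # BDI engine: turns the detection belief into an action.
    bus.subscribe(
        "person/detected",
        lambda seen: bus.publish("bdi/action", "greet" if seen else "idle"),
    )
    # Listener: forwards the chosen action to the speech stage.
    bus.subscribe("bdi/action", lambda action: bus.publish("speech/say", action))
    # Speech: records what would be spoken.
    bus.subscribe("speech/say", spoken.append)


bus, spoken = ToyBus(), []
# Hypothetical detector: any non-empty "image" counts as containing a person.
build_pipeline(bus, detect=lambda img: bool(img), spoken=spoken)
bus.publish("camera/image", "frame-with-person")
bus.publish("camera/image", "")
```

Because every stage only knows the topic it listens to and the topic it publishes on, a stage such as the head-movement node could be added by subscribing one more callback, without touching the others.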

4.3. Performance Analysis

For the performance analysis and evaluation, Waldo is kept at a fixed position in the corridor. The eyes of the robot, which contain the cameras, are at a height of about 162 cm. The tests are carried out repeatedly under lighting conditions influenced by both natural and artificial light. Figure 5 and Figure 6 show the execution of BDI actions and the image feeds collected by the camera installed in the robot for the no-person-detected and person-detected cases, respectively. When a person is not detected in the image feed, the BDI engine establishes the corresponding belief about the environment and creates a goal of not greeting anyone. Accordingly, the engine selects the NoTalk action to achieve the goal, as shown in Figure 5a. Similarly, when a person is detected, the BDI engine asserts a belief of encountering a person, enabled by the person detection module running on the Workstation PC. Based on this belief set, the engine has to set up a goal of either greeting the person or offering additional help. To distinguish between the two, we add a logical operation that tracks how long the person has been encountered, represented by the counter in Figure 6a. Based on the value of the counter and the person detected in the image feed, the BDI engine establishes two distinct belief sets requiring two different goals. The greeting action that achieves the goal of greeting people is represented as the talk action in Figure 6a. The help action is executed to achieve the goal of offering help when the person is detected continuously for a more extended period.
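The counter-based distinction between greeting and offering help can be sketched as follows. The threshold `help_after` is a hypothetical parameter, and mapping the belief value "Next" to the OfferHelp goal is an assumption made here for illustration; the text does not state the actual threshold or this mapping.

```python
def belief_from_detection(detected: bool, counter: int, help_after: int = 5):
    """Return (belief, goal, updated counter) for one processed frame.

    help_after is a hypothetical threshold: the number of consecutive
    detections after which the robot switches from greeting to offering help.
    """
    if not detected:
        # Losing the person resets the counter.
        return "No", "DoNothing", 0
    counter += 1
    if counter >= help_after:
        return "Next", "OfferHelp", counter
    return "Yes", "GreetPeople", counter


def run(frames, help_after=5):
    """Feed a sequence of per-frame detection flags through the counter logic."""
    counter, goals = 0, []
    for detected in frames:
        _belief, goal, counter = belief_from_detection(detected, counter, help_after)
        goals.append(goal)
    return goals
```

For example, `run([False, True, True, True], help_after=3)` produces one DoNothing goal, two GreetPeople goals and then an OfferHelp goal. A deployed version would probably also suppress repeated greetings for the same person, which this sketch does not attempt.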

Moreover, we tested the overall system at different distances from the robot in two different scenes. The analysis of the performance is presented in Table 2 and Table 3.

The performance analysis of the entire system showed that the robot performed quite poorly outside the 2–10 m region. The respective values of precision and recall for Scene 1 are 0.822 and 0.782, while those for Scene 2 are 0.756 and 0.728. Scene 2 had more lighting inconsistencies than Scene 1. The false negatives and false positives (as shown in Figure 7 and Figure 8) during the test were caused by changes in lighting conditions brought about by the movement of objects. Moreover, some false negatives were caused by the shadows of people cast by several light sources (natural and artificial). Because the data sets are unevenly distributed, we also calculated the F1-score for each scenario. The best F1-score, 0.801, was obtained for Scene 1 in the 2–10 m region. A high F1-score (close to 1) indicates that the module detects people effectively, so that the robot can exhibit proactive behavior in greeting and offering help to the detected person. Consistent with the precision and recall analysis, the F1-score for Scene 2 in the <2 m region is the lowest, highlighting that the logical block does not perform effectively at that distance. Furthermore, we plotted the precision-recall curves for our experiments, which are shown in Figure 9. The curves confirm these findings and establish that the module performs best in Scene 1 (2–10 m region), as the area under the curve is the highest for that case. The performance of the module is affected by several factors, such as artificial light, shadows, multiple light sources, and the distance between the camera and the person. The accuracy of the system in the given test case can be improved by enhancing the performance of the OpenCV logical block.
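For reference, the F1-score is the harmonic mean of precision and recall. The short sketch below recomputes it from the precision and recall values quoted above; the function name `f1_score` and the dictionary `reported` are illustrative choices, not part of the evaluation code used in the study. For Scene 1, the result agrees with the reported 0.801 to within rounding.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall values as quoted in the text above.
reported = {
    "Scene 1": (0.822, 0.782),
    "Scene 2": (0.756, 0.728),
}

if __name__ == "__main__":
    for scene, (precision, recall) in reported.items():
        print(f"{scene}: F1 = {f1_score(precision, recall):.4f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a detector cannot obtain a high F1-score by trading recall for precision or vice versa, which is why it is a useful summary when the classes are unevenly represented.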

4.4. Limitation

For this study, image frames that do not contain the entire body of the person are not considered. Since the cameras are placed at a fixed height and the angular movement of the head is limited, the camera cannot cover distances of less than a meter. Thus, the region in front of the robot, less than 2 m away, is a blind spot. Such blind spots result in poor person detection performance. Moreover, beyond a distance of about 10 m, the person detection module is not able to detect the person. As for the system design, the architecture still offers modularity and flexibility. The accuracy of the entire system largely depends on the logical block used to develop proactive behaviors in the robot. Apart from the limitation in the logical block, the study focuses more on the qualitative validation of the proposed framework, where features such as modularity, flexibility, and rational work distribution are investigated. The work primarily aims to develop a basic, flexible foundation, using existing and freely available tools, for the development of proactive behaviors in social robots. Because no comparable architectures or case studies are readily available, a comparative analysis is not included in the paper. Moreover, the advanced capabilities of the social robot are not considered as of now. These limitations of the study will be addressed in future work.

5. Conclusions

The recent rise of humanoid and animaloid indoor social robots in commercial spaces, including homes, has necessitated “act-like-human” behavior in those robots for a friendlier human-robot interaction. Proactivity adds more utility to such social robots. As such, in this paper, we presented a validated use-case of such proactive behavior in an indoor commercial social robot, Waldo, enabled by the behavior model framework PROFETA. We defined a fundamental system architecture with the features of flexibility, modularity, and rational work distribution to integrate the BDI framework into the distributed ecosystem of ROS. In the architecture, multiple ROS nodes can be independently created over multiple machines connected by wireless communication. We demonstrated how an external module, such as the OpenCV library, can be used to enhance the capability of an indoor robot in a plug-and-play fashion. We expect the proposed system architecture to lay a solid foundation for developing a wide range of proactive behaviors that allow an indoor social robot to behave and act like a human. Such behaviors can be made possible by adding various logical modules to the proposed architecture.

This work validated the proposed architecture with the basic actions of the robot. Preliminary work is underway to integrate artificial intelligence blocks into the proposed architecture to develop more intelligent actions in the robot. The expansion of the actions that Waldo can perform is also ongoing. Future work can be centered around establishing a more accurate belief within the human reasoning paradigm, BDI, by using data collected by multiple sensors in the robot. Besides the camera, LiDAR and Kinect sensors can be used to better represent the state of the environment. In terms of the actions of the robot, further work can focus on integrating the autonomous navigation of the robot to achieve goals set up based on different beliefs. The addition of learning mechanisms, which would allow the robot to keep improving, can be regarded as an essential extension.

Author Contributions

U.K.C. contributed to the project conceptualization, literature review, methodology, tool integration, testing, validation and experimentation. J.C. contributed to the project development, administration, conceptualization, methodology, validation and supervision. Both authors contributed to the paper writing, reviewing, and editing.

Funding

This research received no external funding.

Acknowledgments

The authors would like to thank all the members of the Home and Family Communication (HFC) team of Orange Labs, Lannion, for their kind help and support during the project. Additionally, the authors are grateful to everyone who helped improve the quality of the paper during the various stages of publication.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1. Reactive vs. Proactive Behaviors.

| | Reactive | Proactive |
|---|---|---|
| User | Could you help me? | |
| Robot | Yes, What is wrong? | It seems like your computer is not responding, let me know if I can do anything? |
| User | It seems like my computer is not responding | Could you help me? |
| Robot | You should end the process by pressing ALT+CTRL+DEL. | You should end the process by pressing ALT+CTRL+DEL. |
| User | Won’t that restart my computer? | Won’t that restart my computer? |
| Robot | Don’t worry; it will just end the process. | Don’t worry; it will just end the process. |
| User | Hmm, it’s not doing anything. | OK |
| Robot | Press ALT+CTRL+DEL together. | Press ALT+CTRL+DEL together. |
| User | Ok and now? | |
| Robot | Then you should end the process. | Then you should end the process. Do you have a back-up? |
| User | But then I lost my work? | I’m not sure. |
| Robot | Don’t worry your computer makes a backup every five minutes | Don’t worry your computer makes a backup every five minutes |
| User | How can I find a backup? | Where is my backup? |
| Robot | You can open your program again and select the file. | To find your program, you can open your program again and select the file. Try it! |

References

- Fong, T.; Nourbakhsh, I.; Dautenhahn, K. A survey of socially interactive robots. Robot. Auton. Syst. 2003, 42, 143–166.

- Campa, R. The rise of social robots: A review of the recent literature. J. Evol. Technol. 2016, 26, 106–113.

- Dautenhahn, K. Socially intelligent robots: dimensions of human–robot interaction. Philos. Trans. R. Soc. B Biol. Sci. 2007, 362, 679–704.

- Breazeal, C.L. Designing Sociable Robots; MIT Press: Cambridge, MA, USA, 2004.

- Dautenhahn, K. The art of designing socially intelligent agents: Science, fiction, and the human in the loop. Appl. Artif. Intell. 1998, 12, 573–617.

- Ferland, F.; Agrigoroaie, R.; Tapus, A. Assistive Humanoid Robots for the Elderly with Mild Cognitive Impairment. In Humanoid Robotics: A Reference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 2377–2396.

- Flandorfer, P. Population ageing and socially assistive robots for elderly persons: The importance of sociodemographic factors for user acceptance. Int. J. Popul. Res. 2012, 2012, 829835.

- Meghdari, A.; Shariati, A.; Alemi, M.; Vossoughi, G.R.; Eydi, A.; Ahmadi, E.; Mozafari, B.; Amoozandeh Nobaveh, A.; Tahami, R. Arash: A social robot buddy to support children with cancer in a hospital environment. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2018, 232, 605–618.

- Conti, D.; Di Nuovo, S.; Buono, S.; Di Nuovo, A. Robots in education and care of children with developmental disabilities: A study on acceptance by experienced and future professionals. Int. J. Soc. Robot. 2017, 9, 51–62.

- Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social robots for education: A review. Sci. Robot. 2018, 3, eaat5954.

- Siciliano, B.; Khatib, O. Springer Handbook of Robotics; Springer: Berlin/Heidelberg, Germany, 2016.

- Bartneck, C.; Forlizzi, J. A design-centred framework for social human-robot interaction. In Proceedings of the RO-MAN 2004. 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No. 04TH8759), Kurashiki, Japan, 22–22 September 2004; pp. 591–594.

- Kemper, N. Effects of Proactive Behavior and Physical Interaction with a Social Robot. Master’s Thesis, University of Amsterdam, Amsterdam, The Netherlands, 2009.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 17 May 2009; Volume 3, p. 5.

- Bratman, M. Intention, Plans, and Practical Reason; Harvard University Press: Cambridge, MA, USA, 1987; Volume 10.

- Myers, K.; Yorke-Smith, N. Proactive behavior of a personal assistive agent. In Proceedings of the AAMAS Workshop on Metareasoning in Agent-Based Systems, Honolulu, HI, USA, 14 May 2007; pp. 31–45.

- Dignum, F.; Kinny, D.; Sonenberg, L. From desires, obligations and norms to goals. Cogn. Sci. Q. 2002, 2, 407–430.

- Myers, K.L.; Yorke-Smith, N. A cognitive framework for delegation to an assistive user agent. In Proceedings of the AAAI 2005 Fall Symposium on Mixed-Initiative Problem-Solving Assistants, Arlington, Virginia, 4–6 November 2005; pp. 94–99.

- Russell, S.; Jordan, H.; O’Hare, G.M.; Collier, R.W. Agent factory: A framework for prototyping logic-based AOP languages. In Proceedings of the German Conference on Multiagent System Technologies, Berlin, Germany, 6–7 October 2011; pp. 125–136.

- Winikoff, M. JACK™ intelligent agents: An industrial strength platform. In Multi-Agent Programming; Springer: Berlin/Heidelberg, Germany, 2005; pp. 175–193.

- Jedrzejowicz, P.; Wierzbowska, I. JADE-Based a-team environment. In Proceedings of the International Conference on Computational Science, Reading, UK, 28–31 May 2006; pp. 719–726.

- Braubach, L.; Pokahr, A. The jadex project: Simulation. In Multiagent Systems and Applications; Springer: Berlin/Heidelberg, Germany, 2013; pp. 107–128.

- Rao, A.S. AgentSpeak (L): BDI agents speak out in a logical computable language. In Proceedings of the European Workshop on Modelling Autonomous Agents in a Multi-Agent World, Eindhoven, The Netherlands, 22–25 January 1996; pp. 42–55.

- Bordini, R.H.; Hübner, J.F.; Wooldridge, M. Programming Multi-Agent Systems in AgentSpeak Using Jason; John Wiley & Sons: Hoboken, NJ, USA, 2007; Volume 8.

- Finzi, A.; Orlandini, A. Human-Robot Interaction Through Mixed-Initiative Planning for Rescue and Search Rovers. In AI*IA 2005: Advances in Artificial Intelligence; Bandini, S., Manzoni, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 483–494.

- Adams, J.A.; Rani, P.; Sarkar, N. Mixed initiative interaction and robotic systems. In Proceedings of the AAAI Workshop on Supervisory Control of Learning and Adaptive Systems, San Jose, CA, USA, 25–26 July 2004; pp. 6–13.

- Acosta, M.; Kang, D.; Choi, H.J. Robot with emotion for triggering mixed-initiative interaction planning. In Proceedings of the 2008 IEEE 8th International Conference on Computer and Information Technology Workshops, Sydney, Australia, 8–11 July 2008; pp. 98–103.

- Satake, S.; Kanda, T.; Glas, D.F.; Imai, M.; Ishiguro, H.; Hagita, N. How to approach humans?: Strategies for social robots to initiate interaction. In Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction, La Jolla, CA, USA, 9–13 March 2009; pp. 109–116.

- Shi, C.; Shimada, M.; Kanda, T.; Ishiguro, H.; Hagita, N. Spatial Formation Model for Initiating Conversation. In Proceedings of the 7th Annual Robotics: Science and Systems Conference, Los Angeles, CA, USA, 16–20 October 2011.

- Garrell, A.; Villamizar, M.; Moreno-Noguer, F.; Sanfeliu, A. Proactive behavior of an autonomous mobile robot for human-assisted learning. In Proceedings of the 2013 IEEE RO-MAN, Gyeongju, Korea, 26–29 August 2013; pp. 107–113.

- Araiza-Illan, D.; Pipe, T.; Eder, K. Model-Based Testing, Using Belief-Desire-Intentions Agents, of Control Code for Robots in Collaborative Human-Robot Interactions. arXiv 2016, arXiv:1603.00656.

- Gottifredi, S.; Tucat, M.; Corbatta, D.; García, A.J.; Simari, G.R. A BDI architecture for high level robot deliberation. In Proceedings of the XIV Congreso Argentino de Ciencias de la Computación, Chilecito, Argentina, 8–12 August 2008.

- Duffy, B.R.; Dragone, M.; O’Hare, G.M. Social robot architecture: A framework for explicit social interaction. In Proceedings of the Toward Social Mechanisms of Android Science: A CogSci 2005 Workshop, Stresa, Italy, 25–26 July 2005.

- Pereira, D.; Oliveira, E.; Moreira, N.; Sarmento, L. Towards an architecture for emotional BDI agents. In Proceedings of the 2005 Portuguese Conference on Artificial Intelligence, Covilha, Portugal, 5–8 December 2005; pp. 40–46.

- Immersive Robotics. Available online: http://immersive-robotics.com/ (accessed on 12 February 2017).

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).