Color-Patterns to Architecture Conversion through Conditional Generative Adversarial Networks

Abstract

1. Introduction

1.1. The Relevance of AI and Its Impact in Recent Years

1.1.1. Applications of Different Types of Machine Learning

1.1.2. Generative Adversarial Networks

1.1.3. Conditional GAN

1.1.4. Machine Learning and Architecture

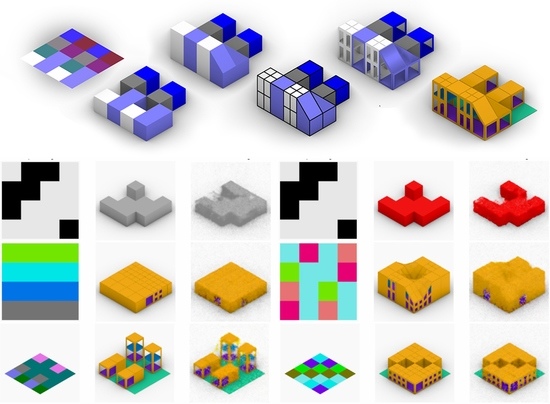

1.2. Pattern Images as Genotypic Data for Architecture in GANs

1.2.1. Patterns

1.2.2. Data Relation and Generation

1.2.3. Parametric Design to Service Machine Learning

2. Materials and Methods

2.1. Tools and Systems

2.1.1. Software and Hardware

- TensorFlow v2.3.1

- Keras v2.3.1

- Scikit-learn v0.22.2.post1

- Matplotlib v3.2.2

- NumPy v1.19.4

- CPU: Intel Xeon @ 2.20 GHz

- GPU: NVIDIA Tesla K80

- RAM: 12 GB

- CPU: Intel Core i7-7700HQ (MSI Apache)

- GPU: NVIDIA GeForce GTX 1050 Ti (4 GB)

- RAM: 16 GB (DDR4)

- HD: 256 GB SSD

2.1.2. Evo-Devo Modelling and Color-Data Systems

2.1.3. Data Color System 1

2.1.4. Data Color System 2

2.2. Test Settings and Training

- All images were resized to 250 × 250 pixels.

- Number of output images set to 10.

- Generator-discriminator ratio set to 80–20%.

- Group A tests check the hypothesis and assess the impact of the settings on the algorithm.

- Group B tests the response to increasing complexity.

- Group C tests propose a different approach based on the relations between pixels.

- Group D checks the flexibility of the trained networks when they are fed external inputs.

- Cubic volumes (group A).

- Architectural volumes (groups B, C and D).
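The settings fix every image at 250 × 250 pixels. The following is a minimal preprocessing sketch, assuming the images are already loaded as NumPy arrays. Nearest-neighbor resampling is used because it only copies existing pixels, so a resized color pattern keeps exactly its original palette; bilinear resampling would blend neighboring cells into colors that encode no event. The scaling to [-1, 1] is an assumption borrowed from common pix2pix-style implementations whose generators end in tanh; this section does not state how the images were normalized.

```python
import numpy as np

TARGET_SIZE = 250  # side length stated in the test settings


def resize_nearest(img: np.ndarray, size: int = TARGET_SIZE) -> np.ndarray:
    """Nearest-neighbor resize of an (H, W) or (H, W, C) array to size x size.

    Only existing pixels are copied, so no new colors are introduced.
    """
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return img[rows[:, None], cols[None, :]]


def to_tanh_range(img: np.ndarray) -> np.ndarray:
    """Assumed normalization: map uint8 values in [0, 255] to float32 in [-1, 1]."""
    return img.astype(np.float32) / 127.5 - 1.0


# Usage with a synthetic 100 x 80 RGB image standing in for a real pattern.
pattern = np.zeros((100, 80, 3), dtype=np.uint8)
pattern[:50, :40] = (255, 0, 0)  # top-left quarter is pure red
resized = resize_nearest(pattern)
print(resized.shape)  # (250, 250, 3)
```

Because every output pixel is a copy of some input pixel, the resized image still contains only black and red.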

2.2.1. Test A0#—Binary Patterns

- Test A01 (250 images, 50 epochs) considers the simplest, initial case: conversion into gray volumes.

- Test A02 (250 images, 50 epochs) assesses the relevance of color by introducing red volumes, which might help to distinguish shadows from geometry.

- Test A03 (500 images, 50 epochs) checks the impact of the database size.

- Test A04 (250 images, 100 epochs) checks the impact of the number of epochs.
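The A tests feed the network binary patterns: each grid cell is either filled or empty. A minimal sketch of how such an input image could be produced, assuming filled cells are drawn white and empty cells black (consistent with dark colors meaning an empty cell). The grid size, fill probability and the function name `binary_pattern` are hypothetical illustration choices, not the authors' generator.

```python
from typing import Optional

import numpy as np


def binary_pattern(grid: int, size: int = 250, p_fill: float = 0.5,
                   seed: Optional[int] = None) -> np.ndarray:
    """Random grid x grid binary pattern rendered as a size x size uint8 image.

    Filled cells are white (255) and empty cells are black (0).
    """
    rng = np.random.default_rng(seed)
    cells = (rng.random((grid, grid)) < p_fill).astype(np.uint8) * 255
    # Map every output pixel to the cell it falls in (nearest-neighbor upscale).
    idx = np.arange(size) * grid // size
    return cells[idx[:, None], idx[None, :]]


img = binary_pattern(grid=4, seed=0)  # hypothetical 4 x 4 grid
print(img.shape, np.unique(img))
```

With a 5 × 5 grid each cell becomes an exact 50 × 50 pixel block, since 250 is divisible by 5.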

2.2.2. Test B0#—Color Patterns

- Test B01 (250 images, 50 epochs) introduces color patterns for the first time.

- Test B02 (350 images, 70 epochs) increases the size of the database.

2.2.3. Test C0#—Isometric Patterns

- Test C01 (250 images, 50 epochs) introduces isometric patterns; to be compared with B01.

- Test C02 (350 images, 70 epochs) increases the database size; to be compared with B02.

- Test C03 (500 images, 100 epochs) is trained more extensively to serve as the base for the D tests.
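Group C draws the input pattern in an isometric layout, so each colored cell sits roughly where its volume appears in the isometric target image, which is what makes the approach rely on pixel relations. A minimal sketch of that alignment, assuming a standard 2:1 isometric projection; the tile sizes, origin and the function name `grid_to_iso` are hypothetical, and the projection actually used for the renders is not stated in this section.

```python
from typing import Tuple


def grid_to_iso(i: int, j: int, tile_w: float, tile_h: float,
                origin: Tuple[float, float] = (0.0, 0.0)) -> Tuple[float, float]:
    """Screen position (x, y) of grid cell (i, j) in a 2:1 isometric layout."""
    ox, oy = origin
    x = ox + (i - j) * tile_w / 2  # moving along i goes right, along j goes left
    y = oy + (i + j) * tile_h / 2  # both axes move down the image
    return x, y


# Hypothetical 3 x 3 grid centred horizontally in a 250 x 250 image.
for i in range(3):
    print([grid_to_iso(i, j, tile_w=50, tile_h=25, origin=(125, 50)) for j in range(3)])
```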

2.2.4. Test D0#—External Patterns

- Test D01 adds extra cells to the grid (5 × 5).

- Test D02 fills the image with randomly placed cells.

- Test D03 checks organic patterns: Voronoi, reaction-diffusion and L-systems.
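Voronoi patterns are one of the external inputs in D03. A minimal sketch of how such a pattern can be generated, assuming randomly placed seed points and Euclidean distance; the seed count, palette and function names are hypothetical, and the tool the authors used to draw their Voronoi patterns is not stated.

```python
import numpy as np


def voronoi_pattern(n_seeds: int, size: int = 250, seed=None):
    """Label every pixel with the index of its nearest seed point (Euclidean)."""
    rng = np.random.default_rng(seed)
    seeds = rng.random((n_seeds, 2)) * size  # (n, 2) seed positions as (row, col)
    rows, cols = np.mgrid[0:size, 0:size]    # pixel coordinates
    # Squared distance from every pixel to every seed: shape (size, size, n).
    d2 = (rows[..., None] - seeds[:, 0]) ** 2 + (cols[..., None] - seeds[:, 1]) ** 2
    return d2.argmin(axis=-1), seeds


def colorize(labels: np.ndarray, seed=None) -> np.ndarray:
    """Give each Voronoi region a random RGB color."""
    rng = np.random.default_rng(seed)
    palette = rng.integers(0, 256, size=(labels.max() + 1, 3), dtype=np.uint8)
    return palette[labels]


labels, seeds = voronoi_pattern(20, seed=0)
img = colorize(labels, seed=1)
print(img.shape, img.dtype)
```

Each region is one color, so the network receives large patches rather than the regular grid cells it was trained on.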

3. Results

3.1. Training Outputs (Test A-C)

3.2. External Outputs (Test D)

- (D01) Enlarging the original grid to 5 × 5 cells.

- (D02) Deconstructing the grid and spreading its cells across the image.

- (D03) Using other patterns, such as reaction-diffusion or Voronoi.
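Reaction-diffusion is the other organic source named for D03. A minimal sketch using the Gray-Scott model with explicit Euler steps and periodic boundaries; the model choice, the parameter values (a commonly used set, F = 0.060 and k = 0.062) and the thresholding into a two-tone pattern are assumptions, since the section does not say how the authors' reaction-diffusion patterns were produced.

```python
import numpy as np


def laplacian(z: np.ndarray) -> np.ndarray:
    """Five-point Laplacian with periodic (wrap-around) boundaries."""
    return (np.roll(z, 1, 0) + np.roll(z, -1, 0)
            + np.roll(z, 1, 1) + np.roll(z, -1, 1) - 4 * z)


def gray_scott(size=250, steps=5000, du=0.16, dv=0.08, f=0.060, k=0.062, seed=None):
    """Integrate the Gray-Scott equations and return the (u, v) fields."""
    rng = np.random.default_rng(seed)
    u = np.ones((size, size))
    v = np.zeros((size, size))
    # Perturb a central square so that patterns can start growing.
    c, r = size // 2, max(size // 20, 2)
    u[c - r:c + r, c - r:c + r] = 0.50
    v[c - r:c + r, c - r:c + r] = 0.25
    u += 0.02 * rng.random((size, size))
    v += 0.02 * rng.random((size, size))
    for _ in range(steps):
        uvv = u * v * v  # reaction term, computed before either field is updated
        u += du * laplacian(u) - uvv + f * (1 - u)
        v += dv * laplacian(v) + uvv - (f + k) * v
    return u, v


u, v = gray_scott(size=128, steps=2000, seed=0)
pattern = (v > v.mean()).astype(np.uint8) * 255  # two-tone image for the network
```

With dt = 1, the diffusion coefficient 0.16 stays below the 0.25 stability limit of the explicit five-point scheme.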

4. Discussion and Conclusions

5. Limitations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Channel | Transformation | Dark Color/Event | Light Color/Event |
|---|---|---|---|
| Luminance | Existence | Void | Fill |
| Red | Allometry | One floor | Two floors |
| Green | Subdivision | No subdivision | Catmull-Clark subdivision |
| Blue | Homeobox | Solid | Openings |
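The channel table can be read as a decoding rule from a cell's color to architectural events. A minimal sketch that applies it literally, assuming a hypothetical dark/light threshold of 128 per channel and approximating luminance by the plain channel mean; the actual Data Color Systems may split or weight the channels differently.

```python
from dataclasses import dataclass
from typing import Optional

THRESHOLD = 128  # hypothetical split between "dark" and "light"


@dataclass(frozen=True)
class Cell:
    floors: int       # red, allometry: 1 floor if dark, 2 floors if light
    subdivided: bool  # green: Catmull-Clark subdivision if light
    openings: bool    # blue, homeobox: openings if light, solid if dark


def decode_cell(r: int, g: int, b: int, threshold: int = THRESHOLD) -> Optional[Cell]:
    """Decode one RGB cell; returns None for a void cell (dark luminance)."""
    luminance = (r + g + b) / 3  # hypothetical: plain channel mean
    if luminance < threshold:
        return None  # Existence: dark -> void
    return Cell(
        floors=2 if r >= threshold else 1,
        subdivided=g >= threshold,
        openings=b >= threshold,
    )


print(decode_cell(255, 255, 255))  # Cell(floors=2, subdivided=True, openings=True)
print(decode_cell(200, 50, 200))   # Cell(floors=2, subdivided=False, openings=True)
print(decode_cell(10, 10, 10))     # None
```

Read this literally, the rule cannot express a filled cell whose three events are all dark (one floor, no subdivision, solid), because that color also has dark luminance; any concrete color system has to resolve that overlap somehow.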

| Levels | Individuals Tested | Dark Colors (Empty Cells) | Light Colors | Total Colors | Percentage of Dark Colors |
|---|---|---|---|---|---|
| 2 | 500 | 411 | 2877 | 3288 | 12.50% |
| 4 | 500 | 998 | 2282 | 3280 | 30.43% |
| 8 | 500 | 1288 | 1996 | 3284 | 39.22% |
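The last two columns of the levels table are derived from the counts: Total Colors = dark + light, and Percentage of Dark Colors = dark / total. A quick check that reproduces them:

```python
def dark_share(dark: int, light: int):
    """Return (total colors, percentage of dark colors) for one row."""
    total = dark + light
    return total, 100 * dark / total


rows = {2: (411, 2877), 4: (998, 2282), 8: (1288, 1996)}  # levels: (dark, light)
for levels, (dark, light) in rows.items():
    total, pct = dark_share(dark, light)
    print(levels, total, f"{pct:.2f}%")
# 2 3288 12.50%
# 4 3280 30.43%
# 8 3284 39.22%
```

The share of dark (empty) cells rises as the number of levels grows.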

| Channel Value | Existence % | Total % | Event % |
|---|---|---|---|
| 255 | 66% Cell creation | 21.8% Light event | 33.3% of events |
| 125 | (as above) | 44.5% Dark event | 66.6% of events |
| 0 | 33% Cell deletion | 33.3% No event | - |

| Test Name | Input Image | Target Image | # Images | # Epochs | # Total Images |
|---|---|---|---|---|---|
| A01 | pattern binary | iso gray | 250 | 50 | 12,500 |
| A02 | pattern binary | iso red | 250 | 50 | 12,500 |
| A03 | pattern binary | iso gray | 500 | 50 | 25,000 |
| A04 | pattern binary | iso gray | 250 | 100 | 25,000 |
| B01 | pattern color | iso color | 250 | 50 | 12,500 |
| B02 | pattern color | iso color | 350 | 70 | 24,500 |
| C01 | pattern iso color | iso color | 250 | 50 | 12,500 |
| C02 | pattern iso color | iso color | 350 | 70 | 24,500 |
| C03 | pattern iso color | iso color | 500 | 100 | 50,000 |
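The # Total Images column is the product of # Images and # Epochs, i.e. how many image presentations each training run performs. A short check:

```python
tests = {  # name: (images, epochs)
    "A01": (250, 50), "A02": (250, 50), "A03": (500, 50), "A04": (250, 100),
    "B01": (250, 50), "B02": (350, 70),
    "C01": (250, 50), "C02": (350, 70), "C03": (500, 100),
}
totals = {name: images * epochs for name, (images, epochs) in tests.items()}
for name, total in totals.items():
    print(name, f"{total:,}")
```

A03 and A04 reach the same 25,000 total by different routes (a larger database versus more epochs), which is what allows the database-size and epoch-count comparisons in group A.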

| | A01 | A02 | A03 | A04 |
|---|---|---|---|---|
| Average | 98.60% | 98.06% | 97.23% | 99.13% |
| Min. value | 97.90% | 96.70% | 93.42% | 98.41% |
| Max. value | 99.10% | 98.67% | 99.33% | 99.45% |

| | B01 | B02 |
|---|---|---|
| Average | 88.91% | 93.46% |
| Min. value | 85.98% | 89.74% |
| Max. value | 94.88% | 97.17% |

| | C01 | C02 | C03 | D01 |
|---|---|---|---|---|
| Average | 96.84% | 98.10% | 99.01% | 88.23% |
| Min. value | 93.20% | 96.47% | 97.80% | 85.13% |
| Max. value | 98.95% | 99.34% | 99.48% | 91.30% |
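The three result tables summarize each test by the average, minimum and maximum percentage over its output images. The metric behind these percentages is not defined in this section, so the sketch below uses a hypothetical pixel-wise score, 100 × (1 − mean absolute difference / 255), purely to show how per-image scores reduce to the Average / Min. value / Max. value rows.

```python
import numpy as np


def similarity_percent(a: np.ndarray, b: np.ndarray) -> float:
    """Hypothetical score: 100 * (1 - mean absolute difference / 255)."""
    if a.shape != b.shape:
        raise ValueError("images must have the same shape")
    diff = np.abs(a.astype(np.float64) - b.astype(np.float64))
    return float(100.0 * (1.0 - diff.mean() / 255.0))


def summarize(scores) -> dict:
    """Reduce per-image scores to the rows used in the result tables."""
    s = np.asarray(scores, dtype=np.float64)
    return {"Average": s.mean(), "Min. value": s.min(), "Max. value": s.max()}


black = np.zeros((250, 250, 3), dtype=np.uint8)
white = np.full((250, 250, 3), 255, dtype=np.uint8)
print(similarity_percent(black, black), similarity_percent(black, white))  # 100.0 0.0
```

The inputs are cast to float before subtracting because subtracting uint8 arrays directly would wrap around instead of going negative.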

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Navarro-Mateu, D.; Carrasco, O.; Cortes Nieves, P. Color-Patterns to Architecture Conversion through Conditional Generative Adversarial Networks. Biomimetics 2021, 6, 16. https://doi.org/10.3390/biomimetics6010016
