A Review of Myoelectric Control for Prosthetic Hand Manipulation

Abstract

1. Introduction

- The switch control strategy is a simple technique that uses rectified and smoothed sEMG signals together with predefined thresholds to achieve single-degree-of-freedom control of a prosthetic hand, such as grasping or wrist rotation. It maps sEMG amplitude to the activation of a prosthetic hand movement: when the amplitude exceeds a manually preset threshold, the prosthetic hand executes the action at a constant speed or force;

- The proportional control strategy produces variable-speed or variable-force movements of the prosthetic hand in proportion to the user’s input signal. It maps sEMG amplitude to the degree of movement of the prosthetic hand, which can be described by force, speed, position, or another mechanical output;

- Pattern recognition is a method based on feature engineering and classification techniques and is currently a research hotspot in myoelectric control. The pattern recognition control strategy rests on the premise that repetitions of the same action pattern reproduce similar sEMG signal features. These features can serve as the basis for distinguishing different action patterns, which makes it possible to recognize more action patterns than there are input channels. The pattern recognition control strategy simplifies the representation of hand movements, effectively reduces the difficulty of the task, and significantly improves the accuracy of motion intent recognition over traditional approaches;

- The simultaneous and proportional control strategy aims to capture the entire process by which the user executes hand movements, including the different completion stages of a single action and the transition stages between different actions, which is a more complex and dynamic process. Compared with the strategies above, the multi-degree-of-freedom simultaneous and proportional control strategy does not rely on preset action patterns; instead, it uses regression methods to estimate the hand state at each moment in real time, such as joint angles, positions, or torques. This allows users to control the myoelectric prosthetic hand more intuitively and naturally, and it has made the strategy a new research hotspot in myoelectric control in recent years.
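
The difference between the first two strategies can be illustrated with a minimal sketch, assuming a single sEMG channel and a moving-average envelope. The function names, window length, threshold, and saturation level below are illustrative assumptions rather than values taken from the reviewed studies, and the input is synthetic noise rather than recorded sEMG.

```python
import numpy as np


def semg_envelope(raw, window=50):
    """Rectify a raw sEMG trace and smooth it with a moving average."""
    rectified = np.abs(raw - np.mean(raw))   # remove offset, full-wave rectify
    kernel = np.ones(window) / window        # moving-average kernel (assumed length)
    return np.convolve(rectified, kernel, mode="same")


def switch_control(envelope, threshold, speed=1.0):
    """Switch control: constant-speed command while the envelope exceeds the threshold."""
    return np.where(envelope > threshold, speed, 0.0)


def proportional_control(envelope, threshold, saturation, max_speed=1.0):
    """Proportional control: command grows linearly between threshold and saturation."""
    scaled = (envelope - threshold) / (saturation - threshold)
    return max_speed * np.clip(scaled, 0.0, 1.0)


# Synthetic stand-in for sEMG: rest, weak contraction, strong contraction.
rng = np.random.default_rng(0)
raw = np.concatenate([
    0.02 * rng.standard_normal(1000),  # rest
    0.30 * rng.standard_normal(1000),  # weak contraction
    1.00 * rng.standard_normal(1000),  # strong contraction
])

env = semg_envelope(raw)
on_off = switch_control(env, threshold=0.1)
graded = proportional_control(env, threshold=0.1, saturation=0.8)
```

With these assumed settings, `on_off` issues the same command for the weak and the strong contraction, whereas `graded` yields a larger command for the strong contraction. Pattern recognition and simultaneous proportional control replace the single threshold with a classifier or a regressor operating on multi-channel features.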

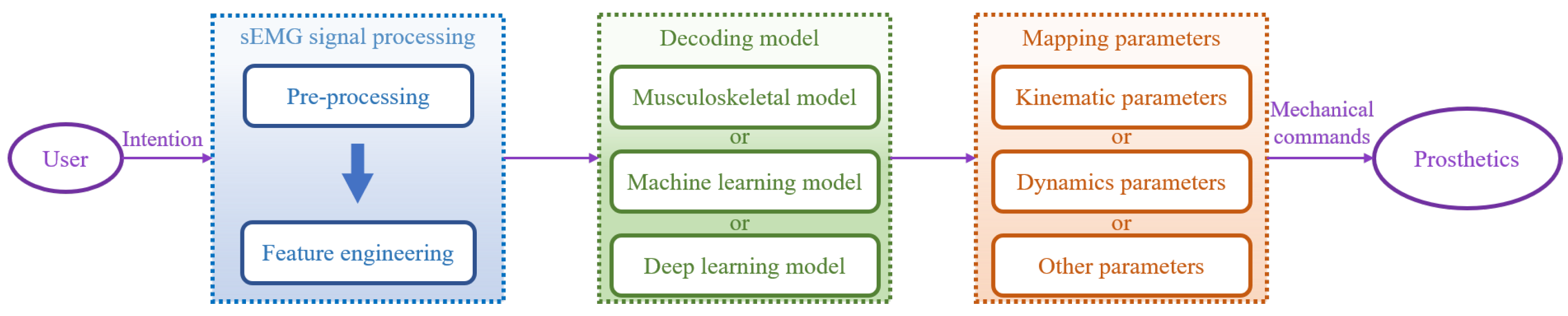

2. Basic Concepts of Myoelectric Control

2.1. sEMG Signal Processing

2.1.1. Pre-Processing

2.1.2. Feature Engineering

2.2. Decoding Model

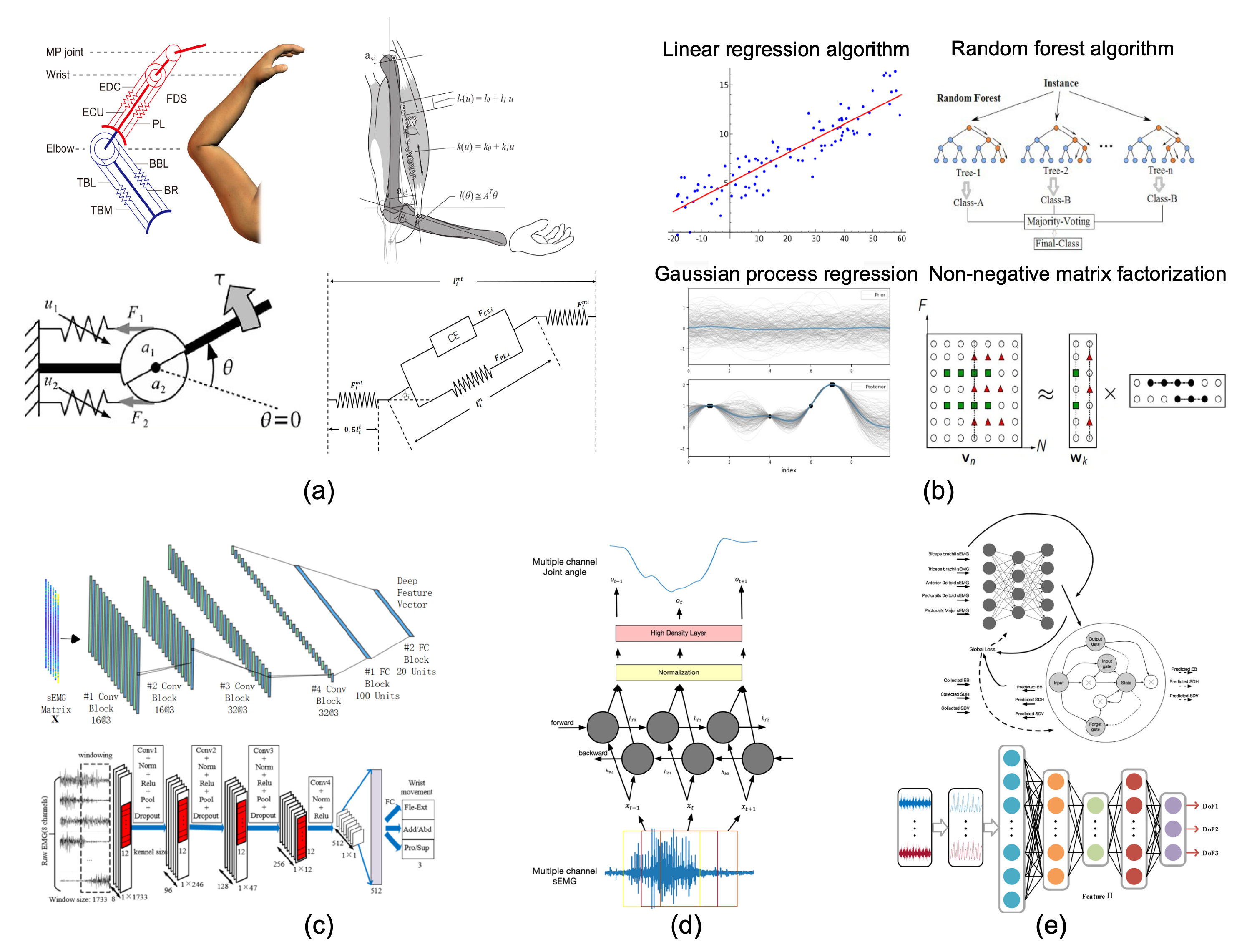

2.2.1. Musculoskeletal Models

2.2.2. Traditional Machine Learning Models

2.2.3. Deep Learning Models

2.3. Mapping Parameters

2.3.1. Kinematic Parameters

2.3.2. Dynamics Parameters

2.3.3. Other Parameters

3. Current Research Status

3.1. Advances in Intention Recognition Research

3.1.1. Type of Motion Intention

3.1.2. Discrete Motion Classification

3.1.3. Continuous Motion Estimation

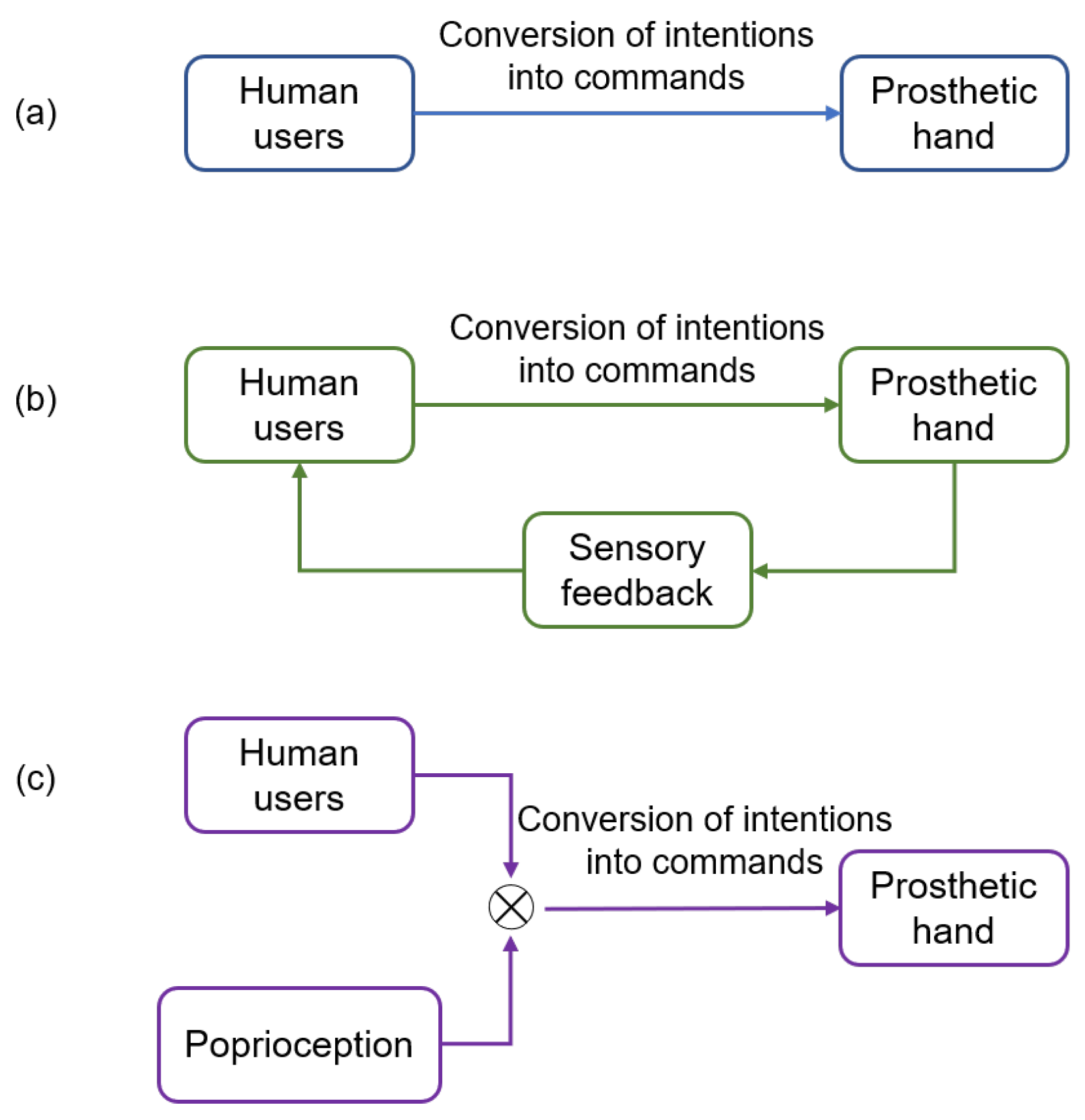

3.2. Advances in Control Strategy Research

3.2.1. Unidirectional Control

3.2.2. Feedback Control

3.2.3. Shared Control

4. Challenges and Opportunities

- Although progress has been made in decoding motion intentions for a variety of basic hand movements, decoding of functional motion intentions that facilitate prosthetic hand manipulation is still lacking, so current prosthetic hands can perform only simple grasping tasks;

- Existing myoelectric control research focuses primarily on basic human hand grasping functions (see Table 2), whereas complex daily manipulation tasks that demand continuous manipulation and dynamic grasping force adjustment require further investigation;

- Prioritizing recognition and generalization capabilities while neglecting the high abandonment rate and the subjective user experience of prosthetic hands is a flawed approach. During both myoelectric training and control, users must devote considerable attention and effort.

4.1. Functionality-Augmented Prosthetic Hands

- The activation method of functionality-augmented technology must be intuitive and natural. If it requires complex pre-actions from the user, it will significantly increase the user’s cognitive and control burden, for example by demanding extensive long-term training. A multimodal human–machine interface for prosthetic hands may be an effective solution to this challenge [120,121]. It uses sEMG as the primary signal source and other biological signals from the hand as auxiliary sources to achieve natural and implicit control of functionality-augmented technology;

- The hardware that provides functionality-augmented technology needs to be highly integrated so that the overall volume and weight of the prosthetic hand remain within an acceptable range for the user;

- Hand function augmentation may alter the user’s representation of the biological hand, which is also a problem that functionality-augmented prosthetic hands need to address. Functionality-augmented technology should not impair the user’s ability to control basic hand functions; it should enhance the user’s own motion control capability rather than introduce a confusing adverse effect.

4.2. User Burden Reduction

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Xia, M.; Chen, C.; Sheng, X.; Zhu, X. On Detecting the Invariant Neural Drive to Muscles during Repeated Hand Motions: A Preliminary Study. In Proceedings of the 2021 27th International Conference on Mechatronics and Machine Vision in Practice (M2VIP), Shanghai, China, 26–28 November 2021; pp. 192–196.

- De Oliveira, D.S.; Casolo, A.; Balshaw, T.G.; Maeo, S.; Lanza, M.B.; Martin, N.R.; Maffulli, N.; Kinfe, T.M.; Eskofier, B.M.; Folland, J.P.; et al. Neural decoding from surface high-density EMG signals: Influence of anatomy and synchronization on the number of identified motor units. J. Neural Eng. 2022, 19, 046029.

- Farina, D.; Jiang, N.; Rehbaum, H.; Holobar, A.; Graimann, B.; Dietl, H.; Aszmann, O.C. The extraction of neural information from the surface EMG for the control of upper-limb prostheses: Emerging avenues and challenges. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 797–809.

- Zi-You, L.; Xin-Gang, Z.; Bi, Z.; Qi-Chuan, D.; Dao-Hui, Z.; Jian-Da, H. Review of sEMG-based motion intent recognition methods in non-ideal conditions. Acta Autom. Sin. 2021, 47, 955–969.

- Konrad, P. The ABC of EMG: A Practical Introduction to Kinesiological Electromyography; Noraxon Inc.: Scottsdale, AZ, USA, 2005.

- Englehart, K.; Hudgins, B. A robust, real-time control scheme for multifunction myoelectric control. IEEE Trans. Biomed. Eng. 2003, 50, 848–854.

- Sartori, M.; Durandau, G.; Došen, S.; Farina, D. Robust simultaneous myoelectric control of multiple degrees of freedom in wrist-hand prostheses by real-time neuromusculoskeletal modeling. J. Neural Eng. 2018, 15, 066026.

- Bi, L.; Guan, C. A review on EMG-based motor intention prediction of continuous human upper limb motion for human-robot collaboration. Biomed. Signal Process. Control 2019, 51, 113–127.

- Xiong, D.; Zhang, D.; Zhao, X.; Zhao, Y. Deep learning for EMG-based human-machine interaction: A review. IEEE/CAA J. Autom. Sin. 2021, 8, 512–533.

- Mohebbian, M.R.; Nosouhi, M.; Fazilati, F.; Esfahani, Z.N.; Amiri, G.; Malekifar, N.; Yusefi, F.; Rastegari, M.; Marateb, H.R. A Comprehensive Review of Myoelectric Prosthesis Control. arXiv 2021, arXiv:2112.13192.

- Cordella, F.; Ciancio, A.L.; Sacchetti, R.; Davalli, A.; Cutti, A.G.; Guglielmelli, E.; Zollo, L. Literature review on needs of upper limb prosthesis users. Front. Neurosci. 2016, 10, 209.

- Zhang, Q.; Pi, T.; Liu, R.; Xiong, C. Simultaneous and proportional estimation of multijoint kinematics from EMG signals for myocontrol of robotic hands. IEEE/ASME Trans. Mech. 2020, 25, 1953–1960.

- Dantas, H.; Warren, D.J.; Wendelken, S.M.; Davis, T.S.; Clark, G.A.; Mathews, V.J. Deep learning movement intent decoders trained with dataset aggregation for prosthetic limb control. IEEE Trans. Biomed. Eng. 2019, 66, 3192–3203.

- Lukyanenko, P.; Dewald, H.A.; Lambrecht, J.; Kirsch, R.F.; Tyler, D.J.; Williams, M.R. Stable, simultaneous and proportional 4-DoF prosthetic hand control via synergy-inspired linear interpolation: A case series. J. Neuroeng. Rehabil. 2021, 18, 50.

- Lin, C.; Wang, B.; Jiang, N.; Farina, D. Robust extraction of basis functions for simultaneous and proportional myoelectric control via sparse non-negative matrix factorization. J. Neural Eng. 2018, 15, 026017.

- Hu, X.; Zeng, H.; Chen, D.; Zhu, J.; Song, A. Real-time continuous hand motion myoelectric decoding by automated data labeling. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 6951–6957.

- Guo, W.; Ma, C.; Wang, Z.; Zhang, H.; Farina, D.; Jiang, N.; Lin, C. Long exposure convolutional memory network for accurate estimation of finger kinematics from surface electromyographic signals. J. Neural Eng. 2021, 18, 026027.

- Dwivedi, A.; Kwon, Y.; McDaid, A.J.; Liarokapis, M. A learning scheme for EMG based decoding of dexterous, in-hand manipulation motions. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2205–2215.

- Ma, C.; Guo, W.; Zhang, H.; Samuel, O.W.; Ji, X.; Xu, L.; Li, G. A novel and efficient feature extraction method for deep learning based continuous estimation. IEEE Robot. Autom. Lett. 2021, 6, 7341–7348.

- Piazza, C.; Rossi, M.; Catalano, M.G.; Bicchi, A.; Hargrove, L.J. Evaluation of a simultaneous myoelectric control strategy for a multi-DoF transradial prosthesis. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2286–2295.

- Winters, J.M. Hill-based muscle models: A systems engineering perspective. In Multiple Muscle Systems: Biomechanics and Movement Organization; Springer: Berlin/Heidelberg, Germany, 1990; pp. 69–93.

- Pan, L.; Crouch, D.L.; Huang, H. Myoelectric control based on a generic musculoskeletal model: Toward a multi-user neural-machine interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1435–1442.

- Shin, D.; Kim, J.; Koike, Y. A myokinetic arm model for estimating joint torque and stiffness from EMG signals during maintained posture. J. Neurophysiol. 2009, 101, 387–401.

- Stapornchaisit, S.; Kim, Y.; Takagi, A.; Yoshimura, N.; Koike, Y. Finger angle estimation from array EMG system using linear regression model with independent component analysis. Front. Neurorobot. 2019, 13, 75.

- Crouch, D.L.; Huang, H. Lumped-parameter electromyogram-driven musculoskeletal hand model: A potential platform for real-time prosthesis control. J. Biomech. 2016, 49, 3901–3907.

- Zhao, J.; Yu, Y.; Wang, X.; Ma, S.; Sheng, X.; Zhu, X. A musculoskeletal model driven by muscle synergy-derived excitations for hand and wrist movements. J. Neural Eng. 2022, 19, 016027.

- Ngeo, J.G.; Tamei, T.; Shibata, T. Continuous and simultaneous estimation of finger kinematics using inputs from an EMG-to-muscle activation model. J. Neuroeng. Rehabil. 2014, 11, 122.

- Xiloyannis, M.; Gavriel, C.; Thomik, A.A.; Faisal, A.A. Gaussian process autoregression for simultaneous proportional multi-modal prosthetic control with natural hand kinematics. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1785–1801.

- Jiang, N.; Englehart, K.B.; Parker, P.A. Extracting simultaneous and proportional neural control information for multiple-DOF prostheses from the surface electromyographic signal. IEEE Trans. Biomed. Eng. 2008, 56, 1070–1080.

- Ison, M.; Vujaklija, I.; Whitsell, B.; Farina, D.; Artemiadis, P. Simultaneous myoelectric control of a robot arm using muscle synergy-inspired inputs from high-density electrode grids. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6469–6474.

- Kapelner, T.; Vujaklija, I.; Jiang, N.; Negro, F.; Aszmann, O.C.; Principe, J.; Farina, D. Predicting wrist kinematics from motor unit discharge timings for the control of active prostheses. J. Neuroeng. Rehabil. 2019, 16, 47.

- Hahne, J.M.; Schweisfurth, M.A.; Koppe, M.; Farina, D. Simultaneous control of multiple functions of bionic hand prostheses: Performance and robustness in end users. Sci. Robot. 2018, 3, eaat3630.

- Yang, W.; Yang, D.; Liu, Y.; Liu, H. Decoding simultaneous multi-DOF wrist movements from raw EMG signals using a convolutional neural network. IEEE Trans. Hum. Mach. Syst. 2019, 49, 411–420.

- Ameri, A.; Akhaee, M.A.; Scheme, E.; Englehart, K. Regression convolutional neural network for improved simultaneous EMG control. J. Neural Eng. 2019, 16, 036015.

- Yu, Y.; Chen, C.; Sheng, X.; Zhu, X. Wrist torque estimation via electromyographic motor unit decomposition and image reconstruction. IEEE J. Biomed. Health Inform. 2020, 25, 2557–2566.

- Qin, Z.; Stapornchaisit, S.; He, Z.; Yoshimura, N.; Koike, Y. Multi–Joint Angles Estimation of Forearm Motion Using a Regression Model. Front. Neurorobot. 2021, 15, 685961.

- Ma, C.; Lin, C.; Samuel, O.W.; Guo, W.; Zhang, H.; Greenwald, S.; Xu, L.; Li, G. A bi-directional LSTM network for estimating continuous upper limb movement from surface electromyography. IEEE Robot. Autom. Lett. 2021, 6, 7217–7224.

- Hu, X.; Zeng, H.; Song, A.; Chen, D. Robust continuous hand motion recognition using wearable array myoelectric sensor. IEEE Sens. J. 2021, 21, 20596–20605.

- Salatiello, A.; Giese, M.A. Continuous Decoding of Daily-Life Hand Movements from Forearm Muscle Activity for Enhanced Myoelectric Control of Hand Prostheses. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Bao, T.; Zaidi, S.A.R.; Xie, S.; Yang, P.; Zhang, Z.Q. A CNN-LSTM hybrid model for wrist kinematics estimation using surface electromyography. IEEE Trans. Instrum. Meas. 2020, 70, 2503809.

- Ma, C.; Lin, C.; Samuel, O.W.; Xu, L.; Li, G. Continuous estimation of upper limb joint angle from sEMG signals based on SCA-LSTM deep learning approach. Biomed. Signal Process. Control 2020, 61, 102024.

- Bao, T.; Zhao, Y.; Zaidi, S.A.R.; Xie, S.; Yang, P.; Zhang, Z. A deep Kalman filter network for hand kinematics estimation using sEMG. Pattern Recognit. Lett. 2021, 143, 88–94.

- Chen, C.; Guo, W.; Ma, C.; Yang, Y.; Wang, Z.; Lin, C. sEMG-based continuous estimation of finger kinematics via large-scale temporal convolutional network. Appl. Sci. 2021, 11, 4678.

- Raj, R.; Rejith, R.; Sivanandan, K. Real time identification of human forearm kinematics from surface EMG signal using artificial neural network models. Procedia Technol. 2016, 25, 44–51.

- Nasr, A.; Bell, S.; He, J.; Whittaker, R.L.; Jiang, N.; Dickerson, C.R.; McPhee, J. MuscleNET: Mapping electromyography to kinematic and dynamic biomechanical variables by machine learning. J. Neural Eng. 2021, 18, 0460d3.

- Yu, Y.; Chen, C.; Zhao, J.; Sheng, X.; Zhu, X. Surface electromyography image-driven torque estimation of multi-DoF wrist movements. IEEE Trans. Ind. Electron. 2021, 69, 795–804.

- Kim, D.; Koh, K.; Oppizzi, G.; Baghi, R.; Lo, L.C.; Zhang, C.; Zhang, L.Q. Simultaneous estimations of joint angle and torque in interactions with environments using EMG. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3818–3824.

- Chen, C.; Yu, Y.; Sheng, X.; Zhu, X. Non-invasive analysis of motor unit activation during simultaneous and continuous wrist movements. IEEE J. Biomed. Health Inform. 2021, 26, 2106–2115.

- Yang, D.; Liu, H. An EMG-based deep learning approach for multi-DOF wrist movement decoding. IEEE Trans. Ind. Electron. 2021, 69, 7099–7108.

- Yang, W.; Yang, D.; Liu, Y.; Liu, H. A 3-DOF hemi-constrained wrist motion/force detection device for deploying simultaneous myoelectric control. Med. Biol. Eng. Comput. 2018, 56, 1669–1681.

- Dwivedi, A.; Lara, J.; Cheng, L.K.; Paskaranandavadivel, N.; Liarokapis, M. High-density electromyography based control of robotic devices: On the execution of dexterous manipulation tasks. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3825–3831.

- Chen, C.; Yu, Y.; Sheng, X.; Farina, D.; Zhu, X. Simultaneous and proportional control of wrist and hand movements by decoding motor unit discharges in real time. J. Neural Eng. 2021, 18, 056010.

- Hermens, H.J.; Freriks, B.; Disselhorst-Klug, C.; Rau, G. Development of recommendations for SEMG sensors and sensor placement procedures. J. Electromyogr. Kinesiol. 2000, 10, 361–374.

- Drake, J.D.; Callaghan, J.P. Elimination of electrocardiogram contamination from electromyogram signals: An evaluation of currently used removal techniques. J. Electromyogr. Kinesiol. 2006, 16, 175–187.

- Reaz, M.B.I.; Hussain, M.S.; Mohd-Yasin, F. Techniques of EMG signal analysis: Detection, processing, classification and applications. Biol. Proced. Online 2006, 8, 11–35.

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

- Oskoei, M.A.; Hu, H. Myoelectric control systems—A survey. Biomed. Signal Process. Control 2007, 2, 275–294.

- Turner, A.; Shieff, D.; Dwivedi, A.; Liarokapis, M. Comparing machine learning methods and feature extraction techniques for the emg based decoding of human intention. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Guadalajara, Mexico, 1–5 November 2021; pp. 4738–4743.

- Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Feature reduction and selection for EMG signal classification. Expert Syst. Appl. 2012, 39, 7420–7431.

- He, Z.; Qin, Z.; Koike, Y. Continuous estimation of finger and wrist joint angles using a muscle synergy based musculoskeletal model. Appl. Sci. 2022, 12, 3772.

- Zhao, Y.; Zhang, Z.; Li, Z.; Yang, Z.; Dehghani-Sanij, A.A.; Xie, S. An EMG-driven musculoskeletal model for estimating continuous wrist motion. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 3113–3120.

- Kawase, T.; Sakurada, T.; Koike, Y.; Kansaku, K. A hybrid BMI-based exoskeleton for paresis: EMG control for assisting arm movements. J. Neural Eng. 2017, 14, 016015.

- Buchanan, T.S.; Lloyd, D.G.; Manal, K.; Besier, T.F. Neuromusculoskeletal modeling: Estimation of muscle forces and joint moments and movements from measurements of neural command. J. Appl. Biomech. 2004, 20, 367–395.

- Inkol, K.A.; Brown, C.; McNally, W.; Jansen, C.; McPhee, J. Muscle torque generators in multibody dynamic simulations of optimal sports performance. Multibody Syst. Dyn. 2020, 50, 435–452.

- Pan, L.; Crouch, D.L.; Huang, H. Comparing EMG-based human-machine interfaces for estimating continuous, coordinated movements. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2145–2154.

- Ahsan, M.R.; Ibrahimy, M.I.; Khalifa, O.O. EMG signal classification for human computer interaction: A review. Eur. J. Sci. Res. 2009, 33, 480–501.

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Jarque-Bou, N.J.; Sancho-Bru, J.L.; Vergara, M. A systematic review of emg applications for the characterization of forearm and hand muscle activity during activities of daily living: Results, challenges, and open issues. Sensors 2021, 21, 3035.

- Sartori, M.; Lloyd, D.G.; Farina, D. Neural data-driven musculoskeletal modeling for personalized neurorehabilitation technologies. IEEE Trans. Biomed. Eng. 2016, 63, 879–893.

- Todorov, E.; Ghahramani, Z. Analysis of the synergies underlying complex hand manipulation. In IEEE Engineering in Medicine and Biology Magazine; IEEE: New York, NY, USA, 2004; Volume 2, pp. 4637–4640.

- Suzuki, M.; Shiller, D.M.; Gribble, P.L.; Ostry, D.J. Relationship between cocontraction, movement kinematics and phasic muscle activity in single-joint arm movement. Exp. Brain Res. 2001, 140, 171–181.

- Jiang, N.; Vujaklija, I.; Rehbaum, H.; Graimann, B.; Farina, D. Is accurate mapping of EMG signals on kinematics needed for precise online myoelectric control? IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 22, 549–558.

- Atzori, M.; Gijsberts, A.; Castellini, C.; Caputo, B.; Hager, A.G.M.; Elsig, S.; Giatsidis, G.; Bassetto, F.; Müller, H. Electromyography data for non-invasive naturally-controlled robotic hand prostheses. Sci. Data 2014, 1, 140053.

- Simão, M.; Mendes, N.; Gibaru, O.; Neto, P. A review on electromyography decoding and pattern recognition for human-machine interaction. IEEE Access 2019, 7, 39564–39582.

- Asghar, A.; Jawaid Khan, S.; Azim, F.; Shakeel, C.S.; Hussain, A.; Niazi, I.K. Review on electromyography based intention for upper limb control using pattern recognition for human-machine interaction. J. Eng. Med. 2022, 236, 628–645.

- Ghaderi, P.; Nosouhi, M.; Jordanic, M.; Marateb, H.R.; Mañanas, M.A.; Farina, D. Kernel density estimation of electromyographic signals and ensemble learning for highly accurate classification of a large set of hand/wrist motions. Front. Neurosci. 2022, 16, 796711.

- Pizzolato, S.; Tagliapietra, L.; Cognolato, M.; Reggiani, M.; Müller, H.; Atzori, M. Comparison of six electromyography acquisition setups on hand movement classification tasks. PLoS ONE 2017, 12, e0186132.

- Sri-Iesaranusorn, P.; Chaiyaroj, A.; Buekban, C.; Dumnin, S.; Pongthornseri, R.; Thanawattano, C.; Surangsrirat, D. Classification of 41 hand and wrist movements via surface electromyogram using deep neural network. Front. Bioeng. Biotechnol. 2021, 9, 548357.

- Zhai, X.; Jelfs, B.; Chan, R.H.; Tin, C. Self-recalibrating surface EMG pattern recognition for neuroprosthesis control based on convolutional neural network. Front. Neurosci. 2017, 11, 379.

- Ghazaei, G.; Alameer, A.; Degenaar, P.; Morgan, G.; Nazarpour, K. Deep learning-based artificial vision for grasp classification in myoelectric hands. J. Neural Eng. 2017, 14, 036025.

- Dai, C.; Hu, X. Finger joint angle estimation based on motoneuron discharge activities. IEEE J. Biomed. Health Inform. 2019, 24, 760–767.

- Ding, Q.; Xiong, A.; Zhao, X.; Han, J. A review on researches and applications of sEMG-based motion intent recognition methods. Acta Autom. Sin. 2016, 42, 13–25.

- Luchetti, M.; Cutti, A.G.; Verni, G.; Sacchetti, R.; Rossi, N. Impact of Michelangelo prosthetic hand: Findings from a crossover longitudinal study. J. Rehabil. Res. Dev. 2015, 52, 605–618.

- Van Der Niet Otr, O.; Reinders-Messelink, H.A.; Bongers, R.M.; Bouwsema, H.; Van Der Sluis, C.K. The i-LIMB hand and the DMC plus hand compared: A case report. Prosthetics Orthot. Int. 2010, 34, 216–220.

- Belter, J.T.; Segil, J.L.; SM, B. Mechanical design and performance specifications of anthropomorphic prosthetic hands: A review. J. Rehabil. Res. Dev. 2013, 50, 599.

- Di Domenico, D.; Marinelli, A.; Boccardo, N.; Semprini, M.; Lombardi, L.; Canepa, M.; Stedman, S.; Bellingegni, A.D.; Chiappalone, M.; Gruppioni, E.; et al. Hannes prosthesis control based on regression machine learning algorithms. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 5997–6002.

- Sensinger, J.W.; Dosen, S. A review of sensory feedback in upper-limb prostheses from the perspective of human motor control. Front. Neurosci. 2020, 14, 345.

- Kim, K. A review of haptic feedback through peripheral nerve stimulation for upper extremity prosthetics. Curr. Opin. Biomed. Eng. 2022, 21, 100368.

- Stephens-Fripp, B.; Alici, G.; Mutlu, R. A review of non-invasive sensory feedback methods for transradial prosthetic hands. IEEE Access 2018, 6, 6878–6899.

- Xu, H.; Chai, G.; Zhang, N.; Gu, G. Restoring finger-specific tactile sensations with a sensory soft neuroprosthetic hand through electrotactile stimulation. Soft Sci. 2022, 2, 19.

- Shehata, A.W.; Scheme, E.J.; Sensinger, J.W. Audible feedback improves internal model strength and performance of myoelectric prosthesis control. Sci. Rep. 2018, 8, 8541.

- Li, K.; Zhou, Y.; Zhou, D.; Zeng, J.; Fang, Y.; Yang, J.; Liu, H. Electrotactile Feedback-Based Muscle Fatigue Alleviation for Hand Manipulation. Int. J. Humanoid Robot. 2021, 18, 2050024.

- Cha, H.; An, S.; Choi, S.; Yang, S.; Park, S.; Park, S. Study on Intention Recognition and Sensory Feedback: Control of Robotic Prosthetic Hand Through EMG Classification and Proprioceptive Feedback Using Rule-based Haptic Device. IEEE Trans. Haptics 2022, 15, 560–571.

- Dwivedi, A.; Shieff, D.; Turner, A.; Gorjup, G.; Kwon, Y.; Liarokapis, M. A shared control framework for robotic telemanipulation combining electromyography based motion estimation and compliance control. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 9467–9473.

- Fang, B.; Ma, X.; Wang, J.; Sun, F. Vision-based posture-consistent teleoperation of robotic arm using multi-stage deep neural network. Robot. Auton. Syst. 2020, 131, 103592.

- Fang, B.; Ding, W.; Sun, F.; Shan, J.; Wang, X.; Wang, C.; Zhang, X. Brain-computer interface integrated with augmented reality for human-robot interaction. IEEE Trans. Cogn. Dev. Syst. 2022, 1.

- Gillini, G.; Di Lillo, P.; Arrichiello, F. An assistive shared control architecture for a robotic arm using eeg-based bci with motor imagery. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4132–4137.

- Abbink, D.A.; Carlson, T.; Mulder, M.; De Winter, J.C.; Aminravan, F.; Gibo, T.L.; Boer, E.R. A topology of shared control systems—Finding common ground in diversity. IEEE Trans. Hum. Mach. Syst. 2018, 48, 509–525.

- Zhou, T.; Wachs, J.P. Early prediction for physical human robot collaboration in the operating room. Auton. Robot. 2018, 42, 977–995.

- Li, G.; Li, Q.; Yang, C.; Su, Y.; Yuan, Z.; Wu, X. The Classification and New Trends of Shared Control Strategies in Telerobotic Systems: A Survey. IEEE Trans. Haptics 2023, 16, 118–133.

- Mouchoux, J.; Carisi, S.; Dosen, S.; Farina, D.; Schilling, A.F.; Markovic, M. Artificial perception and semiautonomous control in myoelectric hand prostheses increases performance and decreases effort. IEEE Trans. Robot. 2021, 37, 1298–1312.

- Castro, M.N.; Dosen, S. Continuous Semi-autonomous Prosthesis Control Using a Depth Sensor on the Hand. Front. Neurorobot. 2022, 16, 814973.

- Starke, J.; Weiner, P.; Crell, M.; Asfour, T. Semi-autonomous control of prosthetic hands based on multimodal sensing, human grasp demonstration and user intention. Robot. Auton. Syst. 2022, 154, 104123.

- Vasile, F.; Maiettini, E.; Pasquale, G.; Florio, A.; Boccardo, N.; Natale, L. Grasp Pre-shape Selection by Synthetic Training: Eye-in-hand Shared Control on the Hannes Prosthesis. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 13112–13119.

- Cipriani, C.; Zaccone, F.; Micera, S.; Carrozza, M.C. On the shared control of an EMG-controlled prosthetic hand: Analysis of user–prosthesis interaction. IEEE Trans. Robot. 2008, 24, 170–184.

- Zhuang, K.Z.; Sommer, N.; Mendez, V.; Aryan, S.; Formento, E.; D’Anna, E.; Artoni, F.; Petrini, F.; Granata, G.; Cannaviello, G.; et al. Shared human–robot proportional control of a dexterous myoelectric prosthesis. Nat. Mach. Intell. 2019, 1, 400–411.

- Seppich, N.; Tacca, N.; Chao, K.Y.; Akim, M.; Hidalgo-Carvajal, D.; Pozo Fortunić, E.; Tödtheide, A.; Kühn, J.; Haddadin, S. CyberLimb: A novel robotic prosthesis concept with shared and intuitive control. J. Neuroeng. Rehabil. 2022, 19, 41.

- Mouchoux, J.; Bravo-Cabrera, M.A.; Dosen, S.; Schilling, A.F.; Markovic, M. Impact of shared control modalities on performance and usability of semi-autonomous prostheses. Front. Neurorobot. 2021, 15, 172.

- Furui, A.; Eto, S.; Nakagaki, K.; Shimada, K.; Nakamura, G.; Masuda, A.; Chin, T.; Tsuji, T. A myoelectric prosthetic hand with muscle synergy–based motion determination and impedance model–based biomimetic control. Sci. Robot. 2019, 4, eaaw6339.

- Wang, Y.; Tian, Y.; She, H.; Jiang, Y.; Yokoi, H.; Liu, Y. Design of an effective prosthetic hand system for adaptive grasping with the control of myoelectric pattern recognition approach. Micromachines 2022, 13, 219.

- Shi, C.; Yang, D.; Zhao, J.; Jiang, L. i-MYO: A Hybrid Prosthetic Hand Control System based on Eye-tracking, Augmented Reality and Myoelectric signal. arXiv 2022, arXiv:2205.08948. [Google Scholar]

- Luo, Q.; Niu, C.M.; Chou, C.H.; Liang, W.; Deng, X.; Hao, M.; Lan, N. Biorealistic control of hand prosthesis augments functional performance of individuals with amputation. Front. Neurosci. 2021, 15, 1668. [Google Scholar] [CrossRef]

- Volkmar, R.; Dosen, S.; Gonzalez-Vargas, J.; Baum, M.; Markovic, M. Improving bimanual interaction with a prosthesis using semi-autonomous control. J. Neuroeng. Rehabil. 2019, 16, 140. [Google Scholar] [CrossRef]

- Kieliba, P.; Clode, D.; Maimon-Mor, R.O.; Makin, T.R. Robotic hand augmentation drives changes in neural body representation. Sci. Robot. 2021, 6, eabd7935.

- Frey, S.T.; Haque, A.T.; Tutika, R.; Krotz, E.V.; Lee, C.; Haverkamp, C.B.; Markvicka, E.J.; Bartlett, M.D. Octopus-inspired adhesive skins for intelligent and rapidly switchable underwater adhesion. Sci. Adv. 2022, 8, eabq1905.

- Chang, M.H.; Kim, D.H.; Kim, S.H.; Lee, Y.; Cho, S.; Park, H.S.; Cho, K.J. Anthropomorphic prosthetic hand inspired by efficient swing mechanics for sports activities. IEEE/ASME Trans. Mech. 2021, 27, 1196–1207.

- Lee, J.; Kim, J.; Park, S.; Hwang, D.; Yang, S. Soft robotic palm with tunable stiffness using dual-layered particle jamming mechanism. IEEE/ASME Trans. Mech. 2021, 26, 1820–1827.

- Heo, S.H.; Kim, C.; Kim, T.S.; Park, H.S. Human-palm-inspired artificial skin material enhances operational functionality of hand manipulation. Adv. Funct. Mater. 2020, 30, 2002360.

- Zhou, H.; Alici, G. Non-invasive human-machine interface (HMI) systems with hybrid on-body sensors for controlling upper-limb prosthesis: A review. IEEE Sens. J. 2022, 22, 10292–10307.

- Xue, Y.; Ju, Z.; Xiang, K.; Chen, J.; Liu, H. Multiple sensors based hand motion recognition using adaptive directed acyclic graph. Appl. Sci. 2017, 7, 358.

- Biddiss, E.; Beaton, D.; Chau, T. Consumer design priorities for upper limb prosthetics. Disabil. Rehabil. Assist. Technol. 2007, 2, 346–357.

- Jang, C.H.; Yang, H.S.; Yang, H.E.; Lee, S.Y.; Kwon, J.W.; Yun, B.D.; Choi, J.Y.; Kim, S.N.; Jeong, H.W. A survey on activities of daily living and occupations of upper extremity amputees. Ann. Rehabil. Med. 2011, 35, 907–921.

- Prahm, C.; Schulz, A.; Paaßen, B.; Schoisswohl, J.; Kaniusas, E.; Dorffner, G.; Hammer, B.; Aszmann, O. Counteracting electrode shifts in upper-limb prosthesis control via transfer learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 956–962.

- Côté-Allard, U.; Fall, C.L.; Drouin, A.; Campeau-Lecours, A.; Gosselin, C.; Glette, K.; Laviolette, F.; Gosselin, B. Deep learning for electromyographic hand gesture signal classification using transfer learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 760–771.

- Park, K.H.; Suk, H.I.; Lee, S.W. Position-independent decoding of movement intention for proportional myoelectric interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 24, 928–939.

- Matsubara, T.; Morimoto, J. Bilinear modeling of EMG signals to extract user-independent features for multiuser myoelectric interface. IEEE Trans. Biomed. Eng. 2013, 60, 2205–2213.

- Xiong, A.; Zhao, X.; Han, J.; Liu, G.; Ding, Q. An user-independent gesture recognition method based on sEMG decomposition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 4185–4190.

- Yang, D.; Gu, Y.; Jiang, L.; Osborn, L.; Liu, H. Dynamic training protocol improves the robustness of PR-based myoelectric control. Biomed. Signal Process. Control 2017, 31, 249–256.

- Kristoffersen, M.B.; Franzke, A.W.; Van Der Sluis, C.K.; Bongers, R.M.; Murgia, A. Should hands be restricted when measuring able-bodied participants to evaluate machine learning controlled prosthetic hands? IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1977–1983.

- Engdahl, S.M.; Acuña, S.A.; King, E.L.; Bashatah, A.; Sikdar, S. First demonstration of functional task performance using a sonomyographic prosthesis: A case study. Front. Bioeng. Biotechnol. 2022, 10, 876836.

- Wang, J.; Bi, L.; Fei, W.; Tian, K. EEG-Based Continuous Hand Movement Decoding Using Improved Center-Out Paradigm. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2845–2855.

| Module | Types | Reference |
|---|---|---|
| Feature engineering | Single time-domain feature | MAV [12,13,14], RMS [15,16,17] |
| | Combined time-domain features | RMS+WL+ZC [18], ZOM+SOM+FOM+PS+SE+USTD [19], MAV+WL+ZC+SSC+SOAMC [20] |
| Decoding model | Musculoskeletal model | Hill-type muscle model [7,21,22], Mykin model [23,24], Lumped-parameter model [25,26] |
| | Traditional machine learning model | Gaussian processes [12,27,28], NMF [15,29,30], Linear regression [31,32] |
| | Deep learning model | CNN-based model [33,34,35,36], RNN-based model [37,38,39], Hybrid-structured model [40,41,42] |
| Mapping parameters | Kinematic parameters | Joint angle [12,17,43], Joint angular velocity [28,44], Joint angular acceleration [39,45] |
| | Dynamics parameters | Joint torque [35,46,47,48] |
| | Other parameters | 3D coordinate value [49,50], Movement of the in-hand object [51], Multidimensional arrays [14], Movement activation level [52] |
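
The single and combined time-domain features abbreviated in the table above can be illustrated with a minimal sketch. It assumes the commonly used definitions of MAV (mean absolute value), RMS (root mean square), WL (waveform length), and ZC (zero crossings) computed over one analysis window of a single sEMG channel. The function names `time_domain_features` and `sliding_windows`, the `zc_threshold` parameter, and the demo samples are hypothetical and are not taken from any of the cited works, which may define or normalize these features differently.

```python
import math
from typing import Dict, List, Sequence


def time_domain_features(window: Sequence[float],
                         zc_threshold: float = 0.0) -> Dict[str, float]:
    """Compute MAV, RMS, WL and ZC for one window of a single sEMG channel.

    zc_threshold is an assumed noise guard: a sign change only counts as a
    zero crossing if the two samples differ by more than this amount.
    """
    n = len(window)
    if n < 2:
        raise ValueError("window must contain at least two samples")

    # Mean absolute value: average rectified amplitude.
    mav = sum(abs(x) for x in window) / n
    # Root mean square: square root of the mean signal power.
    rms = math.sqrt(sum(x * x for x in window) / n)
    # Waveform length: cumulative absolute difference between neighbours.
    pairs = list(zip(window, window[1:]))
    wl = sum(abs(b - a) for a, b in pairs)
    # Zero crossings: sign changes whose step exceeds the threshold.
    zc = sum(1 for a, b in pairs if a * b < 0 and abs(a - b) > zc_threshold)

    return {"MAV": mav, "RMS": rms, "WL": wl, "ZC": float(zc)}


def sliding_windows(signal: Sequence[float], size: int,
                    step: int) -> List[Sequence[float]]:
    """Split a signal into overlapping windows of `size` samples every `step`."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


if __name__ == "__main__":
    # Illustrative samples only, not real sEMG data.
    demo = [0.5, -0.5, 0.25, -0.25, 0.0, 0.75, -0.75, 0.1]
    for w in sliding_windows(demo, size=4, step=2):
        print(time_domain_features(w, zc_threshold=0.01))
```

A combined feature such as RMS+WL+ZC would then be the concatenation of the corresponding values per channel and window, which is what a downstream decoding model receives as input.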

| Manipulation Scenarios | Tasks | Reference |
|---|---|---|
| | Grasp test | [81,110,111,112] |
| | Box-and-blocks test | [32,107,113] |
| Simple manipulation tasks | Relocation test | [32,102,103] |
| | Pouring or drinking | [20,49,107] |
| | Screwing test | [49,108] |
| | Block building | [49] |
| Complex manipulation tasks | Squeezing toothpaste | [20] |
| | Bimanual interaction | [114] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, Z.; Min, H.; Wang, D.; Xia, Z.; Sun, F.; Fang, B. A Review of Myoelectric Control for Prosthetic Hand Manipulation. Biomimetics 2023, 8, 328. https://doi.org/10.3390/biomimetics8030328
