Robot Arm Reaching Based on Inner Rehearsal

Abstract

1. Introduction

- The internal models are established based on the relative positioning method. We limit the output of the inverse model to a small-scale displacement toward the target to smooth the reaching trajectory. The loss of the inverse model during training is defined as the distance in Cartesian space calculated by the forward model.

- The models are pre-trained with an FK model and then fine-tuned in a real environment. The approach not only increases the learning efficiency of the internal models but also decreases the mechanical wear and tear of the robots.

- The motion planning approach based on inner rehearsal improves reaching performance by predicting the outcome of each motion command before it is executed. The planning procedure for the whole reaching process is divided into two stages: proprioception-based rough reaching planning and visual-feedback-based iterative adjustment planning.
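The first contribution defines the inverse model's training loss as a Cartesian distance computed by the forward model. A minimal sketch of that idea follows. It assumes a planar two-link arm whose analytic forward kinematics stands in for the learned forward model; the link lengths, the step limit `max_step`, and all function names are hypothetical and are not taken from the paper.

```python
import math

LINK_LENGTHS = (0.30, 0.25)  # hypothetical link lengths in metres


def forward_model(q):
    """Analytic FK of a planar 2-link arm (stand-in for the learned forward model)."""
    l1, l2 = LINK_LENGTHS
    x = l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1])
    y = l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1])
    return (x, y)


def small_step_goal(current, target, max_step=0.02):
    """Limit the desired end-effector displacement toward the target to max_step."""
    dx, dy = target[0] - current[0], target[1] - current[1]
    dist = math.hypot(dx, dy)
    if dist <= max_step:
        return target
    scale = max_step / dist
    return (current[0] + dx * scale, current[1] + dy * scale)


def inverse_model_loss(q, dq, goal):
    """Cartesian distance between the forward-model prediction and the step goal."""
    predicted = forward_model((q[0] + dq[0], q[1] + dq[1]))
    return math.hypot(predicted[0] - goal[0], predicted[1] - goal[1])


# Usage: a zero command leaves the whole small step as loss.
q = (0.0, 0.0)
goal = small_step_goal(forward_model(q), (0.55, 0.50))
loss = inverse_model_loss(q, (0.0, 0.0), goal)
```

In a training setup, `dq` would be the inverse model's output and the loss would be minimised with respect to the inverse model's parameters; that part is omitted here.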

2. Related Work

2.1. Reaching with Visual Servoing

2.2. Learning-Based Internal Model

2.3. Inner Rehearsal

2.4. Issues Associated with Related Work

- We use image-based visual servoing to construct a closed-loop control so that the reaching process can be more robust than that without visual information.

- We build refined internal models for robots using deep neural networks. After coarse IK-based models generate commands, we adjust the commands with learning-based models to eliminate the influence of potential measurement errors.

- Inner rehearsal is applied before the commands are actually executed. The original commands are adjusted and then executed according to the result of inner rehearsal.

3. Methodology

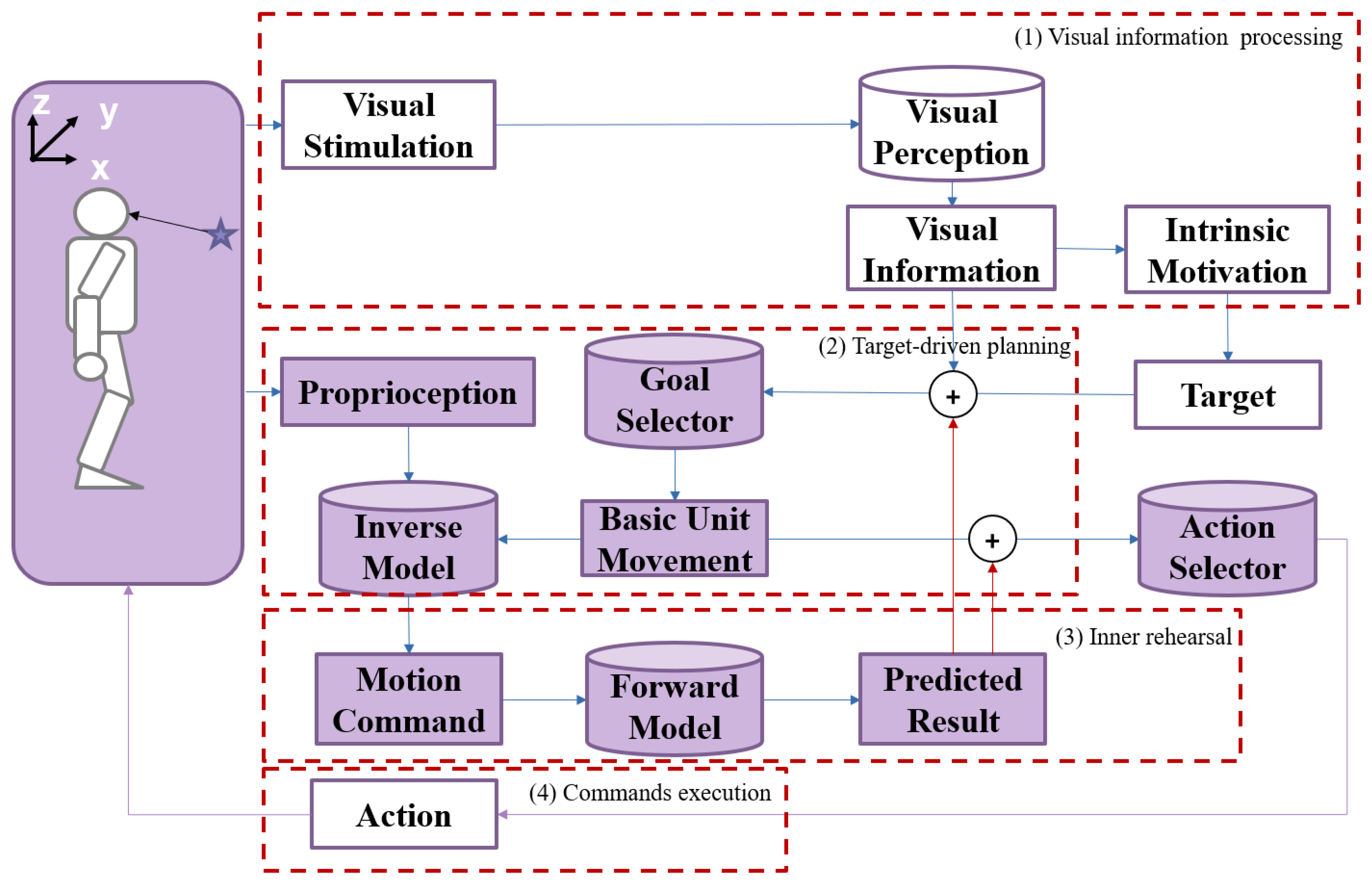

3.1. Overall Framework

- The movement goal is derived from the relative position between the target and the end-effector. The inverse model generates the motion command based on the current arm state and the expected movement. Each movement is intended to be a small-scale displacement of the end-effector toward the target.

- The forward model predicts the result of the motion command without actually executing it. The prediction for the current movement is taken as the next state of the robot, so that the robot can generate the next motion command accordingly. In this way, a sequence of motion commands is generated. The robot repeats the command-generation and prediction steps until the predicted end-effector position coincides with the target.

- The robot executes these commands and reaches the target.
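The loop described in these steps can be sketched generically, with the inverse and forward models passed in as functions. This is an illustration under simplifying assumptions, not the authors' implementation: the state is reduced to a 2-D end-effector position, commands are Cartesian displacements, and the toy "robot" realises only 90% of each commanded displacement. The names `inner_rehearsal`, `toy_inverse`, and `toy_forward`, and the values of `max_step` and `tol`, are hypothetical.

```python
import math


def inner_rehearsal(state, target, inverse_model, forward_model,
                    max_step=0.02, tol=1e-3, max_iters=500):
    """Rehearse a command sequence from state toward target without executing it."""
    commands = []
    for _ in range(max_iters):
        dx, dy = target[0] - state[0], target[1] - state[1]
        dist = math.hypot(dx, dy)
        if dist < tol:
            # The predicted state is close enough to the target: stop planning.
            break
        # Movement goal: a small-scale displacement toward the target.
        scale = min(1.0, max_step / dist)
        command = inverse_model(state, (dx * scale, dy * scale))
        # The prediction is treated as the next state of the robot.
        state = forward_model(state, command)
        commands.append(command)
    return commands, state


def toy_inverse(state, movement):
    """Toy inverse model (assumption): the command equals the desired displacement."""
    return movement


def toy_forward(state, command):
    """Toy forward model (assumption): only 90% of the command is realised."""
    return (state[0] + 0.9 * command[0], state[1] + 0.9 * command[1])


commands, predicted = inner_rehearsal((0.0, 0.0), (0.10, 0.05),
                                      toy_inverse, toy_forward)
```

Only after the loop ends would the accumulated `commands` be sent to the robot, which is what separates rehearsal from execution.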

3.2. Establishment of Internal Models

3.2.1. Modeling of the Internal Models

3.2.2. Two-Stage Learning for the Internal Models

3.3. Motion Planning Based on Inner Rehearsal

3.3.1. Proprioception-Based Rough Reaching

3.3.2. Visual-Feedback-Based Iterative Adjustments

Algorithm 1. Motion planning based on inner rehearsal

Input: Current joint states and target position

Output: Motion commands
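A rough sketch of how the two planning stages could fit together, taking current joint states and a target position as input and returning motion commands. Everything specific here is an assumption made for illustration: a planar two-link arm, a damped-least-squares step standing in for the learned inverse model, analytic forward kinematics standing in for the learned forward model, a "real" arm whose link lengths differ slightly from the modelled ones, and joint commands that execute exactly.

```python
import math

MODEL_LINKS = (0.30, 0.25)  # link lengths assumed by the internal models (hypothetical)
REAL_LINKS = (0.31, 0.24)   # slightly different "real" arm (hypothetical)


def fk(q, links):
    """Planar 2-link forward kinematics."""
    l1, l2 = links
    return (l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1]),
            l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1]))


def inverse_step(q, dx, links, damping=1e-3):
    """Joint displacement for a Cartesian displacement dx (damped least squares)."""
    l1, l2 = links
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    j11, j12 = -l1 * s1 - l2 * s12, -l2 * s12
    j21, j22 = l1 * c1 + l2 * c12, l2 * c12
    # Solve (J J^T + damping * I) y = dx, then dq = J^T y.
    a11 = j11 * j11 + j12 * j12 + damping
    a12 = j11 * j21 + j12 * j22
    a22 = j21 * j21 + j22 * j22 + damping
    det = a11 * a22 - a12 * a12
    y1 = (a22 * dx[0] - a12 * dx[1]) / det
    y2 = (a11 * dx[1] - a12 * dx[0]) / det
    return (j11 * y1 + j21 * y2, j12 * y1 + j22 * y2)


def distance(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])


def rehearse(q, pos, target, max_step=0.02, tol=1e-3, max_iters=200):
    """Plan small steps from the estimated position pos toward target using
    only the internal models; nothing is executed here."""
    commands = []
    for _ in range(max_iters):
        dist = distance(pos, target)
        if dist < tol:
            break
        scale = min(1.0, max_step / dist)
        dx = ((target[0] - pos[0]) * scale, (target[1] - pos[1]) * scale)
        dq = inverse_step(q, dx, MODEL_LINKS)
        q_next = (q[0] + dq[0], q[1] + dq[1])
        before, after = fk(q, MODEL_LINKS), fk(q_next, MODEL_LINKS)
        # Relative positioning: add the predicted displacement to the estimate.
        pos = (pos[0] + after[0] - before[0], pos[1] + after[1] - before[1])
        q = q_next
        commands.append(dq)
    return commands, q


def reach(q, target, visual_tol=2e-3, max_rounds=10):
    # Stage 1: rough reaching, position estimated from joint states alone.
    commands, q = rehearse(q, fk(q, MODEL_LINKS), target)
    rough_error = distance(fk(q, REAL_LINKS), target)
    # Stage 2: iterative adjustment from the observed end-effector position.
    for _ in range(max_rounds):
        seen = fk(q, REAL_LINKS)  # stands in for a camera measurement
        if distance(seen, target) < visual_tol:
            break
        extra, q = rehearse(q, seen, target)
        commands.extend(extra)
    return commands, rough_error, distance(fk(q, REAL_LINKS), target)


commands, rough_error, final_error = reach((0.3, 1.2), (0.35, 0.25))
```

In this toy setting the rough stage leaves a residual error caused by the mismatch between modelled and real link lengths, and the visual-feedback rounds shrink it because each round re-plans from the observed position rather than the modelled one.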

4. Experiments

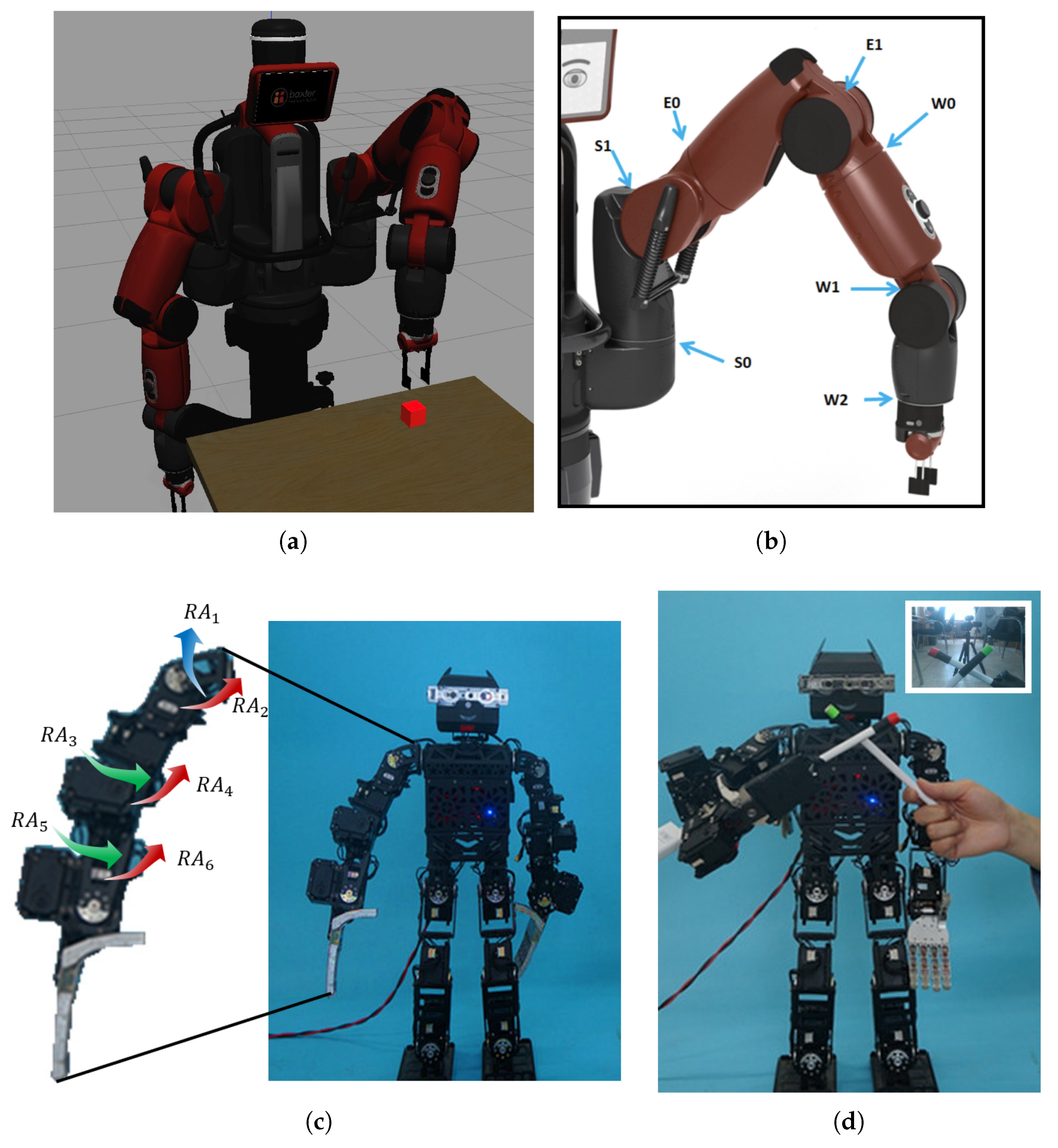

4.1. Experimental Platforms

4.2. Evaluation of the Internal Models

4.2.1. Model Parameters

4.2.2. Data Preparation

4.2.3. Performance of the Internal Models

4.3. Evaluation of the Inner-Rehearsal-Based Motion Planning

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FK | Forward Kinematics |
| IK | Inverse Kinematics |
| IMC | Internal Model Controller |
| DIM | Divided Inverse Model |
| FM | Forward Kinematics Model |
| IM | Inverse Kinematics Model |
| DoF | Degree of Freedom |

References

1. Rosenbaum, D.A. Human Motor Control; Academic Press: Cambridge, MA, USA, 2009.

2. Cangelosi, A.; Schlesinger, M. Developmental Robotics: From Babies to Robots; MIT Press: Cambridge, MA, USA, 2015.

3. Rüßmann, M.; Lorenz, M.; Gerbert, P.; Waldner, M.; Justus, J.; Engel, P.; Harnisch, M. Industry 4.0: The Future of Productivity and Growth in Manufacturing Industries; Boston Consulting Group: Boston, MA, USA, 2015; Volume 9, pp. 54–89.

4. Bushnell, E.W.; Boudreau, J.P. Motor development and the mind: The potential role of motor abilities as a determinant of aspects of perceptual development. Child Dev. 1993, 64, 1005–1021.

5. Hofsten, C.V. Action, the foundation for cognitive development. Scand. J. Psychol. 2009, 50, 617–623.

6. Garcia, C.E.; Morari, M. Internal model control. A unifying review and some new results. Ind. Eng. Chem. Process Des. Dev. 1982, 21, 308–323.

7. Wolpert, D.M.; Miall, R.; Kawato, M. Internal models in the cerebellum. Trends Cogn. Sci. 1998, 2, 338–347.

8. Wolpert, D.; Kawato, M. Multiple paired forward and inverse models for motor control. Neural Netw. 1998, 11, 1317–1329.

9. Kavraki, L.E.; LaValle, S.M. Motion Planning. In Springer Handbook of Robotics; Siciliano, B., Khatib, O., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 139–162.

10. Angeles, J. On the Numerical Solution of the Inverse Kinematic Problem. Int. J. Robot. Res. 1985, 4, 21–37.

11. Shimizu, M. Analytical inverse kinematics for 5-DOF humanoid manipulator under arbitrarily specified unconstrained orientation of end-effector. Robotica 2014, 33, 747–767.

12. Tong, Y.; Liu, J.; Liu, Y.; Yuan, Y. Analytical inverse kinematic computation for 7-DOF redundant sliding manipulators. Mech. Mach. Theory 2021, 155, 104006.

13. Lee, C.; Ziegler, M. Geometric Approach in Solving Inverse Kinematics of PUMA Robots. IEEE Trans. Aerosp. Electron. Syst. 1984, AES-20, 695–706.

14. Liu, T.; Nie, M.; Wu, X.; Luo, D. Developing Robot Reaching Skill via Look-ahead Planning. In Proceedings of the 2019 WRC Symposium on Advanced Robotics and Automation (WRC SARA), Beijing, China, 21–22 August 2019; pp. 25–31.

15. Bouganis, A.; Shanahan, M. Training a spiking neural network to control a 4-DoF robotic arm based on Spike Timing-Dependent Plasticity. In Proceedings of the 2010 International Joint Conference on Neural Networks (IJCNN), Barcelona, Spain, 18–23 July 2010; pp. 1–8.

16. Huang, D.W.; Gentili, R.; Reggia, J. A Self-Organizing Map Architecture for Arm Reaching Based on Limit Cycle Attractors. EAI Endorsed Trans. Self-Adapt. Syst. 2016, 16, e1.

17. Lampe, T.; Riedmiller, M. Acquiring visual servoing reaching and grasping skills using neural reinforcement learning. In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–8.

18. Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396.

19. Wang, Y.G.; Wu, Z.Z.; Chen, X.D. Kind of analytical inverse kinematics method. Appl. Res. Comput. 2009, 26, 2368.

20. Dimarco, G.; Pareschi, L. Numerical methods for kinetic equations. Acta Numer. 2014, 23, 369–520.

21. Billard, A.; Kragic, D. Trends and challenges in robot manipulation. Science 2019, 364, eaat8414.

22. Wieser, E.; Cheng, G. A Self-Verifying Cognitive Architecture for Robust Bootstrapping of Sensory-Motor Skills via Multipurpose Predictors. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 1081–1095.

23. Kumar, V.; Todorov, E.; Levine, S. Optimal control with learned local models: Application to dexterous manipulation. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 378–383.

24. Berthier, N.E.; Clifton, R.K.; Gullapalli, V.; McCall, D.D.; Robin, D.J. Visual information and object size in the control of reaching. J. Mot. Behav. 1996, 28, 187–197.

25. Churchill, A.; Hopkins, B.; Rönnqvist, L.; Vogt, S. Vision of the hand and environmental context in human prehension. Exp. Brain Res. 2000, 134, 81–89.

26. Luo, D.; Nie, M.; Zhang, T.; Wu, X. Developing robot reaching skill with relative-location based approximating. In Proceedings of the 2018 Joint IEEE 8th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), Tokyo, Japan, 16–20 September 2018; pp. 147–154.

27. Dingsheng, L.; Fan, H.; Tao, Z.; Yian, D.; Xihong, W. How Does a Robot Develop Its Reaching Ability Like Human Infants Do? IEEE Trans. Cogn. Dev. Syst. 2018, 10, 795–809.

28. Kerzel, M.; Wermter, S. Neural end-to-end self-learning of visuomotor skills by environment interaction. In Proceedings of the International Conference on Artificial Neural Networks, Alghero, Italy, 11–14 September 2017; Springer: Cham, Switzerland, 2017; pp. 27–34.

29. Alterovitz, R.; Koenig, S.; Likhachev, M. Robot planning in the real world: Research challenges and opportunities. AI Mag. 2016, 37, 76–84.

30. Krivic, S.; Cashmore, M.; Magazzeni, D.; Ridder, B.; Szedmak, S.; Piater, J.H. Decreasing Uncertainty in Planning with State Prediction. In Proceedings of the IJCAI, Melbourne, Australia, 19–25 August 2017; pp. 2032–2038.

31. Nie, M.; Luo, D.; Liu, T.; Wu, X. Action Selection Based on Prediction for Robot Planning. In Proceedings of the 2019 Joint IEEE International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob), Valparaiso, Chile, 26–30 October 2019.

32. Shanahan, M. Cognition, action selection, and inner rehearsal. In Proceedings of the IJCAI Workshop on Modelling Natural Action Selection, Edinburgh, Scotland, 30 July–5 August 2005; pp. 92–99.

33. Chaumette, F.; Hutchinson, S.; Corke, P. Visual servoing. In Handbook of Robotics; Springer: Cham, Switzerland, 2016; pp. 841–866.

34. Nagahama, K.; Hashimoto, K.; Noritsugu, T.; Takaiawa, M. Visual servoing based on object motion estimation. In Proceedings of the 2000 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2000) (Cat. No.00CH37113), Takamatsu, Japan, 31 October–5 November 2000; Volume 1, pp. 245–250.

35. Shen, Y.; Sun, D.; Liu, Y.-H.; Li, K. Asymptotic trajectory tracking of manipulators using uncalibrated visual feedback. IEEE/ASME Trans. Mechatronics 2003, 8, 87–98.

36. Kosuge, K.; Furuta, K.; Yokoyama, T. Virtual internal model following control of robot arms. In Proceedings of the Robotics and Automation, Raleigh, NC, USA, 31 March–3 April 1987.

37. Koivisto, H.; Ruoppila, V.; Koivo, H. Properties of the neural network internal model controller. In Artificial Intelligence in Real-Time Control 1992; Verbruggen, H., Rodd, M., Eds.; IFAC Symposia Series; Pergamon: Oxford, UK, 1993; pp. 61–66.

38. Winfield, A.F.; Blum, C.; Liu, W. Towards an ethical robot: Internal models, consequences and ethical action selection. In Proceedings of the Advances in Autonomous Robotics Systems: 15th Annual Conference, TAROS 2014, Birmingham, UK, 1–3 September 2014; Proceedings 15. Springer: Cham, Switzerland, 2014; pp. 85–96.

39. Demiris, Y. Prediction of intent in robotics and multi-agent systems. Cogn. Process. 2007, 8, 151–158.

40. Cisek, P.; Kalaska, J.F. Neural correlates of mental rehearsal in dorsal premotor cortex. Nature 2004, 431, 993.

41. Pezzulo, G. Coordinating with the Future: The Anticipatory Nature of Representation. Minds Mach. 2008, 18, 179–225.

42. Braun, J.J.; Bergen, K.; Dasey, T.J. Inner rehearsal modeling for cognitive robotics. In Proceedings of the Multisensor, Multisource Information Fusion: Architectures, Algorithms, and Applications, Orlando, FL, USA, 25–29 April 2011; International Society for Optics and Photonics: Bellingham, WA, USA, 2011; Volume 8064, p. 80640A.

43. Jayasekara, B.; Watanabe, K.; Kiguchi, K.; Izumi, K. Interpreting Fuzzy Linguistic Information by Acquiring Robot’s Experience Based on Internal Rehearsal. J. Syst. Des. Dyn. 2010, 4, 297–313.

44. Erdemir, E.; Frankel, C.B.; Kawamura, K.; Gordon, S.M.; Ulutas, B. Towards a cognitive robot that uses internal rehearsal to learn affordance relations. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots & Systems, Nice, France, 22–26 September 2008.

45. Lyons, D.; Nirmal, P.; Benjamin, D.P. Navigation of uncertain terrain by fusion of information from real and synthetic imagery. Proc. SPIE-Int. Soc. Opt. Eng. 2012, 8407, 113–120.

46. Atkinson, C.; McCane, B.; Szymanski, L.; Robins, A. Pseudo-recursal: Solving the catastrophic forgetting problem in deep neural networks. arXiv 2018, arXiv:1802.03875.

47. Atkinson, C.; McCane, B.; Szymanski, L.; Robins, A. Pseudo-rehearsal: Achieving deep reinforcement learning without catastrophic forgetting. Neurocomputing 2021, 428, 291–307.

48. Marr, D. Vision: A Computational Investigation into the Human Representation and Processing of Visual Information; WH Freeman and Company: San Francisco, CA, USA, 1982.

49. Aoki, T.; Nakamura, T.; Nagai, T. Learning of motor control from motor babbling. IFAC-PapersOnLine 2016, 49, 154–158.

50. Luo, D.; Nie, M.; Wei, Y.; Hu, F.; Wu, X. Forming the Concept of Direction Developmentally. IEEE Trans. Cogn. Dev. Syst. 2019, 12, 759–773.

51. Luo, D.; Nie, M.; Wu, X. Generating Basic Unit Movements with Conditional Generative Adversarial Networks. Chin. J. Electron. 2019, 28, 1099–1107.

52. Krakauer, J.W.; Ghilardi, M.F.; Ghez, C. Independent learning of internal models for kinematic and dynamic control of reaching. Nat. Neurosci. 1999, 2, 1026–1031.

53. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

| Classification | Approaches |
|---|---|
| Conventional IK-based | Numerical method [10] |
| Conventional IK-based | Analytical method [11,12] |
| Conventional IK-based | Geometric method [13] |
| Learning-based | Supervised learning: deep neural networks [14], spiking neural networks [15] |
| Learning-based | Unsupervised learning: self-organizing maps [16], reinforcement learning [17,18] |

| Platform | Network | Size | Learning Rate |
|---|---|---|---|
| Baxter | DIM | | 0.0001, 0.0001 |
| PKU-HR6.0 II | | | 0.0001 |
| | | | 0.0001 |
| | | | 0.0001 |
| | | | 0.0002 |

| Platform | Condition | Distance after Reaching (cm) |
|---|---|---|
| Baxter | Without inner rehearsal | 2.72 ± 0.19 |
| Baxter | With inner rehearsal | 0.55 ± 0.12 |
| PKU-HR6.0 II | Without inner rehearsal | 0.85 ± 0.16 |
| PKU-HR6.0 II | With inner rehearsal | 0.53 ± 0.09 |
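To make the size of the effect in this table easier to read, the short calculation below derives the relative reduction in mean final distance for each platform. It uses only the mean values reported in the table and ignores the ± spread; the variable and function names are illustrative.

```python
# Mean distance after reaching (cm), copied from the table above.
results_cm = {
    "Baxter": {"without": 2.72, "with": 0.55},
    "PKU-HR6.0 II": {"without": 0.85, "with": 0.53},
}


def relative_reduction(without, with_rehearsal):
    """Fraction by which inner rehearsal reduces the mean final distance."""
    return (without - with_rehearsal) / without


reductions = {
    name: relative_reduction(r["without"], r["with"])
    for name, r in results_cm.items()
}

for name, value in reductions.items():
    print(f"{name}: {value:.1%}")
# Baxter: 79.8%
# PKU-HR6.0 II: 37.6%
```

On these means, the reduction is much larger on Baxter, whose error without inner rehearsal is also much larger to begin with.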


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, J.; Zou, Y.; Wei, Y.; Nie, M.; Liu, T.; Luo, D. Robot Arm Reaching Based on Inner Rehearsal. Biomimetics 2023, 8, 491. https://doi.org/10.3390/biomimetics8060491
