Bilateral Elimination Rule-Based Finite Class Bayesian Inference System for Circular and Linear Walking Prediction

Abstract

1. Introduction

2. Materials and Methods

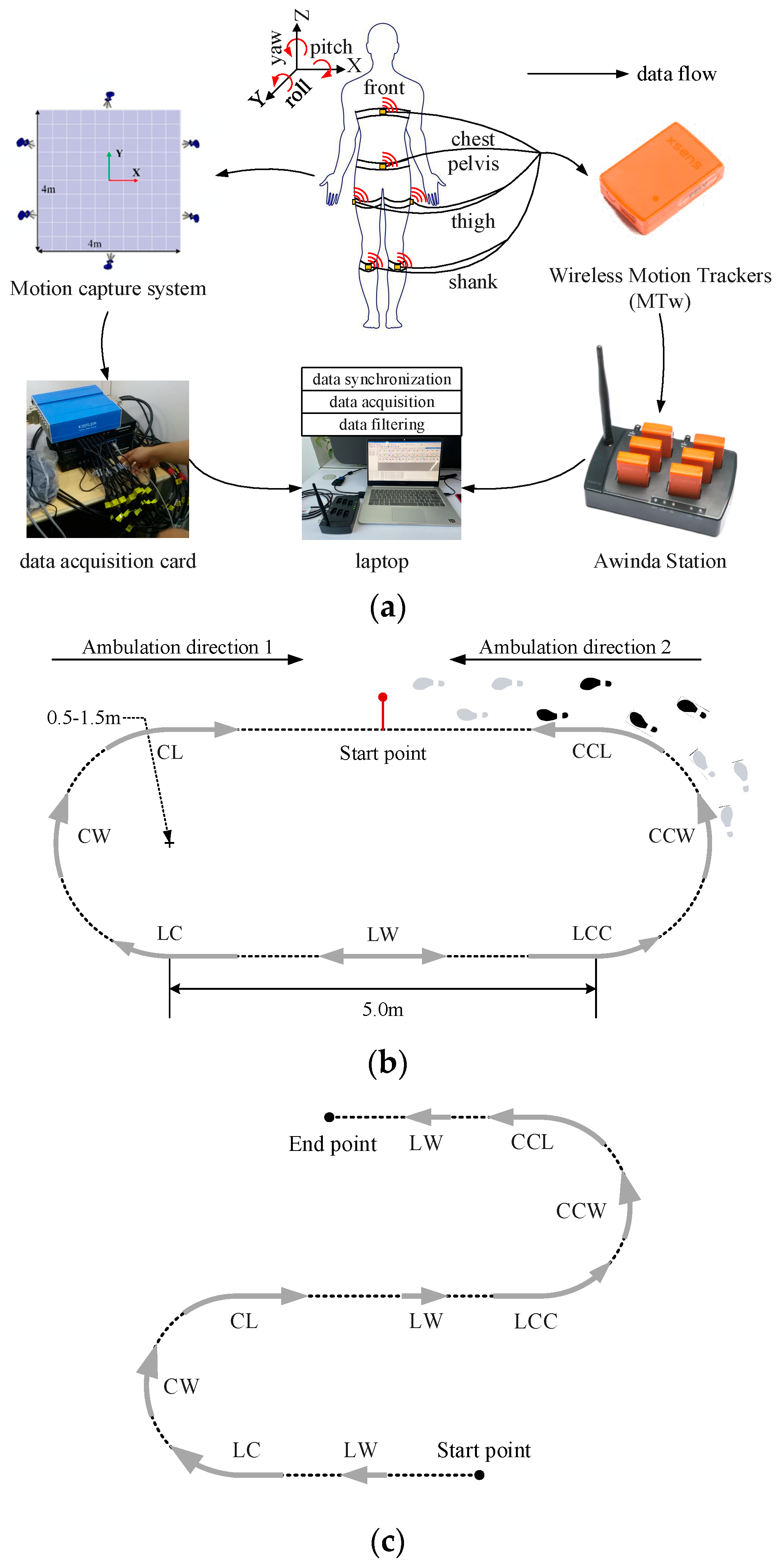

2.1. Subjects and Data Measurements

2.2. Data Processing

2.3. Motion Feature Extraction

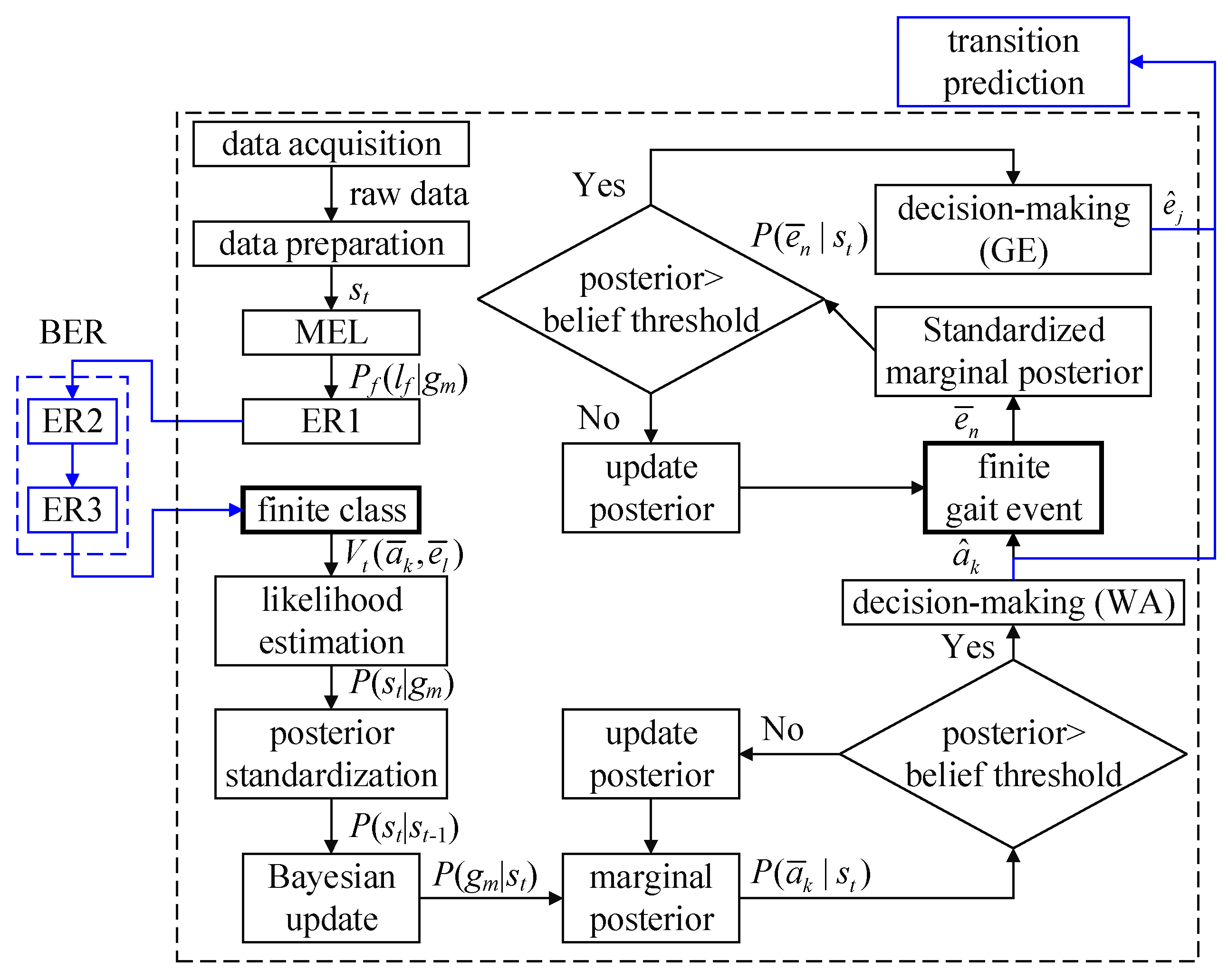

2.4. Finite Class Bayesian Inference System

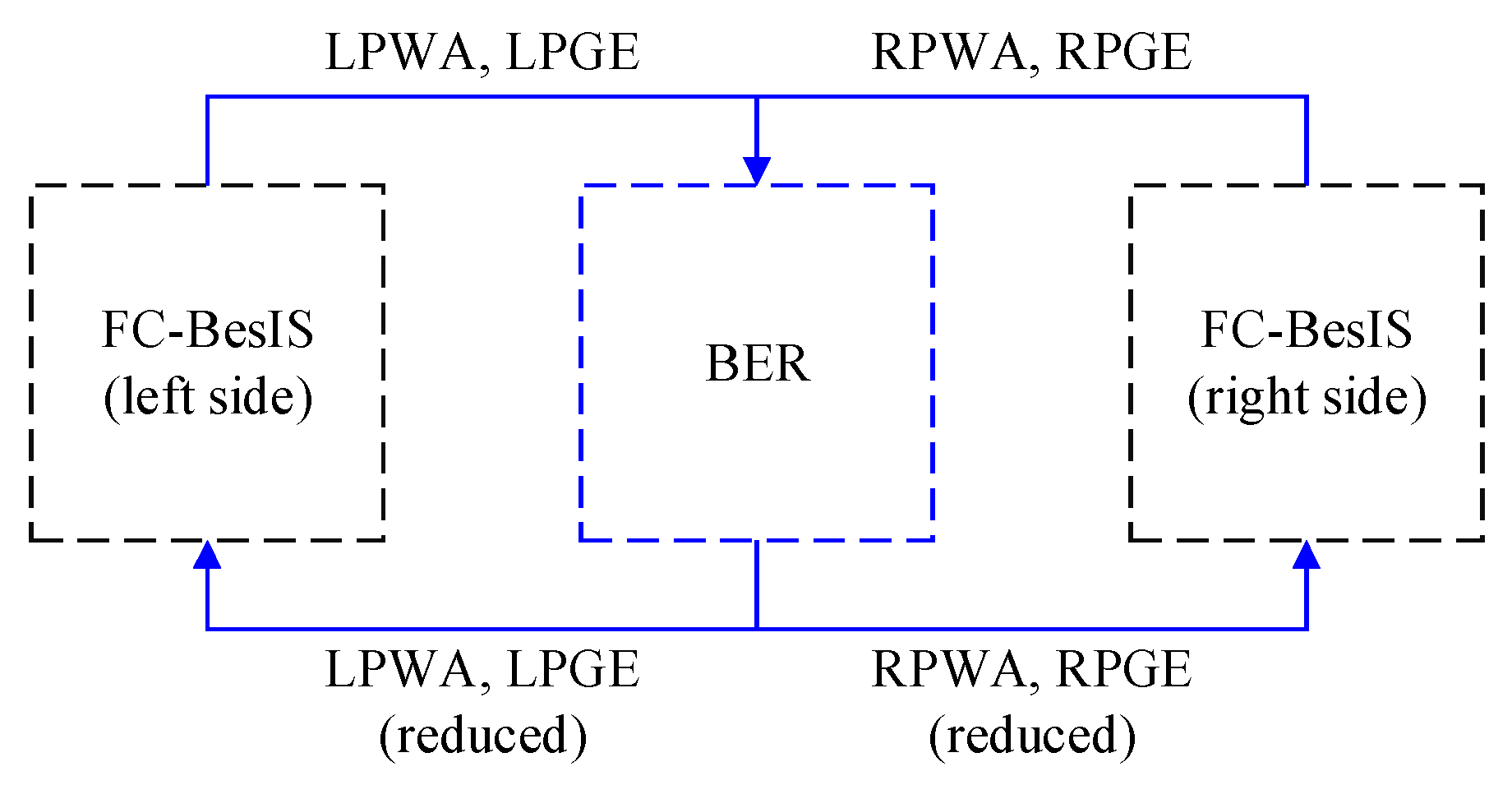

2.5. Bilateral Elimination Rules

2.6. Bilateral Elimination Rules-Based Finite Class Bayesian Inference System

2.7. Statistical Analysis

3. Results

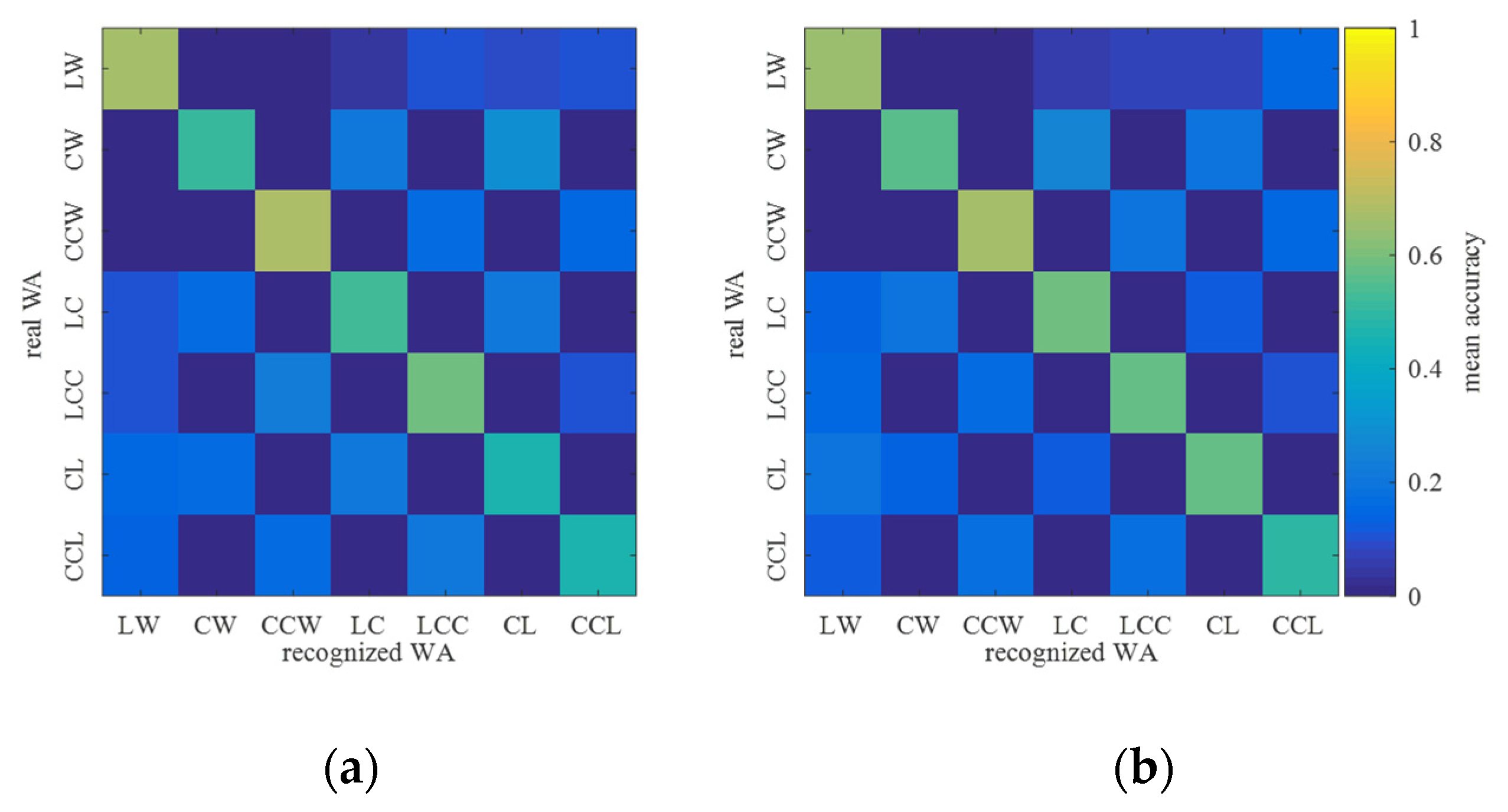

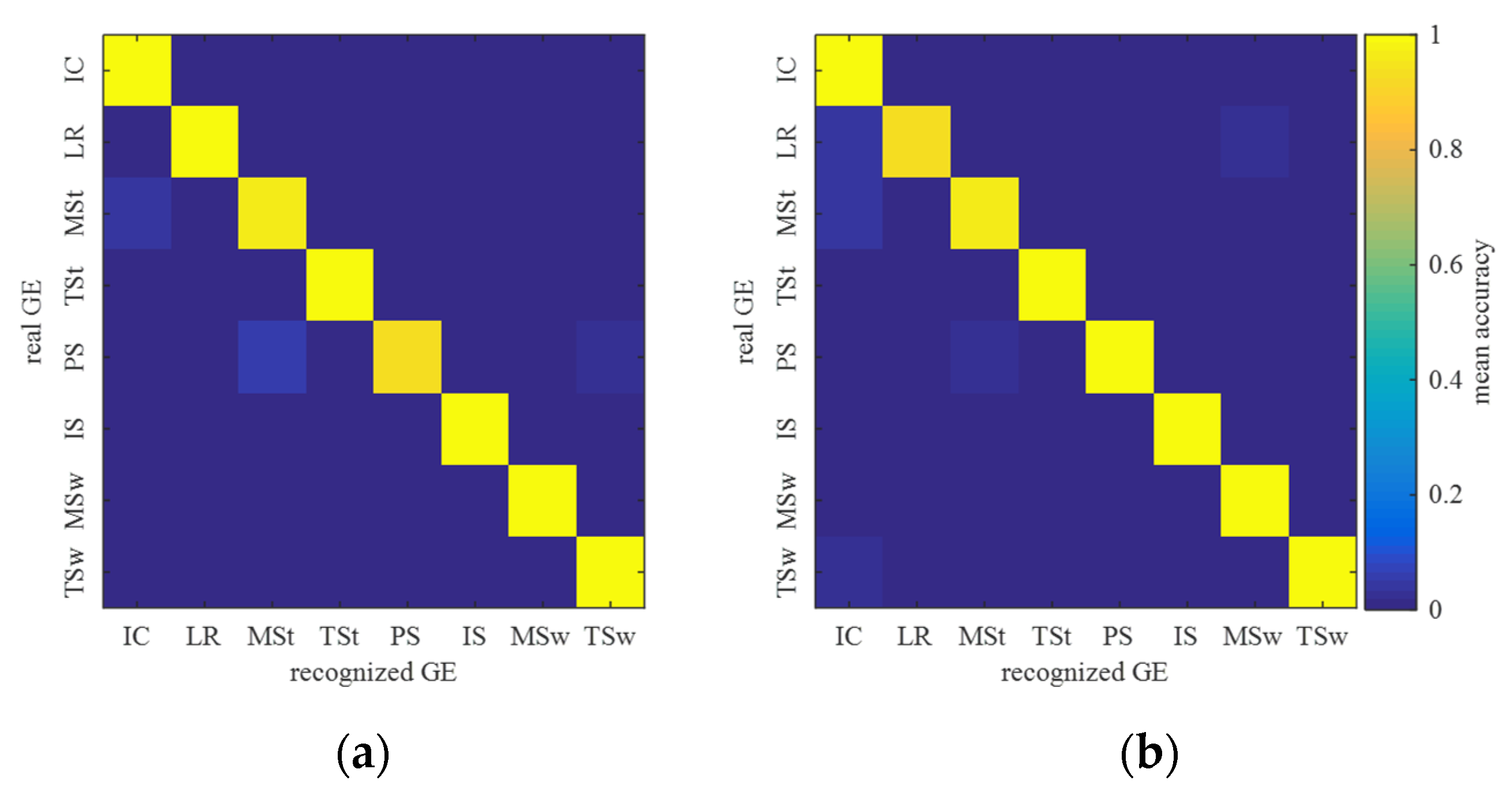

3.1. Walking Activity Recognition Accuracy of FC-BesIS

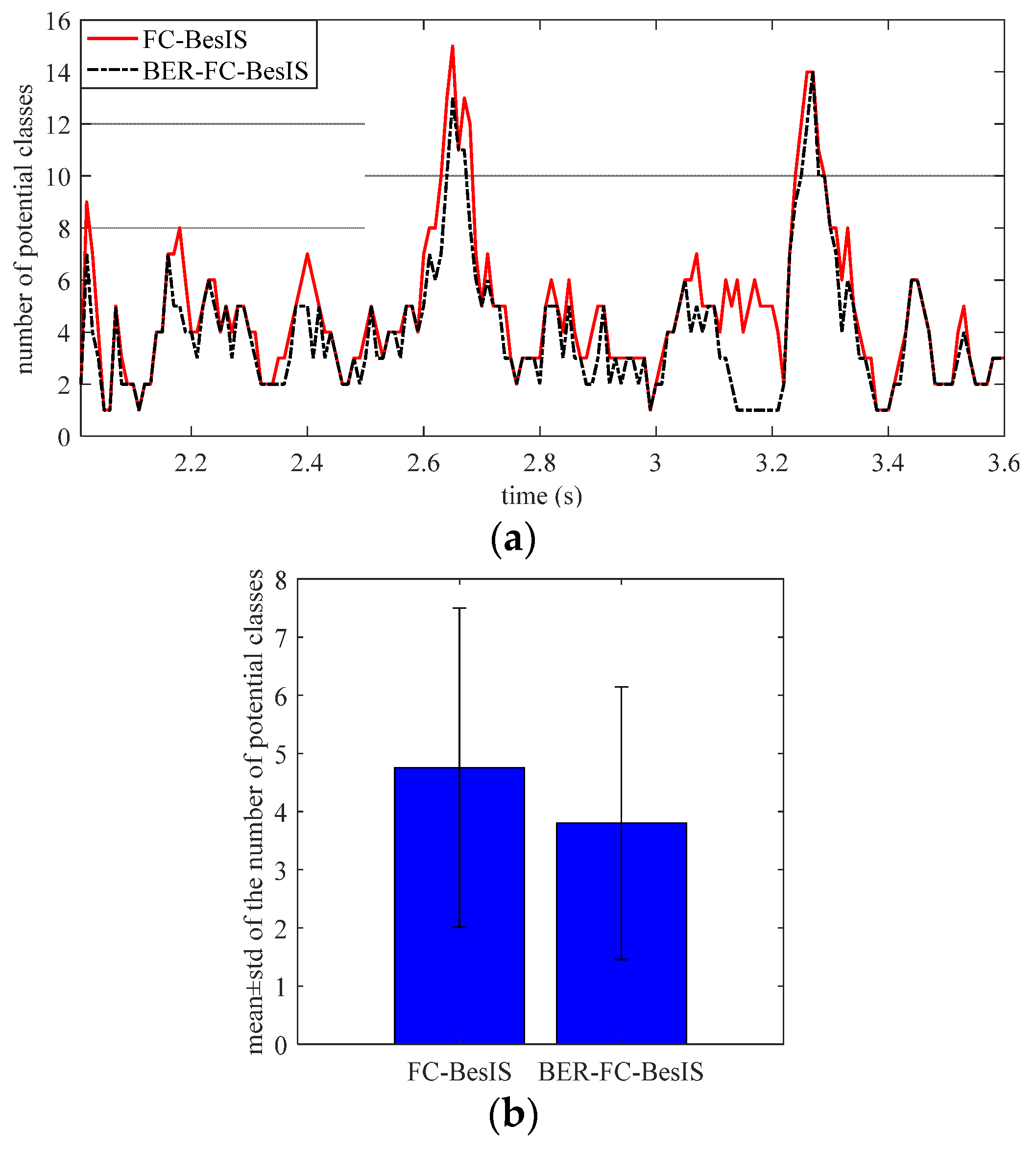

3.2. Gait Event Recognition Performance of BER-FC-BesIS

3.3. Walking Activity Prediction Performance of BER-FC-BesIS

4. Discussion

4.1. Summary

4.2. Advantages of BER-FC-BesIS

4.3. Potential Improvements and Future Works

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Subject | Gender | Age (Years) | Height (cm) | Weight (kg) |
|---|---|---|---|---|
| 1 | Male | 28 | 180.0 | 75.2 |
| 2 | Male | 32 | 178.2 | 72.4 |
| 3 | Male | 34 | 175.5 | 69.5 |
| 4 | Male | 22 | 181.3 | 78.0 |
| 5 | Male | 42 | 169.2 | 67.3 |
| 6 | Female | 23 | 165.0 | 51.5 |
| 7 | Female | 21 | 160.3 | 47.2 |
| 8 | Female | 45 | 158.4 | 48.0 |
| Mean [SD] | - | 30.9 [9.1] | 171.0 [9.0] | 63.6 [12.7] |
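The Mean [SD] row can be reproduced from the eight subject rows. The following is a minimal check, assuming the SD is the sample standard deviation (n − 1 denominator); the variable and function names are illustrative and not taken from the paper.

```python
from statistics import mean, stdev

# Subject data transcribed from the table above.
ages = [28, 32, 34, 22, 42, 23, 21, 45]
heights_cm = [180.0, 178.2, 175.5, 181.3, 169.2, 165.0, 160.3, 158.4]
weights_kg = [75.2, 72.4, 69.5, 78.0, 67.3, 51.5, 47.2, 48.0]


def mean_sd(values):
    """Return (mean, sample SD), each rounded to one decimal place."""
    # Assumption: the table reports the sample SD (n - 1 denominator).
    return round(mean(values), 1), round(stdev(values), 1)


for label, values in (
    ("Age (years)", ages),
    ("Height (cm)", heights_cm),
    ("Weight (kg)", weights_kg),
):
    m, sd = mean_sd(values)
    print(f"{label}: {m} [{sd}]")
```

Under that assumption the rounded values agree with the summary row; a population SD (n denominator) would give smaller values.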

| Feature | Signal |
|---|---|
| 1 | Pelvis yaw angular velocity |
| 2 | Chest yaw angular velocity |
| 3 | Left thigh yaw angular velocity |
| 4 | Right thigh yaw angular velocity |
| 5 | Pelvis roll angular velocity |
| 6 | Left shank yaw angular velocity |
| 7 | Right shank yaw angular velocity |
| 8 | Chest pitch angular velocity |
| 9 | Right shank pitch angular velocity |
| 10 | Right shank pitch angular velocity |
| 11 | Left shank pitch angular velocity |
| 12 | Left thigh pitch angular velocity |

| Right Lower Limb’s Potential Walking Activities | Left Lower Limb’s Potential Walking Activities |
|---|---|
| 1 | 1, 4, 5, 6, 7 |
| 2 | 2, 4, 6 |
| 3 | 3, 5, 7 |
| 4 | 1, 2, 4 |
| 5 | 1, 3, 5 |
| 6 | 1, 2, 6 |
| 7 | 1, 3, 7 |

| After the potential classes have been eliminated by elimination rule 1 |
|---|
| IF (right lower limb’s potential walking activity, left lower limb’s potential walking activity) belongs to the walking activity pairs in Table 3 THEN DO reserve potential classes with the same right lower limb’s potential walking activities |
| ELSE DO eliminate potential classes with the same right lower limb’s potential walking activities |
| END IF |
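The rule above can be sketched as a lookup against the walking activity pair table. This is one possible reading, not the authors' implementation: a right-limb candidate is kept when at least one left-limb candidate forms an allowed pair with it. The names `ACTIVITY_PAIRS` and `eliminate_by_activity_pairs` are hypothetical.

```python
# Allowed (right, left) walking activity pairs, transcribed from the pair table.
# Keys are right-limb activities; values are compatible left-limb activities.
ACTIVITY_PAIRS = {
    1: {1, 4, 5, 6, 7},
    2: {2, 4, 6},
    3: {3, 5, 7},
    4: {1, 2, 4},
    5: {1, 3, 5},
    6: {1, 2, 6},
    7: {1, 3, 7},
}


def eliminate_by_activity_pairs(right_candidates, left_candidates):
    """Keep right-limb candidates that pair with at least one left-limb candidate.

    Assumption: "reserve" means the right-limb class survives if any
    left-limb candidate appears in its row of the pair table.
    """
    left = set(left_candidates)
    return [r for r in right_candidates if ACTIVITY_PAIRS.get(r, set()) & left]


# Right candidates 1, 2, 3 checked against left candidates 4 and 6:
# activity 3 only pairs with 3, 5, 7, so it is eliminated.
print(eliminate_by_activity_pairs([1, 2, 3], [4, 6]))
```

A dictionary of sets keeps each membership test constant-time, which matters little for seven classes but keeps the rule readable.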

| Right Lower Limb’s Potential Gait Events | Left Lower Limb’s Potential Gait Events |
|---|---|
| 1 | 5 |
| 2 | 5, 6 |
| 3 | 6, 7 |
| 4 | 7, 8 |
| 5 | 1, 2, 8 |
| 6 | 2, 3 |
| 7 | 3, 4 |
| 8 | 4, 5 |

| After the potential classes have been eliminated by ER 2 |
|---|
| IF (right lower limb’s potential gait event, left lower limb’s potential gait event) belongs to the gait event pairs in Table 5 THEN DO reserve potential classes with the same right lower limb’s potential gait events |
| ELSE DO eliminate potential classes with the same right lower limb’s potential gait events |
| END IF |
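The gait event rule has the same shape as the walking activity rule, applied to the gait event pair table. The sketch below, with hypothetical names `GAIT_EVENT_PAIRS`, `is_symmetric`, and `eliminate_by_gait_event_pairs`, also checks that the transcribed table is symmetric, that is, that (right, left) is allowed exactly when the mirrored pair is. It assumes gait events are identified by the numbers 1 to 8 used in the table.

```python
# Allowed (right, left) gait event pairs, transcribed from the pair table.
GAIT_EVENT_PAIRS = {
    1: {5},
    2: {5, 6},
    3: {6, 7},
    4: {7, 8},
    5: {1, 2, 8},
    6: {2, 3},
    7: {3, 4},
    8: {4, 5},
}


def is_symmetric(pairs):
    """Return True if every allowed (a, b) pair also appears as (b, a)."""
    return all(a in pairs.get(b, set()) for a, bs in pairs.items() for b in bs)


def eliminate_by_gait_event_pairs(right_candidates, left_candidates):
    """Keep right-limb gait events compatible with some left-limb candidate.

    Assumption: a right-limb class is reserved when at least one left-limb
    candidate lies in its row of the pair table.
    """
    left = set(left_candidates)
    return [r for r in right_candidates if GAIT_EVENT_PAIRS.get(r, set()) & left]


print(is_symmetric(GAIT_EVENT_PAIRS))
print(eliminate_by_gait_event_pairs([1, 2], [6]))
```

The symmetry check is only a sanity test on the transcription; it does not depend on which physical gait event each number denotes.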

| After the walking activity and gait event are recognized |
|---|
| DO calculate MGCT, the mean duration of the last three gait cycles |
| IF a transition walking activity is recognized THEN |
| IF gait event is IC or LR THEN DO the first HC of the next steady walking activity will occur after 0.9 × MGCT |
| ELSE IF gait event is MSt THEN DO the first HC of the next steady walking activity will occur after 0.7 × MGCT |
| ELSE IF gait event is TSt THEN DO the first HC of the next steady walking activity will occur after 0.5 × MGCT |
| ELSE IF gait event is PSw THEN DO the first HC of the next steady walking activity will occur after 0.4 × MGCT |
| ELSE IF gait event is IS THEN DO the first HC of the next steady walking activity will occur after 0.27 × MGCT |
| ELSE IF gait event is MSw THEN DO the first HC of the next steady walking activity will occur after 0.13 × MGCT |
| ELSE DO the first HC of the next steady walking activity will occur within 0.13 × MGCT |
| END IF |
| ELSE DO the transition prediction module is skipped |
| END IF |
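The transition prediction rule reduces to a lookup of a fraction of MGCT keyed by the recognized gait event. The sketch below is illustrative only: the function name, argument names, and the use of seconds are assumptions, while the fractions are those listed in the rule.

```python
from statistics import mean

# Fraction of MGCT until the first HC of the next steady walking activity,
# keyed by the gait event recognized during the transition (from the rule).
DELAY_FRACTION = {
    "IC": 0.9,
    "LR": 0.9,
    "MSt": 0.7,
    "TSt": 0.5,
    "PSw": 0.4,
    "IS": 0.27,
    "MSw": 0.13,
}
# Final ELSE branch: the HC occurs *within* this fraction (an upper bound).
FALLBACK_FRACTION = 0.13


def predict_time_to_next_hc(cycle_durations, gait_event, is_transition):
    """Return the predicted time to the first HC, or None if not in transition.

    cycle_durations: durations of completed gait cycles, oldest first
    (hypothetical input; at least one value is required).
    """
    if not is_transition:
        return None  # the transition prediction module is skipped
    mgct = mean(cycle_durations[-3:])  # mean of the last three gait cycles
    return DELAY_FRACTION.get(gait_event, FALLBACK_FRACTION) * mgct


# Example with three gait cycles of 1.0 s, 1.2 s, and 1.1 s.
print(predict_time_to_next_hc([1.0, 1.2, 1.1], "MSt", True))
```

For any event not listed, the returned value should be read as an upper bound rather than a point estimate, mirroring the "within 0.13 × MGCT" wording of the last branch.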

| Lower Limb | MPT ± STD (ms) | MTD ± STD (ms) |
|---|---|---|
| Right | 119.32 ± 9.71 | 14.22 ± 3.74 |
| Left | 113.75 ± 11.83 | 13.59 ± 4.92 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sheng, W.; Gao, T.; Liang, K.; Wang, Y. Bilateral Elimination Rule-Based Finite Class Bayesian Inference System for Circular and Linear Walking Prediction. Biomimetics 2024, 9, 266. https://doi.org/10.3390/biomimetics9050266