A Survey on Biomimetic and Intelligent Algorithms with Applications

Abstract

1. Introduction

2. Related Work

3. Bio-Inspired Neural Network Models

3.1. Origin

3.2. Convergence and Stability

3.3. Accelerated Convergence

- General linear AF:

- Power AF: where n is an odd integer, and n > 3.

- Bipolar sigmoid AF: where .

- Hyperbolic sine AF: where .

- Power-sigmoid AF:

- Constant-sign–bi-power AF:

- Sign–bi-power AF: where with the parameters and sgnn defined as

- Weighted-sign–bi-power AF: where are tunable positive design parameters.

- Nonlinear AF 1: where .

- Nonlinear AF 2: where , and .
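As a rough illustration of the AF families listed above, the following Python sketch implements forms that are commonly seen in the ZNN literature for five of them. The concrete formulas, the parameter names `xi`, `n`, and `r`, and their default values are assumptions made for this example and are not taken from this section; the only constraint reused from the text is that `n` is an odd integer greater than 3.

```python
import math


def linear_af(x):
    # General linear AF (assumed form): f(x) = x
    return x


def power_af(x, n=5):
    # Power AF (assumed form): f(x) = x**n with n odd; n = 5 respects n > 3
    return x ** n


def bipolar_sigmoid_af(x, xi=4.0):
    # Bipolar sigmoid AF (assumed form): (1 - exp(-xi*x)) / (1 + exp(-xi*x))
    e = math.exp(-xi * x)
    return (1.0 - e) / (1.0 + e)


def hyperbolic_sine_af(x, xi=2.0):
    # Hyperbolic sine AF (assumed form): (exp(xi*x) - exp(-xi*x)) / 2
    return math.sinh(xi * x)


def sign_bi_power_af(x, r=0.5):
    # Sign-bi-power AF (assumed form): 0.5*sgn^r(x) + 0.5*sgn^(1/r)(x),
    # where sgn^r(x) = |x|**r * sign(x) and 0 < r < 1
    sgn = (x > 0) - (x < 0)
    return 0.5 * sgn * (abs(x) ** r + abs(x) ** (1.0 / r))
```

All five sketches are odd and monotonically increasing, which is the property usually required of an AF in this setting.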

3.3.1. Finite-Time Neural Network

3.3.2. Predefined-Time Neural Network

3.4. Noise Tolerance Neural Network

3.5. Convergence Accuracy

- In view of time, any time-varying problem can be regarded as a causal system: the computation must rely only on existing data, that is, present and/or previous data. For instance, when solving the time-varying matrix inversion problem discretely, at time instant $t_k$ we can only use known information, such as $A(t_k)$ and $\dot{A}(t_k)$, and not unknown information, such as $A(t_{k+1})$ and $\dot{A}(t_{k+1})$, to compute the inverse of $A(t_{k+1})$, i.e., $X(t_{k+1})$. Therefore, a fundamental requirement for constructing a discrete-time ZNN model is that unknown data cannot be used.

- Consequently, a numerical differentiation formula used to discretize a continuous-time ZNN model should have exactly one point ahead of the target point. The resulting discrete-time ZNN model then has only one unknown point, $X(t_{k+1})$, to be computed. Thus, no matter how small their truncation errors are, backward and multiple-point central differentiation rules cannot be used to construct discrete-time ZNN models. Only one-step-ahead forward differentiation formulas can be considered for discrete-time ZNNs.

- Time is valuable when solving time-varying problems. It is therefore crucial to design a straightforward discrete-time ZNN model with minimal time consumption.
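The three requirements above can be made concrete with a small sketch. The Python code below assumes the simplest one-step-ahead rule, the Euler forward difference, and applies it to the scalar (1×1) case of time-varying inversion, a(t)x(t) = 1. The update form, the test signal `a(t) = 2 + sin(t)`, and the parameters `tau` (sampling gap) and `h` (step size) are illustrative assumptions, not a model taken from this section.

```python
import math


def a(t):
    # Hypothetical time-varying coefficient, always nonzero
    return 2.0 + math.sin(t)


def a_dot(t):
    # Its time derivative, assumed to be available at the current instant
    return math.cos(t)


def discrete_znn_reciprocal(tau=0.01, h=1.0, steps=1000):
    """Track x(t) = 1/a(t) using only data known at time t_k."""
    x = 1.0 / a(0.0)  # start from the exact reciprocal at t = 0
    max_residual = 0.0
    for k in range(steps):
        t_k = k * tau
        a_k, da_k = a(t_k), a_dot(t_k)  # present data only (causality)
        # Euler-type one-step-ahead update: x_{k+1} is the single unknown
        x = x - tau * da_k * x * x - h * x * (a_k * x - 1.0)
        # Residual measured afterwards, once a(t_{k+1}) has become known
        residual = abs(a((k + 1) * tau) * x - 1.0)
        max_residual = max(max_residual, residual)
    return x, max_residual
```

Each step costs a handful of multiplications and never touches `a(t_{k+1})` before it is available, which is the causality constraint in miniature. Under these assumptions the residual stays on the order of `tau**2`.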

3.6. Time-Varying Linear System Solving

3.7. Time-Varying Nonlinear System Solving

3.8. Robot Control

3.9. Discussion and Challenges

- (1) Continue to explore and discover useful one-step-ahead numerical differentiation formulas for constructing discrete-time ZNNs. One goal is for future discrete-time ZNN models to admit larger step sizes while achieving higher computational accuracy; larger step sizes reduce computation time. Such advances align closely with developments in applied and computational mathematics.

- (2) Achieving faster convergence remains an open problem. In addition, understanding the conditions under which convergence is achieved is meaningful for the development of ZNNs.

- (3) Apart from global stability, more theory is needed to improve robustness.

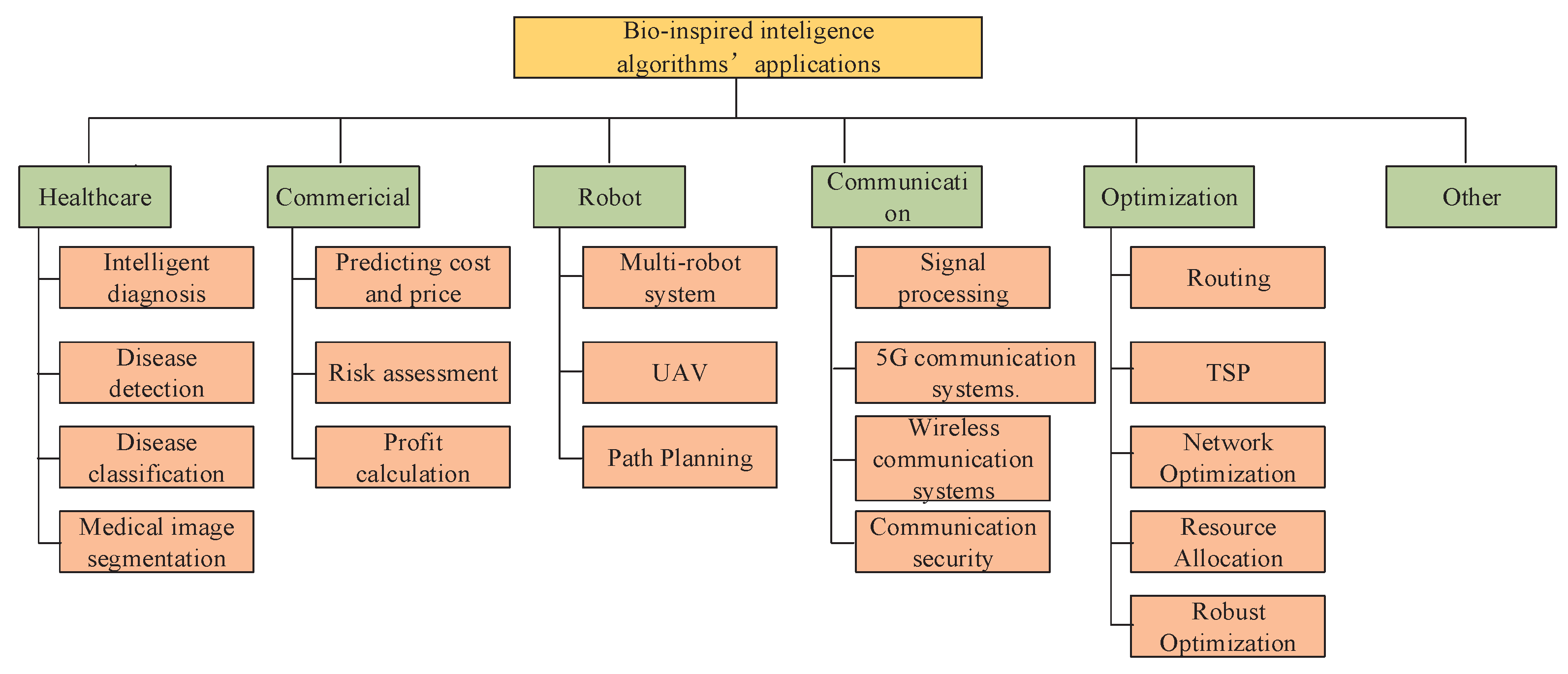

4. Intelligence Algorithms with Applications

4.1. Bio-Inspired Intelligence Algorithm

- Distributed robustness: Individuals are distributed across the search space, and they interact with each other. Due to the lack of a centralized control center, these algorithms exhibit strong robustness. Even if certain individuals fail or become inactive, the overall optimization process is not significantly affected.

- Simple structure and easy implementation: Individuals have simple structures and behavior rules. They typically only perceive local information and interact with others through simple mechanisms. This simplicity makes the algorithms easy to implement and understand.

- Self-organization: Individuals exhibit complex self-organizing behaviors through interactions and information exchanges. The intelligent behavior of the entire swarm emerges from the interactions among simple individuals.

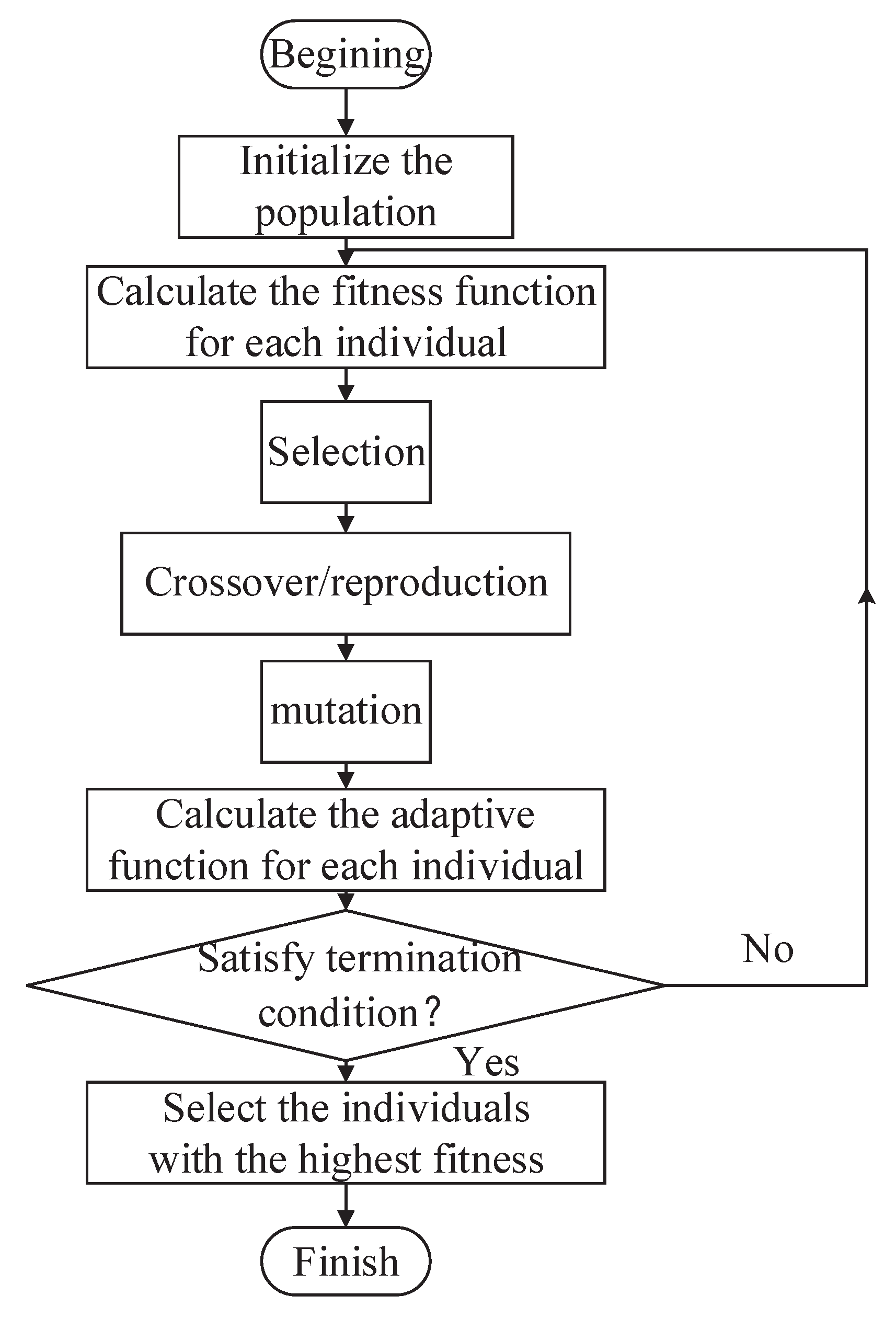

4.1.1. Genetic Algorithm

- (1) Initialize the population.

- (2) Calculate the fitness function for each individual.

- (3) Select individuals with high fitness values, and let them undergo crossover/reproduction and mutation to generate offspring.

- (4) Calculate the fitness function for each offspring.

- (5) If the termination condition is satisfied, select the individual with the highest fitness; otherwise, return to step (3).
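The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the bit-string encoding, the OneMax fitness (number of ones), binary tournament selection, one-point crossover, bit-flip mutation, elitism, and all parameter values are choices made for this example.

```python
import random


def fitness(bits):
    # Hypothetical fitness: number of ones in the bit string (OneMax)
    return sum(bits)


def tournament(population, rng):
    # Binary tournament: the fitter of two random individuals is selected
    a, b = rng.sample(population, 2)
    return a if fitness(a) >= fitness(b) else b


def genetic_algorithm(n_bits=20, pop_size=30, generations=60,
                      mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    # Step (1): initialize the population
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    # Step (2): evaluate fitness and record the best individual
    best = max(population, key=fitness)
    history = [fitness(best)]
    for _ in range(generations):  # termination: fixed generation budget
        offspring = [best[:]]  # elitism keeps the current best unchanged
        while len(offspring) < pop_size:
            # Step (3): selection, one-point crossover, bit-flip mutation
            p1, p2 = tournament(population, rng), tournament(population, rng)
            cut = rng.randint(1, n_bits - 1)
            child = p1[:cut] + p2[cut:]
            child = [1 - g if rng.random() < mutation_rate else g
                     for g in child]
            offspring.append(child)
        population = offspring
        # Step (4): evaluate the offspring
        best = max(population, key=fitness)
        history.append(fitness(best))
    # Step (5): return the individual with the highest fitness
    return best, history
```

Because the best individual is copied into every new generation, the recorded best fitness in `history` never decreases.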

4.1.2. Particle Swarm Optimization Algorithm

- (1) Initialize the particle swarm.

- (2) Calculate the fitness function for each particle.

- (3) Update each particle’s personal best solution.

- (4) Update the global best solution of the whole swarm.

- (5) Update each particle’s position and velocity.

- (6) If the termination condition is satisfied, output the solution with the highest fitness; otherwise, return to step (2).
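A minimal Python sketch of these steps follows. It assumes the widely used inertia-weight velocity update and treats the problem as cost minimization (so "highest fitness" corresponds to lowest cost); the sphere test function and the values of `w`, `c1`, and `c2` are illustrative assumptions rather than settings from this section.

```python
import random


def sphere(x):
    # Hypothetical cost function with its minimum at the origin
    return sum(v * v for v in x)


def pso(cost=sphere, dim=2, n_particles=20, iterations=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    # Step (1): initialize positions, velocities, and best records
    pos = [[rng.uniform(-5.0, 5.0) for _ in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]
    history = [gbest_cost]
    for _ in range(iterations):  # termination: fixed iteration budget
        for i in range(n_particles):
            c = cost(pos[i])                 # step (2): evaluate
            if c < pbest_cost[i]:            # step (3): personal best
                pbest[i], pbest_cost[i] = pos[i][:], c
            if c < gbest_cost:               # step (4): global best
                gbest, gbest_cost = pos[i][:], c
        for i in range(n_particles):         # step (5): move particles
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
        history.append(gbest_cost)
    # Step (6): return the best solution found
    return gbest, gbest_cost, history
```

The global best is replaced only when a strictly better position is found, so the recorded cost in `history` is non-increasing.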

4.2. Ant Colony Optimization Algorithm

4.3. Artificial Fish Swarm

4.4. Harris Hawks Optimizer

4.5. The Rest of the Swarm Intelligence Algorithms

4.6. Gene Feature Extraction

4.7. Intelligence Communication

4.8. Image Processing

4.9. Other Applications

4.10. Discussion and Future Direction

- (1) Multi-objective optimization: Extending intelligence algorithms to multi-objective optimization domains remains an active research topic.

- (2) Adaptive and self-learning techniques: Developing adaptive and self-learning algorithms that automatically adjust parameters and strategies in response to problem and environmental changes improves algorithm robustness and adaptability.

- (3) Hybridization and fusion algorithms: Integrating intelligence algorithms with other methods, such as deep learning and reinforcement learning, leverages the strengths of each and enhances solution efficiency and accuracy.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Sun, Q.; Wu, X. A deep learning-based approach for emotional analysis of sports dance. PeerJ Comput. Sci. 2023, 9, e1441. [Google Scholar] [CrossRef] [PubMed]

- Cao, X.; Peng, C.; Zheng, Y.; Li, S.; Ha, T.T.; Shutyaev, V.; Katsikis, V.; Stanimirovic, P. Neural Networks for Portfolio Analysis in High-Frequency Trading. Available online: https://ieeexplore.ieee.org/abstract/document/10250899 (accessed on 13 September 2023).

- Zhang, Y.; Li, S.; Weng, J.; Liao, B. GNN Model for Time-Varying Matrix Inversion With Robust Finite-Time Convergence. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 559–569. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Li, Z.; Yi, C.; Chen, K. Zhang neural network versus gradient neural network for online time-varying quadratic function minimization. In Advanced Intelligent Computing Theories and Applications. With Aspects of Artificial Intelligence, Proceedings of the 4th International Conference on Intelligent Computing, ICIC 2008, Shanghai, China, 15–18 September 2008; Proceedings 4; Springer: Berlin/Heidelberg, Germany, 2008; pp. 807–814. [Google Scholar]

- Xu, H.; Li, R.; Pan, C.; Li, K. Minimizing energy consumption with reliability goal on heterogeneous embedded systems. J. Parallel Distrib. Comput. 2019, 127, 44–57. [Google Scholar] [CrossRef]

- Peng, C.; Liao, B. Heavy-head sampling for fast imitation learning of machine learning based combinatorial auction solver. Neural Process. Lett. 2023, 55, 631–644. [Google Scholar] [CrossRef]

- Xu, H.; Li, R.; Zeng, L.; Li, K.; Pan, C. Energy-efficient scheduling with reliability guarantee in embedded real-time systems. Sustain. Comput. Inform. Syst. 2018, 18, 137–148. [Google Scholar] [CrossRef]

- Qin, F.; Zain, A.M.; Zhou, K.Q. Harmony search algorithm and related variants: A systematic review. Swarm Evol. Comput. 2022, 74, 101126. [Google Scholar] [CrossRef]

- Wu, W.; Tian, Y.; Jin, T. A label based ant colony algorithm for heterogeneous vehicle routing with mixed backhaul. Appl. Soft Comput. 2016, 47, 224–234. [Google Scholar] [CrossRef]

- Sindhuja, P.; Ramamoorthy, P.; Kumar, M.S. A brief survey on nature inspired algorithms: Clever algorithms for optimization. Asian J. Comput. Sci. Technol. 2018, 7, 27–32. [Google Scholar] [CrossRef]

- Sakunthala, S.; Kiranmayi, R.; Mandadi, P.N. A review on artificial intelligence techniques in electrical drives: Neural networks, fuzzy logic, and genetic algorithm. In Proceedings of the 2017 International Conference on Smart Technologies for Smart Nation (SmartTechCon), Bengaluru, India, 17–19 August 2017; pp. 11–16. [Google Scholar]

- Lachhwani, K. Application of neural network models for mathematical programming problems: A state of art review. Arch. Comput. Methods Eng. 2020, 27, 171–182. [Google Scholar] [CrossRef]

- Wang, T.; Zhang, Z.; Huang, Y.; Liao, B.; Li, S. Applications of Zeroing neural networks: A survey. IEEE Access 2024, 12, 51346–51363. [Google Scholar] [CrossRef]

- Zheng, Y.J.; Chen, S.Y.; Lin, Y.; Wang, W.L. Bio-inspired optimization of sustainable energy systems: A review. Math. Probl. Eng. 2013, 2013, e354523. [Google Scholar] [CrossRef]

- Zajmi, L.; Ahmed, F.Y.; Jaharadak, A.A. Concepts, methods, and performances of particle swarm optimization, backpropagation, and neural networks. Appl. Comput. Intell. Soft Comput. 2018, 2018, e9547212. [Google Scholar] [CrossRef]

- Cosma, G.; Brown, D.; Archer, M.; Khan, M.; Pockley, A.G. A survey on computational intelligence approaches for predictive modeling in prostate cancer. Expert Syst. Appl. 2017, 70, 1–19. [Google Scholar] [CrossRef]

- Goel, L. An extensive review of computational intelligence-based optimization algorithms: Trends and applications. Soft Comput. 2020, 24, 16519–16549. [Google Scholar] [CrossRef]

- Ding, S.; Li, H.; Su, C.; Yu, J.; Jin, F. Evolutionary artificial neural networks: A review. Artif. Intell. Rev. 2013, 39, 251–260. [Google Scholar] [CrossRef]

- Abd Elaziz, M.; Dahou, A.; Abualigah, L.; Yu, L.; Alshinwan, M.; Khasawneh, A.M.; Lu, S. Advanced metaheuristic optimization techniques in applications of deep neural networks: A review. Neural Comput. Appl. 2021, 33, 14079–14099. [Google Scholar] [CrossRef]

- Alqushaibi, A.; Abdulkadir, S.J.; Rais, H.M.; Al-Tashi, Q. A review of weight optimization techniques in recurrent neural networks. In Proceedings of the 2020 International Conference on Computational Intelligence (ICCI), Bandar Seri Iskandar, Malaysia, 8–9 October 2020; pp. 196–201. [Google Scholar]

- Ganapathy, S.; Kulothungan, K.; Muthurajkumar, S.; Vijayalakshmi, M.; Yogesh, P.; Kannan, A. Intelligent feature selection and classification techniques for intrusion detection in networks: A survey. EURASIP J. Wirel. Commun. Netw. 2013, 2013, 271. [Google Scholar] [CrossRef]

- Vijh, S.; Gaurav, P.; Pandey, H.M. Hybrid bio-inspired algorithm and convolutional neural network for automatic lung tumor detection. Neural Comput. Appl. 2023, 35, 23711–23724. [Google Scholar] [CrossRef]

- Hassani, S.; Dackermann, U. A systematic review of optimization algorithms for structural health monitoring and optimal sensor placement. Sensors 2023, 23, 3293. [Google Scholar] [CrossRef]

- Gad, A.G. Particle swarm optimization algorithm and its applications: A systematic review. Arch. Comput. Methods Eng. 2022, 29, 2531–2561. [Google Scholar] [CrossRef]

- Hopfield, J.J. Neurons, Dynamics and Computation. Phys. Today 1994, 47, 40–46. [Google Scholar] [CrossRef]

- Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558. [Google Scholar] [CrossRef] [PubMed]

- Hopfield, J.J. Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Natl. Acad. Sci. USA 1984, 81, 3088–3092. [Google Scholar] [CrossRef] [PubMed]

- Hopfield, J.; Tank, D. Neural Computation of Decisions in Optimization Problems. Biol. Cybern. 1985, 52, 141–152. [Google Scholar] [CrossRef] [PubMed]

- Liao, B.; Hua, C.; Cao, X.; Katsikis, V.N.; Li, S. Complex noise-resistant zeroing neural network for computing complex time-dependent Lyapunov equation. Mathematics 2022, 10, 2817. [Google Scholar] [CrossRef]

- Liao, B.; Han, L.; He, Y.; Cao, X.; Li, J. Prescribed-time convergent adaptive ZNN for time-varying matrix inversion under harmonic noise. Electronics 2022, 11, 1636. [Google Scholar] [CrossRef]

- Liao, B.; Huang, Z.; Cao, X.; Li, J. Adopting nonlinear activated beetle antennae search algorithm for fraud detection of public trading companies: A computational finance approach. Mathematics 2022, 10, 2160. [Google Scholar] [CrossRef]

- Liu, Z.; Wu, X. Structural Analysis of the Evolution Mechanism of Online Public Opinion and its Development Stages Based on Machine Learning and Social Network Analysis. Int. J. Comput. Intell. Syst. 2023, 16, 99. [Google Scholar] [CrossRef]

- Chen, S.; Zhou, C.; Li, J.; Peng, H. Asynchronous introspection theory: The underpinnings of phenomenal consciousness in temporal illusion. Minds Mach. 2017, 27, 315–330. [Google Scholar] [CrossRef]

- Luo, M.; Ke, W.; Cai, Z.; Liu, A.; Li, Y.; Cheang, C. Using Imbalanced Triangle Synthetic Data for Machine Learning Anomaly Detection. Comput. Mater. Contin. 2019, 58, 15–26. [Google Scholar] [CrossRef]

- Jin, L.; Zhang, Y. Continuous and discrete Zhang dynamics for real-time varying nonlinear optimization. Numer. Algorithms 2016, 73, 115–140. [Google Scholar] [CrossRef]

- Zhang, Z.; Zheng, L.; Weng, J.; Mao, Y.; Lu, W.; Xiao, L. A New Varying-Parameter Recurrent Neural-Network for Online Solution of Time-Varying Sylvester Equation. IEEE Trans. Cybern. 2018, 48, 3135–3148. [Google Scholar] [CrossRef] [PubMed]

- Xiao, L.; Liao, B. A convergence-accelerated Zhang neural network and its solution application to Lyapunov equation. Neurocomputing 2016, 193, 213–218. [Google Scholar] [CrossRef]

- Peng, C.; Ling, Y.; Wang, Y.; Yu, X.; Zhang, Y. Three new ZNN models with economical dimension and exponential convergence for real-time solution of moore-penrose pseudoinverse. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN), Beijing, China, 6–11 July 2014; pp. 2788–2793. [Google Scholar]

- Guo, D.; Peng, C.; Jin, L.; Ling, Y.; Zhang, Y. Different ZFs lead to different nets: Examples of Zhang generalized inverse. In Proceedings of the 2013 Chinese Automation Congress, Changsha, China, 7–8 November 2013; pp. 453–458. [Google Scholar]

- Lv, X.; Xiao, L.; Tan, Z.; Yang, Z.; Yuan, J. Improved gradient neural networks for solving Moore–Penrose inverse of full-rank matrix. Neural Process. Lett. 2019, 50, 1993–2005. [Google Scholar] [CrossRef]

- Xiao, L. A nonlinearly activated neural dynamics and its finite-time solution to time-varying nonlinear equation. Neurocomputing 2016, 173, 1983–1988. [Google Scholar] [CrossRef]

- Liu, M.; Liao, B.; Ding, L.; Xiao, L. Performance analyses of recurrent neural network models exploited for online time-varying nonlinear optimization. Comput. Sci. Inf. Syst. 2016, 13, 691–705. [Google Scholar] [CrossRef]

- Sun, Z.; Wang, G.; Jin, L.; Cheng, C.; Zhang, B.; Yu, J. Noise-suppressing zeroing neural network for online solving time-varying matrix square roots problems: A control-theoretic approach. Expert Syst. Appl. 2022, 192, 116272. [Google Scholar] [CrossRef]

- Jin, L.; Zhang, Y.; Li, S.; Zhang, Y. Modified ZNN for time-varying quadratic programming with inherent tolerance to noises and its application to kinematic redundancy resolution of robot manipulators. IEEE Trans. Ind. Electron. 2016, 63, 6978–6988. [Google Scholar] [CrossRef]

- Xiao, L.; Liao, B.; Li, S.; Chen, K. Nonlinear recurrent neural networks for finite-time solution of general time-varying linear matrix equations. Neural Netw. 2018, 98, 102–113. [Google Scholar] [CrossRef]

- Xiao, L.; Lu, R. Finite-time solution to nonlinear equation using recurrent neural dynamics with a specially-constructed activation function. Neurocomputing 2015, 151, 246–251. [Google Scholar] [CrossRef]

- Xiao, L. A nonlinearly-activated neurodynamic model and its finite-time solution to equality-constrained quadratic optimization with nonstationary coefficients. Appl. Soft Comput. 2016, 40, 252–259. [Google Scholar] [CrossRef]

- Liao, B.; Zhang, Y. From different ZFs to different ZNN models accelerated via Li activation functions to finite-time convergence for time-varying matrix pseudoinversion. Neurocomputing 2014, 133, 512–522. [Google Scholar] [CrossRef]

- Lv, X.; Xiao, L.; Tan, Z. Improved Zhang neural network with finite-time convergence for time-varying linear system of equations solving. Inf. Process. Lett. 2019, 147, 88–93. [Google Scholar] [CrossRef]

- Xiao, L.; Tan, H.; Jia, L.; Dai, J.; Zhang, Y. New error function designs for finite-time ZNN models with application to dynamic matrix inversion. Neurocomputing 2020, 402, 395–408. [Google Scholar] [CrossRef]

- Lv, X.; Xiao, L.; Tan, Z.; Yang, Z. Wsbp function activated Zhang dynamic with finite-time convergence applied to Lyapunov equation. Neurocomputing 2018, 314, 310–315. [Google Scholar] [CrossRef]

- Xiao, L. A new design formula exploited for accelerating Zhang neural network and its application to time-varying matrix inversion. Theor. Comput. Sci. 2016, 647, 50–58. [Google Scholar] [CrossRef]

- Jin, L.; Li, S.; Liao, B.; Zhang, Z. Zeroing neural networks: A survey. Neurocomputing 2017, 267, 597–604. [Google Scholar] [CrossRef]

- Hua, C.; Cao, X.; Liao, B.; Li, S. Advances on intelligent algorithms for scientific computing: An overview. Front. Neurorobot. 2023, 17, 1190977. [Google Scholar] [CrossRef] [PubMed]

- Cordero, A.; Soleymani, F.; Torregrosa, J.R.; Ullah, M.Z. Numerically stable improved Chebyshev–Halley type schemes for matrix sign function. J. Comput. Appl. Math. 2017, 318, 189–198. [Google Scholar] [CrossRef]

- Soleymani, F.; Tohidi, E.; Shateyi, S.; Khaksar Haghani, F. Some Matrix Iterations for Computing Matrix Sign Function. J. Appl. Math. 2014, 2014, 425654. [Google Scholar] [CrossRef]

- Li, W.; Liao, B.; Xiao, L.; Lu, R. A recurrent neural network with predefined-time convergence and improved noise tolerance for dynamic matrix square root finding. Neurocomputing 2019, 337, 262–273. [Google Scholar] [CrossRef]

- Xiao, L.; Zhang, Y.; Dai, J.; Chen, K.; Yang, S.; Li, W.; Liao, B.; Ding, L.; Li, J. A new noise-tolerant and predefined-time ZNN model for time-dependent matrix inversion. Neural Netw. 2019, 117, 124–134. [Google Scholar] [CrossRef]

- Xiao, L.; Li, L.; Tao, J.; Li, W. A predefined-time and anti-noise varying-parameter ZNN model for solving time-varying complex Stein equations. Neurocomputing 2023, 526, 158–168. [Google Scholar] [CrossRef]

- Jim, K.C.; Giles, C.; Horne, W. An analysis of noise in recurrent neural networks: Convergence and generalization. Neural Netw. IEEE Trans. 1996, 7, 1424–1438. [Google Scholar]

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155. [Google Scholar] [CrossRef] [PubMed]

- Kumar, A.; Sodhi, S.S. Comparative analysis of gaussian filter, median filter and denoise autoenocoder. In Proceedings of the 2020 7th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 12–14 March 2020; pp. 45–51. [Google Scholar]

- Liao, B.; Zhang, Y. Different Complex ZFs Leading to Different Complex ZNN Models for Time-Varying Complex Generalized Inverse Matrices. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 1621–1631. [Google Scholar] [CrossRef]

- Li, W.; Xiao, L.; Liao, B. A Finite-Time Convergent and Noise-Rejection Recurrent Neural Network and Its Discretization for Dynamic Nonlinear Equations Solving. IEEE Trans. Cybern. 2020, 50, 3195–3207. [Google Scholar] [CrossRef]

- Liao, B.; Xiang, Q.; Li, S. Bounded Z-type neurodynamics with limited-time convergence and noise tolerance for calculating time-dependent Lyapunov equation. Neurocomputing 2019, 325, 234–241. [Google Scholar] [CrossRef]

- Liao, B.; Wang, Y.; Li, J.; Guo, D.; He, Y. Harmonic Noise-Tolerant ZNN for Dynamic Matrix Pseudoinversion and Its Application to Robot Manipulator. Front. Neurorobot. 2022, 16, 928636. [Google Scholar] [CrossRef]

- Jin, L.; Zhang, Y.; Li, S. Integration-Enhanced Zhang Neural Network for Real-Time-Varying Matrix Inversion in the Presence of Various Kinds of Noises. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 2615–2627. [Google Scholar] [CrossRef]

- Stanimirović, P.S.; Katsikis, V.N.; Li, S. Integration enhanced and noise tolerant ZNN for computing various expressions involving outer inverses. Neurocomputing 2019, 329, 129–143. [Google Scholar] [CrossRef]

- Liao, B.; Han, L.; Cao, X.; Li, S.; Li, J. Double integral-enhanced Zeroing neural network with linear noise rejection for time-varying matrix inverse. CAAI Trans. Intell. Technol. 2024, 9, 197–210. [Google Scholar] [CrossRef]

- Xiao, L.; He, Y.; Dai, J.; Liu, X.; Liao, B.; Tan, H. A Variable-Parameter Noise-Tolerant Zeroing Neural Network for Time-Variant Matrix Inversion With Guaranteed Robustness. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 1535–1545. [Google Scholar] [CrossRef] [PubMed]

- Xiang, Q.; Liao, B.; Xiao, L.; Lin, L.; Li, S. Discrete-time noise-tolerant Zhang neural network for dynamic matrix pseudoinversion. Soft Comput. 2019, 23, 755–766. [Google Scholar] [CrossRef]

- Zhang, Y.; Cai, B.; Liang, M.; Ma, W. On the variable step-size of discrete-time Zhang neural network and Newton iteration for constant matrix inversion. In Proceedings of the 2008 Second International Symposium on Intelligent Information Technology Application, Shanghai, China, 20–22 December 2008; Volume 1, pp. 34–38. [Google Scholar]

- Zhang, Y.; Ma, W.; Cai, B. From Zhang neural network to Newton iteration for matrix inversion. IEEE Trans. Circuits Syst. I Regul. Pap. 2008, 56, 1405–1415. [Google Scholar] [CrossRef]

- Mao, M.; Li, J.; Jin, L.; Li, S.; Zhang, Y. Enhanced discrete-time Zhang neural network for time-variant matrix inversion in the presence of bias noises. Neurocomputing 2016, 207, 220–230. [Google Scholar] [CrossRef]

- Zhang, Y.; Jin, L.; Guo, D.; Yin, Y.; Chou, Y. Taylor-type 1-step-ahead numerical differentiation rule for first-order derivative approximation and ZNN discretization. J. Comput. Appl. Math. 2015, 273, 29–40. [Google Scholar] [CrossRef]

- Guo, D.; Zhang, Y. Zhang neural network, Getz–Marsden dynamic system, and discrete-time algorithms for time-varying matrix inversion with application to robots’ kinematic control. Neurocomputing 2012, 97, 22–32. [Google Scholar] [CrossRef]

- Liao, B.; Zhang, Y.; Jin, L. Taylor O(h3) Discretization of ZNN Models for Dynamic Equality-Constrained Quadratic Programming With Application to Manipulators. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 225–237. [Google Scholar] [CrossRef]

- Guo, D.; Nie, Z.; Yan, L. Novel discrete-time Zhang neural network for time-varying matrix inversion. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 2301–2310. [Google Scholar] [CrossRef]

- Zhang, J. Analysis and Construction of Software Engineering OBE Talent Training System Structure Based on Big Data. Secur. Commun. Netw. 2022, 2022, e3208318. [Google Scholar]

- Jin, J. Low power current-mode voltage controlled oscillator for 2.4GHz wireless applications. Comput. Electr. Eng. 2014, 40, 92–99. [Google Scholar] [CrossRef]

- Tan, W.; Huang, W.; Yang, X.; Shi, Z.; Liu, W.; Fan, L. Multiuser precoding scheme and achievable rate analysis for massive MIMO system. EURASIP J. Wirel. Commun. Netw. 2018, 2018, 210. [Google Scholar] [CrossRef]

- Yang, X.; Lei, K.; Peng, S.; Cao, X.; Gao, X. Analytical Expressions for the Probability of False-Alarm and Decision Threshold of Hadamard Ratio Detector in Non-Asymptotic Scenarios. IEEE Commun. Lett. 2018, 22, 1018–1021. [Google Scholar] [CrossRef]

- Dai, Z.; Guo, X. Investigation of E-Commerce Security and Data Platform Based on the Era of Big Data of the Internet of Things. Mob. Inf. Syst. 2022, 2022, 3023298. [Google Scholar] [CrossRef]

- Lu, J.; Li, W.; Sun, J.; Xiao, R.; Liao, B. Secure and Real-Time Traceable Data Sharing in Cloud-Assisted IoT. IEEE Internet Things J. 2024, 11, 6521–6536. [Google Scholar] [CrossRef]

- Xiao, L.; Li, K.; Tan, Z.; Zhang, Z.; Liao, B.; Chen, K.; Jin, L.; Li, S. Nonlinear gradient neural network for solving system of linear equations. Inf. Process. Lett. 2019, 142, 35–40. [Google Scholar] [CrossRef]

- Xiao, L.; Yi, Q.; Dai, J.; Li, K.; Hu, Z. Design and analysis of new complex zeroing neural network for a set of dynamic complex linear equations. Neurocomputing 2019, 363, 171–181. [Google Scholar] [CrossRef]

- Lu, H.; Jin, L.; Luo, X.; Liao, B.; Guo, D.; Xiao, L. RNN for Solving Perturbed Time-Varying Underdetermined Linear System With Double Bound Limits on Residual Errors and State Variables. IEEE Trans. Ind. Inform. 2019, 15, 5931–5942. [Google Scholar] [CrossRef]

- Xiao, L.; Jia, L.; Zhang, Y.; Hu, Z.; Dai, J. Finite-Time Convergence and Robustness Analysis of Two Nonlinear Activated ZNN Models for Time-Varying Linear Matrix Equations. IEEE Access 2019, 7, 135133–135144. [Google Scholar] [CrossRef]

- Zhang, Z.; Deng, X.; Qu, X.; Liao, B.; Kong, L.D.; Li, L. A Varying-Gain Recurrent Neural Network and Its Application to Solving Online Time-Varying Matrix Equation. IEEE Access 2018, 6, 77940–77952. [Google Scholar] [CrossRef]

- Li, S.; Li, Y. Nonlinearly activated neural network for solving time-varying complex Sylvester equation. IEEE Trans. Cybern. 2013, 44, 1397–1407. [Google Scholar] [CrossRef] [PubMed]

- Liao, S.; Liu, J.; Xiao, X.; Fu, D.; Wang, G.; Jin, L. Modified gradient neural networks for solving the time-varying Sylvester equation with adaptive coefficients and elimination of matrix inversion. Neurocomputing 2020, 379, 1–11. [Google Scholar] [CrossRef]

- Xiao, L. A finite-time convergent neural dynamics for online solution of time-varying linear complex matrix equation. Neurocomputing 2015, 167, 254–259. [Google Scholar] [CrossRef]

- Xiao, L.; Zhang, Y.; Li, K.; Liao, B.; Tan, Z. A novel recurrent neural network and its finite-time solution to time-varying complex matrix inversion. Neurocomputing 2019, 331, 483–492. [Google Scholar] [CrossRef]

- Long, C.; Zhang, G.; Zeng, Z.; Hu, J. Finite-time stabilization of complex-valued neural networks with proportional delays and inertial terms: A non-separation approach. Neural Netw. 2022, 148, 86–95. [Google Scholar] [CrossRef]

- Ding, L.; Xiao, L.; Liao, B.; Lu, R.; Peng, H. An improved recurrent neural network for complex-valued systems of linear equation and its application to robotic motion tracking. Front. Neurorobot. 2017, 11, 45. [Google Scholar] [CrossRef] [PubMed]

- Xiao, L.; Dai, J.; Lu, R.; Li, S.; Li, J.; Wang, S. Design and Comprehensive Analysis of a Noise-Tolerant ZNN Model With Limited-Time Convergence for Time-Dependent Nonlinear Minimization. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5339–5348. [Google Scholar] [CrossRef]

- Zhang, Z.; Zheng, L.; Li, L.; Deng, X.; Xiao, L.; Huang, G. A new finite-time varying-parameter convergent-differential neural-network for solving nonlinear and nonconvex optimization problems. Neurocomputing 2018, 319, 74–83. [Google Scholar] [CrossRef]

- Xiao, L.; He, Y.; Wang, Y.; Dai, J.; Wang, R.; Tang, W. A Segmented Variable-Parameter ZNN for Dynamic Quadratic Minimization With Improved Convergence and Robustness. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 2413–2424. [Google Scholar] [CrossRef]

- Xiao, L.; Li, S.; Yang, J.; Zhang, Z. A new recurrent neural network with noise-tolerance and finite-time convergence for dynamic quadratic minimization. Neurocomputing 2018, 285, 125–132. [Google Scholar] [CrossRef]

- Xiao, L.; Li, K.; Duan, M. Computing Time-Varying Quadratic Optimization With Finite-Time Convergence and Noise Tolerance: A Unified Framework for Zeroing Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3360–3369. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Li, S.; Kadry, S.; Liao, B. Recurrent Neural Network for Kinematic Control of Redundant Manipulators With Periodic Input Disturbance and Physical Constraints. IEEE Trans. Cybern. 2019, 49, 4194–4205. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Xu, Z.; Su, Z.; Wang, H.; Li, S. Distance- and Velocity-Based Simultaneous Obstacle Avoidance and Target Tracking for Multiple Wheeled Mobile Robots. IEEE Trans. Intell. Transp. Syst. 2024, 25, 1736–1748. [Google Scholar] [CrossRef]

- Xiao, L.; Zhang, Y.; Liao, B.; Zhang, Z.; Ding, L.; Jin, L. A velocity-level bi-criteria optimization scheme for coordinated path tracking of dual robot manipulators using recurrent neural network. Front. Neurorobot. 2017, 11, 47. [Google Scholar] [CrossRef] [PubMed]

- Tang, Z.; Zhang, Y. Refined Self-Motion Scheme With Zero Initial Velocities and Time-Varying Physical Limits via Zhang Neurodynamics Equivalency. Front. Neurorobot. 2022, 16, 945346. [Google Scholar] [CrossRef] [PubMed]

- Jin, L.; Liao, B.; Liu, M.; Xiao, L.; Guo, D.; Yan, X. Different-Level Simultaneous Minimization Scheme for Fault Tolerance of Redundant Manipulator Aided with Discrete-Time Recurrent Neural Network. Front. Neurorobot. 2017, 11, 50. [Google Scholar] [CrossRef] [PubMed]

- Liao, B.; Hua, C.; Xu, Q.; Cao, X.; Li, S. Inter-robot management via neighboring robot sensing and measurement using a zeroing neural dynamics approach. Expert Syst. Appl. 2024, 244, 122938. [Google Scholar] [CrossRef]

- Zhang, C.X.; Zhou, K.Q.; Ye, S.Q.; Zain, A.M. An improved cuckoo search algorithm utilizing nonlinear inertia weight and differential evolution for function optimization problem. IEEE Access 2021, 9, 161352–161373. [Google Scholar] [CrossRef]

- Ye, S.Q.; Zhou, K.Q.; Zhang, C.X.; Mohd Zain, A.; Ou, Y. An improved multi-objective cuckoo search approach by exploring the balance between development and exploration. Electronics 2022, 11, 704. [Google Scholar] [CrossRef]

- Chen, Z.; Francis, A.; Li, S.; Liao, B.; Xiao, D.; Ha, T.T.; Li, J.; Ding, L.; Cao, X. Egret Swarm Optimization Algorithm: An Evolutionary Computation Approach for Model Free Optimization. Biomimetics 2022, 7, 144. [Google Scholar] [CrossRef] [PubMed]

- Khan, A.T.; Cao, X.; Liao, B.; Francis, A. Bio-inspired Machine Learning for Distributed Confidential Multi-Portfolio Selection Problem. Biomimetics 2022, 7, 124. [Google Scholar] [CrossRef] [PubMed]

- Khan, A.H.; Cao, X.; Xu, B.; Li, S. Beetle Antennae Search: Using Biomimetic Foraging Behaviour of Beetles to Fool a Well-Trained Neuro-Intelligent System. Biomimetics 2022, 7, 84. [Google Scholar] [CrossRef]

- Ou, Y.; Yin, P.; Mo, L. An Improved Grey Wolf Optimizer and Its Application in Robot Path Planning. Biomimetics 2023, 8, 84. [Google Scholar] [CrossRef] [PubMed]

- Holland, J.H. Genetic algorithms and the optimal allocation of trials. SIAM J. Comput. 1973, 2, 88–105. [Google Scholar] [CrossRef]

- Ou, Y.; Ye, S.Q.; Ding, L.; Zhou, K.Q.; Zain, A.M. Hybrid knowledge extraction framework using modified adaptive genetic algorithm and BPNN. IEEE Access 2022, 10, 72037–72050. [Google Scholar] [CrossRef]

- Li, H.C.; Zhou, K.Q.; Mo, L.P.; Zain, A.M.; Qin, F. Weighted fuzzy production rule extraction using modified harmony search algorithm and BP neural network framework. IEEE Access 2020, 8, 186620–186637. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948. [Google Scholar]

- Peng, Y.; Lei, K.; Yang, X.; Peng, J. Improved chaotic quantum-behaved particle swarm optimization algorithm for fuzzy neural network and its application. Math. Probl. Eng. 2020, 2020, 9464593. [Google Scholar]

- Yang, L.; Ding, B.; Liao, W.; Li, Y. Identification of preisach model parameters based on an improved particle swarm optimization method for piezoelectric actuators in micro-manufacturing stages. Micromachines 2022, 13, 698. [Google Scholar] [CrossRef]

- Bullnheimer, B.; Hartl, R.F.; Strauss, C. A New Rank Based Version of the Ant System–A Computational Study. Cent. Eur. J. Oper. Res. 1999, 7, 25–38.

- Hu, X.M.; Zhang, J.; Li, Y. Orthogonal methods based ant colony search for solving continuous optimization problems. J. Comput. Sci. Technol. 2008, 23, 2–18.

- Gupta, D.K.; Arora, Y.; Singh, U.K.; Gupta, J.P. Recursive ant colony optimization for estimation of parameters of a function. In Proceedings of the 2012 1st International Conference on Recent Advances in Information Technology (RAIT), Dhanbad, India, 15–17 March 2012; pp. 448–454.

- Gao, S.; Wang, Y.; Cheng, J.; Inazumi, Y.; Tang, Z. Ant colony optimization with clustering for solving the dynamic location routing problem. Appl. Math. Comput. 2016, 285, 149–173.

- Hemmatian, H.; Fereidoon, A.; Sadollah, A.; Bahreininejad, A. Optimization of laminate stacking sequence for minimizing weight and cost using elitist ant system optimization. Adv. Eng. Softw. 2013, 57, 8–18.

- Li, X.L. An optimizing method based on autonomous animats: Fish-swarm algorithm. Syst. Eng. Theory Pract. 2002, 22, 32–38.

- Shen, W.; Guo, X.; Wu, C.; Wu, D. Forecasting stock indices using radial basis function neural networks optimized by artificial fish swarm algorithm. Knowl. Based Syst. 2011, 24, 378–385.

- Neshat, M.; Sepidnam, G.; Sargolzaei, M.; Toosi, A.N. Artificial fish swarm algorithm: A survey of the state-of-the-art, hybridization, combinatorial and indicative applications. Artif. Intell. Rev. 2014, 42, 965–997.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Timmis, J.; Andrews, P.; Hart, E. On artificial immune systems and swarm intelligence. Swarm Intell. 2010, 4, 247–273.

- Gao, K.; Cao, Z.; Zhang, L.; Chen, Z.; Han, Y.; Pan, Q. A review on swarm intelligence and evolutionary algorithms for solving flexible job shop scheduling problems. IEEE/CAA J. Autom. Sin. 2019, 6, 904–916.

- Roy, S.; Chaudhuri, S.S. Cuckoo search algorithm using Lévy flight: A review. Int. J. Mod. Educ. Comput. Sci. 2013, 5, 10.

- Duan, H.; Qiao, P. Pigeon-inspired optimization: A new swarm intelligence optimizer for air robot path planning. Int. J. Intell. Comput. Cybern. 2014, 7, 24–37.

- Yang, X.S.; Hossein Gandomi, A. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Hofmeyr, S.A.; Forrest, S. Architecture for an artificial immune system. Evol. Comput. 2000, 8, 443–473.

- Pan, W.T. A new fruit fly optimization algorithm: Taking the financial distress model as an example. Knowl. Based Syst. 2012, 26, 69–74.

- Krishnanand, K.; Ghose, D. Glowworm swarm optimization for simultaneous capture of multiple local optima of multimodal functions. Swarm Intell. 2009, 3, 87–124.

- Mehrabian, A.R.; Lucas, C. A novel numerical optimization algorithm inspired from weed colonization. Ecol. Inform. 2006, 1, 355–366.

- Wang, F.; Li, Z.S.; Liao, G.P. Multifractal detrended fluctuation analysis for image texture feature representation. Int. J. Pattern Recognit. Artif. Intell. 2014, 28, 1455005.

- Chu, H.M.; Kong, X.Z.; Liu, J.X.; Zheng, C.H.; Zhang, H. A New Binary Biclustering Algorithm Based on Weight Adjacency Difference Matrix for Analyzing Gene Expression Data. IEEE/ACM Trans. Comput. Biol. Bioinform. 2023, 20, 2802–2809.

- Liu, J.; Feng, H.; Tang, Y.; Zhang, L.; Qu, C.; Zeng, X.; Peng, X. A novel hybrid algorithm based on Harris Hawks for tumor feature gene selection. PeerJ Comput. Sci. 2023, 9, e1229.

- Qu, C.; Zhang, L.; Li, J.; Deng, F.; Tang, Y.; Zeng, X.; Peng, X. Improving feature selection performance for classification of gene expression data using Harris Hawks optimizer with variable neighborhood learning. Briefings Bioinform. 2021, 22, bbab097.

- Liu, J.; Qu, C.; Zhang, L.; Tang, Y.; Li, J.; Feng, H.; Zeng, X.; Peng, X. A new hybrid algorithm for three-stage gene selection based on whale optimization. Sci. Rep. 2023, 13, 3783.

- Xie, X.; Peng, S.; Yang, X. Deep learning-based signal-to-noise ratio estimation using constellation diagrams. Mob. Inf. Syst. 2020, 2020, 8840340.

- Yang, X.; Lei, K.; Peng, S.; Hu, L.; Li, S.; Cao, X. Threshold Setting for Multiple Primary User Spectrum Sensing via Spherical Detector. IEEE Wirel. Commun. Lett. 2019, 8, 488–491.

- Jin, J. Multi-function current differencing cascaded transconductance amplifier (MCDCTA) and its application to current-mode multiphase sinusoidal oscillator. Wirel. Pers. Commun. 2016, 86, 367–383.

- Jin, J. Resonant amplifier-based sub-harmonic mixer for zero-IF transceiver applications. Integration 2017, 57, 69–73.

- Peng, S.; Gao, R.; Zheng, W.; Lei, K. Adaptive Algorithms for Bayesian Spectrum Sensing Based on Markov Model. KSII Trans. Internet Inf. Syst. (TIIS) 2018, 12, 3095–3111.

- Peng, S.; Zheng, W.; Gao, R.; Lei, K. Fast cooperative energy detection under accuracy constraints in cognitive radio networks. Wirel. Commun. Mob. Comput. 2017, 2017, 3984529.

- Liu, H.; Xie, L.; Liu, J.; Ding, L. Application of butterfly Clos-network in network-on-Chip. Sci. World J. 2014, 2014, 102651.

- Yu, Y.; Wang, D.; Faisal, M.; Jabeen, F.; Johar, S. Decision support system for evaluating the role of music in network-based game for sustaining effectiveness. Soft Comput. 2022, 26, 10775–10788.

- Xiang, Z.; Guo, Y. Controlling Melody Structures in Automatic Game Soundtrack Compositions With Adversarial Learning Guided Gaussian Mixture Models. IEEE Trans. Games 2021, 13, 193–204.

- Xiang, Z.; Xiang, C.; Li, T.; Guo, Y. A self-adapting hierarchical actions and structures joint optimization framework for automatic design of robotic and animation skeletons. Soft Comput. 2021, 25, 263–276.

- Qin, Z.; Tang, Y.; Tang, F.; Xiao, J.; Huang, C.; Xu, H. Efficient XML query and update processing using a novel prime-based middle fraction labeling scheme. China Commun. 2017, 14, 145–157.

- Sun, L.; Mo, Z.; Yan, F.; Xia, L.; Shan, F.; Ding, Z.; Song, B.; Gao, W.; Shao, W.; Shi, F.; et al. Adaptive Feature Selection Guided Deep Forest for COVID-19 Classification With Chest CT. IEEE J. Biomed. Health Inform. 2020, 24, 2798–2805.

- Li, Y.; Yang, G.; Zhu, Y.; Ding, X.; Gong, R. Probability model-based early Merge mode decision for dependent views in 3D-HEVC. ACM Trans. Multimed. Comput. Commun. Appl. 2018, 14, 1–15.

- Yang, F.; Chen, K.; Yu, B.; Fang, D. A relaxed fixed point method for a mean curvature-based denoising model. Optim. Methods Softw. 2014, 29, 274–285.

- Osamy, W.; El-Sawy, A.A.; Salim, A. CSOCA: Chicken swarm optimization based clustering algorithm for wireless sensor networks. IEEE Access 2020, 8, 60676–60688.

- Cekmez, U.; Ozsiginan, M.; Sahingoz, O.K. Multi colony ant optimization for UAV path planning with obstacle avoidance. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 47–52.

- Wai, R.J.; Prasetia, A.S. Adaptive neural network control and optimal path planning of UAV surveillance system with energy consumption prediction. IEEE Access 2019, 7, 126137–126153.

- Tsai, S.H.; Chen, Y.W. A novel fuzzy identification method based on ant colony optimization algorithm. IEEE Access 2016, 4, 3747–3756.

- Zhou, J.; Wang, C.; Li, Y.; Wang, P.; Li, C.; Lu, P.; Mo, L. A multi-objective multi-population ant colony optimization for economic emission dispatch considering power system security. Appl. Math. Model. 2017, 45, 684–704.

- Zhang, X.; Chen, X.; He, Z. An ACO-based algorithm for parameter optimization of support vector machines. Expert Syst. Appl. 2010, 37, 6618–6628.

- Qamhan, A.A.; Ahmed, A.; Al-Harkan, I.M.; Badwelan, A.; Al-Samhan, A.M.; Hidri, L. An exact method and ant colony optimization for single machine scheduling problem with time window periodic maintenance. IEEE Access 2020, 8, 44836–44845.

- Zhou, Y.; Li, W.; Wang, X.; Qiu, Y.; Shen, W. Adaptive gradient descent enabled ant colony optimization for routing problems. Swarm Evol. Comput. 2022, 70, 101046.

- Zhao, D.; Liu, L.; Yu, F.; Heidari, A.A.; Wang, M.; Oliva, D.; Muhammad, K.; Chen, H. Ant colony optimization with horizontal and vertical crossover search: Fundamental visions for multi-threshold image segmentation. Expert Syst. Appl. 2021, 167, 114122.

- Ejigu, D.A.; Liu, X. Gradient descent-particle swarm optimization based deep neural network predictive control of pressurized water reactor power. Prog. Nucl. Energy 2022, 145, 104108.

- Papazoglou, G.; Biskas, P. Review and comparison of genetic algorithm and particle swarm optimization in the optimal power flow problem. Energies 2023, 16, 1152.

- Tiwari, S.; Kumar, A. Advances and bibliographic analysis of particle swarm optimization applications in electrical power system: Concepts and variants. Evol. Intell. 2023, 16, 23–47.

- Souza, D.A.; Batista, J.G.; dos Reis, L.L.; Júnior, A.B. PID controller with novel PSO applied to a joint of a robotic manipulator. J. Braz. Soc. Mech. Sci. Eng. 2021, 43, 377.

- Abbas, M.; Alshehri, M.A.; Barnawi, A.B. Potential Contribution of the Grey Wolf Optimization Algorithm in Reducing Active Power Losses in Electrical Power Systems. Appl. Sci. 2022, 12, 6177.

- Abasi, A.K.; Aloqaily, M.; Guizani, M. Grey wolf optimizer for reducing communication cost of federated learning. In Proceedings of the GLOBECOM 2022-2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 1049–1054.

- Li, Y.; Lin, X.; Liu, J. An improved gray wolf optimization algorithm to solve engineering problems. Sustainability 2021, 13, 3208.

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858.

- Husnain, G.; Anwar, S. An intelligent cluster optimization algorithm based on Whale Optimization Algorithm for VANETs (WOACNET). PLoS ONE 2021, 16, e0250271.

- Zhang, Z.; Yang, J. A discrete cuckoo search algorithm for traveling salesman problem and its application in cutting path optimization. Comput. Ind. Eng. 2022, 169, 108157.

- Zhang, L.; Yu, Y.; Luo, Y.; Zhang, S. Improved cuckoo search algorithm and its application to permutation flow shop scheduling problem. J. Algorithms Comput. Technol. 2020, 14, 1748302620962403.

- Harshavardhan, A.; Boyapati, P.; Neelakandan, S.; Abdul-Rasheed Akeji, A.A.; Singh Pundir, A.K.; Walia, R. LSGDM with biogeography-based optimization (BBO) model for healthcare applications. J. Healthc. Eng. 2022, 2022, 2170839.

- Zhang, X.; Wen, S.; Wang, D. Multi-population biogeography-based optimization algorithm and its application to image segmentation. Appl. Soft Comput. 2022, 124, 109005.

- Albashish, D.; Hammouri, A.I.; Braik, M.; Atwan, J.; Sahran, S. Binary biogeography-based optimization based SVM-RFE for feature selection. Appl. Soft Comput. 2021, 101, 107026.

- Zhang, Y.; Gu, X. Biogeography-based optimization algorithm for large-scale multistage batch plant scheduling. Expert Syst. Appl. 2020, 162, 113776.

- Lalljith, S.; Fleming, I.; Pillay, U.; Naicker, K.; Naidoo, Z.J.; Saha, A.K. Applications of flower pollination algorithm in electrical power systems: A review. IEEE Access 2021, 10, 8924–8947.

- Ong, K.M.; Ong, P.; Sia, C.K. A new flower pollination algorithm with improved convergence and its application to engineering optimization. Decis. Anal. J. 2022, 5, 100144.

- Subashini, S.; Mathiyalagan, P. A cross layer design and flower pollination optimization algorithm for secured energy efficient framework in wireless sensor network. Wirel. Pers. Commun. 2020, 112, 1601–1628.

- Kumari, G.V.; Rao, G.S.; Rao, B.P. Flower pollination-based K-means algorithm for medical image compression. Int. J. Adv. Intell. Paradig. 2021, 18, 171–192.

- Alyasseri, Z.A.A.; Khader, A.T.; Al-Betar, M.A.; Yang, X.S.; Mohammed, M.A.; Abdulkareem, K.H.; Kadry, S.; Razzak, I. Multi-objective flower pollination algorithm: A new technique for EEG signal denoising. Neural Comput. Appl. 2022, 11, 7943–7962.

- Shen, X.; Wu, Y.; Li, L.; Zhang, T. A modified adaptive beluga whale optimization based on spiral search and elitist strategy for short-term hydrothermal scheduling. Electr. Power Syst. Res. 2024, 228, 110051.

- Omar, M.B.; Bingi, K.; Prusty, B.R.; Ibrahim, R. Recent advances and applications of spiral dynamics optimization algorithm: A review. Fractal Fract. 2022, 6, 27.

- Ekinci, S.; Izci, D.; Al Nasar, M.R.; Abu Zitar, R.; Abualigah, L. Logarithmic spiral search based arithmetic optimization algorithm with selective mechanism and its application to functional electrical stimulation system control. Soft Comput. 2022, 26, 12257–12269.

- Nonita, S.; Xalikovich, P.A.; Kumar, C.R.; Rakhra, M.; Samori, I.A.; Maquera, Y.M.; Gonzáles, J.L.A. Intelligent water drops algorithm-based aggregation in heterogeneous wireless sensor network. J. Sensors 2022, 2022, e6099330.

- Kaur, S.; Chaudhary, G.; Dinesh Kumar, J.; Pillai, M.S.; Gupta, Y.; Khari, M.; García-Díaz, V.; Parra Fuente, J. Optimizing Fast Fourier Transform (FFT) Image Compression Using Intelligent Water Drop (IWD) Algorithm. 2022. Available online: https://reunir.unir.net/handle/123456789/13930 (accessed on 3 November 2021).

- Gao, B.; Hu, X.; Peng, Z.; Song, Y. Application of intelligent water drop algorithm in process planning optimization. Int. J. Adv. Manuf. Technol. 2020, 106, 5199–5211.

- Kowalski, P.A.; Łukasik, S.; Charytanowicz, M.; Kulczycki, P. Optimizing clustering with cuttlefish algorithm. In Information Technology, Systems Research, and Computational Physics; Springer: Berlin/Heidelberg, Germany, 2020; pp. 34–43.

- Joshi, P.; Gavel, S.; Raghuvanshi, A. Developed Optimized Routing Based on Modified LEACH and Cuttlefish Optimization Approach for Energy-Efficient Wireless Sensor Networks. In Microelectronics, Communication Systems, Machine Learning and Internet of Things: Select Proceedings of MCMI 2020; Springer: Berlin/Heidelberg, Germany, 2022; pp. 29–39.

| Algorithm | Applications |
|---|---|
| Ant colony optimization (ACO) | Scheduling problem [163] |
| | Routing problem [164] |
| | Image processing [165] |
| Particle swarm optimization (PSO) | Neural network training [166] |
| | Power system [167,168] |
| | Robots [169] |
| Gray wolf optimizer (GWO) | Electrical engineering [170] |
| | Communication [171] |
| | Mechanical engineering [172] |
| Whale optimization algorithm (WOA) | Feature selection [173] |
| | Data clustering [174] |
| Cuckoo search (CS) | Path optimization [175] |
| | Scheduling problem [176] |
| Biogeography-based optimization (BBO) | Healthcare [177] |
| | Image segmentation [178] |
| | Feature selection [179] |
| | Scheduling problem [180] |
| Flower pollination algorithm (FPA) | Electrical power systems [181] |
| | Engineering optimization [182] |
| | Wireless and network domain [183] |
| | Signal and image processing [184,185] |
| Spiral optimization algorithm (SOA) | Scheduling problem [186] |
| | Path optimization [187] |
| | Electrical system [188] |
| Intelligent water drop (IWD) | Wireless sensor network [189] |
| | Image processing [190] |
| | Path optimization [191] |
| Cuttlefish optimization (CFO) | Data clustering [192] |
| | Signal processing [193] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, H.; Liao, B.; Li, J.; Li, S. A Survey on Biomimetic and Intelligent Algorithms with Applications. Biomimetics 2024, 9, 453. https://doi.org/10.3390/biomimetics9080453
