Abstract

Many argue that the standard understanding of the second law of thermodynamics combined with the supposition, backed by recent scientific evidence, that the future is infinite entails that one is, most likely, a momentary Boltzmann brain that will quickly disintegrate into the cosmos. The argument is as follows: (1) Given infinite time, the universe will eventually reach thermodynamic equilibrium; (2) once there, every possible fluctuation away from equilibrium, no matter how improbable, will recur, ad infinitum; (3) those fluctuations that create stable, long-lived creatures, such as we take ourselves to be, will be extremely rare compared to those that create short-lived brains that mistakenly think they are ordinary human beings; hence, by statistical reasoning, (4) one is, with overwhelming probability, just a fleeting instantiation of experience. I argue that this reasoning is invalid since it rests on an error regarding the relationship between infinite sets and their subsets. Once this error is eliminated, the power of the argument fades, and the evidence that we are ordinary human beings becomes decisive. Surprisingly, I find that the best argument for the Boltzmann brain hypothesis requires the assumption that the future is very long but finite.

1. Introduction

It has been argued that the accepted understanding of the second law of thermodynamics combined with the supposition, backed by recent scientific evidence, that the future is infinite entails that one is, most likely, a momentary Boltzmann brain that will quickly disintegrate into the cosmos. The argument is that, given infinite time, the universe will eventually reach thermodynamic equilibrium and that, once there, every possible fluctuation away from equilibrium, no matter how improbable, will recur, ad infinitum. The fluctuations that create stable, long-lived creatures, such as we take ourselves to be, will be extremely rare compared to those that create short-lived brains that mistakenly think they are ordinary human beings. The conclusion, by statistical reasoning, is that one is, most likely, just a fleeting instantiation of experience. In what follows, I argue that this reasoning is invalid since it rests on an error regarding the relationship between infinite sets and their many possible, infinite subsets. Once this error is eliminated, the power of the argument fades, and the evidence that we are ordinary human beings becomes decisive. I conclude with some speculative reasons to believe that the only valid argument for the Boltzmann brain hypothesis requires the assumption that the future is very long but finite.

2. The Boltzmann Brain Argument

Why does the universe become more disordered with time? That is, why does entropy increase rather than decrease toward the future? Since the late 19th century, the accepted explanation is due to Ludwig Boltzmann ([1]), according to whom entropy increase is a statistical phenomenon, as follows. Any closed system, including the universe in its entirety, that finds itself in an ordered state of low entropy, is in fact in a state whose micro-components, such as fundamental particles, are in a relatively low probability configuration; in other words, high order corresponds to low probability. This means that any natural evolution of the system will, most likely, result in a more probable state, which will naturally evolve into an even higher probability state, and so on, until a state of maximum probability is reached. Since higher probability corresponds to greater entropy, systems will naturally break down over time ([2], pp. 59–76).

To imagine this, suppose that you have a bag of marbles, with red ones neatly resting on top of green. Any natural disturbance, such as the bag rolling down a hill, will tend to mix the colors. If the bag reaches an evenly distributed state, then any further jostling will fail to re-separate the marbles, not because this is logically impossible but because it is extremely improbable; there are vastly many more configurations of the marbles that correspond to disordered macro-states, such as ‘red and green marbles evenly mixed’, than separated ones, so chance will most likely not move things from the former to the latter.1

Let us consider a simpler but more precise example. Imagine a spatial volume, such as a room, that contains four gas molecules, M(a–d), randomly moving. We shall consider the distribution of these molecules between the left (L) and right (R) sides of the room. A specification of the position (side of the room) of each molecule is a description of the micro-state of the gas in the room. There are $2^4 = 16$ such micro-states and they give rise to five possible macro-states: (1) two molecules on each side of the room; (2) one molecule on the left, three on the right; (3) three molecules on the left, one on the right; (4) all molecules on the left; and (5) all molecules on the right. This is summarized in the following Table 1:

Table 1. Probability and Entropy.

Notice that the most common macro-state, involving 6/16 possible micro-states, corresponds to evenly distributed molecules, which is also the most disordered or randomized distribution. The two most ordered distributions, those with all the molecules concentrated on one side of the room, occur with the lowest frequency, 1/16 each. The two states between the extremes, three on one side and one on the other, each occur with an intermediate frequency of 4/16. Hence, no matter what the initial state of the system, random motion or change will most likely result in a state of high entropy, since natural redistribution of molecules can be viewed as a random selection of a row from Table 1. If we consider models with a large number of molecules, then the percentage of micro-states that correspond to evenly or nearly evenly mixed, maximally disordered macro-states greatly increases. Indeed, in any physically realistic scenario, with trillions of molecules, the relative frequency of micro-states corresponding to highly ordered macro-states is minuscule ([4]).
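The frequencies quoted for the four-molecule room can be checked by brute force. The following is a minimal sketch rather than part of the original argument: it enumerates every left/right assignment and tallies the macro-states. The function names `macrostate_counts` and `near_even_fraction`, and the 10% tolerance used to define a "nearly even" split, are illustrative assumptions.

```python
from collections import Counter
from itertools import product
from math import comb


def macrostate_counts(n_molecules):
    """Tally micro-states by macro-state (number of molecules on the left)."""
    counts = Counter()
    # Each micro-state assigns every molecule to the left (L) or right (R) side.
    for microstate in product("LR", repeat=n_molecules):
        counts[microstate.count("L")] += 1
    return dict(counts)


def near_even_fraction(n_molecules, tolerance=0.1):
    """Fraction of micro-states whose left-side count is within
    tolerance * n_molecules of an even split (assumed definition of 'nearly even')."""
    favourable = sum(
        comb(n_molecules, k)
        for k in range(n_molecules + 1)
        if abs(k - n_molecules / 2) <= tolerance * n_molecules
    )
    return favourable / 2 ** n_molecules


counts = macrostate_counts(4)
total = sum(counts.values())
for left in sorted(counts):
    print(f"{left} left, {4 - left} right: {counts[left]}/{total}")
# 0 left, 4 right: 1/16
# 1 left, 3 right: 4/16
# 2 left, 2 right: 6/16
# 3 left, 1 right: 4/16
# 4 left, 0 right: 1/16

# The share of nearly even micro-states grows with the number of molecules.
for n in (4, 10, 100, 1000):
    print(n, near_even_fraction(n))
```

On this definition, the nearly even share rises from 6/16 for four molecules toward one as the molecule count grows, which is the sense in which ordered macro-states become vanishingly rare.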

What this tells us is that if a system is in a low entropy macro-state, such as all molecules being on one side of a room, then random motions will push the room toward a high entropy macro-state as a simple matter of probability. In the simple system represented in Table 1, there is a small number of molecules, so a small number of states. This means that even if the system is in the least probable state, it will take only a short time, i.e., a few random motions, for the system to reach the most probable state. On the other hand, any system that has very many molecules and is in a low probability state will most likely take a long period of time to reach maximum entropy, since more changes of position will be required to move from low-frequency to high-frequency configurations. This is because molecules do not all change at once when motion is natural and uncoordinated. Nonetheless, chance redistribution of micro-components will eventually result in one of the most probable macro-states.
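The dependence of relaxation time on system size can be illustrated with a toy simulation. This is a hedged sketch only: it assumes an Ehrenfest-style urn model, which the text does not name, in which one randomly chosen molecule switches sides at each step. The function name `steps_to_even_split` and the fixed seed are hypothetical choices made for reproducibility.

```python
import random


def steps_to_even_split(n_molecules, seed=0, max_steps=10_000_000):
    """Count single-molecule moves needed to go from 'all on the left'
    to an even split, under the assumed Ehrenfest-style dynamics."""
    rng = random.Random(seed)
    on_left = n_molecules          # lowest-entropy start: everything on the left
    target = n_molecules // 2      # evenly mixed macro-state
    steps = 0
    while on_left > target and steps < max_steps:
        # Pick one molecule uniformly at random; it sits on the left with
        # probability on_left / n_molecules, and it then switches sides.
        if rng.randrange(n_molecules) < on_left:
            on_left -= 1
        else:
            on_left += 1
        steps += 1
    return steps


for n in (4, 100, 1000):
    print(n, steps_to_even_split(n))
```

Because only one molecule moves per step, a system of n molecules needs at least n/2 moves to mix, so larger systems necessarily take longer, while the four-molecule room typically mixes within a handful of moves.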

This means that, if the Big Bang is the first moment of time, then we may conclude that the universe began in a very improbable state. This is because entropy has been, overall, rising ever since, and matter 2 remains, to this day, very, very far from evenly mixed. All of this suggests a very ordered, low-probability starting point because 14 billion years of natural perturbation have failed to push things close to the state of maximum probability. Put differently, the Big Bang is the most improbable state that the universe has ever occupied.

How improbable? Roger Penrose ([3] pp. 726–732) considers the phase space that describes all possible values of position (x, y, z) and momentum (one for each spatial dimension) for each particle in a spatially finite universe composed of $10^{80}$ baryons, which is roughly the number that we believe the observable universe contains. For $10^{80}$ particles, therefore, the phase space has $6 \times 10^{80}$ dimensions. He estimates that, of the volume of all values of entropy definable on such a space, only one part in corresponds to a value at the Big Bang that is compatible with conditions observed in the universe today, such as the relatively organized structures that make life possible. He concludes that this suggests an ‘extraordinarily special Big Bang’ ([3] p. 726; see, also, [5] p. 36).

As Michael Huemer ([6]) points out, this casts legitimate doubt on the Big Bang hypothesis. So, he opts for an eternal model in which the universe is temporally infinite in both past and future directions, removing the need to posit a beginning of any sort, improbable or otherwise. Even the standard Big Bang theory, however, suggests that time will continue without end,3 so both current cosmology and Huemer’s model agree on the future: it is infinite in duration. So, it is worth considering the implications of the assumption that once the entropy of the universe reaches maximum, then it will persist forever in a condition of permanent disorder save for natural, random perturbation. We must recall that, however improbable it is for some matter to randomly fluctuate out of a state of maximal disorder into one of relative order, it is not impossible. Occasionally, though perhaps extraordinarily rarely, an unlikely, chance vacillation away from maximum entropy toward greater order will occur. Given an infinite future, then, it is a simple matter of probability that every possible material configuration will infinitely recur. The more improbable the configuration, the rarer it will be, but it will still repeat ad infinitum.

Accordingly, we may, given our assumption, conclude that, infinitely often, some matter will reconfigure itself, randomly, into a state that is an exact qualitative duplicate of, e.g., your brain, as it is right now. Most such reconfigurations, known as Boltzmann brains, will be momentary, but they will nonetheless duplicate the entirety of your brain structure at this moment. So, the temporary brain will, during its brief existence, be in a state that includes a range of apparent memories, anticipations, feelings, plans, and so on. It will quickly decay back into a more probable, disordered configuration, but for that short time, the Boltzmann brain thinks it is you, with a full past, an upcoming future, friends, family, and so on.

Randomly formed Boltzmann brains that are longer-lived are far less probable than momentary ones because long lives involve a larger fluctuation away from maximum toward minimum probability. In other words, the longer a brain persists before dissipating, the lower its initial entropy had to be, which is why it takes a relatively long time for it to naturally decay. Accordingly, of the infinitely many brains that exist in infinite time, most are near-instantaneous fluctuations; only a tiny minority are long-lived, and fewer still are long-lived and housed in full bodies on the surface of a long-lived planet:

The most likely fluctuation consistent with everything you know is simply your brain (complete with “memories” of the Deep Hubble fields, WMAP data, etc.) fluctuating briefly out of chaos and then immediately equilibrating back into chaos again. ([9] p. 063528-5.)

Any given observer is a collection of particles in some particular state, and that state will occur infinitely often, and the number of times it will be surrounded by high-entropy chaos will be enormously higher than the number of times it will arise as part of an “ordinary” universe. ([10] p. 233.)

We are led by statistical reasoning to the conclusion that, of all conscious experiences in the universe, most, by far, are to be found in momentary Boltzmann brains that erroneously believe they are long-lived human beings. Accordingly, it is most probable that anyone having any experience at all is a momentary instance of order, alone in the universe, about to dissipate into disorder.

3. The Argument and the Countably Infinite

Some believe that the argument for the Boltzmann brain hypothesis is unstable:

How do we learn that most minds like mine are BBs [Boltzmann brains]? We learn this only via acquiring a huge batch of scientific evidence. That batch includes in it lots that says we’re in ordinary human bodies, on ordinary earth, which has existed and circled the sun for billions of years, and so on and so on… on our total evidence, it’s not rational to make the statistical inference [that most experiences are housed in Boltzmann Brains, so that is probably what one is]. ([11] p. 3719; original emphasis.)

In other words, given all our evidence, the belief that one is a Boltzmann brain is self-undermining, standing in conflict with the evidence used to justify it.4

While I think this response is on the right track, I do not think that it is convincing as presented in the literature. This is because the Boltzmann brain hypothesis is taken, by its proponents, to rest primarily on theoretical considerations. That is, the argument appeals to the content of Boltzmann’s statistical explanation of the second law of thermodynamics and the mathematical laws of probability to conclude that in any material universe like the one we take ourselves to inhabit, there are very many more Boltzmann brains with experiences as if ordinary brains in ordinary bodies on an ordinary Earth (‘ordinary brains’ hereafter) than there are the latter:

If you think that you can be pretty sure that you are not one of these Boltzmann brains, then you haven’t understood what’s being proposed… If Boltzmann brains can have the same kinds of experience as you or I, and brains of this sort vastly outnumber ordinary human beings, then the conclusion is obvious. ([14] p. 103; original emphasis.)

In other words, the proponents will insist that the large portions of our total evidence that suggest we are ordinary human beings must, upon reflection, be reframed as misleading, much as our ordinary experience of time must be taken as misleading upon learning of Relativity Theory ([15]). No amount of ordinary experience with activities such as making doctor’s appointments, timing track and field events, or planning trips to the grocery store can refute Einstein’s theory, and this is due to the latter’s theoretical power and confirmation in a relatively small but important number of experimental contexts.5 Accordingly, that our total evidence, theoretical plus empirical, is unstable does not tell us which way things should be adjusted because the quality of the theoretical considerations may be taken, with a great deal of justification, to outweigh the quantity of ordinary experience that points in the opposite direction, especially when the theory suggests that there is, as a percentage of the whole, vastly less ordinary experience and evidence than we think.

Though this is correct, the situation is not, in fact, a standoff because there is a problem with the Boltzmann brain argument that undermines its theoretical power. Uncovering it will allow us to see why philosophers such as Dogramaci are, ultimately, correct. To make it easier to see the difficulty, I will first lay out the Boltzmann brain argument explicitly:

| | |
|---|---|
| 1. The universe is temporally infinite, at least toward the future. | Assumption |
| 2. The statistical explanation of entropy increase is correct. | Assumption |
| 3. The universe will eventually reach thermodynamic equilibrium. | (1), (2) |
| 4. By chance, every physically possible fluctuation away from equilibrium infinitely recurs. | (1)–(3) |
| 5. Some random fluctuations result in experiencing brains. | (2)–(4) |
| 6. Infinitely many experiencing brains will be created. | (4), (5) |
| 7. Short-lived fluctuations away from equilibrium are most probable. | (2) |
| Therefore, | |
| 8. Most brains are short-lived Boltzmann brains. | (6), (7) |
| 9. One is most likely a Boltzmann brain. | (8) |

Two comments on the argument are in order before proceeding to its analysis.

First, notice that premise (3) is potentially a controversial proposition because there is no guarantee that the universe will reach thermodynamic equilibrium. For example, the large-scale impact of gravity might be to push the universe away from a ‘smooth’, uniform distribution and toward a non-equilibrium state. If so, then we have reason to doubt the soundness of the argument on empirical grounds. Since, however, my aim is to examine the reasoning behind the argument, I want to consider the strongest possible version of it by granting the premises as presented by its defenders. Even if a premise is false for empirical reasons, I believe it remains of interest to reveal a flaw in the argument, simply because any empirical claim can be overturned by future evidence. A premise that is seen as false at one time might turn out to be true, in which case it remains important to be aware of any errors in the structure of the argument itself.6

Secondly, there is the question as to whether we should view the start of any evolutionary process that ultimately leads to a normal, human-like brain as a chance event or as something that is determined, or at least rendered relatively probable, by earlier conditions. I do not think we have any clear reason to believe that evolution had to occur, or that the beginning of life is anything more than the result of a chance occurrence (though, perhaps, given enough time, the odds are such that conscious life will eventually arise on planets like the Earth). Hence, even though there is a difference between a momentary brain coming into existence by quantum fluctuation in a vacuum, on the one hand, and evolution by natural selection on the other, it still makes sense to view the brains produced in both cases as, ultimately, deriving from a chance, physical occurrence or set of occurrences simply because the conditions that allow for evolution to occur are the result of lawlike but non-teleological material processes. In other words, in infinite time, there will be infinitely many moments available for chance to produce a short-lived Boltzmann brain, but also infinitely many moments for chance to initiate the lengthy process of forming a planet suitable to host evolution by natural selection. Accordingly, we can treat both kinds of brains as the result of chance processes.7

Returning, now, to the argument outlined above, let us, accordingly, grant all its assumptions. It remains the case that the conclusion does not follow. This is because, even though the random fluctuations leading to Boltzmann brains have a much higher probability of occurrence than the processes that lead to ordinary brains, in infinite time, the products of these two kinds of fluctuations do not differ in number. That is, even given (1)–(7), there are, in infinite time, just as many ordinary brains as there are Boltzmann brains. Let us examine why this is the case.

Suppose for the sake of argument that the probability, m, of a Boltzmann brain forming by random shuffling is one trillion times greater than the probability, n, of an ordinary brain forming by an evolutionary process. That is, $m = n \times 10^{12}$. Accordingly, if we were to select any long but finite portion of time, say $10^{100}$ years, there would be $10^{12}$ Boltzmann brains for each ordinary one. If, however, we consider the infinite set of all experiencing brains, Boltzmann or ordinary, that are randomly produced in infinite time, then there will be equally many of each.

To see why, consider the set of natural numbers, N = {1, 2, 3, …}, which, since it is an infinitely large set, will represent the set of all Boltzmann brains randomly produced in infinite time. Next, consider the subset NT = {1T, 2T, 3T, …}, which selects every trillionth member of N; this represents the ordinary brains. The elements of these two sets stand in a one-to-one correspondence to each other:

N: 1 2 3 …

NT: 1T 2T 3T …

No matter how long we continue the lists, N will never outpace NT. Accordingly, the sets are equal in number (have the same cardinality). It remains the case, however, that if we were to count every member of N, starting at 1, performing one operation per second, then it would take 31,688 years before we reached the first member of NT. This might make it seem as though there are vastly more members of N than of NT, but that is not the case. There are exactly as many in one set as in the other.
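The pairing between N and NT, and the contrast with any finite stretch of the count, can be made concrete. The sketch below is illustrative only: the names `to_NT`, `from_NT`, and `count_in_window` are hypothetical, and the year length of 365.25 days is an assumption used to reproduce the counting-time figure.

```python
TRILLION = 10 ** 12
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumed year length of 365.25 days


def to_NT(k):
    """Pair the k-th member of N with the k-th member of NT (k trillion)."""
    return k * TRILLION


def from_NT(member):
    """Inverse pairing: recover k from the NT member k trillion."""
    if member % TRILLION != 0:
        raise ValueError("not a member of NT")
    return member // TRILLION


def count_in_window(limit):
    """How many members of N and of NT are less than or equal to limit."""
    return limit, limit // TRILLION


# The pairing is one-to-one: every k has exactly one partner, and vice versa.
assert all(from_NT(to_NT(k)) == k for k in range(1, 1000))

# In any finite window, members of N outnumber members of NT a trillion to one.
print(count_in_window(5 * TRILLION))   # (5000000000000, 5)

# Counting one number per second, years needed to reach the first member of NT.
print(int(TRILLION / SECONDS_PER_YEAR))  # 31688
```

The finite-window ratio and the one-to-one pairing are both correct; they answer different questions, and only the pairing bears on the size of the completed infinite sets.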

We may, therefore, suppose that, upon reaching thermodynamic equilibrium, the universe randomly fluctuates forever such that, on average, one trillion, or one trillion trillion, or one trillion trillion trillion, etc., short-lived Boltzmann brains are produced before one much more unlikely ordinary brain occurs. While it may seem as though this entails that the overwhelming majority of experiencing brains will be the short-lived ones, that is not correct. It is not as though infinite time will produce infinitely many Boltzmann brains but only finitely many ordinary ones, even if the latter are far less probable than the former. On the contrary, no matter how small n is, there will be infinitely many ordinary brains.8 So, the set of randomly formed ordinary brains and the set of randomly formed Boltzmann brains have the same cardinality, and (8) does not follow from (1) to (7).

4. The Argument and the Non-Countably Infinite

The foregoing argument assumes that, in an unending universe, the number of brains, either Boltzmann or ordinary, will be countably infinite. It is, however, possible that time is continuous, in which case it would be inappropriate to think of it on analogy with the set of natural numbers. Instead, we would have to conceive of time as like the real number line, such that between any two moments there are infinitely many more. Since the cardinality of the real numbers, R, is $2^N$, which is infinitely larger than N ([17] pp. 32–34), they are uncountable, i.e., they outstrip the natural numbers. So, in continuous infinite time, it might be the case that $2^N$-many Boltzmann brains are produced, rather than N-many.

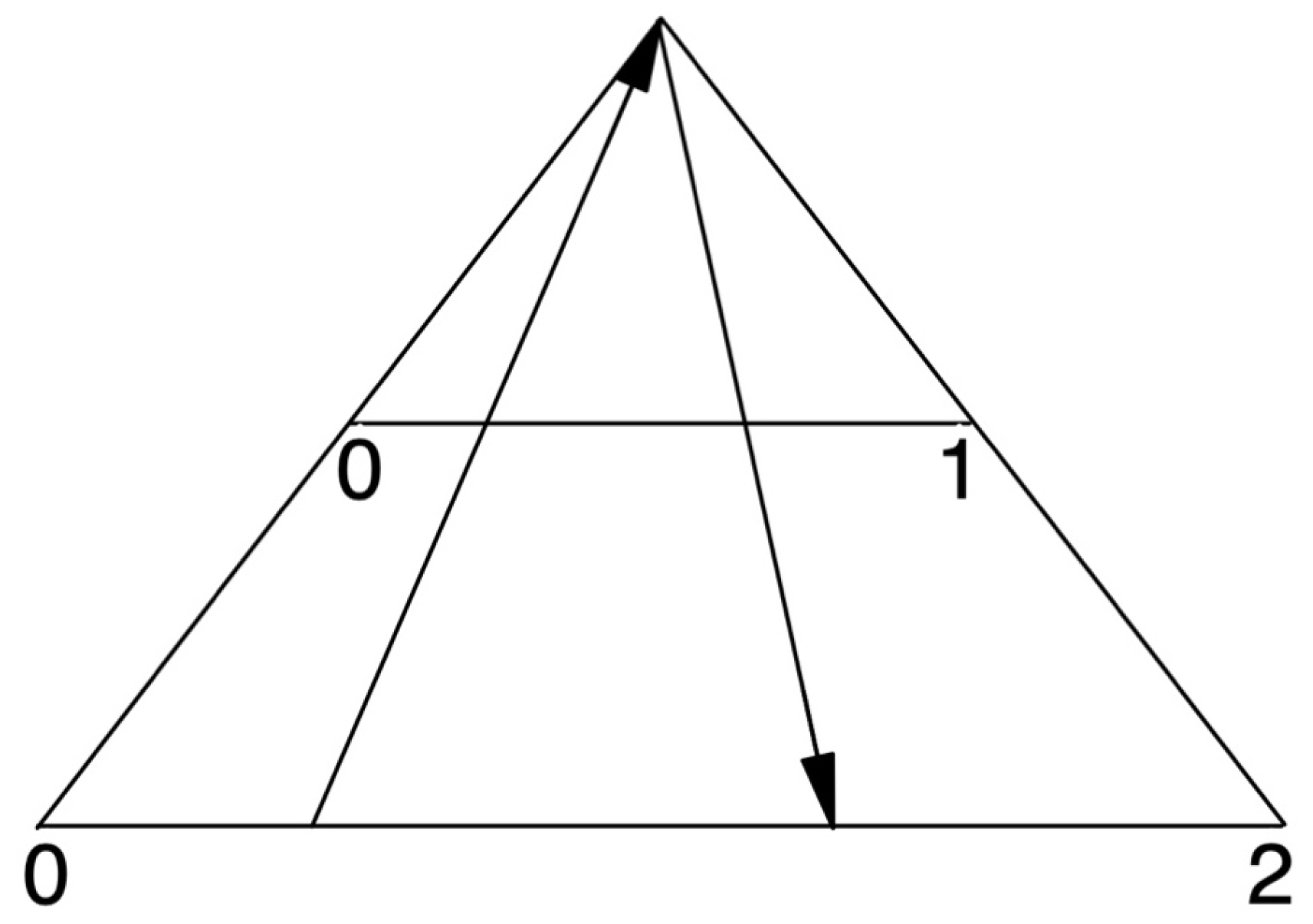

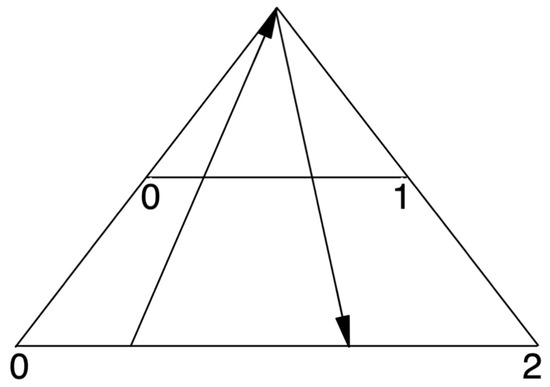

To see why this is plausible, consider Figure 1, which compares two lines, one double the length of the other, and embeds them in a triangle:

Figure 1. Comparing Uncountable Infinities.

Let us assume that the lines, 0–1 and 0–2, are lengths embedded in a continuous space, so each is analogous to a real number line and contains an uncountable infinity of points. Imagine that we draw a line from the top of the triangle to each point in the longer line. This drawn line will intersect the shorter line at exactly one point. At the same time, any such drawn line can be read in reverse, i.e., as running from each point on the second line to the top, passing through exactly one point on the first line. Hence, there is a one-to-one correspondence between the points on each line, and we may conclude that each line consists of a set of points with the same cardinality, $2^N$.

We could repeat this exercise as often as we like. We could, for example, replace the 0–2 line with a 0–3, 0–4, 0–5, …, line, and obtain the same result: a one-to-one correspondence between the points on each. Hence, the number of points in any continuous line is the same as in any other. To put it the other way around, any sliver, no matter how tiny, of the 0–1 line in Figure 1 will have as many points as the original. Similarly, the number of instants in any span of continuous time is the same as that in any other.
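The projection through the apex can be written out explicitly. The following sketch rests on assumed coordinates that are not given in the text: the long line runs from (0, 0) to (2, 0), the short line is the parallel segment from (0.5, 1) to (1.5, 1), and the apex sits at (1, 2). The function names are hypothetical, and floating-point numbers only sample the lines rather than exhaust them.

```python
import math

APEX = (1.0, 2.0)        # assumed apex of the triangle
SHORT_Y = 1.0            # assumed height of the short 0-1 line
SHORT_X0 = 0.5           # assumed x-coordinate where the short line starts


def long_to_short(t):
    """Send the point at position t in [0, 2] on the long line to its partner
    position in [0, 1] on the short line, along the ray to the apex."""
    ax, ay = APEX
    u = (ay - SHORT_Y) / ay            # fraction of the way from apex to base
    x = ax + u * (t - ax)              # x-coordinate where the ray meets the short line
    return x - SHORT_X0


def short_to_long(s):
    """Inverse map: extend the ray from the apex through position s in [0, 1]
    on the short line until it meets the long line."""
    ax, ay = APEX
    u = ay / (ay - SHORT_Y)            # stretch factor from short line to base
    return ax + u * ((SHORT_X0 + s) - ax)


for t in (0.0, 0.5, 1.0, 1.5, 2.0):
    s = long_to_short(t)
    # Each point on the long line has exactly one partner, and the round trip returns it.
    assert math.isclose(short_to_long(s), t, abs_tol=1e-12)
    print(t, "<->", s)
```

With these coordinates the map reduces to s = t/2, so distinct points on the long line always land on distinct points of the short one, and every point of the short line is hit.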

Accordingly, if time is continuously infinite, then there are $2^N$ potential locations of a randomly formed Boltzmann brain. Suppose that the probability of a Boltzmann brain forming at any instant is m. The number of Boltzmann brains is, therefore, $m \times 2^N = 2^N$, which is uncountably infinite, even though it is a subset of all moments of continuous time. This does not, however, resuscitate the Boltzmann brain argument because each moment of continuous time is equally the potential location of an ordinary brain; it is just that the probability, n, of such a brain existing at any moment is much lower than m. Still, no matter how small n is, so long as it is greater than zero, then $n \times 2^N = 2^N$ because any positive, finite number multiplied by $2^N$ is $2^N$. Accordingly, the assumption that time is infinitely continuous, and so contains uncountably many short-lived Boltzmann brains, gives us no reason to suppose that they outnumber the ordinary brains. Again, (8) does not follow from (1) to (7).

5. Instantaneous Boltzmann Brains

There is another possibility that we might wish to consider, namely, that in infinite continuous time, $2^N$ Boltzmann brains are produced but only N ordinary ones. If that were the case, then the existence of an infinite, continuous future would support the conclusion that one is most likely a Boltzmann brain since, as mentioned, $2^N$ is infinitely larger than N. Is such a situation plausible? I am not aware of any argument for it, but I can imagine one running as follows. If we assume that time is composed of $2^N$ instants, then each such moment is the possible location of an instantaneous brain, and in infinite time there will be $2^N$ of these, as argued above. On the other hand, enduring brains do not exist instantaneously but, rather, occupy finite spans of time. Each such span is discrete and countable. Hence, there are only countably many ordinary brains compared to uncountably many Boltzmann brains in infinite, continuous time.

The problem with this is that even if instantaneous Boltzmann brains are possible, any random fluctuation away from maximum entropy that produces such a brain must take some time; the fluctuation itself is not instantaneous. We may suppose, for example, that some matter undergoes random evolution from equilibrium to order and back again during an interval, $t_i$–$t_j$, and that only at an instant, $t_m$, with $t_i < t_m < t_j$, does a set of experiences exist, as a Boltzmann brain. Nevertheless, we cannot assume that the brain itself instantaneously forms at $t_m$. At least, not if the laws of nature are as we currently understand them to be (e.g., matter cannot accelerate past the speed of light).

Accordingly, the scenario under consideration will not resuscitate the Boltzmann brain hypothesis because, in infinite continuous time, each instant is the possible starting point of a random process that leads either to a long-lived ordinary brain or an instantaneous Boltzmann brain.9 If m is the probability that a given moment will be the start of a process leading to an instantaneous Boltzmann brain, and n is the probability that a given moment will be the start of a random process leading to an ordinary brain, then in infinite, continuous time there are $m \times 2^N$ of the former and $n \times 2^N$ of the latter. Since both m and n are finite, both products give the same result, $2^N$. It does not matter how much larger m is than n, so long as both are finite. In continuous infinite time, then, the cardinality of the set of ordinary brains is the same as that of the set of instantaneous Boltzmann brains. Once again, (8) does not follow from (1) to (7).

6. Probability Measure

A measure is a function that assigns a number to every element of a set, or domain, D, in accordance with the following principles: (a) D is closed under the complement operation, i.e., if a set, S, is in D, then so is not-S, the set of everything outside of S; (b) any countably many subsets of D are such that their union is also in D; and (c) any countably many subsets of D are such that their intersection is also in D (see, e.g., [18] pp. 3–4).

A probability measure, P, is a measure that satisfies three further axioms:

- (i.) Non-negativity: for any element, e, in D, $P(e) \geq 0$.

- (ii.) Additivity: for any countable collection of disjoint subsets of D, $\{e_i\}$, $P(e_1 + e_2 + \dots + e_i) = P(e_1) + P(e_2) + \dots + P(e_i)$.

- (iii.) Normality: $P(D) = 1$.

Informally, every element of the domain must have a non-negative probability, the probability of the sum of mutually exclusive events is the sum of their individual probabilities, and some element from the domain must occur (see, e.g., [19] p. 21).

In the Boltzmann brain argument, the relevant domain, D, is the set of times, $T = \{t_i\}$, and T cannot sustain a relevant, well-defined probability measure because it is an infinite set with infinitely many subsets, each of which has, according to the argument itself, an equal probability of containing a Boltzmann brain. This means that normality will be violated by any probability measure that assigns a non-zero probability to the subsets of T. This is because it is an assumption of the argument that, once equilibrium is reached, Boltzmann brains are formed only by the purely random shuffling of the material contents of the universe. Accordingly, any way of dividing T into subsets will have the result that every such subset is an equally probable candidate for the existence of a Boltzmann brain. Hence, we cannot assign any non-zero probability to the subsets of T without the sum of probabilities adding up to infinity. The situation is formally analogous to that of trying to assign a probability to a randomly selected positive integer or real number: there is no such measure because every number is equally likely to be selected, so if we assign any positive value to $P(n_i)$, the probability of selecting number $n_i$, then the sum of all the individual probabilities is infinite.
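The failure of normality can be stated in one line. The derivation below is a sketch under an added assumption, namely that T is divided into countably many disjoint cells $t_1, t_2, \dots$ and that each cell receives the same probability c; the symbol c is introduced here for illustration only.

```latex
% Assumption: T is partitioned into countably many disjoint cells t_1, t_2, ...,
% each assigned the same probability c >= 0 (requires amsmath for cases/text).
\[
P(T) \;=\; \sum_{i=1}^{\infty} P(t_i) \;=\; \sum_{i=1}^{\infty} c \;=\;
\begin{cases}
0 & \text{if } c = 0,\\[2pt]
\infty & \text{if } c > 0.
\end{cases}
\]
% In neither case does P(T) = 1, so countable additivity and
% equal weighting together rule out normality.
```

Either the equal weights are zero and the total is zero, or they are positive and the total diverges; no choice of c yields a total of one.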

Sometimes probabilities involving infinite domains can be tamed, i.e., normalized, by defining, over the domain, a function that converges on a finite value. For example, if the domain satisfies a normal distribution with a finite mean, then, because the graph of this distribution approaches zero on both sides, the total area under the graph converges to a finite value and can be set to one. The graph in Figure 2 represents a normal distribution of some measure, P, over an infinite set of times.

Figure 2. A Normal Distribution.

Even if the ‘tails’ of this graph infinitely extend to the left and right, the area under the curve is finite, and the total probability can be normalized to a value of one.

This, however, depends on the particulars of the function. In the case of a normal distribution, the function has the form $e^{-x^2}$, and the integral of this function, from negative to positive infinity, $\int_{-\infty}^{\infty} e^{-x^2}\,dx$, is finite. So, if $e^{-x^2}$ were the form of our probability measure, then we could assign every element along the time axis, T, a positive value for the probability of a Boltzmann brain existing then and still satisfy normality. In the Boltzmann brain argument, however, there is, by hypothesis, no function of this sort to define a measure on T. On the contrary, the assumption is that once the universe reaches thermodynamic equilibrium, then what remains is nothing but random motion among its persisting material contents, with nothing favoring one part of the timeline over any other, in terms of the probability of matter coalescing into a brain then. In short, there is no well-defined probability measure on T by the terms of the argument itself.
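The contrast between a convergent weighting and a flat one can be checked numerically. The sketch below is illustrative only: it takes the unnormalized Gaussian $e^{-x^2}$ as an assumed representative of a normal-type weighting, uses a simple midpoint rule, and introduces the hypothetical names `gaussian_area` and `flat_area` along with an arbitrary flat weight of one millionth.

```python
import math


def gaussian_area(half_width, steps=20_000):
    """Midpoint-rule estimate of the area under exp(-x**2) on [-half_width, half_width]."""
    width = 2 * half_width / steps
    total = 0.0
    for i in range(steps):
        x = -half_width + (i + 0.5) * width   # midpoint of the i-th slice
        total += math.exp(-x * x)
    return total * width


def flat_area(half_width, weight=1e-6):
    """Area under a constant (time-symmetric) weight on the same interval."""
    return 2 * half_width * weight


for half_width in (1, 10, 100, 1000):
    print(half_width, gaussian_area(half_width), flat_area(half_width))

# The Gaussian area settles near sqrt(pi) and can therefore be rescaled to one;
# the flat area keeps growing in proportion to the interval, however small the weight.
print(math.sqrt(math.pi))
```

A flat weighting is the numerical counterpart of the argument's assumption that nothing favors one part of the timeline over another, which is why it cannot be normalized over an unbounded domain.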

In light of this, it is worth pausing to consider an argument put forth by the physicist Don N. Page, who employs Bayes' rule to derive the conclusion that theories ‘in which Boltzmann brains strongly dominate over Ordinary Observers’ have ‘lower posterior probabilities’ than theories according to which ordinary observers dominate ([20] p. 7). His argument depends, however, on the assumption that

initially plausible Boltzmann Brain theories tend to have the number $N_{BB}$ of Boltzmann Brains enormously larger than the number $N_{OO}$ of Ordinary Observers, and even enormously larger than the number of Ordinary Observers multiplied by the number m of observations they each make during their lifetime (this m being in comparison with 1 observation assumed here for each Boltzmann Brain during its typically very short lifetime), so that $N_{BB}/(mN_{OO})$ ≫≫ 1 ([20] p. 6)

But if NBB and NOO are infinite, then no measure has been defined that would support the inequality that he mentions, namely, NBB/(mNOO) ≫≫ 1. The reason for this is that, as I have just argued, each moment of infinite time has an equivalent, so undefined, chance of being either the point at which random fluctuation produces a Boltzmann brain or the start of a process that results in a normal brain. Page takes it that the numbers involved are subject to a measure that makes it possible to estimate this ratio, and I have argued that this is incorrect in the context of the standard argument for the Boltzmann brain hypothesis. While Page’s argument might go through if NBB is a larger infinity than NOO (NBB >> NOO in a transfinite series), the paper does not provide an argument for this, nor does it address the question of how to compare different infinite cardinalities in the framework of Bayesian analysis, so I see no way to evaluate this suggestion with any degree of precision.10

In analyzing the probability that one is, right now, a Boltzmann brain, I have accepted the reasoning of the argument’s proponents, which, at least implicitly, acknowledges the lack of a relevant probability measure. What this means, I have concluded, is that the cardinalities of all the subsets of T that contain a Boltzmann brain and all the subsets that contain an ordinary one must be equally infinite. There is no well-defined sense, therefore, in which there is more of one than the other, given this argument. This does not mean that it is impossible for there to be a measure, defined on the infinitely many moments of time that are assumed in the Boltzmann brain argument, that comes to be scientifically accepted. My point here is that the defenders of the hypothesis assume that there is no such measure and that this is why the number of Boltzmann brains is infinite. Since I am analyzing the reasoning behind this argument, I want to grant its defenders the proposition that the empirical facts are consistent with their premises since I want to assess their strongest possible case. As I reject the hypothesis, I am happy to accept that empirical arguments might also count against it.11

7. Why the Evidence Counts Against Boltzmann Brains

We can now see precisely why our total evidence counts against the Boltzmann brain hypothesis. The theoretical considerations entail that, in any kind of temporally infinite universe, the cardinality of the set of Boltzmann brains is equal to that of the set of ordinary brains. Accordingly, we should assign equal prior probability to the Boltzmann brain and ordinary brain hypotheses. This means that any other evidence suggesting that we inhabit the ordinary case will tilt the balance of probabilities away from the Boltzmann brain conclusion and toward the ordinary one. As a matter of fact, we have a great deal of such evidence, namely, as Dogramaci suggests, all our ordinary experiences and memories, which plainly suggest that we are ordinary brain–body combinations, enduring through time, on Earth. This huge mass of experience (see also [21]) counts decisively against the Boltzmann brain hypothesis precisely because the theoretical considerations are a wash and provide no reason on their own to assign greater probability to the hypothesis than its negation. The epistemic situation is as if Einstein’s theory of relativity were to render absolute and relative time equally good theoretical posits, while all experience continues to point toward the absolute alternative; in such a case, accepting absolute time would be the right response. Accordingly, the conclusion that is supported, overall, given the assumption that the future is infinite, is that we are ordinary brains.12
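The structure of this reasoning can be sketched with Bayes' rule. The snippet below is only an illustration under stated assumptions: the prior of 0.5 stands in for the equal-prior claim made in this section, and the likelihood values used in the example are hypothetical placeholders, not estimates drawn from the text or from any cited source. With equal priors, the posterior odds simply equal the likelihood ratio, so any evidence that is more probable on the ordinary hypothesis favors it.

```python
def posterior_ordinary(prior_ordinary: float,
                       likelihood_ordinary: float,
                       likelihood_boltzmann: float) -> float:
    """Posterior probability of the ordinary-brain hypothesis via Bayes' rule.

    All three inputs are illustrative assumptions supplied by the caller.
    """
    prior_boltzmann = 1.0 - prior_ordinary
    joint_ordinary = likelihood_ordinary * prior_ordinary
    joint_boltzmann = likelihood_boltzmann * prior_boltzmann
    return joint_ordinary / (joint_ordinary + joint_boltzmann)


if __name__ == "__main__":
    # Hypothetical likelihoods: the evidence is assumed to be nine times
    # more probable on the ordinary hypothesis than on its rival.
    print(posterior_ordinary(0.5, 0.9, 0.1))
    # If the evidence were equally probable on both hypotheses,
    # the posterior would stay at the prior.
    print(posterior_ordinary(0.5, 0.5, 0.5))
```

The sketch takes no stand on what the likelihoods actually are; it only shows that, once the priors are level, the evidence alone settles the comparison.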

8. A Surprising Conclusion

One way of summarizing the foregoing is that it would be an instance of the fallacy of composition to assume that if each part (subset) of infinite time has more Boltzmann brains than ordinary ones, it follows that the whole of time does as well. For finite sets, of course, this is not a fallacy. Hence, the problem with the Boltzmann brain argument is that it depends on transferring properties of the relationship between finite sets and their finitely many subsets to that between infinite sets and their infinitely many subsets. Our intuitions about how many Boltzmann brains there are compared to ordinary ones will be misleading if we fail to take heed of the difference between the two kinds of cases. Once we do, then the power of the argument dissipates, and ordinary experience carries the epistemic day.
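A toy model may make the contrast between finite parts and the infinite whole concrete. It is an illustrative assumption, not the author's own construction: label moments of time by the positive integers, place a hypothetical ordinary brain at every multiple of 10, and place a Boltzmann brain at every other integer. In every finite stretch starting from 1, the Boltzmann labels outnumber the ordinary ones about nine to one, yet the pairing functions below match each Boltzmann moment with a distinct ordinary moment and back again, which is what it means for the two sets to have the same cardinality.

```python
def count_labels(n: int) -> tuple[int, int]:
    """Count (boltzmann, ordinary) moments among 1..n in the toy model."""
    ordinary = n // 10  # multiples of 10 up to n
    return n - ordinary, ordinary


def to_ordinary(boltzmann_moment: int) -> int:
    """Pair the k-th non-multiple of 10 with the k-th multiple of 10."""
    if boltzmann_moment <= 0 or boltzmann_moment % 10 == 0:
        raise ValueError("expected a positive non-multiple of 10")
    # Position of this moment among the non-multiples of 10.
    k = boltzmann_moment - boltzmann_moment // 10
    return 10 * k


def to_boltzmann(ordinary_moment: int) -> int:
    """Inverse pairing: the k-th multiple of 10 back to the k-th non-multiple."""
    if ordinary_moment <= 0 or ordinary_moment % 10 != 0:
        raise ValueError("expected a positive multiple of 10")
    k = ordinary_moment // 10
    # Skip one multiple of 10 for every nine non-multiples already passed.
    return k + (k - 1) // 9


if __name__ == "__main__":
    print(count_labels(1000))   # finite prefix: Boltzmann labels dominate
    print(to_ordinary(11))      # the 10th non-multiple pairs with the 10th multiple
    print(to_boltzmann(100))    # and the pairing can be undone
```

Because the pairing never runs out on either side, the nine-to-one ratio seen in each finite prefix does not carry over to a comparison of the sizes of the two infinite sets.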

There is, however, one final situation to consider, if only speculatively. Carroll argues that it could be that our universe will be subject to relatively fast vacuum decay that would not allow sufficient time for Boltzmann brains to form ([22]). As he points out, the rate of such decay depends on the cosmological constant, Λ, which is a measure of the vacuum energy of spacetime. Accordingly, for some values of the cosmological constant, we are almost certainly not Boltzmann brains because there is just not enough time for any to form with reasonably high probability. However, this raises at least the logical possibility of intermediate values of the cosmological constant that would lead to vacuum decay in a finite period, but one that is long enough for the formation of Boltzmann brains to be sufficiently probable as to outnumber ordinary brains. For such values of Λ, the universe would have enough time to randomly produce many, but only finitely many, such brains before vacuum decay. This would support the hypothesis that one is probably a Boltzmann brain because if there are finitely many Boltzmann brains, but many more of them than normal brains, then statistical reasoning suggests that one is not a normal brain.
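In the finite case, the statistical reasoning reduces to simple counting. The sketch below assumes, purely for illustration, that one is equally likely to be any of the finitely many brains; the counts in the example are hypothetical and do not come from the text or from any physical estimate.

```python
from fractions import Fraction


def chance_boltzmann(n_boltzmann: int, n_ordinary: int) -> Fraction:
    """Chance of being a Boltzmann brain when every brain is equally likely.

    Both counts must be finite, non-negative, and not both zero; the ratio
    is only well defined because the total is a finite number.
    """
    if n_boltzmann < 0 or n_ordinary < 0 or n_boltzmann + n_ordinary == 0:
        raise ValueError("counts must be non-negative and not both zero")
    return Fraction(n_boltzmann, n_boltzmann + n_ordinary)


if __name__ == "__main__":
    # Hypothetical counts: Boltzmann brains outnumber ordinary ones 999 to 1.
    print(chance_boltzmann(999, 1))
```

The same function has no analogue when both counts are infinite, since there is then no finite total to divide by.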

While this is an interesting scenario to consider, it is difficult to evaluate. For example, it is unknown whether fast vacuum decay awaits our world or whether Λ is constant or variable across time ([22] p. 12). Still, what is interesting, and perhaps surprising, is that it is the prospect of a very long but finite future that raises the Boltzmann brain specter, though, admittedly, the reality of such a future lacks definitive empirical support now. Infinite time, on the other hand, presents no substantial challenge to our ordinary self-understanding, at least not based on the standard argument for Boltzmann brains. Insofar as we have reason to believe that time will not come to an end, we have no reason to doubt that we are, overall, what we take ourselves to be.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflicts of interest.

Notes

1. Human intervention can, of course, reverse the natural effects of entropy increase by, for example, rearranging the marbles in the bag. In such cases, the system is not closed, so the statistical reasoning no longer applies. If, however, we consider the system as consisting of a human intervener and a bag of marbles, then that in fact forms a closed system whose entropy will increase. In other words, intentional action can reverse entropy in an open system but not in the universe overall (see [3] pp. 699–702).

2. This includes the totality of material bodies as well as all energy.

3. The theory is compatible with collapse back into a singularity, but current observations suggest that nothing will stop the expansion of the universe, which is accelerating ([7,8]).

4. See also [10,12,13], all of which argue that the Boltzmann brain hypothesis is self-undermining in virtue of relying on scientific evidence to conclude that scientific evidence is unreliable.

5. Even if relativity were only confirmed in, e.g., high energy experiments beyond the realm of ordinary experience, this combined with its theoretical virtues would suffice for epistemic victory over the Newtonian character of experience, as ubiquitous as the latter is, since it fails in those crucial realms.

6. For similar reasons, I will, here, mention but not address the argument in [16] that Boltzmann brains will not arise in a De Sitter vacuum because that argument assumes the many-worlds interpretation of quantum mechanics, which is an empirical hypothesis that is not part of the standard argument for the Boltzmann brain hypothesis. Thanks to an anonymous referee for drawing this issue to my attention.

7. Thanks to an anonymous referee for pointing out the importance of clarifying this here.

8. At the risk of repetitiveness, let me reiterate that I assume that, in infinite time, there are infinitely many opportunities for a lengthy, evolutionary process, on a long-lived planet, to get started by chance. That is, I take it that, given infinite time in which all matter and energy fluctuate randomly, forever, natural selection will be initiated infinitely often because conditions similar to the early stages of the Earth will repeat by chance.

9. This holds even if time is directionless, for the endpoint of a random fluctuation can be viewed as the starting point if we wish, without changing the logic of the argument here, since any evolution away from equilibrium will be followed by evolution back up, no matter which temporal orientation we adopt.

10. Thanks to an anonymous referee for drawing my attention to the arguments in this paper.

11. Thanks to an anonymous referee for insisting that I clarify this point.

12. Rovelli and Wolpert ([13]) argue that versions of the Boltzmann brain argument that do not undermine themselves (see note 4 above) are ‘data-independent’ and so ‘outside the purview of science’ ([13] p. 5). If my arguments here are correct, then any version that relies on infinitely many Boltzmann brains forming in infinite time will in fact fail for mathematical reasons, so perhaps we have at least some reason to be suspicious of even those arguments that are outside the purview of science in the ways they suggest.

References

1. Boltzmann, L. Lectures on Gas Theory; Brush, S.G., Translator; University of California Press: Berkeley, CA, USA, 1964.

2. Horwich, P. Asymmetries in Time: Problems in the Philosophy of Science; MIT Press: Cambridge, MA, USA, 1987.

3. Penrose, R. The Road to Reality: A Complete Guide to the Laws of the Universe; Vintage Books: London, UK, 2005.

4. Johnson, E. Anxiety and the Equation; MIT Press: Cambridge, MA, USA, 2018.

5. Greene, B. Until the End of Time: Mind, Matter, and Our Search for Meaning in an Evolving Universe; Vintage Books: New York, NY, USA, 2021.

6. Huemer, M. Existence is Evidence of Immortality. Noûs 2021, 55, 128–151.

7. Perlmutter, S.; Aldering, G.; Goldhaber, G.; Knop, R.A.; Nugent, P.; Castro, P.G.; Deustua, S.; Fabbro, S.; Goobar, A.; Groom, D.E.; et al. Measurements of Ω and Λ from 42 High-Redshift Supernovae. Astrophys. J. 1999, 517, 565–586.

8. Riess, A.G.; Filippenko, A.V.; Challis, P.; Clocchiatti, A.; Diercks, A.; Garnavich, P.M.; Gilliland, R.L.; Hogan, C.J.; Jha, S.; Kirshner, R.P.; et al. Observational Evidence from Supernovae for an Accelerating Universe and a Cosmological Constant. Astron. J. 1998, 116, 1009–1038.

9. Albrecht, A.; Sorbo, L. Can the Universe Afford Inflation? Phys. Rev. D 2004, 70, 063528.

10. Carroll, S. From Here to Eternity: The Quest for the Ultimate Theory of Time; Penguin: New York, NY, USA, 2010.

11. Dogramaci, S. Does My Total Evidence Support that I’m a Boltzmann Brain? Philos. Stud. 2020, 177, 3717–3723.

12. Chalmers, D. Structuralism as a Response to Skepticism. J. Philos. 2018, 115, 625–660.

13. Rovelli, C.; Wolpert, D. Why You Do Not Need to Worry about the Standard Argument that You Are a Boltzmann Brain. arXiv 2024, arXiv:2407.13197.

14. Dainton, B. Some Cosmological Implications of Temporal Experience. In Cosmological and Psychological Time; Dolev, Y., Roubach, M., Eds.; Springer International: Cham, Switzerland, 2016.

15. Einstein, A. The Meaning of Relativity, 5th ed.; Princeton University Press: Princeton, NJ, USA, 1955.

16. Boddy, K.K.; Carroll, S.M.; Pollack, J. Why Boltzmann Brains Don’t Fluctuate Into Existence from the De Sitter Vacuum. arXiv 2015, arXiv:1505.02780.

17. Papineau, D. Philosophical Devices: Proofs, Probabilities, Possibilities, and Sets; Oxford University Press: Oxford, UK, 2012.

18. Ash, R.B.; Doléans-Dade, C.A. Probability and Measure Theory, 2nd ed.; Harcourt Academic Press: San Diego, CA, USA, 2000.

19. Chung, K.L. A Course in Probability Theory, 2nd ed.; Harcourt Academic Press: San Diego, CA, USA, 2001.

20. Page, D.N. Bayes Keeps Boltzmann Brains at Bay. arXiv 2017, arXiv:1708.00449.

21. Huemer, M. Phenomenal Conservatism Uber Alles. In Seemings and Justification: New Essays on Dogmatism and Phenomenal Conservatism; Tucker, C., Ed.; Oxford University Press: Oxford, UK, 2013; pp. 328–350.

22. Carroll, S. Why Boltzmann Brains are Bad. In Current Controversies in Philosophy of Science; Dasgupta, S., Dotan, R., Weslake, B., Eds.; Routledge: New York, NY, USA, 2020; pp. 7–20. Available online: https://arxiv.org/pdf/1702.00850 (accessed on 5 March 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).