Outsourcing Love, Companionship, and Sex: Robot Acceptance and Concerns

Abstract

1. Introduction

1.1. Overview of the Status of Robot Technology

1.2. Social Robots with Human-like Features

1.3. Robot Use in the Home

1.4. Robot Use for Companionship

1.5. Robot Use for Sexual Pleasure

1.6. Theoretical Framework for Robot Use at Home

1.7. The Current Study

2. Materials and Methods

2.1. Sample

2.2. Procedure

2.3. Measures

2.4. Analytic Strategy

3. Results

3.1. Is Companionship Different from Intimacy?

3.2. Overall Acceptance of Humanoid Robots

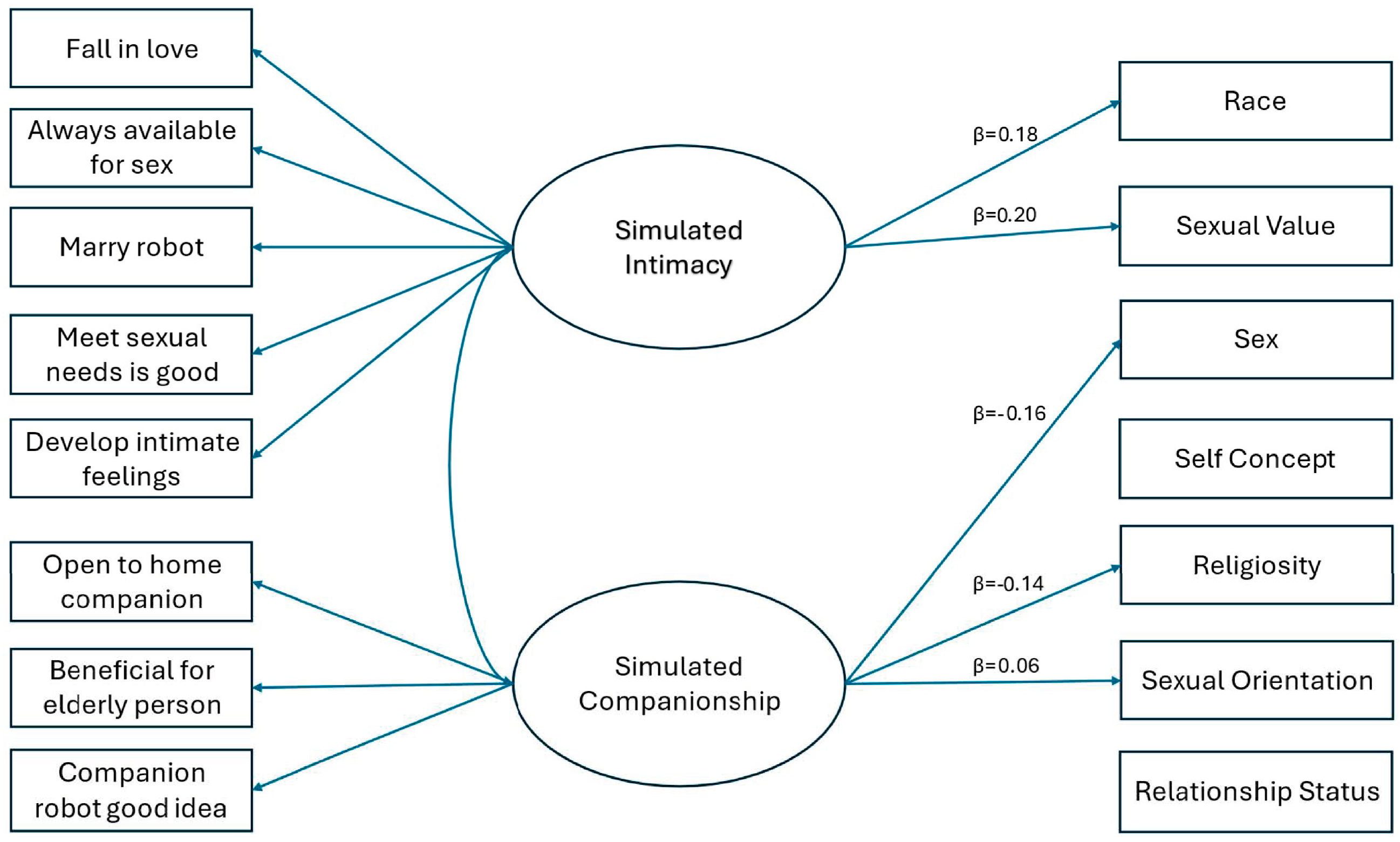

3.3. Predictors of Acceptance of Using Humanoid Robots for Simulated Companionship and Simulated Intimacy

4. Discussion

4.1. Implications

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Item | Factor 1 | Factor 2 |
|---|---|---|
| Simulated Companionship | | |
| I am open to the idea of having a humanoid robot as a personal companion at home | 0.66 * | 0 |
| I think an elderly person who is lonely could benefit from having a humanoid robot as a companion | 0.92 * | −0.02 |
| I think the development of humanoid robots to meet companionship needs is a good thing | 0.84 * | 0.05 |
| I do not want any humanoid robot in my home or living space | −0.44 * | −0.1 |
| Simulated Intimacy | | |
| I think it is possible to fall in love with a humanoid robot | 0.08 | 0.63 * |
| A humanoid robot would never have a “headache” and would always be available for sex | 0.02 | 0.74 * |
| I think people should be able to marry their humanoid robots if they want to | 0 | 0.67 * |
| I think the development of humanoid robots to meet sexual needs is a good idea | −0.06 | 0.82 * |
| The use of humanoid robots for having sex is stigmatized | 0.11 | 0.43 * |
| I could develop feelings of intimacy for a humanoid robot | −0.02 | 0.54 * |
| I think humanoid robots could become family members in the sense that parents would depend on the robot | 0.42 * | 0.37 * |
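The loading matrix can be read with a simple assignment rule. Each item is placed on the factor where its absolute loading is largest, and items that also load noticeably on the other factor are flagged. The sketch below applies that rule to the loadings in the table. It assumes two cutoffs that are common rules of thumb but are not stated in the source: 0.40 for a salient loading and 0.30 for a noteworthy secondary loading. The dictionary keys are hypothetical shorthand for the item wording.

```python
# Factor loadings transcribed from the table: (Factor 1, Factor 2).
# Keys are hypothetical shorthand labels for the survey items.
LOADINGS = {
    "open_to_companion": (0.66, 0.00),
    "lonely_elderly_benefit": (0.92, -0.02),
    "companionship_dev_good": (0.84, 0.05),
    "no_robot_at_home": (-0.44, -0.10),
    "fall_in_love": (0.08, 0.63),
    "always_available_sex": (0.02, 0.74),
    "marry_robot": (0.00, 0.67),
    "sexual_dev_good": (-0.06, 0.82),
    "sex_use_stigmatized": (0.11, 0.43),
    "intimacy_feelings": (-0.02, 0.54),
    "family_member": (0.42, 0.37),
}

SALIENT = 0.40  # assumed cutoff for a salient primary loading (not from the source)
CROSS = 0.30    # assumed cutoff for a noteworthy secondary loading (not from the source)


def classify(loadings, salient=SALIENT, cross=CROSS):
    """Assign each item to a factor and flag cross-loadings.

    Returns {item: {"factor": 1, 2, or None, "cross_loading": bool}}.
    "factor" is the factor with the larger absolute loading, or None when
    that loading falls below the salient cutoff.
    """
    result = {}
    for item, (f1, f2) in loadings.items():
        primary = 1 if abs(f1) >= abs(f2) else 2
        primary_abs = max(abs(f1), abs(f2))
        secondary_abs = min(abs(f1), abs(f2))
        result[item] = {
            "factor": primary if primary_abs >= salient else None,
            "cross_loading": secondary_abs >= cross,
        }
    return result


if __name__ == "__main__":
    for item, info in classify(LOADINGS).items():
        flag = " (cross-loading)" if info["cross_loading"] else ""
        print(f"{item:24s} factor {info['factor']}{flag}")
```

Under these assumed cutoffs, the sketch places the first four items on Factor 1 and the next six on Factor 2. It flags only the "family members" item, whose loadings (0.42 and 0.37) are similar on both factors. The negatively worded "I do not want any humanoid robot" item is assigned to Factor 1 through its absolute loading, even though its sign is negative.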

| Item | M | SD |
|---|---|---|
| Simulated Companionship | | |
| I am open to the idea of having a humanoid robot as a personal companion at home | 2.17 | 1.11 |
| I think an elderly person who is lonely could benefit from having a humanoid robot as a companion | 3.32 | 1.1 |
| I think the development of humanoid robots to meet companionship needs is a good thing | 2.8 | 1.07 |
| Simulated Intimacy | | |
| I think it is possible to fall in love with a humanoid robot | 2.12 | 1.14 |
| A humanoid robot would never have a “headache” and would always be available for sex | 2.81 | 1.32 |
| I think people should be able to marry their humanoid robots if they want to | 1.8 | 1.05 |
| I think the development of humanoid robots to meet sexual needs is a good idea | 2.03 | 1.08 |
| I could develop feelings of intimacy for a humanoid robot | 1.45 | 0.78 |

| Predictor | Simulated Intimacy B | Simulated Intimacy SE | Simulated Companionship B | Simulated Companionship SE |
|---|---|---|---|---|
| Race | 0.18 * | 0.07 | 0.07 | 0.07 |
| Sexual Value | 0.20 * | 0.07 | 0.1 | 0.07 |
| Sex | −0.01 | 0.07 | −0.16 * | 0.07 |
| Self-Concept | −0.009 | 0.07 | 0.007 | 0.07 |
| Religiosity | −0.1 | 0.07 | −0.14 * | 0.07 |
| Sexual Orientation | 0.08 | 0.07 | 0.16 * | 0.06 |
| Relationship Status | 0.06 | 0.07 | 0.02 | 0.06 |
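Each coefficient in the regression table can be compared with its standard error through the Wald ratio z = B/SE. In a large sample, |z| > 1.96 corresponds to a two-tailed p < .05 under a normal approximation. The sketch below recomputes these ratios from the tabled values. It assumes that the asterisk marks p < .05 and that the normal approximation is adequate; neither the threshold nor the sample size appears in this part of the source. Because the table reports values rounded to two decimals, ratios close to 1.96 are sensitive to rounding.

```python
import math

# (B, SE) pairs transcribed from the regression table, by outcome.
COEFS = {
    "Simulated Intimacy": {
        "Race": (0.18, 0.07),
        "Sexual Value": (0.20, 0.07),
        "Sex": (-0.01, 0.07),
        "Self-Concept": (-0.009, 0.07),
        "Religiosity": (-0.10, 0.07),
        "Sexual Orientation": (0.08, 0.07),
        "Relationship Status": (0.06, 0.07),
    },
    "Simulated Companionship": {
        "Race": (0.07, 0.07),
        "Sexual Value": (0.10, 0.07),
        "Sex": (-0.16, 0.07),
        "Self-Concept": (0.007, 0.07),
        "Religiosity": (-0.14, 0.07),
        "Sexual Orientation": (0.16, 0.06),
        "Relationship Status": (0.02, 0.06),
    },
}


def wald_test(b, se):
    """Return (z, two-tailed p) using a large-sample normal approximation."""
    z = b / se
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


if __name__ == "__main__":
    for outcome, rows in COEFS.items():
        print(outcome)
        for predictor, (b, se) in rows.items():
            z, p = wald_test(b, se)
            mark = "*" if p < 0.05 else ""
            print(f"  {predictor:20s} z = {z:6.2f}  p = {p:.3f} {mark}")
```

Under these assumptions, the recomputed markers agree with the asterisks in the table. The religiosity coefficient for simulated companionship sits at the boundary (z ≈ −2.00, p just under .05), so its significance depends on the unrounded estimates.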

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, I.J.; Welch, T.S.; Knox, D.; Likcani, A.; Tsay, A.C. Outsourcing Love, Companionship, and Sex: Robot Acceptance and Concerns. Sexes 2025, 6, 17. https://doi.org/10.3390/sexes6020017
