Abstract

Owing to the results of research in system science, artificial intelligence, and cognitive engineering, engineered systems are becoming more and more powered by knowledge. Complementing common-sense and scientific knowledge, system knowledge is maturing into a crucial productive asset. However, an overall theory of the knowledge of intellectualized systems does not exist. Some researchers suggest that the time has come to establish a philosophically underpinned theoretical framework. This motion is seconded by the on-going intelligence revolution, in which artificial intelligence becomes a productive power, an enabler of smart systems, and a strong transformer of social life. The goal of this paper is to propose a skeleton of the needed generic theory of system knowledge (and a possible new branch of philosophical studies). The major assumption is that a significant part of the synthetic system knowledge (SSK) is “sympérasma”, that is, knowledge conjectured, inferred, constructed, or otherwise derived during the operation of systems. This part will become even more dominant in the future. Starting out from the above term, the paper suggests calling this theory “sympérasmology”. Some specific domains of “sympérasmological” studies, such as (i) manifestations of SSK, (ii) mechanisms of generating SSK, (iii) dependability of SSK, (iv) operational power of SSK, (v) composability of SSK, and (vi) advancement of SSK, are identified. It is understood that the essence and status of SSK cannot be investigated without considering the related cognitive processes and technological enablers. The paper presents a number of open questions relevant for follow-up discussions.

1. Introduction

1.1. The Broad Context of the Work

The consecutive industrial revolutions have fueled the invention, implementation, and exploitation of various systems [1]. The first industrial revolution mechanized the industrial production by water and thermal processes and systems. The second industrial revolution exploited fossil and electric energies in production and transportation processes and systems. In the third industrial revolution, electronics have been used to automate industrial processes and systems. In the current time of the fourth industrial revolution, computing environments and information engineering are rapidly proliferating in various industrial sectors, but also in everyday creative and administrative processes. In addition to material and energy transforming systems, information processing and informing systems are also rapidly proliferating. The emerging fifth industrial revolution, often called the revolution of intelligence, permeates the society with smart, cognizant, and even intelligent systems, which have significant impact practically on everything [2]. While the systems of the earlier industrial revolutions extended the human capabilities and capacities in the physical realm, intelligent systems are developed to extend the human capabilities and capacities in the cognitive realm. As many philosophers and professionals conceive and debate it, they may even substitute humans in certain roles and create an alternative world of intelligent systems [3].

Actually, this is prognosticated for the near-future sixth industrial revolution, which will be driven by the combination of the four fundamental entities, i.e., bits, atoms, neurons, and genes, and will lead to the so-called B.A.N.G. convergence [4], integration [5], and revolution [6]. As a transdisciplinary system science and technology of the sixth industrial revolution, cybermatics will bring nano-, computing, bio-, and connectivity technologies into an absolute synergy, which allows bio-physiological and sensory-cognitive augmentation, or even reconstruction of humans in the form of cyborgs [7]. The analysis of the mentioned confluences and disruptive changes made it clear that not only the types of systems have changed dramatically over the centuries, but also their functional/architectural complexity and disciplinary/technological heterogeneity [8]. In combination with this, the problem of building intelligent systems is rapidly crossing the narrow boundaries of academia to become an everyday industrial issue.

The confluence of the physical, cyber, and cognitive realms can be observed clearly in our current systems. This trend is exemplified by the paradigm of cyber-physical systems (CPSs), which were distinguished by this name only one and a half decades ago [9]. They bring analogue and digital hardware, control and application software, as well as natural and artificial cyberware into synergy. CPSs may manifest in different forms and at multiple levels of intellect; consider, for example, self-organizing assistive robots in home care, self-adaptive rehabilitation systems, a fleet of self-driving vehicles, or intelligent production systems of systems [10]. From the feedback-controlled, plant-type, first-generation CPSs, we are rapidly moving towards various forms of intellectualized CPSs such as smart CPSs (S-CPSs) that create a tight coupling between the physical and the cognitive domains through the cyber domain of operation.

What makes this possible is cognitive engineering of systems (CES), which is interested in (i) equipping S-CPSs with application-specific knowledge, (ii) constructing computational reasoning mechanisms that elaborate on that knowledge, and (iii) embedding S-CPSs in real-life environments as systems-in-the-loop. These together enable S-CPSs to (i) build awareness of the dynamic context of their operation and application, (ii) infer “intellect” that is not pre-programmed (data, information, knowledge, meta-knowledge) based on monitoring their states and environment, (iii) reason about the goals and change them, (iv) develop and validate plans for reorganization to meet the goals of working, (v) adapt, evolve, and reproduce themselves on their own as needed for better performance, (vi) enable the interaction with and among S-CPSs on multiple levels, and (vii) share tasks with and among S-CPSs and humans in various contexts. Notwithstanding, the “science” of self-managing systems is still in a premature stage of development and is deemed to be a rather unsettled domain of inquiry. In particular, this applies to self-management of system knowledge.

1.2. Setting the Stage for Discussion: System Knowledge in the Literature

According to Wang’s abstract intelligence theory, intelligence is the power of a human or a system that enables transferring information into knowledge and performing a context-proper behavior [11]. Based on the level of reproducing/mimicking the operation of the human brain, system intelligence can be classified according to five levels, known as reflexive, imperative, adaptive, autonomous, and cognitive intelligence. In this work, intellectualization of engineered systems is seen as the process of equipping them with cognitive intelligence and capabilities, i.e., making them capable of possessing, exploring, aggregating, synthesizing, sharing, and exploiting knowledge and of reasoning with it to fulfil operational objectives [12]. Intellectualization is more than rationalization. While rationalization works with logical constructs and symbolic mechanisms in computation, intellectualization works with semantic constructs and cognitive mechanisms. Rationalization was typical for the early knowledge-based systems. Intellectualization is conjointly about the rational content (system knowledge) and the transformational agents (reasoning mechanisms). Intellectualized systems show various self-features such as self-awareness, self-learning, self-reasoning, self-control, self-adaptation, self-evolution, and self-reproduction [13]. These features make them nondeterministic, goal-orientated, context-aware, causality-driven, reasoning-enabled, and abductively learning systems [14].

The literature reports on a tremendous diversity in definitions of knowledge. Nevertheless, the overwhelming majority of the seminal publications agree that knowledge is a productive asset, no matter if human knowledge or system knowledge is concerned. The same viewpoint is adopted in this paper. On the one hand, quite a lot, though by far not everything, is already known about the nature and management of human knowledge [15]. On the other hand, we do not know enough about the nature and evolution of system knowledge. By reason of the many possible forms of utilization of system knowledge and the rapidly growing amount of knowledge captured and generated by engineered systems, dealing more intensively with (synthetic) system knowledge has become a necessity [16]. The fact of the matter is that complex engineered systems will ultimately be driven by systelligence (i.e., real-time constructed and exploited dynamic system intelligence). This intelligence has two major constituents: (i) a dynamic body of system knowledge, and (ii) a set of self-adaptive processing mechanisms that purposefully alter and interact with each other while operating on this knowledge. Typically, the system-owned knowledge blends (i) structured, formalized, and pre-programmed human knowledge, and (ii) knowledge that is acquired by the system in whatever way during run-time. The two parts are supposed to be in synergy from syntactic (representation), semantic (interpretation), pragmatic (computation), and apobetic (purposive) viewpoints. The processing mechanisms can either be task-independent mechanisms or task-dependent mechanisms, but their application depends on the type and representation of the concerned chunks of the system knowledge.

Eventually, system knowledge not only overlaps with human knowledge, but also complements or substitutes it, and by this creates a kind of tensioned situation. The contemporary literature often refers to application of artificial intelligence (AI) technologies as a typical way of enabling cognitive behavior of systems and complementing human knowledge [17]. In both narrow and general AI, or strong and weak AI research, capturing system knowledge and development of processing mechanisms received significant attention [18]. Creation of machines with a general intelligence, like that of humans, is pursued as one of the most ambitious scientific goals ever set. However, much less attention has been paid to these from ontological and epistemological perspectives. Even in the field of enabling technologies, many other unexplored alternatives exist. For instance, the dynamic neural cluster (DNC) model, proposed by Wang, X, assumes that knowledge is not only retained in neurons as individual objects or attributes, but also dynamically exemplified by emergent and/or purposefully created synaptic connections [19]. In addition to logical, retrospective, probabilistic, deductive, and inductive approaches of knowledge generation, abductive approaches may also be considered depending on the purpose and contexts of applications [20]. Furthermore, application-specific reasoning mechanisms can also be considered, which attempt achieving a balance between application neutrality/specificity and performance dependability/efficiency [21].

Human intelligence research spreads in both the tangible (rational) and the intangible (affective) dimensions. There are convincing research results in the rational dimension, but much less is evidenced in the intangible dimension. For example, the questions about what knowledge and mechanisms, if any, might make systems able (i) to have empathy for people and animals, (ii) to care about the environment, (iii) to have a sense of humor, (iv) to appreciate art and beauty, (v) to feel love or loneliness, and (vi) to enjoy music or traveling are practically open (i.e., not yet addressed or just partially answered in the scientific literature). On the other hand, there is an obvious need for investigations in this direction, as well as for focused studies on, for example, (i) what the intangible system abilities would mean in the context of intellectualized engineered systems, and (ii) what the consequences of the technological affordances and restrictions are. As for now, not only experimental studies are scarce, but also philosophical speculations and argumentations, and consolidation of the meanings, values, impacts, and responsibilities of the above intangible system abilities [22]. Notwithstanding the importance of awareness of these research concerns, I will not be able to address them at all in this paper due to their complicatedness and the page limitations.

1.3. The Addressed Research Phenomenon and Why It Is of Importance

We are in a situation where the state of the art is ahead of the state of understanding. Many scholars argue that a comprehensive and deep-going philosophical disposition and clarification of system knowledge is still missing. Therefore, there is an uncertainty related to the overall status of system knowledge and how to deal with it beyond the scope of particular systems. Our orientating literature study revealed that there were no generic and broadly accepted answers to questions such as:

- What differentiates system knowledge from the other bodies of knowledge?

- What are typical manifestations and enactments of system knowledge?

- What are the cybernetic and computational fundamentals of system knowledge?

- What are the genuine sources of system knowledge?

- What does conscious knowing mean for intellectualized systems?

- What is a system able to know by its sensing, reasoning, and learning capabilities?

- Why and how does a system get to know something?

- What are the proper criteria for system knowledge?

- What are the genuine mechanisms of generating system knowledge?

- Can a system know, be aware of, and understand what it knows?

- In what way can system knowledge be verified, validated, and consolidated?

- What is the future of system knowledge?

The research phenomenon investigated in our studies has been depicted as “efforts to study the status of synthetic system knowledge”. The words “study the status” express that the interest is in studying the overall position rather than the specific state of progression. The adjective “synthetic” is used to express that the constituting constructs, bodies, chunks, or pieces of system knowledge, or even its whole, are artificially created for a purpose (and constructed according to some objectives). In agreement with this, the acronym SSK will be used in the rest of the paper to refer to all kinds of system knowledge that are (i) a synergetic (compositional) constituent of a system having an intellectualized character, and (ii) purposefully constructed from human knowledge and abstracted from signals, data, and information. SSK is dominantly artificially rather than naturally created, and it is a kind of speculative knowledge, like that of mathematics. Implicitly, SSK is supposed to be true in how it reflects the world. Obviously, these assumptions open the door for countless issues of existence, verification, validation, and consolidation. SSK is concretely and explicitly managed. Concreteness of system knowledge means that it is derived, elicited, compiled, structured, represented, stored, and/or transformed for the purpose of the intended operation. The particular bodies of SSK owned by various systems may be integrated into an organized (totaled) knowledge of a system of systems.

Concerning the specific interpretation of SSK, we followed the definition of knowledge, which interprets it as the ability of agents to take individual or joint actions [23]. The action was seen as the effort to do something correctly in particular contexts and to provide a successful solution to a real-life problem. From a computational viewpoint, knowledge is (i) regarded as experimental results or reasoned judgements aggregated by reasoning actions, (ii) captured as an organized set of statements of facts, ideas, and their relationships, and (iii) represented by various explicit and implicit formalisms. As examples, an explicit form is a pool of production rules, and an implicit form is a not-explained pattern learnt by a deep learning mechanism.
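To make the notion of an explicit formalism more tangible, the following minimal Python sketch shows a pool of production rules processed by naive forward chaining. The rule contents, the fact names, and the function `forward_chain` are hypothetical illustrations chosen for this sketch; they are not taken from any particular system discussed in the paper.

```python
from typing import FrozenSet, List, Set, Tuple

# A production rule: if all premises are known facts, assert the conclusion.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule pool for a home-care assistive robot (assumed example).
RULES: List[Rule] = [
    (frozenset({"person_on_floor", "no_motion"}), "possible_fall"),
    (frozenset({"possible_fall", "no_voice_response"}), "raise_alarm"),
]

observed = {"person_on_floor", "no_motion", "no_voice_response"}
derived = forward_chain(observed, RULES)
print(sorted(derived - observed))  # facts added by reasoning
```

Here every derived fact can be traced back to the rules that produced it, which is what distinguishes such an explicit form from an implicit one, where a learnt pattern yields a result without an inspectable chain of statements.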

1.4. The Contents of the Paper

The next section (Section 2) summarizes the main findings of an extensive and critical survey of the related literature. It gives an overview of the current state of the research field, including the characterization and categorization of human and system knowledge, and the main computational mechanisms of knowledge inferring and engineering. Section 3 deals with the fundamentals and potentials of gnoseology, while Section 4 discusses the fundamentals and potentials of epistemology. Section 5 makes a proposal for the name of the theory of synthetic systems knowledge and sketches some possible dimensions of dealing with SSK.

2. The Current State of the Research Field

2.1. Characteristics of Human Knowledge

Human knowledge is the result of a long historical development and has been the focus of speculation, discussion, and investigation for many centuries [24,25]. As stated by Berkeley, G., the objects of human knowledge are all ideas that are either (i) actually imprinted on the senses, or (ii) perceived by attending to one’s own emotions and mental activities, or (iii) formed out of ideas of the first two types, with the help of memory and imagination, by compounding or dividing or simply reproducing ideas of those other two kinds [26]. Wiig, K. defined human knowledge as a person’s beliefs, perspectives, concepts, judgments, expectations, methodologies, and know-how (which is a kind of representation of who the individual is) [27], whereas Allee, V. defined it as experience of a person gained over time [28]. Blackler, F. discussed that knowledge can be located in the (i) minds of people (“embrained” knowledge), (ii) bodies (embodied knowledge), (iii) organizational routines (embedded knowledge), (iv) dialogues (encultured knowledge), and (v) symbols (encoded knowledge) [29]. It was regarded as the ability or capacity to act by Sveiby, K.E. [30], as actionable understanding by Sehai, E. [31], and as the capacity of effective action by Drucker, P.F. [32].

As revealed by the above concise overview, knowledge is interpreted both as an acquired cognitive capacity and as an actionable potential in context. Running a bit ahead, the definitions rooted in the notion of actionable potential will play a specific role in the context of systems. The interpretations as a cognitive capacity assume consciousness, which is still a debated phenomenon of scientific research, though its fundamentality in terms of underpinning sensations, perceptions, thoughts, awareness, attention, understanding, ideation, etc. cannot be refuted [33]. An adequate theory of consciousness is supposed to explain the (i) existence, (ii) causal efficacy, (iii) diversity, and (iv) phenomenal binding of knowledge [34]. Like the manifestation of human intelligence in the intangible cognitive and perceptive domains, the issues of consciousness will not be discussed in any further depth in this paper.

An essential characteristic of knowledge is its potential to distinguish, describe, explain, predict, and control natural or artificial phenomena in the context of real-life problems. Introduced by Newell, A., the concept of knowledge level concerns the nature of system knowledge above the symbol (or program) level that influences the actions that the system will take to meet its goals given its knowledge [35]. It is assumed that the intelligent behavior of the system is determined by its knowledge-level potentials. Symbol-level mechanisms encode the system knowledge, whereas knowledge-level computational mechanisms purposefully transform and use knowledge in problem-solving. As Stokes, D.E. argued, “knowledge finds its purpose in action and action finds its reason in knowledge” [36]. Consequently, it is the problem-solving potential of knowledge that is of importance, not its syntactic or semantic representations. The semantics determines a mapping between symbols, combinations of symbols, propositions of the language, and concepts of the world to which they refer.

Actually, the problem-solving potential can be regarded as the enthalpy (internal capacity) of knowledge. Evidently, its exploitation can be facilitated by proper structuring and representation schemes. However, this internal capacity is not a substance that can be stored—it is hiding or embedded in the representations [37]. This is often referred to as the knowledge engineering paradox. There are definitions that extrapolate from the personal knowledge and interpret it as an aggregated and stored asset of teams, collectives, and populations. However, only the explicit and formalized part of knowledge can be shared computationally as an asset. From an industrial management perspective, Zack, M.H. identified four characteristics of knowledge leading to difficulties: (i) complexity (cardinality and interactions of parts), (ii) uncertainty (lack of certain information), (iii) ambiguity (unclear meaning), and (iv) equivocality (multiple interpretations) [38].

Ackoff, R.L. proposed making a distinction between data, information, and knowledge, which is helpful in providing a practical procedural definition for knowledge in real life [39]. Based on this, human knowledge is interpreted from an information engineering perspective as the end product of subsequent aggregation and abstraction of signals, data, and information, even if they remain hidden or implicit in these processes. The construct exposing the hierarchical relationships of the mentioned cognitive objects is called the knowledge pyramid [40]. Though this interpretation of knowledge creation rightly captures the main cognitive activities involved (integration and abstraction), it also carries uncertainties [41]. One source of uncertainty is that the underlying cognitive objects (i.e., signals, data, information, knowledge, wisdom, and intellect) do not have unique and universally accepted definitions. Instead, they are either variously interpreted or interchangeably used in the literature. For instance, Gorman, M.E. proposed a taxonomy of knowledge, which considers information, skills, judgement, and wisdom as four types of knowledge [42]. However, for a correct discussion of the subject matter, these need to be clearly distinguished and used rigorously. For our purpose, signals are seen as carriers of changes in the indicators of a phenomenon. Data are distinct descriptors of the attributes and states of a phenomenon. Information is the meaning of the data that allows us to answer questions about a phenomenon. Knowledge is the total of facts and know-how of treating a phenomenon, which eventually allows solving related cognitive or physical problems. Wisdom is a kind of meta-knowledge of what is true or right coupled with proper judgment as to action. Intellect is a synergetic aggregate of all abovementioned concepts, which manifests in the faculty of objective understanding, reasoning, and acting, especially with regard to abstract matters.
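The working definitions above can be illustrated with a toy Python sketch of the lower levels of the knowledge pyramid. Everything in it is an assumption made for illustration only: the linear sensor calibration, the temperature limit, and the function names are hypothetical and do not come from the cited sources.

```python
from statistics import mean
from typing import Dict, List


def signals_to_data(voltages: List[float]) -> List[float]:
    """Signals -> data: convert raw sensor voltages into temperature values.

    The factor 100.0 is a hypothetical linear calibration.
    """
    return [100.0 * v for v in voltages]


def data_to_information(temps: List[float], limit: float) -> Dict[str, object]:
    """Data -> information: answer questions about the observed phenomenon."""
    return {"mean_temp": mean(temps), "above_limit": max(temps) > limit}


def apply_knowledge(info: Dict[str, object]) -> str:
    """Knowledge: know-how for treating the phenomenon, applied to information."""
    return "reduce_load" if info["above_limit"] else "continue"


info = data_to_information(signals_to_data([0.61, 0.64, 0.83]), limit=80.0)
print(info["above_limit"], apply_knowledge(info))
```

The sketch deliberately stops at the knowledge level; wisdom and intellect, as defined above, concern judgment about such know-how and are not captured by a fixed function.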

2.2. Categories of Human Knowledge

In the broadest sense, knowledge (i) is a multi-faceted ingredient of human intellect, (ii) has many manifestations and distinguishing characteristics, and (iii) can be seen from many perspectives. The latter creates bases for various classifications. Perhaps the most fundamental classification of knowledge theories is according to the owner of knowledge, which introduces the categories of (i) individual (differentiated) knowledge and (ii) organizational (integrated) knowledge. In fact, human knowledge can be differentiated based on its owner (producer or utilizer) that can be (i) individual, (ii) group/team, (iii) community/organization, (iv) population/inhabitants, and (v) mankind/civilization. In the view of Barley, W.C. et al., knowledge can be characterized by its position on three interrelated axes of attributes: (i) whether knowledge is explicit, (ii) where knowledge resides, and (iii) how knowledge is enacted [43]. Byosiere, P. and Ingham, M. identified 40 different types of knowledge and categorized them into a broad typology of specific knowledge content areas (or domains of knowledge) [44].

A typology was proposed by Alavi, M. and Leidner, D. [45], which considered the explicit (formal and tangible) and implicit (tacit and intangible) nomenclature of Polányi, M. [46]. Tacit knowledge is related to the subconscious operation of the human brain, which does something automatically, almost without thinking. This type of knowledge (know-how) is included in personal communications of domain experts, as the main knowledge source, but it is difficult to extract, elicit, and share. Typically, three categories of tacit knowledge are distinguished: (i) instinctive (subconscious) knowledge of individuals, (ii) objectified (codified) knowledge of social systems, and (iii) collective tacit knowledge of a social system.

De Jong, T. and Ferguson-Hessler, M.G. suggested a differentiation between knowledge-in-archive and knowledge-in-use, and emphasized the task dependence of knowledge classification and characterization (the context in which knowledge has a function) [47]. Based on task analysis, they distinguished four types of knowledge: (i) situational, (ii) conceptual, (iii) procedural, and (iv) strategic knowledge. Other classifications sorted knowledge as (i) declarative (know-about), (ii) procedural (know-how), (iii) causal (know-why), (iv) conditional (know-when), and (v) relational (know-with) [38], or based on their origin as (i) intuitive, (ii) authoritative, (iii) logical, and (iv) empirical [48].

2.3. Characteristics of System Knowledge

All systems have to do with knowledge, but not all systems are knowledge-based with regard to their operation. The distinguishing characteristic of knowledge-based systems is a set of growing cognitive capabilities that makes their operation sophisticated, smart, or even intelligent. System knowledge is a purposeful higher-level organization and operationalization of information that creates operational potential for systems. It seems to be a defendable conjecture that there are as many forms of system knowledge as systems. As explained in the Introduction, the industrial revolutions brought about a confusingly broad spectrum of engineered systems, which reflect the trend of increasing intellectualization. In the context of human knowledge, Fahey, L. and Prusak, L. argued that knowledge does not exist independently of a knower [49]. If this argument is correct and valid in the context of intellectualized engineered systems, then we should simultaneously consider the characteristics of the systems and that of the knowledge handled by them. As Fuchs, C. stated, whenever a complex system organizes itself, it produces information, which in turn enables internal structuring, functional interaction, and synergetic behavior [50].

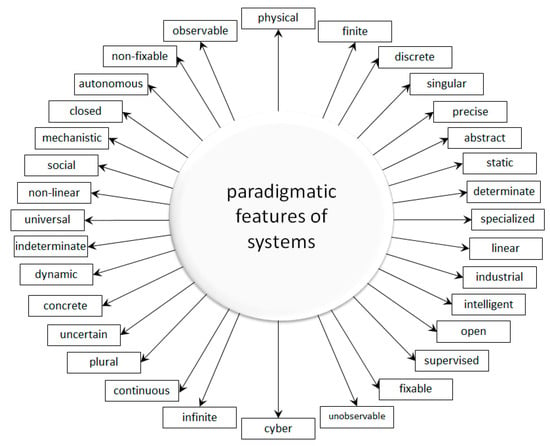

In the second part of the last century, various fundamental systems theories were developed, such as (i) complex systems theories, (ii) fuzzy system theories, (iii) chaos system theories, (iv) intelligent system theories, and (v) abstract system theories, to explain the essence, order, and cognitive capabilities of systems [51]. These theories point at the fact that there is a relationship between the paradigmatic features of engineered systems and the characteristics of knowledge they are able to handle and process [52]. Figure 1 shows the most important dimensions of opposing paradigmatic features. As an arbitrary example, system knowledge can be static, but it can also be dynamic (even emerging or evolving) in other cases. The paradigmatic features may show a gradation between the extremes on a line. For instance, between fully physical systems and fully cyber systems reside software systems, which show both physical (stored software) and cyber (digital code) features. Eventually, each body of system knowledge represents a particular composition of paradigmatic features, and vice versa. For instance, it needs a dedicated body of knowledge to establish a concrete, open, finite, dynamic, non-linear, task-specific, continuous, intellectualized, self-adaptive, plural, cyber-physical system of systems. Obviously, this introduces a complexity challenge in discussing the characteristics and the types of system knowledge.

Figure 1. Distinguishing paradigmatic features of engineered systems.

What is the corpus of system knowledge? System knowledge is not about the knowledge that is needed to specify, design, implement, use, and recycle systems. Instead, it is the knowledge that is needed by systems to achieve their operational purposes or objectives, or in other words, it is the knowledge needed to function. That is, system knowledge is identical neither with engineering knowledge (the knowledge of making) [53], nor with technological knowledge (the knowledge of enabling) [54], though some elements of both are present in system knowledge. System knowledge is a cognitive technology knowledge including (i) chunks and bodies of generic scientific knowledge (facts, definitions, and theories), (ii) specialized professional knowledge (principles, heuristics, and experiences), and (iii) everyday common knowledge (rules of thumb). This purpose-driven knowledge may be implanted into a system by its developers or acquired by the system itself using its own resources, during its lifetime, while the system is operating.

In a general sense, every system is designed and implemented to serve some predefined purposes and to fulfil tasks, that is, to solve concrete problems. Therefore, system knowledge is viewed in our study as problem-solving knowledge (no matter what the notions “solving” and “problem” actually mean in various contexts). Jonassen, D. suggested that success in problem-solving depends on a combination of (i) strong domain knowledge, (ii) knowledge of problem-solving strategies, and (iii) attitudinal components [55]. Anderson, J.D. approached this issue from a cognitive perspective, in which all components of knowledge play complementary roles [56]. He grouped the components needed to solve problems broadly into (i) factual (declarative), (ii) reasoning (procedural), and (iii) regulatory (metacognitive) knowledge, complemented with other elements of competence such as skills, experiences, and collaboration. The knowledge needed to solve complicated problems is complex in itself. It is a blend of problem-solving affordances rather than a roadmap or a recipe for arriving at a solution.

The lack of natural understanding of the meaning of the possessed knowledge by the systems themselves and the need to purposefully infer from computable representations put system knowledge into the position of context-dependently actionable potential. In real life, problem-solving is often a trial-and-error process rather than a well-defined puzzle-solving. In addition, both the complex problem to be solved and the problem-solving process may feature emergence, which can be combined with changes in the context. This typically makes (i) the knowledge imperfect and incomplete for the given purpose, (ii) the knowledge use process non-linear, and (iii) the needed reasoning process non-monotonic. From a cognitive perspective, the components of knowledge needed to solve such problems can be broadly grouped into (i) factual (declarative), (ii) conceptual (hypothetical), (iii) reasoning (procedural), and (iv) regulatory (metacognitive) knowledge/skills, and all play complementary roles. Friege, G. and Lind, G. reported that conceptual knowledge and problem scheme knowledge are excellent predictors of problem-solving performance [57]. As presented and evaluated for strengths and limitations by Goicoechea, A., there are six main methods known for reasoning with imperfect knowledge: (i) Bayes theory-based, (ii) Dempster-Shafer theory-based, (iii) fuzzy set theory-based, (iv) measure of (dis)belief theory-based, (v) inductive probabilities-based, and (vi) non-monotonic reasoning-based methods [58].
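As an illustration of one of the listed method families, the following Python sketch implements Dempster's rule of combination, the core operation of Dempster-Shafer theory, for two bodies of evidence over a small frame of discernment. The two-hypothesis frame, the mass values, and the sensor scenario are hypothetical and serve only to show how imperfect evidence from two sources could be fused; the sketch is not drawn from the cited evaluation.

```python
from itertools import product
from typing import Dict, FrozenSet

# A mass function assigns belief mass to subsets of the frame of discernment.
Mass = Dict[FrozenSet[str], float]


def combine(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule: multiply masses of intersecting focal sets and
    renormalize by the non-conflicting mass (1 - K)."""
    combined: Mass = {}
    conflict = 0.0  # K: total mass assigned to empty intersections
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; rule is undefined")
    return {focal: w / (1.0 - conflict) for focal, w in combined.items()}


# Hypothetical frame: a monitored component is either faulty or ok.
FAULT = frozenset({"fault"})
THETA = frozenset({"fault", "ok"})  # the whole frame expresses ignorance

sensor_a: Mass = {FAULT: 0.6, THETA: 0.4}
sensor_b: Mass = {FAULT: 0.7, THETA: 0.3}

fused = combine(sensor_a, sensor_b)
print({tuple(sorted(k)): round(v, 2) for k, v in fused.items()})
```

Assigning mass to the whole frame lets each source express partial ignorance explicitly, which is the main difference from a purely Bayesian treatment, where all probability mass must be distributed over single hypotheses.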

2.4. Categories of System Knowledge

Manifestation of knowledge is the primary basis for any classification. At large, it is about the roles of knowledge in knowing and reasoning. Systems knowledge may manifest in largely different forms [59]. Based on the literature, (i) declarative knowledge (which captures descriptors and attributes of facts, concepts, and objects), (ii) structural knowledge (which establishes semantic relations between facts, concepts, and objects), (iii) procedural knowledge (which captures the know-how of doing or making something), (iv) abstracted knowledge (which generalizes both “what is” and “how to” types of knowledge within or over contexts), (v) heuristic knowledge (which represents intuitive, emergent, uncertain, and/or incomplete knowledge in a context), and (vi) meta knowledge (which apprehends wisdom and decisional knowledge about other types of knowledge) are differentiated as the main categories of system knowledge manifestation. These categories may include specific types of knowledge, which can be implanted in systems by knowledge engineers and/or generated or acquired by the systems. Particular knowledge representation schemes are applied to each of the abovementioned forms of manifestation [60]. When represented for computer processing, system knowledge is decomposed to primitives and thereby its synergy (or compositionality) is often lost.

Systems knowledge reflects intentional, functional, and normative aspects, and creates a dialogue between probabilities and possibilities. As discussed above, it involves “knowing what”, “knowing how”, and often also “knowing why” elements. An assumption is that the knowledge needed by systems for problem-solving can be categorized in the analogy of the knowledge employed in human problem-solving. Solaz-Portolés, J.J. and Sanjosé, V.C. identified the types of system knowledge used in concrete problem-solving and discussed how they affect the performance of problem-solvers. From the perspective of computational handling of problems, they identified three types of knowledge: (i) problem-relevant (context) knowledge, (ii) problem-scheme (framework) knowledge, and (iii) problem-solving (content) knowledge. The types of knowledge typically involved in scientific problem-solving are (i) declarative, (ii) procedural, (iii) schematic, (iv) strategic, (v) situational, (vi) metacognitive, and (vii) problem-translating knowledge [61].

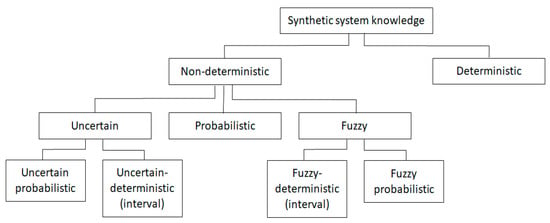

System developers and knowledge engineers agree that problem-solving knowledge possessed by systems is almost never perfect. It means that systems should, more often than not, reason with imperfect knowledge. As mentioned by Reed, S.K. and Pease, A., the goal for all descriptive reasoning models is to account for imperfections [62]. Imperfection of knowledge may be caused by incompleteness, ambiguity, conditionality, contradiction, fragmentation, inertness (relevance), misclassification, or uncertainty. Based on the general classification of information and systems proposed by Valdma, M., a categorization of non-deterministic system knowledge is shown in Figure 2 [63]. Though AI technologies offer possibilities for handling non-deterministic knowledge, many researchers agree that we are still far from the state when AI research will be able to build truly human-like intuitive systems. On the other hand, many engineered systems benefit from the use of AI technologies and methods, which lend themselves to a variety of enabling reasoning mechanisms [64].

Figure 2.

Categorization of non-deterministic system knowledge.

2.5. The Main Forms and Mechanisms of Knowledge Inferring/Reasoning

Research in knowledge inferring/reasoning faces one fundamental question. It was discussed above that all forms of knowledge are based typically on data constructs, data streams, data patterns, and/or data models. Information is patterned data and knowledge is the derived capability to act. Thus, the question is: How can a system synthesize functional and operational information from data and how can it infer knowledge and reason with it on its own? [65]. Humans are equipped with the formidable power of abstraction and imagination, but what does this mean in the context of intellectualized systems? [66]. How can they replicate human reasoning as artificial reasoning? Scientists of cognition constructed descriptive models of how people reason, whereas information scientists proposed prescriptive models to support human reasoning [67]. Many elements of a sufficing explanation have been mentioned in the literature, but providing an exhaustive answer still needs further efforts. As main forms of knowledge inferring/reasoning, both non-ampliative and ampliative mechanisms have been proposed over the last decades. Non-ampliative mechanisms include (i) classification (placing into groups/classes), (ii) searching/looking up (selecting from a bulk), and (iii) contextualization (appending application context). There is a clearly observable progression concerning the implementation of truly ampliative mechanisms. These include (i) fusion, (ii) inferring, (iii) reasoning, (iv) abstraction, (v) learning, and (vi) adaptation of knowledge. Inferring is the operation of deriving information in context [68,69], whereas reasoning is about synthesis of knowledge [70,71]. Inferences are not always made explicit in the process, but they serve as invisible connectors between the claims in the argument.

The term “reasoning” is used in both a narrower and a broader meaning. In its narrower meaning, the term refers to all ampliative computational mechanisms, which formally manipulate a finite set of the symbols representing a collection of believed propositions to produce representations of new ones. As stated by Krawczyk, C., reasoning has three important core features in this narrower context: (i) it moves from multiple inputs to a single output, which can be a conclusion or an action, (ii) it involves multiple steps and different ways through a state space to achieve a final outcome, and (iii) it operationalizes a mixture of objectives, previous knowledge, novel information, and dynamic contexts, which, however, depend on the type and state of the problem [72]. As part of computational reasoning, automated reasoning operates on knowledge primitives and tries to combine them based on explicit or implicit algorithms. Reasoning mechanisms can basically be (i) quantitatively based, (ii) qualitatively based, and (iii) hybrid-based reasoning. Quantitative reasoning is arithmetic evaluation. Qualitative reasoning is a form of calculation, not unlike arithmetic, but over symbols standing for propositions rather than numbers. In its ambiguous and non-trivial broader meaning, reasoning refers to a complex and intricate cognitive phenomenon, which is more than just the formal application of logic; it extends to semantics, pragmatics, and even apobetics of human intelligence. Because of its broad notional coverage, reasoning has become a complex and multidisciplinary area of study [73].

Studied not only from a computational point of view, but also from a philosophical point of view, reasoning has four generic patterns: (i) inductive reasoning, (ii) deductive reasoning, (iii) abductive reasoning, and (iv) retrospective reasoning [74]. Inductive reasoning is a method of reasoning in which the stated premises are viewed as sources of some evidence, but not full assurance, for the truth of the conclusion [75]. Inductive reasoning progresses from individual cases towards generalizable statements. Inductive inferences are all based on reasonable probability, not on absolute logical certainty. Having syllogism as the cognitive pattern, deductive reasoning starts out with a general statement or a hypothesis believed to be true and examines the possibilities to reach a correct logical conclusion. Johnson-Laird, P.N. proposed three theories of deductive performance: (i) deduction as a process based on factual knowledge, (ii) deduction as a formal, syntactic process, and (iii) deduction as a semantic process based on mental models [76]. He also distinguished (i) lexical, (ii) propositional, and (iii) quantified deduction [77].
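The deductive pattern described above can be sketched computationally as forward chaining, that is, as the repeated application of modus ponens to a set of facts and if-then rules. The sketch below is only an illustration under stated assumptions: the function name `forward_chain`, the rule format, and the monitoring scenario are hypothetical and are not drawn from the cited works.

```python
def forward_chain(facts, rules):
    """Apply modus ponens until no new fact can be derived.

    facts: iterable of atomic propositions assumed to be true.
    rules: list of (premises, conclusion) pairs, where premises is a set.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule base of a monitoring system.
rules = [
    ({"temperature_high"}, "overheating"),
    ({"overheating", "fan_off"}, "shutdown_required"),
]
derived = forward_chain({"temperature_high", "fan_off"}, rules)
print(sorted(derived))
```

Every derived proposition follows with certainty from the premises, which is what separates this pattern from the probability-based inductive inferences mentioned in the same paragraph.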

Abductive reasoning allows finding the preconditions from the consequences of a phenomenon and inferring a best matching explanation for the phenomenon [78]. Abduction makes a probable conclusion from what is known. Hirata, K. proposed a classification of abductive reasoning approaches: (i) theory/rule-selection abduction, (ii) theory/rule-finding abduction, and (iii) theory/rule-generation abduction, based on the state of availability of the elements of the explanatory knowledge [79]. A central tenet of retrospective reasoning is causation, that is, argumentation with past causes as evidence for current effects [80]. The ability of retrospective reasoning is achieved by maintaining the evolutional information of a knowledge system. As discussed by Rollier, B. and Turner, J.A., retrospective thinking is utilized by designers and other creative individuals when they mentally envision the object they wish to create and then think about how it might be constructed [81,82]. Heuristic reasoning also received a lot of attention in artificial intelligence research, and resulted in various theories of reasoning about uncertainty [83]. Treur, J. interpreted heuristic reasoning as strategic reasoning [84]. Schittkowski, K. proposed to include heuristic knowledge of experts through heuristic reasoning in mathematical programming of software tools [85].
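As a loose illustration of abduction as the selection of a best matching explanation, the sketch below chooses, from a given set of candidate explanations, the one whose predicted effects fit the observed consequences best. The scoring rule, the function name `best_explanation`, and the diagnostic scenario are assumptions made for this example only; they do not reproduce the approaches classified in [79].

```python
def best_explanation(observations, hypotheses):
    """Select the candidate whose predicted effects best match the observations.

    hypotheses: dict mapping a hypothesis name to the set of effects it predicts.
    Score (an assumed, illustrative rule): the number of observations explained
    minus the number of predicted effects that were not observed.
    """
    observed = set(observations)

    def score(name):
        predicted = hypotheses[name]
        return len(predicted & observed) - len(predicted - observed)

    # Sorting the names makes tie-breaking deterministic (alphabetical).
    return max(sorted(hypotheses), key=score)


# Hypothetical diagnostic knowledge of an engineered system.
hypotheses = {
    "pump_failure": {"low_pressure", "high_temperature"},
    "sensor_drift": {"low_pressure"},
    "leak": {"low_pressure", "fluid_loss"},
}
print(best_explanation({"low_pressure", "high_temperature"}, hypotheses))
```

The selected explanation is only the most plausible one among the available candidates. It remains a probable conclusion that further observations may overturn.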

Historically, five major families of ampliative computational mechanisms have been evolving. First, symbolist approaches, such as: (i) imperative programming language-based (procedures-based) reasoning, (ii) declarative logical languages-based reasoning, (iii) propositional logic-based inferring, (iv) production rule-based inferring, and (v) decision table/tree-based inferring. The literature discusses several modes of logical reasoning, such as: (i) deductive, (ii) inductive, (iii) abductive, and (iv) retrospective modes. Second, analogist approaches, such as: (i) process-based reasoning, (ii) qualitative physics-based reasoning, (iii) case-based reasoning, (iv) analogical (natural analogy-based) reasoning, (v) temporal (time-based) reasoning, (vi) pattern-based reasoning, and (vii) similarity-based reasoning. Third, probabilistic approaches, such as: (i) Bayesian reasoning, (ii) fuzzy reasoning, (iii) non-monotonic logic, and (iv) heuristic reasoning. Fourth, evolutionist approaches, such as: (i) genetic algorithms, (ii) bio-mimicry techniques, and (iii) self-adaptation-based techniques. Fifth, connectionist approaches, such as: (i) semantic network-based, (ii) shallow-learning neural networks, (iii) smart multi-agent networks, (iv) deep-learning neural networks, (v) convolutional neural networks, and (vi) extreme neural networks.

2.6. The Main Functions of Knowledge Engineering and Management

A rapidly extending domain of artificial intelligence research is knowledge engineering (KE) [86]. Its major purposes are: (i) aggregating and structuring raw knowledge, (ii) filtering and constructing intellect for problem-solving, and (iii) modelling the actions, behavior, expertise, and decision making of humans in a domain. It considers the objective and structure of a task to identify how a solution or decision is reached. This differentiates it from knowledge management (KM) [87], which is the conscious process of defining, structuring, retaining, and sharing the knowledge and experience of employees within an organization in order to improve efficiency and to save resources. Knowledge management has emerged as an important field for practice and research in information systems [88]. Knowledge engineering may target productive organizations (organizational knowledge engineering, OKE) [89] as well as engineering systems (system knowledge engineering, SKE) [90]. Technically, KE (i) develops problem-solving methods and problem-specific knowledge, (ii) applies various structuring, coding, and documentation schemes, languages, and other formalisms to help symbolic, logical, procedural, or cognitive processing of knowledge by computer systems, and (iii) leverages these to solve real-life knowledge-intensive problems. Dedicated SKE technologies are used, which, according to their objective, can be differentiated as system-general and system-specific technologies. Traditional system-general KE is based on the assumption that the descriptive and prescriptive knowledge that a system needs already exists as human knowledge, but it has to be collected, structured, implemented, and tested. In our days, SKE is challenged not only by the amount of knowledge, but also by the complicatedness of the tasks to be solved.

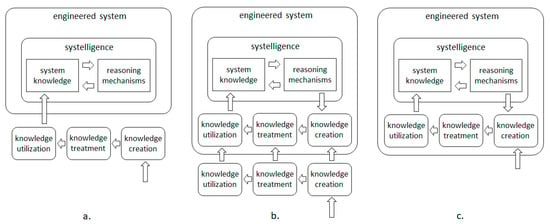

Specifically, (i) system-external, (ii) system-internal, and (iii) combined SKE approaches can be distinguished. Figure 3 shows a graphical comparison of these three approaches. In the case of a system-external approach, the tasks of knowledge creation and treatment are completed by knowledge engineers, whereas in the case of a system-internal approach, they are done by the concerned system. The arrows in the sub-figures of Figure 3 indicate the flows of knowledge between the highest-level system-internal, system-external, and combined activities of system knowledge engineering. The oppositely pointed arrows in the systelligence blocks symbolize the circular processing of system knowledge by the ampliative reasoning and learning mechanisms of a system in the context of application.

Figure 3.

Approaches of processing system knowledge: (a) system-external approach, (b) combined approach, and (c) system-internal approach.

According to the interpretation of Studer, R. et al., knowledge engineering can procedurally include (i) transfer processes, (ii) modelling processes, and (iii) contextualization processes [86]. Table 1 provides a restricted overview of the three main classes of knowledge engineering activities: (i) knowledge creation, (ii) knowledge treatment, and (iii) knowledge utilization, which lead to system knowledge. Common system-external methods/techniques of knowledge availing are (i) knowledge base construction, (ii) warehouse/repository construction, (iii) ontology construction, and (iv) knowledge graph editing. System-internal methods/techniques of creating knowledge are (i) statistical data mining, (ii) knowledge discovery and interpretation, (iii) semantic relation analysis, (iv) pattern recognition and interpretation, (v) logical inferring, (vi) semantic reasoning, (vii) machine learning, (viii) deep learning, (ix) extreme learning, (x) task-specific reasoning, and (xi) causal modelling/inference. Numerous combinations of these external and internal knowledge availing and deriving methods/techniques have been applied in engineered systems so far.

Table 1.

A limited overview of the knowledge engineering activities.

The complex domain of SKE research decomposes to various subdomains such as (i) knowledge transfer (transfer of problem-solving expertise of human experts into programs), (ii) knowledge representation (using formal models, symbols, structures, heuristics, languages, algorithms), (iii) decision-making (strategies, tactics, and actions of logical and heuristic thinking), and (iv) creation of knowledge-based systems (explicit and implicit mechanisms and algorithms). The spectrum of knowledge-based systems has become wide, ranging from expert systems involving a large and expandable knowledge base integrated with a rules engine to unsupervised deep learning mechanisms with replaceable training data sets. Besides diversification, contemporary SKE also faces challenges rooted in (i) the interplay of the goal-setting and goal-keeping in systems, (ii) the dynamic changes in context information, (iii) possible self-adaptation and self-evolution of systems, (iv) the variety of tasks to be addressed in complex real-life applications, and (v) the explicit handling of common-sense knowledge that is always present (activated) in the case of human problem-solving. As in the case of organizations, integration of knowledge into a coherent “wholesome” body of knowledge for engineered systems is a complicated task [91].

Human-system dialogue has been proposed by Amiri, S. et al. as a specific form of extending system knowledge [92]. Knowledge representation captures knowledge in many alternative forms, for instance, as (i) propositional rules, (ii) semantic constructs, (iii) procedural scenarios, (iv) image sequences, (v) live video streams, (vi) alphanumerical models, (vii) mathematical expressions/formulas, (viii) relational schemes, (ix) synthesized (understandable) patterns, (x) explicit algorithms, and (xi) implicit algorithms. Independent of which representation is applied, interpretation is needed to make it explicit what concrete knowledge the representation carries. It means that a pattern can be considered to be knowledge only if it conveys extractable meaning that is useful for solving a decisional problem. If this does not apply, then the represented system knowledge degrades to pseudo-knowledge.

3. Gnoseology of Common Knowledge

3.1. Fundamentals of Gnoseology

Common knowledge is a broad and multi-faceted family of human knowledge, which includes three basic categories: (i) empirical knowledge (obtained by sensing, sensations, signals, trial-errors, measurements, observations, etc.), (ii) intuitive knowledge (obtained by beliefs, self-evident notions, gut feelings, faiths, acquaintances, conventions, etc.), and (iii) authority knowledge (obtained by social norms, expertise, codified constructs, de facto rules, propaganda, ideology, etc.). These categories of knowledge are characterized by incompleteness and uncertainty, strong subject-dependence, situation-sensitiveness, context-reliance, time-relatedness, and limitations in generalization. Barwise, J. identified three views from which it can be viewed: (i) the iterate approach, (ii) the fixed-point approach, and (iii) the shared-environment approach [93]. They are not, or not completely, factual and teachable kinds of knowledge. The philosophical stance of this kind of knowledge was extensively addressed over the centuries [94], but it is still the subject of many ongoing discussions in the specialist literature [95]. Historically, the Greek word “gnosis” has been used as an idiomatic term to name these common forms of “knowing” [96]. This term is said to have multiple meanings, such as: “individual knowledge”, “perceptual knowledge”, “acquaintance”, “explicit opinion, belief, and trust”, and the Greek distinction of “doxa”. From the words “gnōsis” (for knowledge) and “logos” (discussion), the term “gnoseology” was coined to name the various studies concerning what can be known about common things and practices in a truly concrete sense.

By way of an example, in the 18th century, the Latin term “gnoseologia” was used by Baumgarten, A.G. to name the study of non-teachable and not objectively testable knowledge related to aesthetic values and positions [97]. Many scholars deem gnoseology to be the theory of cognition, since it has not become interested in foundations, generalizations, and abstractions. Gnoseology is also frequently defined as (i) the philosophy of knowledge, (ii) the philosophic theory of knowledge, (iii) the theory of human faculties for learning, and (iv) the theory of cognition. For some scholars, (classical) gnoseology is the metaphysical theory of knowledge or the metaphysics of truth [98] and it became coextensive with the whole of metaphysics. Gnoseology has been focused on socially premised and historically loaded human sensory and affective cognition, viewing it as a process of achieving knowledge, the highest form of which is science [99]. Consequently, one can also understand gnoseology as a way of knowing of practical knowledge, reflexive knowledge, local knowledge, etc. without general and absolute cogency and validity [100]. As such, it could even cover understanding of knowledge gained through meditation.

Though the history of gnoseology is relatively long, it has not become common in philosophy and education for reasons that are difficult to uncover. The term is almost never used by English language philosophers. The fact of the matter is that the term has a varied etymology and has played different roles over time. As for now, the word “γνωσιολογία” has become more commonly used in Greek than in English for the study of the types of knowledge. For instance, gnoseology is often confused with gnosology, which is philosophical speculation and argumentation about gnosis, that is, esoteric or transcendental beliefs and mystical knowledge [101]. In the academic literature, gnoseology has been used with a dual meaning as “the philosophy of knowledge and cognition” and as “the study of knowledge”. In the Soviet philosophy, the word was often used as a synonym for epistemology [102]. Since intuitive and/or instinctual knowledge plays a less significant role in the productive segment of the industrialized society, the original concept of gnoseology is playing a less significant role. There are publications that refer to gnoseology as the theory of non-human rooted knowledge. Interestingly, Nikitchenko, M. proposed to use a gnoseology-based approach for developing methodological, conceptual, and formal levels of foundations of informatics [103]. Below, the modern (also meaning contemporary) interpretation of gnoseology is considered, together with the various views and perspectives related to it.

3.2. Potentials of Gnoseology

The Merriam-Webster online dictionary of English mentions four aspects of inquiry in which the philosophic theory of knowledge is interested, namely: (i) the basis of knowledge, (ii) the nature of knowledge, (iii) the validity of knowledge, and (iv) the limits of knowledge. This applies to gnoseology too. At large, gnoseology focuses on the socio-cognitive aspects of common individual knowledge, and addresses (i) the process by which the subject is transferred to a state of knowledge, (ii) the human faculties for perceiving, thinking and learning, (iii) the universal relationship of common knowledge to reality, (iv) the conditions of its authenticity and truthfulness, (v) the role and manifestation of human cognition, and (vi) the preconditions and possibilities of cognition. Though it has a tight connection with the theory of cognition, it differs from it (i.e., from the study of the mental processes and information generation and processing of the mind). Gnoseology studies knowledge as an intermediate phenomenon between its two elements, the subject and the object of knowledge, so the way both are conceived as entities is relevant. The concept of “gnosis” was regarded as alternative to the concept of “episteme” by Mignolo, W. He interpreted the role of gnoseology as a border thinking or border gnosis. By doing so, he positioned gnoseology as a third way of dealing with knowledge beyond “hermeneutics” (interpretation of beliefs) and “epistemics” (justification of beliefs) [104]. In the interpretation of Li, D., gnoseological research may deal with people’s spiritual and psychological phenomena, such as knowledge, emotion, and meaning, in particular with people’s value psychology, value perception, value concepts, and value evaluation [105].

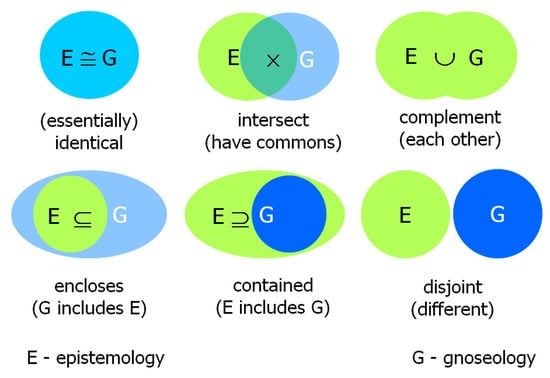

As the literature reflects, gnoseology is primarily concerned with the non-universal (particular, incomplete, non-justified) knowledge. Since it focuses on non-scientific knowledge (or the whole complement of scientific knowledge), it is often seen as the theory of partial approximate knowledge. It addresses scientifically not-justifiable domains of knowledge, such as instinctual, heuristic, intuitive, common-sense, sensual, axiological, experienced, etc. knowledge of individuals. Besides concrete individual beliefs, gnoseology has many source fields, such as daily life, social interaction, culture, education, religion, politics, authority, and profession, from the perspective of what it means to “know” in these fields. Notwithstanding, some writers emphasize the significance of dealing with universal (generic, complete, justified) knowledge [106]. Some researchers and philosophers see a transition from gnoseology to epistemology (specifically with Auguste Comte’s works), as well as concrete roads from gnoseology to epistemology [107,108]. Others considered epistemology as a subdomain of gnoseology (Figure 4) [109]. Gnoseology leads to epistemic thinking, which lends itself to the scientific study of perceptive, cognitive, and linguistic processes by which knowledge and understanding are obtained and shared. Epistemological thinking may transcend gnoseology by means of its level of analysis, as it takes care of the specific conditions of production and validation of scientific knowledge (historical, psychological, sociological circumstances, and justification criteria). The exact criteria to distinguish scientific knowledge and general knowledge are not really known (where does qualitative knowledge become scientific?) and the border line is blurred. Therefore, my assumption is that gnoseology can be deemed to study knowledge from an a priori point of view, and epistemology from an a posteriori point of view. In this vein, the concept of gnoseology is a broader and looser concept than that of epistemology.

Figure 4.

Conceivable relationships between gnoseology and epistemology.

4. Epistemology of Scientific Knowledge

4.1. Fundamentals of Epistemology

While gnoseology was an important chapter of the books on philosophical debates about knowledge since the Aristotelian time, epistemology has become a more operational concept over the centuries [110]. Historically, we cannot find traces of modern epistemology until the last two centuries. This is in line with the consolidation of science as a social establishment. Epistemology refers to the “theory of well-grounded (testable and learnable) knowledge, which has been captured by the Greek word ‘episteme’”. The term “epistemology” was introduced by the Scottish philosopher, Ferrier, J.F. [111]. In most of the philosophical textbooks, epistemology is interpreted either as the branch of philosophy that studies the origin, nature, kinds, validity, and limits of human knowledge, or as the theory of knowledge, especially with regard to its scope, methods, and validity, and the distinction between justified belief and personal opinion [112]. Epistemology is seen as normative and critically evaluative, rather than descriptive and explanatory. In other words, epistemology appeals to the philosophy of science and experimentation, whereas, as said by many, gnoseology uses only armchair speculation. Goldman, A.I. conceived two interrelated fields of epistemology [113]: (i) individual epistemology (related to cognitive sciences) [114] and (ii) social epistemology (related to social sciences) [115].

In a narrow sense, epistemology is understood as the study of the conditions of production and validation of scientific knowledge [116]. It accepts beliefs as the basis and necessary condition of a scientific system of knowledge, though certain epistemologists argue over whether belief is the proper truth-bearer. Epistemology applies rigorous criteria to distinguish scientific knowledge from common (general) knowledge. Sensations, memory, introspection, and reasoning are regarded as the sources of beliefs. In contrast to weakly grounded knowledge, scientific knowledge is a complex formation that includes laws of nature, empirical facts, tested theories, formal models, speculations, and hypotheses, all originated and formulated by humans [117]. These all coexist, evolve, and compete with each other.

Some philosophers think it is important to make a distinction between “knowing that” (know a concept), “knowing how” (understand a happening), and “acquaintance-knowledge” (known by relation). Epistemology is primarily concerned with the first of these. Epistemology of knowledge accepts four theories of knowledge, namely: (i) connectionist (associative), (ii) cognitive, (iii) constructivist, and (iv) behaviorist theories. Connectionist approaches focus on the presence or absence of associations and their quantity, while constructivist theories contemplate the reasons of knowledge such as causality, probability, and context. Behaviorist theories interpret knowledge as behavioral responses to different external stimuli, but do not contemplate internal cognitive (thought) processes. In cognitive theories, knowledge is treated as integrated and abstracted structures of information of various kinds. Hjørland, B. proposed that there are four basic epistemological approaches to knowledge organization, namely: (i) empiricist, (ii) rationalist, (iii) historicist, and (iv) pragmatist [118].

4.2. Potentials of Epistemology

As the theory of knowledge, epistemology has an extremely wide range of concerns. It poses questions and tries to find defendable answers to them. Actually, abundant questions are posed as relevant questions for epistemology. It is difficult to make any prioritization since most of these concerns and questions are interrelated. For the sake of an overview, four notional families of them are considered here. One of the families of questions is about the very essence (nature) of knowledge. Typical questions are: (i) What is sensory experience? (ii) What are beliefs? (iii) What is the rationality of beliefs? (iv) How do beliefs turn into knowledge? (v) What are the criteria for knowledge? and (vi) What is the nature of knowledge? A second family is about how humans can come to know. Representative questions are: (i) What are the sources of knowledge? (ii) What is consciousness? (iii) What is the role of memory? (iv) What is the truth of reason? (v) What are the kinds of testimony? (vi) How do we know that we know? (vii) What is the relationship between the acquired knowledge and the knower? (viii) What is the role of skepticism? and (ix) How does language construct knowledge?

A third family is about the conditions of knowledge. Most related questions are: (i) What is justification? (ii) When is a belief justified? (iii) What makes justified beliefs justified? (iv) What is truth? (v) When is knowledge true? (vi) What is correctly proven true belief? What is correct inference and reasoning? (vii) When is knowledge coherent? (viii) What is dogmatism? (ix) What is the grounding of scientific knowledge? (x) What is moral knowledge? and (xi) What is religious knowledge? Lastly, a fourth family of questions is about the insinuations of knowledge. For instance: (i) When do we know something? (ii) What is fallibility? (iii) How do we ensure valid knowledge? (iv) What is belief-less knowledge? (v) What is common sense? (vi) What is evidence? (vii) What is abstraction? (viii) What is context? (ix) What are possibilities for knowledge integration? (x) What is intelligence? and (xi) What is wisdom?

In the last decades, epistemology-oriented thinking penetrated into systems science and system engineering with different purposes. Only some demonstrative contributions can be mentioned here. Helmer, O. and Rescher, N. discussed the need for a new epistemological approach to the inexact sciences, since explanation and prediction in the case of these sciences do not have the same logical structure as in exact sciences [119]. As new methodological approaches, they mention system simulation and expert judgment. Ratcliff, R. exposed a specific application of epistemology to support system engineering [120]. Hassan, N.R. et al. discussed the relationship of philosophy and information systems [121]. De Figueiredo, A.D. argued that the developing epistemologies of design and engineering contribute to a renewed epistemology of science [122]. Frey, D.D. discussed five fundamental questions to stimulate the establishment of epistemological foundations of engineering systems [123]. Striving for an epistemology of complex systems, Boulding, K.E. concluded that the primary obstacle for the development of a robust knowledge platform for them is the lack of predictability [124].

Hooker, C. argued that, ultimately, the goal of the philosophy of science is to develop mature foundations and a philosophy of complex systems, but that attempting this is premature at this time. What is needed instead of the traditional approach of normative epistemology is capturing the emergent macroscopic features generated by actor interactions [125]. Möbus, C. found that hypothesis testing plays a fundamental role in a cognitive-science-oriented theory of knowledge acquisition as well as in intelligent problem-solving environments, which are supposed to communicate problem-solving knowledge [126]. Houghton, L. argued that epistemology is the foundation of what researchers think knowledge could be and what shape it takes in the “real” world around them, and that a “systems” epistemology should use multiple ideas and conceptual frameworks in the business of making sense of real-world problems [127]. Horváth, I. argued that research in system epistemology is at an early stage [128]. The epistemological assumptions made by different researchers have resulted in an epistemological pluralism, which concerns (i) what knowledge systems are based on, (ii) what we know about them, (iii) what knowledge their implementation needs, and (iv) what knowledge they can possess and produce. However, as this concise overview implies, even the latest epistemological studies do not explicitly address synthetic system knowledge.

5. A Proposal for the Theory of Synthetic System Knowledge

5.1. The Major Findings and Their Implications

The previous sections of the paper were intended (i) to describe the background of thinking about system knowledge and (ii) to give an overview of the current academic progress. The major findings are as follows:

- The time has come to consider system knowledge not only as an asset, but also as the subject of knowledge-theoretical investigations. Such investigations may provide not only better insights into the nature, development, and potential of system knowledge, but may also advise on trends and future possibilities.

- The picture the literature offers about system knowledge is rather blurred. The two major constituents of systelligence, the dynamic body of synthetic system knowledge and the set of self-adaptive processing mechanisms, are not yet treated in synergy.

- The basis of system knowledge is human knowledge, the tacit part of which is difficult to elicit and transfer into engineered systems. On the other hand, the intangible part of human knowledge is at least as important for the development of smartly behaving systems as the tangible part.

- The literature did not offer generic and broadly accepted answers to several fundamental questions concerning the essence, creation, aggregation, handling, and exploitation of system knowledge.

- Human knowledge is one of the most multi-faceted phenomena, and it has been intensively debated and investigated since ancient times. Perhaps the largest challenge is not its heterogeneity, but the explosion of knowledge in both the individual and the organizational dimensions.

- Categorization, structuring, and formalization of human knowledge make it possible to transfer it to engineered systems, but current computational processing is restricted mainly to the syntactic (symbolic) level, and only partly extends to the semantic level.

- System knowledge is characterized by an extreme heterogeneity not only with respect to its contents, but also with respect to its representation, storage, and processing. The computational processing of these different bodies of knowledge needs appropriate reasoning mechanisms.

- There are five major families of ampliative computational mechanisms, which, however, cannot be easily combined and cannot directly interoperate due to the differences in the representation of knowledge and in the nature of the computational algorithms.

- The abovementioned issues pose challenges to both knowledge engineering and knowledge management, as does the issue of compositionality of smart and intelligent engineered systems. One of the challenges is the self-acquisition and self-management of problem-solving knowledge by systems, as a consequence of their increasing smartness and autonomy.

- The two known alternative theories of knowledge, offered by gnoseology and epistemology, focus on the manifestations of the common knowledge and of the scientific knowledge, respectively. Their relationship to synthetic system knowledge is unclear and uncertain.

- Synthetic system knowledge is composed of bodies/chunks of scientific knowledge and of common knowledge, as well as chunks/pieces of system-inferred and system-reasoned knowledge. Its entirety and its run-time acquired parts are addressed neither by modern gnoseology nor by contemporary epistemology.

5.2. Proposing Sympérasmology as the Key Theory of System Knowledge

The above findings imply the following question: If epistemology and gnoseology are not tailored to, and do not have any tradition in, theorizing synthetic system knowledge, then what is the name and essence of the theory of the knowledge that is partly derived from human knowledge and partly self-acquired and self-aggregated by specific engineered systems equipped with cognitive (learning, reasoning, deciding) capabilities? Do we have, or is there any, emerging x-ology dedicated to the knowledge possessed and/or acquired by smart and intelligent engineered systems? Currently, there is none. If beliefs, truths, justification, foundationalism, and coherentism are the main conceptual pillars of the theory of knowledge in contemporary epistemology, what will these be in the theory of synthetic system knowledge? It is not illogical to think that this theory needs additional potentials different from those that gnoseology and epistemology have. At least the first results of the pioneering steps in research underpin this claim [129]. Though the need for it has emerged, a novel philosophical discipline and the new theory of SSK will evidently not fall from the sky. Its starting points should be initiated by researchers and philosophers. To contribute to this has been the goal of this paper.

My five cents are as follows: Should we want to name a branch of inquiry, then the most appropriate approach is to start out from what it investigates (what its subjects are). What an engineered system with cognitive capabilities can derive by inferring or reasoning resembles “personal opinion” as considered in gnoseology. In other words, a highly autonomously operating system generates pieces of knowledge that are closer to “gnosis” than to “episteme”. Anyway, building “system gnosis” on “human gnosis” and “human episteme” is what humans (knowledge engineers) already do. The system operates as a learning agent, which makes sense of the derived pieces of knowledge using its own hacks and tricks, admittedly on a larger scale. The overall reasoning process it implements includes observation -> inferring -> learning -> planning -> adaptation. One major assumption is that synthetic system knowledge (SSK) is a genuine third, synthetic category of knowledge (of inferential nature), which only partially relies on human consciousness, perception, cognition, and/or emotion. Another major assumption is that a significant part of SSK is conjectured, inferred, constructed, or otherwise derived during the operation (run-time) of systems and that this part will become even more dominant in the future.
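The chain observation -> inferring -> learning -> planning -> adaptation can be illustrated by a deliberately simple sketch. It is not an implementation of any system discussed in this paper; the class names (`KnowledgeItem`, `ToyAgent`), the temperature threshold, and the action labels are all hypothetical and were chosen only to show how run-time derived knowledge accumulates next to knowledge transferred from humans.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeItem:
    statement: str
    origin: str  # "human" (transferred at design time) or "derived" (run-time)


@dataclass
class ToyAgent:
    # Hypothetical agent; the threshold stands in for transferred human knowledge.
    knowledge: list = field(default_factory=list)
    threshold: float = 30.0

    def observe(self, reading: float) -> float:
        # Observation: accept a raw sensor reading.
        return reading

    def infer(self, reading: float) -> str:
        # Inferring: draw a conclusion from the reading and the current threshold.
        return "overheating" if reading > self.threshold else "normal"

    def learn(self, conclusion: str) -> None:
        # Learning: store the conclusion as run-time derived knowledge.
        self.knowledge.append(KnowledgeItem(conclusion, "derived"))

    def plan(self, conclusion: str) -> str:
        # Planning: choose an action based on the conclusion.
        return "reduce_load" if conclusion == "overheating" else "keep_going"

    def adapt(self, action: str) -> None:
        # Adaptation: become more cautious after an overheating episode.
        if action == "reduce_load":
            self.threshold -= 1.0

    def step(self, reading: float) -> str:
        conclusion = self.infer(self.observe(reading))
        self.learn(conclusion)
        action = self.plan(conclusion)
        self.adapt(action)
        return action
```

In this toy setting, every call to `step` adds one item with origin `"derived"` to the knowledge store, so the share of run-time derived knowledge grows with operation time, which mirrors the second major assumption stated above.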

In the Greek language, the cognitive operation connected to this is denoted by “symperaíno̱” (συμπεραίνω). The mentioned type of inferred knowledge is called “sympérasma” (συμπέρασμα) in Greek. This word also has a second meaning, which expresses a bottom line of knowing or a consolidated conclusion. The word “symperaínetai” (συμπεραίνεται) is applied to something that is deducible and concluded. This name identifies SSK uniquely and differentiates it from the terminology of gnoseology and epistemology. Using the notion of “system gnosis”, the theory of SSK could be called “meta-gnoseology”, “proxy gnoseology”, or “quasi-epistemic gnoseology”. However, for the sake of separating it from gnoseology and epistemology [130], I have called it “sympérasmology”. The first part of the term refers to the inferred intellect (as a fundamental constituent of smart system operation) and the second part refers to the associated “logical, semantic, pragmatic, and apobetic discourse” [131]. Can it be accepted as the name of the sought-for pluralistic x-ology?

The study circumscribed so far is intended to focus on the whole of systems-owned knowledge, which is largely presumed, conjectured, deduced, inferred, or concluded in any proper way. Since the progression of the “sympérasmological” theory of SSK will go hand in hand with the progression of systems science and engineering, it will need not only rational, but also empirical, investigations. In this sense, it goes beyond the methodological approaches (informed speculation and rational investigation) of gnoseology and epistemology. For these reasons, the elaboration of a sympérasmological theory of SSK can be judged to be an overambitious effort, and teleological and pragmatic trade-off issues can be brought up. However, taking a position concerning the trade-offs is easier than in the case of ontology and epistemology. The benefits lie not only in a clearer academic view, but also in the opportunity for more dependable innovation strategies and better engineering decisions about the proper intellectualization of systems for industrial and social applications. “Sympérasmology” cannot be implemented as a simple augmentation of gnoseology or epistemology, since it should be based on different fundamental concepts and principles originating in the very nature and processing mechanisms of synthetic system knowledge. In this sense, it may even hurt the epistemological hegemony. On the other hand, it may have a disruptive influence on the design, engineering, application, and utilization processes of smart (and intelligent) systems. According to the conceptualization presented here, it concentrates only on knowledge that is formally or informally embedded in systems and is locked in their processes.

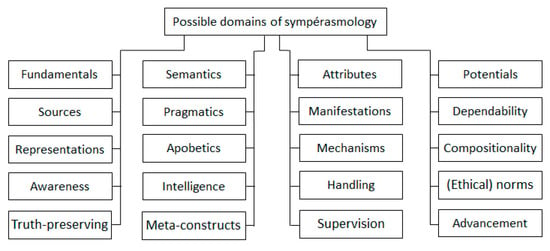

5.3. Domains of Sympérasmological Investigations

There are three basic requirements for “sympérasmology”, namely, it must be: (i) intelligible, i.e., comprehensible by professionals working on SSK, (ii) robust, i.e., allowing for the future developments of the domain, and (iii) distinguishing, i.e., maintaining its conceptual uniqueness and utility. Some possible specific domains of “sympérasmological” studies are indicated in Figure 5. The identified domains reflect the fact that the essence and status of SSK cannot be investigated without considering the knowledge concerning the related cognitive processes and technological enablers. As in the case of epistemological studies, abundant sets of concrete inquiry questions can be formulated for each domain. This is suggested for follow-up research activities and consolidation. It must be noted that none of the above domains is intended to overlap with the areas of interest of the ontology, methodology, computation, or praxeology of SSK. These issues will be discussed in a subsequent publication that aims at an intertwined exploration and a deeper analysis of the reasonable inquiry and analysis domains of sympérasmology.

Figure 5. Possible domains of sympérasmological investigations.

Funding

This research received no external funding.

Conflicts of Interest

The author declares no conflict of interest.

References

- Nuvolari, A. Understanding successive industrial revolutions: A “development block” approach. Environ. Innov. Soc. Transit. 2019, 32, 33–44.

- Wang, Y.; Falk, T.H. From information to intelligence revolution: A perspective of Canadian research on brain and its applications. In Proceedings of the IEEE SMC’18-BMI’18: Workshop on Global Brain Initiatives, Miyazaki, Japan, 7–10 October 2018; pp. 3–4.

- Makridakis, S. The forthcoming artificial intelligence (AI) revolution: Its impact on society and firms. Futures 2017, 90, 46–60.

- Kastenhofer, K. Do we need a specific kind of technoscience assessment? Taking the convergence of science and technology seriously. Poiesis Prax. 2010, 7, 37–54.

- Canton, J. Designing the future: NBIC technologies and human performance enhancement. Ann. N. Y. Acad. Sci. 2004, 1013, 186–198.

- Wetter, K.J. Implications of technologies converging at the nano-scale. In Nanotechnologien nachhaltig gestalten. Konzepte und Praxis für eine verantwortliche Entwicklung und Anwendung; Institut für Kirche und Gesellschaft: Iserlohn, Germany, 2006; pp. 31–41.

- Ma, J.; Ning, H.; Huang, R.; Liu, H.; Yang, L.T.; Chen, J.; Min, G. Cybermatics: A holistic field for systematic study of cyber-enabled new worlds. IEEE Access 2015, 3, 2270–2280.

- Zhou, X.; Zomaya, A.Y.; Li, W.; Ruchkin, I. Cybermatics: Advanced strategy and technology for cyber-enabled systems and applications. Future Gener. Comput. Syst. 2018, 79, 350–353.

- Horváth, I.; Gerritsen, B.H. Cyber-physical systems: Concepts, technologies and implementation principles. In Proceedings of the TMCE 2012 Symposium, Karlsruhe, Germany, 7–11 May 2012; Volume 1, pp. 7–11.

- Horváth, I.; Rusák, Z.; Li, Y. Order beyond chaos: Introducing the notion of generation to characterize the continuously evolving implementations of cyber-physical systems. In Proceedings of the 2017 ASME International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Cleveland, OH, USA, 6–9 August 2017. V001T02A015.

- Wang, Y. On abstract intelligence: Toward a unifying theory of natural, artificial, machinable, and computational intelligence. Int. J. Softw. Sci. Comput. Intell. 2009, 1, 1–17.

- Wang, Y. Towards the abstract system theory of system science for cognitive and intelligent systems. Complex Intell. Syst. 2015, 1, 1–22.

- Diao, Y.; Hellerstein, J.L.; Parekh, S.; Griffith, R.; Kaiser, G.; Phung, D. Self-managing systems: A control theory foundation. In Proceedings of the 12th International Conference and Workshops on the Engineering of Computer-Based Systems, Greenbelt, MD, USA, 4–7 April 2005; pp. 441–448.

- Horváth, I. A computational framework for procedural abduction done by smart cyber-physical systems. Designs 2019, 3, 1.