1. Introduction

Socially interactive “robots” bearing that name have been an object of interest since the very term was coined in the influential play R.U.R. by Karel Čapek in 1920 [1], with popular mythology concerning automatons going back centuries, if not millennia.

As robots move from being the stuff of legends to being presented as potential utilities for our own homes, there is increasing interest in understanding how they might need to be designed to adequately support social interactions with humans.

But what exactly is a “social robot”? While the definitions are still murky, as de Graaf [2] points out, there is a consensus that a social robot must interact in a human-like way. For our purposes, the social robots that we have chosen to study exhibit social behavior (e.g., humor, compassion, the ability to play) and interact in a human-like manner via speech and non-verbal language. They are marketed with descriptions such as “the world’s first family robot” [3], “The companion robot” [4] and “genuine day-to-day companion, whose number one quality is his ability to perceive emotions” [5]. When referring specifically to robots designed for the home, throughout this article we use the term “Social Domestic Robot” (SDR), to emphasize that such a robot is social and designed specifically for domestic purposes.

Now that such machines can be manufactured, and assuming more will soon be available for sale, how can we ensure that what is produced fits the expectations of early adopters? These robots represent a fantastic opportunity to test many theories that the Human-Robot Interaction (HRI) and Human-Computer Interaction (HCI) communities have harbored for years, from technology adoption and acceptance to models of interaction in the wild, which in this case is the domestic environment. Moreover, these SDRs represent a realistic opportunity to test more future-oriented notions around the development of deep, extensive relationships between people and robots, an idea that has been extensively discussed as inevitable but little tested.

To facilitate this kind of testing, we conducted interviews with 20 participants who were shown advertisements for three SDRs. These ads pitched the robots to prospective customers and demonstrated potential use cases for each. The interviews allowed participants to reflect, based on their first impressions, upon the features they felt were most important in such machines. They also uncovered participants’ initial resistances and suspicions regarding these robots (and therefore barriers to adoption and engagement). From the interview data, we have compiled design considerations for the next generation of commercial SDRs which, by taking a sample of potential early adopters’ considerations into account, should help ease the introduction of such robots into the home environment.

Through our qualitative analysis of the interview data, we explore the reasoning behind our participants’ perceptions of the robots, and use this to contemplate whether the specific SDRs tested are well-adapted for their utilitarian and social roles in the home. In this analysis, we also touch upon the potential for SDRs to form long-lasting relationships with their human owners.

This article makes the following contributions to the HRI community: (1) It provides an in-depth understanding of potential users’ first concerns when viewing advertisements for SDRs, covering issues such as privacy, functionality, physical design and potential emotional impacts of use; (2) Our study confirms and expands upon elements of existing models of technology acceptance concerning SDRs, adding more factors that designers need to consider when conceptualizing their own SDRs for development; (3) We put forward practical steps that designers of SDRs can adopt, to ensure that human-robot relationships can productively develop.

2. Literature Review

Below we expand on theoretical, laboratory and home-based evaluations of social and domestic robotic technologies. As we discuss the devices used in such evaluations, we point out that there has been little, if any, research on devices that share the qualities of SDRs. We further review the two technology acceptance frameworks that were developed specifically for robot acceptance at home, and consider how they accommodate SDRs as suitable candidates for the home.

2.1. Theoretical Bases for SDRs

In scientific literature, the notion of practical applications of artificial social intelligence can be traced at least as far back as 1995, when Dautenhahn suggested including social aspects in the development of robotics, artificial intelligence and artificial life, furthering a prior suggestion that primate intelligence first evolved to solve social problems and only then moved on to problems outside of the social domain [6]. Only a year later, Reeves and Nass published a compilation of their work asserting that humans treat computers rather like real people, furthering the notion that social engagement with machines is a common response [7]. Later, extensive arguments for the possibility of deep emotional engagement with robots, including love and sex, were introduced to broad audiences by Levy [8].

2.2. Social Robots in Laboratories

Much of the practical research on social robotics has been performed in laboratory settings and/or has involved very short periods of user engagement. Much of the early work done at MIT with Kismet (1997) [9,10] and Cog (1998) [11,12] showed that even very brief or simplistic interactions can elicit quite emotional responses from people, especially children, as indicated by Turkle [13]. MIT has continued to develop the social aspects of robotics with projects such as Leonardo (2002) [14], which could learn about objects from the emotional vocal expressions of a human teacher, much as children do; Huggable (2005) and related technologies aimed at children [15]; and Nexi (2008) [16], a mobile, dexterous, social (MDS) robot designed, among other things, to cooperate with humans on collaborative tasks.

These examples show robots trying to perform social roles in different environments: in the lab (Kismet, Cog), at home with children (Huggable, etc.) and outside, collaborating with humans, as is the case with Nexi. Given that some of these were designed specifically for home environments, we can already see social robotics moving into the private sphere of human activity.

Other universities joined the effort: for example, Maggie (2005) [17] was developed by Universidad Carlos III de Madrid; Stanford has done work on robots performing social roles in museums [18]; and Hiroshi Ishiguro at the ATR Intelligent Robotics and Communication Laboratories has tested robots in field trials (e.g., [19]), concluding that robots with a more pronounced ability to maintain relationships with people will have practical uses in daily life, including teaching and motivating elementary school children. Homes, as places for families with children, present an environment where children could interact extensively with SDRs. Acceptance by children, and even deep emotional ties with them, is therefore an important factor to consider when designing an SDR (Turkle [13] discusses this topic at length).

Samani went a step further towards more intimate relationships with robots with his introduction of Lovotics [20].

While short-term laboratory experiments provide some initial information, de Graaf [2] notes that there are very few studies of longer-term interactions in homes. There are fewer studies still on socially enabled robots for the home, since such robots have only recently come to market. Below, we review some of the attempts made to investigate domestic robotic technologies, and point out how close those robots come to the definition of SDRs, the robots in question in this article.

2.3. Research on Commercially Available Robots for Domestic Use

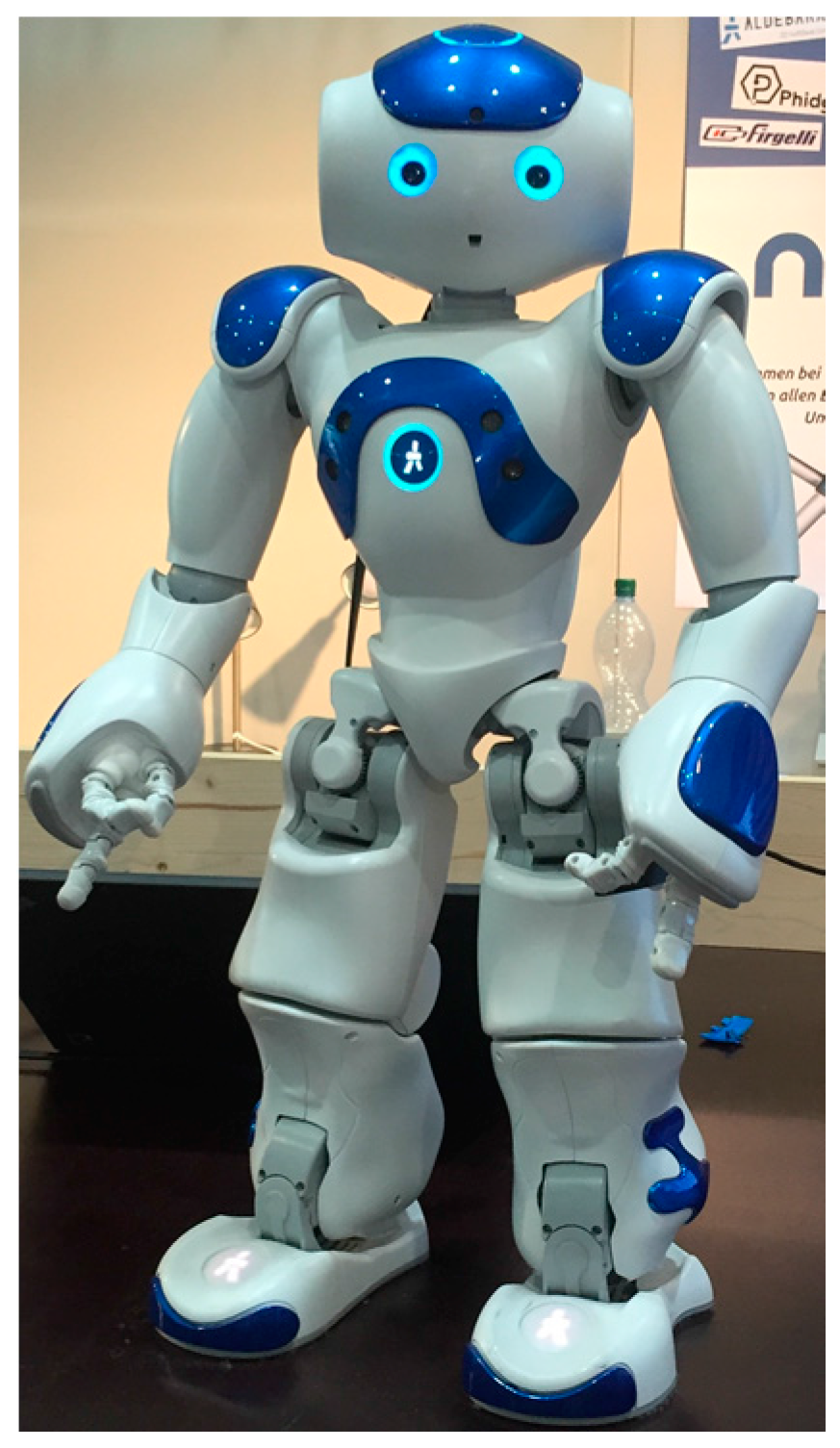

2.3.1. NAO

NAO by Aldebaran Robotics (Figure 1) is a humanoid robot often used in HRI research (e.g., [21,22,23,24,25]). While it is a promising platform, given its ability to interact both verbally and non-verbally and its programmability (e.g., it can be modified to include capabilities such as “humor”), at a price point of USD $9000 [26] it is highly unlikely to become a common sight in homes for some time. Nor does it help that there is very little, if any, research evaluating NAO specifically within domestic spaces. NAO is portrayed as a teleoperated device (e.g., [27]), and further evidence from [28] suggests that while the robot enjoys popularity, it is not seen as one for domestic use.

2.3.2. iCat

iCat by Philips enjoyed some popularity as a research platform, including research with children (e.g., [29,30]). Being able to use audio and facial animation, it could be considered an SDR in terms of its communication modes, but not in terms of its utilitarian value. Technology, however, has moved on significantly since 2005, when iCat was created, and while updates for NAO are steadily deployed, iCat has become obsolete. There is also little to no evaluation of iCat in a domestic setting.

2.3.3. Roomba

iRobot’s Roomba (Figure 2) has for several years been a very popular choice of robot for studies in the home. It is a robot vacuum cleaner, designed by a commercial company and priced in a range akin to higher-end domestic appliances (£349.99–£799.99 [31]). While many studies have discussed the social dimensions people ascribe to Roomba [32,33,34], and it is one of the few robots to have been evaluated over a long period of time in a domestic setting [35,36], it falls far short of being able to communicate with people in a human-like manner and thus should not be considered an SDR. Still, given that the robot is relatively well researched, in this article we consider the robot acceptance framework that was largely based on interactions with Roomba devices.

2.3.4. Other Evaluations of Robotic Technologies at Home (AIBO & Pleo)

There have been a number of smaller investigations with robots in the home. We review two commercially available robots that have gained some scientific traction and enjoyed popularity: AIBO by Sony and Pleo by Ugobe.

AIBO (Figure 3a) was a robotic dog produced between 1999 [37] and 2006 [38]. It received attention from the academic community and the general population alike, with some owners developing very strong bonds with the robot [39]; however, long-term studies of AIBO are hard to come by. We can only point towards an analysis of AIBO-related forum postings [40] (presumably by AIBO owners), in which, much as in the case of Roomba, users attribute social behaviors or intentions to the robot. AIBO does not quite fall into the category of SDRs either, since its communication is quite limited and therefore not “human-like”.

Pleo (Figure 3b) is a robotic dinosaur. Although it is quite a sophisticated device, what little evidence we have of its long-term use suggests that participants thought of it as a toy [41]. This is further supported by an analysis of blog posts related to the toy [42]. Pleo also falls short in its communication modes, again being some way from “human-like”.

2.4. Models of Robot Acceptance at Home

There have been several frameworks of domestic robot technology acceptance and adoption. Below, we review two frameworks based on longer-term studies, as we believe they have been more rigorously tested by real users and were designed around devices that were commercially available to users at the time, much like the SDRs we selected for our study. As we review each model, we note the similarities and differences between the robots used to create it and the robots explored in our study. We also focus exclusively on the first phase of each framework, the pre-adoption phase, which is the closest to our current study. The first framework, described in [43] and later simplified by [44], was based on a utilitarian robot (Roomba). The second, described in [2] and elaborated on in [45], was designed around robots that had both social and utilitarian roles, and is thus the closest to the SDRs we studied.

2.4.1. Sung et al.’s Domestic Robot Ecology (DRE) Framework

The DRE Framework [43] (later simplified by [44]) was developed using Roomba vacuum cleaners and spans four phases. In the pre-adoption phase, “…people learn about the product and determine the value. Also, they form expectations and attitudes toward objects” [43]. Indeed, prior expectations were the main topic discussed in that phase, with participants’ expectations depending on their tech-savviness. The main emphasis was on a domestic robot being a tool. The authors of [44] further report that the expectation was for the robot to improve the cleanliness of the house, with less pronounced idiosyncratic notions about the robot’s appearance.

While a number of studies have shown Roomba being ascribed social roles (e.g., [32,33,46]), the authors of [43] note that Roomba itself is a rather basic robot and that more developed robots could perform social roles. The authors of [44] also point out that Roomba ended up being experienced as a tool rather than as a combined utilitarian and social companion. This is the main difference between Roombas and the robots in our study: SDRs are positioned to perform both utilitarian and social roles, while Roomba is positioned to perform a single utilitarian role, vacuum cleaning. Even so, the DRE Framework postulates that social roles would play a significant part in the acceptance of robots, thus positioning SDRs as suitable robotic companions for homes.

2.4.2. De Graaf’s Phased Framework of the Long-Term Process of Robot Acceptance

Providing an alternative to the above framework, de Graaf based her model on domestication theory and the “diffusion of innovations” theory, using Nabaztag and its successor, Karotz, as test robots. She introduced a six-phase framework of technology acceptance [2]. As explained in [45], in the pre-adoption phase “people learn about the technology, determine its value and form expectations and attitudes towards it before they invite the technology into their homes”. This phase thus also captures how people form expectations of new technologies. The influencing variables she found at that stage were social influence, usefulness, realism, attitudes towards robots, enjoyment, anthropomorphism, attitude to use and intention to use.

Nabaztag and Karotz represent the robots that are the closest to the ones investigated in this article: they have both social and utilitarian functions; are connected smart devices; programmable; and use both verbal and non-verbal language to communicate (lights and ear motions in this case).

2.5. Explosion of Interest in SDRs

Since 2014, we have seen more and more interest in commercially viable SDRs—those which are social (having sophisticated interaction capabilities, often both verbal and non-verbal), domestic (designed specifically for use in the home), available for a relatively affordable price (USD $400–$700 range), and actually being robots with dedicated hardware (as opposed to software to be used in a general purpose computer, e.g., chat bots).

Pepper, developed by Aldebaran Robotics together with SoftBank, has seen and continues to see successful sales in Japan, with 10,000 units sold up to 5 January 2017, and the company plans to expand into new markets such as the U.S. [47]. As Pepper is sold to both the general public and businesses, we are beginning to see evidence of deep, prolonged and emotional relationships developing between SDRs and people. This is evident in the public blogs of Tomomi Ota, who records her relationship with Pepper [48].

In 2016 there were further developments in the field, starting with announcements of Jibo [49] and Buddy [50], developed by start-ups or through crowdfunding, followed by ASUS with its Zenbo robot [51]. Both Jibo and Buddy were also demonstrated at CES 2017 [52,53], further confirming that the projects are still financially afloat. New SDR announcements continue to appear, reflecting a growing interest in this space (e.g., Olly [54], Oding, and Eywa E1 [55]).

The potential of SDRs has also been noted by major companies such as KPMG, which advocates the use of such machines in a business context [56], showing the appropriation of initially domestic technology for other uses.

2.6. Where Do We Fit In?

Given their potentially broad adoption by both scientific communities and the general public, it seems sensible to begin discussing (and influencing) the development of these new technologies. The development and introduction of SDRs to commercial markets will have significant impacts on the Internet of Things (IoT), home automation, the privacy of individuals, and other aspects of technology-led private life. SDRs also provide a useful platform to study many aspects of human-robot interaction, including relationship formation, and are therefore of primary interest to the scientific community.

Herein, we introduce a qualitative perspective on the human-robot relationship, and use information sources that are publicly available to potential users, not only to highlight the immediate issues people perceive prior to the acquisition of an SDR, but also to illuminate the emerging research challenges around this topic.

As we detail below, the points that academic researchers perceive as affecting the acceptability of SDRs, as presented to them in product pitches, are not precise, measurable characteristics such as exact functions, algorithms or programming routines. Instead, they concern aesthetics, functionality relative to existing technology, and wider social issues around data handling, along with other nuanced factors such as a potential user’s family composition or previous exposure to various cultures and living environments.

3. Materials and Methods

3.1. SDRs Used in the Study

Below we outline the three SDRs of interest in this study, namely Pepper, Jibo and Buddy. While Pepper has been on sale in Japan for some time (and now sells internationally), Jibo and Buddy have yet to enter the market. Jibo is promised to ship some time in 2017, and Buddy in July 2017 [57].

These three SDRs were chosen to represent the various cultures that give rise to such machines (Pepper is Japanese, Jibo is designed in the U.S. and Buddy is a French product), as well as the various design decisions that commercial producers make when creating an SDR (Pepper is humanoid, Buddy is more abstract and mobile, while Jibo is still more abstract and static).

3.1.1. Pepper

Pepper (Figure 4) is a robot developed by Aldebaran Robotics and SoftBank in Japan. It is a 1.2 m tall robot with arms, on a wheeled base. Its advertised capabilities [58] include recognizing emotions such as joy, sadness, anger and surprise; it can also recognize facial expressions such as a frown or a smile, as well as tone of voice. Simple non-verbal cues, such as the angle of a person’s head, are also said to be recognizable.

Among its other features are the ability to hear (via four directional microphones on the head) and speak, to see with two standard HD cameras and one 3D camera, to connect to the internet (via 802.11a/b/g/n Wi-Fi), and to use the tablet strapped to its chest for further functionality and to express its own emotional states. It also has what SoftBank calls an “emotional engine”, which allows Pepper to learn about you and adapt its character to suit you through learning and dialogue.

According to its technical specifications, Pepper has anti-collision and self-balancing systems to avoid collisions and to prevent falls if pushed; three multi-directional wheels that let it move in any direction at speeds of up to 0.83 m/s; and a battery life of up to 12 h. In terms of sensors, it has two ultrasound transmitters and receivers, six laser sensors and three obstacle sensors, which provide information about obstacles within a three-meter range. Tactile and temperature sensors are also installed.

SoftBank welcomes independent developers, and the latest version of Pepper runs on Android, allowing people to extend its software functionality.

3.1.2. Jibo

Jibo (Figure 5) is a crowdfunded SDR developed by Cynthia Breazeal in the US. It is ~28 cm tall, has a ~15 cm-wide base and weighs about 2.27 kg without batteries [59]. It is advertised [60] as being able to see with two HD cameras, hear with 360-degree microphones, speak, learn, help and relate to you.

Jibo has a battery that lasts ~30–40 min; otherwise, it is powered from the mains [59]. It does not have wheels, but rotates on its base.

Jibo is advertised as a platform for which anyone can develop applications, dubbed “skills” [59]. A free SDK (now in beta) is available for download. Jibo is built on a version of Linux with proprietary code on top of it [59].

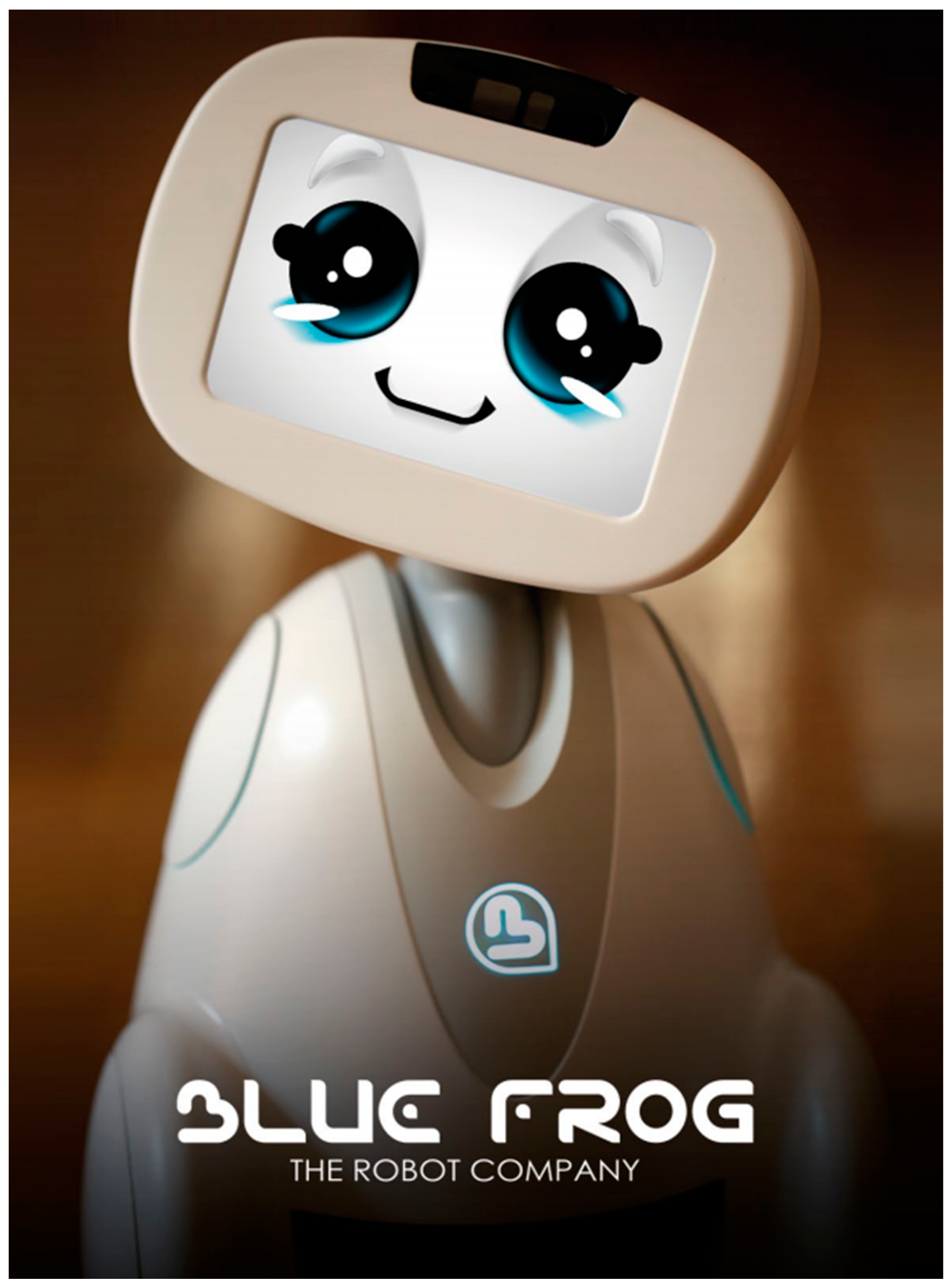

3.1.3. Buddy

Buddy (Figure 6) is an SDR developed by Blue Frog Robotics in France. It measures 56 × 35 × 35 cm and weighs just over 5 kg [4]. Its advertised services include home security, connection to a smart home, help in social interactions, personal assistance, audio/video and photo capture and replay, edutainment, elder care and more through applications [4].

Further physical characteristics include one range sensor and one temperature sensor on the head, five obstacle-detection sensors, three range finders and five ground sensors, as well as one camera, one microphone, audio output, and HDMI and USB outputs. Two speakers are also mounted on the head. The face is shown on a 20.3 cm touchscreen display, and RGB LED lighting is also supported.

Buddy has four motors, full 360-degree movement and a maximum speed of 0.7 m/s; it supports Wi-Fi and Bluetooth, and its battery lasts eight to ten hours. Its obstacle clearance is 1.5 cm.

Buddy supports Arduino, the Unity 3D engine and Android for development. The API is under development, with support for multiple languages and development tools [61]. The IDE is built on the Unity engine.

3.2. Participants and the Interviewer

3.2.1. Recruitment

Participants were a self-selected sample, responding to an email advert sent around a university in the North of England, UK. The advert invited anyone interested (staff or students) to participate in an interview on social robotics, indicating that participants would watch three four-minute videos and then be asked some questions about what they saw.

3.2.2. The Participants

Twenty participants (10 males, 10 females) were recruited for this study. All participant names reported below have been pseudonymised.

Participants’ ages ranged from 22 to 44 years (mean = 29.65, σ = 5.228); 5 staff members and 15 students took part in the study.

Cultural prevalence refers to any country in which a participant has spent 5 or more years. The motivation for capturing this information stems from the studies by Li, Rau and Li [62] and Evers et al. [63], which indicate that responses to a robot’s appearance and decisions may be culturally motivated. This information helped us understand participants who framed their experiences or expectations of robots directly in relation to cultural ideals or notions they had experienced. The following cultures were represented: the UK (14), Saudi Arabia (2), and Australia, Hong Kong, Iraq, UAE, Taiwan, Egypt, Jordan, Denmark, Germany, Kyrgyzstan and Russia (one each).

None of the participants had been involved in this project prior to the interview, nor had they worked on any projects related to robotics, HRI or AI. They may be considered potential early adopters, while not being experts or having academic interests in the field. Participants were colleagues within the same institution, but otherwise unrelated to the interviewer. They were unaware of any particular goal, personal or professional, with regard to this research. It is assumed that participants were truthful and voiced opinions they genuinely held. It was highlighted to them before the interview began that (to quote from the information sheet): “There are no right or wrong answers as the study is purely designed for exploratory purposes and does not attempt to test you in any way.”

3.2.3. The Interviewer

The interviewer was the first author of this study, a male doctoral trainee with appropriate training in conducting interviews, and the primary investigator of the issues reported in this article. To minimize any possible interviewer bias, we sampled from a range of age groups, balanced participant genders and analyzed the data from transcripts rather than from audio.

3.3. Research Protocol

All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of Newcastle University and referenced as “User perception of social robots based on promotional videos thereof”. An email with a link to the published study was deemed sufficient compensation for participants’ time.

All interviews were conducted in a quiet conference room within an office building where participants worked, with no one but the participant and the interviewer in the room. There were no interruptions. All interviews were conducted in the summer of 2016. The interview was piloted with 5 participants and did not undergo any significant changes as a result. No repeat interviews were carried out. No field notes were made during or after the interviews.

First, the interviewer gave participants a short survey form that collected the demographic information presented above.

After that, participants watched the Jibo commercial [3]. Once the video ended, the interviewer prompted participants to share their first impressions by asking: “What do you think about this one?” After they responded, they were asked three further questions, namely:

Do you think this robot adapts its behavior to people? How so/Why not?

Do you think this robot can make decisions without human help? How so/Why not?

What do you think you can use this robot for?

These questions were motivated by de Graaf’s model of significant factors during the “pre-adoption” phase of long-term SDR adoption [45]. Question three was designed to move away from “over there, but not here” attitudes, which some people default to as part of acquiescence bias or for other reasons. This question prompted some of the more significant responses reported in this article.

The same process continued for the videos of Buddy [64] and Pepper [65]. Once all three videos had been shown, additional interview questions were asked, namely:

How do you feel about a future where we have these robots at home?

How would you defend the adoption of social robots?

What argument would you put against adoption of social robots?

Do you have any concerns over privacy of having such machines at home?

Would you mind adopting these kinds of robots for further study when they become available? Why/Why not?

These questions were designed to invite participants to think about the topic in more general terms, while also helping them solidify arguments for and against SDRs. The question about privacy was added to elicit any thoughts on this topic in relation to SDRs, and was motivated by the opinions of security experts in IoT (e.g., [66]).

The last question (which was non-committal) was asked both to evaluate whether participants would be suitable for a later deployment of SDRs when those become available and to test the “over there, but not here” attitude again.

Once these questions were answered the study was over and participants left the room. The structured interviews lasted between roughly 30 min and an hour with an average duration of 44 min.

Data saturation on the themes reported in this article was achieved after participant 15 (Lucas); however, the themes reported here were discussed by up to 20 participants, depending on the theme. While the general attitude towards these themes was apparent, the depth of discussion and individual idiosyncrasies necessitated further interviewing.

The order in which the adverts were shown was motivated by a long-standing idea in robotics that the more human-like a robot is, the more positively it is perceived by people. By showing a gradual increase in “human-likeness”, from Jibo as the least human-like (neither mobile nor human-shaped), through Buddy (mobile, but not human-shaped), to Pepper (both mobile and human-shaped), we expected increasingly positive responses. However, this was not the case, as discussed in the Results, and further reasons for this disparity are given in the Discussion section below.

3.4. Data Processing

Interview responses were audio-recorded, resulting in over 14 h of audio material. Once data collection from all participants was complete, we transcribed the interviews verbatim, producing over 80,000 words of material (roughly 4000 words per interview). We then subjected these transcripts to thematic analysis as outlined by Braun and Clarke [67]. Neither the transcripts nor the findings were shown to participants for comment or correction, to avoid biasing the analysis of initial responses with post-hoc rationalization or reflection from participants. Sixty-two codes were initially identified and grouped into a number of descriptive themes. The most prominent of these were compiled into three analytical themes with a number of sub-themes, all of which we report below. The codes were not pre-defined but derived from the data. The data were coded by the interviewer (first author) and checked and discussed with the second author (Cohen’s κ = 0.81).
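The agreement figure above is Cohen’s κ, defined as κ = (p_o − p_e)/(1 − p_e), where p_o is the observed proportion of agreement between the two coders and p_e is the agreement expected by chance from each coder’s code frequencies. The article does not state how coding units were defined or how κ was computed, so the Python sketch below is only an illustration under stated assumptions: each excerpt receives exactly one code from each coder, and both the helper name `cohens_kappa` and the toy labels are hypothetical, not taken from the study data.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each gave one code per excerpt.

    Hypothetical helper for illustration; assumes single-label coding.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of excerpts")
    n = len(coder_a)
    # Observed agreement p_o: share of excerpts given the same code by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement p_e: product of each coder's marginal proportions, summed over codes.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1:
        # Degenerate case: both coders used one identical code throughout.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Toy, made-up labels for ten excerpts (not the study's data).
coder_1 = ["privacy", "privacy", "design", "design", "design",
           "creepy", "creepy", "privacy", "design", "creepy"]
coder_2 = ["privacy", "privacy", "design", "design", "creepy",
           "creepy", "creepy", "privacy", "design", "design"]

print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.697
```

In this toy case the coders agree on 8 of 10 excerpts (p_o = 0.8) while chance agreement is p_e = 0.34, so κ is roughly 0.70: raw percentage agreement overstates reliability once chance is discounted, which is why κ is reported as a coefficient rather than as a percentage.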

Table 1 below provides information about the codes that were generated from the interviews.

4. Results

Within this article we focus on the following three themes:

Reactions to form and functions of SDRs.

Worries over security in general and privacy in particular.

Difficulties in accepting SDRs on conceptual or emotional levels.

In the sections to follow, we unpack these themes in further detail, providing illustrative quotes from the interviews.

4.1. Form and Functions of SDRs

All the participants commented on the form and functions of SDRs, be it through specific suggestions (coded as “Design of SDRs”), comparisons of SDRs with other devices (“other devices can do it”, “general uselessness”), or expressions of creepiness or terror (“Creepy, Terrifying SDRs”). Comments related to social functionality are captured by the code “Comments on how SDRs are presented in ads”, as those comments were mainly made in reaction to this specific presentation.

4.1.1. On General Appearance

When it comes to SDR appearance in general, participants seemed to react adversely to Pepper, the most human-like of the three but not quite human; Buddy, which was more abstract in shape but could still move around, was perceived ambivalently; and Jibo, stationary but able to move on the spot, was perceived either neutrally or positively. The unease about “borrowed” appearance is exemplified by Saveria:

“… but I think if it’s going to be a robot, it should look like a robot. And it needs not to try and be a weird cyborg which hasn’t really learned of how to be a person.”

And by Judit as: “Machines should be machines and humans should be humans… and animals should be animals.”

This tendency to identify a robot by its appearance, and to reject devices that try to cross the threshold between robot-looking, robot-acting machines and human-looking, human-acting ones, was a very prominent feature throughout the interviews.

On the other hand, more abstract designs like Jibo received positive commentary:

“… whereas a machine can just look like a machine that’s friendly, and we can just design new aesthetics for machine that’s going to look friendly, without pretending to be human”.

(Lu)

4.1.2. On Facial Expressions

Each of the SDRs tested had a different implementation of a face: Jibo used a very abstract “eye” metaphor, Buddy had an animated face on a screen, and Pepper had a static face. Pepper’s static face drew a number of negative comments, such as:

“Buddy has its eyes in a display, so it can move it, and show emotions that you see in the movement, but then… then the Pepper… I don’t think its eyes showed anything. It was like a black spot that didn’t actually move or change its shape.”

(Judit)

For some participants, this kind of response developed into perceiving Pepper as creepy: “This one was creepiest of all and I’ll tell you why: that face didn’t move.” (Saveria).

An animated face (whether human-like or abstracted to an “eye”) can both convey more through non-verbal communication and be perceived more neutrally or even positively:

“I actually find this one [Jibo] the most friendly. I mean it did that virtual “blink”, but it’s still virtual and I think it’s really cute and cool.”

(Lu)

4.1.3. On Social Functions of SDRs

Another important point regarding form and functions is that the social aspects of SDRs, although heavily advertised, held little appeal for participants. In fact, participants would prefer the social aspects of SDRs to stay on the sidelines until users develop enough affection towards SDRs for social aspects to come into play without being perceived as intrusive, weird or unnecessary. This reflects a more general notion that social traits are something that we as people ascribe to robots, not something that can easily be programmed in:

“I think the whole idea of making the robots or machines more personalized needs to be a side thing, and not the main point of selling them, so like Siri for instance—it’s not, you don’t buy it because of Siri’s personality, but she makes it easier to do things that you actually want to do with the phone, so it’s more of the usability issue than the sell point itself […] I gave my robot vacuum cleaner a name and when I talk to my friends about him or… I talk about *him*, I don’t talk about *it*. […] I do give machines these personal traits myself, but […] I don’t buy them for that purpose…”

(Lucas)

This aligns well with the general perception of usefulness, as participants would often ask after watching a video: “So, what is this robot for?” In this case, social functionality was not perceived as on par with usefulness, which seems to be the criterion by which participants judged the robots.

4.1.4. On Utilitarian Functionality

Our participants suggest that SDRs need to be somehow better or different in functionality from the following four devices to distinguish themselves on the market: a mobile phone, a tablet, a laptop and a home automation system. Comments such as

“I see it as a glorified hands free phone or tablet.” (Nelda),

“I get a kind of suspicion that they are the iPad-on-wheels kind of thing.” (Ittai), and:

“Switching off electricity, let’s say, when you are out—this technology is connected and when everyone’s out you can just, through your phone, turn them off, rather than calling a freak machine in your house.” (Crimson),

clearly indicate that SDRs, as they are portrayed (and marketed), haven’t found their niche yet in the minds of our participants. They are still evidently looking for what we might call the “killer application”.

Multiple participants expressed a concern that, from what they saw, an SDR might be quite difficult to operate, and that they were not ready to invest time in learning to do so. Convenience was emphasized: “theoretically, they should be self-managing, so you don’t need to worry about them they are just going to be a convenience to you.” (Zev). Adding to the worries over functionality, participants whose first language was not English expressed concern over how good the language processing unit in the SDRs was: “And then the robot maybe, hopefully understands my accent and my speech and look for what I am asking for.” (Andre).

Participants also raised the robots’ functionality as physical entities. Pepper in particular drew comments for having arms, and participants expressed a desire for it to do physical things: from switching the lights on and off physically, to carrying things around, to helping with cooking, e.g., chopping vegetables. However, physical constraints, especially with regard to obstacle navigation and stairs, were also pointed out:

“It may be difficult for it to go up the stairs. So, for this robot, it will have to stay on one of the two floors or it gets up with you in the morning and you bring it down in the evening which is gonna be a headache, yeah?”

(Andre)

Participants expressed significant concerns over SDRs’ mobility and functionality with respect to what a home environment might entail for a robot. Some homes may have stairs; others may be entirely flat but have rugs or piles of clothing on the floor; still others may be shared with other people. Such issues introduce significant deviations from the idealized home environment demonstrated in SDR adverts.

4.2. Security/Privacy Issues of SDRs

Although we asked our participants about this topic directly, many of these concerns had already been voiced before our prompt. Our question then allowed participants to elaborate on these issues further.

The theme is represented by two codes: “Data collection worries”, for general unease towards such activity (usually from the perspective of corporations collecting the data), and “Other people interpreting your data”, related more specifically to hacking. The prominence of these concerns is reflected in the number of occurrences of these codes, which were three and two times the number of participants, respectively. All the participants expressed worry about the topic multiple times throughout the interviews, both before and after our prompt.

4.2.1. Data Flow and Data Ownership in SDRs

The two most prominent concerns in this theme were over where the data that an SDR records goes, and the potential for malicious hacking of SDRs. Quotes such as:

“And just like you don’t have any kind of sound when it’s filming. You don’t really know what’s happening to the data.” (Milan), and

“… obviously the main concern I have with this is the fact that these are connected and I don’t know where the data that they collect is stored, who has access to it—all that stuff.” (Lucas)

portray the kind of unease participants expressed during the interviews with regard to the information that SDRs can potentially record. While the advertisements did not explicitly state that SDRs are perpetually online, specifications like those of Jibo [59] suggest that the cloud is the real “brain” of the SDR, and that data storage and its security are valid concerns.

Moreover, who owns the data recorded by an SDR, and who has access to it, remains an open question. Potentially, both the companies that develop these robots and the developers of third-party apps for them could have access to some or all of the information that an SDR collects. Participants were united in this regard, with quotes such as:

“… my problem with it is […] they are not clear what data that they are using, what data they are collecting, how they are collecting it, what they are using it for. And what my legal right over that data is.”

(Norton)

While these issues have been addressed to some extent in comments on the developers’ blogs, FAQs and websites (e.g., [59] for Jibo, [68] for Pepper), potential users would still need to search specifically for this kind of information instead of it being readily available. Having learned about SDRs only from the adverts, participants were considerably worried about their data. Furthermore, none of the participants expressed any desire to search or ask for such information. One participant also specifically pointed out that end-user agreements, manuals and other such materials are written so poorly that, while the information may be there, it is impossible to understand and thus to make any use of.

4.2.2. On Hacking and Lack of Trust in SDRs

Hacking and a general lack of trust towards SDRs came through very strongly as concerns in the interviews; so much so that they even undermine one of the very functions Buddy is advertised for: acting as a watchman while you are away from home. As one participant explained:

“It’s a new field, but are you actually safer having something in the house telling you that someone’s outside? If you have a robot, somebody from the outside could see where everything in your house was.”

(Jin)

SDRs in this case are perceived as a pair of eyes and ears that an outsider can use to pry into your private life. Not only can they look at you in the present moment, but they could also potentially access your historical records, which becomes progressively more dangerous the more you interact with an SDR. Some of this fear was expressed in very general terms:

“And the idea of having a bit of technology that sits around watching everything in your life, it still unsettles me […] I am not sure how much I want my every move to be watched by something.” (Loraine),

“Basically they record your lives and they know everything about you and… I am not sure if that can be held against you in some cases.” (Redd).

Some participants tried to articulate the reasons for their fear:

“Bringing something external, that is hackable and not necessarily 100% trustworthy and… given the ability to wander around, take live videos, maybe stream it… [Laughs nervously] … that I might not be aware of—that’s a problem. It’s like bringing someone you don’t know in your house.”

(Spencer)

Somewhat unsurprisingly, participants felt particularly uneasy about the cameras that SDRs would use to navigate, observe and take pictures or videos. The adverts did not demonstrate the full range of sensors available on SDRs, and participants were not worried about, for example, sound being recorded.

Lack of trust was also the reason four of the participants gave for refusing even to “potentially adopt an SDR”.

4.3. Emotional Consequences of SDRs

“Replacing people + human communication disintegration” was the code for a discussion that occurred in all but one of our participants’ interviews. The topics of replacing people and of human communication were often concomitant, and we report further results on them below. “Emotions in (and related to) robots” played a large role in how participants grappled with both how social behavior was perceived on an emotional level and which behaviors were considered appropriate on a visceral level; this code was discussed by 17 of our 20 participants. Finally, etiquette, while not heavily discussed in those specific terms (the code “Etiquette for SDRs is not established” was discussed by only half of the participants), finds further support in the code “attitude in general”, which was raised by 16 of our 20 participants.

4.3.1. Replacing People with Robots

There was a prominent motif that the introduction of SDRs is a manifestation of replacing people with machines, to which participants were unanimously opposed. This is exemplified by quotes such as:

“but I think when it starts to get into the kind of ehm… replacing humans, that’s when I am not as kind of over the moon about the future of it.” (Milan), and:

“I didn’t like it. It’s trying to, like, having emotional contact, as this replacing human beings, so ahm… you can’t” (Keira).

Participants evidently felt uneasy about this new kind of competition. The way the adverts were crafted made this point more prominent: every advert showed a robot interacting with a child, either on its own or with a parent. Participants often referred to these particular scenes as “replacing people”.

Many participants took a defensive position when it came to interactions between children and SDRs:

“I mean, and this kind of hugging with the robot—I don’t know. I am not sure if I would like my child to be grown up with this kind of toy. I am not sure about what kind of relationship they would have. I mean would it be like feeling like relationship with normal human?” (Alma), and:

“The kind of social thing in terms of like kids, I don’t know how much I want people to pass that many elements for like caring for their kids onto like a machine, […] I think it might kind of compromise relationships in the family and stuff like that.” (Loraine).

Participants commented, however, that simpler devices such as a phone or a Roomba-like vacuum cleaner were fine when it came to interacting with children.

Having criticized relationships with SDRs, participants emphasized human-to-human interaction as being superior and even necessary:

“I understand that there are people that can’t or have a lot of difficulty in doing that, but there’s also something very important about going out and having real life interactions with people who will give you real life responses” (Jin), and:

“So comforting is like the possibility of a friend or a close friend or a family member—[…] having robots to do this job is not the right way of using robotics.” (Keira).

4.3.2. Social Behavior Simulation in SDRs

Social behavior in SDRs was criticized not only from the utility standpoint but also on a more emotional level, mainly because either SDRs are not yet developed enough to simulate such behaviors convincingly, or robots in general are not perceived to have human qualities like humor, which makes it impossible for them to behave as if they did:

“… smartphones are fine, but I think the idea of these bits of technology, which trynna be like humans—they are not. Ahm… I think it is- that’s the phenomenon that’s triggered in my mind. It’s kind of got me, this humanoid, it’s not human”

(Michael)

While a simple wink from Jibo avoided heavy criticism, behaviors such as an SDR laughing, complaining or looking sleepy were criticized consistently and added to the notions of mistrust and doubt. Pre-programmed laughter, even if reciprocated by a human, was likened to that of a psychopath who laughs only because they think it is an appropriate time to laugh, not because they find the joke funny.

Behaviors suggesting that an SDR was sleepy or annoyed were met with ambivalent feelings, depending heavily on the type of SDR shown. When Pepper, a humanoid-looking SDR, performed behaviors of irritation, confusion or happiness, it was criticized less. Buddy, a more abstract design, on the other hand received much heavier criticism when its face in the advert was shown to be sleepy: participants pointed out that a robot cannot be sleepy, as it is not in the nature of a robot.

Some participants pointed out that robot interactions were too compliant and plain. The interactions would have been richer if a robot could somehow say “no” to the user, whether verbally or through behavior. When pointing out the limits of SDRs, some participants doubted that these robots would ever reach a human-like level, because they cannot have an argument with the user.

4.3.3. Rules of Engagement with SDRs

Even after all the criticism, participants were not really sure how to approach SDRs. Because SDRs are neither quite utilities nor quite people, the etiquette and rules of engagement are not straightforward:

“it existed in this weird sort of space between like: “is it a person and a member of the house or is it a device because it was called “it”?” (Norton),

“… it’s a human in a robot body. And once you go there, then, how is it any different from human? Then you’ll need to treat it like a human, with all the issues of… what if you want to get rid of it?” (Spencer).

This ambiguity, participants felt, creates room for abuse of both robots and people: “I am not keen that the more human they get the more we might abuse them” (Vilma). The concern that the way people treat SDRs might transfer to the way they treat other people came through very strongly.

5. Discussion

Below we discuss how our findings impact design considerations, such as appearance, security and relationship building with SDRs. We also comment on expanding de Graaf’s first step in the model of acceptance [45], point out the limitations of this study, and suggest questions for future research that arose during our interviews.

5.1. Key Findings and Implications for Design

5.1.1. Appearance and Presentation

We observed a severe negative reaction towards the appearance of the SDR that looked most human-like (Pepper). In particular, participants’ anxiety arose from two main concerns: the SDR’s general appearance and its face. We suggest that both aspects may fall into the dip of the uncanny valley [69]. Although this notion is disputed [70] and imprecise, it is exactly what comes to mind when participants speak about an SDR being creepy.

The more abstract an SDR was on the scale from Pepper (humanoid) through Buddy to Jibo (abstract), the more likeable it was, suggesting that participants preferred a more abstract aesthetic. Aesthetics and first impressions were also indicative of how people commented on other aspects of SDRs, suggesting that this initial, aesthetics-based perception played a significant role in how a robot was perceived overall. Given evidence from academic studies [62,63], as well as the commercial success of Pepper, we suggest that culturally specific outlooks on robot aesthetics may play an important role in accepting a particular robot, and need to be investigated further.

This suggests to us an alternative to continuing to make machines more human-like and falling into the uncanny valley (first discussed by Mori [71], with an updated discussion by Laue [69] with respect to love and sex): when it comes to everyday home devices, participants felt that SDRs should fit the idea of a more abstract entity, like furniture. This is not to say that SDRs must be static; they can still utilize movement and thereby retain a “life-like” component, as Jibo does and, more generally, as kinetic art does by being statically positioned while performing motion.

The faces of the SDRs were the most discussed physical features. A number of studies in psychology have addressed how people read faces (e.g., [72,73]) and how they perceive animated robotic faces (e.g., [74,75]), detailing how important facial expressions are for people, whether they look at other people or at simulated faces on screen or in robots. While anthropomorphic features are consistently favored by people, including in robots for domestic use [76], this preference did not appear in the reactions we observed. Pepper’s face was static rather than animated, which caused the negative reactions. For Buddy, whose face was animated in a cartoonish way, the screen displaying the face doubled as a monitor for information such as weather or news, which was perceived ambivalently. While Jibo employed the same strategy, its face, being a metaphor for the eye, avoided heavy criticism. This suggests that it is not just the face, but also the specifics of its animation, and whether it shares a general-purpose screen or has a dedicated space, that play an important role in perceiving an SDR in a favorable light.

This also invites reflection on anthropomorphic perception of robotic features, whether the robots are humanoid or not. The interviews showed that social behavior is enough to engage people in imposing certain standards on how robots should perform, and that extends to facial expressions. Specifically, the static face of Pepper was referred to as creepy, we argue, precisely because it was static, while the social expectation would be for it to move and express/emote.

Overall, given how important face recognition in particular is to humans (whether the face is robotic or not) [73,74], it is all the more important that faces, if SDRs have them, are designed so as not to provoke revulsion. Even if a face does not play a large functional role in the robot overall, it is a component to which potential users will pay significant attention.

From our interviews, the solution seems to lie in making robotic faces very abstract, metaphorical, and not human-like.

As to the design, participants viewed a more static SDR (Jibo) more favorably, and such a design also provides a useful way of circumventing some of the physical limitations SDRs may have. While great progress has been made in robot mobility (Boston Dynamics provides multiple examples with respect to both shape and sustained mobility [77]), it is unlikely that the price of such developments will drop sufficiently in the near future for the technology to be mass-produced and mass-adopted in the general consumer market. Considering this, it seems a useful design feature to purposefully limit SDRs’ mobility, or at least to give consumers the option of doing so, lest SDRs become a burden for their users.

5.1.2. Function

Two types of functionality were distinguished in our study: social and utilitarian. Regarding the intended social aspects of SDR functionality, participants were skeptical about them being the selling point and preferred them to be a pleasant side aspect, one to which they might warm up after prolonged use, once the value of the device for the user exceeds its utility. From this, we conclude that designers should build the appeal of SDRs on a utilitarian case and use social aspects sparingly, until an end user reaches the stage at which an SDR is more than a utility and has acquired sentimental value. While social aspects in robots constitute active response and engagement, which is related to likeability [62], and social skills in robots are welcome in the home environment [2], our study suggests, more specifically, that sociality is not a substitute for utility. This accords well with previous studies, as discussed in de Graaf’s work [2]. Another aspect of social functionality, namely expressing non-compliance and personal opinions, could be implemented to facilitate a more diverse interaction between SDRs and their owners. While academic models of emotions in robots include both positive and negative emotions (e.g., [78,79]), we have yet to see a widespread commercial application of this approach.

When it comes to utility, there is indeed very little to be said about SDRs that would elevate them above a phone, a tablet, or a computer. A dynamic camera may be a good idea for telecommunication over the internet, as it can track and focus on whoever is speaking or on what they are trying to show you; however, the question still stands: “is this single application worth hundreds of dollars?” The proposed functionality of the SDRs we evaluated faces stiff competition from other devices on the market that can already do their respective jobs faster, better and more conveniently than SDRs can. We conclude that SDRs need to seek their own niche on the market, rather than copying existing functions from specialized devices, unless they can perform those functions better or more conveniently. One suggestion as to what that niche could be comes from participants who expressed a desire for SDRs to utilize their physical platforms, e.g., using their hands to pick up objects, or their mobility to move controllable cameras around and be available to the user without much hassle. Given how quick interactions with existing technologies are compared to SDRs (e.g., typing a query into a search engine and reading off the answer vs. telling an SDR what to look for and waiting for it to tell you what you want to know), any utility that SDRs provide must also be convenient and easily accessible, so that adopters do not need to invest significant initial time and effort to understand how an SDR works. Otherwise, we believe that users will fall back on the familiar technologies they already know how to use, and SDRs will not become fully appropriated/domesticated.
We foresee innovative uses of SDRs coming from the community of modders, developers and enthusiasts of SDR applications, and it is encouraging that all the SDRs tested in this study have, at least in theory, an open API through which anyone can create a use case for them. However, as things stand, SDRs are likely to struggle to be adopted and accepted as preferred devices over the more specialized competition of phones, tablets and laptops.

Apart from practical implications for design, we arrive at an important question about preconceptions of SDRs: why would people think of an SDR as a primarily utilitarian device? There may be a few reasons. One comes from the marketing material itself, which specifically highlights utilitarian uses of SDRs. This was the case for Jibo and Buddy, but not for Pepper. Accordingly, reactions to Jibo and Buddy were similar, and participants often compared the two as roughly equivalent. Pepper, however, received harsher critique, but at the same time more human-like treatment, specifically regarding its expression of emotions.

We surmise that, because the technology is new, the view of SDRs as utilitarian devices, edutainment or social companions could stem from prior notions of similar devices, from cultural expectations, and from the way these devices are demonstrated to potential users. Another factor at play could be the lack of a clear distinction between whether SDRs are intended for utilitarian or hedonic purposes. Recent studies of people’s attitudes towards robots point to these as possible culprits [46,63,80]. All in all, in the absence of physical devices, we consider the management of customer expectations a critical factor in SDR adoption.

5.1.3. Security and Privacy

The issue of domestic security in general, and privacy in particular, takes on a new dimension with SDRs. Social robots are by their nature interactive via dialogue and use cameras to get around. The closest analogue to the security and privacy issues raised by our participants is found in mobile phones, but even phones cannot move on their own and are far more controllable than SDRs, which can move around and act more independently. This is compounded by a further concern, known to affect all connected digital devices: the danger of hacking and exploitation. Almost every participant raised this concern, and it also led them to elaborate on the idea of trusting SDRs with the tasks they are advertised to help with. Specifically, Buddy was advertised as a “camera on wheels” that you could connect to in order to check on your house. However, both our participants and experts in IoT security express distrust over giving this much power to a robot without adequate digital security, which many IoT devices lack [81].

The lack of trust in this particular technology is novel, given the duality of agency in SDRs: the agency of the robot itself and the agency of anyone taking control of the robot. The topic of trust in robots becomes more pressing as we move towards robots with increasing potential for independent action (as can be observed from the key terms used in papers in the ACM Digital Library). Trust is usually evaluated separately for robots that are autonomous (especially in military settings, e.g., [82]) and robots that are teleoperated, whether users control the system themselves (discussed as early as 2004 [83]) or it is controlled by a person users already trust (e.g., [84]). In our case, however, an untrusted party is introduced, namely a potential malicious hacker who hijacks control of the SDR without the owner knowing about it.

The point is all the more serious, given that every participant who refused to adopt (even potentially) an SDR for a further study had done so on the basis of lack of trust in SDRs and the potential for third-party hacking and being observed.

We draw two conclusions from this: first, that a jump in autonomy and in the capability to record the private lives of individuals should be matched by at least a comparable jump in the security of such systems, to the point where the increased security of these devices is made apparent and accountable to potential users; and second, that because of this autonomy, participants experienced a form of “othering”, in which they regarded an SDR as something foreign and potentially dangerous.

A separate, deeper discussion is required to assess whether an SDR can testify against its owner: will it be a witness or evidence? Which set of rights and responsibilities will cover an SDR should a legal dispute arise? Digital forensics can utilize computers, phones and other devices for the purposes of criminal investigation, but SDRs break new ground with their ability to record things ambiently and store them in the cloud, where a server may be located in a country governed by laws different from those of the country in which the legal case is held. All of this remains an open question for legal authorities and experts to decide upon. A recent motion on this was made by the European Union [85]; its proposal outlines some basic principles with regard to autonomous robots, although nothing legally binding has been produced yet [86].

ACM-affiliated academics, among many others, continue to discuss the issues of privacy extensively: over 1500 papers were published on the topic in 2016 alone [87]. Non-academic organizations also strive to inform the public about privacy issues by various means, e.g., The Big Brother Award [88] and Def Con [89], among others.

What remains obscure is data ownership and use. The language of current end-user policies on the web is ambiguous. If information from SDRs could be used to “improve our services” or for “marketing purposes”, what does that really mean? It is evident that people who could use SDRs in their daily lives would need much clearer definitions to be sure who owns what, and in what capacity, as well as an option to opt out of some data collection procedures imposed by default settings.

These matters also arise from the perspective of social judgment: you can trust or mistrust a gossip, but if data pulled from an SDR tells you that someone did something socially unacceptable, it offers much stronger evidence. None of the devices examined in this study advertise themselves as able to lie or omit information. It is also unclear whether SDRs can properly authenticate users via voice commands, face recognition, a password or any other means, and, further, whether such authentication grants any user privileges over other users.

Overall, security and privacy rank near the top of the list of priorities, as our participants consistently raised them as concerns.

5.1.4. Emotional Consequences of Owning SDRs

Some participants expressed concerns about children engaging with SDRs. This is a valid concern, supported by work with children who interacted with much simpler robotic systems like Furbies and RoboSapien, as discussed by Turkle [13]. As children experiment with what is acceptable in social interaction, there is a danger that, because SDRs, much like their simpler predecessors, do not seem to have negative responses, they will teach children that behavior such as violence in social interaction is permissible. This line of reasoning is supported by Turkle in her experiments with children using such technological toys [13].

These reactions, combined with the previous points on autonomy, represent the kind of conflicting views on SDRs that participants tried to resolve. On the one hand, there is a desire to be in control of the situation, be it the ability to control the robot or to monitor the relationships that children form with robots; on the other hand, people expressed a desire for SDRs to have their own internal states and opinions and the ability to argue with their owners, elevating the robots from tools to companions. This tug of war shows once more that SDRs stand on shaky ground between utilities and social beings capable of partnership with their human interlocutors, without fully fitting into either category.

We further speculate that SDRs, if widely adopted, may ironically prompt many people to reconnect with their relatives, children and elders, reflecting what our participants shared about cherishing human-to-human relationships in the face of robotic competition. While this may sound detrimental to SDRs, if a proportion of the general population reconnects with their relatives, it is all the better for people who prefer purely human interaction. For those who prefer human-robot interaction, especially in social care (a trend most studied among the elderly, e.g., [90]), widespread use of SDRs capable of social care would benefit those who need or prefer home treatment plans, which would also ease pressure on hospitals and other care facilities. An additional benefit relates to the loneliness people experience when they have no one to talk to, as often happens to people in an unfamiliar social environment, to elderly people left alone, and to children who struggle to integrate into new social circles or are otherwise excluded. Here, SDRs may provide the needed social support, given that loneliness can create an emotional connection to robots [91].

Such considerations link to the competition between people and SDRs and to doubts over whether human-SDR relationships are genuine. They also suggest that participants envision SDR communication with people as almost equal to human-human communication, implying that at least some people may be content to communicate exclusively with SDRs and avoid other people.

Robots behaving emotionally deserve separate consideration. We have seen that the anthropomorphic SDR (Pepper) did not meet heavy criticism for showing human-like emotions. We argue that this is for the same reason that humans do not criticize other humans for showing such emotions, even though they cannot be sure that others actually experience what the expressed behavior represents. Philosophically, this lack of assurance that others actually feel what you feel is known as the problem of other minds, and it is often illustrated by the “philosophical zombie” thought experiment. In a more practical sense, we understand, for example, that actors do not actually suffer the pain they express, yet this provokes no revulsion or criticism. We believe the same kind of empathy is at work when people do not deny Pepper its emotions.

On the other hand, the physical appearance of Jibo and Buddy makes them much less relatable, just as emotions are not widely ascribed to furniture, or even to plants, although plants are alive (as Jibo and Buddy pretend to be). For this reason, we argue that emotions for abstract SDRs should be developed in tandem with any physical necessities they might have. For example, Buddy is a mobile SDR powered by a battery; when its charge runs low, it might express this as sleepiness. In this way, there is no contradiction between what it needs (a recharge) and how it expresses that need (sleepiness).
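The principle of grounding an abstract SDR's emotions in its physical needs can be sketched as a simple mapping from an internal need to an expressed cue. The sketch below is purely illustrative: it is not taken from Buddy's or any other robot's software, and the `RobotState` type, the `expressed_cue` function and both threshold values are hypothetical assumptions chosen only to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class RobotState:
    # Hypothetical internal state: battery charge as a fraction,
    # from 0.0 (empty) to 1.0 (full).
    battery_level: float


def expressed_cue(state: RobotState,
                  tired_below: float = 0.2,
                  sleepy_below: float = 0.05) -> str:
    """Return an expressive cue grounded in the robot's need for a recharge.

    Both thresholds are illustrative assumptions, not values from any real SDR.
    """
    if not 0.0 <= state.battery_level <= 1.0:
        raise ValueError("battery_level must lie in [0.0, 1.0]")
    if state.battery_level < sleepy_below:
        return "sleepy"   # charge nearly exhausted: strongest need-driven cue
    if state.battery_level < tired_below:
        return "tired"    # charge running low: milder need-driven cue
    return "neutral"      # no unmet physical need, so no need-driven cue


# Usage: print the cue expressed at a few hypothetical charge levels.
for level in (0.9, 0.15, 0.03):
    print(level, expressed_cue(RobotState(level)))
```

Because every cue in this sketch is derived from an actual need, the robot never displays tiredness or sleepiness it has no reason to have, which is the consistency between need and expression argued for above.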

5.1.5. Impact of Results on Building Relationships with SDRs

There has been much speculation and concern about social robots entering our lives on a more permanent basis at some future point. Much of this speculation focuses on ethical considerations or issues of control. This study sheds light on the immediate present and addresses people's relationships with current SDRs. Such issues, while less prominent, are perhaps more important to study and address now.

Firstly, convenience and utility would be fundamental in building a lasting relationship between people and SDRs, as they would sustain such relationships long enough for people to develop a sentimental attachment to robots. Because people may need quite a long time to warm up to SDRs [45], convenience and utility will likely be the primary drivers of these relationships during the first few months after an SDR is brought home.

The second crucial point is that people should be able to understand SDRs through the way they represent their emotions and needs, without those representations being considered inappropriate. While robots may portray human explorations and anxieties of self, it is in our power to develop robotic cultures that are distinct from human culture yet understandable to people.

The third issue is trust. We have repeatedly touched on the hackability of SDRs, data ownership and disclosure; all of these must be addressed together to ensure that communicating with SDRs is safe. Long-lasting friendships or romantic relationships simply will not develop if, at some level, a person expects an SDR to betray them.

Finally, the fourth point is for an SDR to have its own “mind”. Internal robotic states consistently gain traction in academic and commercial work, although the extent to which they are implemented varies. However, as identified in this article, an SDR having its own “personhood” and view of the world is essential to lifting it from being a utility to being a companion.

These four practical steps, which can be implemented with current technology, would serve as a sturdy basis for developing the kind of robots that we can like and even love.

5.2. Improvement of Existing Models of Acceptance

Many of the interview questions were inspired by the work of de Graaf [45]. Factors she identified as significant at the pre-adoption stage, such as perceived usefulness, realism, and anthropomorphism, were heavily discussed by the participants. Based on the results of the interviews, we would further add security/privacy, a concern consolidated through exposure to art, literature and marketing material about SDRs. We believe that this fruitfully extends the first phase of de Graaf’s existing model of technology acceptance as well as DRE. It is understandable that privacy/security concerns were less pronounced with the robots used to develop these models, as those robots were far more controllable and contained fewer sensors.

5.3. Limitations

One important consideration must be kept in mind when interpreting this study: presenting a robot via video provokes different reactions than presenting it physically, as pointed out by Seo et al. [92]. We had to rely on videos because the physical robots were not available. However, early adopters face the same situation when choosing to adopt an SDR: they do not receive a physical unit to try out, and often have to invest money well in advance, as is the case with crowdfunding campaigns on platforms such as Indiegogo and Kickstarter, through which SDRs may be brought to market.

We also have to take into account that the sample of people interviewed was far from representative of the general population. Instead, we interviewed a group of people who would likely be early adopters of such technology. At the end of the interviews, many expressed a desire to adopt at least one of the SDRs shown, to tinker with it further in the privacy of their homes.

5.4. Open Questions for Future Work

A number of open questions surfaced during this study, the most prominent of which we list below:

Which sensors do people perceive as intrusive, and which do they not?

What should the regulations be for the data generated by an SDR?

What legal stance should SDRs have? Should they have the right not to disclose incriminating information about their partners or themselves?

6. Conclusions

We conducted a series of interviews to understand the reasoning that academic researchers and students use when evaluating SDRs based on their advertisements. Firstly, we found that our participants preferred an abstract form and face for an SDR. We argued that the uncanny effect was the reason more human-like SDRs were perceived by our participants as creepy and terrifying. This warrants a more abstract shape, such as a piece of furniture or a kinetic-art-style device. This preference was further supported by the practical difficulties SDRs must overcome in the home environment, such as stairs, rugs and piles of clothes on the floor.

We also focused specifically on the facial expressions of SDRs, arguing that while the face may not be the most functional part of a robot, it was the most commented upon, and therefore played an important role in how our participants perceived SDRs, regardless of their other qualities. Based on our interviews, we argued that the solution lies in making the face more abstract, thus, again, reducing the uncanny effect.

We also found that our participants insisted that an SDR's utilitarian functions be clear, and better than what existing devices can offer, before they could appreciate it. The devices SDRs were most commonly compared to were a mobile/smart phone, a tablet, a laptop and a home automation system. Our interviews revealed that participants wanted the robots to do more things physically (e.g., with their hands), rather than rely on an array of connected devices.

Secondly, our participants were concerned with privacy and with ownership of the data an SDR generates. We highlighted the need for clearly defined rules, laws and guidelines for the countries where SDRs are used, for the companies responsible for data collection, storage and processing, and for the individuals, who should know how to opt in to or out of SDRs’ data collection.

Relatedly, participants voiced major concerns about SDR hackability. We have argued that SDR hackability poses a new kind of concern, given both the dual agency of SDRs, which act as autonomous agents but can also be teleoperated, and their mobility and autonomy compared to existing consumer electronic devices with otherwise similar capabilities, e.g., phones and laptops. We have further argued that a jump in autonomy and in the capability to record individuals' private lives should be matched by at least a comparable jump in the security of such systems, to the point where the increased security is clearly apparent to potential users.

Our interviews also revealed that, because of these SDRs' autonomy, participants engaged in a form of othering, perceiving an SDR as something foreign and potentially dangerous within their homes. We reinforced the hackability and trust concerns by pointing out that all participants who declined even the possibility of adopting an SDR for a further study cited a lack of trust as their reason.

Thirdly, we uncovered a number of emotional factors affecting the judgment of SDRs. One such factor was the notion of replacing people with robots, where SDRs take on social roles currently regarded as exclusively human (e.g., caring for children), or where SDRs are positioned as social partners comparable to humans.

The second emotional difficulty was accepting SDRs’ social behavior as genuine. We argued that simply copying human social cues (e.g., yawning, showing facial expressions of annoyance) was not the best approach to SDR-to-human communication. Our participants rejected such notions and stated that it was not in the “nature” of a robot. We suggested that an anthropomorphic effect may have allowed the Pepper robot to avoid heavy criticism in this regard, but for more abstract SDRs we proposed a system in which social cues are related to the robots' actual needs (e.g., showing a facial expression of sleepiness or tiredness when a robot needs recharging), making them more compatible with how our participants thought about social cues coming from robots.

The third factor was the lack of etiquette and the difficulty of identifying SDRs as either utilities or companions. We highlighted a number of issues related both to the practical, legal question of how to classify an SDR and to the more philosophical question of where the boundary lies between a social agent and a utilitarian machine.

Finally, based on our results and discussions, we offered practical advice for the design of future SDRs in the form of four steps that can be implemented to increase the chances of fostering long-lasting relationships between people and their SDRs. These steps were to emphasize convenience and utility to the users, as those factors will drive the relationships for the first few months before sentimental value is attached to an SDR; to create the kind of social cues that people can identify with, but which are simultaneously specific to the needs of SDRs; to make sure that SDRs are transparent and accountable in how they treat the data they collect, as well as secure, thus improving trust; and to create a robotic personality, which we argue would be a significant factor in lifting an SDR from the status of “utility” towards that of “companion”.