4. Experimental Results

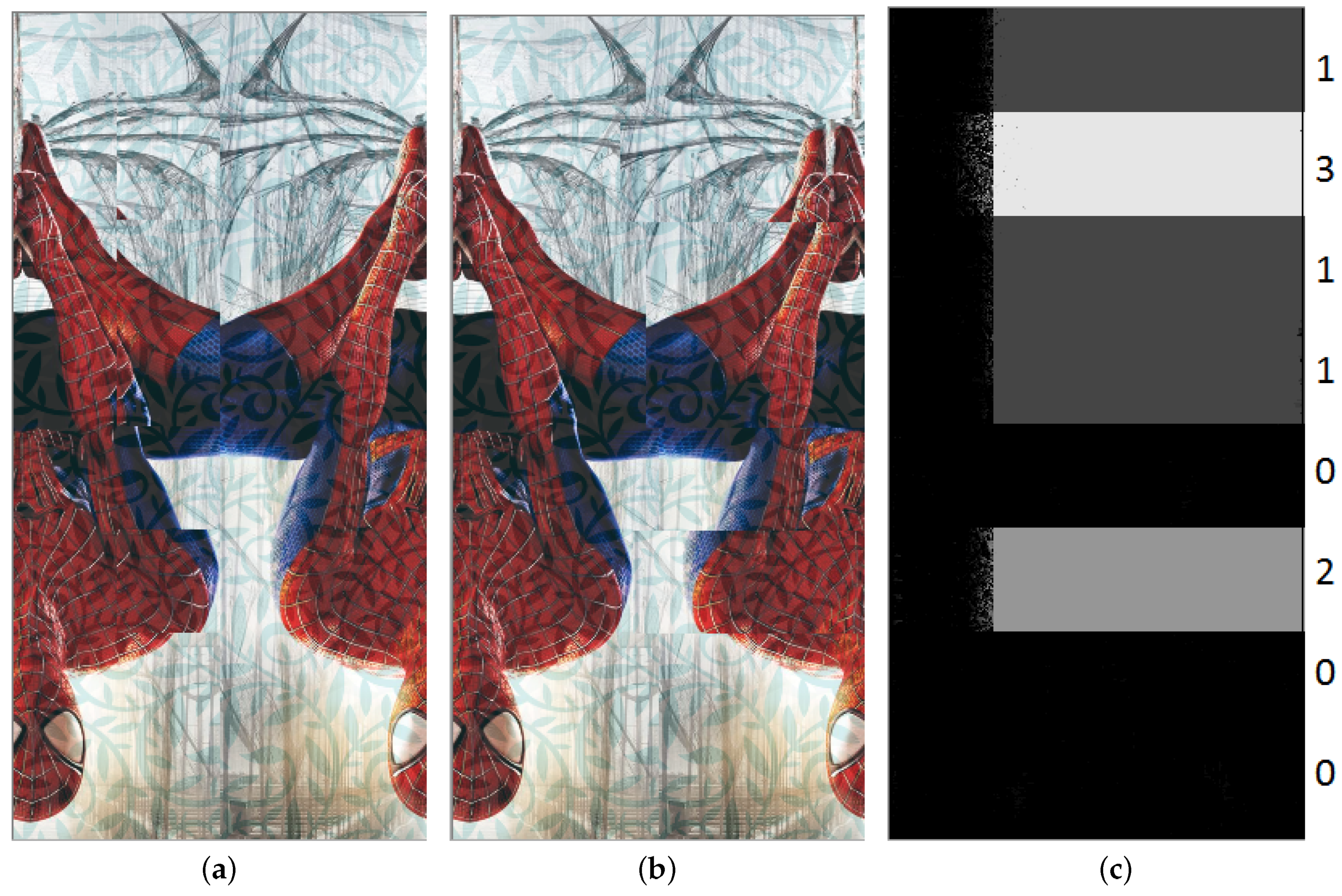

Is our proposed PMBC tag sensitive to different lighting conditions, noise, and scaling? We tested these criteria using the PMBC tags shown in Figure 13. The tag also optically hides the same unique number 29,984. We carried out three experiments to observe whether the unique number 29,984 could still be decoded correctly after the following effects:

altering the brightness and contrast of the tag;

scaling its original image;

adding noise and raindrops.

IrfanView tool (

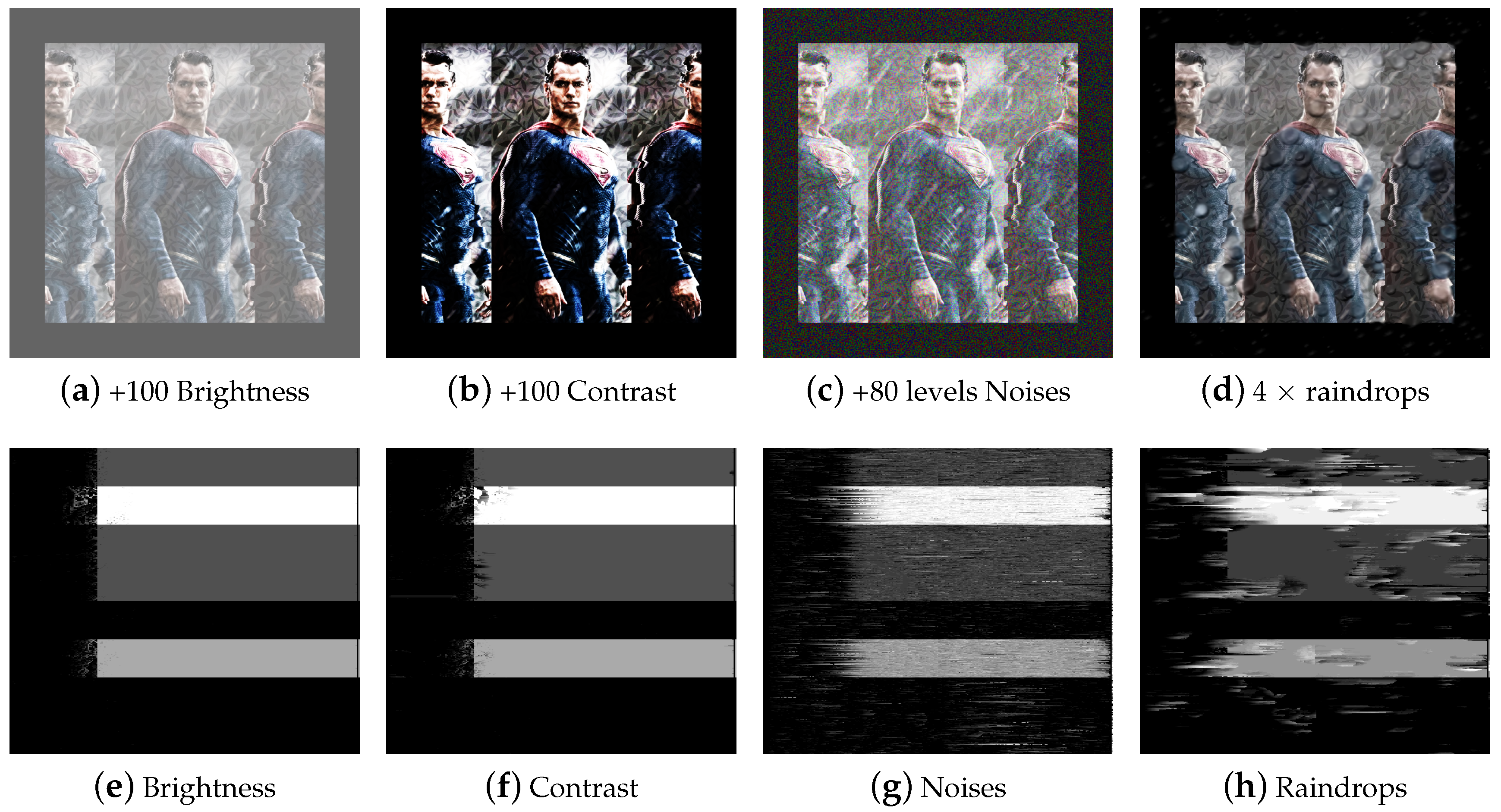

http://www.irfanview.com/) was used to alternate the sizes, apply effects, and add noises to the samples before checking the decoded results. Some IrfanView’s output examples are shown in

Figure 13.

4.1. Lighting Conditions (Brightness and Contrast)

Different brightness (from −200 to +200) and contrast (from −100 to +100) levels were applied to the “Superman” tag (top rows of Figure 13). The numbers decoded from the affected images were compared with the original number 29,984, and the correctness is recorded in Table 6. Overall, the reconstructed barcodes were scanned correctly under all tested brightness and contrast effects.

4.2. Different Scaling

We also rescaled the “Superman” tag to several different sizes. For each tag sample, we collected the decoded number again; the results are shown in Table 7. Decoding failed only when the image size was reduced too far (see Table 7).

4.3. Noises and Raindrop Effects

We also applied some of IrfanView’s other built-in effects, namely random noise and raindrops, to the “Superman” tag (Figure 13). The correctness of the decoded number in each test is shown in Table 8. From the results, random noise in the image appears to create noise in the reconstructed disparity map. However, the decoded number stays correct at 29,984 for all the test images. In fact, an image with the maximum noise level of +255 (added in IrfanView) is still decoded correctly. Raindrops also affect the disparity maps; however, the effect is not strong enough to change the result.

Across the three sets of tests, our PMBC tag was found to be relatively robust to changes in brightness, contrast, noise, and raindrop effects. The only failure occurred when the image sample was reduced below a certain size. This could be improved by employing other image processing methods, such as histogram equalisation, to identify the different depth levels more reliably.

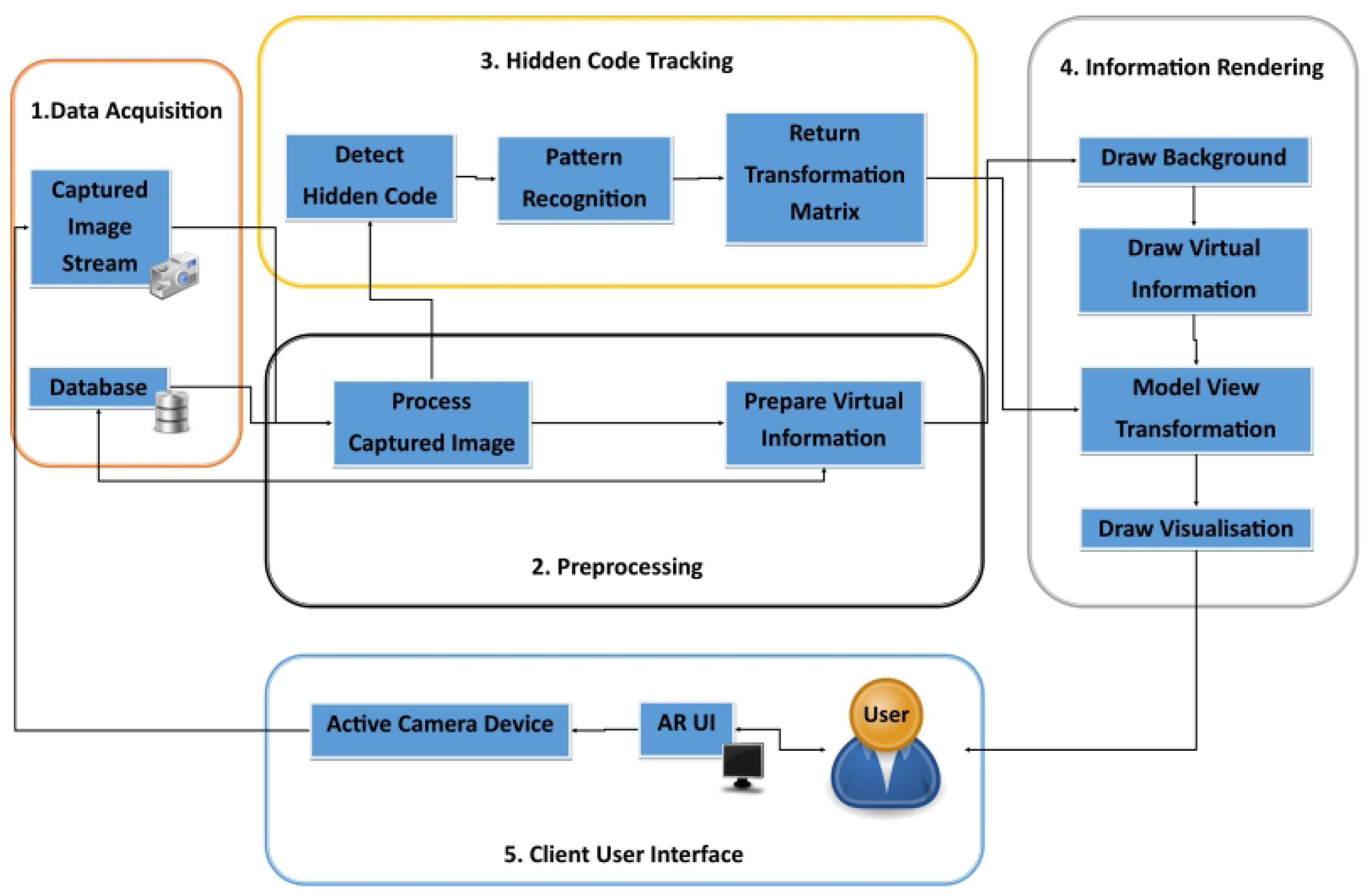

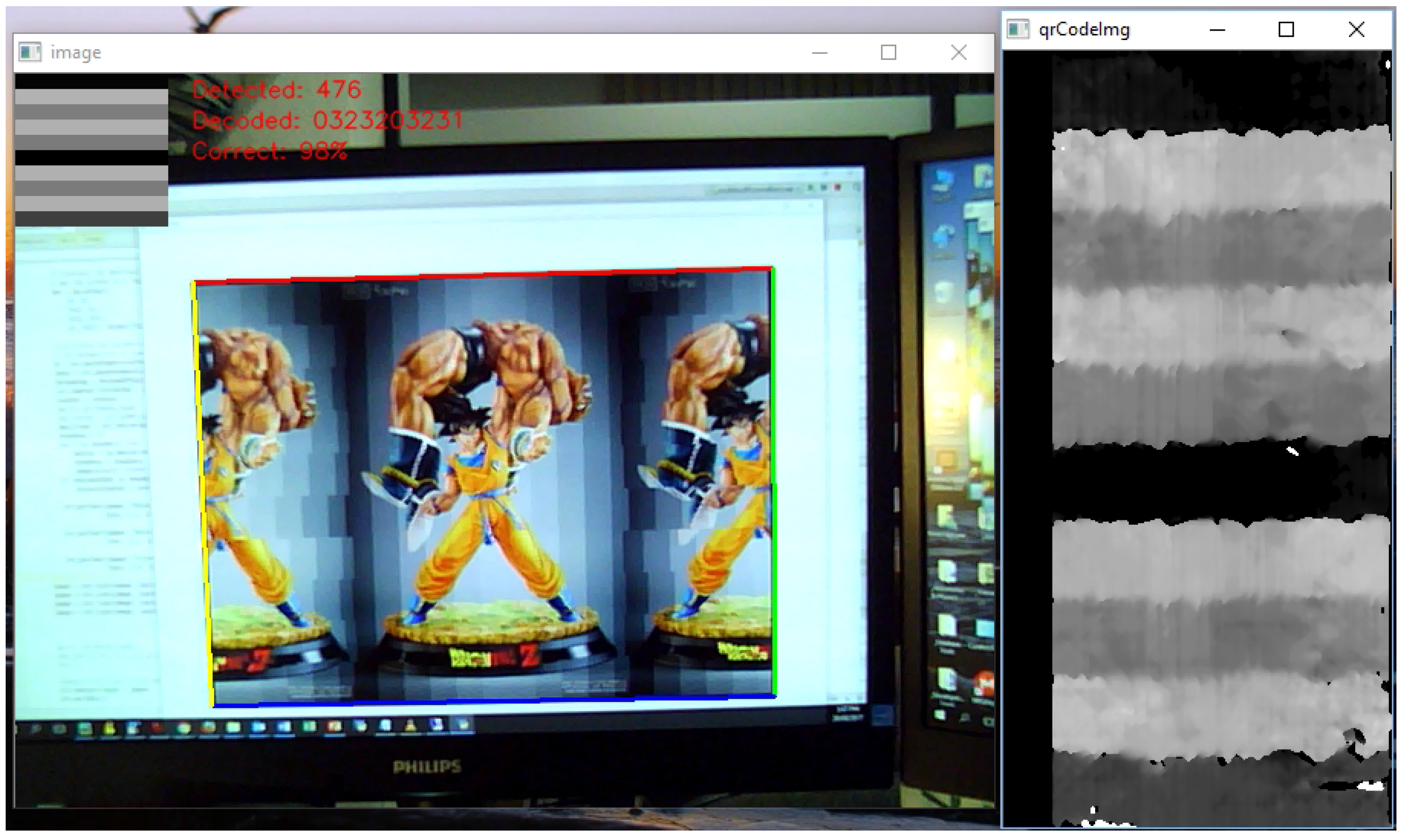

4.4. Experiment Evaluation Using Computer Webcam

To further test the proposed method, we carried out some real-life tests using a commodity webcam. The webcam we used has a relatively low resolution, and the detected tag was also quite small. The image tag was created to store a base-four array: “0323203231”. Thus, the AR Tag contained ten bars, each taking one of four depth levels, which is equivalent to 20 bits of binary data. In experiment one, shown in Figure 14, we pointed the webcam at the marker to acquire a live picture. The rectangular tag was detected and segmented to reconstruct the raw disparity map, as shown on the right of the figure. To reduce the effect of noise, we read each bar and took the median intensity value (of the whole bar) as the decoded element to store in the decoded array. A cleaner multi-level barcode was then displayed at the top left of the screen (as shown). The decoded array was compared with the original array to find the percentage of correct detection and decoding. As displayed in the screenshot, 98% of the detected tags returned correct results (over 476 frames).
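The median-based read-out described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the equal-width split into bars, and the uniform mapping of the 0 to 255 intensity range onto four levels are all assumptions made for illustration. The disparity map is modelled as a plain list of pixel rows.

```python
from statistics import median


def decode_bars(disparity, n_bars=10, levels=4, max_value=255):
    """Decode a multi-level barcode from a 2D disparity map (list of rows)."""
    width = len(disparity[0])
    digits = []
    for b in range(n_bars):
        # Column range covered by bar b (bars are assumed to have equal width).
        start = b * width // n_bars
        stop = (b + 1) * width // n_bars
        # The median over the whole bar suppresses isolated noisy pixels.
        bar_pixels = [row[x] for row in disparity for x in range(start, stop)]
        m = median(bar_pixels)
        # Assumed mapping: split [0, max_value] into `levels` equal bins.
        digits.append(min(levels - 1, int(m * levels / (max_value + 1))))
    return digits


def digits_to_bits(digits):
    """Each base-four digit carries two bits, so ten digits give 20 bits."""
    return "".join(format(d, "02b") for d in digits)


# Synthetic disparity map for the array "0323203231" (hypothetical test data).
code = [int(c) for c in "0323203231"]
centres = [32, 96, 160, 224]                        # assumed intensity per level
row = [centres[d] for d in code for _ in range(8)]  # 8 pixels per bar
disparity = [list(row) for _ in range(6)]
disparity[2][5] = 255                               # one isolated noisy pixel

decoded = decode_bars(disparity)
bits = digits_to_bits(decoded)
print(decoded, bits, len(bits))
```

Taking the median rather than the mean means that a few outlying pixels inside a bar do not shift the decoded level, which matches the motivation given in the text.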

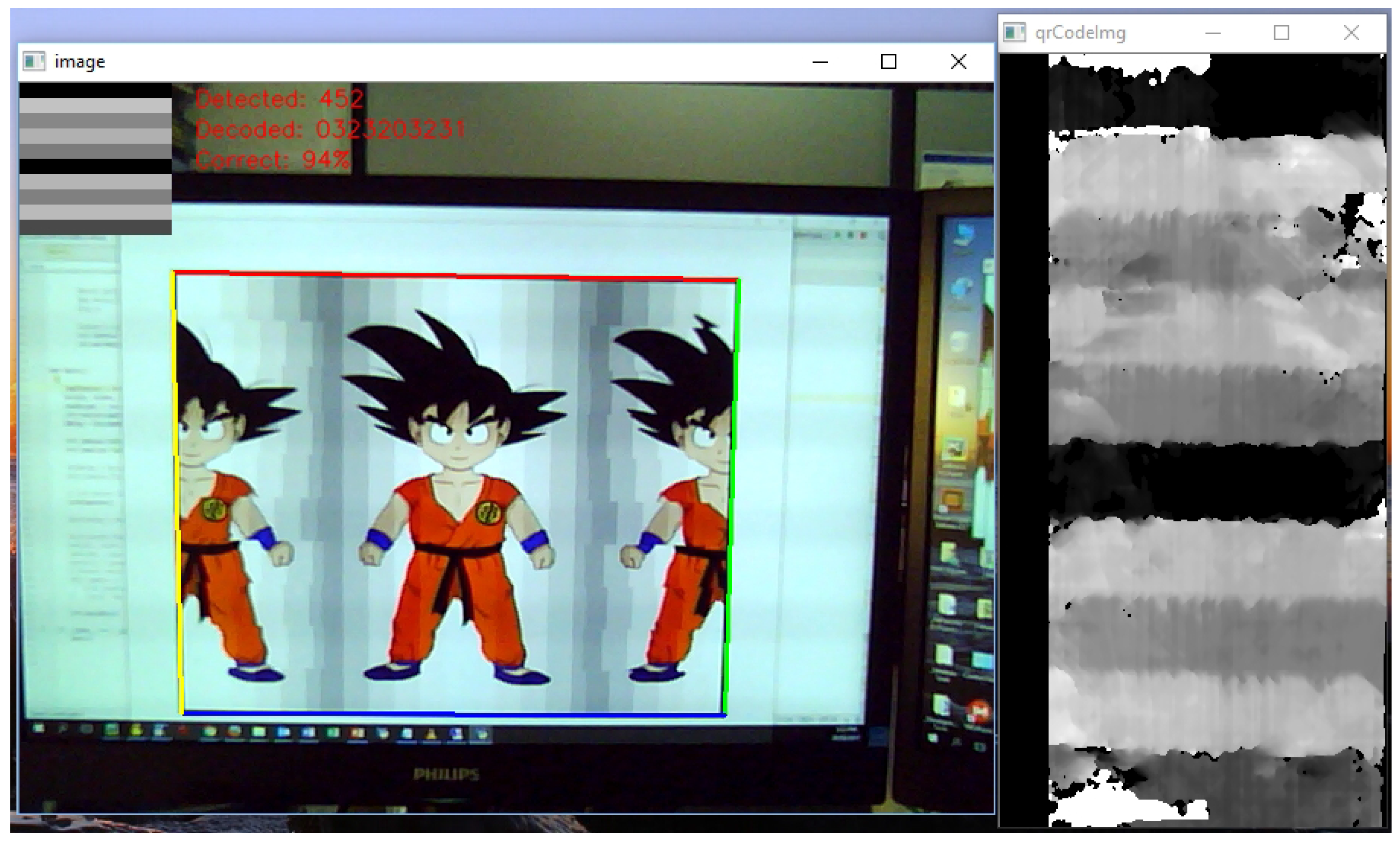

Similarly, we tested the system with another image holding the same array, “0323203231”. However, this encoding image contained a large blank white background (as shown in Figure 15). The correct detection rate was slightly lower, at 94%, over 452 frames of the test video stream.

In both cases, we obtained a relatively high success rate for detection and decoding. In the frames that returned incorrect results, only one or two of the ten elements differed. Therefore, a self-correction mechanism could be applied to the stored array to increase the chance of decoding the data correctly; this is planned for our future work.

5. Limitation and Future Work of Embedding QR Codes

While our evaluation shows promising results for the Pictorial Marker with Hidden 3D Bar-code (PMBC), some limitations mean that this marker is not yet fully ready for the market. Firstly, we were unable to perform the test on different camera models. The current camera has a resolution of 1920 × 1080, which is a high standard among the camera models in use around the world. Secondly, time constraints and a limited budget prevented a usability test from being set up. Although we received positive feedback from around five people (all of them our colleagues), who stated that this marker had a better appearance than traditional ones, it is still uncertain whether PMBC can provide a more attractive AR marker for the general public. In the near future, we will fine-tune the marker algorithm and address any issues found. Larger evaluations with more test devices and users will also be performed to ensure that PMBC is suitable for the general public.

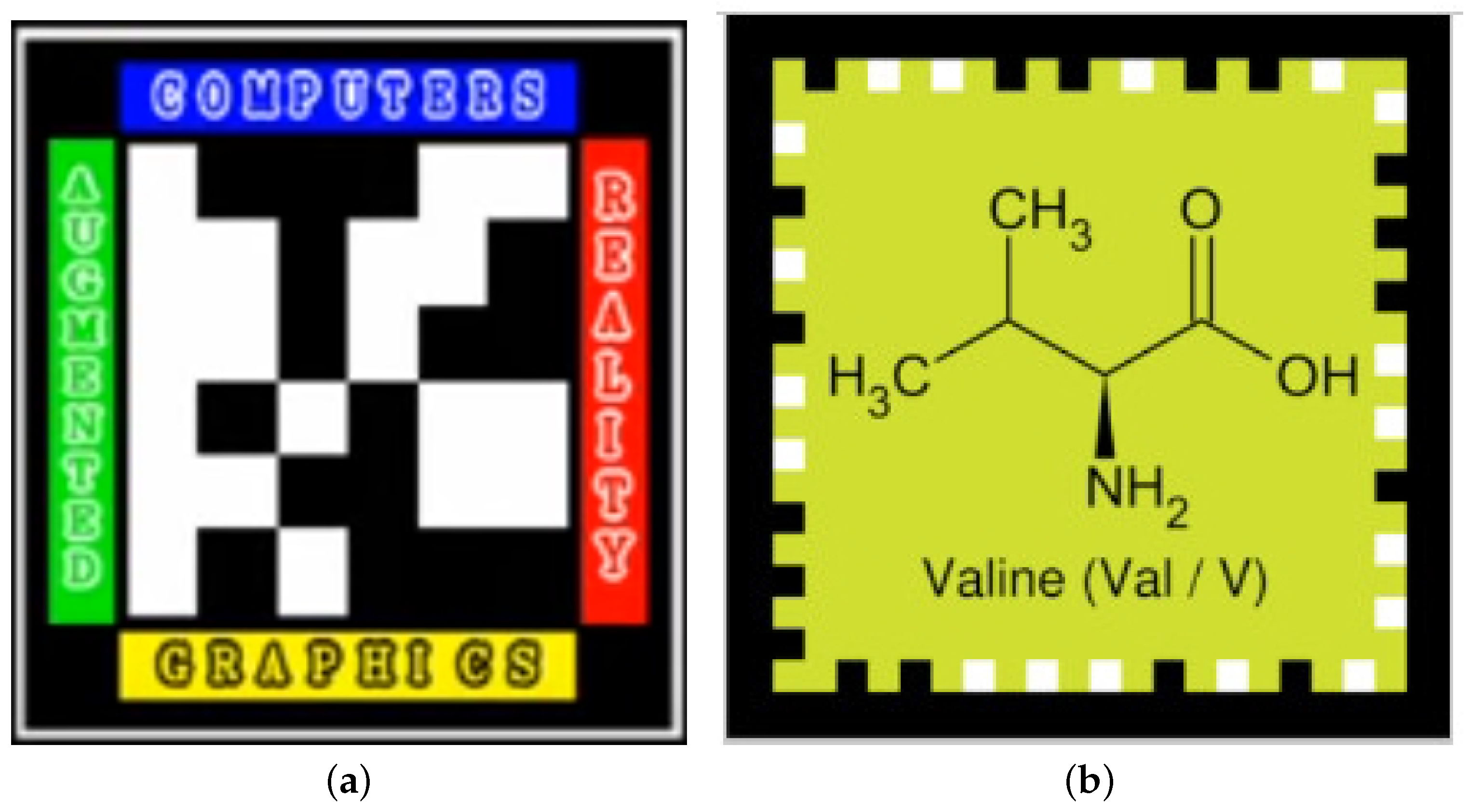

Furthermore, some may argue that our AR Tag can only encode a small amount of information (similar to 1D bar-code styles). However, our method of embedding binary data can be extended to hold 2D bar-codes such as VSCode, Aztec Code, Data Matrix, MaxiCode, PDF417, Visual Code, ShotCode, and QR Code [37]. Much more information can be encoded at almost no extra cost. In this research, we opt to use the QR code, as it is one of the most widely used today.

The embedding of a QR code in this AR Tag is similar to the method described above, except that half of the black dots of the QR code are mirrored on the left edge of the coloured picture and the other half on the right. Two example results are shown in Figure 16. The QR code stores the URL of the AUT website, http://aut.ac.nz, which is optically presented in two different AR Tag images: an AUT campus study space (top) and an image of the Hobbiton village (bottom).

To extract the QR code, we simply cut the image in half and subtract one half from the other to obtain a difference image. After that, an adaptive threshold process is applied to the difference image to retrieve the raw binary QR code image.

At this stage, the threshold is set to 20; thus, any pixel in the difference image is black if its intensity is less than 20. The resulting QR code is shown in Figure 17 (left). This image is relatively noisy, with many thin black lines, and it may not be reliably readable by many bar-code scanners.
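The split, subtract, and threshold steps can be sketched as follows for a greyscale image stored as a list of pixel rows. This is only an illustration under stated assumptions: the function name is hypothetical, and the use of the absolute difference is an assumption, since the text says only that one half is subtracted from the other.

```python
def extract_raw_code(image, threshold=20):
    """Split a greyscale image into halves, subtract them, and binarise."""
    half = len(image[0]) // 2
    binary = []
    for row in image:
        left, right = row[:half], row[half:half * 2]
        # Absolute difference is an assumption; the text only says "subtract".
        diff = [abs(a - b) for a, b in zip(left, right)]
        # Rule from the text: a pixel is black when its intensity is below 20.
        binary.append([0 if d < threshold else 255 for d in diff])
    return binary


# Tiny hypothetical image: 2 rows, 8 columns (left half and right half).
image = [
    [100, 100, 100, 130, 100, 45, 100, 125],
    [200, 10, 60, 60, 200, 10, 90, 41],
]
raw = extract_raw_code(image)
print(raw)
```

With a fixed threshold, small intensity differences between the two halves (for example from compression or sensor noise) fall on the black side, which is one plausible source of the thin black lines mentioned above.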

Fortunately, such thin lines can easily be cleared using simple image morphology [48]. We start with N dilations to clear away the unreliable thin lines. These are followed by N erosions to recover the reliable black pixels of the QR code. Both steps use a morphology kernel.
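A minimal sketch of this dilation-then-erosion clean-up is given below, written in plain Python so that it is self-contained. White (255) is treated as the foreground, so dilation grows white regions and removes thin black lines. The 3 × 3 square kernel, the clipped border handling, and the function names are assumptions for illustration, not details taken from the paper.

```python
def _apply(img, pick):
    """Replace each pixel by pick() over its 3x3 neighbourhood (clipped at borders)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        out_row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out_row.append(pick(window))
        out.append(out_row)
    return out


def dilate(img):
    return _apply(img, max)   # white regions grow, thin black lines vanish


def erode(img):
    return _apply(img, min)   # surviving black regions grow back


def clean(img, n=1):
    """N dilations followed by N erosions, in the order described in the text."""
    for _ in range(n):
        img = dilate(img)
    for _ in range(n):
        img = erode(img)
    return img


# Hypothetical raw code: a solid 3x3 black module plus a one-pixel-wide line.
raw = [[255] * 9 for _ in range(7)]
for y in range(1, 4):
    for x in range(1, 4):
        raw[y][x] = 0
for y in range(7):
    raw[y][6] = 0

cleaned = clean(raw, n=1)
for r in cleaned:
    print("".join("#" if p == 0 else "." for p in r))
```

Note the trade-off in choosing N: with a 3 × 3 kernel, any black feature narrower than 2N + 1 pixels is erased along with the noise, so N has to stay small relative to the size of a QR module.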

The final QR code extracted after image morphology is shown in Figure 17 (right). Most of the unreliable thin lines are erased. It is clearly readable by the Bakodo Scanner phone app (http://bako.do), one of the available bar-code scanning programs.

6. Conclusions

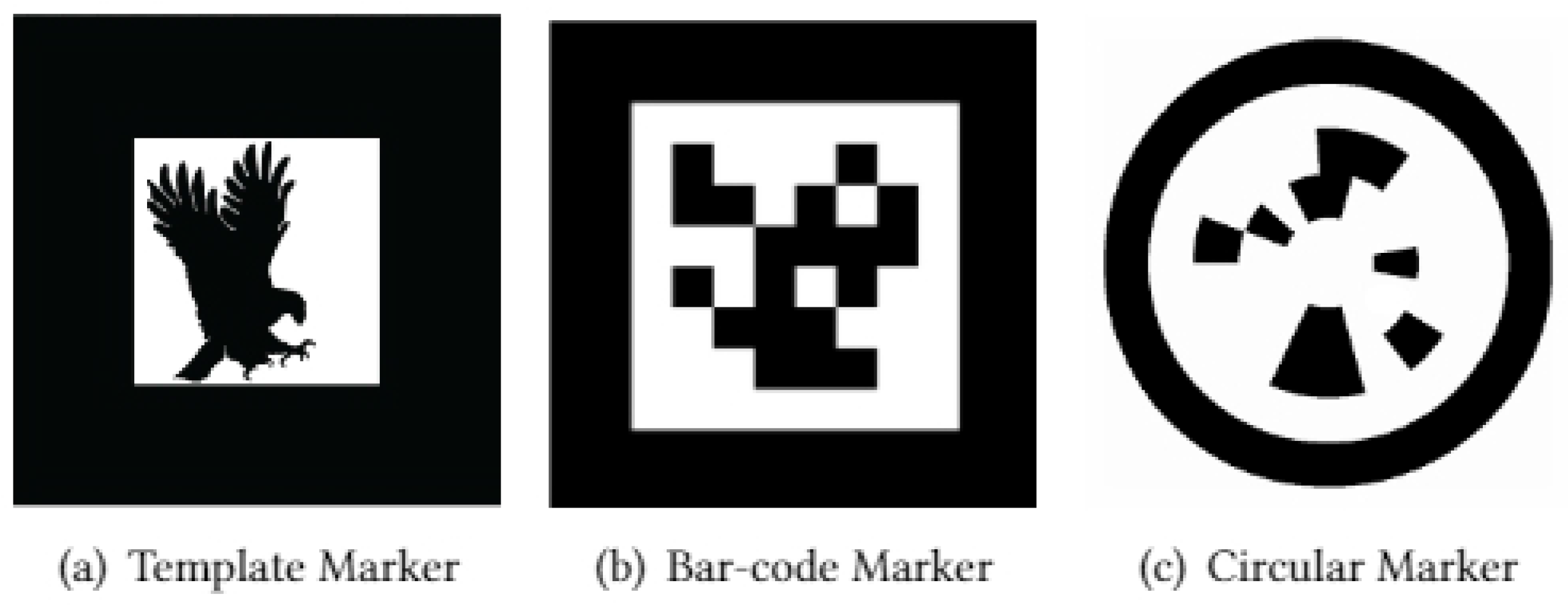

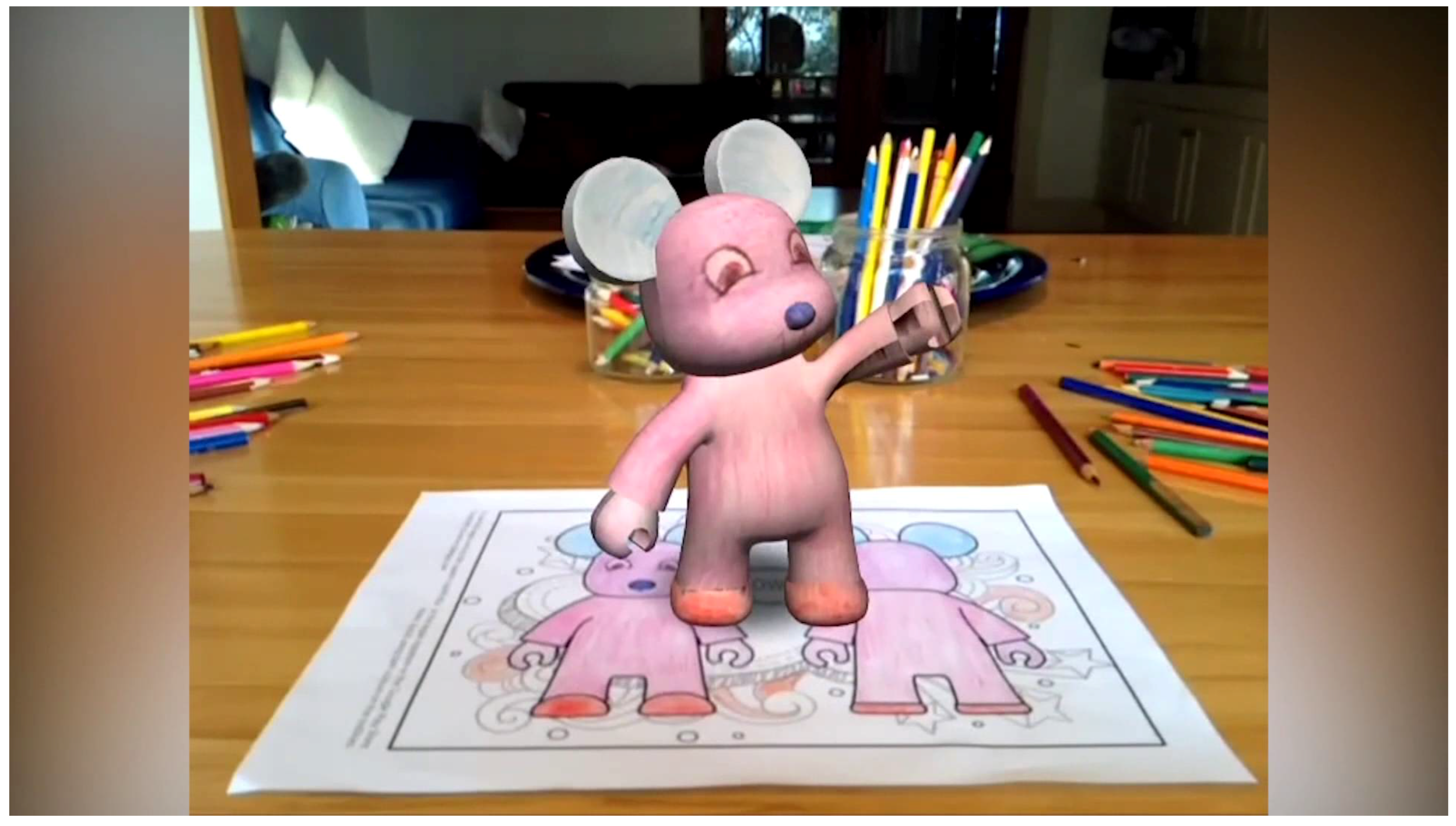

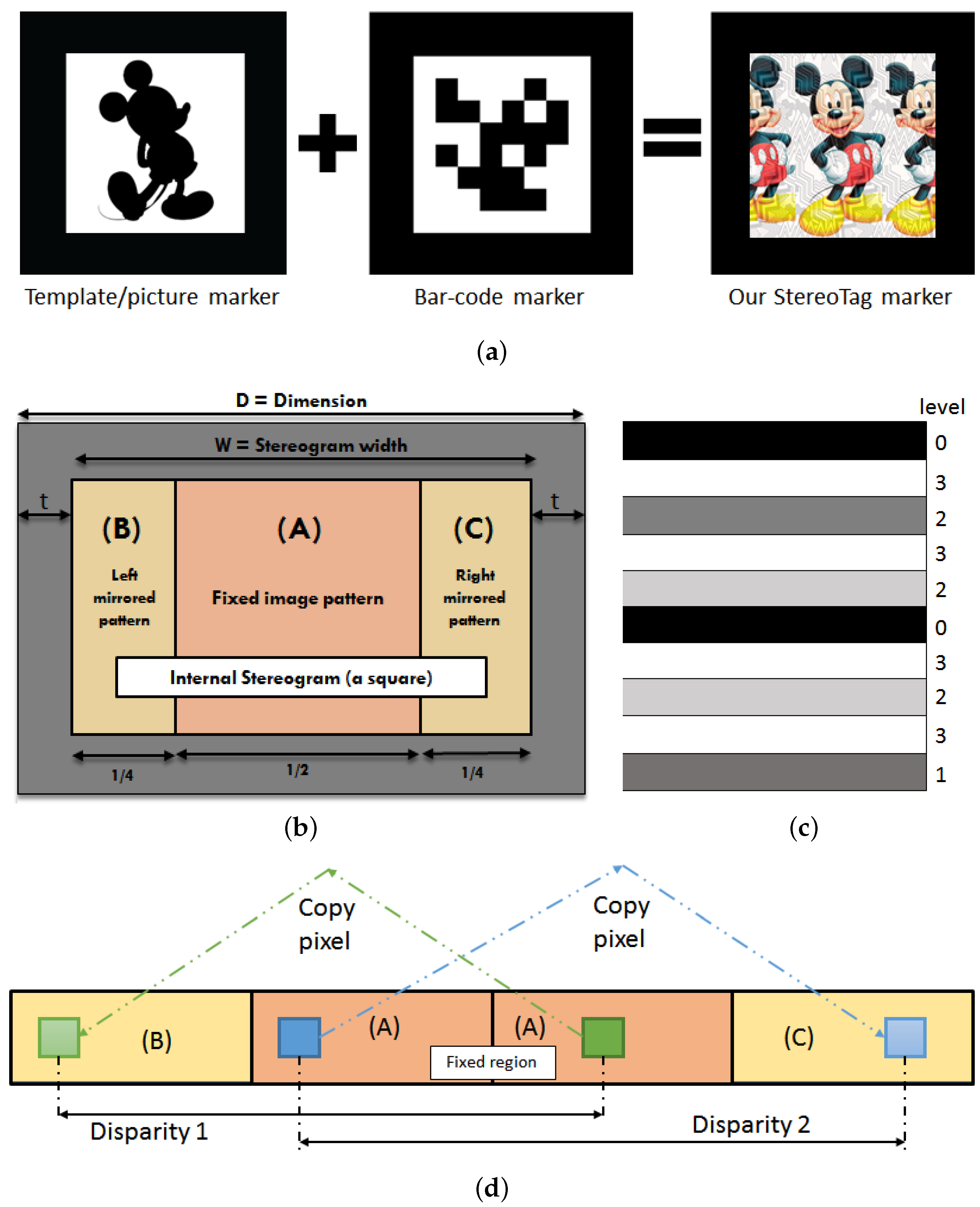

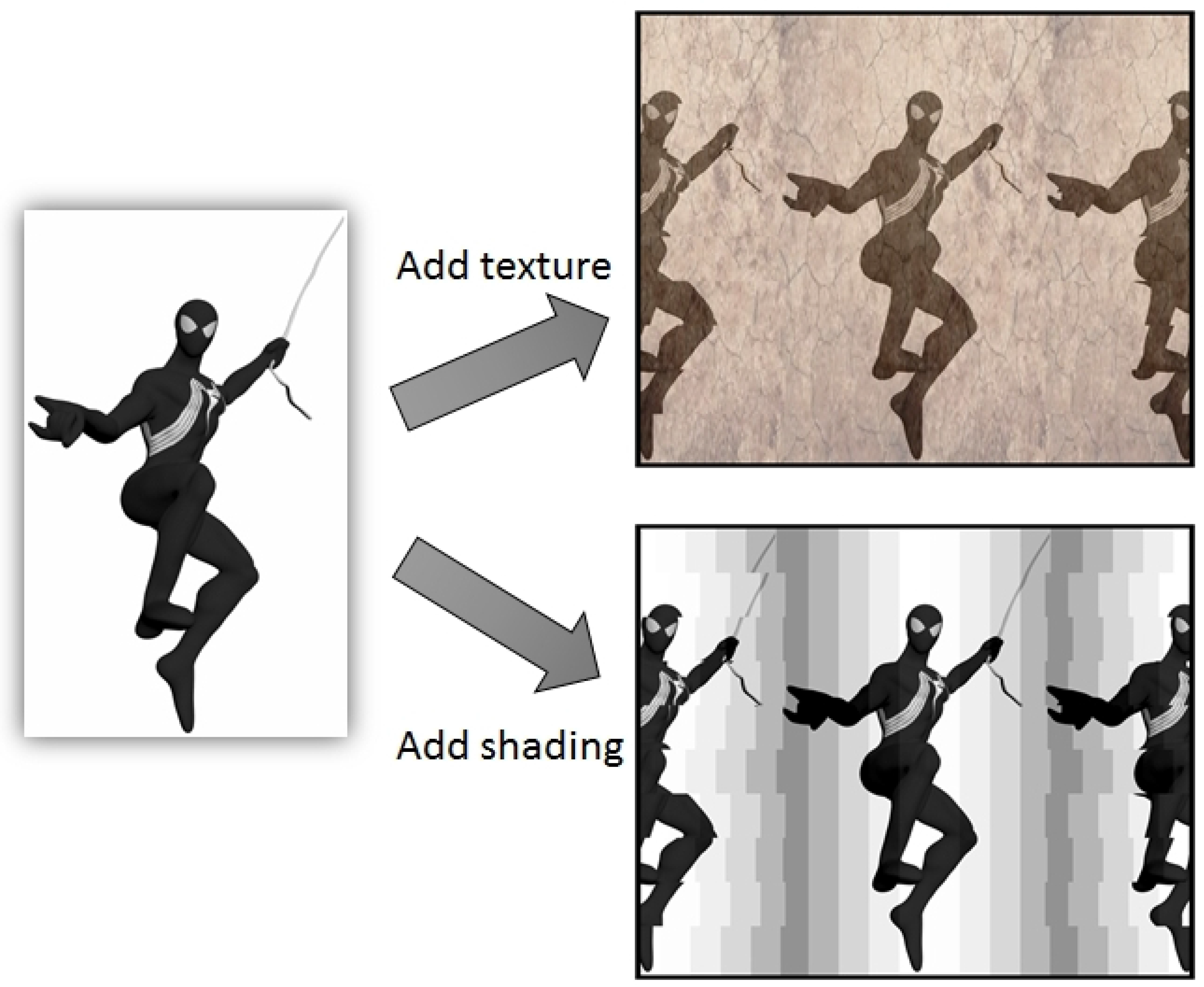

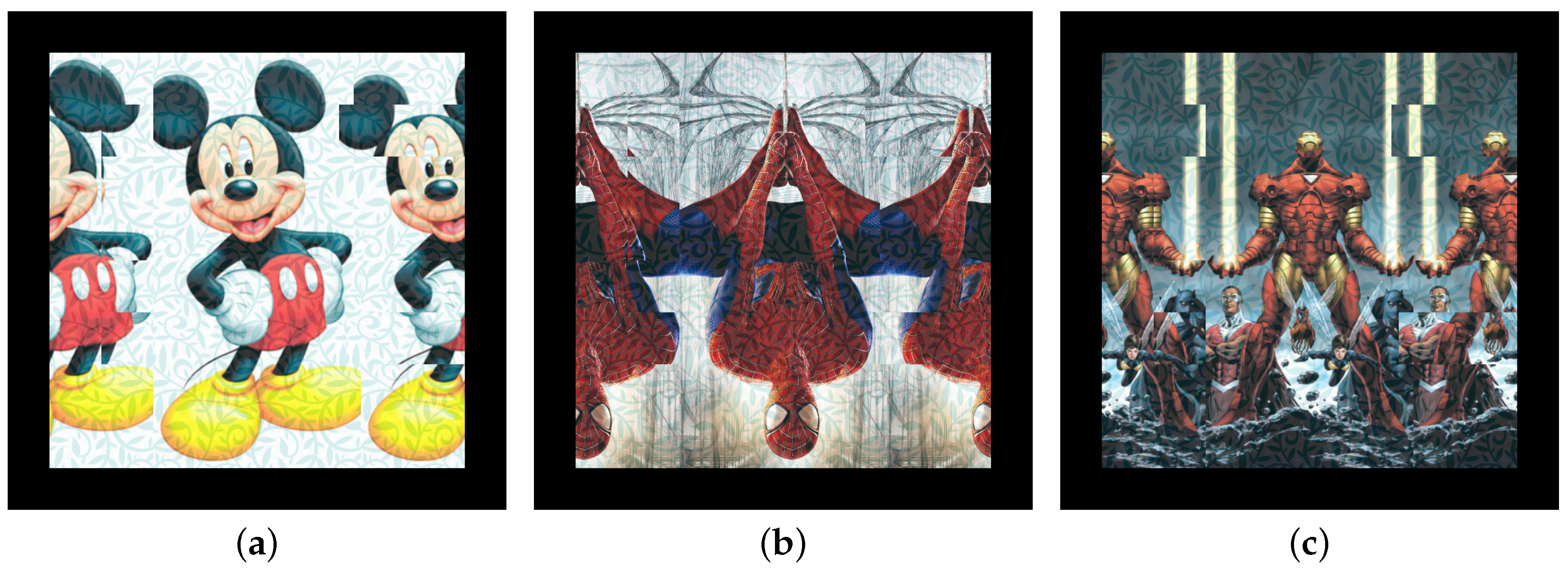

We propose a new way of combining a bar-code marker with the original picture of an advertisement. The method employs our proposed Pictorial Marker with a Hidden 3D Bar-code (PMBC) and current AR technology to enhance perception and usability for viewers. PMBC is an AR tag that is capable of optically hiding multi-level (3D) bar-codes. It is a single-image stereogram that conceals a depth-coded bar-code while maintaining a complete colour picture of what it presents. Most similar applications today use either pictorial markers or bar-code markers, and each has its own disadvantages. Firstly, pictorial markers have meaningful appearances, but they are computationally expensive and are not reliably identified by computers. Secondly, bar-code markers contain decodable numeric data, but they look uninteresting and uninformative. Our method not only keeps the original information of the advertisement but also encodes a broad range of numeric data. Moreover, a PMBC tag is relatively robust under various conditions; thus, it could be a promising approach for future AR applications.