Enhancing Trust in Autonomous Vehicles through Intelligent User Interfaces That Mimic Human Behavior

Abstract

1. Introduction

1.1. Autonomous Vehicles

1.2. Trust in Automation

1.3. Taking over Control

1.4. Anthropomorphism

1.5. Transparency

1.6. Polite Communication

1.7. The Current Study

2. Methods

2.1. Participants

2.2. Design

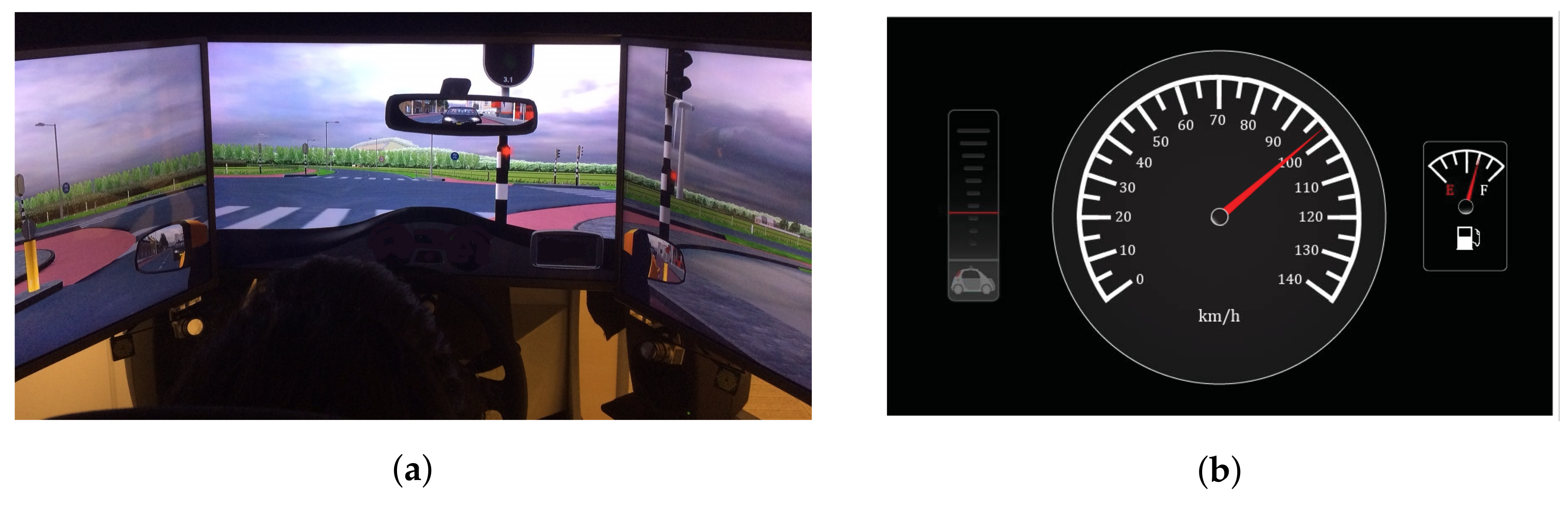

2.3. Materials

2.4. Procedure

3. Results

4. Discussion

Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Measure | M (GUI) | SD (GUI) | M (CUI) | SD (CUI) |
|---|---|---|---|---|
| Trust | 3.702 | 1.434 | 4.455 | 1.268 |
| Perceived intelligence | 3.028 | 1.178 | 3.563 | 1.042 |
| Anthropomorphism | −0.025 | 1.895 | 1.188 | 1.676 |
| Likability | 3.056 | 1.117 | 3.672 | 0.989 |

| Measure | M (low) | SD (low) | M (high) | SD (high) |
|---|---|---|---|---|
| Trust | 3.771 | 1.117 | 4.386 | 1.231 |
| Perceived intelligence | 3.028 | 0.929 | 3.563 | 0.981 |
| Anthropomorphism | 0.230 | 1.736 | 0.933 | 1.616 |
| Likability | 3.122 | 0.898 | 3.606 | 0.868 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ruijten, P.A.M.; Terken, J.M.B.; Chandramouli, S.N. Enhancing Trust in Autonomous Vehicles through Intelligent User Interfaces That Mimic Human Behavior. Multimodal Technol. Interact. 2018, 2, 62. https://doi.org/10.3390/mti2040062
