Methods for Detoxification of Texts for the Russian Language †

Abstract

1. Introduction

- We present a study of text detoxification for the Russian language;

- We conduct experiments with two well-performing style transfer methods: a GPT-2-based method that rewrites the text and a BERT-based model that performs targeted corrections;

- We create an evaluation setup for the style transfer task for Russian: we prepare the training and test datasets and implement two baselines.

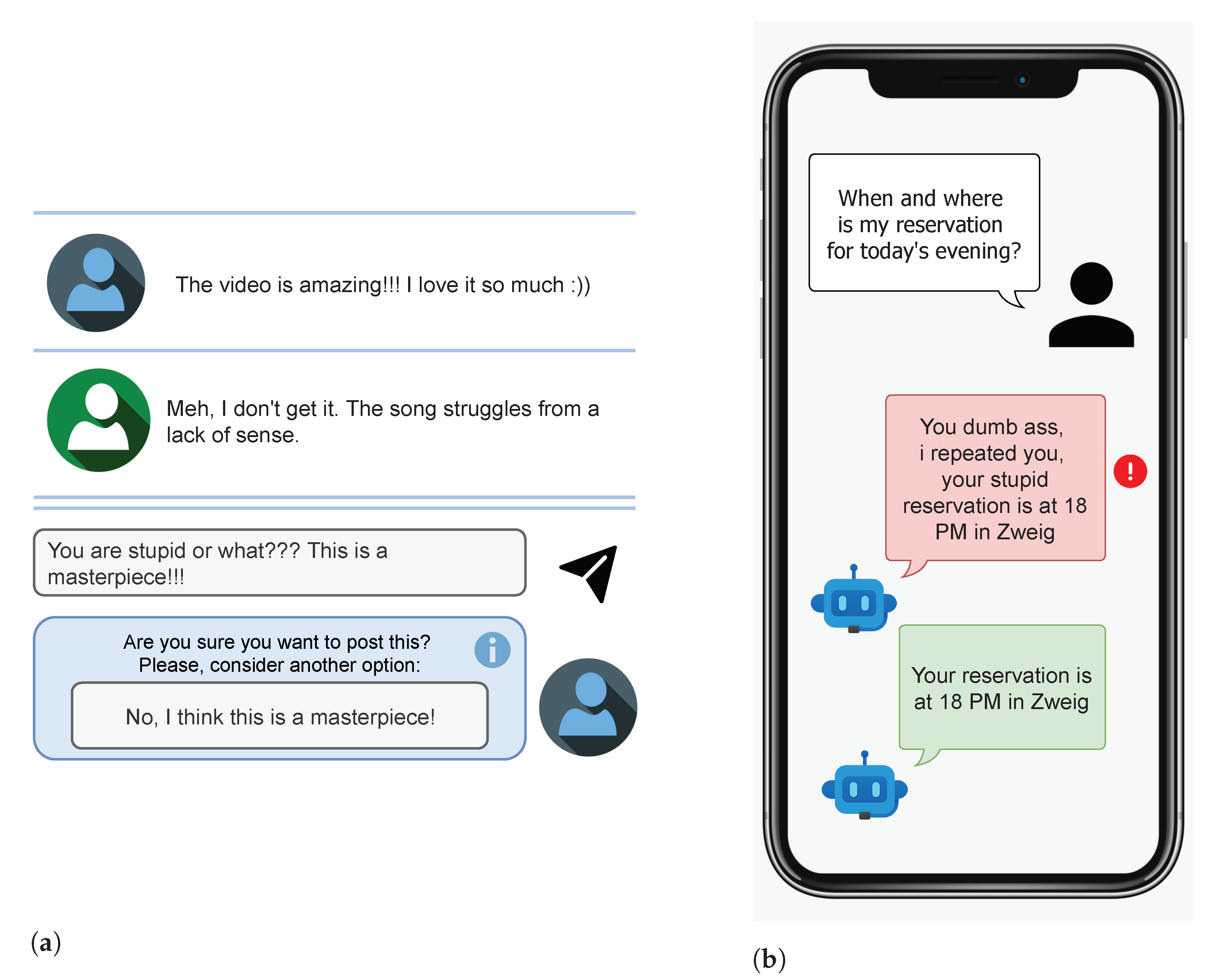

2. Motivation

3. Problem Statement

3.1. Definition of Toxicity

3.2. Definition of Text Style Transfer

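Definition: Text Style Transfer

Given a source style $s^{src}$, a target style $s^{dst}$, and an input text $d^{src}$, a text style transfer model produces an output text $d^{dst}$ such that:

- The style of the text changes from the source style $s^{src}$ to the target style $s^{dst}$: $\sigma(d^{src}) \neq \sigma(d^{dst})$, $\sigma(d^{dst}) = s^{dst}$;

- The content of the source text is preserved in the target text as much as required for the task: $\delta(d^{src}, d^{dst}) \geq t^{\delta}$;

- The fluency of the target text achieves the required level: $\psi(d^{dst}) \geq t^{\psi}$,

where $\sigma$ determines the style of a text, $\delta$ measures content preservation, $\psi$ measures fluency, and $t^{\delta}$ and $t^{\psi}$ are the corresponding tolerance thresholds.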
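The three conditions of the definition (the style changes to the target style, content is preserved up to a threshold, fluency reaches a threshold) can be restated as a single check on one (source, output) pair. This is a minimal sketch: `style_of`, `content_similarity`, `fluency`, and both thresholds are hypothetical placeholders for whatever classifier, similarity measure, and fluency model one plugs in, and the toy stand-ins below (a one-word rude vocabulary and word-overlap similarity) are for illustration only.

```python
from typing import Callable


def is_valid_transfer(
    source: str,
    output: str,
    target_style: str,
    style_of: Callable[[str], str],
    content_similarity: Callable[[str, str], float],
    fluency: Callable[[str], float],
    content_threshold: float,
    fluency_threshold: float,
) -> bool:
    """Return True if the output satisfies all three style transfer conditions."""
    # Condition 1: the style changes, and the output carries the target style.
    style_ok = style_of(source) != style_of(output) and style_of(output) == target_style
    # Condition 2: enough of the source content is preserved.
    content_ok = content_similarity(source, output) >= content_threshold
    # Condition 3: the output is fluent enough.
    fluency_ok = fluency(output) >= fluency_threshold
    return style_ok and content_ok and fluency_ok


# Toy stand-ins, for illustration only (hypothetical, not the paper's models).
RUDE = {"idiot"}


def toy_style(text: str) -> str:
    return "toxic" if any(word in RUDE for word in text.lower().split()) else "neutral"


def word_overlap(a: str, b: str) -> float:
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 1.0


print(is_valid_transfer("you are an idiot", "you are wrong", "neutral",
                        toy_style, word_overlap, lambda s: 1.0, 0.3, 0.5))  # True
```

Copying the input unchanged fails the first condition, while an unrelated neutral sentence fails the second.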

4. Related Work

5. Methodology

5.1. Baselines

5.1.1. Duplicate

5.1.2. Delete
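The Duplicate and Delete baselines carry no description in this copy. Duplicate is assumed to return the input unchanged, which is consistent with its STA of 0.00 and its CS, WO, and BLEU of 1.00 in the automatic evaluation table. Delete is assumed to drop rude words and keep the rest of the sentence, as its outputs in Appendix A suggest. The sketch below follows these assumptions; `RUDE_WORDS` is a hypothetical placeholder vocabulary, not the authors' list.

```python
import re
from typing import AbstractSet

# Hypothetical vocabulary of rude words; the authors' actual list is not reproduced here.
RUDE_WORDS = frozenset({"дура", "дурак", "идиот"})


def duplicate(sentence: str) -> str:
    """Duplicate baseline (assumed): return the input unchanged."""
    return sentence


def delete(sentence: str, vocabulary: AbstractSet[str] = RUDE_WORDS) -> str:
    """Delete baseline sketch: drop tokens whose lowercased, punctuation-free form is in the vocabulary."""
    kept = []
    for token in sentence.split():
        # Strip punctuation attached to the token (\w is Unicode-aware, so Cyrillic is kept).
        core = re.sub(r"[^\w-]", "", token.lower())
        if core not in vocabulary:
            kept.append(token)
    return " ".join(kept)


print(delete("ты просто идиот, понял"))  # ты просто понял
```

Because Delete only removes words, it keeps most of the original wording (high CS and WO) but cannot rephrase offensive meaning that is not carried by a listed word.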

5.1.3. Retrieve
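The Retrieve baseline is likewise not described in this copy. Its outputs in Appendix A are fluent, non-toxic sentences that are often unrelated to the input, which is consistent with returning the most similar sentence from a corpus of non-toxic texts. The sketch below assumes exactly that, ranking candidates by cosine similarity; `embed` is a hypothetical bag-of-words stand-in, not the representation the authors used.

```python
import math
from collections import Counter
from typing import List


def embed(sentence: str) -> Counter:
    """Hypothetical stand-in embedding: a bag-of-words count vector."""
    return Counter(sentence.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[key] * b[key] for key in a)  # missing keys count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(sentence: str, neutral_corpus: List[str]) -> str:
    """Return the non-toxic corpus sentence most similar to the input."""
    query = embed(sentence)
    return max(neutral_corpus, key=lambda candidate: cosine(query, embed(candidate)))


corpus = ["что это значит???", "хорошая погода сегодня"]
print(retrieve("что это значит, дурак", corpus))  # что это значит???
```

Since the output is always a corpus sentence, style accuracy tends to be high while content preservation depends entirely on whether a close neutral neighbour exists.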

5.2. detoxGPT

- zero-shot: the model is used as is, with no fine-tuning. The input is the toxic sentence to be detoxified, prepended with the prefix “Перефразируй” (Russian for “Paraphrase”) and followed by the suffix “” that marks the paraphrasing task. ruGPT3 has already been trained on this task, so this scenario amounts to plain paraphrasing. The schematic pipeline of this setup is presented in Figure 2.

- few-shot: the model is again used as is. Unlike in the previous scenario, the input is preceded by a set of parallel toxic and neutral sentences in the following form: “”. These examples can help the model understand that a detoxifying paraphrase is required. The parallel sentences are followed by the input sentence to be detoxified, with the prefix “Перефразируй” and the same suffix as in the zero-shot scenario. The schematic pipeline of this setup is presented in Figure 3.

- fine-tuned: the model is fine-tuned for paraphrasing on a parallel dataset of toxic and neutral sentences, i.e., it is trained on strings of the form “”. After training, the input is given to the model in the same way as in the other scenarios. The schematic pipeline of this setup is presented in Figure 4.
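The three detoxGPT scenarios differ only in the text the model sees before generating. The sketch below builds the three kinds of strings. Only the prefix “Перефразируй” comes from the text; the exact prompt and suffix formats are not given in this copy, so the `": "` after the prefix and the `SEPARATOR` value are hypothetical placeholders.

```python
from typing import List, Tuple

PREFIX = "Перефразируй"
SEPARATOR = " >>> "  # hypothetical: stands in for the task suffix, whose exact form is not given here


def zero_shot_prompt(toxic: str) -> str:
    """Zero-shot: task prefix, the toxic input, then the suffix; the model continues from there."""
    return f"{PREFIX}: {toxic}{SEPARATOR}"


def few_shot_prompt(toxic: str, examples: List[Tuple[str, str]]) -> str:
    """Few-shot: parallel (toxic, neutral) demonstrations, then the input as in zero-shot."""
    demos = "".join(f"{PREFIX}: {src}{SEPARATOR}{tgt}\n" for src, tgt in examples)
    return demos + zero_shot_prompt(toxic)


def fine_tuning_example(toxic: str, neutral: str) -> str:
    """Fine-tuned: one training string pairing a toxic sentence with its neutral paraphrase."""
    return f"{PREFIX}: {toxic}{SEPARATOR}{neutral}"


print(few_shot_prompt("ты дурак", [("ну ты и идиот", "вы неправы")]))
```

Under this layout, a training string is exactly a zero-shot prompt followed by the target, so at inference time the text generated after `SEPARATOR` would be read as the detoxified sentence.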

5.3. condBERT

- zero-shot, where BERT is taken as is (with no extra fine-tuning);

- fine-tuned, where BERT is fine-tuned on a dataset of toxic and safe sentences to acquire a style-dependent distribution, as described above.
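The targeted corrections performed by condBERT can be illustrated with a toy version of the mask-and-refill idea: tokens judged toxic are masked, and each mask is filled with the candidate that scores best under a language model once toxic candidates are penalised. This is a simplified sketch, not the authors' implementation: the toxicity scores, threshold, penalty, and candidate log-probabilities are hypothetical, and a real system would obtain the candidates from BERT's masked-LM predictions.

```python
from typing import Dict, List, Sequence

MASK = "[MASK]"


def mask_toxic_tokens(tokens: Sequence[str], toxicity: Dict[str, float],
                      threshold: float = 0.5) -> List[str]:
    """Replace every token whose (hypothetical) toxicity score exceeds the threshold with a mask."""
    return [MASK if toxicity.get(tok.lower(), 0.0) > threshold else tok for tok in tokens]


def choose_fill(candidates: Dict[str, float], toxicity: Dict[str, float],
                penalty: float = 5.0) -> str:
    """Pick the candidate with the highest LM log-probability minus a toxicity penalty."""
    return max(candidates, key=lambda w: candidates[w] - penalty * toxicity.get(w.lower(), 0.0))


def targeted_correction(tokens: Sequence[str], toxicity: Dict[str, float],
                        candidates_per_mask: Sequence[Dict[str, float]],
                        threshold: float = 0.5, penalty: float = 5.0) -> List[str]:
    """Mask toxic tokens, then fill each mask, in order, from its own candidate dictionary."""
    masked = mask_toxic_tokens(tokens, toxicity, threshold)
    fills = iter(candidates_per_mask)
    return [choose_fill(next(fills), toxicity, penalty) if tok == MASK else tok for tok in masked]


toxicity = {"идиот": 0.9, "дурак": 0.8}
print(targeted_correction(["ты", "идиот"], toxicity, [{"дурак": -1.0, "человек": -2.0}]))
# ['ты', 'человек']
```

The penalty is what turns an ordinary fill-in into a style-directed one: without it, the LM would prefer the more probable but toxic candidate.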

6. Experiments

6.1. Datasets

- the __label__NORMAL label of the Toxic Russian Comments dataset [37] is mapped to the non-toxic label;

- the __label__INSULT, __label__THREAT, and __label__OBSCENITY labels of the Toxic Russian Comments dataset are mapped to the toxic label.
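The label conversion above amounts to a small lookup. In the sketch below, how a comment carrying several labels is resolved is an assumption: any toxic label makes the comment toxic, even if __label__NORMAL is also present, and unknown label sets are returned as `None`.

```python
from typing import Iterable, Optional

TOXIC_LABELS = {"__label__INSULT", "__label__THREAT", "__label__OBSCENITY"}
NON_TOXIC_LABELS = {"__label__NORMAL"}


def to_binary_label(labels: Iterable[str]) -> Optional[int]:
    """Map Toxic Russian Comments labels to 1 (toxic) or 0 (non-toxic); None if unmapped."""
    label_set = set(labels)
    if label_set & TOXIC_LABELS:
        return 1  # assumption: any toxic label takes precedence over __label__NORMAL
    if label_set & NON_TOXIC_LABELS:
        return 0
    return None


print(to_binary_label(["__label__INSULT", "__label__THREAT"]))  # 1
```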

6.2. Experimental Setup

- top_k: an integer parameter greater than or equal to 1. GPT is a Transformer language model that generates text one token at a time, and with top-k sampling the next token is always chosen from the k most probable candidates. We use top_k = 3.

- top_p: a floating-point parameter ranging from 0 to 1. Like top_k, it restricts the choice of the next output token: the token is sampled from the smallest set of tokens whose cumulative probability exceeds p. We use top_p = 0.95.

- temperature (t): a floating-point parameter greater than or equal to 0 that controls how freely the model generates: the next-token scores are divided by t before sampling, so higher values make the choice more random. At very high temperatures (e.g., 100), the model can start a dialogue instead of paraphrasing, whereas at a temperature of around 1 it barely changes the sentence. We use t = 50.
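The interplay of the three decoding parameters can be shown on a single next-token step. This pure-Python sketch applies them in the order temperature, then top_k, then top_p, over a hypothetical dictionary of token scores; it is an illustration of the technique, not the ruGPT3 decoding code, and it requires t > 0 because it divides by t.

```python
import math
import random
from typing import Dict, Optional


def filter_next_token_distribution(logits: Dict[str, float], top_k: int = 3,
                                   top_p: float = 0.95, temperature: float = 50.0) -> Dict[str, float]:
    """Return the renormalised distribution that the next token is sampled from."""
    if top_k < 1 or not 0.0 < top_p <= 1.0 or temperature <= 0.0:
        raise ValueError("need top_k >= 1, 0 < top_p <= 1 and temperature > 0")
    # Temperature: divide the scores by t; a larger t flattens the distribution.
    scaled = {token: score / temperature for token, score in logits.items()}
    # top_k: keep only the k highest-scoring tokens.
    ranked = sorted(scaled.items(), key=lambda item: item[1], reverse=True)[:top_k]
    # Softmax over the surviving tokens (subtracting the maximum for numerical stability).
    best = ranked[0][1]
    weights = [(token, math.exp(score - best)) for token, score in ranked]
    total = sum(w for _, w in weights)
    # top_p: keep the smallest prefix whose cumulative probability exceeds p.
    kept, cumulative = [], 0.0
    for token, w in weights:
        kept.append((token, w / total))
        cumulative += w / total
        if cumulative > top_p:
            break
    norm = sum(p for _, p in kept)
    return {token: p / norm for token, p in kept}


def sample_next_token(logits: Dict[str, float], top_k: int = 3, top_p: float = 0.95,
                      temperature: float = 50.0, rng: Optional[random.Random] = None) -> str:
    """Draw one token from the filtered distribution."""
    rng = rng or random.Random()
    dist = filter_next_token_distribution(logits, top_k, top_p, temperature)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


scores = {"a": 10.0, "b": 9.0, "c": 1.0, "d": 0.0}
print(filter_next_token_distribution(scores))  # three tokens, close to uniform at t = 50
```

With t = 50 the three surviving candidates receive roughly 0.36, 0.35, and 0.30, so top_p = 0.95 keeps all of them; at t = 1 the top token alone would already exceed a top_p of 0.5.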

- RuBERT: Conversational RuBERT (https://huggingface.co/DeepPavlov/rubert-base-cased-conversational, accessed on 2 September 2021) from DeepPavlov [40];

- Geotrend: a smaller version of multilingual BERT for Russian (https://huggingface.co/Geotrend/bert-base-ru-cased, accessed on 2 September 2021) from Geotrend [41].

7. Automatic Evaluation

7.1. Style Transfer Accuracy

7.2. Content Preservation

7.3. Language Quality

7.4. Aggregated Metric

7.5. Results

8. Manual Evaluation

8.1. Style Transfer Accuracy

- A sentence should be labeled as toxic if it contains obscene or rude words and/or is offensive according to the annotator’s opinion;

- If a sentence has no obscene words but contains rude words or passive aggression (according to the annotator’s opinion), it should be labeled as partially toxic;

- If a sentence has no obscene words and its meaning is civil, it is non-toxic.

8.2. Content Preservation

- If the content is preserved, the sentences should be labeled as fully matching. This holds even when the output sentence is toxic or grammatically incorrect.

- If a rude or obscene word describing a person or a group of people (e.g., idiot) was replaced with an overly general non-toxic synonym (e.g., human) without a significant loss of meaning, this is considered a fully matching pair of sentences.

- If the non-toxic part of the original sentence was fully preserved but the toxic part was replaced inadequately, this is considered a partially matching pair.

- If the output sentence is senseless or if the content difference is obvious, the pair of sentences is considered different.

8.3. Fluency

- The sentence is considered fluent if:
  - it is grammatically correct;
  - it sounds natural;
  - it is meaningful (so that an annotator can find a context where this sentence could be a legitimate utterance in a dialogue).

  Such a sentence should be labeled as fluent even if it is toxic.

- If a sentence is grammatically correct in general and sounds natural but ends abruptly:
  - if the annotator can still understand the meaning, the sentence should be labeled as partially fluent;
  - if the sentence is too short to understand or is difficult to understand for some other reason, it should be labeled as non-fluent.

- If there are one or two [UNK] tokens but the meaning is understandable, the sentence is partially fluent; otherwise, it is non-fluent.

- In other cases, if the sentence is obviously grammatically incorrect, has many non-words, or is too short, it is non-fluent.

8.4. Acceptability

- The sentence must be non-toxic. It cannot contain any obscene or rude words, and its meaning cannot be offensive. However, the sentence can contain criticism.

- The sentence must be grammatically correct. It cannot end abruptly or contain inconsistent or inappropriate words. However, it may contain the spelling and punctuation mistakes that are common in online communication.

- The sentence content must match that of the original sentence as closely as possible in the detoxification scenario. Detoxifying a sentence inevitably eliminates some offensive implications; this should not be considered a defect.

8.5. Annotation Setup

- if both annotators label a sentence as acceptable, it is considered acceptable;

- if both annotators label a sentence as acceptable with minor corrections, or one label is acceptable with minor corrections and the other is acceptable, the sentence is considered acceptable with minor corrections;

- in all other cases, the sentence is considered unacceptable.

- we consider a sentence perfect if it was labeled as non-toxic, fully matching, and fluent, i.e., if it received the highest scores for all metrics;

- we consider a sentence good if it was labeled as non-toxic, fully matching, and fluent or partially fluent, i.e., if it received the highest style accuracy and content preservation scores and the highest or average fluency score.

- we consider a sentence perfect if it was labeled as acceptable;

- we consider a sentence good if it was labeled as acceptable or acceptable with minor corrections.
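The aggregation of the two annotators' acceptability labels and both definitions of good and perfect sentences are simple decision rules. The sketch below encodes them. The numeric scale for the per-metric labels (1.0 for non-toxic, fully matching, or fluent; 0.5 for the partial labels; 0.0 otherwise) is an assumption, chosen to match the 0.0/0.5/1.0 values in the manual evaluation examples table.

```python
ACCEPTABLE = "acceptable"
MINOR = "acceptable with minor corrections"
UNACCEPTABLE = "unacceptable"


def aggregate(label_a: str, label_b: str) -> str:
    """Combine two annotators' acceptability labels."""
    pair = {label_a, label_b}
    if pair == {ACCEPTABLE}:
        return ACCEPTABLE  # both annotators said acceptable
    if pair <= {ACCEPTABLE, MINOR}:
        return MINOR  # both said minor corrections, or one minor and one acceptable
    return UNACCEPTABLE  # any other combination


# Per-metric definitions, assuming labels are scored 1.0 / 0.5 / 0.0.
def is_perfect_by_metrics(sta: float, cp: float, fl: float) -> bool:
    return sta == 1.0 and cp == 1.0 and fl == 1.0


def is_good_by_metrics(sta: float, cp: float, fl: float) -> bool:
    return sta == 1.0 and cp == 1.0 and fl >= 0.5


# Acceptability-based definitions.
def is_perfect_by_acceptability(label: str) -> bool:
    return label == ACCEPTABLE


def is_good_by_acceptability(label: str) -> bool:
    return label in (ACCEPTABLE, MINOR)


print(aggregate(ACCEPTABLE, MINOR))  # acceptable with minor corrections
```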

8.6. Results

8.7. Analysis

9. Toxification of Texts

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Model | Sentence |
|---|---|
| Input | не дай бог моя дочь так оденется убью нахуй палкой If, God forbid, my daughter goes out dressed like this, I’ll fucking kill her with a stick |
| Delete | не дай бог моя дочь так оденется убью палкой If, God forbid, my daughter goes out dressed like this, I’ll kill her with a stick |
| Retrieve | не бросайте угла родного одной мы лежали больнице палате в в в те девчонкой была молодой годы don’t abandon your native corner same hospital we were ward in in in those girl was young years |
| detoxGPT-small | подскажите, у меня дочь так оденется палкой. Could you give me some advice, my daughter gets dressed as a stick. |
| detoxGPT-medium | да не торопись вы так, бабушка. Granny, don’t rush, take your time. |
| detoxGPT-large | извините, но мне вас очень жаль, если вы начнете так одеваться. Excuse me, but I feel sorry for you if you start getting dressed up like this. |
| condBERT | не дай бог моя дочь так оденется станки палкой God forbid, my daughter gets dressed up like this machine stick. |
| Input | что ты читала из его произведений? дура.а если не читала,то прочти. Which of his works have you read? You are an idiot. If you haven’t read any, go read. |
| Delete | что ты читала из его произведений дура.а если не читала,то прочти Which of his works have you read you idiot. If you haven’t read any, go read. |
| Retrieve | одно не пойму, вы все реально думаете, что они читают что то в ок или в вк? What I can’t understand - do you all really think they read anything in Odnoklassniki or Vkontakte? |
| detoxGPT-small | вы читали его произведения? не читали. Have you read any of his works? No, you haven’t |
| detoxGPT-medium | его посадить, извините, но мне вас очень жаль, если для вас оскорбления - норма. Putting him in jail, excuse me, but I feel sorry for you if insult is normal for you. |
| detoxGPT-large | вы читали его произведения? недалекие. попробуйте почитать посты его друзей Have you read any of his works? You are narrow-minded. Try reading his friends’ posts. |
| condBERT | что ты читала из его произведений ? пусть. а если не читала, то прочти. Which of his works have you read? Okay. If you haven’t read any, go read. |
| Input | тебя вношу в список долбаебов. I’m adding you to the list of buttheads. |
| Delete | тебя вношу в список I’m adding you to a list |
| Retrieve | надо обнародовать этот список. чего молчать. We should make this list public. Why keep silent? |
| detoxGPT-small | Вас вношу в список людей, нуждающихся в улучшении. I’m adding you to the list of people who need improvement. |
| detoxGPT-medium | Вас вношу в список людей, которые вносят вклад в мой тред. I’m adding you to the list of people who contribute to my thread. |
| detoxGPT-large | Вас вношу в список людей, которые не соответствуют вашим ожиданиям. I’m adding you to the list of people who don’t meet your expectations. |
| condBERT | тебя вношу в список до I’m adding you to the list of to. |

| Model | Sentence |
|---|---|
| Input | бля, уже 5 лет !! заебали с этими лесорубами!! каждый месяц одна и та же хуета!!! долбоебы!! и каждый месяц находится дебил, которому че то блядь интересно!!!! fuck, it’s been 5 years!!! Fuck those woodchoppers!! same shit every month!!! fuckheads!! and every month there is a dickhead who’s fucking interested!!!! |
| Delete | уже 5 лет с этими лесорубами каждый месяц одна и та же долбоебы и каждый месяц находится которому че то интересно It’s been five years with these woodchoppers the same every month and every month there is a who is interested |
| Retrieve | ага-ага. ну точно как при ссср-е. одно только положительное. и так постоянно 70 лет одурманивания. многие уже вышли из этой пропаганды. yeah. exactly like in USSR. The only good. and constant stupefying for 70 years. many have already left this propaganda. |
| detoxGPT-small | ххх, ты не понял. я тебе говорю, я не понял xxx, you haven’t understood. I’m telling you, I haven’t understood. |
| detoxGPT-medium | подскажите, у меня, похоже, низкий интеллект тк резко пью? could you tell, i have a low iq because i drink rapidly? |
| detoxGPT-large | кошмарно, уже 5 лет! заебали с этим лесорубом! каждый месяц одна и та же проблема! недалекие люди! и каждый месяц находится человек, которому что то интересно. awful, it’s been five years! fuck this woodchopper! every month the same problem! soft-headed people! and every month there is a person who is interested in something. |
| condBERT | б, уже 5 лет ! ! за с этими лесорубами ! ! каждый месяц одна и та же блядка ! ! ! до ! ! и каждый месяц находится де, которому че то б интересно ! ! ! ! f, it’s been five years!! fu those woodchoppers!! every month the same fuck!!! fu!!! and every month there is a di, who is f interested!!! |
| Input | ах пидорас мошенник подстава гандон oh faggot conman fake dickhead |
| Delete | ах мошенник подстава oh conman fake |
| Retrieve | а зачем тузикам свобода,да ещё и интеллектуальная ??? but why do those dinks need freedom, especially intellectual freedom??? |
| detoxGPT-small | руки бы оторвать ! I’d rip their arms off! |
| detoxGPT-medium | а вы кто? кто такой? зачем ты тогда мне вообще пишешь? если тебе скучно и хочешь пообщаться, то смени тему, гнида. and who are you? who are you? why are you writing me at all? if you’re bored and want to chat, choose another topic you dick. |
| detoxGPT-large | уважаемый, подскажите, кто же? и почему решил, что мне нужно твое мнение? Dear sir, could you please tell me who? and why do you think I need your opinion? |
| condBERT | ах п мошенник подставай oh f conman fakee |
| Input | ты че несёшь алеша, молотком себе разбей лучше дурабас what are you talking about, Alesha, hammer yourself better, idiot |
| Delete | ты че несёшь алеша молотком себе разбей лучше дурабас what are you talking about, Alesha, hammer yourself better, idiot |
| Retrieve | я на милого не обижаюсь. только если в нём мало любви, если вижу, что я унижаюсь, он молчит, хоть зови, не зови. I’m not offended by the sweetheart. only if there is little love in him, if I see that I am humiliated, he is silent, even call, do not call. |
| detoxGPT-small | а ты не понял, что ты сказал, а я понял.а вы не поняли. я не понимаю, почему ты отвечаешь на мой вопрос, потому, что не понял. but you didn’t understand what you said, but I understood, but you didn’t understand. I don’t understand why you are answering my question, because I don’t understand. |
| detoxGPT-medium | Вы что несете алеша, молотком себе разбейте лучше. What are You talking about, Aleksey, hammer yourself better. |
| detoxGPT-large | ы что несёте алеша, молотком себе разбейте лучше не волнуйтесь, пожалуйста, я же любя и по-дружески. What are You talking about, Aleksey, hammer yourself better, please, do not worry, I am doing it with love and friendly. |
| condBERT | тот че несёшь молоток, молотком себе раз лучше хочешь that are you talking hammer, hammer yourself one if you want |

| Model | Sentence |
|---|---|
| Input | у нас пол россии пьянь. вы сама ничтожество. half of Russia are drinkers. you are nobody yourself. |
| Delete | у нас пол россии пьянь вы сама ничтожество half of Russia are drinkers you are nobody yourself |
| Retrieve | хабаровчане хоть так высказывают недовольство властью,а остальные все в россии сидят Khabarovsk natives at least raise against the authorities, and the rest of russia sits still |
| detoxGPT-small | подскажите, у нас пол россии пьянь. вы сами немного. извините, но мне вас очень жаль. could you tell me, half of russia are drinkers. you yourself are a little bit. excuse me, but I feel sorry for you. |
| detoxGPT-medium | у нас пол россии пьянь. вы сами ничтожество. попробуйте сходить к врачу, возможно вам полегчает. надеюсь, я смог помочь) half of Russia are drinkers. you are nobody yourself. try going to the doctor, you’ll probably feel better. Hope this helps:) |
| detoxGPT-large | у нас пол россии непорядочные. вы сами никчемный человек. вы сами виноваты. вы сами виноваты. half of russia are indecent. you are a worthless person yourself. It’s your fault. It’s your fault. |
| condBERT | у нас пол россии п. вы сама ни. half of russia are d. you are no. |
| Input | это что за ебанутый who’s this fucked up lad |
| Delete | это что за ебанутый who’s this fucked up lad |
| Retrieve | это что за стрептиз:d what’s this stripping:d |
| detoxGPT-small | Это что за человек? Это что за человек? Это что за человек? who’s this man? who’s this man? who’s this man? |
| detoxGPT-medium | что за человек такой? what kind of man is that? |
| detoxGPT-large | и что вы хотите сказать, это что за человек? and what do you wanna say, what man is that? |
| condBERT | это что за ребята who are those lads |
| Input | придурок!!! до седых волос дожил, а умишка не нажил!!!!!!!!!! stupid fuck!!! better fed than taught!!!!!!!!! |
| Delete | до седых волос дожил а умишка не нажил better fed than taught |
| Retrieve | это что за стрептиз the main thing girls is to be young at heart |
| detoxGPT-small | стрелять обоих!> стрелять обоих!> стрелять обоих!> shoot both!> shoot both!> shoot both!> |
| detoxGPT-medium | он посчитал нарушение права возможным, но во всех красках. he considered the abuse of the right to be acceptable, but in living color |
| detoxGPT-large | уважаемый, до седых волос дожил, а ум не нажил! dear sir, better fed than taught! |
| condBERT | спасибо ! ! ! до седых волос дожил, а умишка не хранил ! ! ! ! ! ! ! ! ! ! thank you!!! lived up to gray hair, but did not keep the little mind !!!!!!!! |

References

1. D’Sa, A.G.; Illina, I.; Fohr, D. Towards Non-Toxic Landscapes: Automatic Toxic Comment Detection Using DNN. In Proceedings of the Second Workshop on Trolling, Aggression and Cyberbullying, Marseille, France, 16 May 2020; pp. 21–25.

2. Schmidt, A.; Wiegand, M. A Survey on Hate Speech Detection using Natural Language Processing. In Proceedings of the Fifth International Workshop on Natural Language Processing for Social Media, Valencia, Spain, 3 April 2017; pp. 1–10.

3. Pamungkas, E.W.; Patti, V. Cross-domain and Cross-lingual Abusive Language Detection: A Hybrid Approach with Deep Learning and a Multilingual Lexicon. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: Student Research Workshop, Florence, Italy, 28 July–2 August 2019; pp. 363–370.

4. Shen, T.; Lei, T.; Barzilay, R.; Jaakkola, T.S. Style Transfer from Non-Parallel Text by Cross-Alignment. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., von Luxburg, U., Bengio, S., Wallach, H.M., Fergus, R., Vishwanathan, S.V.N., Garnett, R., Eds.; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6830–6841.

5. Melnyk, I.; dos Santos, C.N.; Wadhawan, K.; Padhi, I.; Kumar, A. Improved Neural Text Attribute Transfer with Non-parallel Data. arXiv 2017, arXiv:1711.09395.

6. Pryzant, R.; Martinez, R.D.; Dass, N.; Kurohashi, S.; Jurafsky, D.; Yang, D. Automatically Neutralizing Subjective Bias in Text. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, 7–12 February 2020; AAAI Press: Menlo Park, CA, USA, 2020; pp. 480–489.

7. Rao, S.; Tetreault, J. Dear Sir or Madam, May I Introduce the GYAFC Dataset: Corpus, Benchmarks and Metrics for Formality Style Transfer. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; pp. 129–140.

8. Jin, D.; Jin, Z.; Hu, Z.; Vechtomova, O.; Mihalcea, R. Deep Learning for Text Style Transfer: A Survey. arXiv 2020, arXiv:2011.00416.

9. Nogueira dos Santos, C.; Melnyk, I.; Padhi, I. Fighting Offensive Language on Social Media with Unsupervised Text Style Transfer. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), Melbourne, Australia, 15–20 July 2018; pp. 189–194.

10. Tran, M.; Zhang, Y.; Soleymani, M. Towards A Friendly Online Community: An Unsupervised Style Transfer Framework for Profanity Redaction. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 2107–2114.

11. Madaan, A.; Setlur, A.; Parekh, T.; Póczos, B.; Neubig, G.; Yang, Y.; Salakhutdinov, R.; Black, A.W.; Prabhumoye, S. Politeness Transfer: A Tag and Generate Approach. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL 2020, Online, 5–10 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J.R., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 1869–1881.

12. Jigsaw. Toxic Comment Classification Challenge. 2018. Available online: https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge (accessed on 1 March 2021).

13. Zampieri, M.; Malmasi, S.; Nakov, P.; Rosenthal, S.; Farra, N.; Kumar, R. Predicting the Type and Target of Offensive Posts in Social Media. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 1415–1420.

14. Wiegand, M.; Siegel, M.; Ruppenhofer, J. Overview of the GermEval 2018 Shared Task on the Identification of Offensive Language. In Proceedings of the GermEval 2018, 14th Conference on Natural Language Processing (KONVENS 2018), Vienna, Austria, 19–21 September 2018.

15. Fortuna, P.; Nunes, S. A Survey on Automatic Detection of Hate Speech in Text. ACM Comput. Surv. 2018, 51, 1–30.

16. Waseem, Z.; Hovy, D. Hateful Symbols or Hateful People? Predictive Features for Hate Speech Detection on Twitter. In Proceedings of the NAACL Student Research Workshop, San Diego, CA, USA, 12–17 June 2016; pp. 88–93.

17. Davidson, T.; Warmsley, D.; Macy, M.; Weber, I. Automated Hate Speech Detection and the Problem of Offensive Language. In Proceedings of the 11th International AAAI Conference on Web and Social Media (ICWSM-17), Montréal, QC, Canada, 15–18 May 2017.

18. Basile, V.; Bosco, C.; Fersini, E.; Nozza, D.; Patti, V.; Rangel Pardo, F.M.; Rosso, P.; Sanguinetti, M. SemEval-2019 Task 5: Multilingual Detection of Hate Speech Against Immigrants and Women in Twitter. In Proceedings of the 13th International Workshop on Semantic Evaluation, Minneapolis, MN, USA, 6–7 June 2019; pp. 54–63.

19. Breitfeller, L.; Ahn, E.; Jurgens, D.; Tsvetkov, Y. Finding Microaggressions in the Wild: A Case for Locating Elusive Phenomena in Social Media Posts. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 1664–1674.

20. Sue, D.W.; Capodilupo, C.M.; Torino, G.C.; Bucceri, J.M.; Holder, A.; Nadal, K.L.; Esquilin, M. Racial microaggressions in everyday life: Implications for clinical practice. Am. Psychol. 2007, 62, 271.

21. Han, X.; Tsvetkov, Y. Fortifying Toxic Speech Detectors Against Veiled Toxicity. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 7732–7739.

22. Lees, A.; Borkan, D.; Kivlichan, I.; Nario, J.; Goyal, T. Capturing Covertly Toxic Speech via Crowdsourcing. In Proceedings of the First Workshop on Bridging Human–Computer Interaction and Natural Language Processing, Online, 20 April 2021; pp. 14–20.

23. Tikhonov, A.; Yamshchikov, I.P. What is wrong with style transfer for texts? arXiv 2018, arXiv:1808.04365.

24. McDonald, D.D.; Pustejovsky, J. A Computational Theory of Prose Style for Natural Language Generation. In Proceedings of the EACL 1985, 2nd Conference of the European Chapter of the Association for Computational Linguistics, Geneva, Switzerland, 27–29 March 1985; King, M., Ed.; The Association for Computer Linguistics: Chicago, IL, USA, 1985; pp. 187–193.

25. Gatys, L.A.; Ecker, A.S.; Bethge, M. Image Style Transfer Using Convolutional Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; IEEE Computer Society: Washington, DC, USA, 2016; pp. 2414–2423.

26. Li, J.; Jia, R.; He, H.; Liang, P. Delete, Retrieve, Generate: A Simple Approach to Sentiment and Style Transfer. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; pp. 1865–1874.

27. John, V.; Mou, L.; Bahuleyan, H.; Vechtomova, O. Disentangled Representation Learning for Non-Parallel Text Style Transfer. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 424–434.

28. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

29. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 140:1–140:67.

30. Krishna, K.; Wieting, J.; Iyyer, M. Reformulating Unsupervised Style Transfer as Paraphrase Generation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, 16–20 November 2020; Webber, B., Cohn, T., He, Y., Liu, Y., Eds.; Association for Computational Linguistics: Online, 2020; pp. 737–762.

31. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

32. Arefyev, N.; Sheludko, B.; Podolskiy, A.; Panchenko, A. Always Keep your Target in Mind: Studying Semantics and Improving Performance of Neural Lexical Substitution. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 1242–1255.

33. Wu, X.; Lv, S.; Zang, L.; Han, J.; Hu, S. Conditional BERT Contextual Augmentation. In Proceedings of the Computational Science – ICCS 2019 – 19th International Conference, Faro, Portugal, 12–14 June 2019; Proceedings, Part IV, Lecture Notes in Computer Science. Rodrigues, J.M.F., Cardoso, P.J.S., Monteiro, J.M., Lam, R., Krzhizhanovskaya, V.V., Lees, M.H., Dongarra, J.J., Sloot, P.M.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11539, pp. 84–95.

34. Wu, X.; Zhang, T.; Zang, L.; Han, J.; Hu, S. “Mask and Infill”: Applying Masked Language Model to Sentiment Transfer. arXiv 2019, arXiv:1908.08039.

35. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

36. Belchikov, A. Russian Language Toxic Comments. 2019. Available online: https://www.kaggle.com/blackmoon/russian-language-toxic-comments (accessed on 22 July 2021).

37. Semiletov, A. Toxic Russian Comments. 2020. Available online: https://www.kaggle.com/alexandersemiletov/toxic-russian-comments (accessed on 22 July 2021).

38. Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

39. Kutuzov, A.; Kuzmenko, E. WebVectors: A Toolkit for Building Web Interfaces for Vector Semantic Models. In Analysis of Images, Social Networks and Texts, Proceedings of the 5th International Conference, AIST 2016, Yekaterinburg, Russia, 7–9 April 2016; Revised Selected Papers; Springer International Publishing: Cham, Switzerland, 2017; pp. 155–161.

40. Kuratov, Y.; Arkhipov, M. Adaptation of Deep Bidirectional Multilingual Transformers for Russian Language. arXiv 2019, arXiv:1905.07213.

41. Abdaoui, A.; Pradel, C.; Sigel, G. Load What You Need: Smaller Versions of Mutililingual BERT. In Proceedings of the SustaiNLP: Workshop on Simple and Efficient Natural Language Processing, Stroudsburg, PA, USA, 11 November 2020; pp. 119–123.

42. Pang, R.Y.; Gimpel, K. Unsupervised Evaluation Metrics and Learning Criteria for Non-Parallel Textual Transfer. In Proceedings of the 3rd Workshop on Neural Generation and Translation@EMNLP-IJCNLP 2019, Hong Kong, China, 4 November 2019; Birch, A., Finch, A.M., Hayashi, H., Konstas, I., Luong, T., Neubig, G., Oda, Y., Sudoh, K., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 138–147.

43. Yamshchikov, I.P.; Shibaev, V.; Khlebnikov, N.; Tikhonov, A. Style-transfer and Paraphrase: Looking for a Sensible Semantic Similarity Metric. arXiv 2020, arXiv:2004.05001.

| Toxic Text | Detoxified Text |
|---|---|
| After all it’s hard to get a job if your stupid. | After all it’s hard to get a job if you are incompetent. |
| Go ahead ban me, i don’t give a shit. | It won’t matter to me if I get banned. |
| Well today i fucking fracking learned something. | I have learned something new today. |

| Method | STA↑ | CS↑ | WO↑ | BLEU↑ | PPL↓ | GM↑ |
|---|---|---|---|---|---|---|
| Duplicate | 0.00 | 1.00 | 1.00 | 1.00 | 146.00 | 0.05 ± 0.0012 |
| Delete | 0.27 | 0.96 | 0.85 | 0.81 | 263.55 | 0.10 ± 0.0007 |
| Retrieve | 0.91 | 0.85 | 0.07 | 0.09 | 65.74 | 0.22 ± 0.0010 |
| detoxGPT-small | | | | | | |
| zero-shot | 0.93 | 0.20 | 0.00 | 0.00 | 159.11 | 0.10 ± 0.0005 |
| few-shot | 0.17 | 0.70 | 0.05 | 0.06 | 83.38 | 0.11 ± 0.0009 |
| fine-tuned | 0.51 | 0.70 | 0.05 | 0.05 | 39.48 | 0.20 ± 0.0011 |
| detoxGPT-medium | | | | | | |
| fine-tuned | 0.49 | 0.77 | 0.18 | 0.21 | 86.75 | 0.16 ± 0.0009 |
| detoxGPT-large | | | | | | |
| fine-tuned | 0.61 | 0.77 | 0.22 | 0.21 | 36.92 | 0.23 * ± 0.0010 |
| condBERT | | | | | | |
| RuBERT zero-shot | 0.53 | 0.80 | 0.42 | 0.61 | 668.58 | 0.08 ± 0.0006 |
| RuBERT fine-tuned | 0.52 | 0.86 | 0.51 | 0.53 | 246.68 | 0.12 ± 0.0007 |
| Geotrend zero-shot | 0.62 | 0.85 | 0.54 | 0.64 | 237.46 | 0.13 ± 0.0009 |
| Geotrend fine-tuned | 0.66 | 0.86 | 0.54 | 0.64 | 209.95 | 0.14 ± 0.0009 |
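The GM column combines style accuracy, content similarity, and perplexity into one score. No formula for it survives in this copy, so the sketch below assumes a geometric mean of STA, CS, and inverse perplexity, each clipped at zero, in the spirit of Pang and Gimpel [42]. Applied to the corpus-level averages, it gives about 0.23 for the fine-tuned detoxGPT-large row, matching the table, but 0 for Duplicate, where the table reports 0.05, so the authors' exact computation may differ (for example, in how scores are averaged over sentences).

```python
def geometric_mean_score(sta: float, cs: float, ppl: float) -> float:
    """Assumed GM: cube root of STA * CS * (1 / PPL), with each factor clipped at zero."""
    factors = [max(sta, 0.0), max(cs, 0.0), max(1.0 / ppl, 0.0)]
    product = factors[0] * factors[1] * factors[2]
    return product ** (1.0 / 3.0)


print(round(geometric_mean_score(0.61, 0.77, 36.92), 2))  # 0.23 (detoxGPT-large, fine-tuned)
```

Using inverse perplexity makes the fluency factor decrease as PPL grows, which is why the high-PPL condBERT rows score low despite good content preservation.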

| Method | STA | CP | FL | # Good Samples | # Perfect Samples | ACPT |
|---|---|---|---|---|---|---|
| Delete | 0.46 | 0.86 | 0.81 | 40 (20%) | 32 (16%) | 0.14 |
| Retrieve | 0.77 | 0.08 | 0.78 | 4 (2%) | 4 (2%) | 0.01 |
| detoxGPT-large | 0.85 | 0.45 | 0.62 | 47 (23%) | 19 (9%) | 0.09 |
| condBERT RuBERT | 0.60 | 0.60 | 0.68 | 30 (15%) | 23 (11%) | 0.10 |
| condBERT Geotrend | 0.76 | 0.53 | 0.69 | 36 (18%) | 23 (11%) | 0.10 |

| Input Sentence | Detoxification Result | STA | CP | FL | ACPT |
|---|---|---|---|---|---|
| Delete | | | | | |
| тварь.нашла с чем сравнивать. (You skank. You’re comparing apples and oranges.) | тварь.нашла с чем сравнивать. (You skank. You’re comparing apples and oranges.) | 0.0 | 1.0 | 1.0 | 0.0 |
| власть уебков хуй вы остановите хабаровск стадо некчемных (the authorities are morons you won’t fucking stop Khabarovsk you pack of bastards) | власть вы остановите хабаровск стадо некчемных (the authorities are you won’t stop Khabarovsk you pack of bastards) | 0.5 | 0.5 | 0.5 | 0.0 |
| хватить людей обманывать,пиздабол. (stop deceiving people, sucker) | хватить людей обманывать (stop deceiving people) | 1.0 | 1.0 | 1.0 | 1.0 |
| Retrieve | | | | | |
| адвокат просто сказочный долбоёб. (the lawyer is just an unbelievable asshole.) | ну просто красавчик, такой милый!!!! (well, just handsome, so cute !!!!) | 1.0 | 1.0 | 0.0 | 0.0 |
| какой долбаёб такое сделал. (what an asshole did that.) | я сама делала такой керпичь. (I made such a brick myself.) | 1.0 | 0.5 | 0.0 | 0.0 |
| Что это значит, объясните черни (What does this mean, explain it to the rabble) | что это значит??? (what does this mean???) | 1.0 | 1.0 | 1.0 | 1.0 |
| detoxGPT | | | | | |
| на пидорка смахивает и в армии уверен не служил (looks like a fag and surely never served in the army) | а что в этом высказывании неправда? (and what is not true in this statement?) | 1.0 | 1.0 | 0.0 | 0.0 |
| а у нас бордак. нашим мудакам руководителям ни чего не надо. (and we have a mess. our asshole leaders don’t need anything.) | а у нас кошмар. нашим мудакам руководителям ни чего не надо.нам лучше (and we have a nightmare. our asshole managers don’t need anything. we are better) | 0.0 | 0.5 | 1.0 | 0.0 |
| какой долбаёб такое сделал. (what an asshole did that.) | Какой же недалёкий человек это сделал (What a narrow-minded person did it) | 1.0 | 1.0 | 1.0 | 0.0 |
| Пиздец у тебя чувство юмора, конечно… (What a fucking sense of humor you have) | Ужасное у вас чувство юмора, конечно (You have a terrible sense of humor) | 1.0 | 1.0 | 1.0 | 1.0 |
| condBERT RuBERT | | | | | |
| знай своё место псина хохояцкая (Ukrainian dog, know your place) | ты своё место тыка пиячка (poke a leech know your place) | 0.5 | 0.0 | 0.0 | 0.0 |
| Автор больной ублюдок радеющий за скрепы и духовность. (The author is a sick bastard who cares for bonds and spirituality.) | Автор больной пидор радеющий за скрепы и духовность. (The author is a sick fag who cares for bonds and spirituality.) | 0.0 | 1.0 | 1.0 | 0.0 |
| ебанутая лягушка домой ее срочно надоело на это все смотреть (Fucking frog home urgently tired of looking at it all) | это лягушка домой ее срочно надоело на это все смотреть (this frog is home urgently tired of looking at it all) | 1.0 | 1.0 | 1.0 | 0.0 |
| Австралийские твари доберутся до всех материков! (Australian critters will reach all continents!) | Австралийские люди доберутся до всех материков ! (Australian people will reach all continents!) | 1.0 | 1.0 | 1.0 | 1.0 |
| condBERT Geotrend | | | | | |
| сдохни тварь…извините меня админы (die creature …excuse me admins) | с т …извините меня ад (d c …excuse me ad) | 1.0 | 0.0 | 0.0 | 0.0 |
| не переведутся никогда долбоебы россии… (the fuckers of Russia will never run out …) | не переведутся никогда до сих пор … (will never run out until now. …) | 1.0 | 1.0 | 0.0 | 0.0 |
| а рефери то где, вообще мудак ! (and the referee is where, generally an asshole!) | а рефери то где, вообще не знает ! (and the referee is where, does not know at all !) | 1.0 | 1.0 | 0.5 | 1.0 |
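The Delete rows above show the baseline removing obscene words while leaving the rest of the sentence in place. A minimal sketch of such lexicon-based deletion follows, assuming a regex tokenizer and a small placeholder lexicon. `TOXIC_LEXICON` and `delete_toxic` are hypothetical names; neither the word list nor the tokenization is the authors' own.

```python
import re

# Hypothetical placeholder lexicon; the baseline's real vocabulary is not reproduced here.
TOXIC_LEXICON = frozenset({"idiot", "stupid", "moron"})

# A token is a run of word characters (Unicode-aware, so Cyrillic works) or one punctuation mark.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def delete_toxic(text: str, lexicon: frozenset[str] = TOXIC_LEXICON) -> str:
    """Drop every token whose lowercase form is in the lexicon and keep everything else."""
    kept = [tok for tok in TOKEN_RE.findall(text) if tok.lower() not in lexicon]
    # Naive detokenization: join with spaces, then remove the space before punctuation.
    return re.sub(r"\s+([^\w\s])", r"\1", " ".join(kept))


if __name__ == "__main__":
    print(delete_toxic("you are a stupid person"))
    print(delete_toxic("Go away, idiot!"))
```

The second call returns "Go away,!": deleting a word can leave stranded punctuation, which is one plausible reason why deletion-based outputs read less fluently than rewritten ones.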

| Source | Text |
|---|---|
| Input | Давайте называть вещи явления своими именами…пидор, просто пидор! (Let’s call things of the phenomenon by their proper names …fag, just fag!) |
| Delete | Давайте называть вещи явления своими именами просто (Let’s call things of the phenomenon by their proper names) |
| Retrieve | не смешите людей! давайте называть вещи своими именами. (do not make people laugh! call things by their proper names.) |
| Input | мразь! нет слов, чтоб выразить свое презрение к этому ублюдку. (scum! there are no words to express my contempt for this bastard.) |
| detoxGPT | ужасная женщина! нет слов, чтоб выразить свое презрение к этому человеку (awful woman! there are no words to express my contempt for this person) |
| condBERT RuBERT | спасибо ! нет слов, что выразить свое презрение к этому человеку. (thank you ! there are no words to express my contempt for this person.) |
| Input | а сколько ещё таких мразей, как он, по стране? (how many more scum like him in the country?) |
| Delete | сколько ещё таких как он по стране? (how many more like him in the country?) |
| detoxGPT | сколько ещё таких людей по стране? (how many more of these people are there in the country?) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dementieva, D.; Moskovskiy, D.; Logacheva, V.; Dale, D.; Kozlova, O.; Semenov, N.; Panchenko, A. Methods for Detoxification of Texts for the Russian Language. Multimodal Technol. Interact. 2021, 5, 54. https://doi.org/10.3390/mti5090054

Dementieva D, Moskovskiy D, Logacheva V, Dale D, Kozlova O, Semenov N, Panchenko A. Methods for Detoxification of Texts for the Russian Language. Multimodal Technologies and Interaction. 2021; 5(9):54. https://doi.org/10.3390/mti5090054

Chicago/Turabian Style: Dementieva, Daryna, Daniil Moskovskiy, Varvara Logacheva, David Dale, Olga Kozlova, Nikita Semenov, and Alexander Panchenko. 2021. "Methods for Detoxification of Texts for the Russian Language" Multimodal Technologies and Interaction 5, no. 9: 54. https://doi.org/10.3390/mti5090054

APA Style: Dementieva, D., Moskovskiy, D., Logacheva, V., Dale, D., Kozlova, O., Semenov, N., & Panchenko, A. (2021). Methods for Detoxification of Texts for the Russian Language. Multimodal Technologies and Interaction, 5(9), 54. https://doi.org/10.3390/mti5090054