Interactive Visualizations of Transparent User Models for Self-Actualization: A Human-Centered Design Approach

Abstract

1. Introduction

2. Transparent User Modeling

2.1. Adaptive Systems

2.2. Intelligent Tutoring Systems

2.3. Other Educational Systems

2.4. Explainable Recommender Systems

3. Self-Actualization

3.1. Psychology Perspective

3.2. Computer Science Perspective

4. A Framework for Self-Actualization Goals of Transparent User Modeling

4.1. Method

4.2. Results

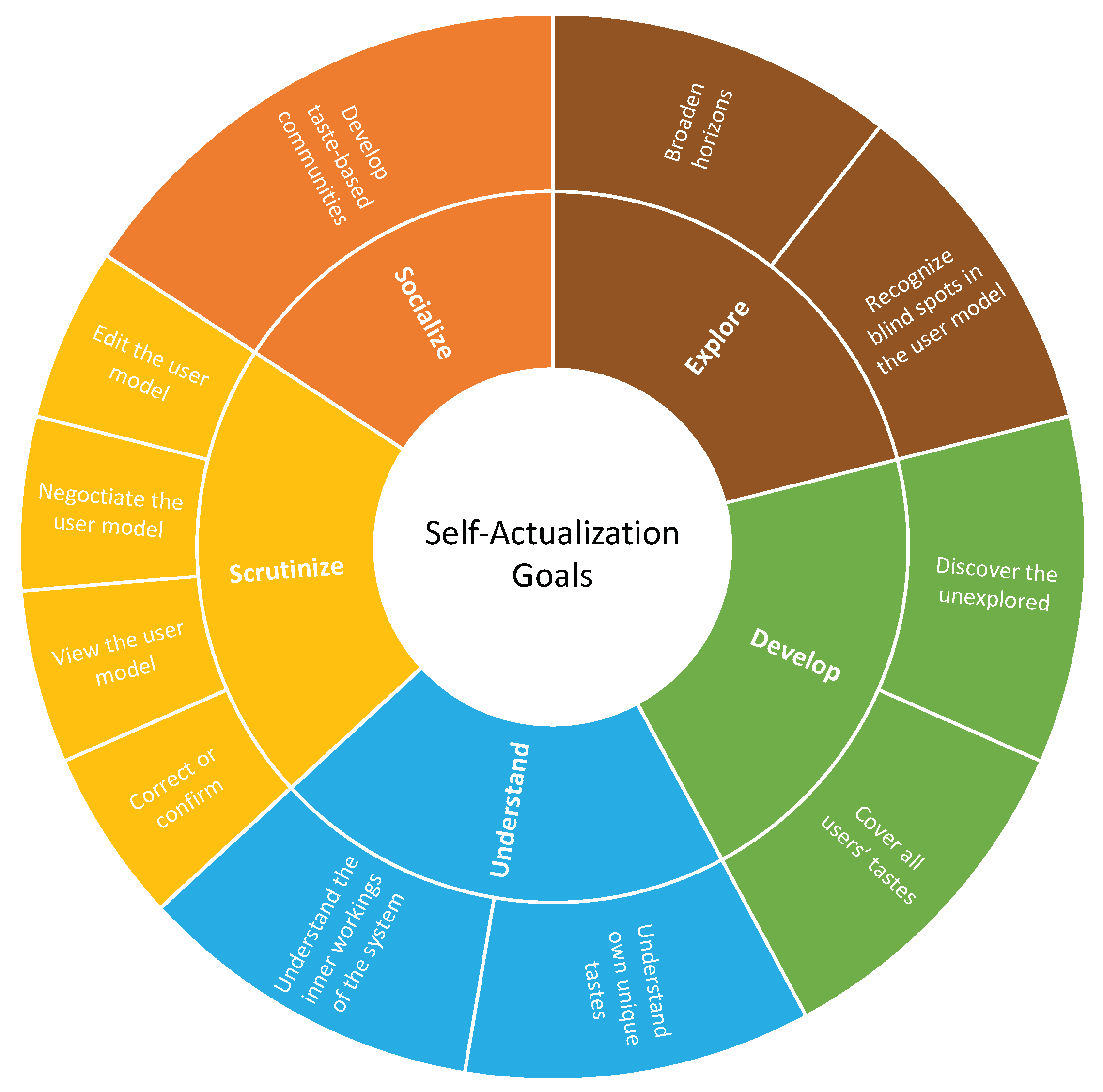

4.2.1. Self-Actualization Goals

4.2.2. Self-Actualization Mechanisms

4.3. The EDUSS Framework

5. EDUSS Framework in Action

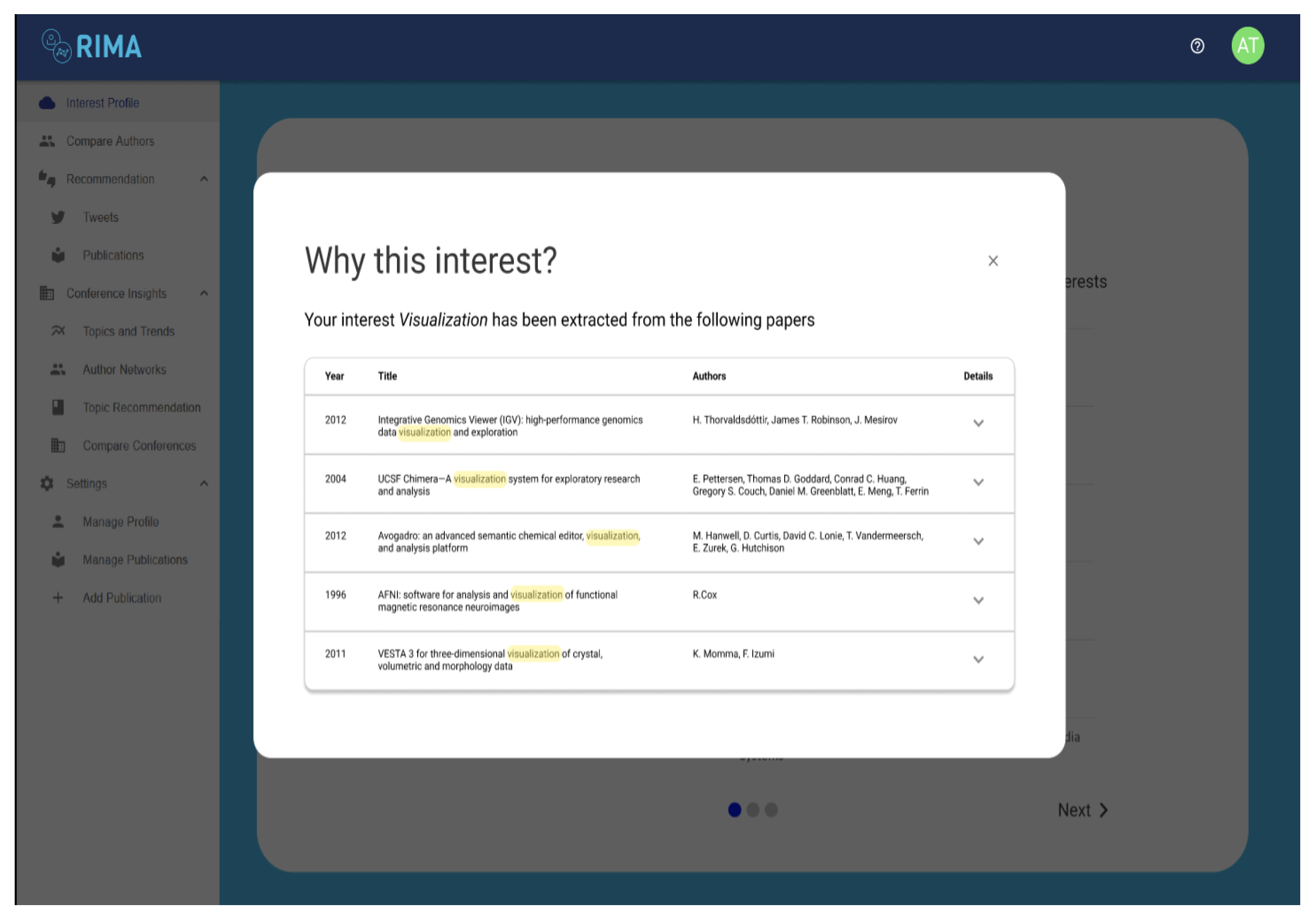

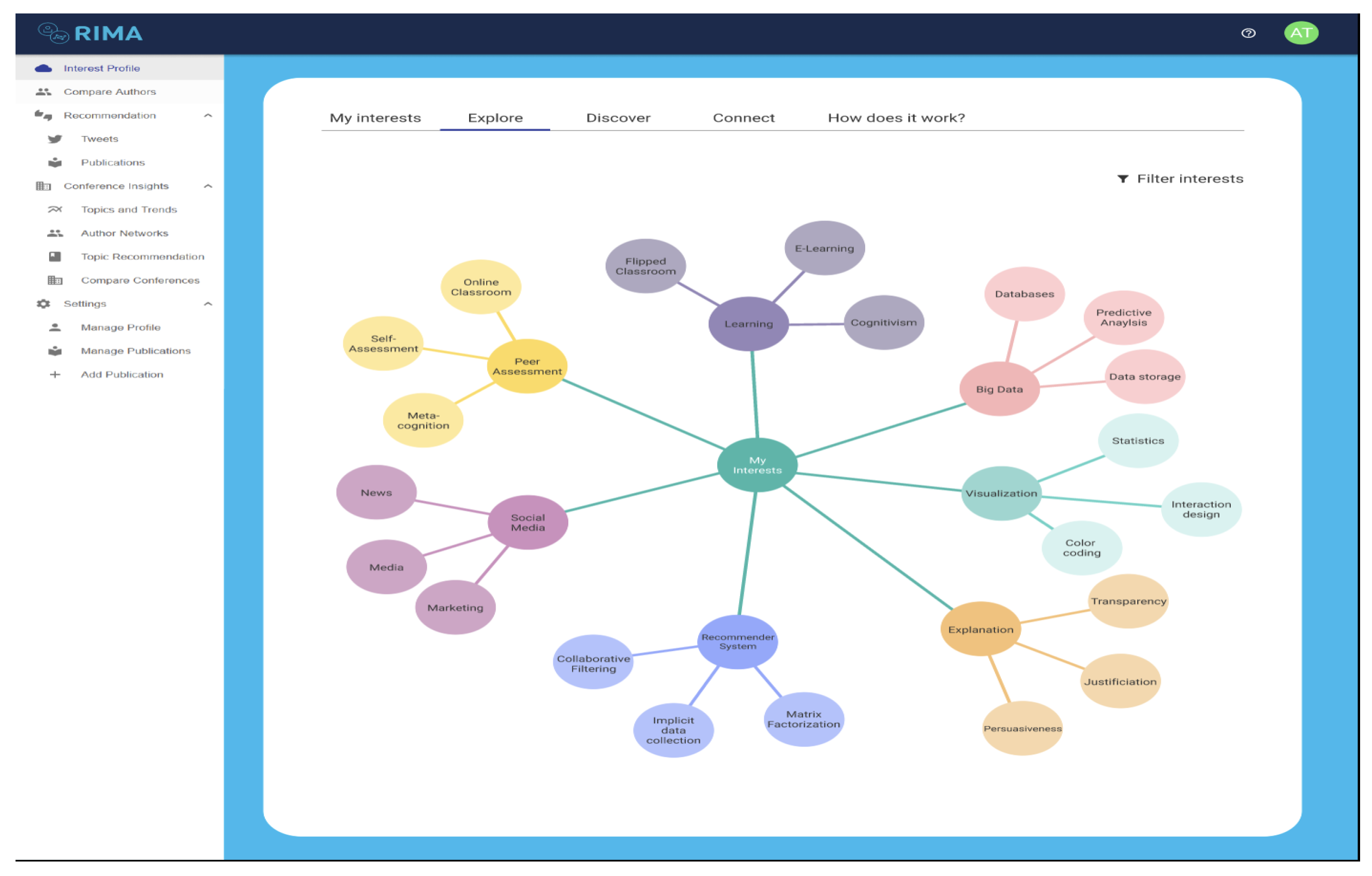

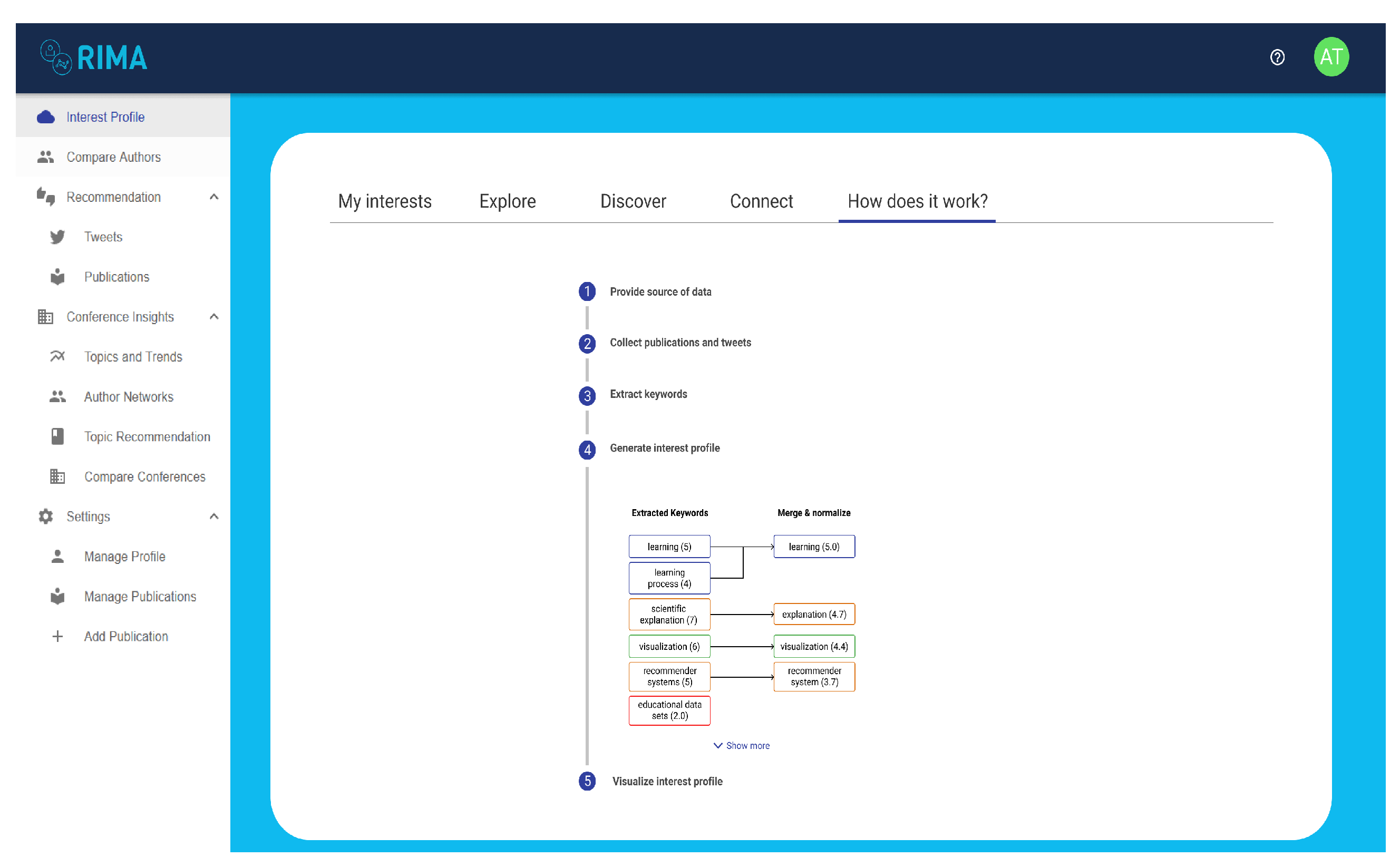

5.1. User Interest Model Generation

5.2. Human-Centered Design

5.3. Designing Visualizations for Self-Actualization

5.3.1. Participants

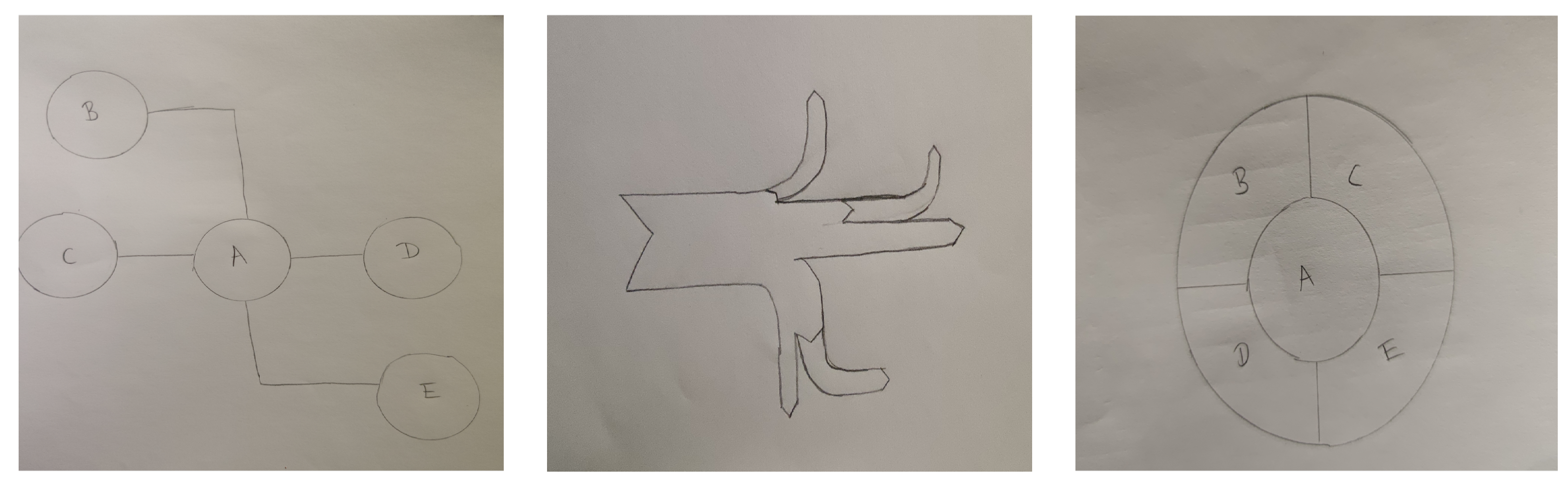

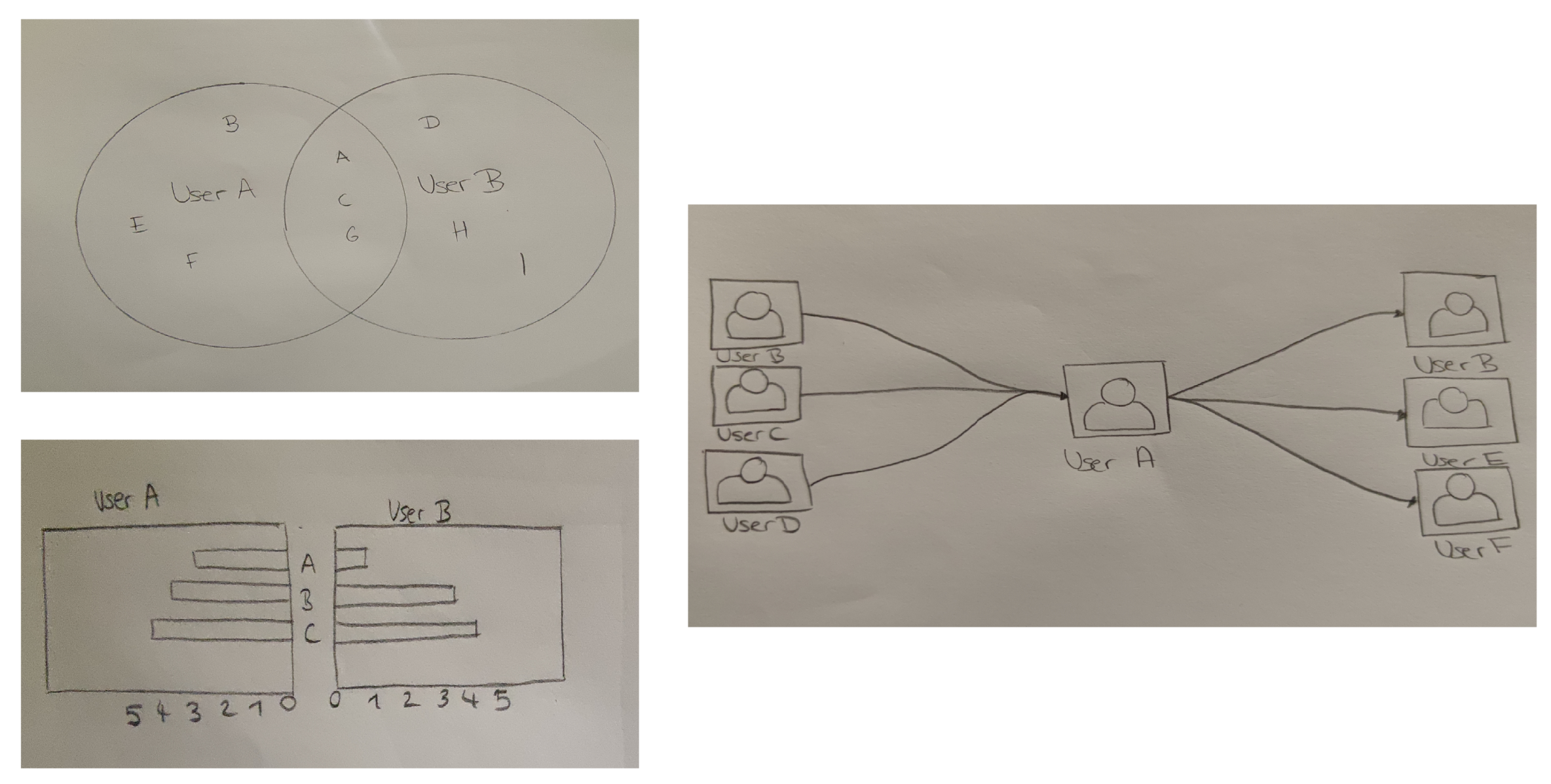

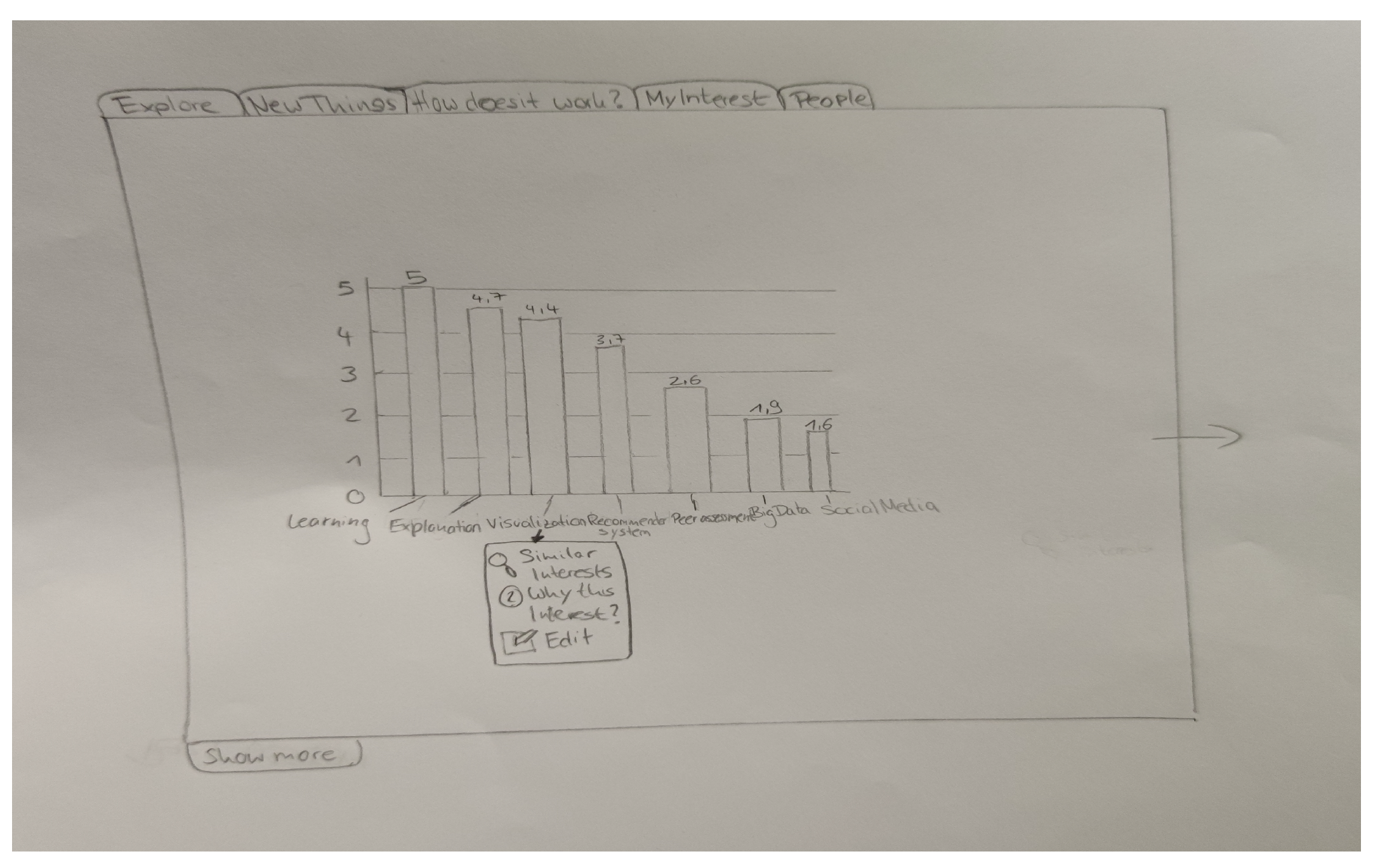

5.3.2. First Iteration

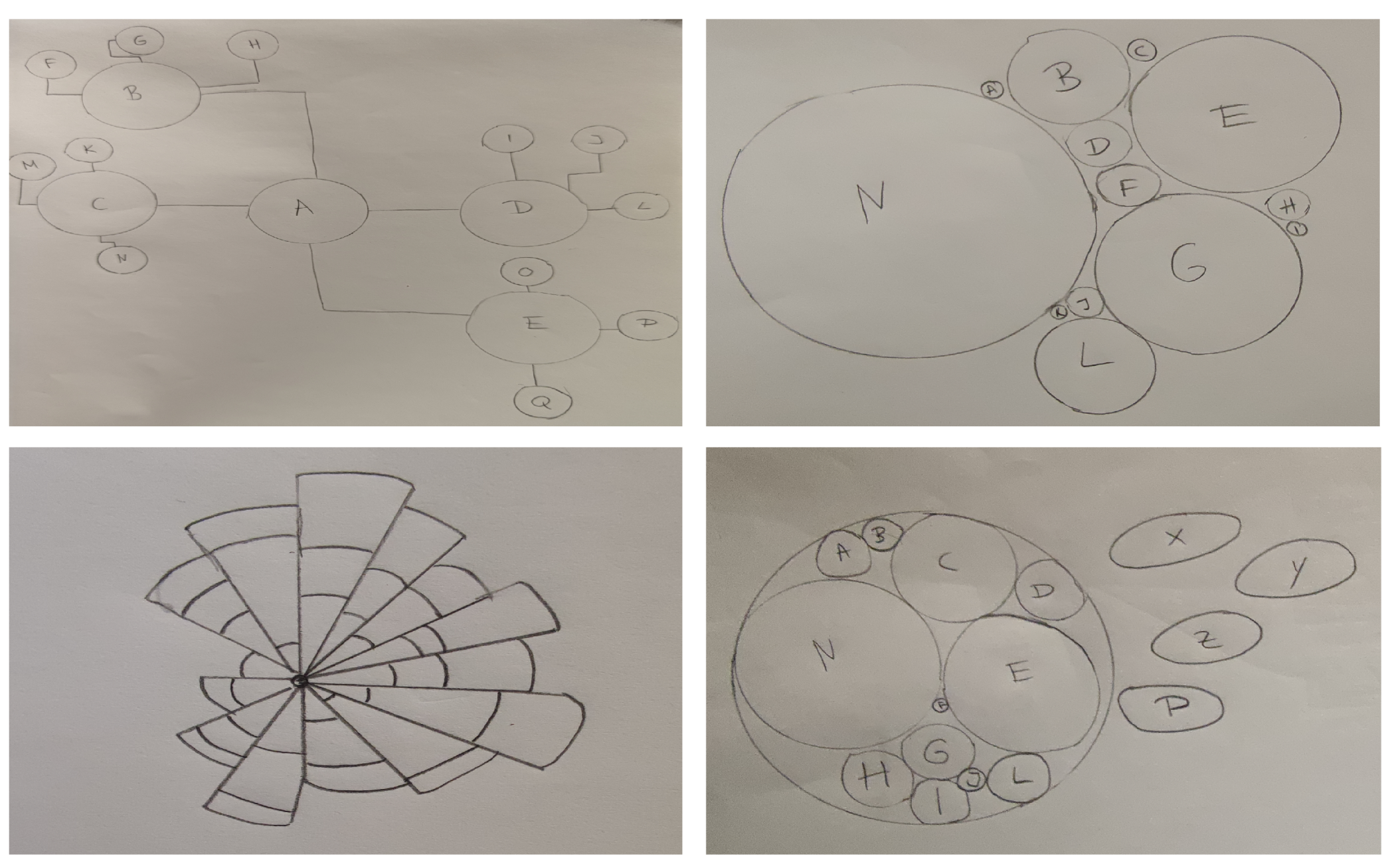

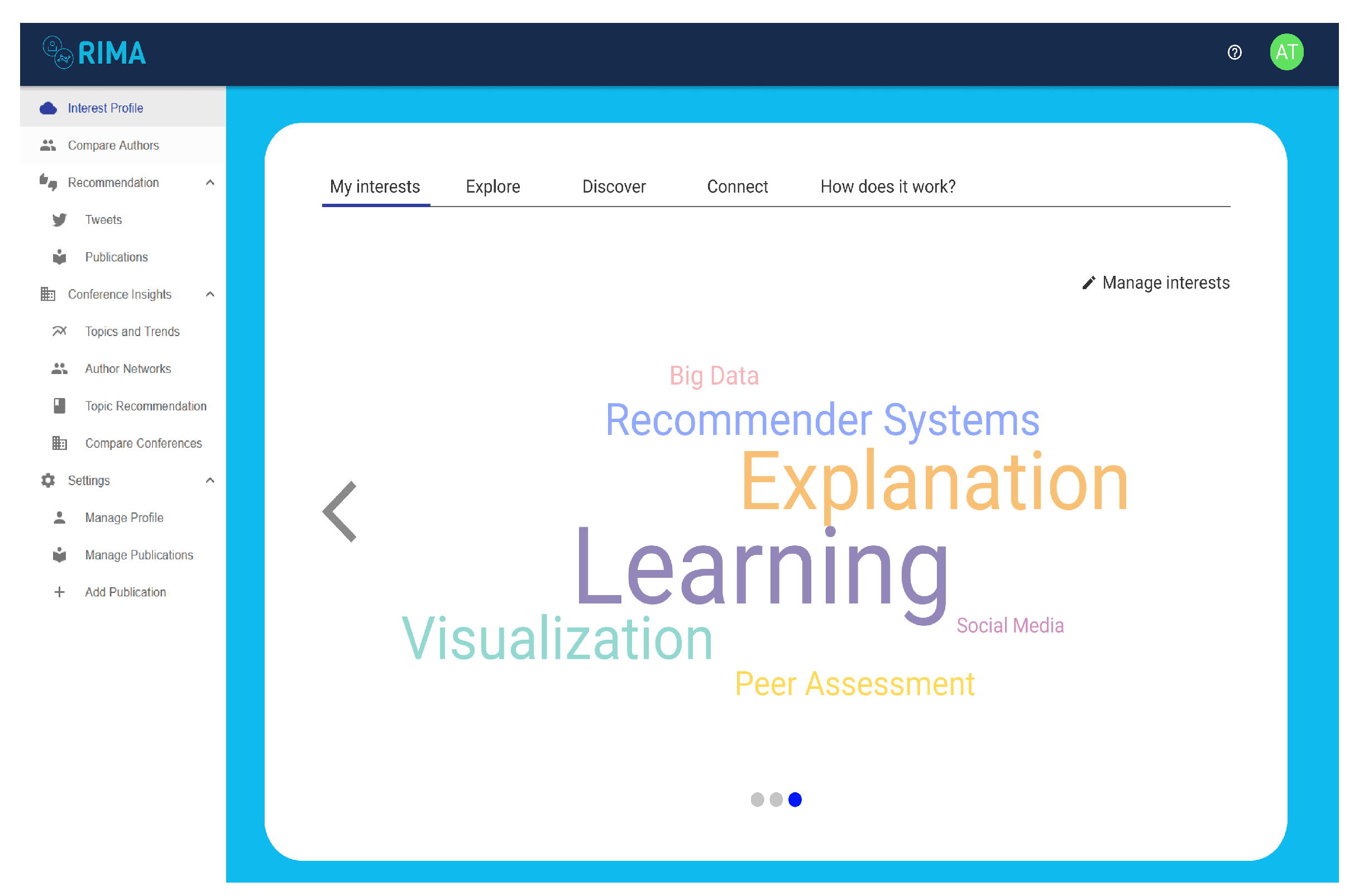

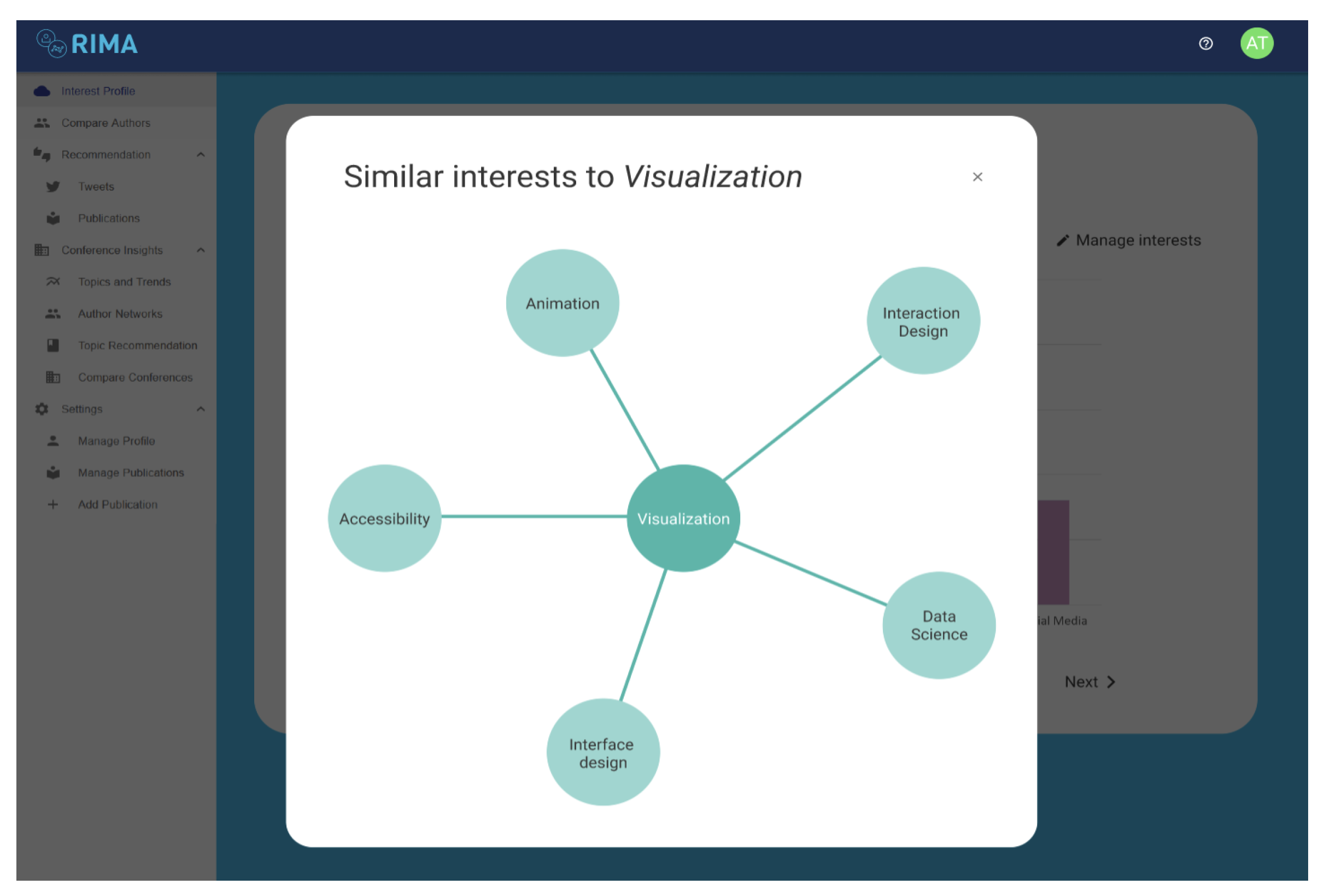

5.3.3. Second Iteration

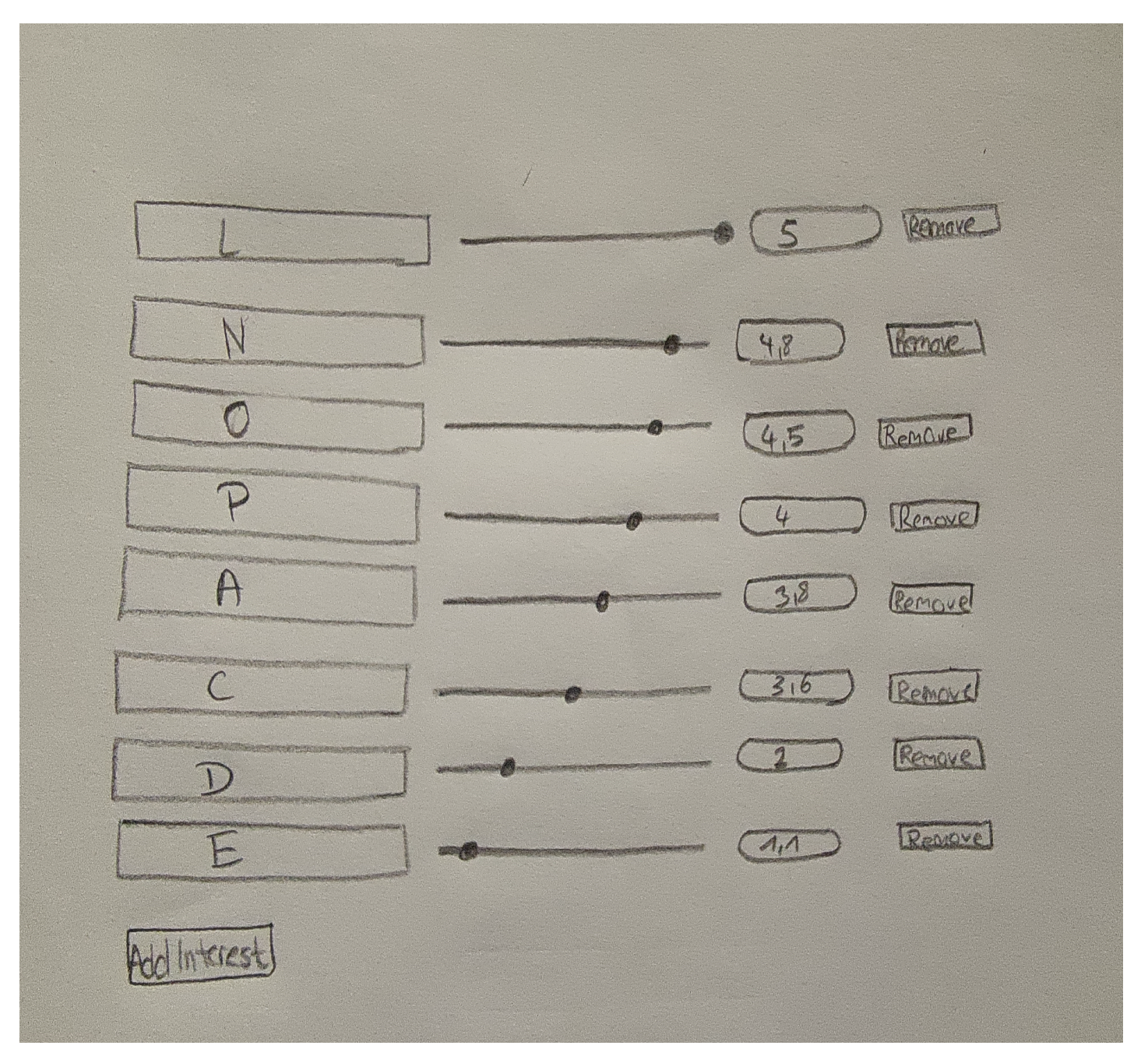

5.3.4. Third Iteration

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bull, S.; Kay, J. SMILI: A framework for interfaces to learning data in open learner models, learning analytics and related fields. Int. J. Artif. Intell. Educ. 2016, 26, 293–331.

2. Tintarev, N.; Masthoff, J. Explaining recommendations: Design and evaluation. In Recommender Systems Handbook; Springer: New York, NY, USA, 2015; pp. 353–382.

3. Wasinger, R.; Wallbank, J.; Pizzato, L.; Kay, J.; Kummerfeld, B.; Böhmer, M.; Krüger, A. Scrutable user models and personalised item recommendation in mobile lifestyle applications. In International Conference on User Modeling, Adaptation, and Personalization; Springer: Berlin/Heidelberg, Germany, 2013; pp. 77–88.

4. Knijnenburg, B.P.; Sivakumar, S.; Wilkinson, D. Recommender systems for self-actualization. In Proceedings of the 10th ACM Conference on Recommender Systems, Boston, MA, USA, 15–19 September 2016; pp. 11–14.

5. Wilkinson, D. Testing a Recommender System for Self-Actualization. In Proceedings of the 12th ACM Conference on Recommender Systems, Vancouver, BC, Canada, 2–7 October 2018.

6. Graus, D.; Sappelli, M.; Manh Chu, D. “Let me tell you who you are” - Explaining recommender systems by opening black box user profiles. In Proceedings of the 2nd FATREC Workshop on Responsible Recommendation, Vancouver, BC, Canada, 2–7 October 2018.

7. Wasinger, R.; Fry, M.; Kay, J.; Kummerfeld, B. User modelling ecosystems: A user-centred approach. In International Conference on User Modeling, Adaptation, and Personalization; Springer: Berlin/Heidelberg, Germany, 2012; pp. 334–339.

8. Ahn, J.W.; Brusilovsky, P.; Grady, J.; He, D.; Syn, S.Y. Open user profiles for adaptive news systems: Help or harm? In Proceedings of the 16th International Conference on World Wide Web, Banff, AB, Canada, 8–12 May 2007; pp. 11–20.

9. Rahdari, B.; Brusilovsky, P.; Babichenko, D. Personalizing information exploration with an open user model. In Proceedings of the 31st ACM Conference on Hypertext and Social Media, Orlando, FL, USA, 13–15 July 2020; pp. 167–176.

10. Conati, C.; Porayska-Pomsta, K.; Mavrikis, M. AI in Education needs interpretable machine learning: Lessons from Open Learner Modelling. arXiv 2018, arXiv:1807.00154.

11. Bull, S.; Kay, J. Open learner models. In Advances in Intelligent Tutoring Systems; Springer: Berlin/Heidelberg, Germany, 2010; pp. 301–322.

12. Basu, S.; Biswas, G.; Kinnebrew, J.S. Learner modeling for adaptive scaffolding in a computational thinking-based science learning environment. User Model. User-Adapt. Interact. 2017, 27, 5–53.

13. Putnam, V.; Conati, C. Exploring the Need for Explainable Artificial Intelligence (XAI) in Intelligent Tutoring Systems (ITS). In Proceedings of the Joint ACM IUI 2019 Workshops, Los Angeles, CA, USA, 20 March 2019; Volume 19, pp. 1–7.

14. Conati, C.; Barral, O.; Putnam, V.; Rieger, L. Toward personalized XAI: A case study in intelligent tutoring systems. Artif. Intell. 2021, 298, 103503.

15. Li, S.; Zhao, H. A Survey on Representation Learning for User Modeling. In Proceedings of the International Joint Conference on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 4997–5003.

16. Hocine, N. Personalized serious games for self-regulated attention training. In Proceedings of the Adjunct Publication of the 27th Conference on User Modeling, Adaptation and Personalization, Larnaca, Cyprus, 9–12 June 2019; pp. 251–255.

17. Hooshyar, D.; Bardone, E.; Mawas, N.E.; Yang, Y. Transparent Player Model: Adaptive Visualization of Learner Model in Educational Games. In International Conference on Innovative Technologies and Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 349–357.

18. Abdi, S.; Khosravi, H.; Sadiq, S.; Gasevic, D. Complementing educational recommender systems with open learner models. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge, Frankfurt, Germany, 23–27 March 2020; pp. 360–365.

19. Bodily, R.; Kay, J.; Aleven, V.; Jivet, I.; Davis, D.; Xhakaj, F.; Verbert, K. Open learner models and learning analytics dashboards: A systematic review. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge, Sydney, Australia, 7–9 March 2018; pp. 41–50.

20. Bull, S.; Ginon, B.; Boscolo, C.; Johnson, M. Introduction of learning visualisations and metacognitive support in a persuadable open learner model. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; pp. 30–39.

21. Barria Pineda, J.; Brusilovsky, P. Making educational recommendations transparent through a fine-grained open learner model. In Proceedings of the Workshop on Intelligent User Interfaces for Algorithmic Transparency in Emerging Technologies at the 24th ACM Conference on Intelligent User Interfaces, IUI 2019, Los Angeles, CA, USA, 20 March 2019; Volume 2327.

22. Barria-Pineda, J.; Brusilovsky, P. Explaining educational recommendations through a concept-level knowledge visualization. In Proceedings of the 24th International Conference on Intelligent User Interfaces: Companion, Marina del Rey, CA, USA, 17–20 March 2019; pp. 103–104.

23. Ain, Q.U.; Chatti, M.A.; Guesmi, M.; Joarder, S. A Multi-Dimensional Conceptualization Framework for Personalized Explanations in Recommender Systems. In Proceedings of the Joint 27th International Conference on Intelligent User Interfaces, Helsinki, Finland, 22–25 March 2022.

24. Guesmi, M.; Chatti, M.; Vorgerd, L. Input or Output: Effects of Explanation Focus on the Perception of Explainable Recommendation with Varying Level of Details. In Proceedings of the IntRS’21: Joint Workshop on Interfaces and Human Decision Making for Recommender Systems, Virtual Event, 25 September 2021; Volume 2948, pp. 55–72.

25. Balog, K.; Radlinski, F.; Arakelyan, S. Transparent, scrutable and explainable user models for personalized recommendation. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 265–274.

26. Guesmi, M.; Chatti, M.; Sun, Y.; Zumor, S.; Ji, F.; Muslim, A.; Vorgerd, L.; Joarder, S. Open, scrutable and explainable interest models for transparent recommendation. In Joint Proceedings of the ACM IUI 2021 Workshops, College Station, TX, USA, 13–17 April 2021.

27. Sullivan, E.; Bountouridis, D.; Harambam, J.; Najafian, S.; Loecherbach, F.; Makhortykh, M.; Kelen, D.; Wilkinson, D.; Graus, D.; Tintarev, N. Reading news with a purpose: Explaining user profiles for self-actualization. In Proceedings of the Adjunct Publication of the 27th Conference on User Modeling, Adaptation and Personalization, Larnaca, Cyprus, 9–12 June 2019; pp. 241–245.

28. Tintarev, N.; Masthoff, J. Designing and evaluating explanations for recommender systems. In Recommender Systems Handbook; Springer: New York, NY, USA, 2011; pp. 479–510.

29. Badenes, H.; Bengualid, M.N.; Chen, J.; Gou, L.; Haber, E.; Mahmud, J.; Nichols, J.W.; Pal, A.; Schoudt, J.; Smith, B.A.; et al. System U: Automatically deriving personality traits from social media for people recommendation. In Proceedings of the 8th ACM Conference on Recommender Systems, Silicon Valley, CA, USA, 6–10 October 2014; pp. 373–374.

30. Du, F.; Plaisant, C.; Spring, N.; Shneiderman, B. Visual interfaces for recommendation systems: Finding similar and dissimilar peers. ACM Trans. Intell. Syst. Technol. (TIST) 2018, 10, 1–23.

31. Barria-Pineda, J.; Akhuseyinoglu, K.; Brusilovsky, P. Explaining need-based educational recommendations using interactive open learner models. In Proceedings of the Adjunct Publication of the 27th Conference on User Modeling, Adaptation and Personalization, Larnaca, Cyprus, 9–12 June 2019; pp. 273–277.

32. Green, S.J.; Lamere, P.; Alexander, J.; Maillet, F.; Kirk, S.; Holt, J.; Bourque, J.; Mak, X.W. Generating transparent, steerable recommendations from textual descriptions of items. In Proceedings of the Third ACM Conference on Recommender Systems, New York, NY, USA, 23–25 October 2009; pp. 281–284.

33. Bakalov, F.; Meurs, M.J.; König-Ries, B.; Sateli, B.; Witte, R.; Butler, G.; Tsang, A. An approach to controlling user models and personalization effects in recommender systems. In Proceedings of the 2013 International Conference on Intelligent User Interfaces, Santa Monica, CA, USA, 19–22 March 2013; pp. 49–56.

34. Jin, Y.; Seipp, K.; Duval, E.; Verbert, K. Go with the flow: Effects of transparency and user control on targeted advertising using flow charts. In Proceedings of the International Working Conference on Advanced Visual Interfaces, Bari, Italy, 7–10 June 2016; pp. 68–75.

35. Du, F.; Malik, S.; Theocharous, G.; Koh, E. Personalizable and interactive sequence recommender system. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–6.

36. Zürn, M.; Eiband, M.; Buschek, D. What if? Interaction with Recommendations. In Proceedings of the IUI Workshop on Explainable Smart Systems and Algorithmic Transparency in Emerging Technologies, Cagliari, Italy, 17–20 March 2020.

37. Rahdari, B.; Brusilovsky, P.; Javadian Sabet, A. Connecting Students with Research Advisors Through User-Controlled Recommendation. In Proceedings of the Fifteenth ACM Conference on Recommender Systems, Virtual Event, 27 September–1 October 2021; pp. 745–748.

38. Liang, Y.; Willemsen, M.C. Interactive Music Genre Exploration with Visualization and Mood Control. In Proceedings of the 26th International Conference on Intelligent User Interfaces, College Station, TX, USA, 14–17 April 2021; pp. 175–185.

39. Huang, X.; Fang, Q.; Qian, S.; Sang, J.; Li, Y.; Xu, C. Explainable interaction-driven user modeling over knowledge graph for sequential recommendation. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 548–556.

40. Guesmi, M.; Chatti, M.A.; Vorgerd, L.; Joarder, S.; Zumor, S.; Sun, Y.; Ji, F.; Muslim, A. On-demand Personalized Explanation for Transparent Recommendation. In Adjunct Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization, Utrecht, The Netherlands, 21–25 June 2021; pp. 246–252.

41. Goldstein, K. Human Nature in the Light of Psychopathology; Harvard University Press: Cambridge, MA, USA, 1940.

42. Rogers, C.R. Client-Centered Therapy; Houghton-Mifflin: Boston, MA, USA, 1951; Volume 7.

43. Maslow, A.H. A theory of human motivation. Psychol. Rev. 1943, 50, 370.

44. Rogers, C.R. The Actualizing Tendency in Relation to “motives” and to consciousness. In Nebraska Symposium on Motivation; Jones, M.R., Ed.; U. Nebraska Press: Lincoln, NE, USA, 1963; pp. 1–24.

45. Maslow, A.H. Toward a Psychology of Being; D. van Nostrand Company: Princeton, NJ, USA, 1962.

46. Maslow, A.H. Motivation and Personality; Harper and Row; Longman an Imprint of Addison Wesley Longman, Inc.: New York, NY, USA, 1954.

47. Hanlon, J.M. Administration and Education: Toward a Theory of Self-Actualization; Wadsworth Publishing Company: Belmont, CA, USA, 1968.

48. Dezhbankhan, F.; Baranovich, D.L.; Abedalaziz, N. Impacts of Direct Metacognitive Instructions on Self-Actualization. Int. Educ. Stud. 2020, 13, 1–9.

49. Akçay, C.; Akyol, B. Self-actualization levels of participants in lifelong education centers. Procedia-Soc. Behav. Sci. 2014, 116, 1577–1580.

50. Amir Kiaei, Y. The Relationship between Metacognition, Self-Actualization, and Well-Being among University Students: Reviving Self-Actualization as the Purpose of Education; Florida International University Electronic Theses and Dissertations. 1367; Florida International University: Miami, FL, USA, 2014.

51. Sperling, R.A.; Howard, B.C.; Staley, R.; DuBois, N. Metacognition and self-regulated learning constructs. Educ. Res. Eval. 2004, 10, 117–139.

52. Shipunova, O.D.; Berezovskaya, I.P.; Smolskaia, N.B. The role of student’s self-actualization in adapting to the e-learning environment. In Proceedings of the Seventh International Conference on Technological Ecosystems for Enhancing Multiculturality, León, Spain, 16–18 October 2019; pp. 745–750.

53. Guo, L. Beyond the top-N: Algorithms that generate recommendations for self-actualization. In Proceedings of the 12th ACM Conference on Recommender Systems, Vancouver, BC, Canada, 2 October 2018; pp. 573–577.

54. Harambam, J.; Bountouridis, D.; Makhortykh, M.; Van Hoboken, J. Designing for the better by taking users into account: A qualitative evaluation of user control mechanisms in (news) recommender systems. In Proceedings of the 13th ACM Conference on Recommender Systems, Copenhagen, Denmark, 16–20 September 2019; pp. 69–77.

55. Liang, Y. Recommender system for developing new preferences and goals. In Proceedings of the 13th ACM Conference on Recommender Systems, Copenhagen, Denmark, 16–20 September 2019; pp. 611–615.

56. Kay, J. Lifelong learner modeling for lifelong personalized pervasive learning. IEEE Trans. Learn. Technol. 2008, 1, 215–228.

57. Liang, Y.; Willemsen, M.C. Personalized recommendations for music genre exploration. In Proceedings of the 27th ACM Conference on User Modeling, Adaptation and Personalization, Larnaca, Cyprus, 9–12 June 2019; pp. 276–284.

58. Kumar, J.; Tintarev, N. Using Visualizations to Encourage Blind-Spot Exploration. In Proceedings of the Joint Workshop on Interfaces and Human Decision Making for Recommender Systems, Vancouver, BC, Canada, 2–7 October 2018; pp. 53–60.

59. Tintarev, N.; Rostami, S.; Smyth, B. Knowing the unknown: Visualising consumption blind-spots in recommender systems. In Proceedings of the 33rd Annual ACM Symposium on Applied Computing, Pau, France, 9–13 April 2018; pp. 1396–1399.

60. Kunkel, J.; Schwenger, C.; Ziegler, J. Newsviz: Depicting and controlling preference profiles using interactive treemaps in news recommender systems. In Proceedings of the 28th ACM Conference on User Modeling, Adaptation and Personalization, Genoa, Italy, 14–17 July 2020; pp. 126–135.

61. Bull, S.; Al-Shanfari, L. Negotiating individual learner models in contexts of peer assessment and group learning. In Proceedings of the Workshops at the 17th International Conference on Artificial Intelligence in Education AIED 2015, Madrid, Spain, 22–26 June 2015; Volume 1432, pp. 1–6.

62. Maslow, A.H. Self-Actualization; Big Sur Recordings: Tiburon, CA, USA, 1971.

63. Norman, D. The Design of Everyday Things: Revised and Expanded Edition; Basic Books; A Member of the Perseus Books Group: New York, NY, USA, 2013.

64. Chatti, M.A.; Ji, F.; Guesmi, M.; Muslim, A.; Singh, R.K.; Joarder, S.A. SIMT: A Semantic Interest Modeling Toolkit. In Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization, Utrecht, The Netherlands, 21–25 June 2021; pp. 75–78.

65. Harte, R.; Quinlan, L.R.; Glynn, L.; Rodríguez-Molinero, A.; Baker, P.M.; Scharf, T.; ÓLaighin, G. Human-centered design study: Enhancing the usability of a mobile phone app in an integrated falls risk detection system for use by older adult users. JMIR MHealth UHealth 2017, 5, e7046.

66. Abras, C.; Maloney-Krichmar, D.; Preece, J. User-centered design. In Encyclopedia of Human-Computer Interaction; Bainbridge, W., Ed.; Sage Publisher: Thousand Oaks, CA, USA, 2004; Volume 37, pp. 445–456.

67. Petrelli, D.; Not, E. User-centred design of flexible hypermedia for a mobile guide: Reflections on the HyperAudio experience. User Model. User-Adapt. Interact. 2005, 15, 303–338.

68. Why You Only Need to Test with 5 Users. Available online: https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/ (accessed on 20 May 2022).

| Goal | Description | References |
|---|---|---|
| Explore | Explore one’s own tastes. | [4] |
| | Explore underdeveloped tastes. | [4] |
| | Cover unexplored potential preferences. | [53] |
| | Explore tastes that go beyond the mainstream. | [53] |
| | Explore one’s unique personal preferences. | [6] |
| | Explore beyond their known interests. | [54] |
| | Explore new items that might be interesting and further build new preferences. | [55] |
| | Explore new tastes. | [38] |
| | Enable the learner to explore their learner model. | [56] |
| Develop | Develop new preferences. | [38,55,57] |
| | Develop deep learning and meta-cognitive approaches to learning. | [1] |
| | Develop one’s unique tastes and preferences. | [4,5,6,53] |
| | The propensity of an individual to become actualized in his potential. | [41] |
| | The tendency of an organism to develop its abilities in order to preserve and develop its personality. | [44] |
| | Self-actualization can be described as the complete realization of one’s potential ... which involve the full development of one’s abilities and appreciation for life. | [45] |
| Broaden horizons | Make small steps outside of current interests. | [27] |
| | Offering “news from unexplored territories”, inspired by the notion of diversity, helps readers expand their horizons. | [54] |
| Discover the unexplored | Urge users to explore topics that are largely outside of their current interests. | [27] |
| | Offer “surprising news”, inspired by the notion of serendipity, by generating a random order of items. | [54] |
| Recognize blind spots in the user model | Encourage users to identify their blind spots through visualizations. | [58,59] |
| | Make users aware of blind spots in their profile. | [60] |
| | Present users with their filter bubbles (blind spots) to encourage them to explore new items. | [55] |
| Cover all users’ tastes | Help the user discover all of their preferences. | [4] |
| | Discover new and unknown areas of one’s own taste. | [4] |
| | Get a more holistic representation of the user’s tastes. | [53] |
| | The system is able to cover all of the user’s tastes. | [5] |
| Understand own unique tastes | Support users in understanding their unique tastes and preferences. | [4,5,53] |
| | Offering “news from the other ideological side”, inspired by the notion of intellectual diplomacy, helps people understand their ideological counterparts. | [54] |
| Understand the inner workings of the system | When users feel educated about the algorithmic workings of a recommender system (RS), they can be more motivated to explore items beyond their usual interests. | [60] |
| | The system will explain why it believes its current assessment to be correct by providing evidence to support these beliefs. | [10] |
| | By exposing the user profile we can support users in better understanding part of the underlying mechanisms of the recommender system. | [6] |
| Correct or confirm | Presenting users with a list of things the system predicts the user will hate will allow users to correct or confirm these predictions. | [4,5] |
| | Providing users with the ability to correct their profiles when they disagree with (parts of) them. | [1,6,61] |
| | Show recommendations with a low predicted rating, allowing users to correct potential “false negatives”. | [53] |
| | The individual’s self-concept is maintained and enhanced via reflection and the reinterpretation of various experiences which enable the individual to recover, change and develop. | [42] |
| View the user model | Users may view the model’s current evaluation of the student’s relevant states and abilities. | [1,10,61] |
| Negotiate the user model | The user and the system work together to arrive at an assessment. | [1,5,10] |
| Edit the user model | The user can directly change the model assessment and the system’s representation of their knowledge at will. | [1,3,10,32] |
| Develop taste-based communities | Users actively recommend items to other users, which can contribute to a sense of fulfilment (helping others) and pride (being called upon for expertise). | [4] |
| | Recommend groups of users to come together and develop “taste-based communities” that are based on shared preferences. | [4] |

| Mechanism | Description | References |
|---|---|---|
| Increase diversity gradually | Do not connect users to completely unrelated items; instead, gradually increase diversity so that they slowly move out of their epistemic bubble. | [27] |
| Visualize underdeveloped parts of a user model | Visualizing under-explored parts of a user profile improves exploration. | [27] |
| Connect users based on shared preferences | Recommend groups of users to come together and develop “taste-based communities” that are based on shared preferences, e.g., regarding certain controversial items. | [4] |
| Explore under-represented preferences | Focus on exploring underdeveloped tastes, rather than optimizing the probability that users will like the recommendations. | [4,53] |
| Detect not just some but all of the user’s preferences | Show a list of hard-to-predict items that may be used to identify unexpressed preferences. | [4] |
| Try new things | Recommenders can help users to better understand their own tastes, because developing one’s tastes means trying new things, even if this includes things that one may not like. | [4,5] |
| Access the user model with varying levels of interactivity | Allow users to access their content with varying levels of interactivity. | [1,10] |
| Explain the user model (i.e., the system’s input) | Summarize and visualize the high-dimensional internal representations of users (i.e., user profiles) in such a way that users can interpret them and take action on them. | [6,27] |
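
As a rough illustration of the “Increase diversity gradually” mechanism listed in the table above, the following sketch re-ranks candidate items by blending predicted relevance with distance from the user’s current interests, with the blend weight growing from session to session. Everything in it is a hypothetical assumption made for illustration (the `Item` fields, the `diversity_weight` function, the linear schedule, and the `step` and `cap` values); the surveyed literature does not prescribe this formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    name: str
    relevance: float  # hypothetical predicted fit to the current user model, in [0, 1]
    distance: float   # hypothetical dissimilarity from current interests, in [0, 1]


def diversity_weight(session: int, step: float = 0.1, cap: float = 0.5) -> float:
    """Assumed linear schedule: the weight grows by `step` per session, up to `cap`."""
    return min(cap, step * session)


def rerank(items: list[Item], session: int) -> list[Item]:
    """Sort by a blend of relevance and distance; later sessions weight distance more."""
    w = diversity_weight(session)
    return sorted(
        items,
        key=lambda it: (1 - w) * it.relevance + w * it.distance,
        reverse=True,
    )


items = [
    Item("familiar", relevance=0.9, distance=0.1),
    Item("adjacent", relevance=0.6, distance=0.5),
    Item("far", relevance=0.1, distance=0.8),
]
first = [it.name for it in rerank(items, session=0)]  # pure relevance ordering
later = [it.name for it in rerank(items, session=5)]  # weight has reached the cap
```

With these made-up values, the first session ranks the familiar item on top, while by the fifth session the adjacent item overtakes it and the far item still stays last, which is the kind of small step outside current interests that the mechanism describes. Capping the weight is one possible way to avoid surfacing completely unrelated items.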

| Goals | Sub-Goals | Mechanisms |
|---|---|---|
| Explore | Broaden horizons | Increase diversity gradually |
| | Recognize blind spots in the user model | Explore under-represented preferences |
| Develop | Discover the unexplored | Visualize underdeveloped parts of a user model |
| | | Try new things |
| | Cover all users’ tastes | Detect not just some but all of the user’s preferences |
| | Understand own unique tastes | |
| Understand | | Explain the user model (i.e., the system’s input) |
| | Understand the inner workings of the system | |
| Scrutinize | Correct or confirm | Access the user model with varying levels of interactivity |
| | View the user model | |
| | Negotiate the user model | |
| | Edit the user model | |
| Socialize | Develop taste-based communities | Connect users based on shared preferences |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guesmi, M.; Chatti, M.A.; Tayyar, A.; Ain, Q.U.; Joarder, S. Interactive Visualizations of Transparent User Models for Self-Actualization: A Human-Centered Design Approach. Multimodal Technol. Interact. 2022, 6, 42. https://doi.org/10.3390/mti6060042
