Evaluating Virtual Hand Illusion through Realistic Appearance and Tactile Feedback

Abstract

1. Introduction

- RQ1: Do different hand appearances affect our participants’ sense of presence and the virtual hand illusion (VHI)?

- RQ2: Does tactile feedback affect our participants’ sense of presence and VHI?

- RQ3: Does prior VR experience affect our participants’ sense of presence and VHI?

2. Related Work

3. Methodology

3.1. Participants

3.2. Virtual Reality Application

3.3. Experimental Conditions

3.4. Measurements

3.5. Procedure

4. Results

4.1. Presence

4.2. Hand Ownership

4.3. Touch Sensation

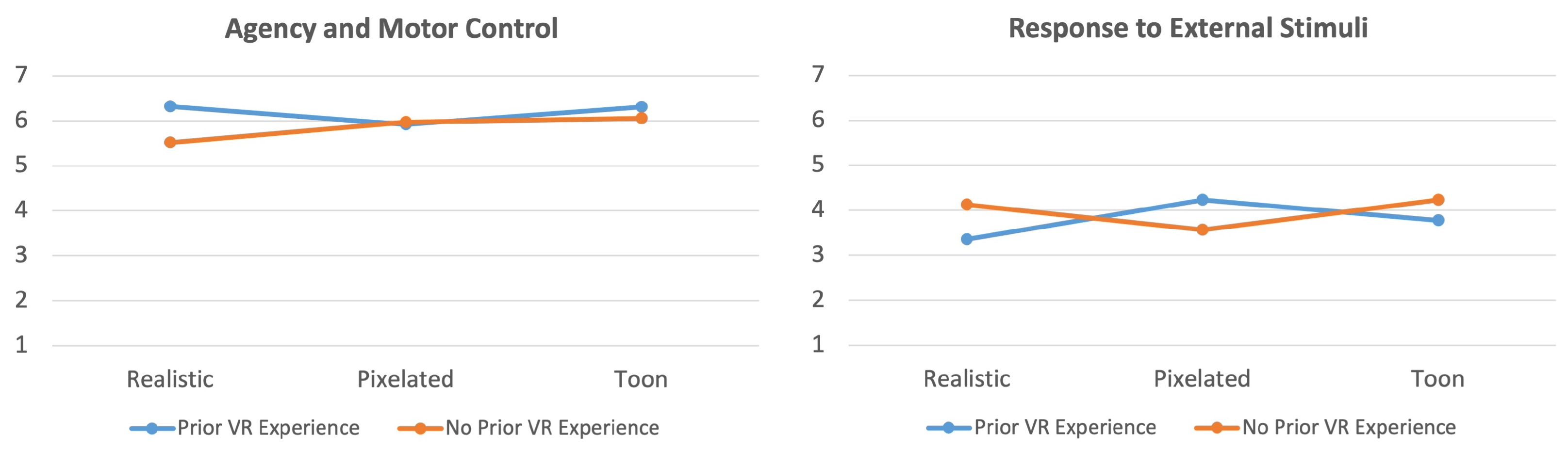

4.4. Agency and Motor Control

4.5. External Appearance

4.6. Response to External Stimuli

4.7. Prior VR Experience

5. Discussion

5.1. RQ1: Realistic Appearance

5.2. RQ2: Tactile Feedback

5.3. RQ3: Prior VR Experience

5.4. Limitations

5.5. Design Considerations

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Variable | Questions/Statements |
|---|---|---|
| 1 | Presence | Please rate your sense of being in the virtual environment, on a scale of 1 to 7, where 7 represents your normal experience of being in a place. |
| 2 | | To what extent were there times during the experience when the virtual environment was the reality for you? 1 indicates not at all, and 7 indicates totally. |
| 3 | | During the time of the experience, which was the strongest on the whole, your sense of being in the virtual environment or of being elsewhere? 1 indicates being in the virtual environment, and 7 being elsewhere. |
| 4 | | During the time of your experience, did you often think to yourself that you were actually in the virtual environment? 1 indicates strongly disagree, and 7 indicates strongly agree. |
| 5 | Hand Ownership | I felt as if the virtual hand was my real hand. 1 indicates strongly disagree, and 7 indicates strongly agree. |
| 6 | | It felt as if the virtual hand I saw was someone else’s. 1 indicates strongly disagree, and 7 indicates strongly agree. |
| 7 | | It seemed as if I might have more than one hand. 1 indicates strongly disagree, and 7 indicates strongly agree. |
| 8 | Tactile Sensation | It seemed as if I felt the vibration of the equipment in the location where I saw the virtual hand touch the object. 1 indicates strongly disagree, and 7 indicates strongly agree. |
| 9 | | It seemed as if the touch I felt was located somewhere between my physical hand and the virtual hand. 1 indicates strongly disagree, and 7 indicates strongly agree. |
| 10 | | It seemed as if the touch I felt was caused by the hand touching the object. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 11 | | It seemed as if my hand was touching the gears. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 12 | Agency and Motor Control | It felt like I could control the virtual hand as if it was my own hand. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 13 | | The movements of the virtual hands were caused by my movements. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 14 | | I felt as if the movements of the virtual hands were influencing my own movements. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 15 | | I felt as if the virtual hand was moving by itself. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 16 | External Appearance | It felt as if my (real) hand were turning into an “avatar” hand. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 17 | | At some point, it felt as if my real hand was starting to take on the posture or shape of the virtual hand that I saw. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 18 | | At some point, it felt that the virtual hand resembled my own (real) hand in terms of shape, skin tone, or other visual features. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 19 | Response to External Stimuli | I felt a tactile sensation in my hand when I saw the spike coming out. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 20 | | When the spike came out, I felt the instinct to draw my hands out. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 21 | | I felt as if my hand had hurt. 1 indicates strongly disagree and 7 indicates strongly agree. |
| 22 | | I had the feeling that I might be harmed by the spike. 1 indicates strongly disagree and 7 indicates strongly agree. |
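The questionnaire groups 22 seven-point items into six variables. As a rough illustration of how such responses could be turned into per-variable scores, the Python sketch below averages each participant's items within a variable and computes Cronbach's alpha, a common internal-consistency check for multi-item Likert scales. It rests on assumptions not stated in this section: each variable is scored as a simple mean, only item 3 is reverse-scored (its anchors run from 1 = in the virtual environment to 7 = elsewhere), and the names `SUBSCALES`, `score_item`, `subscale_scores`, and `cronbach_alpha` are hypothetical. It is a sketch, not the authors' analysis pipeline.

```python
from statistics import mean, pvariance

# Item numbers from the questionnaire, grouped by measured variable.
SUBSCALES: dict[str, list[int]] = {
    "Presence": [1, 2, 3, 4],
    "Hand Ownership": [5, 6, 7],
    "Tactile Sensation": [8, 9, 10, 11],
    "Agency and Motor Control": [12, 13, 14, 15],
    "External Appearance": [16, 17, 18],
    "Response to External Stimuli": [19, 20, 21, 22],
}

# Assumption: only item 3 is reverse-scored, because its anchors are flipped
# (1 = being in the virtual environment, 7 = being elsewhere). Whether the
# study also reversed negatively worded items such as 6, 7, or 15 is not
# stated in this section.
REVERSED_ITEMS = {3}
SCALE_MIN, SCALE_MAX = 1, 7


def score_item(item: int, response: int) -> int:
    """Validate a 1-7 response and flip it if the item is reverse-scored."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"item {item}: response {response} is outside 1-7")
    if item in REVERSED_ITEMS:
        return SCALE_MIN + SCALE_MAX - response
    return response


def subscale_scores(responses: dict[int, int]) -> dict[str, float]:
    """Mean of the (possibly reversed) item scores for each variable."""
    return {
        name: mean(score_item(i, responses[i]) for i in items)
        for name, items in SUBSCALES.items()
    }


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for one variable.

    rows[p][j] is participant p's (already reverse-scored) answer to item j.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals).
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [pvariance([row[j] for row in rows]) for j in range(k)]
    total_var = pvariance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical answers from one participant (not study data).
    answers = {item: 4 for item in range(1, 23)}
    answers[3] = 2  # scored as 6 after reversal
    print(subscale_scores(answers)["Presence"])  # 4.5
    # Two perfectly consistent items across three hypothetical participants.
    print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # 1.0
```

Averaging rather than summing keeps every variable on the original 1 to 7 scale, so three-item and four-item variables remain directly comparable.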

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cui, D.; Mousas, C. Evaluating Virtual Hand Illusion through Realistic Appearance and Tactile Feedback. Multimodal Technol. Interact. 2022, 6, 76. https://doi.org/10.3390/mti6090076

Cui D, Mousas C. Evaluating Virtual Hand Illusion through Realistic Appearance and Tactile Feedback. Multimodal Technologies and Interaction. 2022; 6(9):76. https://doi.org/10.3390/mti6090076

Chicago/Turabian Style: Cui, Dixuan, and Christos Mousas. 2022. "Evaluating Virtual Hand Illusion through Realistic Appearance and Tactile Feedback" Multimodal Technologies and Interaction 6, no. 9: 76. https://doi.org/10.3390/mti6090076

APA Style: Cui, D., & Mousas, C. (2022). Evaluating Virtual Hand Illusion through Realistic Appearance and Tactile Feedback. Multimodal Technologies and Interaction, 6(9), 76. https://doi.org/10.3390/mti6090076