1. Introduction

The Architecture, Engineering and Construction (AEC) industry has experienced approximately a 1% annual increase in productivity over the last two decades. This is well below the 2.8% annual increase of the total economy, and the 3.6% of the manufacturing industry [1]. This low productivity growth is often attributed to the fragmented nature of the industry, as well as limited investment in new digital technologies [2]. The adoption of Building Information Modelling (BIM) has contributed to labour productivity as well as enhancing work efficiency and project quality [3]. Although BIM has facilitated the adoption of 3D drawings for communication, AEC professionals still largely communicate their designs to clients and stakeholders through plans, sections, and elevations [4]. Stakeholders outside of the AEC industry have difficulties understanding these 2D drawings, making this mode of communication ineffective and costly [4,5]. The National Institute of Standards and Technology estimated USD 15.8 billion in annual interoperability costs during the entire life cycle of a building. This amount included costs for design file reworking, requests for information, construction site rework, delays, etc. [6].

Healthcare facilities are critical pieces of infrastructure for society, providing medical assistance and care for patients. The design of healthcare facilities requires specialist design consultants as well as the participation of stakeholders and the medical staff during design reviews. The stakeholders are required to provide feedback and “sign-off” design decisions. Design reviews typically consist of a 2D drawing package (building plans, sections, elevations) and technical specifications along with a few 3D images that stakeholders must review and provide comment on. Stakeholders and medical staff often find 2D drawings difficult to interpret due to the lack of specialised knowledge of architecture or engineering [7]. This inevitably leads to client–design team miscommunication and poor design decisions. Research has shown that the quality of the built environment impacts upon patient health, so the consequence of a poor design outcome is more than just a financial burden [8,9,10]. Due to the highly specialised nature of healthcare architecture, an effective design review tool utilising the latest digital technologies, such as BIM and Virtual Reality (VR), should be considered of vital importance to healthcare design teams [11,12].

Previous research has successfully investigated the adoption of an integrated BIM/VR system as a visualization and simulation platform for healthcare design [7]. However, despite the increased adoption of BIM and VR in the AEC industry, their full potential has not yet been exploited. In particular, transferring only the geometric information from a BIM model into a VR environment limits the benefits of the BIM/VR technology. Its full potential can be achieved when non-geometric BIM metadata are also visualised and communicated via immersive VR interfaces [13].

This research seeks to determine if VR can be used to convey additional, technical information effectively to aid stakeholders in understanding the complexities and particulars of a healthcare project. This additional information is derived from the AEC consultants’ BIM model, using the BIM metadata generated from programs like Autodesk Revit.

To determine if reviewing an architectural design in VR alongside BIM metadata is beneficial to the design process, a user study with 17 healthcare professionals was conducted during which the participants reviewed the design of a hospital under two different conditions. The first condition mimicked a traditional design review package, with PDF architectural drawings and specifications and a 3D digital desktop model. The second condition was a VR model with encoded BIM metadata. During these reviews, the participants were asked to complete a series of tasks with the aim to measure their understanding of the design. Afterwards, they completed a System Usability Scale (SUS) questionnaire [14] and a short interview regarding their experience.

A review of similar research was conducted, and from this it was determined that many studies have looked at the effectiveness of VR in design reviews for architecture; however, these were limited to geometric and spatial information, with few studies looking into the potential of displaying technical BIM metadata alongside geometric data. After the following Background section, the Materials and Methods section defines the research questions, introduces the prototype used and describes the user study. This is followed by the Results and Discussion sections. The article ends with a Conclusions section.

2. Background

2.1. Design Reviews in Healthcare Architecture

Design reviews are conducted throughout the design phase of a project to validate the design against the brief and to gain sign-off from stakeholders [15]. As the brief evolves throughout the design process, there must be a way to track the changes; this feature is currently lacking in existing review tools [16]. This is important in healthcare design, as the specialised equipment can change rapidly and these changes need to be tracked and understood by all parties.

Architectural 2D drawings convey limited information to support design reviews. Physical mock-ups, on the other hand, have been shown to be an effective design communication tool [17,18]. However, they are expensive and time-consuming to build and offer limited options when considering design alternatives [19]. Conducting a design review in VR is considerably cheaper than building a 1:1 mock-up or undertaking rework during construction [17].

2.2. Healthcare Architecture

Healthcare projects are highly complex, requiring many AEC professionals to provide input. They often have many different clients or stakeholders, all with specific requirements [20]. The functions of the space change frequently with new developments [21]. Compared with other projects, there is a high number of Fixtures, Furniture and Equipment (FF&E) items that need to be coordinated and correctly positioned. Much of this equipment is specialised, and often features particular spatial and service connection requirements that may not be immediately obvious to the design team. Previous research has indicated that stakeholders may have difficulty understanding what these items are in 2D plans [17].

2.3. BIM and Metadata

AEC professionals produce 3D models using Building Information Modelling (BIM) software. While a BIM is often referred to as a 3D model, it is much more than that; it is a collection of processes that allow cross-discipline teams access to a variety of information throughout the lifecycle of a building [22,23]. BIM models are also used to generate the plans, sections and elevations typically seen in architectural drawing packages. However, they have the potential to do more [12]. Each element in a BIM model can store a wide range of metadata, including quantities, material properties, dimensions, comments, phasing, manufacturer information, costs, etc. While most BIM objects come with a limited amount of metadata programmed in, designers can extend the dataset as required, adding further information to aid the design review process [24]. This information can include specifications about specialised equipment such as that used in the healthcare industry.
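As a rough illustration of the idea that each element carries metadata that designers can extend, the following Python sketch models one element as a small data structure. It is not the Revit data model: the class, field names, element ID, manufacturer and clearance value are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BimElement:
    """Hypothetical stand-in for one BIM object and its non-geometric data."""
    element_id: str
    category: str
    dimensions_mm: tuple[int, int, int]  # width, depth, height (geometric data)
    metadata: dict[str, str] = field(default_factory=dict)

    def add_parameter(self, name: str, value: str) -> None:
        """Extend the element's dataset with a designer-defined parameter."""
        self.metadata[name] = value

    def review_card(self) -> str:
        """Format the metadata as the text a reviewer might be shown."""
        lines = [f"{self.category} ({self.element_id})"]
        lines += [f"  {key}: {value}" for key, value in sorted(self.metadata.items())]
        return "\n".join(lines)


# Illustrative values only; none of these come from the study's models.
bed = BimElement("FFE-001", "Hospital bed", (1000, 2200, 900),
                 {"Manufacturer": "ExampleCo"})
bed.add_parameter("Clearance required", "1200 mm each side")
print(bed.review_card())
```

The point of the sketch is only that geometry and review-relevant specifications live on the same object, so a viewer that has access to the object can show both.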

2.4. Virtual Reality in Architecture Design Reviews

Immersive Virtual Environments (IVEs) in VR provide a quick and convenient evaluation tool compared with physical mock-ups [25]. Multi-user design reviews have helped to gain insights from nursing staff in a virtual mock-up [11]. VR mock-ups can be used to review designs at a high level of detail and discuss changes in cost [26]. However, participants can find it difficult to switch between the prototype model and the design documents (assumed to be digital PDFs), and have suggested having the design documents available for markup on an adjacent smart board [26]. An extensive literature review into the current state of BIM-based AEC VR research identified the underutilisation of BIMs’ non-geometric data as one of the core gaps [13]. In another study [27], the use of hybrid media (VR with traditional 2D drawings) gave the best overall result. This study [27] concludes that VR should be used in conjunction with 2D drawings and “BIMs” for a more robust design review.

2.5. Simulation/Scenario Based Design Reviews

A scenario-based study had nurses evaluate three different preoperative rooms within virtual reality by completing several tasks [28]. The study concluded with a clear preference for one option and noted that, by using a scenario-based evaluation, participants were able to interact with their environment, providing a more “clinically relevant and systematic comparison of multiple design options” [28]. In the preferred design, it looked like a stretcher was used in place of a much larger hospital bed, giving the illusion of space. While the research does not mention why this was done, it would be interesting to know if the researchers believed this had an impact, as one of the cited reasons for this preferred design was the “increased space around the bed” [28]. This suggests that the identification of furniture within VR is not as clear as other studies have concluded, and the use of BIM metadata has the potential to reduce some of these errors.

In a previous scenario-based study [17], end-users compared the effectiveness of four different architectural communication modes: (1) 2D plans; (2) printed 3D perspectives accompanied by plans; (3) physical mock-up; and (4) virtual reality mock-up. The physical mock-up was deemed the most useful mode of communication, followed by VR mock-ups with 2D plans, and 3D perspectives were the least useful. The study [17] identified a lack of fidelity as a reason for the VR platform performing lower than expected. The VR mock-up elicited the fewest design suggestions, while the physical mock-up elicited the most. The authors conclude that the four different modes of communication could be used to complement each other, as they all illustrated different aspects of the design.

2.6. Summary

This background section has identified some clear gaps in the research of VR design reviews for healthcare. If the issues created in design reviews relate to poor communication, it follows that using a mode that allows for the greatest fidelity in the display of information would be beneficial. Several of the above papers identified the need for traditional architectural plans alongside the VR model [17,27,28]. This suggests that there is a need to display information beyond the spatial and geometric data typically presented in VR. This article seeks to build upon the advantages and improve upon the limitations faced in the previous studies by implementing a system that allows users to review the BIM metadata typically found in architectural plans and specifications in VR with the aim of improving the effectiveness of design reviews.

3. Materials and Methods

3.1. Research Questions

This research aims to determine the effectiveness of undertaking Architectural Design Reviews in a VR headset for healthcare projects by measuring how well participants understand the proposed design, how much time they take to complete a set of tasks, and the perceived usability of the review tool. Previous research has already highlighted the benefits of the design review process VR offers. What this research seeks to build upon is VR’s ability to convey the semantically rich BIM metadata in a way that enables stakeholders to complete a design review with fewer errors caused by miscommunication.

The effectiveness of the design review will be evaluated against a series of tasks designed to test the participants’ understanding of the design, the usability of the design review tool and the time taken to complete the design review.

3.2. Prototype

To test whether BIM metadata can be viewed within a VR headset (Oculus Quest 2, https://www.meta.com/nz/quest/products/quest-2/ (accessed on 19 May 2023)), an Operating Unit of a hospital was designed and built in Autodesk Revit (https://www.autodesk.co.nz/products/revit/overview?term=1-YEAR (accessed on 28 April 2023)), a leading piece of AEC software. The Operating Unit was broken up into two unique modules: (1) the Theatre Module and (2) the Recovery Module. The Operating Unit modules were then exported from Revit using Unity Reflect (https://unity.com/products/unity-reflect (accessed on 28 April 2023)) into the Unity Game Engine (https://unity.com (accessed on 28 April 2023)). Unity Reflect maintains the fidelity of the BIM metadata, enabling its use within the Unity Game Engine. The models were also exported using the Revit plugin, Enscape (https://enscape3d.com/ (accessed on 28 April 2023)), to create the 3D desktop model; this meant that the same models and datasets could be used for the Virtual Reality condition of the experiment and the PDF + 3D model condition of the experiment. The two modules, Recovery and Theatre, were designed using the Australasian Health Facility Guidelines: Operating Unit as a guide [29]. The standard room sizes, finishes and fixtures were derived from or taken directly from the Australasian Health Facility Guidelines (AusHFG: https://healthfacilityguidelines.com.au/australasian-health-facility-guidelines (accessed on 28 April 2023)) and their Autodesk Revit library. AusHFG is widely used throughout Australia and New Zealand in the design of hospitals.

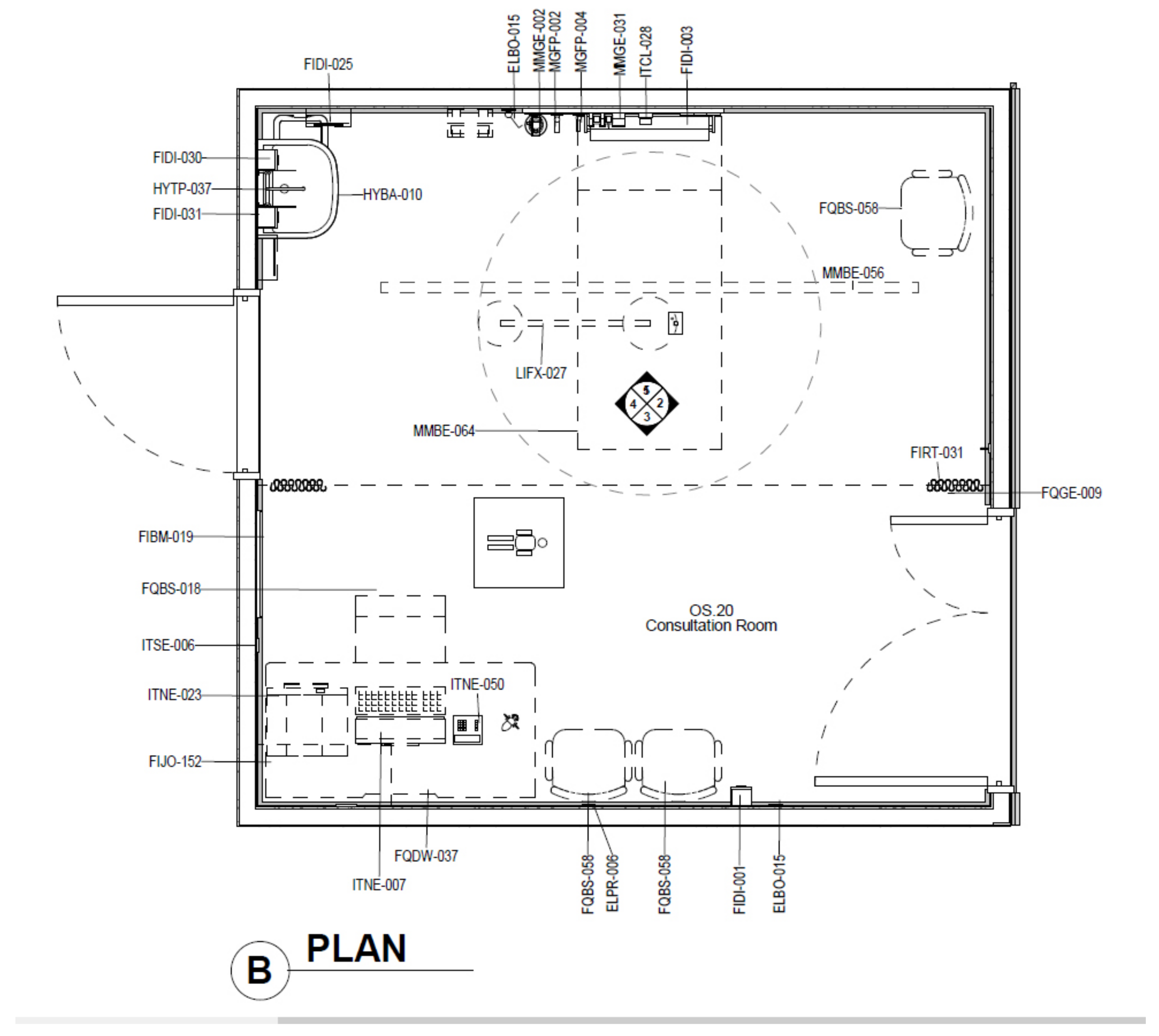

Figure 1, below, is a 3D view from the Theatre Module Revit file.

Figure 2 illustrates the Recovery Module.

3.3. Study Design

This study was approved by the Human Research Ethics Committee (HREC) of our University. A mixed-method evaluation was used, combining an experiment, a quantitative questionnaire and a qualitative interview. The experiment was a within-subjects Latin Square design, with all participants evaluating both the Virtual Reality and PDF conditions.
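One way to picture a within-subjects Latin Square assignment is sketched below in Python. The exact allocation scheme used in the study is not described here, so this is an assumption: participants are cycled through the four possible orderings of condition and module, and each participant sees the other module in the second condition. The function name and the modulo-4 rotation are hypothetical.

```python
from itertools import product

CONDITIONS = ("VR", "PDF")
MODULES = ("Theatre", "Recovery")


def session_plan(participant_index: int) -> list[tuple[str, str]]:
    """Return the two (condition, module) sessions for one participant.

    Assumption: orderings rotate over the four combinations of
    "which condition first" and "which module first".
    """
    orderings = list(product((0, 1), repeat=2))  # (condition first, module first)
    cond_first, mod_first = orderings[participant_index % 4]
    return [
        (CONDITIONS[cond_first], MODULES[mod_first]),
        (CONDITIONS[1 - cond_first], MODULES[1 - mod_first]),
    ]


for index in range(4):
    print(index, session_plan(index))
```

Rotating both factors means that neither a condition nor a module is always seen first, which is the usual motivation for counterbalancing in a design of this kind.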

Before starting the experiment, participants completed a questionnaire on their background and experience with VR (see Appendix A). Then, participants reviewed one module design in one condition.

At the beginning of each condition, the participants completed a short tutorial, designed to take approximately 10 min. Participants were asked to complete 3 tasks to give them the skills to complete the main experiment, with the experimenter on hand to answer any questions and guide participants if they had difficulties with a task. See Figure 3 below for a view of a typical instruction on the clipboard.

Participants were also guided on how to use the Enscape model with onscreen instructions and, if required, participants were shown how to use Adobe Acrobat Reader (see Figure 4 and Figure 5). When the tutorial tasks were completed and participants had been given a chance to ask any clarifying questions, the tutorial was ended and the experiment was started.

For the review of each condition, participants had to complete tasks and questions designed specifically for that condition (see Appendix B and Appendix C for the questionnaires on the Theatre and Recovery modules). The tasks tested the participants’ understanding of the design by assessing their ability to first identify the correct piece of equipment, then review and provide design review feedback, and finally identify errors.

Each question required the participants to review the geometric information visible to them, as well as the BIM metadata, which were visible either in the schedules or on the clipboard. Each question began with the room number and name in which the relevant object was located to help the participant find the relevant information. The final task of each condition was to list any codewords found during the experiment. Within each module in each condition there were children’s toys placed in unusual locations with codewords in their metadata (see Figure 6). This was designed to test whether participants would notice obvious errors and explore the design beyond the structured review.

The tasks set out above are designed to answer whether using Virtual Reality to view BIM metadata would increase the understanding of architectural design when compared to traditional forms of design communication, by evaluating how well the participants understood the design between the two different conditions. The participants were also timed to help us to answer whether using Virtual Reality to view BIM metadata would increase the efficiency of the architectural design review when compared to traditional forms of design communication. By measuring how many tasks the participant completes correctly and in what time frame, we will be able to answer whether the design review was more efficient in VR than when using a traditional design review.

At the end of each condition, participants would also complete an SUS questionnaire (see Appendix D) and answer six follow-up questions designed to reveal information about the performed tasks (see Appendix E). Then, participants would repeat the above procedure in the other condition.

Finally, a brief, structured interview was conducted after participants reviewed both conditions at the end of the experiment. The questions asked participants what they did and did not like about each of the modes, along with which was their preferred condition. They were also given the chance to give any feedback or make any comments about either condition. The interviews were recorded, transcribed, then analysed for patterns/themes/consistencies. The questions were designed to reveal basic preferences and to determine what the participants preferred in each of the conditions, as well as what they found the most problematic.

The results of the interviews, along with the SUS scores and post-review questionnaire data, will help us determine which condition participants found to be more usable, which will enable us to answer whether using Virtual Reality to view BIM metadata will increase the usability of the architectural design review tool when compared to traditional forms of design communication. Refer to Figure 7 for an overview of the experimental setup.

4. Results

4.1. Participants

Participants for the user study were required to have healthcare experience. Twenty participants signed up to undertake the user study; however, due to unforeseen circumstances, only seventeen were able to participate. The occupations of the participants varied and represented a diverse cross section of experience. Participants ranged from first-year graduates up to seasoned professionals with 10+ years’ experience. The fields varied, too, with Pharmacists, Physiotherapists, Nurses, GPs, Audiologists and Registrars taking part. The 17 participants consisted of 4 (24%) males and 13 (76%) females. All participants had normal or corrected-to-normal vision. Eleven participants had never used HMDs before, while the other six used VR a couple of times a year. Participants were also asked if they had ever reviewed architectural plans; 4 out of the 17 participants had. The participants varied in age; seven of the participants were aged between 35 and 50, six were aged between 25 and 34, two were between 18 and 24, one was aged 51–64 and one was over the age of 65. All participants completed the experiment, and no participants reported any feelings of cybersickness.

4.2. Task Results

The participants were overall more successful in the VR condition, with more participants getting more correct answers. A Wilcoxon signed-ranks test indicated that participants scored statistically significantly higher in the VR condition (Z = −2.334, p = 0.020). The VR condition had an overall mean of 7.00 (SD = 1.32), while the PDF condition had an overall mean of 5.29 (SD = 1.86). This was a 32% increase over PDF.

Of the 17 participants, 11 (64.7%) completed more tasks correctly in the VR condition, while 4 (23.5%) completed more tasks correctly in the PDF condition. Two participants (11.8%) received the same score in VR and PDF.
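The shares and the relative improvement reported in this section can be recomputed directly from the counts and means given in the text. The short Python sketch below does this; the helper names are ours and only the input numbers come from the article.

```python
def share(count: int, total: int) -> float:
    """Percentage of `total` represented by `count`, to one decimal place."""
    return round(100 * count / total, 1)


def percent_increase(baseline: float, value: float) -> float:
    """Relative increase of `value` over `baseline`, in percent."""
    return 100 * (value - baseline) / baseline


participants = 17
for label, count in (("better in VR", 11), ("better in PDF", 4), ("same score", 2)):
    print(f"{label}: {count}/{participants} = {share(count, participants)}%")

# Mean task scores reported for the PDF and VR conditions.
print(f"VR over PDF: {percent_increase(5.29, 7.00):.0f}% increase")
```

Because the three groups partition the 17 participants, the three shares should sum to 100% up to rounding, which is a quick consistency check on figures of this kind.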

The first question in each condition was designed to test the participants’ ability to identify errors between the proposed design and the requirements listed in the metadata. The design appeared correct, and it was only through reading the metadata that participants could find the error. A total of 22% of the participants reviewing the design in the PDF condition got this correct compared with 75% of participants in VR. Similarly, the Recovery module asked participants how many wash handbasins were needed. There was only one in the proposed design, while the metadata stated that there needs to be 1 basin for every 4 bed bays; with 7 bed bays in the project, 2 basins were actually required. In the PDF condition, 25% of participants got this correct, while 100% of participants got it correct in VR.
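The handbasin task is an instance of checking a modelled quantity against a rule stored in the metadata. A minimal Python sketch of that check follows, assuming the rule is read as "round up to whole basins"; the function names are hypothetical and this is not how the prototype itself evaluated anything, since participants performed the check by reading the metadata.

```python
import math


def required_basins(bed_bays: int, bays_per_basin: int = 4) -> int:
    """Basins needed when one basin serves up to `bays_per_basin` bed bays."""
    return math.ceil(bed_bays / bays_per_basin)


def basin_shortfall(bed_bays: int, basins_in_design: int) -> int:
    """How many basins the design is missing (0 if it already complies)."""
    return max(0, required_basins(bed_bays) - basins_in_design)


# Values from the Recovery module task: 7 bed bays, 1 basin drawn.
print(required_basins(7), basin_shortfall(7, 1))
```

With 7 bed bays the rule yields 2 basins, so a design showing a single basin is short by one, which is the error participants were expected to report.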

The final question in each condition was about the codewords in the BIM metadata of the toys positioned around each module. This was designed to test whether participants would identify any obvious errors or if they would strictly answer the tasks. Two participants in the VR condition found codewords during their experiment. One of these participants specifically asked if they could go off task and just ‘explore’. When they did this, they found multiple codewords and entered rooms that had no specific tasks for them to complete.

4.3. Time Taken

The amount of time each participant took varied significantly. For the VR condition, the quickest time was 9 min and 5 s. The shortest time for the PDF condition was 12 min and 40 s. The longest VR time was 21 min and 5 s, while the longest time in PDF was 35 min and 3 s. The mean time in VR was 15 min and 45 s, while the mean time in PDF was 22 min and 46 s.

Figure 8, below, illustrates how much time each participant took to complete the tasks. The task completion time was converted into seconds, and a Wilcoxon signed-rank test was run over the figures to determine significance. With Z = −2.864 and p = 0.004, the time difference was statistically significant.
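For readers unfamiliar with the procedure, the Python sketch below converts times to seconds and computes a Wilcoxon signed-rank Z statistic with the plain normal approximation. It is illustrative only: the paired times are made up (the per-participant data are not reproduced here), no tie or continuity correction is applied, and the statistics software used in the study may differ in these details.

```python
import math


def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a 'min and s' completion time to seconds."""
    return minutes * 60 + seconds


def wilcoxon_signed_rank(a: list[float], b: list[float]) -> tuple[float, float]:
    """Return (z, two-sided p) using the normal approximation, no corrections."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to tied absolute differences
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mean) / sd
    p = 1 + math.erf(z / math.sqrt(2))  # equals 2 * Phi(z) because z <= 0
    return z, p


# Hypothetical paired completion times in seconds (NOT the study data).
vr_times = [to_seconds(9, 5), to_seconds(15, 45), to_seconds(21, 5), 800, 900, 1000]
pdf_times = [to_seconds(12, 40), to_seconds(22, 46), to_seconds(35, 3), 1200, 1100, 1500]
z, p = wilcoxon_signed_rank(vr_times, pdf_times)
print(f"Z = {z:.3f}, p = {p:.3f}")
```

Because the statistic is built from the smaller of the two rank sums, Z is never positive, which matches the sign convention of the values reported in this section.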

4.4. SUS Results

The VR condition also scored considerably higher on the SUS survey, with 78.70 as the average SUS score (SD = 10.65) compared to 38.67 (SD = 19.31) for the PDF and 3D model condition. The accepted ‘passing’ SUS score was considered to be 68 [30], and as such only the VR condition was considered as passing. A Wilcoxon signed-rank test was carried out and showed statistical significance between the SUS results, with Z = −3.624, p = 0.001. The lowest SUS score for the PDF condition was 5, while the lowest for VR was 62.5. The highest PDF score was 67.5, while the highest for VR was 97.5. See Figure 9 for a graph of the SUS scores.
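For context, an individual SUS score is obtained from ten items rated 1 to 5 using the standard scoring rule: odd-numbered items contribute (rating − 1), even-numbered items contribute (5 − rating), and the sum is multiplied by 2.5. The Python sketch below applies that rule; the example responses are invented and the threshold constant simply restates the value of 68 used above.

```python
PASSING_SUS = 68  # threshold used in this article


def sus_score(responses: list[int]) -> float:
    """Score one completed SUS questionnaire (ten ratings from 1 to 5)."""
    if len(responses) != 10 or any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


# Invented responses for illustration only.
example = [4, 2, 4, 1, 5, 2, 4, 2, 4, 3]
score = sus_score(example)
print(score, score >= PASSING_SUS)
```

The 2.5 multiplier explains why individual scores such as 62.5, 67.5 and 97.5 fall on multiples of 2.5 within the 0 to 100 range.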

4.5. Post Review Questionnaire Results

After the participants had completed the SUS questionnaire, six other Likert Scale questions were asked, specifically relating to the review condition and how they believed they did. These data were gathered to reveal specifically what the participants thought of the various elements of the design review tools. If we convert the Likert Scale to numbers, rating Strongly Disagree as 1, Disagree as 2, etc., and Strongly Agree as 5, we can obtain an average score for each question. Figure 10 plots these averages.
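The conversion from Likert labels to an average score per question can be sketched in a few lines of Python. The label for the midpoint is not stated in the text, so "Neutral" is an assumption here, and the responses are invented for illustration.

```python
# Mapping described in the text; the midpoint label "Neutral" is assumed.
LIKERT = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def mean_rating(responses: list[str]) -> float:
    """Average numeric rating for one question across participants."""
    return sum(LIKERT[label] for label in responses) / len(responses)


# Invented responses to a single question under each condition.
vr_q4 = ["Strongly Agree", "Agree", "Agree", "Strongly Agree"]
pdf_q4 = ["Disagree", "Neutral", "Disagree", "Agree"]
print(mean_rating(vr_q4), mean_rating(pdf_q4))
```

Averaging ordinal labels in this way treats the gaps between adjacent labels as equal, which is a common simplification when plotting per-question comparisons.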

Breaking down the responses by question, we can see that Q4 has the biggest difference in response, suggesting that the participants, at least, perceived viewing metadata in VR as easier than in PDF. The next-biggest difference in response was found in Q2 (“it was easy to locate the various objects requiring your feedback”), with VR again scoring higher. In every question, the VR condition received a higher rating. The closest rating was found in Q1 (“it was easy to locate the various rooms”). This is surprising, as the VR condition required the participants to explore the space to find the rooms while the PDF condition had a bookmarked table of contents allowing participants to jump directly to each room.

4.6. Interview Results

A thematic analysis of the interviews was undertaken. In the interview, 16 of the 17 participants selected VR as their preferred design review condition. The reasons why they selected VR varied, but followed consistent themes. Seven participants noted that VR made it easier to visualise the design, and four participants noted that VR was more user friendly. Other reasons included that the information was easier to access, two participants liked the ‘point and click’ nature of the VR condition, and another participant liked how you could track comments between reviews (this was common to both conditions). One participant preferred VR because of the hands-on aspect. The person who selected PDF noted that while it was harder to use, they believed the PDF contained a lot more technical information.

Participants were asked what they did and did not like about each condition. The VR condition had five participants note that it was just like being back in a hospital; they “felt like they were in the room”. Participants noted that VR was easier to use. Four participants noted that it made it easier to visualise the space. Three participants noted specifically that it was easier to access the metadata. Two people mentioned how they could just “point and click” and see the metadata instantly, and other participants mentioned that it was easier because “not too much information came up at once”.

5. Discussion

The results showed a clear preference for VR over the PDF condition. During the interview, participants noted that it was much easier to find items in VR. They also mentioned that VR was more user friendly and intuitive.

The results showed a significant difference between the two conditions, with VR having a higher mean score of 7.00 over PDF’s score of 5.29. On average, less time was taken to answer more questions correctly in VR than in PDF. The mean time in VR was 15 min and 45 s, while the mean time in PDF was 22 min and 46 s.

The results illustrate that VR was perceived as more usable than the PDF condition. The SUS scores were significantly higher in favour of VR. In the post-review questionnaire, in all six questions the VR condition received a higher rating.

The participants in the VR condition completed more tasks correctly in a shorter time. The interview results showed a stronger preference for VR compared to the PDF + 3D model condition, with 16 of the 17 participants selecting the VR condition as their preferred option. During the interview, participants noted that it was much easier to use. The results of the SUS questionnaire back this up, with a statistically significant difference between the PDF and VR conditions. Many participants in the interview (5) noted that it was easier to access the metadata in VR, and likewise the post-review questionnaire also showed that VR was easier for metadata access. This was reinforced by the higher task completion rate within the VR condition. Participants stated in the interview and in the questionnaire that they found items easier to identify in VR, and this was backed up by the higher task completion rate within VR and the higher SUS score. The above results allow us to conclude that VR improves the effectiveness of design reviews over traditional forms of communication because participants found it easier to use, they completed more tasks correctly and quickly, and overall they preferred this tool over the PDF tool.

However, the PDF condition did have its advantages. During the interview, several participants identified that the PDF drawings appeared to be more accurate and contain additional data. This is interesting, as the VR condition and the PDF condition contained identical data sets. They also appreciated how the elements were coded between the plans and the schedule, and they enjoyed using the 3D Enscape Model.

It was interesting how few participants noticed or selected the toys. These were brightly coloured items that clearly did not belong in a hospital environment. It is also worth noting that all but one participant stuck to the prescribed tasks. This participant found more codewords than anyone else. The participants generally kept to the structured tasks and did not explore the modules further.

As identified by Sidani et al. [13], little research has been carried out on the use of non-geometric BIM data within VR. This paper aimed to address this. Other research suggests that the use of plans and technical information alongside VR models is helpful. The above results generally reflect what other researchers have found: that VR is a useful tool to review spatial and geometric data. By including technical data alongside the geometry, however, we were able to demonstrate that VR can be used to review the technical specifications required in a healthcare project (see Figure 11, below, for an example of the metadata within the VR prototype).

6. Limitations

The study took place toward the end of 2021 in Christchurch, New Zealand, during the COVID-19 pandemic. This had an impact on the number of people who could participate in the experiment, and given that the participants were healthcare workers, it was particularly challenging to recruit people. The study participants’ gender was heavily skewed, with 76% of participants identifying as female; according to the WHO, 67% of the global health and social care workforce are female [31]. Future studies should work to address this, and attempt to attract participants that better reflect the global workforce.

The experimenter over-estimated the participants’ familiarity with Adobe Acrobat Reader. It had been assumed they would know basic tools such as zoom and next page, but this was not always the case. This issue was revealed during the tutorial stage of the experiment, so was able to be rectified by the experimenter carefully explaining how to use the required tool. However, if the experiment was to be repeated, a more structured, formal guide on using Adobe Reader should be considered.

There were only two different designs reviewed. While the two modules were designed to be of equal complexity, the difference in task completion rate suggests that this was not the case. Future studies should deliberately take design complexity into account and test across a variety of complex designs.

Autodesk Revit was used to input the metadata due to the author’s familiarity with this programme. It should be noted that other AEC software packages offer this capability as well.

Unity Reflect was used to parse the metadata from Revit into the Unity game engine. This is an expensive plugin that may be a barrier to many architectural firms. However, there are alternatives that achieve similar outcomes, notably Datasmith, which plugs into the Unreal Game Engine and allows the transfer of metadata from Revit, 3DS Max, Sketchup and Archicad into the game engine (https://www.unrealengine.com/en-US/datasmith/plugins (accessed on 30 May 2023)).

7. Conclusions

This article explored whether using Virtual Reality to view BIM metadata increases the effectiveness of Architectural Design Reviews. To test if viewing designs and BIM metadata in VR is beneficial to the design review process, a user study with 17 healthcare professionals was conducted during which the participants reviewed the design of a hospital under two conditions: with PDF architectural drawings and a 3D digital desktop model, and within a VR model. Given the higher task completion rate in a shorter time in VR, a higher SUS score and feedback in the interview generally being more positive, with 16 out of the 17 participants selecting VR as their preferred condition, this research concludes that Virtual Reality can be used to view BIM metadata and that it can increase the effectiveness of design reviews.

The prototype developed to test the hypotheses was only used to conduct one phase of a design review; the next phase of the design review is for the architect to review the comments and suggestions made by the stakeholders, and update the design as required. An avenue for future research is to test whether this design review tool allows for the transfer of knowledge easily and effectively back to the design team. The prototype was set up to generate a database file that can be read into the architect’s Revit file. When it is read into a particular drawing, any comments made on a specific object will turn it bright red, alerting the architect to the requested change. This part of the design review process was not included in this research for scope reasons.
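The round trip described above, from reviewer comments to highlighted objects in the architect's model, could look roughly like the Python sketch below. The file format of the prototype's database is not specified in this article, so JSON keyed by element ID is purely an assumption, as are the function names and the sample IDs; the Revit-side step that recolours elements is not shown.

```python
import json


def export_comments(comments: list[tuple[str, str]]) -> str:
    """Group (element_id, comment) pairs by element and serialise them.

    JSON is an assumed interchange format, not the prototype's actual one.
    """
    by_element: dict[str, list[str]] = {}
    for element_id, text in comments:
        by_element.setdefault(element_id, []).append(text)
    return json.dumps(by_element, indent=2, sort_keys=True)


def elements_to_flag(payload: str) -> list[str]:
    """Element IDs with at least one comment, i.e. those to highlight."""
    data = json.loads(payload)
    return sorted(element_id for element_id, notes in data.items() if notes)


# Hypothetical review comments.
payload = export_comments([
    ("FFE-001", "Bed needs more clearance on the left"),
    ("FFE-007", "Second handbasin required"),
    ("FFE-001", "Check power outlet position"),
])
print(elements_to_flag(payload))
```

Keying comments by element ID is what would let a downstream tool map each comment back onto a specific object rather than onto a drawing sheet.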

The prototype is a custom-built application and required significant setup and customisation to bring the tool functionality to a level suitable for a design review. While the time taken to produce the second module (Recovery) was a fraction of the time taken to build the first module (Theatre), there was still an estimated 40 h of work needed to create and build the second module. Though there will be some efficiencies over time, adding an additional 40 h worth of work onto a design review is significant, even if there is the potential to recoup that time through fewer design changes in the later stages of a project. Future work, therefore, needs to explore more automated ways of creating VR models that are suitable for design reviews from the available BIM data.

While the participants identified and corrected more errors in the VR condition, the study setup was not an accurate reflection of a typical design review session. Stakeholders may be guided to known issues; however, most review sessions have stakeholders evaluating the design freely. The participants in this user study were guided toward finding the errors and identifying the items through the tasks. During the study, one participant asked if they could deviate from the task list and just explore. This participant found more codewords than anyone else and explored more of the VR model than the other participants. Future work, therefore, needs to research whether VR shows similar advantages when using a less structured or guided user study where participants are free to explore and find the errors on their own. Still, the healthcare professionals in this study identified a clear preference, and the high completion rate of the tasks, coupled with the quicker completion times, points to VR being the more effective design review tool.