1. Introduction

Human perception is by nature multisensory, and extensive research has been dedicated to understanding how we perceive the world through the interaction of our senses, starting with the pioneering work presented in [1]. Biocca et al. [2] outline different ways in which the senses interact: for instance, cross-modal enhancement refers to the fact that stimuli from one sensory channel enhance or alter the perceptual interpretation of stimulation from another sensory channel. In certain situations, individuals lack a sensory modality or have a reduced one. This is the case for individuals with hearing, visual, or tactile impairment. In these situations, technology could help augment or substitute the missing modality.

In this paper we focus on individuals with hearing impairment and investigate how devices based on the sense of touch can help them make better sense of different stimuli. In recent years, several devices have been proposed for this purpose. This approach is supported by the fact that hearing and touch have a higher temporal resolution than vision; this is especially true when touch is experienced through the hands, which have a greater resolution than other body parts [3].

Since the 1920s, researchers have conducted experiments to investigate the perception of vibrating objects through tactile sensitivity and to compare its characteristics with those of hearing. These efforts also led to new research paths, such as inquiring how deaf people experience sound through the sense of touch [4].

Recent research by Cieśla et al. [5] has shown that a speech-to-touch sensory substitution device significantly improves speech recognition in both cochlear implant users and individuals with normal hearing. This finding aligns with the longstanding idea that the sense of touch can be effectively employed to substitute or enhance auditory experiences. One of the first experiments in this direction was the “hearing glove”, a speech technology modeled on the cochlea but constrained by the limited sensitivity of human skin, presumably invented by Norbert Wiener in the 1940s [6]. Other prominent experiments were performed by Clark and colleagues, who proposed the Tickle Talker [7], an eight-channel electro-tactile speech processor. The Tickle Talker was used to reinforce residual hearing or to supplement lip reading; the device showed potential in the rehabilitation of severely hearing-impaired children and adults. Since then, tactile feedback has been used in several applications aimed at aiding hearing-impaired individuals, such as music listening [8] and even tap dancing [9]. In the works considered by this review, vibrotactile devices have been used to enhance various dimensions of hearing, such as sound source localization [10], pitch discrimination [11,12], and speech comprehension [13,14]. Experiments have also been proposed to improve non-auditory perceptual abilities, including environmental perception [15] and voice tone control [16], as well as cognitive ones such as braille perception [17], lip reading [18], and web browsing [19].

Developers of games and video games often do not take the needs of individuals with disabilities into account when creating their products [20]. Accessible games therefore play an important role in including a population that would otherwise be excluded [21]. In the last twenty years [22], a strong focus has been placed on creating accessible games for populations with different abilities. For individuals with severe hearing loss or those who may not benefit from traditional speech training, augmentative and alternative communication methods can support effective rehabilitation [23]. Among them, gamification principles have been demonstrated to be effective in strengthening children’s learning performance and improving their training experience [24,25]; this strategy has been widely applied in children’s education and training products, bringing principles and mechanics from the gaming world to increase user engagement and motivation [26]. Therefore, a gamification approach to auditory-verbal training is also a promising direction for hearing rehabilitation [27], merging the fields of gamification and training to benefit individuals with hearing impairments.

We have briefly discussed the development of devices that enhance or replace acoustic signals, as well as the extensive use of tactile and vibrotactile feedback in games and video games over the past several decades [28]. These applications have roots dating back to the early days of gaming [29]. As a result of these developments, the intersection of rehabilitation and training techniques for the deaf, haptic and vibrotactile stimulation, and game dynamics emerges as a promising area of research that deserves further investigation.

A relatively recent review concludes that there is a lack of research on auditory or cognitive impairments compared with visual and motor disabilities, suggesting this as a topic for further research [20]. While devices that augment or substitute hearing using touch have been continuously developed, less is known about how training with such devices can help improve hearing skills. Systematic reviews have raised questions about the effectiveness of musical training [30] and investigated individualized computer-based auditory training [31]. Some have underscored the influence of variables such as participants’ age, training duration, and the type of hearing device used [32], while others examined the use of tactile displays in music applications designed for hearing-impaired individuals [8]. Additionally, studies have explored the impact of gamification on the learning process [24], while others have focused on deaf students without incorporating the haptic aspect into the assessment [33]. Therefore, evaluating the impact of vibrotactile technology is crucial for assisting individuals with hearing impairments in training activities.

In this paper, we present a systematic review of the literature regarding training and gamified experiences that use haptic feedback to help individuals with hearing impairments.

Section 2 introduces the (often ambiguous) terminology, Section 3 addresses the research questions, Section 4 the methodology, Section 5 the results, Section 6 the discussion, Section 7 the limitations of this study, and Section 8 the conclusions.

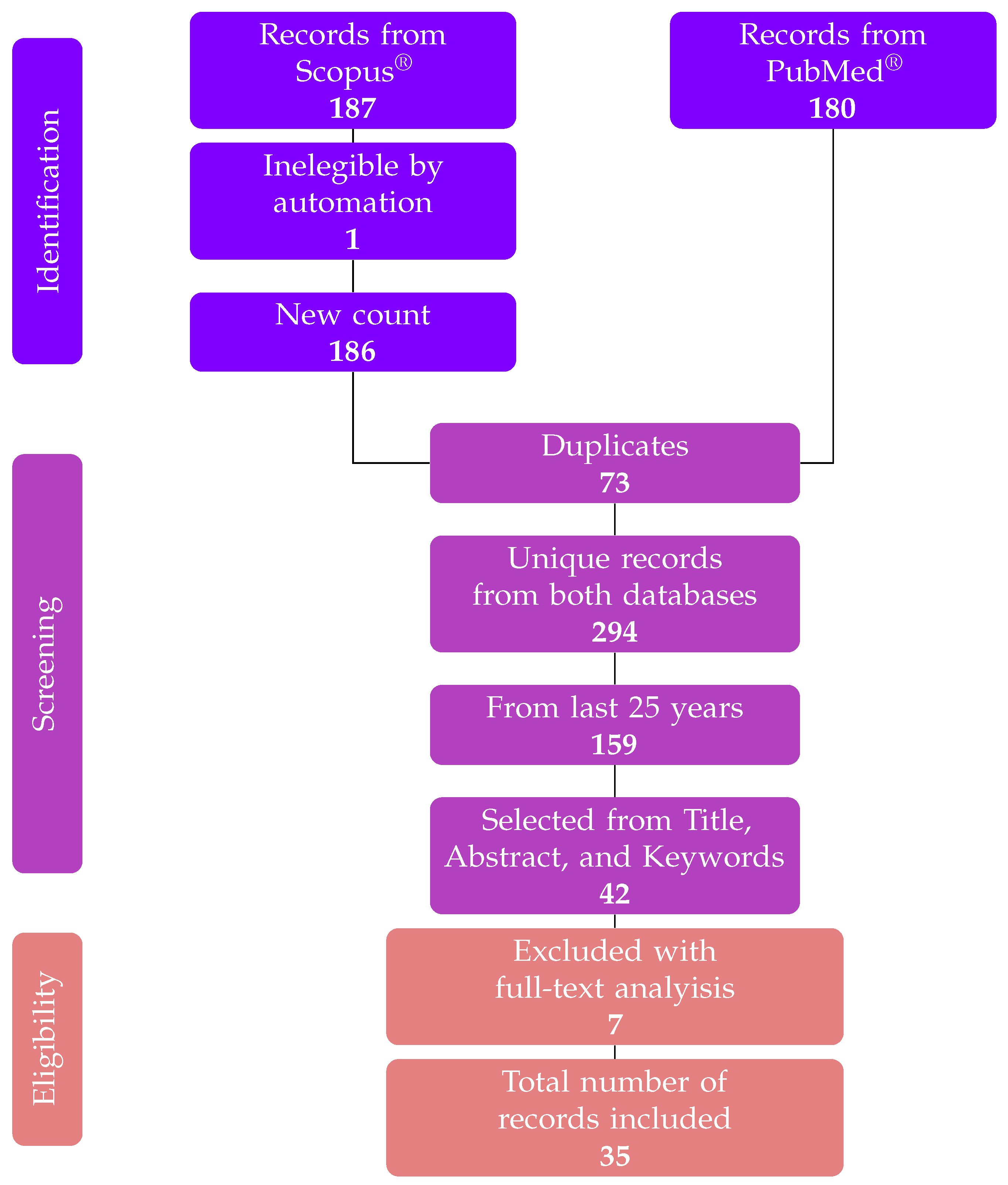

6. Discussion

The primary objective of this systematic review is to gather and analyze articles that propose haptic treatments or design solutions for the hearing-impaired population. In our methodology we detail the sampling approach, and in the subsequent section we evaluate the 35 identified papers published between 2000 and 2023.

Two central themes underpin our exploration: training and gamification. To include all relevant literature where haptic technology intersects with gamification for hearing-impaired individuals, specific keywords such as game and gamification were introduced. The search in this particular domain yielded a limited number of papers. Despite the interest from industry and academia in video games equipped with vibrotactile feedback [70], the literature reporting their application to enhance the experience for the hearing-impaired population appears to be sparse. In our research, only three articles directly addressed gamification aspects in their design processes [46,47,62]. Cano et al. [46] focused on a table game for children aged 7 to 11 with hearing impairment. The game board and cards are the principal means of engagement. Additionally, a smartphone provides visual and vibrotactile feedback by reading QR codes on the physical interface. However, the vibrotactile feedback is limited: it offers a buzz-like sensation only when a child’s answer is incorrect and plays a secondary role, since the smartphone screen simultaneously displays a corresponding sad face emoticon. The second paper [47] introduces a mobile app that offers vibrotactile feedback in response to a drumming gesture detected by the smartphone. The interaction and feedback are described clearly, but the study places little emphasis on the gaming aspect, even though the keyword game is included. The final paper addressing gamification is authored by Evreinov et al. [62]. In this work, the authors showcase a pen that provides vibrotactile feedback when connected to a pocket PC. The primary objective of the vibrotactile feedback in this context is to convey tactile icons (i.e., tactons [71]) to deaf or blind users during their interaction with two video games designed for this specific purpose. Bringing these considerations together, we noticed a gap in the literature regarding games and haptics for the hearing impaired, making this a valuable path to investigate in the future.

Figure 2 reveals a growing interest in haptics applied to training for the hearing impaired. This can be paired with the increase in the number of new systems for music applications for the same target population [8]. The majority of papers focus on the effectiveness of the developed rehabilitative method, as stated in Section 5.2.2. Seventeen out of 27 studies (62.96%) measure the user’s accuracy on a specific task, which is the most recurrent measurement, as reported in Figure 7. The remaining ones rely on psychophysical measurements (such as two-interval forced choice scores), speech comprehension evaluations, or qualitative observations. Conversely, all the studies that collected data regarding task accuracy have effectiveness as a research focus, except for three [12,52,62]. This finding can be read as evidence of a shared methodological construction: the experimental design of a rehabilitative or training method includes the definition of a task whose outcome serves as a measurable quantity that can be used as a metric for training effectiveness.

We relate the almost equal partition in Figure 8 to some of the observed themes’ main patterns. For the target population, we note that there are seven studies involving blind participants in which vibrotactile technology was used for sensory substitution; only two used it for sensory augmentation. It is reasonable to think that absence of sight drives this design choice. Conversely, all three studies involving users with cochlear implants use haptics for sensory augmentation. Cochlear implant users receive a new electrical stimulation to their auditory nerve that gives them a mode of perception; making it multisensory could be a way to acquaint them with hearing. We note that sensory substitution and augmentation have a symmetric distribution concerning the main trends in vibrotactile processing in Figure 13. Among the studies using the augmentation approach, four used temporal envelope and seven synthetic generation; in the other group, six used temporal envelope, and only three generated vibrotactile stimulation synthetically. Even if distributed almost uniformly, sensory substitution leans toward creating the vibrotactile stimulus from scratch; sensory augmentation tends to use a temporal envelope perceivable by the other senses.

Determining the placement of vibrotactile stimuli is crucial for achieving the intended outcomes. The sensitivity of our body to haptic stimuli varies considerably across body sites, and taking this into account is pivotal for good design. From Figure 9 it can be seen that most devices deliver haptic feedback to hands, palms, and fingertips. This is because these areas are rich in mechanoreceptors such as Pacinian and Meissner corpuscles, which are crucial for perceiving vibrotactile stimulation [72]. Notably, it has been demonstrated that even in the early stages of human life, during infants’ exploration, we commonly rely on our hands and fingers to make sense of our surroundings. This tactile exploration allows humans to discern objects’ shapes, textures, and temperatures, even before having the ability to investigate them visually [73]. Another area of the body used in the selected manuscripts is the wrist. This is a more convenient area for receiving haptic feedback while conducting other activities, since the hands are left free to perform other tasks. The drawbacks are the presence of body hair, which affects sensitivity, and clothes that might interfere with the experience. It is worth mentioning that in the article by Tufatulin et al. [45], the researchers used a loudspeaker to convey a full-body haptic feedback experience through water, using it as a medium to provide a multisensory experience that combines sound and vibrations to improve children’s hearing activation after hearing aid or cochlear implantation.

As observed in Section 5.3.3, a variety of mappings have been explored. However, more than 50% of these studies utilized vibrations derived from sound stimuli (sound-vibrotactile mapping). This finding is unsurprising given the well-established connection between auditory and tactile modalities in the literature [74], as these two senses show good potential when working together and present some close interactions [75]. When we look at perceptual aspects, such as the different sensitivities and thresholds of frequency perception for tactile and auditory channels, we can observe similar integration, masking, gap detection, and just noticeable difference (JND) effects [76]. From a practical standpoint, sound-to-vibrotactile mapping proves technically convenient, as it often allows direct feeding of sounds within the audio range (20–20,000 Hz). Since humans have limited tactile capabilities compared to hearing (e.g., reduced frequency spectrum and resolution [76]), Digital Signal Processing (DSP) techniques are often applied to the input sound stimuli to extract specific features such as the fundamental frequency (F0), harmonics, and temporal envelope. This way, the vibrotactile stimulation can emphasize certain aspects of the sound input while omitting secondary ones, aligning with the research objectives and tactile capabilities. In Figure 13, we can observe that one of the most common approaches involves extracting the temporal envelope from sound signals and applying it to the vibrotactile signal (that could, for instance, be generated using a synthesis method). If we focus on the articles that employed the sound-vibrotactile mapping, a remarkable pattern emerges: all of them administered vibrotactile stimuli to either the fingertip, the palm, or the whole hand, capitalizing on the high sensitivity of these body parts to vibrations [72]. Furthermore, stimulating the hand or fingertip requires minimal preparation from the participants, often eliminating the need for additional garments or wearable equipment that might increase the task duration and discomfort. Out of the 19 studies applying the sound-vibrotactile mapping, nine present positive statistically significant results, and an additional six show more complex results with negative and positive outcomes [51,57,60,61,64,65]. These outcomes are tightly linked with both the design choices and the characteristics of the participants. Upon examining individual experiments, a common trend emerges in eight of the 14 studies that reported a positive or statistically significant result: the temporal envelope processing technique. This technique involves extracting the amplitude of sound stimuli over time and applying it to the vibrotactile signal, aiming at a clear amplitude correlation between the two. Summarizing these findings, one could argue that employing sound-vibrotactile mapping with temporal envelope processing techniques and delivering this stimulus to the hand (or fingertip or palm) may result in positive and statistically significant outcomes.
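To make the temporal envelope technique described above more concrete, the following is a minimal sketch and not the processing chain of any reviewed study. It assumes one simple envelope estimator (full-wave rectification followed by a one-pole low-pass filter) and a fixed sine carrier; the function names, the 20 Hz smoothing cutoff, and the 250 Hz carrier are illustrative assumptions.

```python
import math

def temporal_envelope(signal, sample_rate, cutoff_hz=20.0):
    """Estimate the amplitude envelope: full-wave rectify, then smooth.

    The 20 Hz cutoff is an assumed value chosen for illustration only.
    """
    # Coefficient of a one-pole low-pass filter with the given cutoff.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    envelope, state = [], 0.0
    for sample in signal:
        # Move the filter state a fraction of the way toward |sample|.
        state += alpha * (abs(sample) - state)
        envelope.append(state)
    return envelope

def vibrotactile_signal(signal, sample_rate, carrier_hz=250.0, cutoff_hz=20.0):
    """Amplitude-modulate a fixed sine carrier with the sound's envelope.

    The 250 Hz carrier is an assumption, not a value taken from the review.
    """
    env = temporal_envelope(signal, sample_rate, cutoff_hz)
    return [e * math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
            for n, e in enumerate(env)]

# Example input: 0.25 s of silence followed by 0.25 s of a 1 kHz tone.
SAMPLE_RATE = 8000
tone = [0.0 if n < 2000 else math.sin(2.0 * math.pi * 1000.0 * n / SAMPLE_RATE)
        for n in range(4000)]
drive = vibrotactile_signal(tone, SAMPLE_RATE)
```

In this sketch the 1 kHz content of the input never reaches the actuator directly; only its slowly varying amplitude does, carried by a frequency that an actuator can reproduce. Other envelope estimators (for example, one based on the analytic signal) would fit the same structure.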

Moving to the device choice, we can observe that almost every article adopts a unique approach. The most common device is the smartphone [38,44,47,54,58], given its near-ubiquity; with most people owning one or at least being familiar with it, smartphones serve as convenient and portable tools for training and enhancing experiences. However, the compact size of smartphones comes with some drawbacks, particularly concerning vibrotactile performance. Due to their small form factor, the actuators in these devices must also be small, resulting in reduced frequency performance. Additionally, the design focus for the vibrotactile experience on smartphones has consistently prioritized conveying simple messages or notifications rather than complex sounds. To reduce costs and keep them as compact as possible, the majority of smartphones are equipped with eccentric rotating mass (ERM) actuators that usually operate on one single frequency (resonant frequency) [77]. Furthermore, using such devices in this field introduces significant challenges in controlling potentially confounding variables that are typically less pronounced with controlled laboratory settings and equipment, hence complicating experiments and evaluations and reducing their reliability. A contrasting approach is evident in the studies by Fletcher et al. [10,14,48,50], where electrodynamic shakers are employed to convey vibrations through a complex and high-fidelity piece of equipment. Specifically, electrodynamic shakers are closely linked to voice-coils and find extensive use in industrial applications. Using this method, the HVLab device reproduces the input signal with good quality, covering a frequency range of 16 to 500 Hz with a low tolerance for frequency deviation (<0.1%). Given the variety of tools and devices available, researchers should exercise caution when choosing an actuator technology, bearing in mind that each has its pros and cons. Broadly, two major categories can be distinguished: piezoelectric, ERM, and linear moving-magnet actuators favor small size and low cost, whereas electromagnetic vibrators, voice-coils, loudspeakers, and inertial transducers emphasize high-quality performance.
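One way to reason about the actuator constraints discussed above is to estimate how much of a stimulus lies inside the band a device can reproduce. The sketch below is only an illustration under stated assumptions: `band_energy_fraction` is a hypothetical helper, it uses a naive discrete Fourier transform that is suitable only for short signals, and the 16 to 500 Hz band is borrowed from the shaker range quoted in the text purely as an example.

```python
import cmath
import math

def band_energy_fraction(signal, sample_rate, low_hz, high_hz):
    """Fraction of non-DC signal energy between low_hz and high_hz.

    Hypothetical helper using a naive O(n^2) DFT; short signals only.
    """
    n = len(signal)
    total = in_band = 0.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins, DC skipped
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        # Every bin except Nyquist has a mirror image at negative frequency.
        weight = 1.0 if 2 * k == n else 2.0
        power = weight * abs(coeff) ** 2
        total += power
        if low_hz <= k * sample_rate / n <= high_hz:
            in_band += power
    return in_band / total if total > 0.0 else 0.0

# Example stimulus: equal-amplitude components at 100 Hz and 800 Hz.
SAMPLE_RATE = 2000
stimulus = [math.sin(2.0 * math.pi * 100.0 * i / SAMPLE_RATE)
            + math.sin(2.0 * math.pi * 800.0 * i / SAMPLE_RATE)
            for i in range(400)]
fraction = band_energy_fraction(stimulus, SAMPLE_RATE, 16.0, 500.0)
```

A low fraction would suggest that the stimulus needs preprocessing, such as the feature extraction described earlier, before being sent to that actuator. For longer signals, a fast Fourier transform from a numerical library would be the practical choice.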

Our research has unveiled haptic solutions that have evolved over the years, often utilizing unique vibrotactile processing techniques tailored to specific devices, as shown in Section 5.4.1 and Section 5.4.3. The lack of documentation on both hardware and software for the patented solutions generated issues concerning transparency and replicability. The lack of standardization of processing (Section 5.4.3) and device technology raises concerns about the generalizability of the findings to broader user populations. This issue becomes even more evident when considering the target population (Section 5.5.1).

The retrieved data reveal a significant disparity in the participants involved in these experiments: most studies either include a limited number of individuals with the target impairment, or simulate impairments by depriving people of one or more senses. Thirteen publications present more than eight participants with impairments (above the mean of the whole study group; see Figure 14), and ten of these studies declared an affiliation with a hospital or collaboration with a school, health institution, or association for impaired individuals [14,40,45,48,51,54,56,59,60,63,64,65]. While recognizing the substantial challenges in the recruitment process, particularly within minority groups, we recommend that researchers establish close collaborations with hospitals, schools, and care centers to access a more diverse and representative population. Working closely within a clinical environment can also shed light on challenges that might not be apparent to academics alone. This collaborative approach can foster a better understanding of the real-world needs and experiences of the hearing-impaired population, ultimately leading to more effective haptic solutions.

A consistent pattern emerges when filtering the included articles to focus on those with positive statistically significant outcomes involving impaired individuals. All six studies meeting these criteria have been published within the last eleven years and employed sound-to-vibrotactile feedback mapping. Looking into the details, four of the six articles applied haptic technology to enhance another sensory modality by applying vibrotactile stimulation on the wrist [14,40,48] or the full body, as observed by Nanayakkara et al. [56]. The remaining two studies used vibrations on other body parts for sensory substitution [11,54]. In three of the six, the vibrotactile processing techniques utilized temporal envelope-based methods [14,48,54]; of the other three, one applied the full sound [56] and one generated synthetic stimuli [11]. Since Daza Gonzalez et al. [40] utilized the Lofelt bracelet, the specifics of the DSP method for the vibrotactile generation were not disclosed.

Considering the studies with either no training or only a brief training experience before exposing the participants to the experiment (pre-test), we observed no relevant pattern relating training length to the statistical significance of the results. Nine studies reported no significant results, whereas seven studies did.

In conclusion, the evidence that all the significant positive outcomes involved a sound-to-vibrotactile feedback mapping confirms the long-standing idea that the multiple perceptual aspects connected to sound are transmittable through touch. Thus, a sensory substitution of this type is a viable solution for hearing-impaired rehabilitation and training.

8. Conclusions and Future Research

This systematic review compiles a set of papers exploring the integration of haptic feedback in training and gamification protocols to enhance the auditory experience for individuals with hearing impairments. We initially identified 294 articles from two prominent databases using relevant keywords. After careful screening and eligibility checks, we included 35 manuscripts in our analysis. Our examination primarily centers on study design, hardware and software solutions, training protocols, and the resulting test outcomes. Finally, we derive insights from the findings to provide recommendations for future researchers and designers.

Within the literature review, we observed a notable scarcity of studies addressing games and haptics for hearing-impaired individuals, underlining the urgency for further exploration in this critical area. Furthermore, those that delved into the topic often had a limited focus, either on vibrotactile or gamification aspects, leaving the combination relatively unexplored.

A noteworthy discovery is a consensus on targeting hands and wrists with haptic feedback alongside temporal envelope-processed sound, yielding positive and statistically significant results. This presents a promising avenue for future research. In contrast, the diverse array of devices conveying vibrotactile feedback adds complexity, making it challenging to establish clear correlations between treatment administration and observed outcomes.

We emphasize the importance of conducting research in real-world, ecologically valid environments, collaborating closely with end-users, rather than confining studies solely to controlled laboratory settings. While acknowledging the challenges of field research, we contend that testing in real-world scenarios offers a more accurate understanding of the practical challenges and benefits experienced by hearing-impaired individuals with haptic solutions. Inspired by the diversity of design choices for training programs (see Section 5.5.2), we believe that combining qualitative assessments with quantitative data can provide a more comprehensive understanding of this multifaceted sensory domain and a richer interpretation of the results.

Referring to the results in Section 5.6.2, it is crucial to note that several papers were excluded from our analysis due to a lack of statistically significant quantitative findings, attributed to a low participant count. This challenge can be addressed by designing studies involving organizations and hospitals, thereby ensuring a more extensive population to collaborate with and emphasizing the importance of qualitative results alongside quantitative ones.

As a final remark for future research, we recommend exploring more engaging technologies tailored to the younger population. While researchers and industries have developed immersive technologies over the past decade, it is noteworthy that previous studies emphasize the importance of clinical environments [78]. However, there is a limited inclusion of haptic feedback in immersive technology specifically designed for hearing-impaired individuals, with only a few examples found in the literature [79]. Therefore, we propose further investigation into the potential benefits of immersive experiences coupled with haptic feedback for this demographic.