How to Design Human-Vehicle Cooperation for Automated Driving: A Review of Use Cases, Concepts, and Interfaces

Abstract

1. Introduction

2. Background and Related Work

2.1. Strategies and HMIs for Driver–Vehicle Cooperation

2.2. Existing Frameworks and Taxonomies for Driver–Vehicle Cooperation

2.3. Human-Centered Cooperation Perspective

3. Research Questions and Aim of the Paper

- RQ 1: Which elements define the cooperation use case in a cooperative interaction?

- RQ 2: Which elements define the cooperation frame in a cooperative interaction?

- RQ 3: Which elements describe the characteristics of the HMI in a cooperative interaction?

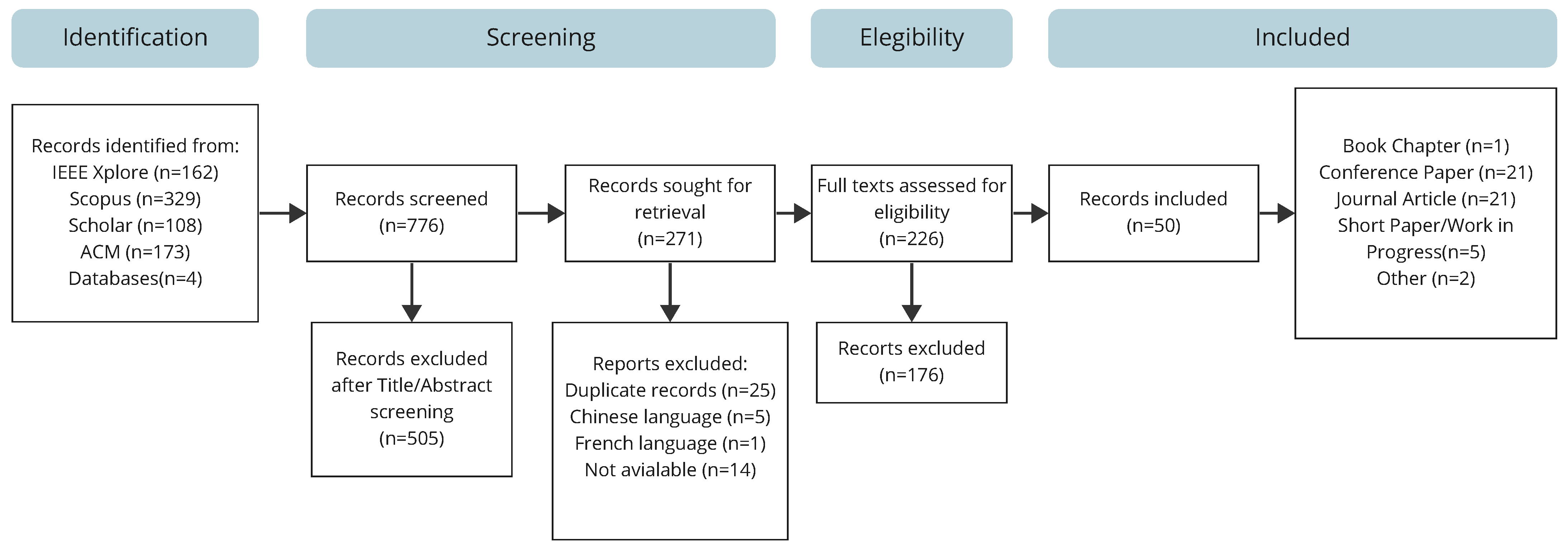

4. Materials and Methods

4.1. Literature Survey

4.1.1. Identification

- ACM Digital Library: ((“cooperat*” OR “collaborat*” OR “shared control”) AND (“human” OR “user” OR “driver”) AND (“vehicle” OR “driving”))

- Scopus: TITLE-ABS (“cooperat*” OR “collaborat*” OR “shared control”) AND TITLE-ABS (“human” OR “user” OR “driver”) AND TITLE-ABS (“vehicle” OR “driving”) AND KEY (“human–computer interaction” OR “human–machine interaction” OR “human automation interaction”)

- IEEE Xplore: ((“cooperat*” OR “collaborat*” OR “shared control”) AND (“human” OR “user” OR “driver”) AND (“vehicle” OR “driving”) AND (“human–computer interaction” OR “human–machine interaction” OR “human automation interaction”))

- Google Scholar: (human | driver | user | driving | agent) intitle: (car | vehicle | automation) intitle:(cooperation | collaboration|cooperative | collaborative) AND (“human–computer interaction” | “human–machine interaction” | “human automation interaction”)
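The boolean search strings above share the same term groups, so they can be assembled programmatically. The following is a minimal sketch, not the authors' tooling: the helper names `or_group` and `and_query` are hypothetical, and straight quotes are used instead of the typographic quotes shown in the listing.

```python
# Term groups taken from the search strings listed above.
COOPERATION = ["cooperat*", "collaborat*", "shared control"]
HUMAN = ["human", "user", "driver"]
VEHICLE = ["vehicle", "driving"]
HCI = [
    "human–computer interaction",
    "human–machine interaction",
    "human automation interaction",
]


def or_group(terms):
    """Quote each term and join the terms with OR inside parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def and_query(*groups):
    """Join several OR-groups with AND and wrap the result in parentheses."""
    return "(" + " AND ".join(or_group(g) for g in groups) + ")"


# Hypothetical reconstruction of the ACM and IEEE Xplore style queries.
acm_query = and_query(COOPERATION, HUMAN, VEHICLE)
ieee_query = and_query(COOPERATION, HUMAN, VEHICLE, HCI)
print(acm_query)
```

Database-specific field codes (such as `TITLE-ABS` and `KEY` in Scopus) are not modeled here and would have to be added per database.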

4.1.2. Screening

- The research must be about human–automation cooperation/collaboration

- The research must be about human–vehicle interaction

- The research must be about cooperative driving use cases, concepts, or interfaces

- The research must be peer-reviewed
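The four inclusion criteria act as a conjunctive filter: a record is kept only if it satisfies all of them. A minimal sketch of such a filter, assuming a hypothetical `Record` structure whose field names are illustrative and not taken from the paper:

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical screening record; the field names are illustrative."""
    title: str
    human_automation_cooperation: bool
    human_vehicle_interaction: bool
    cooperative_driving_focus: bool  # use cases, concepts, or interfaces
    peer_reviewed: bool


def passes_screening(record: Record) -> bool:
    """Keep a record only if it meets all four inclusion criteria."""
    return all(
        [
            record.human_automation_cooperation,
            record.human_vehicle_interaction,
            record.cooperative_driving_focus,
            record.peer_reviewed,
        ]
    )


# Made-up example records, used only to exercise the filter.
candidates = [
    Record("Example A", True, True, True, True),
    Record("Example B", True, True, True, False),  # not peer-reviewed
]
included = [r.title for r in candidates if passes_screening(r)]
print(included)
```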

4.1.3. Clustering and Categorization

- What type of paper is it?

- What was the main research question of the paper?

- Which method was used in the paper?

- What was the main metric taken in the experiment (if applicable)?

- What is the main contribution of the paper?

4.2. Taxonomy Development and Validation

4.2.1. Development

4.2.2. Initial Validation

4.2.3. Exemplary Application

5. Results

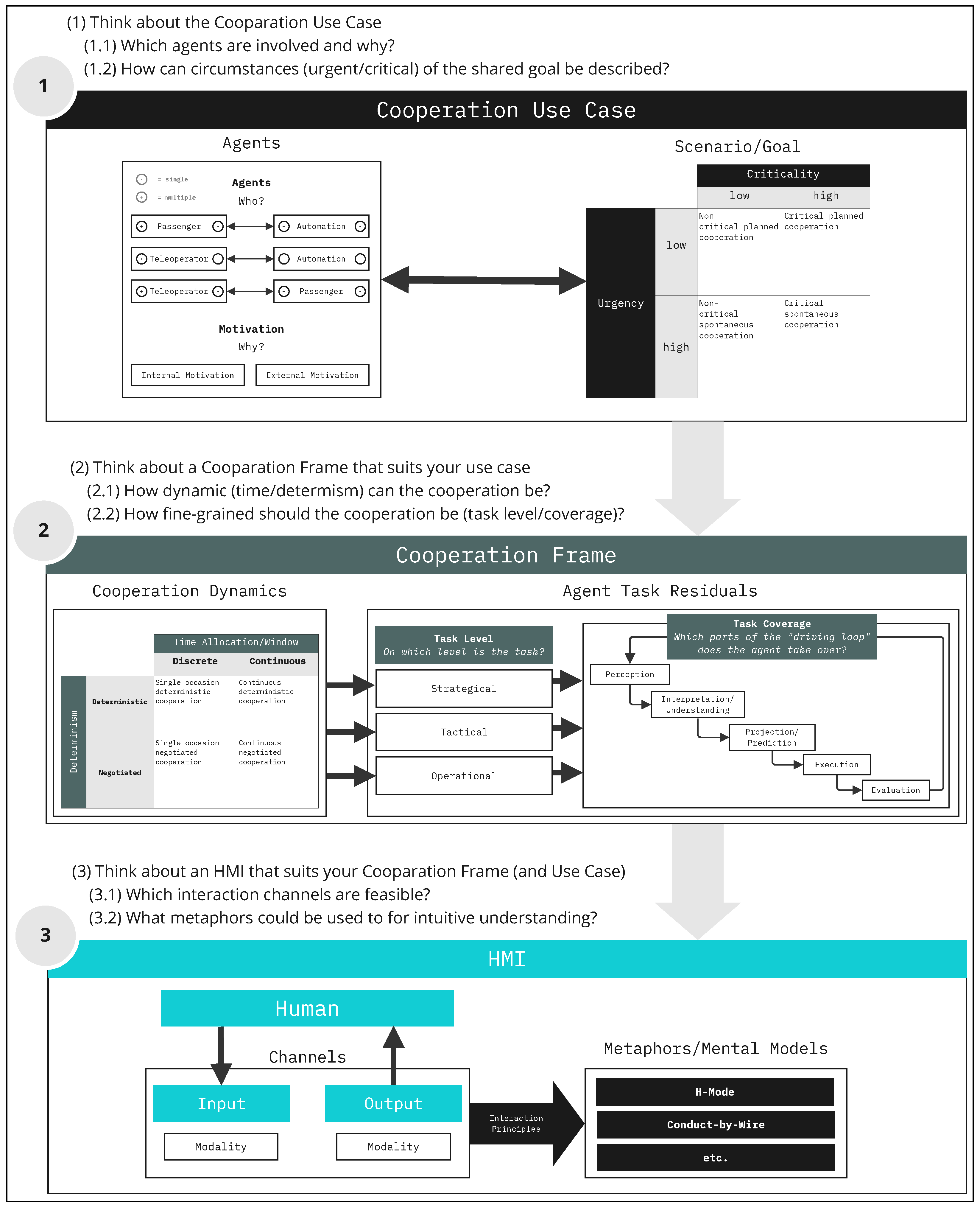

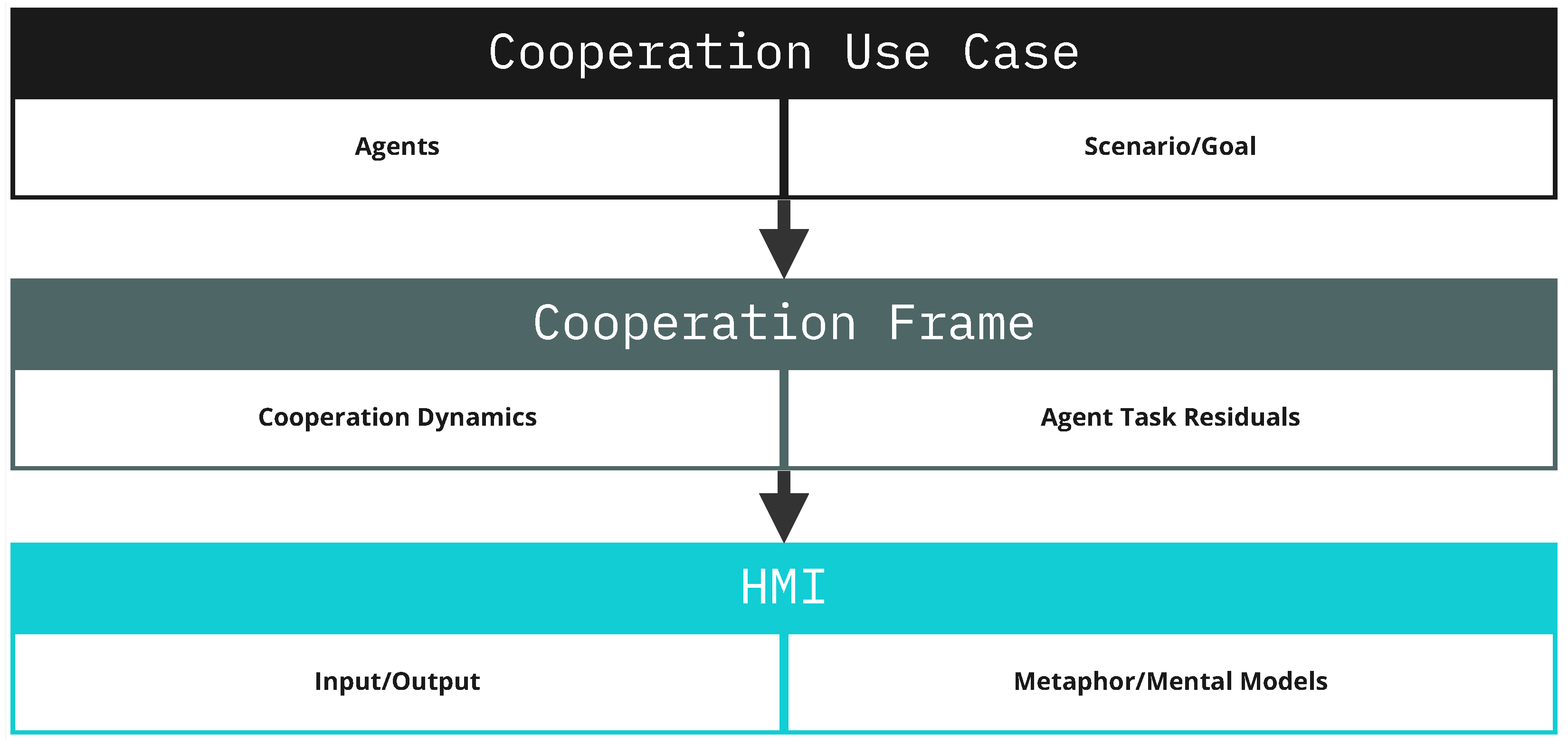

- Identifying the concrete cooperation use case(s);

- from that, designing the cooperation task distribution concept for the use case(s); and then

- designing a feasible HMI that allows the humans involved in the cooperation to fulfill their share of the task in a usable manner.

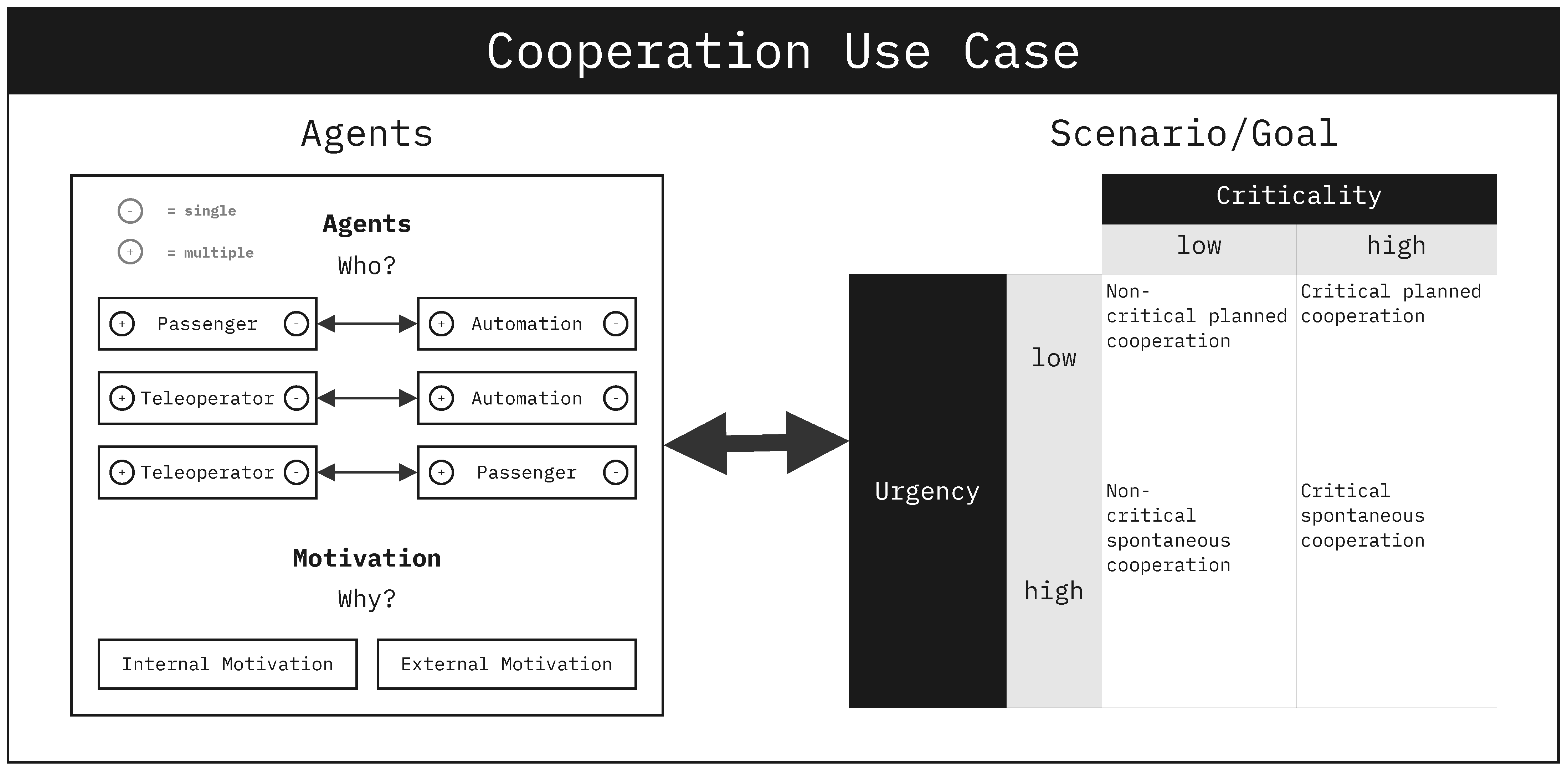

5.1. Cooperation Use Case

5.1.1. Agents

Co-Agents

Motivation

5.1.2. Scenario

Criticality and Urgency

- Non-critical planned cooperation: tasks that are neither urgent nor critical. An example would be a passenger request for a change in the automation’s driving style.

- Non-critical spontaneous cooperation: tasks that are non-critical but require sudden action. An example would be a passenger who must choose between route alternatives with limited time.

- Critical planned cooperation: tasks that are not urgent but have high criticality. An example would be the passenger deciding on long-term strategies.

- Critical spontaneous cooperation: tasks that are urgent and critical. An example would be the passenger choosing maneuvers for the automation to execute.
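The four classes above result from crossing two binary dimensions, criticality and urgency. A minimal sketch of that mapping, assuming both dimensions can be treated as simple booleans (the names `CooperationType` and `classify` are hypothetical):

```python
from enum import Enum


class CooperationType(Enum):
    NON_CRITICAL_PLANNED = "non-critical planned"
    NON_CRITICAL_SPONTANEOUS = "non-critical spontaneous"
    CRITICAL_PLANNED = "critical planned"
    CRITICAL_SPONTANEOUS = "critical spontaneous"


def classify(critical: bool, urgent: bool) -> CooperationType:
    """Map the two binary dimensions onto the four cooperation classes."""
    table = {
        (False, False): CooperationType.NON_CRITICAL_PLANNED,
        (False, True): CooperationType.NON_CRITICAL_SPONTANEOUS,
        (True, False): CooperationType.CRITICAL_PLANNED,
        (True, True): CooperationType.CRITICAL_SPONTANEOUS,
    }
    return table[(critical, urgent)]


# Examples from the list above: a driving-style request (neither urgent
# nor critical) and a time-limited choice between route alternatives.
print(classify(critical=False, urgent=False).value)
print(classify(critical=False, urgent=True).value)
```

In practice both dimensions are likely to be graded rather than binary, so the boolean inputs are a simplification.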

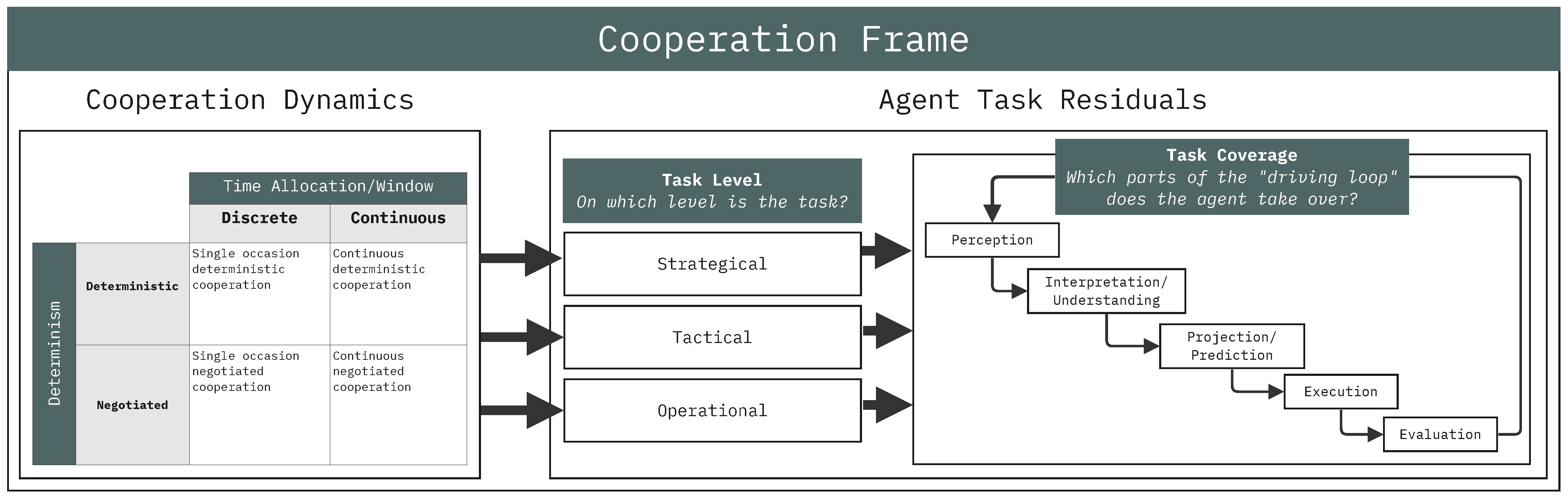

5.2. Cooperation Frame

5.2.1. Cooperation Dynamics

Determinism

Time Allocation/Window

- Single occasion deterministic cooperation: An agent takes over a “master” role in one discrete interaction. For the sub-task they take over in this instance, they are the decision authority. The other co-agents revert to subordinate roles for the duration of this single interaction.

- Single occasion negotiated cooperation: Multiple agents negotiate a cooperative action on one occasion. In this instance, no single co-agent necessarily takes over the “master” role for the cooperation. The co-agents need a negotiation strategy, defined beforehand, to come to a conclusion.

- Continuous deterministic cooperation: The co-agents work together over a longer period of time, but the individual interactions are discrete (e.g., choosing maneuvers which the automation should execute). In each of these repeated single interactions, one co-agent takes over a “master” role, and there is no negotiation between the co-agents. An example is continuous maneuver-based cooperation: in Conduct-by-Wire, the driver can override the automation’s intentions by choosing maneuver commands.

- Continuous negotiated cooperation: In this case, multiple co-agents continuously negotiate to reach a cooperative decision. None of the agents takes over the primary “master” role to override the decisions of the other agents. One example of this is Haptic Shared Control (HSC). In HSC, the driver and the automation engage in continuous cooperation on the operational level (multiple agents partially in control). The trajectory of the vehicle is negotiated through the forces that the human and the automation apply to the steering wheel at the same time. In this way, both agents communicate their intentions, and the outcome of the negotiation is the resulting combined trajectory.
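The four dynamics classes combine the time window (single occasion or continuous) with determinism (deterministic or negotiated). The sketch below encodes that combination and adds a deliberately simplified toy model of Haptic Shared Control in which the human and automation torques on the steering wheel simply add up. Both the names and the additive torque model are assumptions made for illustration; real HSC controllers involve steering dynamics and authority adaptation that are not modeled here.

```python
from enum import Enum


class Dynamics(Enum):
    SINGLE_DETERMINISTIC = "single occasion deterministic"
    SINGLE_NEGOTIATED = "single occasion negotiated"
    CONTINUOUS_DETERMINISTIC = "continuous deterministic"
    CONTINUOUS_NEGOTIATED = "continuous negotiated"


def classify_dynamics(continuous: bool, negotiated: bool) -> Dynamics:
    """Combine the time window and determinism dimensions into one class."""
    table = {
        (False, False): Dynamics.SINGLE_DETERMINISTIC,
        (False, True): Dynamics.SINGLE_NEGOTIATED,
        (True, False): Dynamics.CONTINUOUS_DETERMINISTIC,
        (True, True): Dynamics.CONTINUOUS_NEGOTIATED,
    }
    return table[(continuous, negotiated)]


def net_steering_torque(human_torque: float, automation_torque: float) -> float:
    """Assumed toy model: both torques act on the same wheel and add up."""
    return human_torque + automation_torque


# Haptic Shared Control falls into the continuous negotiated class.
print(classify_dynamics(continuous=True, negotiated=True).value)
# Opposing torques partially cancel; the net torque is the "negotiated" result.
print(net_steering_torque(2.0, -0.5))
```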

5.2.2. Agents Task Residuals

Task Level

- Long-term tasks: navigation and route planning (strategical).

- Mid-term tasks: maneuver planning and execution (tactical).

- Short-term tasks: trajectory planning and following (operational).

Task Coverage

Example

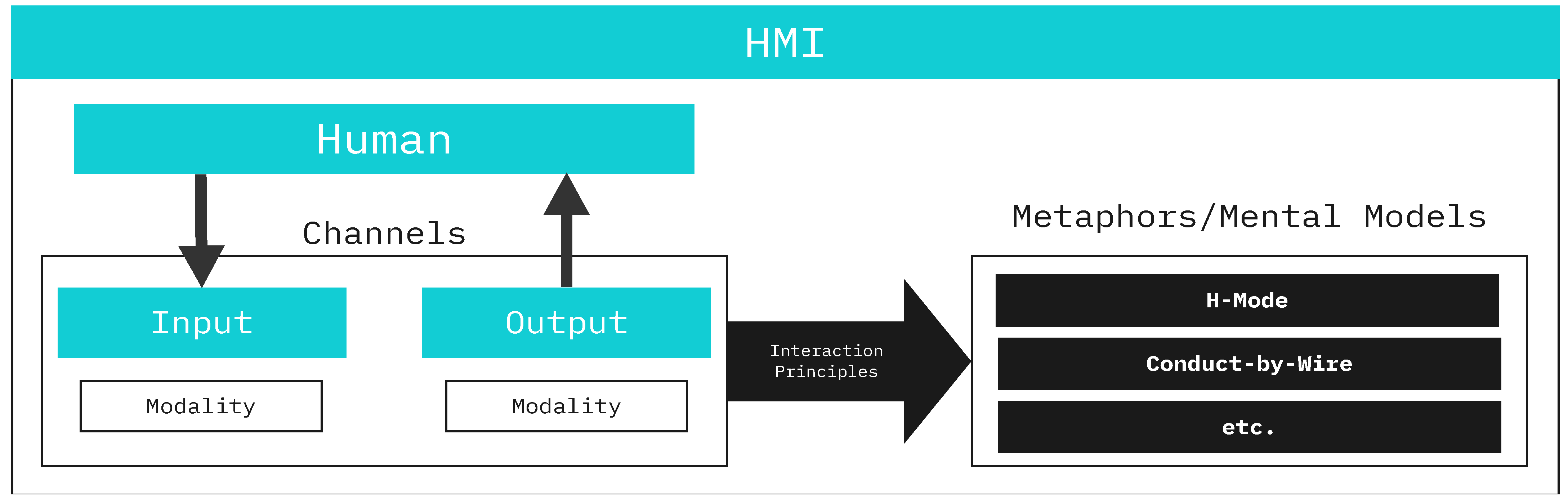

5.3. Human–Machine Interface

5.3.1. Input and Output

5.3.2. Metaphors/Mental Models

6. Application

6.1. Exemplary Application

6.1.1. Step 1: Use Case Classification

6.1.2. Step 2: Cooperation Frame Classification

6.1.3. Step 3: HMI Classification

6.2. Categorization of Related Literature

7. Discussion

7.1. Comparison to Existing Frameworks and Taxonomies

7.2. The Cooperation Use Case

7.3. Applicability

- In-depth analysis of existing cooperative HMI concepts

- Classification and comparative analysis of existing literature on cooperative driving

- The iterative development of use cases, cooperation frameworks, and HMIs through a bottom-up approach for experimental studies

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Source | Was There a Clear Statement of the Aims of the Research? | Is the Methodology Appropriate? | Was the Research Design Appropriate to Address the Aims of the Research? | Was the Recruitment Strategy Appropriate to the Aims of the Research? | Were the Data Collected in a Way that Addressed the Research Issue? | Has the Relationship between Researcher and Participants Been Adequately Considered? | Have Ethical Issues Been Taken into Consideration? | Was the Data Analysis Sufficiently Rigorous? | Is There a Clear Statement of Findings? | Is the Research Valuable? |
|---|---|---|---|---|---|---|---|---|---|---|
| [26] | y | y | y | ? | y | n.a. | n.a. | y | y | y |
| [21] | y | y | y | y | y | ? | ? | y | y | y |
| [40] | y | y | y | y | y | y | ? | y | y | y |
| [37] | y | y | y | y | y | y | ? | y | y | y |
| [41] | y | y | y | y | y | y | y | y | y | y |
| [34] | y | y | y | y | y | y | ? | y | y | y |
| [20] | y | y | y | ? | y | ? | ? | y | y | y |
| [36] | y | y | y | ? | y | ? | y | y | y | y |
| [38] | y | y | y | y | y | ? | ? | y | y | y |
| [42] | y | y | y | ? | y | ? | ? | y | y | y |
| [35] | y | y | y | ? | y | ? | y | y | y | y |
| [39] | y | y | y | n.a. | n.a. | n.a. | n.a. | n.a. | y | y |
| [41] | y | y | y | n.a. | n.a. | n.a. | n.a. | n.a. | y | y |
| [61] | y | y | y | n.a. | n.a. | n.a. | n.a. | y | y | y |
| [62] | y | y | y | y | y | ? | y | y | y | y |
| [65] | y | y | y | y | y | ? | ? | y | y | y |
| [67] | y | y | y | ? | y | ? | ? | y | y | y |
| [69] | y | y | y | ? | y | ? | ? | y | y | y |
| [63] | y | y | y | n.a. | y | n.a. | ? | y | y | y |
| [64] | y | y | y | y | y | ? | y | y | y | y |
| [66] | y | y | y | n.a. | n.a. | n.a. | n.a. | y | y | y |
| [55] | y | y | y | y | y | ? | y | y | y | y |
| [70] | y | y | y | n.a. | n.a. | n.a. | n.a. | n.a. | y | y |
| [73] | y | y | y | ? | y | ? | ? | y | y | y |
| [77] | y | y | y | ? | y | ? | ? | y | y | y |
| [43] | y | y | y | ? | y | ? | ? | y | y | y |
| [73] | y | y | y | y | y | ? | ? | y | y | y |
| [71] | y | y | y | ? | y | ? | ? | y | y | y |
| [72] | y | y | y | ? | y | ? | ? | y | y | y |
| [75] | y | y | y | y | y | ? | ? | y | y | y |
| [76] | y | y | y | ? | y | ? | ? | y | y | y |
| [48] | y | y | y | ? | y | ? | ? | y | y | y |

| Source | Was There a Clear Statement of the Aims of the Research? | Is the Methodology Appropriate? | Was the Research Design Appropriate to Address the Aims of the Research? | Was the Recruitment Strategy Appropriate to the Aims of the Research? | Were the Data Collected in a Way that Addressed the Research Issue? | Has the Relationship between Researcher and Participants Been Adequately Considered? | Have Ethical Issues Been Taken into Consideration? | Was the Data Analysis Sufficiently Rigorous? | Is There a Clear Statement of Findings? | Is the Research Valuable? |
|---|---|---|---|---|---|---|---|---|---|---|
| [45] | y | y | y | ? | y | ? | ? | y | y | y |
| [47] | y | y | y | y | y | y | y | y | y | y |
| [49] | y | y | y | y | y | y | y | y | y | y |
| [22] | y | y | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | y | y |
| [50] | y | y | y | ? | y | ? | ? | y | y | y |
| [52] | y | y | y | ? | y | ? | ? | y | y | y |
| [56] | y | y | y | y | y | ? | ? | y | y | y |
| [59] | y | y | y | y | y | ? | ? | y | y | y |
| [60] | y | y | y | ? | y | ? | ? | y | y | y |
| [46] | y | y | y | n.a. | y | n.a. | n.a. | y | y | y |
| [44] | y | y | y | n.a. | n.a. | n.a. | n.a. | n.a. | y | y |
| [51] | y | y | y | y | y | ? | ? | y | y | y |
| [53] | y | y | y | ? | y | ? | ? | y | y | y |
| [54] | y | y | y | n.a. | n.a. | n.a. | n.a. | n.a. | y | y |
| [55] | y | y | y | ? | y | ? | ? | y | y | y |
| [57] | y | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | n.a. | y | y |
| [58] | y | y | y | ? | y | ? | ? | y | y | y |

Appendix B

References

- Ground Vehicle Standard J3016_202104; Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. SAE International: Pittsburgh, PA, USA, 2021.

- Walch, M.; Sieber, T.; Hock, P.; Baumann, M.; Weber, M. Towards Cooperative Driving: Involving the Driver in an Autonomous Vehicle’s Decision Making. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 24–26 October 2016; pp. 261–268.

- Frison, A.K.; Wintersberger, P.; Riener, A. Resurrecting the ghost in the shell: A need-centered development approach for optimizing user experience in highly automated vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 439–456.

- Detjen, H.; Faltaous, S.; Pfleging, B.; Geisler, S.; Schneegass, S. How to Increase Automated Vehicles’ Acceptance through In-Vehicle Interaction Design: A Review. Int. J. Hum. Comput. Interact. 2021, 37, 308–330.

- Stiegemeier, D.; Kraus, J.; Baumann, M. Why drivers use in-vehicle technology: The role of basic psychological needs and motivation. Transp. Res. Part F Traffic Psychol. Behav. 2024, 100, 133–153.

- Kettwich, C.; Schrank, A.; Oehl, M. Teleoperation of highly automated vehicles in public transport: User-centered design of a human–machine interface for remote-operation and its expert usability evaluation. Multimodal Technol. Interact. 2021, 5, 26.

- Neumeier, S.; Wintersberger, P.; Frison, A.K.; Becher, A.; Facchi, C.; Riener, A. Teleoperation: The Holy Grail to Solve Problems of Automated Driving? Sure, but Latency Matters. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 21–25 September 2019; pp. 186–197.

- Guo, C.; Sentouh, C.; Haué, J.B.; Popieul, J.C. Driver–vehicle cooperation: A hierarchical cooperative control architecture for automated driving systems. Cogn. Technol. Work 2019, 21, 657–670.

- Flemisch, F.; Bengler, K.; Bubb, H.; Winner, H.; Bruder, R. Towards cooperative guidance and control of highly automated vehicles: H-Mode and Conduct-by-Wire. Ergonomics 2014, 57, 343–360.

- Ghasemi, A.H.; Johns, M.; Garber, B.; Boehm, P.; Jayakumar, P.; Ju, W.; Gillespie, R.B. Role Negotiation in a Haptic Shared Control Framework. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; pp. 179–184.

- Walch, M.; Colley, M.; Weber, M. Driving-Task-Related Human–Machine Interaction in Automated Driving: Towards a Bigger Picture. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, New York, NY, USA, 21–25 September 2019; pp. 427–433.

- Winner, H.; Hakuli, S. Conduct-by-Wire: Following a new paradigm for driving into the future. In Proceedings of the FISITA World Automotive Congress, Yokohama, Japan, 22–27 October 2006.

- Hoc, J.M. Towards a cognitive approach to human–machine cooperation in dynamic situations. Int. J. Hum. Comput. Stud. 2001, 54, 509–540.

- Pichen, J.; Stoll, T.; Baumann, M. From SAE-Levels to Cooperative Task Distribution: An Efficient and Usable Way to Deal with System Limitations? In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 9–14 September 2021; pp. 109–115.

- Wiegand, G.; Holländer, K.; Rupp, K.; Hussmann, H. The Joy of Collaborating with Highly Automated Vehicles. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 21–22 September 2020; pp. 223–232.

- Xing, Y.; Lv, C.; Cao, D.; Hang, P. Toward human-vehicle collaboration: Review and perspectives on human-centered collaborative automated driving. Transp. Res. Part C Emerg. Technol. 2021, 128, 103199.

- Flemisch, F.; Adams, C.; Conway, S.; Goodrich, K.; Palmer, M.; Schutte, P. The H-Metaphor as a Guideline for Vehicle Automation and Interaction; NASA/TM-2003-212672; NASA: Washington, DC, USA, 2003.

- Woide, M.; Miller, L.; Colley, M.; Damm, N.; Baumann, M. I’ve Got the Power: Exploring the Impact of Cooperative Systems on Driver-Initiated Takeovers and Trust in Automated Vehicles. In Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ingolstadt, Germany, 18–22 September 2023; pp. 123–135.

- Michon, J.A. A Critical View of Driver Behavior Models: What Do We Know, What Should We Do? In Human Behavior and Traffic Safety; Evans, L., Schwing, R.C., Eds.; Springer: Boston, MA, USA, 1985; pp. 485–524.

- Wang, C.; Krüger, M.; Wiebel-Herboth, C.B. “Watch Out!”: Prediction-Level Intervention for Automated Driving. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 21–22 September 2020; pp. 169–180.

- Wang, C.; Chu, D.; Martens, M.; Krüger, M.; Weisswange, T.H. Hybrid Eyes: Design and Evaluation of the Prediction-Level Cooperative Driving with a Real-World Automated Driving System. In Proceedings of the 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 17–20 September 2022; pp. 274–284.

- Wang, C.; Weisswange, T.H.; Krüger, M. Designing for Prediction-Level Collaboration Between a Human Driver and an Automated Driving System. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 9–14 September 2021; pp. 213–216.

- Zimmermann, M.; Bengler, K. A multimodal interaction concept for cooperative driving. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013; pp. 1285–1290.

- Petermeijer, S.M.; Tinga, A.; Jansen, R.; de Reus, A.; van Waterschoot, B. What Makes a Good Team?—Towards the Assessment of Driver-Vehicle Cooperation. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 9–14 September 2021; pp. 99–108.

- Wang, C. A Framework of the Non-Critical Spontaneous Intervention in Highly Automated Driving Scenarios. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, New York, NY, USA, 21–25 September 2019; pp. 421–426.

- Mirnig, A.G.; Gärtner, M.; Laminger, A.; Meschtscherjakov, A.; Trösterer, S.; Tscheligi, M.; McCall, R.; McGee, F. Control Transition Interfaces in Semiautonomous Vehicles: A Categorization Framework and Literature Analysis. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 24–27 September 2017; pp. 209–220.

- Wolf, I. The Interaction Between Humans and Autonomous Agents. In Autonomous Driving: Technical, Legal and Social Aspects; Springer: Berlin/Heidelberg, Germany, 2016; pp. 103–124.

- Malve, B.; Peintner, J.; Sadeghian, S.; Riener, A. “Do You Want to Drive Together?”—A Use Case Analysis on Cooperative, Automated Driving. In Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ingolstadt, Germany, 18–22 September 2023; pp. 209–214.

- Peintner, J.; Manger, C.; Riener, A. Communication of Uncertainty Information in Cooperative, Automated Driving: A Comparative Study of Different Modalities. In Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ingolstadt, Germany, 18–22 September 2023; pp. 322–332.

- Walch, M.; Mühl, K.; Kraus, J.; Stoll, T.; Baumann, M.; Weber, M. From Car-Driver-Handovers to Cooperative Interfaces: Visions for Driver–Vehicle Interaction in Automated Driving. In Automotive User Interfaces: Creating Interactive Experiences in the Car; Meixner, G., Müller, C., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 273–294.

- Woide, M.; Stiegemeier, D.; Pfattheicher, S.; Baumann, M. Measuring driver-vehicle cooperation: Development and validation of the Human–Machine-Interaction-Interdependence Questionnaire (HMII). Transp. Res. Part F Traffic Psychol. Behav. 2021, 83, 424–439.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269.

- Haddaway, N.R.; Page, M.J.; Pritchard, C.C.; McGuinness, L.A. PRISMA2020: An R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and Open Synthesis. Campbell Syst. Rev. 2022, 18, e1230.

- Peintner, J.B.; Manger, C.; Riener, A. “Can You Rely on Me?” Evaluating a Confidence HMI for Cooperative, Automated Driving. In Proceedings of the 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 17–20 September 2022; pp. 340–348.

- Saito, T.; Wada, T.; Sonoda, K. Control Transferring between Automated and Manual Driving Using Shared Control. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications Adjunct, New York, NY, USA, 24–27 September 2017; pp. 115–119.

- Walch, M.; Woide, M.; Mühl, K.; Baumann, M.; Weber, M. Cooperative Overtaking: Overcoming Automated Vehicles’ Obstructed Sensor Range via Driver Help. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 21–25 September 2019; pp. 144–155.

- Kuramochi, H.; Utsumi, A.; Ikeda, T.; Kato, Y.O.; Nagasawa, I.; Takahashi, K. Effect of Human–Machine Cooperation on Driving Comfort in Highly Automated Steering Maneuvers. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, New York, NY, USA, 21–25 September 2019; pp. 151–155.

- Tatsumi, K.; Utsumi, A.; Ikeda, T.; Kato, Y.O.; Nagasawa, I.; Takahashi, K. Evaluation of Driver’s Sense of Control in Lane Change Maneuvers with a Cooperative Steering Control System. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 9–14 September 2021; pp. 107–111.

- Sarabia, J.; Diaz, S.; Marcano, M.; Zubizarreta, A.; Pérez Rastelli, J. Haptic Steering Wheel for Enhanced Driving: An Assessment in Terms of Safety and User Experience. In Proceedings of the 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 17–20 September 2022; pp. 219–221.

- Colley, M.; Askari, A.; Walch, M.; Woide, M.; Rukzio, E. ORIAS: On-The-Fly Object Identification and Action Selection for Highly Automated Vehicles. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 9–14 September 2021; pp. 79–89.

- Ros, F.; Terken, J.; van Valkenhoef, F.; Amiralis, Z.; Beckmann, S. Scribble Your Way Through Traffic. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 23–25 September 2018; pp. 230–234.

- Detjen, H.; Faltaous, S.; Geisler, S.; Schneegass, S. User-Defined Voice and Mid-Air Gesture Commands for Maneuver-Based Interventions in Automated Vehicles. In Proceedings of the Mensch Und Computer 2019, New York, NY, USA, 8–11 September 2019; pp. 341–348.

- Damböck, D.; Kienle, M.; Bengler, K.; Bubb, H. The H-Metaphor as an Example for Cooperative Vehicle Driving. In Human-Computer Interaction: Towards Mobile and Intelligent Interaction Environments, Proceedings of the 14th International Conference on Human–Computer Interaction, Orlando, FL, USA, 9–14 July 2011; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6763, pp. 376–385.

- Sefati, M.; Gert, D.; Kreiskoether, K.D.; Kampker, A. A Maneuver Based Interaction Framework for External Users of an Automated Assistance Vehicle. In SMARTGREENS 2017, VEHITS 2017: Smart Cities, Green Technologies, and Intelligent Transport Systems; Donnellan, B., Klein, C., Helfert, M., Gusikhin, O., Pascoal, A., Eds.; Springer: Cham, Switzerland, 2019; pp. 274–295.

- Ercan, Z.; Carvalho, A.; Tseng, H.E.; Gökaşan, M.; Borrelli, F. A predictive control framework for torque-based steering assistance to improve safety in highway driving. Veh. Syst. Dyn. 2018, 56, 810–831.

- Rothfuß, S.; Ayllon, C.; Flad, M.; Hohmann, S. Adaptive Negotiation Model for Human–Machine Interaction on Decision Level. IFAC-PapersOnLine 2020, 53, 10174–10181.

- Li, Q.; Wang, Z.; Wang, W.; Zeng, C.; Li, G.; Yuan, Q.; Cheng, B. An Adaptive Time Budget Adjustment Strategy Based on a Take-Over Performance Model for Passive Fatigue. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 1025–1035.

- Walch, M.; Colley, M.; Weber, M. CooperationCaptcha: On-The-Fly Object Labeling for Highly Automated Vehicles. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 4–9 May 2019; pp. 1–6.

- Muslim, H.; Kiu Leung, C.; Itoh, M. Design and evaluation of cooperative human–machine interface for changing lanes in conditional driving automation. Accid. Anal. Prev. 2022, 174, 106719.

- Kridalukmana, R.; Eridani, D.; Septiana, R.; Rochim, A.F.; Setyobudhi, C.T. Developing Autopilot Agent Transparency for Collaborative Driving. In Proceedings of the 2022 19th International Joint Conference on Computer Science and Software Engineering (JCSSE), Bangkok, Thailand, 22–25 June 2022; pp. 1–6.

- Wang, Z.; Zheng, R.; Kaizuka, T.; Nakano, K. Driver-Automation Shared Control: Modeling Driver Behavior by Taking Account of Reliance on Haptic Guidance Steering. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 144–149.

- Marcano, M.; Tango, F.; Sarabia, J.; Castellano, A.; Pérez, J.; Irigoyen, E.; Diaz, S. From the Concept of Being “the Boss” to the Idea of Being “a Team”: The Adaptive Co-Pilot as the Enabler for a New Cooperative Framework. Appl. Sci. 2021, 11, 6950.

- Zwaan, H.; Petermeijer, S.; Abbink, D. Haptic shared steering control with an adaptive level of authority based on time-to-line crossing. IFAC-PapersOnLine 2019, 52, 49–54.

- Sentouh, C.; Popieul, J.C.; Debernard, S.; Boverie, S. Human–Machine Interaction in Automated Vehicle: The ABV Project. IFAC Proc. Vol. 2014, 47, 6344–6349.

- Li, R.; Li, Y.; Li, S.E.; Zhang, C.; Burdet, E.; Cheng, B. Indirect Shared Control for Cooperative Driving Between Driver and Automation in Steer-by-Wire Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 7826–7836.

- Izadi, V.; Ghasemi, A.H. Quantifying the performance of an adaptive haptic shared control paradigm for steering a ground-vehicle. Transp. Eng. 2022, 10, 100141.

- van Zoelen, E.M.; Peeters, L.; Bos, S.J.; Ye, F. Shared Control and the Democratization of Driving in Autonomous Vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, New York, NY, USA, 21–25 September 2019; pp. 120–124.

- Da Lio, M.; Donà, R.; Papini, G.P.R.; Plebe, A. The Biasing of Action Selection Produces Emergent Human-Robot Interactions in Autonomous Driving. IEEE Robot. Autom. Lett. 2022, 7, 1254–1261.

- Muslim, H.; Itoh, M. Trust and Acceptance of Adaptive and Conventional Collision Avoidance Systems. IFAC-PapersOnLine 2019, 52, 55–60.

- Bhardwaj, A.; Ghasemi, A.H.; Zheng, Y.; Febbo, H.; Jayakumar, P.; Ersal, T.; Stein, J.L.; Gillespie, R.B. Who’s the boss? Arbitrating control authority between a human driver and automation system. Transp. Res. Part F Traffic Psychol. Behav. 2020, 68, 144–160.

- Rothfuß, S.; Schmidt, R.; Flad, M.; Hohmann, S. A Concept for Human–Machine Negotiation in Advanced Driving Assistance Systems. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; pp. 3116–3123.

- Wang, Z.; Zheng, R.; Kaizuka, T.; Nakano, K. A Driver-Automation Shared Control for Forward Collision Avoidance While Automation Failure. In Proceedings of the 2018 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Shenyang, China, 24–27 August 2018; pp. 93–98.

- Tao, W.; Chen, Y.; Yan, X.; Li, W.; Shi, D. Assessment of Drivers’ Comprehensive Driving Capability Under Man-Computer Cooperative Driving Conditions. IEEE Access 2020, 8, 152909–152923.

- Bhardwaj, A.; Lu, Y.; Pan, S.; Sarter, N.; Gillespie, R.B. Comparing Coupled and Decoupled Steering Interface Designs for Emergency Obstacle Evasion. IEEE Access 2021, 9, 116857–116868.

- Pichen, J.; Miller, L.; Baumann, M. Cooperative Speed Regulation in Automated Vehicles: A Comparison Between a Touch, Pedal, and Button Interface as the Input Modality. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium (IV), Nagoya, Japan, 11–17 July 2021; pp. 245–250.

- Nguyen, A.T.; Sentouh, C.; Popieul, J.C. Driver-Automation Cooperative Approach for Shared Steering Control Under Multiple System Constraints: Design and Experiments. IEEE Trans. Ind. Electron. 2017, 64, 3819–3830.

- Johns, M.; Mok, B.; Sirkin, D.M.; Gowda, N.M.; Smith, C.A.; Talamonti, W.J., Jr.; Ju, W. Exploring Shared Control in Automated Driving. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 91–98.

- Yan, Z.; Yang, K.; Wang, Z.; Yang, B.; Kaizuka, T.; Nakano, K. Intention-Based Lane Changing and Lane Keeping Haptic Guidance Steering System. IEEE Trans. Intell. Veh. 2021, 6, 622–633.

- Muslim, H.; Itoh, M. The Effects of System Functional Limitations on Driver Performance and Safety When Sharing the Steering Control during Lane-Change. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 135–140.

- Karakaya, B.; Kalb, L.; Bengler, K. Cooperative Approach to Overcome Automation Effects During the Transition Phase of Conditional Automated Vehicles. 2018. Available online: https://www.semanticscholar.org/paper/Cooperative-Approach-to-Overcome-Automation-Effects-Karakaya-Kalb/7684c52cd6c16e52380ee25b9b23409277f264ce (accessed on 18 December 2023).

- Guo, C. Designing Driver-Vehicle Cooperation Principles for Automated Driving Systems. Ph.D. Thesis, Université de Valenciennes et du Hainaut-Cambresis, Valenciennes, France, 2017. [Google Scholar]

- Walch, M.; Lehr, D.; Colley, M.; Weber, M. Do not You See Them? Towards Gaze-Based Interaction Adaptation for Driver-Vehicle Cooperation. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, New York, NY, USA, 21–25 September 2019; pp. 232–237. [Google Scholar] [CrossRef]

- Li, X.; Zhao, X.; Li, Z.; Rong, J. Effects of Cooperative Vehicle Infrastructure System on driver’s attention with different personal attribute. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 3990–3995.

- Li, X.; Li, Z.; Zhao, X.; Rong, J.; Zhang, Y. Effects of Cooperative Vehicle Infrastructure System on Driver’s Visual and Driving Performance Based on Cognition Process. Int. J. Automot. Technol. 2022, 23, 1213–1227.

- Liu, Y.; Zhang, J.; Li, Y.; Hansen, P.; Wang, J. Human-Computer Collaborative Interaction Design of Intelligent Vehicle—A Case Study of HMI of Adaptive Cruise Control. In HCII 2021: HCI in Mobility, Transport, and Automotive Systems; Krömker, H., Ed.; Springer International Publishing: Cham, Switzerland, 2021; pp. 296–314.

- Hoc, J.M.; Mars, F.; Milleville-Pennel, I.; Jolly, É.; Netto, M.; Blosseville, J.M. Human–machine cooperation in car driving for lateral safety: Delegation and mutual control. Trav. Hum. 2006, 69, 153–182.

- Navarro, J.; Mars, F.; Hoc, J.M. Lateral Control Support for Car Drivers: A Human–Machine Cooperation Approach. In Proceedings of the 14th European Conference on Cognitive Ergonomics: Invent! Explore! London, UK, 28–31 August 2007; pp. 249–252.

- Baltzer, M.; López, D.; Flemisch, F. Interaction Patterns for Cooperative Guidance and Control of Vehicles. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022; pp. 929–934.

- Cohn, M. User Stories Applied: For Agile Software Development; Addison-Wesley Professional: Boston, MA, USA, 2004.

- Yanco, H.A.; Drury, J.L. A taxonomy for human–robot interaction. In Proceedings of the AAAI Fall Symposium on Human-Robot Interaction, Arlington, VA, USA, 17–19 November 2002; pp. 111–119.

- Rotter, J.B. Generalized expectancies for internal versus external control of reinforcement. Psychol. Monogr. Gen. Appl. 1966, 80, 1–28.

- Caspar, E.A.; Cleeremans, A.; Haggard, P. The relationship between human agency and embodiment. Conscious. Cogn. 2015, 33, 226–236.

- Jeunet, C.; Albert, L.; Argelaguet, F.; Lécuyer, A. “Do you feel in control?”: Towards novel approaches to characterise, manipulate and measure the sense of agency in virtual environments. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1486–1495.

- Bandura, A. Social cognitive theory of personality. In Handbook of Personality: Theory and Research, 2nd ed.; Guilford Press: New York, NY, USA, 1999; pp. 154–196.

- Deci, E.L.; Ryan, R.M. The “What” and “Why” of Goal Pursuits: Human Needs and the Self-Determination of Behavior. Psychol. Inq. 2000, 11, 227–268.

- Peters, D.; Calvo, R.A.; Ryan, R.M. Designing for Motivation, Engagement and Wellbeing in Digital Experience. Front. Psychol. 2018, 9, 797.

- Wang, C.; Weisswange, T.H.; Krüger, M.; Wiebel-Herboth, C.B. Human-Vehicle Cooperation on Prediction-Level: Enhancing Automated Driving with Human Foresight. In Proceedings of the 2021 IEEE Intelligent Vehicles Symposium Workshops (IV Workshops), Nagoya, Japan, 11–17 July 2021; pp. 25–30.

- Abbink, D.; Mulder, M.; Boer, E. Haptic shared control: Smoothly shifting control authority? Cogn. Technol. Work 2012, 14, 19–28.

- Guo, C.; Sentouh, C.; Popieul, J.C.; Haué, J.B.; Langlois, S.; Loeillet, J.J.; Soualmi, B.; Nguyen That, T. Cooperation between driver and automated driving system: Implementation and evaluation. Transp. Res. Part F Traffic Psychol. Behav. 2019, 61, 314–325.

- Tas, O.S.; Kuhnt, F.; Zöllner, J.M.; Stiller, C. Functional system architectures towards fully automated driving. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 304–309.

- Stampf, A.; Colley, M.; Rukzio, E. Towards Implicit Interaction in Highly Automated Vehicles—A Systematic Literature Review. Proc. ACM Hum.-Comput. Interact. 2022, 6, 191.

- Johnson-Laird, P. The history of mental models. In Psychology of Reasoning; Psychology Press: London, UK, 2004.

- Wilson, J.R.; Rutherford, A. Mental Models: Theory and Application in Human Factors. Hum. Factors 1989, 31, 617–634.

- Johns, M.; Mok, B.; Talamonti, W.; Sibi, S.; Ju, W. Looking Ahead: Anticipatory Interfaces for Driver-Automation Collaboration. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–7.

- Meyer, R.; Graf von Spee, R.; Altendorf, E.; Flemisch, F.O. Gesture-Based Vehicle Control in Partially and Highly Automated Driving for Impaired and Non-impaired Vehicle Operators: A Pilot Study. In UAHCI 2018: Universal Access in Human-Computer Interaction. Methods, Technologies, and Users; Antona, M., Stephanidis, C., Eds.; Springer: Cham, Switzerland, 2018; pp. 216–227.

- Biondi, F.; Alvarez, I.; Jeong, K.A. Human-Vehicle Cooperation in Automated Driving: A Multidisciplinary Review and Appraisal. Int. J. Hum.-Comput. Interact. 2019, 35, 932–946.

- Cimolino, G.; Graham, T.N. Two Heads Are Better Than One: A Dimension Space for Unifying Human and Artificial Intelligence in Shared Control. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 29 April–5 May 2022.

- Pano, B.; Chevrel, P.; Claveau, F.; Sentouh, C.; Mars, F. Obstacle Avoidance in Highly Automated Cars: Can Progressive Haptic Shared Control Make it Safer and Smoother? IEEE Trans. Hum.-Mach. Syst. 2022, 52, 547–556.

- Benderius, O.; Berger, C.; Malmsten Lundgren, V. The Best Rated Human–Machine Interface Design for Autonomous Vehicles in the 2016 Grand Cooperative Driving Challenge. IEEE Trans. Intell. Transp. Syst. 2018, 19, 1302–1307.

| Category | Dimension | Sub-dimension | Characteristic | References |
|---|---|---|---|---|
| Cooperation Use Case | Agents | | Single Passenger and Single Automation | [10,20,21,22,26,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,94,95,96,97,98,99] |
| | | | Multiple Passengers and Single Automation | [57] |
| | | | Teleoperator and Automation | - |
| | | | Teleoperator and Passenger | - |
| | Motivation | | Internal | [35,57] |
| | | | External | [10,20,21,22,26,34,36,37,38,39,40,41,44,45,46,47,48,49,50,51,52,53,54,55,56,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78] |
| | | | Internal & External | [42,43] |
| | Scenario/Goal | Criticality | Low | [20,34,35,36,38,39,41,42,44,46,51,53,54,55,57,58,68,75,76] |
| | | | High | [10,21,22,26,37,40,43,45,47,48,49,50,52,56,59,60,61,62,63,64,65,66,67,69,70,71,72,73,74,77,78] |
| | | Urgency | Low | [36,38,41,51,55,57,58,65,75,76] |
| | | | High | [10,20,21,22,26,34,35,37,39,40,42,43,44,45,46,47,48,49,50,52,53,54,56,59,60,61,62,63,64,66,67,68,69,70,71,72,73,74,77,78] |
| Cooperation Frame | Cooperation Dynamics | Time Allocation/Window and Determinism | Continuous and Deterministic | [42,57,72,73,74] |
| | | | Discrete and Deterministic | [26,34,35,36,47,48,49,50,54,59,63,65,71,75,76] |
| | | | Continuous and Negotiated | [10,20,21,22,37,39,40,41,43,45,51,52,53,55,56,58,60,61,62,64,66,67,68,70,77,78] |
| | | | Discrete and Negotiated | [38,44,46,69] |
| | Agents Task Residuals | Task Level | Strategical | - |
| | | | Tactical | [20,21,22,26,34,36,40,41,42,44,46,47,48,50,54,57,58,63,65,71,72,73,74,75,76] |
| | | | Operational | [10,35,37,38,39,43,45,49,51,52,53,55,56,59,60,61,62,64,66,67,68,69,70,77,78] |
| | | Task Coverage | Perception | [40,57] |
| | | | Projection/Prediction | [21,22,26,47,50,54,63,71] |
| | | | Multiple Steps | [10,20,34,35,36,37,38,39,41,42,43,44,45,46,48,49,51,52,53,55,56,58,59,60,61,62,64,65,66,67,68,69,70,72,73,74,75,76,77,78] |
| Human–Machine Interface | Input | Modality | Haptic | [10,26,34,35,36,37,38,39,41,44,45,46,47,49,50,51,52,53,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,71,72,75,76,77,78] |
| | | | Auditory | [40] |
| | | | Gaze | [21] |
| | | | Multiple Modalities | [20,22,43,48,54,70] |
| | Output | Modality | Visual | [21,22,26,34,40,41,42,44,47,49,50,54,57,63,65,71,72,73,74] |
| | | | Tactile | [10,35,37,38,51,53,55,56,59,60,66,67,68,77] |
| | | | Multiple Modalities | [20,36,39,43,52,64,69,70,78] |
| | | | - | [46,48,58,75,76] |
| | Metaphors/Mental Models | | H-Mode | [43,70] |
| | | | Maneuver Based Interaction | [44] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Peintner, J.; Escher, B.; Detjen, H.; Manger, C.; Riener, A. How to Design Human-Vehicle Cooperation for Automated Driving: A Review of Use Cases, Concepts, and Interfaces. Multimodal Technol. Interact. 2024, 8, 16. https://doi.org/10.3390/mti8030016
