How Blind Individuals Recall Mathematical Expressions in Auditory, Tactile, and Auditory–Tactile Modalities

Abstract

1. Introduction

- (a) Auditory Modality through TtS systems in connection to screen readers;

- (b) Tactile Modality by
  - reading texts in braille either on embossed paper or on refreshable braille displays,
  - reading tactile images, or
  - manipulating 3D tactile artifacts;

- (c)

- i. Is there a cognitive-overload threshold, in terms of the type and number of elements in an ME, beyond which recall rapidly decreases?

- ii. Does any one modality give blind users a better chance of recalling an ME?

2. Materials and Methods

2.1. Participants

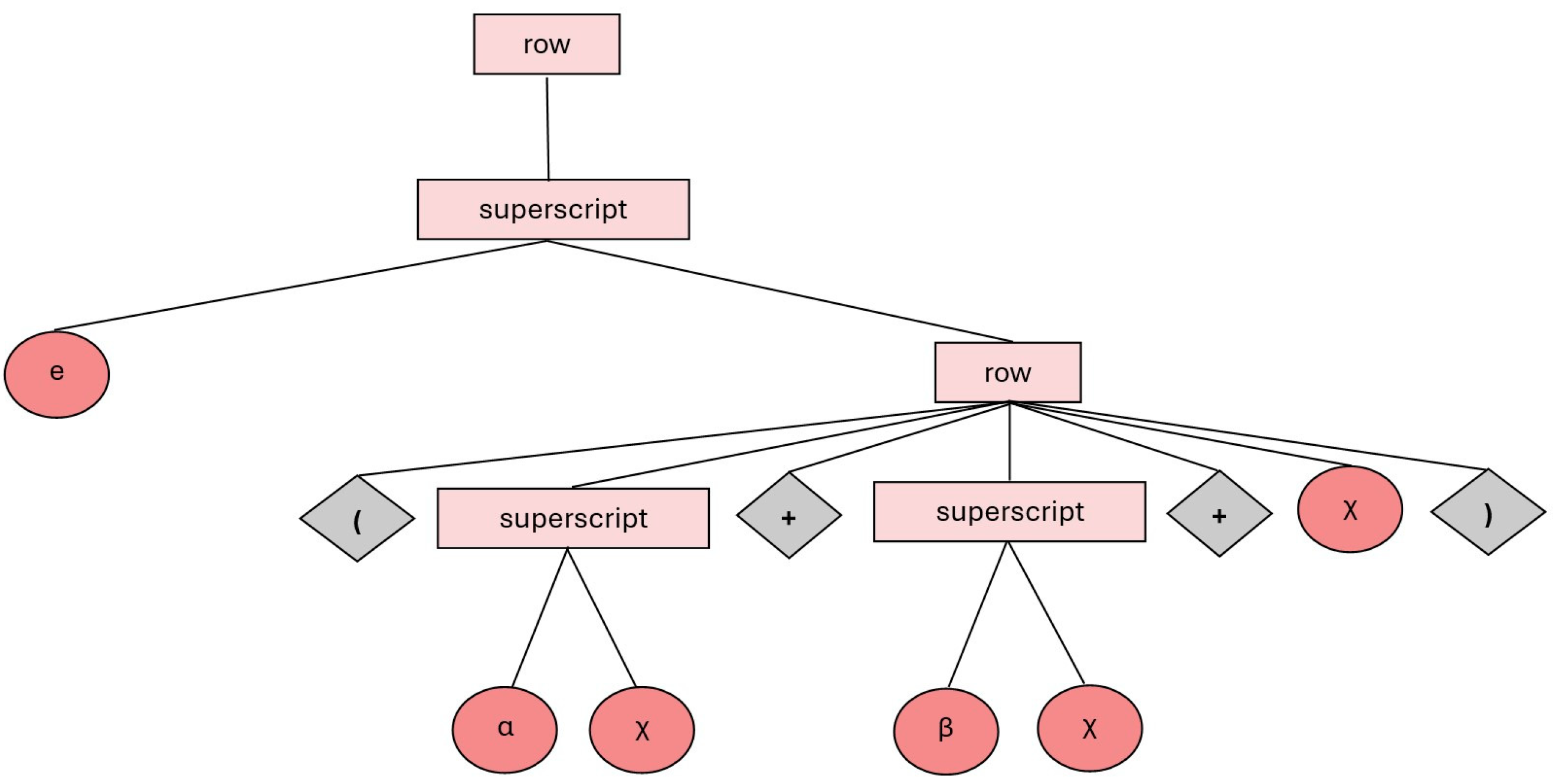

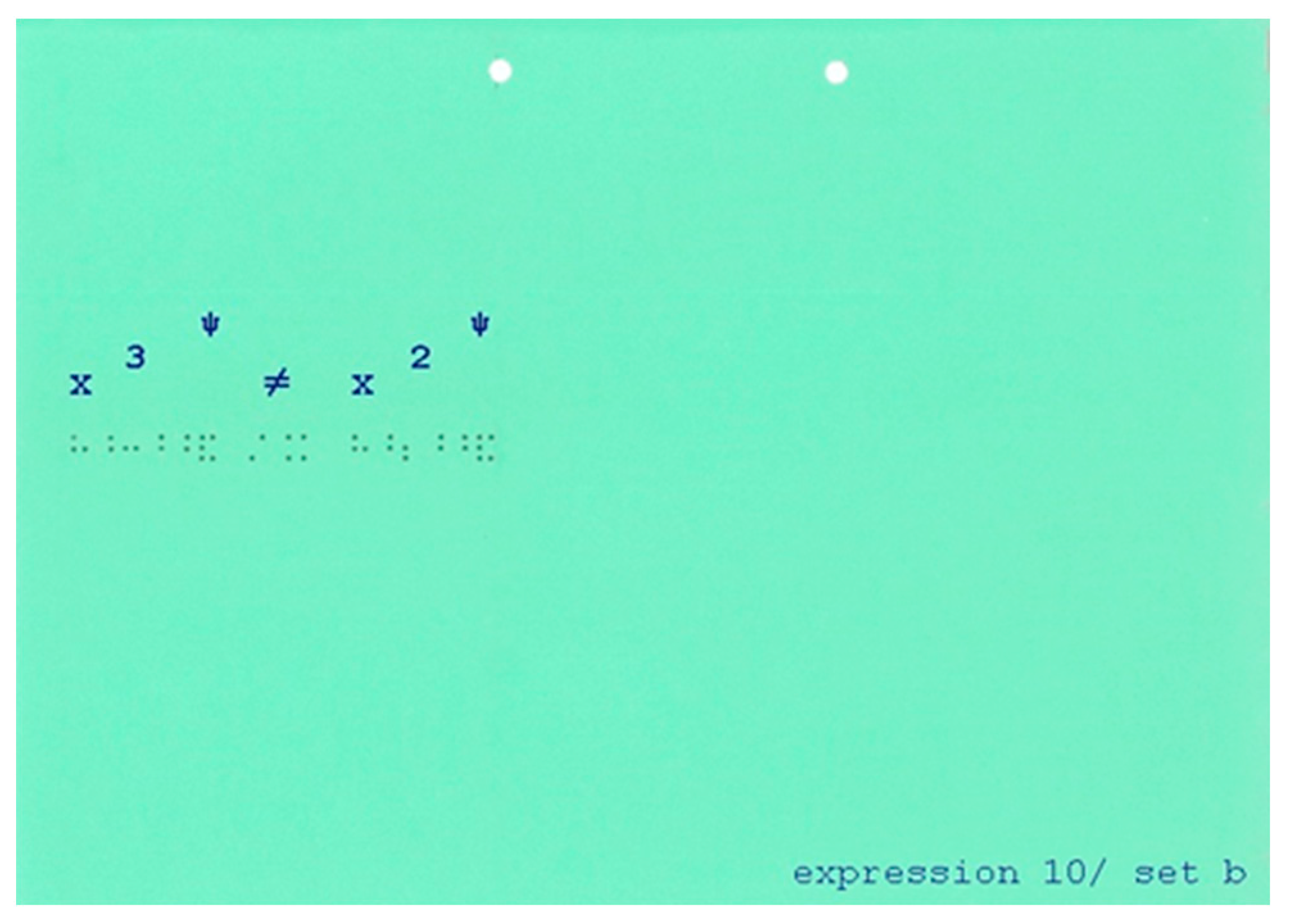

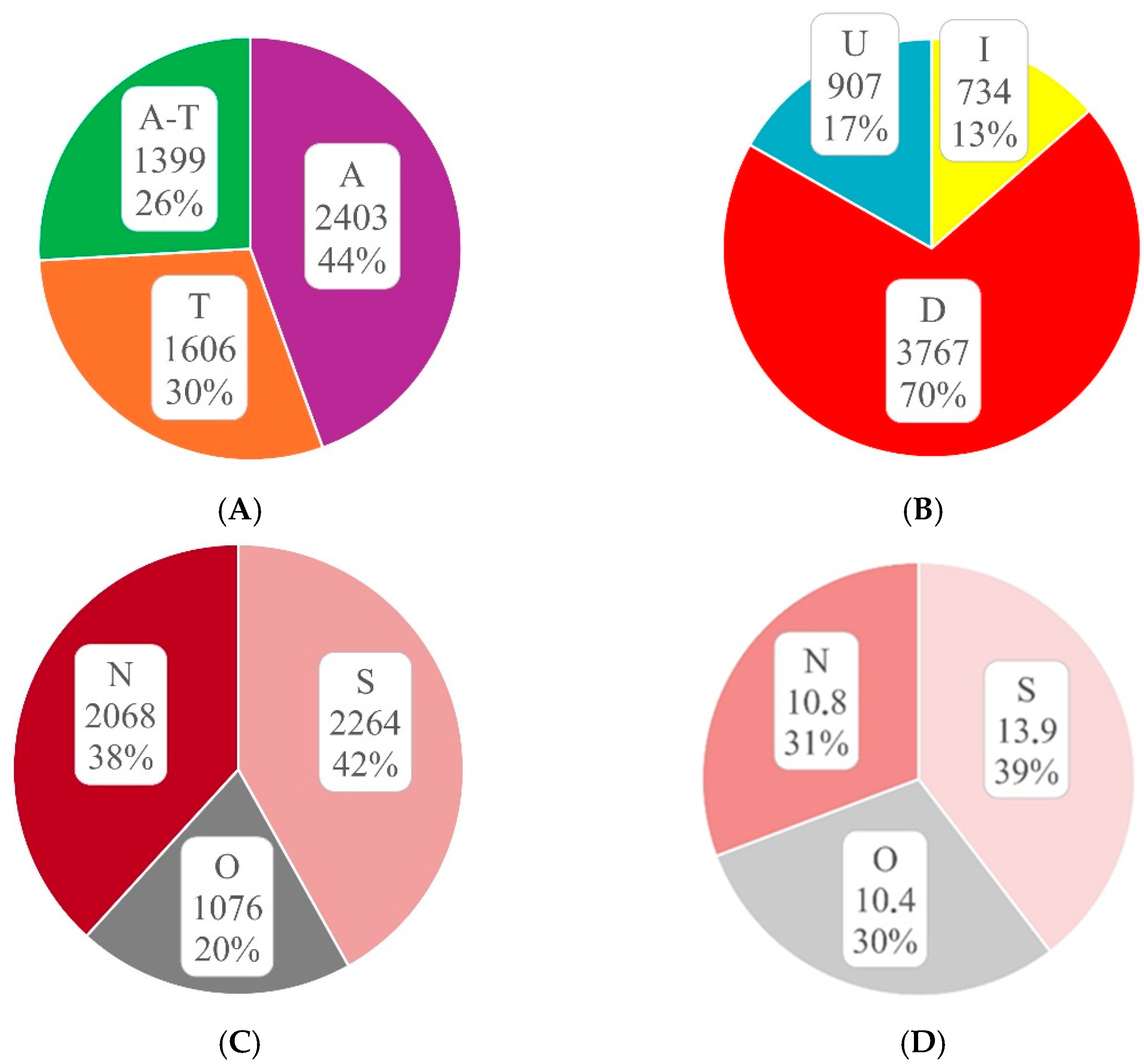

2.2. Materials

2.3. Experimental Procedure

2.4. Data Analysis

C = S + N + O
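
The complexity score above is a plain sum of three terms. The surviving text does not define S, N, and O, so the sketch below assumes, purely for illustration, that each is a non-negative count of one category of elements in an ME. The class name `ElementCounts` and the example values are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ElementCounts:
    """Hypothetical container for the three terms of C = S + N + O."""

    s: int  # term S (assumed to be a non-negative count)
    n: int  # term N (assumed to be a non-negative count)
    o: int  # term O (assumed to be a non-negative count)

    def complexity(self) -> int:
        """Return C = S + N + O for these counts."""
        if min(self.s, self.n, self.o) < 0:
            raise ValueError("counts must be non-negative")
        return self.s + self.n + self.o


# Made-up counts, chosen only to show the arithmetic: 3 + 2 + 2 = 7.
example = ElementCounts(s=3, n=2, o=2)
print(example.complexity())
```

Under this reading, adding one element of any category raises C by exactly one, so the score treats all three categories as equally costly.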

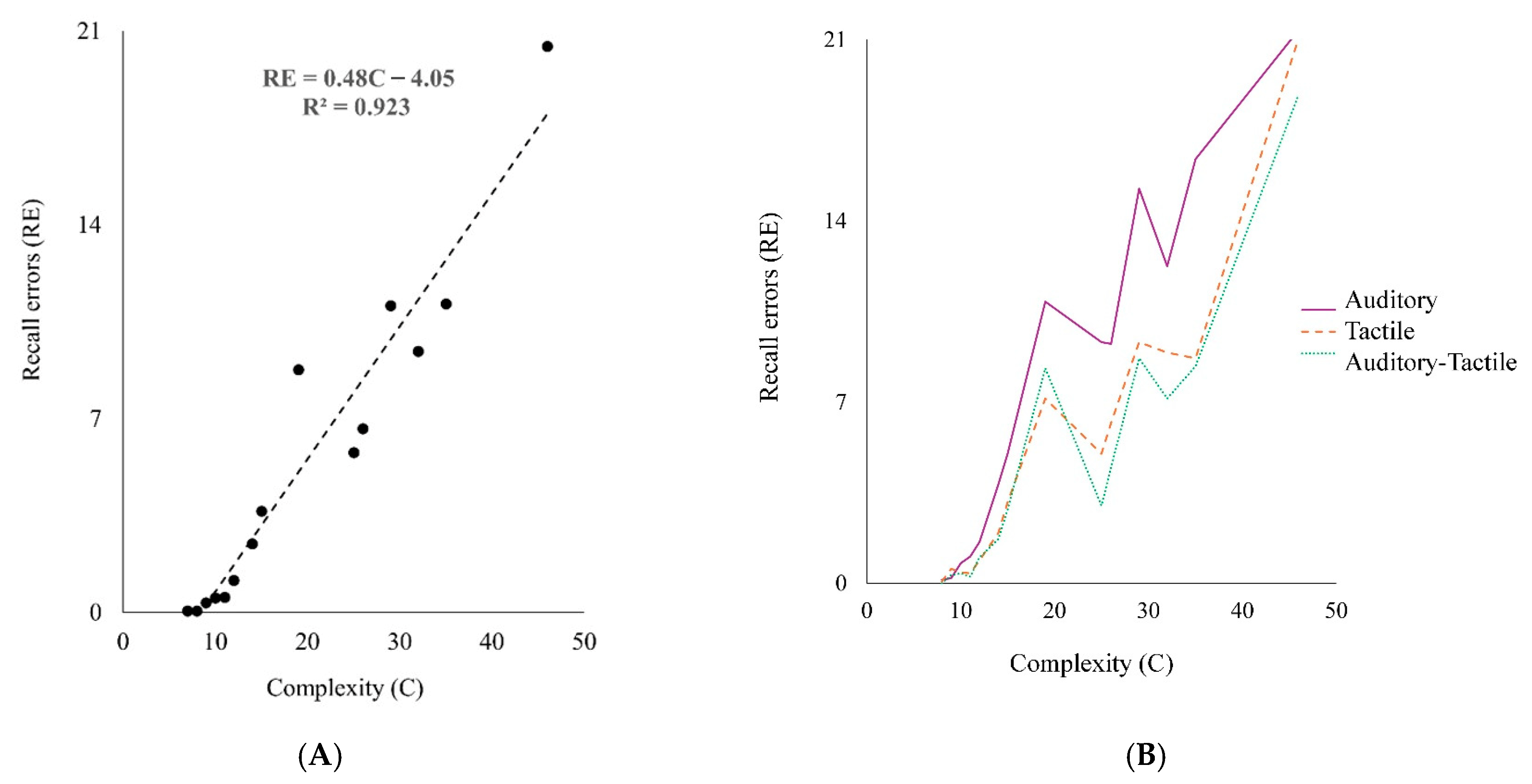

3. Results and Discussion

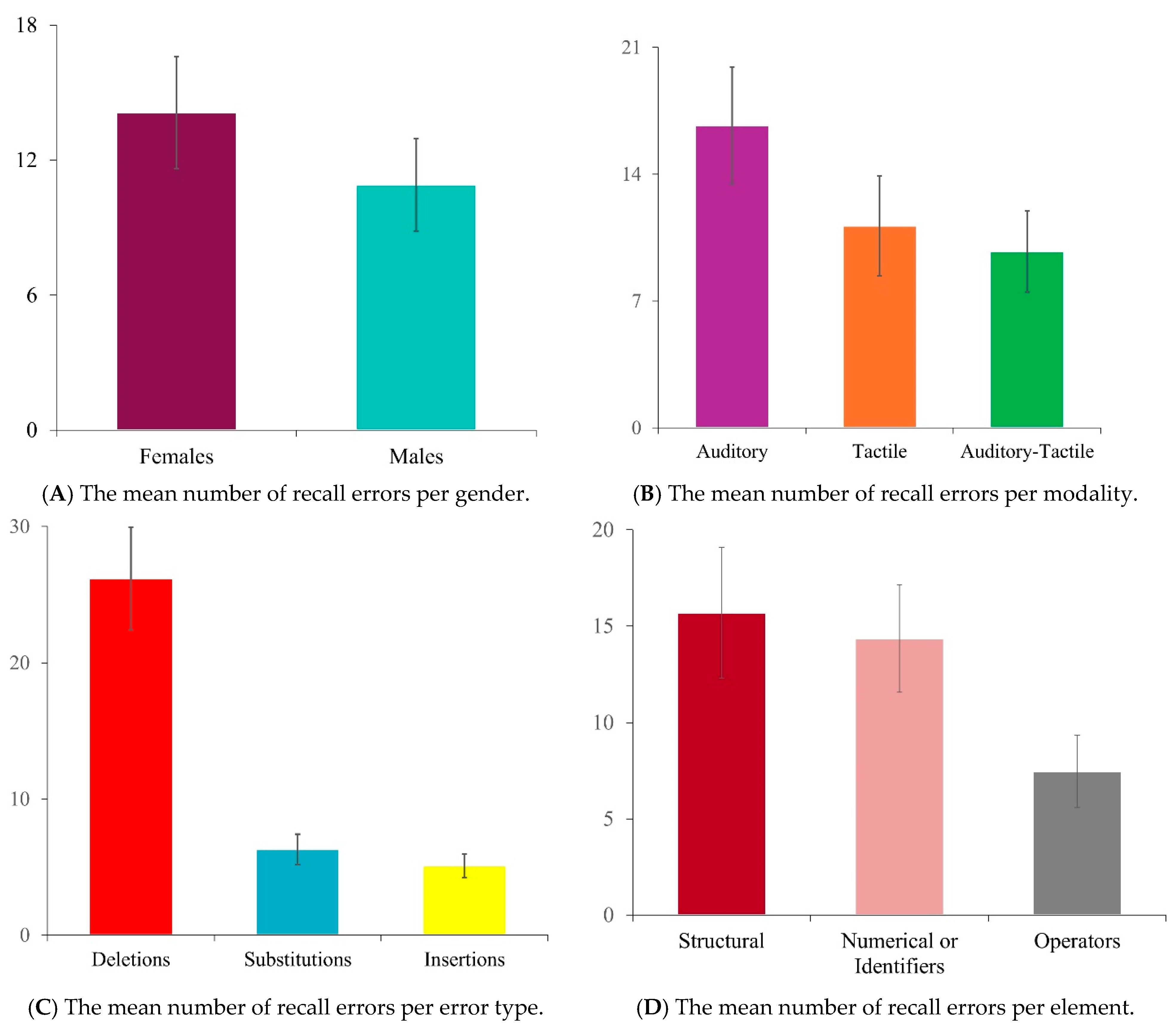

3.1. Descriptive Statistics

3.2. Inferential Statistics

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. The Three Sets of Stimuli Used in the Experiment

| | Set A | Set B | Set C |
|---|---|---|---|
| 1 | | | |
| 2 | | | |
| 3 | | | |
| 4 | | | |
| 5 | | | |
| 6 | | | |
| 7 | | | |
| 8 | | | |
| 9 | | | |
| 10 | | | |
| 11 | | | |
| 12 | | | |
| 13 | | | |
| 14 | | | |
| 15 | | | |
| 16 | | | |
| 17 | | | |
| 18 | | | |
| 19 | | | |
| 20 | | | |
| 21 | | | |
| 22 | | | |
| 23 | | | |
| 24 | | | |
| 25 | | | |

| Modality | Mean | Min | Max | Std |
|---|---|---|---|---|
| Auditory | 7.88 s | 2 s | 20 s | 5.27 s |
| Tactile | 46.18 s | 2 s | 370 s | 52.16 s |
| Auditory–Tactile | 54.06 s | 4 s | 383 s | 54.41 s |
| Auditory–Tactile: auditory part | 7.88 s | 2 s | 20 s | 5.27 s |
| Auditory–Tactile: tactile part | 37.00 s | 3 s | 299 s | 38.00 s |

| Modality | Constant a | Coefficient b | Lower 95% CI (b) | Upper 95% CI (b) |
|---|---|---|---|---|
| Auditory | −4.4 | 0.570 | 0.512 | 0.628 |
| Tactile | −4.2 | 0.449 | 0.395 | 0.503 |
| Auditory–Tactile | −3.6 | 0.389 | 0.334 | 0.444 |
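
One quick way to read the coefficient table above is to check which 95% confidence intervals for b overlap. The sketch below does that with the values copied from the table. The helper names are hypothetical, and interval overlap is only a rough heuristic, not a replacement for the formal comparisons reported in the paper.

```python
from itertools import combinations

# (coefficient b, lower 95% CI, upper 95% CI), copied from the table.
SLOPES = {
    "Auditory": (0.570, 0.512, 0.628),
    "Tactile": (0.449, 0.395, 0.503),
    "Auditory–Tactile": (0.389, 0.334, 0.444),
}


def intervals_overlap(first, second):
    """Return True if two (b, lower, upper) intervals share any value."""
    _, lo_1, hi_1 = first
    _, lo_2, hi_2 = second
    return lo_1 <= hi_2 and lo_2 <= hi_1


def overlap_report(slopes):
    """Map each pair of modalities to whether their intervals overlap."""
    return {
        (m1, m2): intervals_overlap(slopes[m1], slopes[m2])
        for m1, m2 in combinations(slopes, 2)
    }


for pair, overlaps in overlap_report(SLOPES).items():
    print(pair, "overlap" if overlaps else "no overlap")
```

With these numbers, the Auditory interval is disjoint from the other two, while the Tactile and Auditory–Tactile intervals overlap.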

| Complexity | p-Value: Auditory vs. Tactile | p-Value: Auditory vs. Auditory–Tactile | p-Value: Tactile vs. Auditory–Tactile |
|---|---|---|---|
| 7 | 0.333 | 0.333 | 0.333 |
| 8 | 0.669 | 0.333 | 0.333 |
| 9 | 0.164 | 0.333 | 0.216 |
| 10 | 0.345 | 0.277 | 0.839 |
| 11 | 0.048 | 0.007 | 0.354 |
| 12 | 0.055 | 0.088 | 0.682 |
| 14 | 0.000 | 0.000 | 0.439 |
| 15 | 0.010 | 0.006 | 0.679 |
| 19 | 0.109 | 0.321 | 0.589 |
| 25 | 0.066 | 0.007 | 0.044 |
| 26 | 0.021 | 0.001 | 0.095 |
| 29 | 0.030 | 0.029 | 0.759 |
| 32 | 0.099 | 0.008 | 0.260 |
| 35 | 0.012 | 0.007 | 0.871 |
| 46 | 0.926 | 0.499 | 0.201 |
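
The pairwise p-values above can be scanned programmatically to see at which complexity levels the modalities differ. The sketch below flags entries below a significance level of 0.05. That level is an assumption made here, since the surviving text states neither the threshold nor whether a multiple-comparison correction was applied. The function and constant names are hypothetical.

```python
ALPHA = 0.05  # assumed significance level; not stated in the surviving text

PAIRS = (
    "Auditory vs. Tactile",
    "Auditory vs. Auditory–Tactile",
    "Tactile vs. Auditory–Tactile",
)

# complexity -> p-values in the order of PAIRS, copied from the table.
P_VALUES = {
    7: (0.333, 0.333, 0.333),
    8: (0.669, 0.333, 0.333),
    9: (0.164, 0.333, 0.216),
    10: (0.345, 0.277, 0.839),
    11: (0.048, 0.007, 0.354),
    12: (0.055, 0.088, 0.682),
    14: (0.000, 0.000, 0.439),
    15: (0.010, 0.006, 0.679),
    19: (0.109, 0.321, 0.589),
    25: (0.066, 0.007, 0.044),
    26: (0.021, 0.001, 0.095),
    29: (0.030, 0.029, 0.759),
    32: (0.099, 0.008, 0.260),
    35: (0.012, 0.007, 0.871),
    46: (0.926, 0.499, 0.201),
}


def significant_pairs(p_values, alpha=ALPHA):
    """Map each complexity to the comparisons whose p-value is below alpha."""
    return {
        complexity: [pair for pair, p in zip(PAIRS, ps) if p < alpha]
        for complexity, ps in p_values.items()
    }


flags = significant_pairs(P_VALUES)
first = min(c for c, pairs in flags.items() if pairs)
print("lowest complexity with a flagged comparison:", first)
```

Under the assumed 0.05 level, no comparison is flagged below complexity 11, and the Tactile vs. Auditory–Tactile comparison is flagged only at complexity 25.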

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Riga, P.; Kouroupetroglou, G. How Blind Individuals Recall Mathematical Expressions in Auditory, Tactile, and Auditory–Tactile Modalities. Multimodal Technol. Interact. 2024, 8, 57. https://doi.org/10.3390/mti8070057
