Human Attitudes in Robotic Path Programming: A Pilot Study of User Experience in Manual and XR-Controlled Robotic Arm Manipulation

Abstract

1. Introduction

2. Key UX Factors for XR in Human–Robot Collaboration

3. Research Question

4. Materials and Methods

4.1. Participants

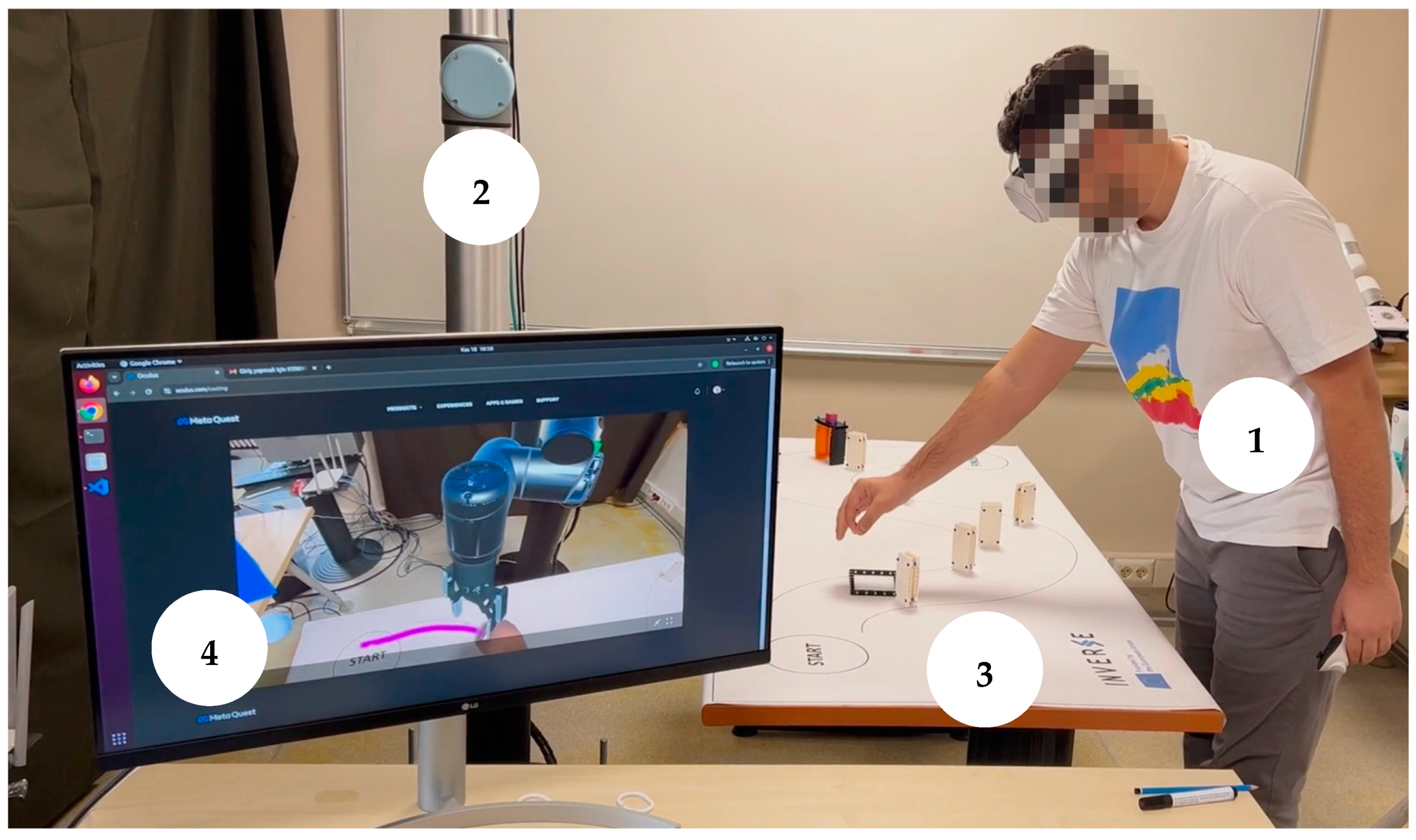

4.2. Experimental Setup

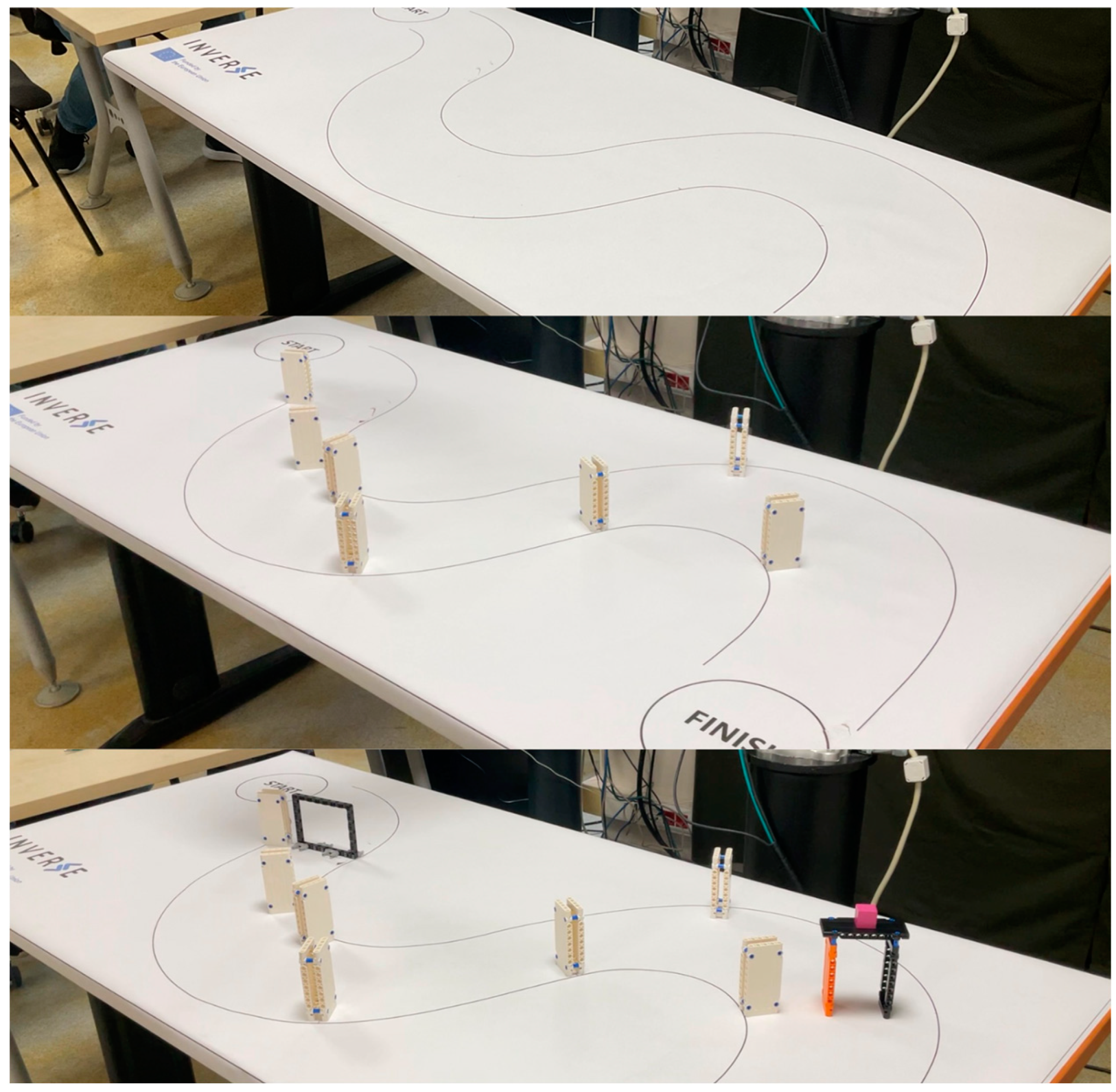

- Level 1 (easy): The robot was required to follow a flat circuit without leaving the lines, limited to motion on the x and y axes, without height differences. This level primarily assessed motion control and served as a familiarization phase for participants.

- Level 2 (intermediate): This level introduced a higher degree of dexterity by incorporating tactile-sensitive obstacles. Both the participants (during manipulation) and the robot (during execution) had to avoid these obstacles. If touched, these lightweight obstacles would either move or fall, providing clear visual feedback.

- Level 3 (advanced): In addition to the challenges of Level 2, this level incorporated motion along the z-axis, requiring greater precision. A bridge was added that the robot had to avoid by moving over it, along with a second bridge featuring a wooden cube. The robot had to push the cube off the platform, necessitating precise manipulation and configuration by the participant. This level integrated the general requirements for robotic manipulation, such as dexterity across all three axes (x, y, z), and precision.

- Manual robot manipulation (RE): Participants manually guided the robot to record the trajectory using their hands. After recording, participants observed the robot’s execution of the programmed task while standing in front of the table.

- XR manipulation in real-time speed (RS): Participants used Meta Quest 3 XR glasses [43] and the RAMPA (v.1.0) application [44]. The RAMPA application is a software tool designed to assist users in programming robot paths by allowing them to draw imaginary lines on a circuit table. As users draw, the application displays a virtual robot following the traced path in real time, providing immediate visual feedback to facilitate precise path programming. The physical robot then replicates the movements at the same speed as the participant’s drawing. If the participant draws quickly, the robot moves quickly, and if they draw slowly, the robot replicates the trajectory at a slower pace.

- XR manipulation in safety speed (SS): As in the RS mode, participants used the XR glasses and the application to draw the robot’s trajectory. In this mode, however, the physical robot executed the movements five times slower than the participant’s drawing speed.
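The difference between the RS and SS modes can be illustrated as a time scaling of the drawn path. The following is a minimal sketch, not the RAMPA implementation: the `Waypoint` type, the `scale_playback` function, and the sample path are hypothetical and serve only to show that the geometry is unchanged while the timestamps are stretched by a factor of five in SS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Waypoint:
    """One sample of a drawn path (hypothetical representation)."""
    t: float  # seconds since the start of the drawing
    x: float  # metres
    y: float
    z: float


def scale_playback(path: list[Waypoint], slowdown: float) -> list[Waypoint]:
    """Return the same geometric path with timestamps stretched by `slowdown`."""
    if slowdown <= 0:
        raise ValueError("slowdown must be positive")
    return [Waypoint(p.t * slowdown, p.x, p.y, p.z) for p in path]


# Hypothetical drawn path: 0.2 m along x, drawn in 2 s.
drawn = [
    Waypoint(0.0, 0.0, 0.0, 0.0),
    Waypoint(1.0, 0.1, 0.0, 0.0),
    Waypoint(2.0, 0.2, 0.0, 0.0),
]
rs_path = scale_playback(drawn, slowdown=1.0)  # RS: same timing as the drawing
ss_path = scale_playback(drawn, slowdown=5.0)  # SS: five times slower
```

Under these assumptions, a path drawn in 2 s is executed in 2 s in RS and in 10 s in SS, along identical coordinates.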

4.3. Variables, Measures and Evaluations

- Usability: For usability, a subset of 6 questions (Q1–Q6) from the system usability scale (SUS) [31] was used. The Cronbach’s alpha score of 0.81 demonstrates good internal consistency, indicating that these items effectively capture perceived ease of use and usefulness of the system. Although only part of the original SUS was used, the results confirm the reliability of this measure.

- Workload: The workload construct was evaluated using all 6 questions (Q7–Q12) from the NASA-TLX [46]. The resulting Cronbach’s alpha of 0.74 indicates acceptable internal consistency. This score suggests that the selected items reliably measure the cognitive and physical demands placed on users during task execution.

- Flow: The flow construct was measured using 4 questions (Q13–Q16) from the flow state scale (FSS) [47]. The Cronbach’s alpha for this construct was 0.32, reflecting poor internal consistency. This result may stem from the limited number of items used or variability in participant responses. Future studies should consider revising or expanding the flow-related items to better capture the immersion and engagement experience.

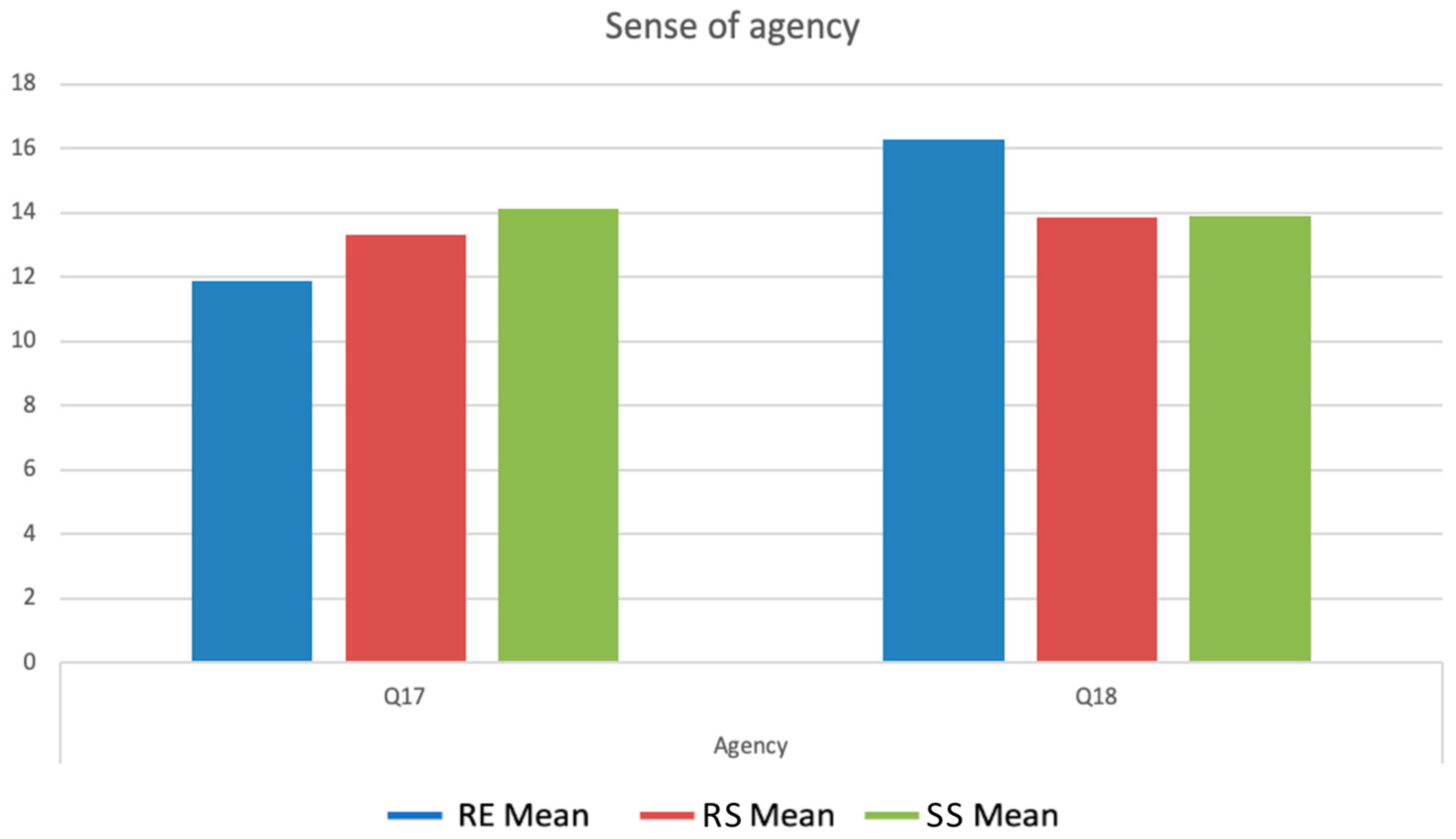

- Agency: For agency, 2 questions (Q17–Q18) from the sense of agency scale (SoAS) [42] were used. The Cronbach’s alpha score of 0.73 indicates acceptable internal consistency, suggesting that the selected items adequately measure the user’s perceived control and influence within the system.

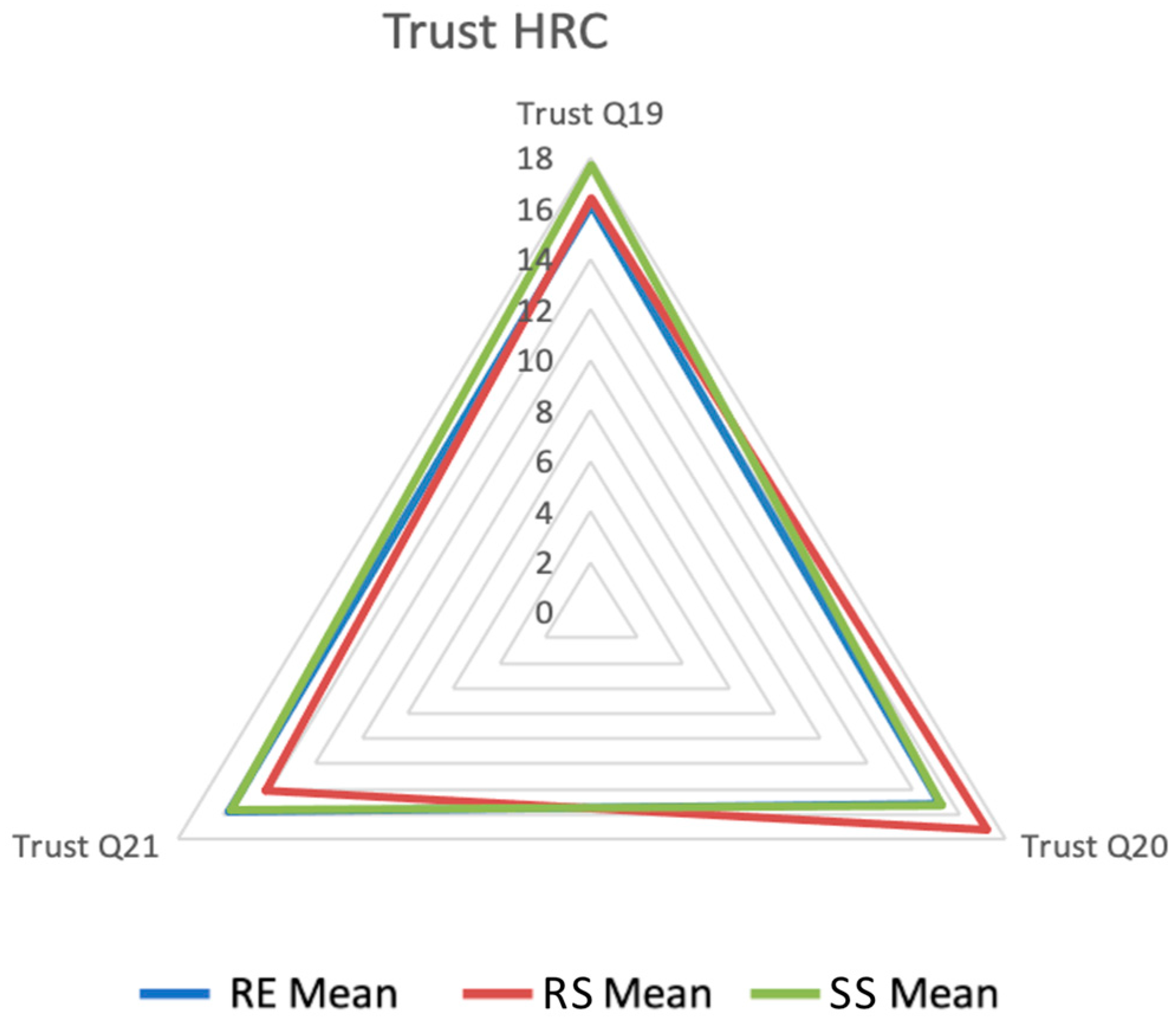

- Trust: The trust construct was assessed using 3 questions (Q19–Q21) from the Trust in Industrial Human–Robot Collaboration Questionnaire [48]. Cronbach’s alpha for this construct was 0.58, indicating relatively low internal consistency. This suggests a need for refining the measurement items or including additional questions to assess user trust in the system comprehensively.

- Technical measurements: In addition to the questionnaire, several technical measurements were recorded during the user testing. These measurements included the number of errors made, the time taken for task execution, and the number of attempts required for each task. These measurements were gathered via observations and video recordings.
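The internal consistency values reported above are Cronbach's alpha coefficients. As a reminder of how this statistic is obtained, the sketch below applies the standard formula, alpha = k/(k-1) * (1 - sum of item variances / variance of the summed score), to a small set of made-up responses. The function name and the data are illustrative assumptions and are not taken from the study.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][p] is participant p's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Summed score of each participant across all items.
    totals = [sum(scores) for scores in zip(*items)]
    item_var_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical two-item construct answered by five participants.
example_items = [
    [1, 2, 3, 4, 5],
    [2, 1, 4, 3, 5],
]
alpha = cronbach_alpha(example_items)
```

With only two or three items per construct, as for agency and trust here, alpha is sensitive to each individual item, which is consistent with the lower values reported for the shorter scales.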

5. Results

5.1. RQ: Do XR Technologies Enhance Human–Robot Interaction in Robotic Path Programming by Improving Technical Performance and Operator’s Experience?

- Q1. Desire to use the system frequently: A significant effect of mode on participants’ desire to use the system frequently was observed (F(2,N) = 7.21, p = 0.0016). Both XR control modes elicited a higher desire to use the system compared to manual control.

- Q2. Perceived ease of use: The perceived ease of use differed significantly among modes (F(2,N) = 15.74, p < 0.001). Participants rated the XR control modes as easier to use than the manual control mode.

- Q5. Learning curve: Beliefs about the ease with which most people could learn to use the system varied by mode (F(2,N) = 3.88, p = 0.026). The XR control modes were perceived as having a shorter learning curve.

- Q6. Laboriousness of use: Significant differences were found in the perceived laboriousness of the system (F(2,N) = 23.13, p < 0.001), with the manual control mode rated as more laborious.

- Q8. Physical activity required: The physical effort required differed significantly among modes (F(2,N) = 135.11, p < 0.001). The manual control mode required more physical activity than the XR control modes.

- Q11. Effort to achieve performance: A significant difference was observed in the effort participants felt they had to exert to achieve their performance level (F(2,N) = 10.73, p = 0.0001), with less effort reported under XR control modes.
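The F statistics listed above compare the three control modes on each item. The sketch below shows how an F ratio is computed for a plain between-groups one-way ANOVA. This is an assumption made for illustration: the text does not state the denominator degrees of freedom or whether a repeated-measures model was used, and a within-subject design would partition the variance differently. The ratings are invented.

```python
def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a between-groups one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical ratings of one item under the three modes (RE, RS, SS).
ratings = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_between, df_within = one_way_anova_f(ratings)
```

With three modes the numerator degrees of freedom are always 2, which matches the F(2,N) notation used in the list above.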

5.2. Qualitative Metrics

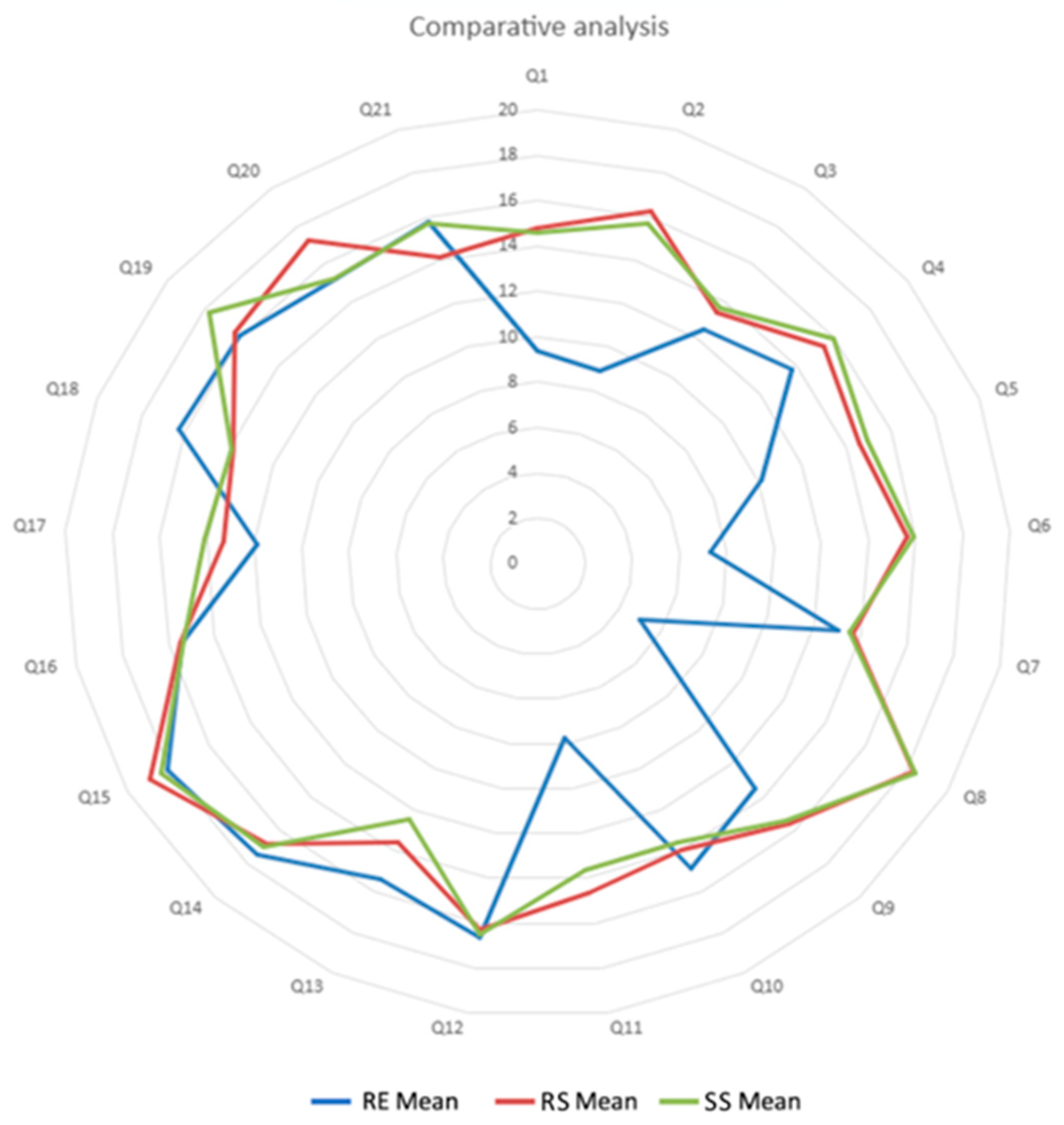

- Usability: The results for usability, measured through six questions (Q1–Q6), reveal a clear advantage for the XR-based control modes (RS and SS) compared to manual control (RE). Participants rated the XR modes significantly higher in perceived ease of use (Q2) and the learning curve (Q5), indicating that XR control was both intuitive and easier to learn. Additionally, the manual mode was perceived as more laborious, as evidenced by the lower scores in Q6, which assessed the effort required to use the system. The radar chart (Figure 4) visually supports these findings, showing consistently higher scores for XR-based modes across all usability items. These results highlight the XR modes’ ability to streamline interactions and reduce the friction typically associated with manual control, making them the preferred choice in terms of user-friendliness and efficiency.

Figure 4. Usability results extracted from the questionnaire.

- Workload: Workload was evaluated using six items (Q7–Q12) from the NASA Task Load Index (NASA-TLX), and the results indicate significant differences between the control modes. The manual mode (RE) consistently imposed a higher cognitive and physical workload on participants. For example, participants reported increased mental demands in Q7, as the manual control required greater focus on decision-making, coordination, and precision. Similarly, Q8 highlighted higher physical effort for manual tasks, where controlling the system required strenuous movements. Q11 further reinforced this trend, with users feeling they had to work harder (both mentally and physically) to achieve satisfactory performance.

- Flow State: The results for flow state, assessed through items such as Q13 (challenge in relation to skills) and Q15 (focused attention), reveal a relatively balanced perception across the three modes of control: manual (RE), XR at normal speed (RS), and XR at safety speed (SS). Notably, in Q13, the manual control mode (RE) scored slightly higher, suggesting that participants felt the challenge provided by direct interaction with the robot was better aligned with their perceived skills. However, despite potential limitations of the XR modes (such as the intrusiveness of wearing XR glasses, the weight of the equipment, and the experience of perceiving reality through a screen), participants rated their ability to stay focused and immersed in the tasks similarly to manual control. This lack of a significant difference can be viewed as a positive indicator: the XR-based interactions, even with their physical and perceptual demands, did not substantially hinder the participants’ ability to achieve flow states. These results (see Figure 6) suggest that XR systems can deliver experiences comparable to real-world interactions, demonstrating their potential to support user engagement and focus in human–robot interactions.

- Agency: The sense of agency, assessed through questions such as Q17 (sense of control) and Q18 (logical consequences of actions), did not show significant differences across the control modes. Although the XR modes offered intuitive interfaces and a slightly higher perception of control, these differences were not substantial enough to conclude a clear advantage over the manual mode. Overall, participants did not perceive substantial changes in their ability to effectively influence the system, suggesting that the type of control had minimal impact on this dimension. See Figure 7.

- Trust: Trust, assessed through items such as Q19 (comfort with robot movements) and Q21 (system reliability), did not differ substantially among the three modes of control (RE, RS, and SS), as can be seen in Figure 8. While participants expressed slightly higher levels of trust in the XR modes, the differences were not pronounced enough to suggest a clear preference for one mode over another. This lack of significant variation could be attributed to several factors. First, participants may have had similar expectations for system reliability across all modes, as the robot’s fundamental capabilities and behaviors did not change significantly between modes. Second, participants were in a controlled environment, which could have helped to avoid feelings of distrust. Additionally, the XR environment, despite introducing perceptual differences (e.g., wearing a headset and viewing the physical world through a screen), likely maintained sufficient predictability and consistency to prevent substantial trust disparities.

- Correlation among variables: This section analyzes the correlation matrices obtained from the questionnaire data under the three experimental conditions (RE, RS, and SS), with a particular focus on identifying meaningful patterns of relationships among the different questions. The correlation matrices for all three conditions can be found in the Supplementary Materials. In line with established guidelines for interpreting correlation magnitudes [49], we considered coefficients exceeding 0.70 to represent strong relationships.
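The screening of item pairs against the 0.70 threshold can be sketched as follows. Two points are assumptions made for illustration: the coefficient is computed as Pearson's r (the text does not name the coefficient used), and the threshold is applied to the absolute value so that strong negative relationships are also flagged. The function names and responses are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson_r(a: list[float], b: list[float]) -> float:
    """Pearson correlation coefficient of two equally long score lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    spread_a = sqrt(sum((x - mean_a) ** 2 for x in a))
    spread_b = sqrt(sum((y - mean_b) ** 2 for y in b))
    return cov / (spread_a * spread_b)


def strong_pairs(
    responses: dict[str, list[float]], threshold: float = 0.70
) -> list[tuple[str, str, float]]:
    """List item pairs whose |r| exceeds the threshold (assumed: absolute value)."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(responses.items(), 2):
        r = pearson_r(a, b)
        if abs(r) > threshold:
            flagged.append((name_a, name_b, r))
    return flagged


# Hypothetical responses of five participants to three items in one condition.
condition_responses = {
    "Q2": [1, 2, 3, 4, 5],
    "Q6": [5, 4, 3, 2, 1],
    "Q13": [3, 1, 4, 1, 5],
}
flagged = strong_pairs(condition_responses)
```

In this invented example only the Q2 and Q6 pair is flagged, because the two items move in exactly opposite directions; Q13 correlates only weakly with either.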

5.3. Performance Metrics

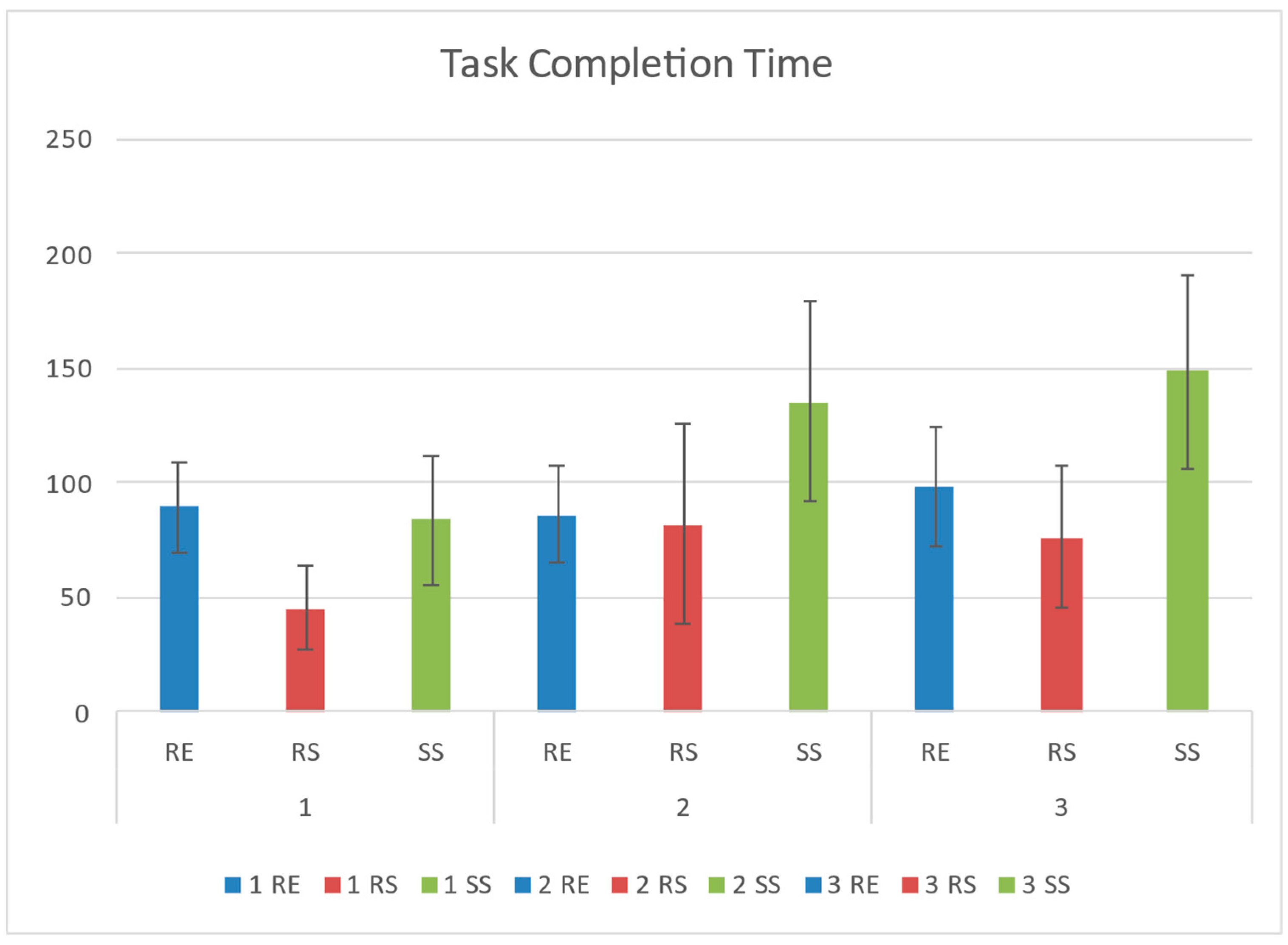

- Task Completion Time

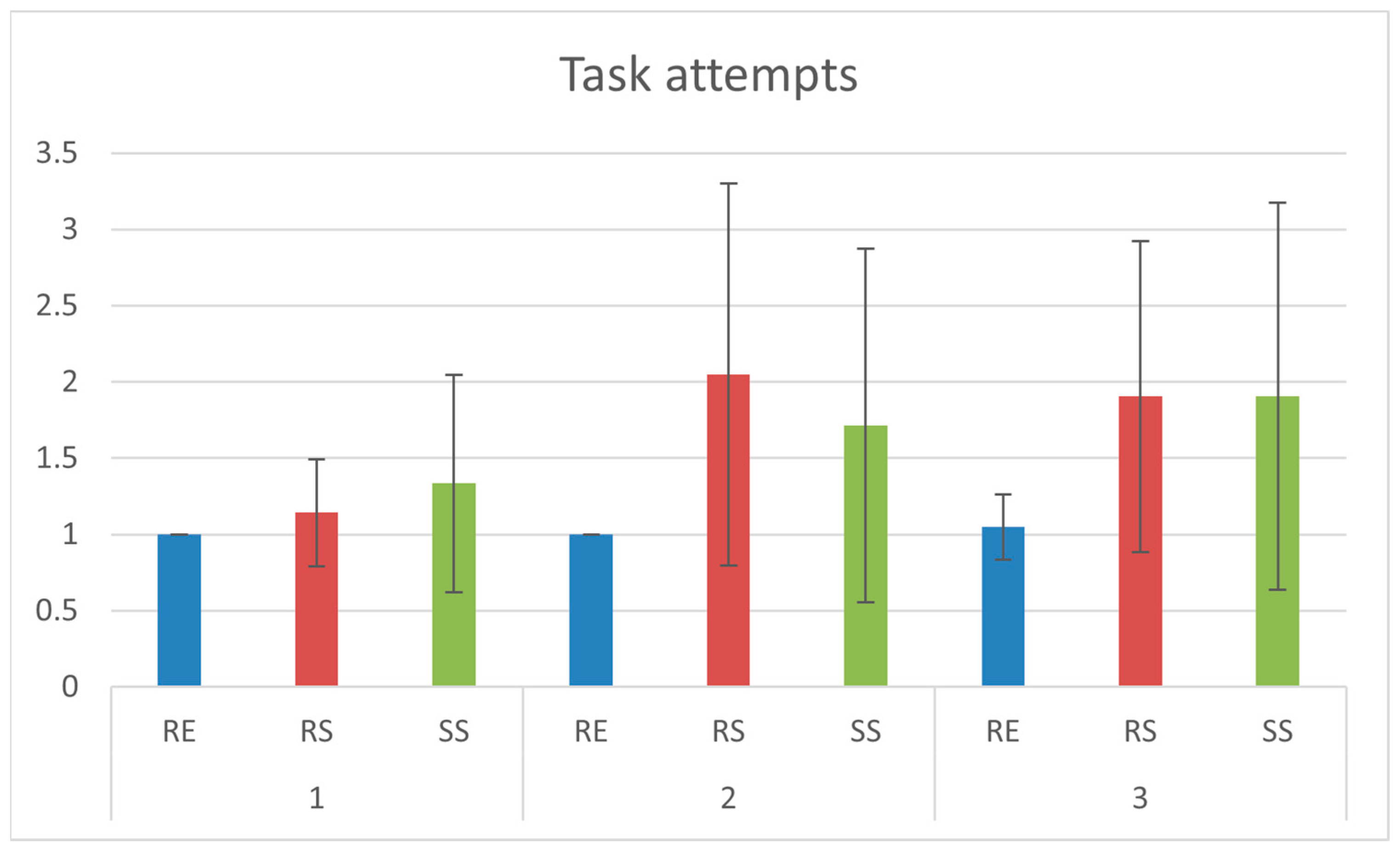

- Number of Attempts

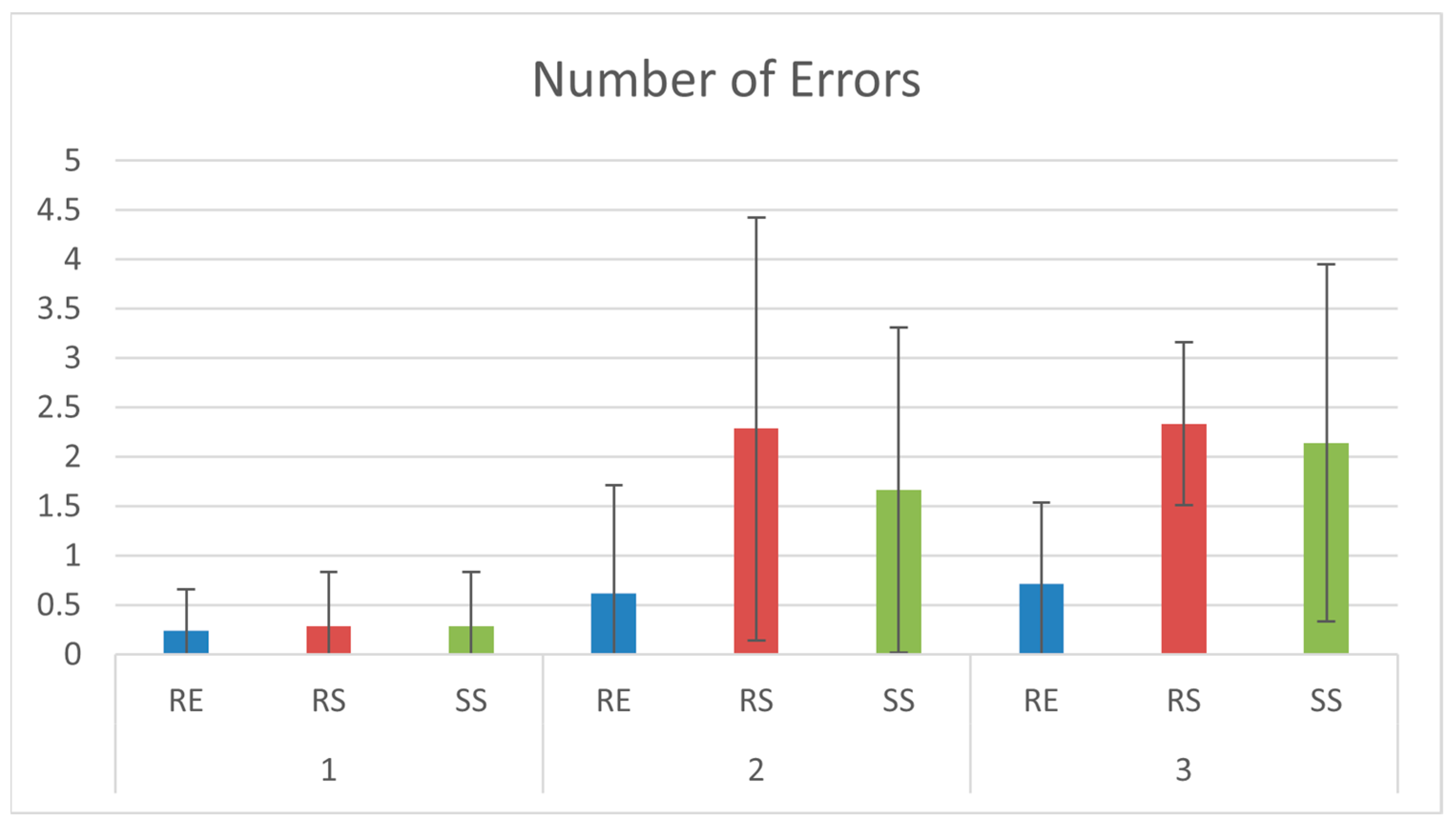

- Number of Errors

5.4. Differences Among Segmentations

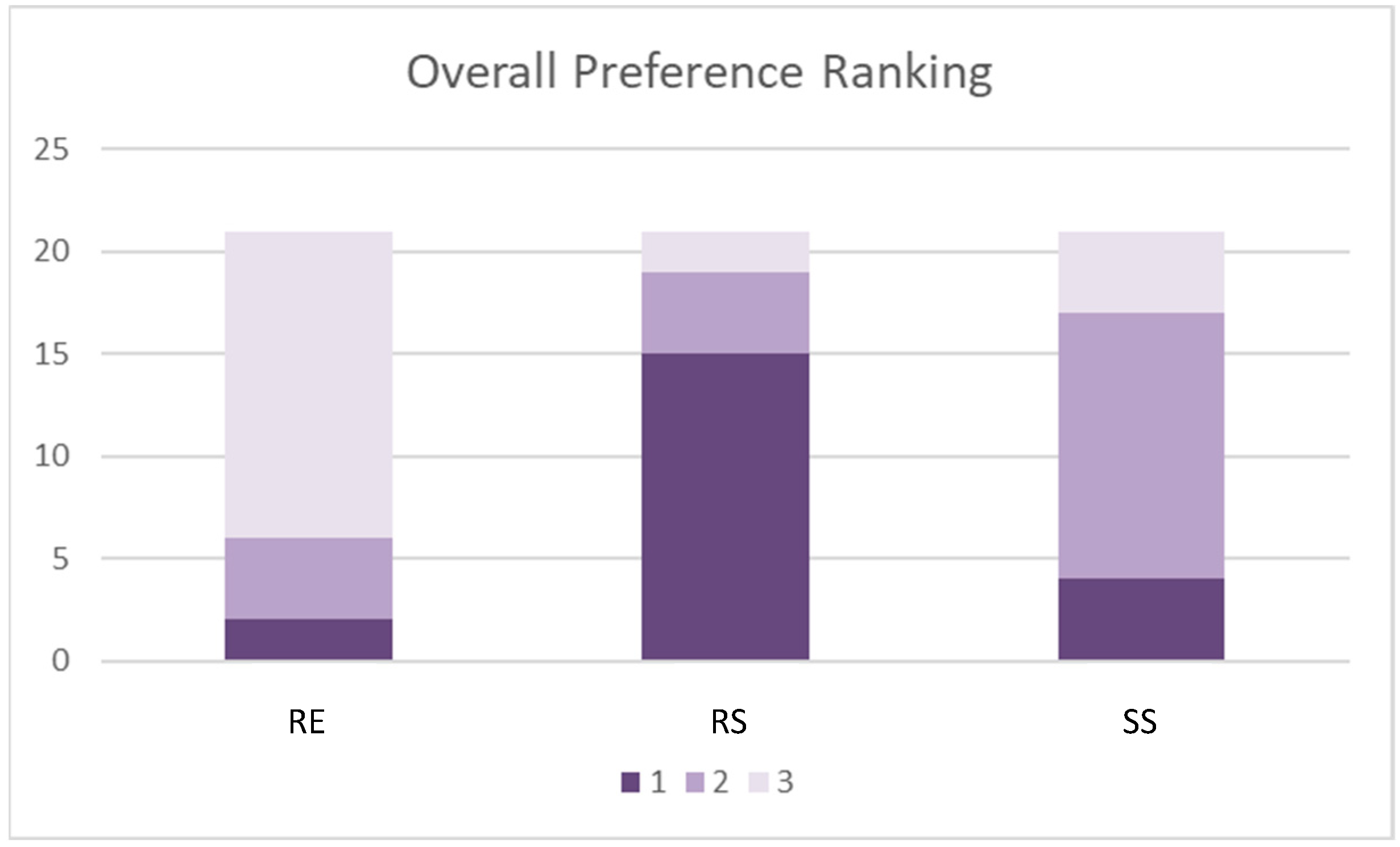

5.5. Overall Preference Metrics

- Manual control (RE): χ² = 14.00, p = 0.000456

- XR control normal speed (RS): χ² = 14.00, p = 0.000456

- XR control safety speed (SS): χ² = 7.71, p = 0.01056

5.6. Minimal Detectable Effect (MDE) Analysis

6. Discussion

- The performance data presented a more complex picture. While the RS mode significantly reduced task completion times, particularly in simpler tasks, it also resulted in an increased number of attempts and errors in more complex tasks (Tasks 2 and 3). The SS mode, intended to mitigate errors through slower operation, did not significantly reduce errors compared to the RS mode and led to longer completion times. These errors stemmed from both the system and the interaction itself. Due to calibration and the robot’s 3D model, the system had a safety offset and an additional offset from a scale error of about 1:1.02. As a result, at certain points in the circuit, the robot would touch an obstacle even when participants executed the task correctly. Nevertheless, the most frequent errors were related to hand tracking. In some cases, because of the participants’ hand position, the headset would lose tracking and the tracing would stop, sometimes requiring them to start over. Because these errors were largely system-based, testing made it possible to identify them and to observe that tracking becomes less reliable with smaller hands. The corresponding fixes will be implemented at a later stage.

- The findings suggest that users are willing to tolerate certain inefficiencies in exchange for the benefits offered by XR control. This tolerance underscores the importance of user-centered design in XR technologies, where the focus is on creating interfaces that are not only functional but also engaging and easy to use [17]. Developers should consider incorporating adaptive features that adjust to task complexity, providing additional guidance or automation in more challenging tasks to reduce errors.
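To give a sense of the magnitude of the scale error mentioned in the discussion of performance data, the sketch below assumes that a 1:1.02 ratio means that lengths are stretched by 2% between the virtual and the physical workspace, measured from a common calibration origin. Both the interpretation and the function name are assumptions, and the safety offset is not modeled.

```python
def scale_offset_mm(distance_mm: float, scale_ratio: float = 1.02) -> float:
    """Positional deviation at `distance_mm` from the (assumed) calibration origin."""
    return distance_mm * (scale_ratio - 1.0)


# Hypothetical point located 500 mm from the calibration origin.
offset = scale_offset_mm(500.0)
```

Under this reading, a point 500 mm from the origin is displaced by about 10 mm, which would be enough to touch a nearby lightweight obstacle even when the path was drawn correctly.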

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Questionnaires

Appendix A.1. Socio-Demographic Questionnaire

- Woman

- Man

- Prefer not to say

- Other

- Yes, eyeglasses

- Yes, contact lenses

- No

- I have never interacted with a robot.

- I have previously interacted at least once with a robotic arm while executing a task.

- I have experience in interacting with a robotic arm while executing a task.

- I have never interacted with AR and did not know about it until now.

- I have never interacted with AR, but I know what it is.

- I have interacted at least once with AR via mobile/tablet.

- I have interacted at least once with AR through glasses.

Appendix A.2. Questionnaire for Qualitative Assessment for UX

- I think that I would like to use this system frequently.

- I thought the system was easy to use.

- I think that I would need the support of a technical person to be able to use this system.

- I found the various functions in this system were well integrated.

- I would imagine that most people would learn to use this system very quickly.

- I found the system very laborious to use.

- The mental and perceptual activity required was high (e.g., thinking, deciding, calculating, remembering, looking, searching, etc.).

- The physical activity required was high (e.g., pushing, pulling, turning, controlling, activating, etc.).

- I felt high time pressure due to the rate or pace at which the tasks occurred.

- I think I was successful in accomplishing the goal of the task set by the experimenter. I am satisfied with my performance.

- I had to work hard (mentally and physically) to accomplish my level of performance.

- I felt insecure, discouraged, irritated, stressed and annoyed during the task.

- I was challenged, but I believed my skills would allow me to meet the challenge.

- I knew clearly what I wanted to do.

- My attention was focused entirely on what I was doing.

- I was aware of how well I was performing.

- I am in full control of what I do.

- The consequences of my actions feel like they don’t logically follow my actions.

- The way in which the robot moved made me feel uncomfortable.

- The speed with which the robot picked and released the components made me feel uneasy.

- I felt I could rely on the robot to do what it was supposed to do.

- Robot controlled manually

- Robot controlled with AR (same speed)

- Robot controlled with AR (different speed)

Comments:

References

- Gao, Z.; Wanyama, T.; Singh, I.; Gadhrri, A.; Schmidt, R. From industry 4.0 to robotics 4.0 - A conceptual framework for collaborative and intelligent robotic systems. In Procedia Manufacturing; Elsevier B.V.: Amsterdam, The Netherlands, 2020; pp. 591–599.

- Soori, M.; Dastres, R.; Arezoo, B.; Jough, F.K.G. Intelligent robotic systems in Industry 4.0: A review. J. Adv. Manuf. Sci. Technol. 2024, 4, 2024007-0.

- Xu, M.; David, J.M.; Kim, S.H. The Fourth Industrial Revolution: Opportunities and Challenges. Int. J. Financ. Res. 2018, 9, 90–95.

- Sheridan, T.B. Human-Robot Interaction; SAGE Publications Inc.: Thousand Oaks, CA, USA, 2016.

- Wang, X.V.; Kemény, Z.; Váncza, J.; Wang, L. Human–robot collaborative assembly in cyber-physical production: Classification framework and implementation. CIRP Ann. Manuf. Technol. 2017, 66, 5–8.

- Kemény, Z.; Beregi, R.; Nacsa, J.; Kardos, C.; Horváth, D. Human–robot collaboration in the MTA SZTAKI learning factory facility at Győr. Procedia Manuf. 2018, 23, 105–110.

- Nahavandi, S. Industry 5.0-a human-centric solution. Sustainability 2019, 11, 4371.

- Speicher, M.; Hall, B.D.; Nebeling, M. What is mixed reality? In Proceedings of the Conference on Human Factors in Computing Systems, Glasgow, Scotland, 4–9 May 2019.

- Azuma, R.T. A Survey of Augmented Reality. 1997. Available online: http://www.cs.unc.edu/~azumaW (accessed on 4 December 2024).

- Walker, M.; Phung, T.; Chakraborti, T.; Williams, T.; Szafir, D. Virtual, Augmented, and Mixed Reality for Human-robot Interaction: A Survey and Virtual Design Element Taxonomy. ACM Trans. Hum. Robot. Interact. 2023, 12, 1–39.

- Jiang, X.; Mattes, P.; Jia, X.; Schreiber, N.; Neumann, G.; Lioutikov, R. A Comprehensive User Study on Augmented Reality-Based Data Collection Interfaces for Robot Learning. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 11–15 March 2024; pp. 333–342.

- Chen, J.; Salas, D.A.C.; Bilal, M.; Zhou, Q.; Johal, W. Mr. LfD: A Mixed Reality Interface for Robot Learning from Demonstration. 2024. Available online: https://github.com/CHRI-Lab/MrLfD_Hub (accessed on 4 December 2024).

- Zhao, F.; Deng, W.; Pham, D.T. A Robotic Teleoperation System with Integrated Augmented Reality and Digital Twin Technologies for Disassembling End-of-Life Batteries. Batteries 2024, 10, 382.

- Steinfeld, A.; Fong, T.; Kaber, D.; Lewis, M.; Scholtz, J.; Schultz, A.; Goodrich, M. Common metrics for human-robot interaction. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 33–40.

- Goodrich, M.A.; Schultz, A.C. Human-robot interaction: A survey. Found. Trends® Hum. Comput. Interact. 2007, 1, 203–275.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–339.

- Norman, D.A. The Design of Everyday Things: Revised and Expanded Edition; Basic Books: New York, NY, USA, 2013.

- Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

- Haddadin, S.; Croft, E. Physical Human–Robot Interaction. In Springer Handbooks; Springer Science and Business Media Deutschland GmbH: Berlin/Heidelberg, Germany, 2016; pp. 1835–1874.

- Bauer, A.; Wollherr, D.; Buss, M. Human-robot collaboration: A survey. Int. J. Humanoid Robot. 2008, 5, 47–66.

- Lasota, P.A.; Rossano, G.F.; Shah, J.A. Toward safe close-proximity human-robot interaction with standard industrial robots. In Proceedings of the IEEE International Conference on Automation Science and Engineering, New Taipei, Taiwan, 18–22 August 2014; pp. 339–344.

- Delgado, J.M.D.; Oyedele, L.; Ajayi, A.; Akanbi, L.; Akinade, O.; Bilal, M.; Owolabi, H. Robotics and automated systems in construction: Understanding industry-specific challenges for adoption. J. Build. Eng. 2019, 26, 100868.

- Tashtoush, T.; Garcia, L.; Landa, G.; Amor, F.; Laborde, A.N.; Oliva, D.E.; Safar, F.G.E. Human-Robot Interaction and Collaboration (HRI-C) Utilizing Top-View RGB-D Camera System. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 10–17.

- Lasota, P.A.; Fong, T.; Shah, J.A. A Survey of Methods for Safe Human-Robot Interaction. Found. Trends® Robot. 2017, 5, 261–349.

- Zacharaki, A.; Kostavelis, I.; Gasteratos, A.; Dokas, I. Safety Bounds in Human Robot Interaction: A Survey; Elsevier B.V.: Amsterdam, The Netherlands, 2020.

- Akalin, N.; Kiselev, A.; Kristoffersson, A.; Loutfi, A. A Taxonomy of Factors Influencing Perceived Safety in Human–Robot Interaction. Int. J. Soc. Robot. 2023, 15, 1993–2004.

- Lichte, D.; Wolf, K.-D. Use case-based consideration of safety and security in cyber physical production system. In Safety and Reliability-Safe Societies in A Changing World; Taylor & Francis: Abingdon, UK, 2018; pp. 1395–1401.

- Hoffman, G. Evaluating Fluency in Human-Robot Collaboration. IEEE Trans. Hum. Mach. Syst. 2019, 49, 209–218.

- Wang, X.; Shen, L.; Lee, L.-H. Towards Massive Interaction with Generalist Robotics: A Systematic Review of XR-enabled Remote Human-Robot Interaction Systems. arXiv 2024, arXiv:2403.11384.

- Karpichev, Y.; Charter, T.; Hong, J.; Enayati, A.M.S.; Honari, H.; Tamizi, M.G.; Najjaran, H. Extended Reality for Enhanced Human-Robot Collaboration: A Human-in-the-Loop Approach. In Proceedings of the 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN), Pasadena, CA, USA, 26–30 August 2024; pp. 1991–1998.

- Brooke, J. SUS: A Quick and Dirty Usability Scale. 1995. Available online: https://www.researchgate.net/publication/228593520 (accessed on 5 December 2024).

- Kim, Y.M.; Rhiu, I.; Yun, M.H. A Systematic Review of a Virtual Reality System from the Perspective of User Experience. Int. J. Hum. Comput. Interact. 2020, 36, 893–910.

- Hart, S.; Staveland, L. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

- Dünser, A.; Grasset, R.; Billinghurst, M. A survey of evaluation techniques used in augmented reality studies. In Proceedings of the ACM SIGGRAPH ASIA 2008 Courses, SIGGRAPH Asia’08, Singapore, 10–13 December 2008.

- Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience. 1990. Available online: https://www.researchgate.net/publication/224927532 (accessed on 4 December 2024).

- Nakamura, J.; Csikszentmihalyi, M. Flow Theory and Research. In The Oxford Handbook of Positive Psychology, 2nd ed.; Oxford University Press: Oxford, UK, 2012.

- Michailidis, L.; Balaguer-Ballester, E.; He, X. Flow and immersion in video games: The aftermath of a conceptual challenge. Front. Psychol. 2018, 9, 1682.

- Hassan, L.; Jylhä, H.; Sjöblom, M.; Hamari, J. Flow in VR: A Study on the Relationships Between Preconditions, Experience and Continued Use. In Proceedings of the Annual Hawaii International Conference on System Sciences, Maui, HI, USA, 7–10 January 2020; pp. 1196–1205.

- Moore, J.W.; Middleton, D.; Haggard, P.; Fletcher, P.C. Exploring implicit and explicit aspects of sense of agency. Conscious. Cogn. 2012, 21, 1748–1753.

- Caspar, E.A.; Desantis, A.; Dienes, Z.; Cleeremans, A.; Haggard, P. The sense of agency as tracking control. PLoS ONE 2016, 11, e0163892.

- Beck, B.; Di Costa, S.; Haggard, P. Having control over the external world increases the implicit sense of agency. Cognition 2017, 162, 54–60.

- Tapal, A.; Oren, E.; Dar, R.; Eitam, B. The sense of agency scale: A measure of consciously perceived control over one’s mind, body, and the immediate environment. Front. Psychol. 2017, 8, 1552.

- Meta, “Meta Quest 3”, Meta Platforms, Menlo Park, CA, USA. 2023. Available online: https://www.meta.com/quest/quest-3/ (accessed on 1 December 2024).

- Dogangun, F.; Bahar, S.; Yildirim, Y.; Temir, B.T.; Ugur, E.; Dogan, M.D. RAMPA: Robotic Augmented Reality for Machine Programming and Automation. arXiv 2024, arXiv:2410.13412.

- Pearse, N. Deciding on the scale granularity of response categories of likert type scales: The case of a 21-point scale. Electron. J. Bus. Res. Methods 2011, 9, 159–171. Available online: www.ejbrm.com (accessed on 6 December 2024).

- NASA Task Load Index. Available online: https://digital.ahrq.gov/health-it-tools-and-resources/evaluation-resources/workflow-assessment-health-it-toolkit/all-workflow-tools/nasa-task-load-index (accessed on 30 November 2023).

- Jackson, S.; Marsh, H.W. Development and Validation of a Scale to Measure Optimal Experience: The Flow State Scale. J. Sport Exerc. Psychol. 1996, 18, 17–35.

- Charalambous, G.; Fletcher, S.; Webb, P. The Development of a Scale to Evaluate Trust in Industrial Human-robot Collaboration. Int. J. Soc. Robot. 2016, 8, 193–209.

- Evans, R.H. An Analysis of Criterion Variable Reliability in Conjoint Analysis. Percept. Mot. Ski. 1996, 82, 988–990.

- Toothaker, L.E. Book Review: Nonparametric Statistics for the Behavioral Sciences (Second Edition): Sidney Siegel and N. John Castellan, Jr. New York: McGraw-Hill, 1988, 399 pp., approx. $47.95. Appl. Psychol. Meas. 1989, 13, 217–219.

| Experience Interacting with Robots | Extended Reality: XR Glasses | Extended Reality: XR Mobile | Extended Reality: Knows What It Is but Never Used | Total |
|---|---|---|---|---|
| Experienced | 1 | 1 | 0 | 2 |
| Little experience | 2 | 2 | 2 | 6 |
| No experience | 5 | 3 | 5 | 13 |
| Total | 8 | 6 | 7 | 21 |

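| Interaction with the Robot | Speed of the Robot | Level of the Task |
|---|---|---|
| Manual robot manipulation | Same speed as user (RE) | Level 1: Easy, motion control (x,y) |
| Manual robot manipulation | Same speed as user (RE) | Level 2: Medium, dexterity (x,y) |
| Manual robot manipulation | Same speed as user (RE) | Level 3: Advanced, dexterity and precision (x,y,z) |
| XR-based robot manipulation | Same speed as user (RS) | Level 1: Easy, motion control (x,y) |
| XR-based robot manipulation | Same speed as user (RS) | Level 2: Medium, dexterity (x,y) |
| XR-based robot manipulation | Same speed as user (RS) | Level 3: Advanced, dexterity and precision (x,y,z) |
| XR-based robot manipulation | Safety speed: five times slower than user (SS) | Level 1: Easy, motion control (x,y) |
| XR-based robot manipulation | Safety speed: five times slower than user (SS) | Level 2: Medium, dexterity (x,y) |
| XR-based robot manipulation | Safety speed: five times slower than user (SS) | Level 3: Advanced, dexterity and precision (x,y,z) |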

| Variable | Tool | Number of Items |
|---|---|---|
| Usability | System usability scale | 6 |
| Workload | NASA-TLX | 6 |
| Flow state | Flow state scale | 4 |
| Agency | Sense of agency scale | 2 |
| Trust | Trust in Industrial Human–Robot Collaboration Questionnaire | 3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Escallada, O.; Osa, N.; Lasa, G.; Mazmela, M.; Doğangün, F.; Yildirim, Y.; Bahar, S.; Ugur, E. Human Attitudes in Robotic Path Programming: A Pilot Study of User Experience in Manual and XR-Controlled Robotic Arm Manipulation. Multimodal Technol. Interact. 2025, 9, 27. https://doi.org/10.3390/mti9030027
