Bag of Features (BoF) Based Deep Learning Framework for Bleached Corals Detection

Abstract

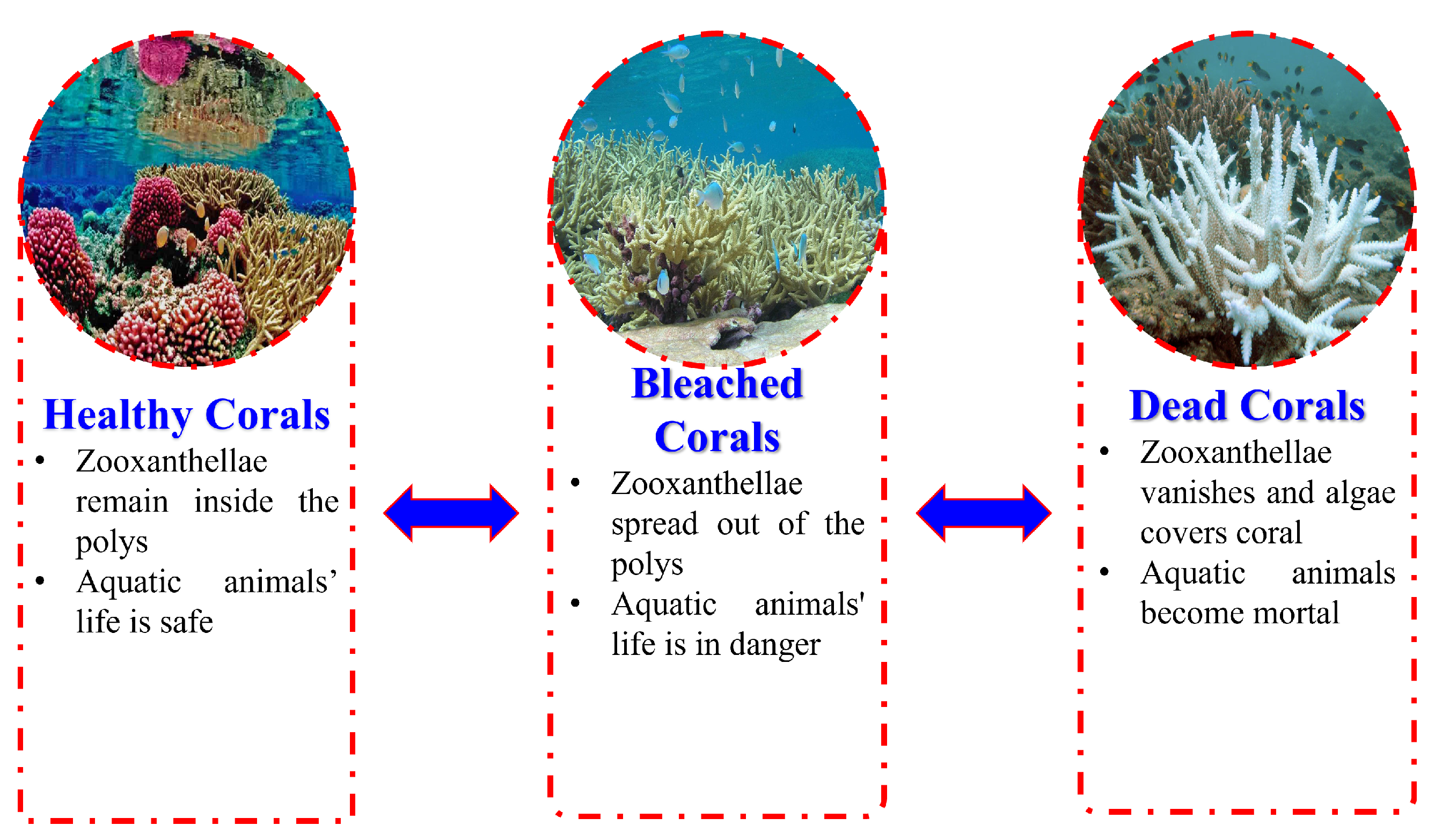

1. Introduction

2. Related Work

Motivation and Contribution

- We design a novel custom CNN, named CoralNet, for the classification of bleached and unbleached corals.

- We propose a novel Bag of Features (BoF) technique integrated with an SVM to classify bleached and unbleached corals with high accuracy. The BoF is a vector that combines handcrafted features extracted with HOG and LBP with spatial features extracted with AlexNet and CoralNet.

- We also propose a novel bleached corals positioning algorithm to locate bleached corals.
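One way to picture the BoF vector from the list above is as a concatenation of per-image descriptors. The sketch below is only an illustration under stated assumptions, not the authors' implementation: `lbp_histogram` is a toy 3x3 LBP written in plain Python, `build_feature_vector` is a hypothetical helper name, and `cnn_features` is a made-up stand-in for activations that would come from a network such as AlexNet or CoralNet. HOG extraction and the SVM step are omitted.

```python
from typing import List, Sequence

Image = List[List[int]]  # grayscale image as rows of 0-255 intensities


def lbp_histogram(img: Image) -> List[float]:
    """Normalized 256-bin histogram of basic 3x3 LBP codes (interior pixels only)."""
    # Eight neighbours, visited clockwise starting at the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    rows, cols = len(img), len(img[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            center = img[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # A neighbour at least as bright as the centre sets its bit.
                if img[r + dr][c + dc] >= center:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist) or 1
    return [h / total for h in hist]


def build_feature_vector(*parts: Sequence[float]) -> List[float]:
    """Concatenate per-image descriptors into one flat vector (hypothetical helper)."""
    vector: List[float] = []
    for part in parts:
        vector.extend(part)
    return vector


# Toy 4x4 image; cnn_features is a placeholder, not a real network output.
image = [
    [10, 10, 10, 10],
    [10, 50, 50, 10],
    [10, 50, 50, 10],
    [10, 10, 10, 10],
]
cnn_features = [0.12, 0.80, 0.05]
vector = build_feature_vector(lbp_histogram(image), cnn_features)
```

In this toy setup the combined vector has 256 LBP bins followed by the three placeholder values; a real pipeline would pass one such vector per image to the classifier.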

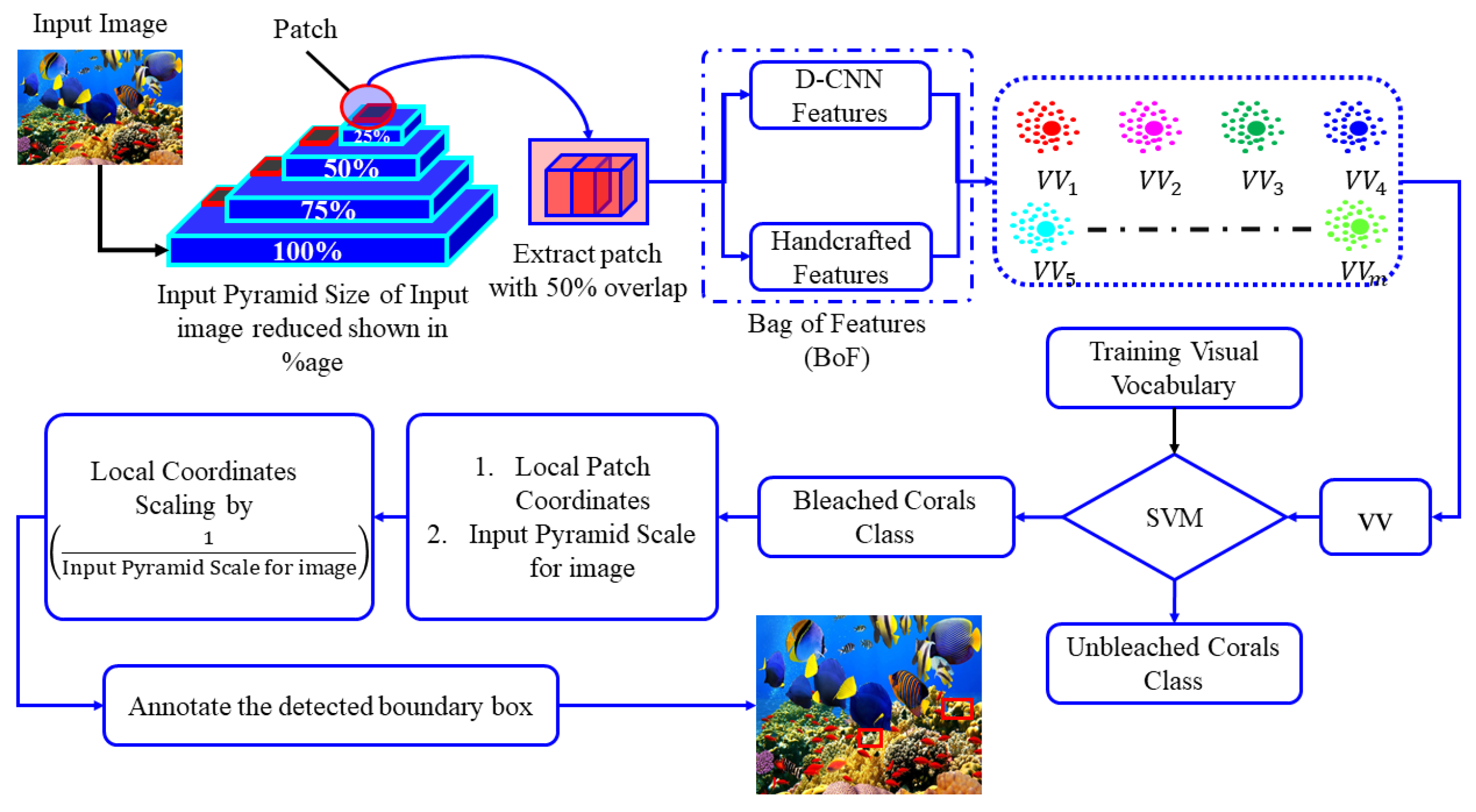

3. Proposed Framework

3.1. Explanation of Steps

3.2. Feature Extraction

3.2.1. Spatial Features

3.2.2. Pretrained D-CNN

3.2.3. Custom D-CNN: CoralNet

3.2.4. Handcrafted Features

3.3. Bag of Features (BoF) and Visual Vocabulary (VV)

3.3.1. K-Means Clustering Algorithm

Algorithm 1: k-means Clustering Algorithm.
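As a rough illustration of the standard k-means procedure (Lloyd's algorithm), the sketch below alternates an assignment step and a centroid update step until the assignments stop changing. It is not the authors' exact Algorithm 1: the function name `k_means`, the choice of the first `k` points as initial centroids, and the toy 2-D points are all assumptions made for this example.

```python
from typing import List, Sequence, Tuple

Point = Sequence[float]


def squared_distance(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(points: List[Point], k: int, max_iter: int = 100
            ) -> Tuple[List[List[float]], List[int]]:
    """Lloyd's algorithm; returns (centroids, cluster label for each point)."""
    # Assumption: the first k points seed the centroids. Random or
    # k-means++ seeding is more common in practice.
    centroids = [list(p) for p in points[:k]]
    labels = [-1] * len(points)
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the centroids are too
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, labels


# Toy data: two well-separated groups of 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centroids, labels = k_means(points, k=2)
```

On this toy data the first three points end up in one cluster and the last three in the other. For a visual vocabulary, `points` would be feature vectors rather than 2-D coordinates.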

3.3.2. Validation of Clusters

Algorithm 2: Silhouette Analysis.
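Silhouette analysis is commonly used to judge how well separated a set of clusters is. The sketch below computes the mean silhouette coefficient with Euclidean distance, using the textbook definition s = (b - a) / max(a, b). It is an illustrative assumption about how such a check could look, not a reproduction of the authors' Algorithm 2; the function name `silhouette_score` and the 1-D toy points are hypothetical.

```python
from math import dist  # available from Python 3.8
from typing import Dict, List, Sequence


def silhouette_score(points: List[Sequence[float]], labels: List[int]) -> float:
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    clusters: Dict[int, List[Sequence[float]]] = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        # (the point's zero distance to itself does not affect the sum).
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(dist(p, q) for q in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy 1-D data: two tight, well-separated groups.
points = [(0.0,), (1.0,), (10.0,), (11.0,)]
good = silhouette_score(points, [0, 0, 1, 1])
bad = silhouette_score(points, [0, 1, 0, 1])
```

A score close to 1 indicates compact, well-separated clusters, while a negative score (as for the deliberately mixed labelling `bad`) suggests points sit in the wrong cluster. Comparing scores across several values of k is one common way to pick the number of clusters.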

3.4. Classifier

3.5. Confusion Matrix

- True Positive: A bleached coral that is correctly predicted as bleached.

- True Negative: An unbleached coral that is correctly predicted as unbleached.

- False Positive: An unbleached coral that is incorrectly predicted as bleached.

- False Negative: A bleached coral that is incorrectly predicted as unbleached.

- Sensitivity: The fraction of bleached corals that are correctly predicted as bleached; it is given by Equation (4).

- Specificity: The fraction of unbleached corals that are correctly predicted as unbleached; it is given by Equation (5).

- Accuracy: The ratio of correct predictions to the total number of instances; it is given by Equation (6).

- F1-score: The harmonic mean of precision and sensitivity (recall); it is given by Equation (7).
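The metrics in the list above can be computed directly from the four confusion-matrix counts. The sketch below uses the standard definitions, with bleached as the positive class and F1 taken as the harmonic mean of precision and sensitivity. The function name `binary_metrics` and the example counts are hypothetical and are not results from the paper; zero denominators are not handled.

```python
from typing import Dict


def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Standard binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # recall on the positive (bleached) class
    specificity = tn / (tn + fp)   # recall on the negative (unbleached) class
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "f1": f1,
    }


# Hypothetical counts chosen only to exercise the formulas.
metrics = binary_metrics(tp=90, tn=85, fp=15, fn=10)
```

With these made-up counts, sensitivity is 0.90, specificity is 0.85, accuracy is 0.875, and F1 is 180/205, roughly 0.878.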

3.6. Dataset

3.7. Bleached Corals Positioning Algorithm

Algorithm 3: Bleached Corals Positioning Algorithm.


4. Experimental Results

4.1. Generalized Performance of BoF Model on Moorea Corals Dataset

4.2. Bleached Corals Localization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hedley, J.D.; Roelfsema, C.M.; Chollett, I.; Harborne, A.R.; Heron, S.F.; Weeks, S.; Skirving, W.J.; Strong, A.E.; Eakin, C.M.; Christensen, T.R.L.; et al. Remote Sensing of Coral Reefs for Monitoring and Management: A Review. Remote Sens. 2016, 8, 118.

- Sully, S.; Burkepile, D.E.; Donovan, M.K.; Hodgson, G.; Woesik, R. A global analysis of coral bleaching over the past two decades. Nat. Commun. 2019, 10, 1264.

- Conti-Jerpe, I.; Thompson, P.D.; Wong, C.; Oliveira, N.L.; Duprey, N.; Moynihan, M.; Baker, D. Trophic strategy and bleaching resistance in reef-building corals. Sci. Adv. 2020, 6, eaaz5443.

- DeCarlo, T.M.; Gajdzik, L.; Ellis, J.; Coker, D.J.; Roberts, M.B.; Hammerman, N.M.; Pandolfi, J.M.; Monroe, A.A.; Berumen, M.L. Nutrient-supplying ocean currents modulate coral bleaching susceptibility. Sci. Adv. 2020, 6, 5493–5499.

- Fawad; Jamil Khan, M.; Rahman, M.; Amin, Y.; Tenhunen, H. Low-rank multi-channel features for robust visual object tracking. Symmetry 2019, 11, 1155.

- González-Rivero, M.; Beijbom, O.; Rodriguez-Ramirez, A.; Ganase, A.; Gonzalez-Marrero, Y.; Herrera-Reveles, A.; Kennedy, E.V.; Kim, C.J.; Lopez-Marcano, S.; Markey, K. Monitoring of coral reefs using artificial intelligence: A feasible and cost-effective approach. Remote Sens. 2020, 12, 489–502.

- Liu, B.; Liu, Z.; Men, S.; Li, Y.; Ding, Z.; He, J.; Zhao, Z. Underwater hyperspectral imaging technology and its applications for detecting and mapping the seafloor: A review. Sensors 2020, 20, 4962.

- Yang, B.; Xiang, L.; Chen, X.; Jia, W. An online chronic disease prediction system based on incremental deep neural network. Comput. Mater. Contin. 2021, 67, 951–964.

- Mahmood, A.; Bennamoun, M.; Sohel, F.A.; Gary, R.; Kendrick, A.; Hovey, R.; Kendrick, G.A.; Fisher, R.B. Deep image representations for coral image classification. IEEE J. Ocean. Eng. 2019, 44, 121–131.

- Tekin, R.; Ertuğrul, Ö.F.; Kaya, Y. New local binary pattern approaches based on color channels in texture classification. Multimed. Tools Appl. 2020, 79, 32541–32561.

- Yuan, B.H.; Liu, G.H. Image retrieval based on the gradient-structures histogram. Neural Comput. Appl. 2020, 32, 11717–11727.

- Song, T.; Li, H.; Meng, F.; Wu, Q.; Cai, J. LETRIST: Locally encoded transform feature histogram for rotation-invariant texture classification. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 1565–1579.

- Kaya, Y.; Kuncan, M.; Kaplan, K.; Minaz, M.R.; Ertunç, H.M. A new feature extraction approach based on one-dimensional gray level co-occurrence matrices for bearing fault classification. J. Exp. Theor. Artif. Intell. 2021, 33, 161–178.

- Nkenyereye, L.; Tama, B.A.; Lim, S. A stacking-based deep neural network approach for effective network anomaly detection. Comput. Mater. Contin. 2021, 66, 2217–2227.

- Murala, S.; Maheshwari, R.P.; Balasubramanian, R. Local tetra patterns: A new feature descriptor for content-based image retrieval. IEEE Trans. Image Process. 2012, 21, 2874–2886.

- Bemani, A.; Baghban, A.; Shamshirband, S.; Mosavi, A.; Csiba, P.; Várkonyi-Kóczy, A.R. Applying ann, anfis, and lssvm models for estimation of acid solvent solubility in supercritical CO2. Comput. Mater. Contin. 2020, 63, 1175–1204.

- Jamil, S.; Rahman, M.; Ullah, A.; Badnava, S.; Forsat, M.; Mirjavadi, S.S. Malicious uav detection using integrated audio and visual features for public safety applications. Sensors 2020, 20, 3923.

- Chu, Y.; Yue, X.; Yu, L.; Sergei, M.; Wang, Z. Automatic image captioning based on ResNet50 and LSTM with soft attention. Wirel. Commun. Mob. Comput. 2020, 2020, 8909458.

- Wazirali, R. Intrusion detection system using fknn and improved PSO. Comput. Mater. Contin. 2021, 67, 1429–1445.

- Alsharman, N.; Jawarneh, I. Googlenet cnn neural network towards chest ct coronavirus medical image classification. J. Comput. Sci. 2020, 16, 620–625.

- Joshi, K.; Tripathi, V.; Bose, C.; Bhardwaj, C. Robust sports image classification using inceptionv3 and neural networks. Procedia Comput. Sci. 2020, 167, 2374–2381.

- Bennett, M.K.; Younes, N.; Joyce, K. Automating Drone Image Processing to Map Coral Reef Substrates Using Google Earth Engine. Drones 2020, 4, 50.

- Raphael, A.; Dubinsky, Z.; Iluz, D.; Netanyahu, N.S. Neural Network Recognition of Marine Benthos and Corals. Diversity 2020, 12, 29.

- Odagawa, S.; Takeda, T.; Yamano, H.; Matsunaga, T. Bottom-type classification in coral reef area using hyperspectral bottom index imagery. In Proceedings of the 2015 7th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Tokyo, Japan, 2–5 June 2015; pp. 1–4.

- Xu, J.; Zhao, J.; Wang, F.; Chen, Y.; Lee, Z. Detection of Coral Reef Bleaching Based on Sentinel-2 Multi-Temporal Imagery: Simulation and Case Study. Front. Mar. Sci. 2021, 8, 268.

- Saliu, F.; Montano, S.; Leoni, B.; Lasagni, M.; Galli, P. Microplastics as a threat to coral reef environments: Detection of phthalate esters in neuston and scleractinian corals from the Faafu Atoll, Maldives. Mar. Pollut. Bull. 2019, 142, 234–241.

- Lodhi, V.; Chakravarty, D.; Mitra, P. Hyperspectral imaging for earth observation: Platforms and instruments. J. Indian Inst. Sci. 2018, 98, 429–443.

- Mahmood, A.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F.; Hovey, R.; Kendrick, G.; Fisher, R.B. Coral classification with hybrid feature representations. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; Volume 1, pp. 519–523.

- Meng, L.; Hirayama, T.; Oyanagi, S. Underwater-drone with panoramic camera for automatic fish recognition based on deep learning. IEEE Access 2018, 6, 17880–17886.

- Han, K.-X.; Chien, W.; Chiu, C.-C.; Cheng, Y.-T. Application of Support Vector Machine (SVM) in the Sentiment Analysis of Twitter DataSet. Appl. Sci. 2020, 10, 1125.

- Kranjčić, N.; Medak, D.; Župan, R.; Rezo, M. Support Vector Machine Accuracy Assessment for Extracting Green Urban Areas in Towns. Remote Sens. 2019, 11, 655.

- Rahman, M.H.; Shahjalal, M.; Hasan, M.K.; Ali, M.O.; Jang, Y.M. Design of an SVM Classifier Assisted Intelligent Receiver for Reliable Optical Camera Communication. Sensors 2021, 21, 4283.

- Fan, J.; Lee, J.; Lee, Y. A Transfer Learning Architecture Based on a Support Vector Machine for Histopathology Image Classification. Appl. Sci. 2021, 11, 6380.

- Chicco, D.; Warrens, M.J.; Jurman, G. The Matthews Correlation Coefficient (MCC) is More Informative Than Cohen’s Kappa and Brier Score in Binary Classification Assessment. IEEE Access 2021, 9, 78368–78381.

- Shihavuddin, ASM. Coral reef dataset. Mendeley Data 2017, V2.

- Bleached and Unbleached Corals Classification. Available online: https://www.kaggle.com/sonainjamil/bleached-corals-detection (accessed on 22 September 2021).

- BHD Corals. Available online: https://www.kaggle.com/sonainjamil/bhd-corals (accessed on 23 September 2021).

- Moorea Labeled Corals. Available online: http://vision.ucsd.edu/content/moorea-labeled-corals (accessed on 27 September 2021).

- Beijbom, O.; Edmunds, P.J.; Kline, D.I.; Mitchell, B.G.; Kriegman, D. Automated Annotation of Coral Reef Survey Images. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 1170–1177.

| Parameter | Value |
|---|---|
| Optimizer | Adam |
| Epochs | 10 |
| Batch Size | 64 |
| Loss Function | Cross Entropy |

| Technique’s Name | SVM Kernel | Sensitivity | Specificity | Accuracy | F1-Score | Cohen’s Kappa |
|---|---|---|---|---|---|---|
| LBP [10] | Polynomial | 70.1% | 75.9% | 71.8% | 0.729 | 0.731 |
| HOG [11] | Linear | 66.3% | 69.3% | 67.1% | 0.678 | 0.663 |
| LETRIST [12] | Linear | 56.2% | 59.7% | 56.6% | 0.579 | 0.594 |
| GLCM [13] | RBF | 66.2% | 75.1% | 69.3% | 0.704 | 0.732 |
| GLCM [13] | Polynomial | 73.1% | 80.4% | 76.7% | 0.766 | 0.751 |
| CJLBP [14] | Linear | 71.2% | 77.3% | 72.7% | 0.741 | 0.743 |
| LTrP [15] | Linear | 48.4% | 50.2% | 49.1% | 0.493 | 0.524 |
| AlexNet [17] | Linear | 94.1% | 96.3% | 95.2% | 0.952 | 0.966 |
| ResNet-50 [18] | Linear | 92.2% | 96.4% | 94.5% | 0.942 | 0.952 |
| VGG-19 [19] | Linear | 92.1% | 92.1% | 92.2% | 0.921 | 0.851 |
| GoogleNet [20] | Linear | 85.1% | 93.1% | 88.2% | 0.889 | 0.873 |
| Inceptionv3 [21] | Linear | 77.1% | 92.3% | 83.3% | 0.840 | 0.862 |
| CoralNet | – | 92.1% | 97.3% | 95.0% | 0.950 | 0.962 |
| BoF | Linear | 99.1% | 99.0% | 99.08% | 0.995 | 0.982 |

| Technique’s Name | SVM Kernel | Sensitivity | Specificity | Accuracy | F1-Score | Cohen’s Kappa |
|---|---|---|---|---|---|---|
| LBP [10] | Quadratic | 70.56% | 70.56% | 70.60% | 0.706 | 0.411 |
| HOG [11] | Linear | 94.64% | 94.40% | 94.40% | 0.945 | 0.889 |
| LETRIST [12] | Linear | 58.2% | 61.7% | 58.6% | 0.599 | 0.534 |
| GLCM [13] | RBF | 69.2% | 78.1% | 72.3% | 0.714 | 0.702 |
| GLCM [13] | Cubic | 72.1% | 81.2% | 77.3% | 0.756 | 0.731 |
| CJLBP [14] | Linear | 73.2% | 75.3% | 73.7% | 0.751 | 0.723 |
| LTrP [15] | Linear | 50.2% | 53.2% | 51.1% | 0.529 | 0.506 |
| AlexNet [17] | Linear | 97.78% | 97.78% | 97.80% | 0.978 | 0.956 |
| ResNet-50 [18] | Linear | 98.91% | 98.89% | 98.90% | 0.989 | 0.978 |
| VGG-19 [19] | Linear | 94.3% | 94.3% | 94.5% | 0.943 | 0.884 |
| GoogleNet [20] | Linear | 93.33% | 93.33% | 93.33% | 0.933 | 0.867 |
| Inceptionv3 [21] | Linear | 95.56% | 95.56% | 95.60% | 0.956 | 0.911 |
| CoralNet | – | 92.1% | 97.3% | 95.0% | 0.950 | 0.962 |
| BoF | Linear | 99.2% | 98.9% | 99.0% | 0.985 | 0.984 |

| Technique’s Name | Classifier | Sensitivity | Specificity | Accuracy | F1-Score | Cohen’s Kappa |
|---|---|---|---|---|---|---|
| LBP [10] | SVM | 69.3% | 71.4% | 69.8% | 0.689 | 0.691 |
| HOG [11] | SVM | 74.42% | 60.05% | 75.2% | 0.665 | 0.621 |
| LETRIST [12] | SVM | 55.3% | 58.5% | 55.4% | 0.569 | 0.584 |
| GLCM [13] | SVM | 65.2% | 74.1% | 68.3% | 0.694 | 0.722 |
| CJLBP [14] | SVM | 70.2% | 76.3% | 71.7% | 0.731 | 0.733 |
| LTrP [15] | SVM | 47.4% | 49.2% | 48.1% | 0.483 | 0.514 |
| AlexNet [17] | SVM | 86.37% | 83.73% | 92.20% | 0.850 | 0.826 |
| ResNet-50 [18] | SVM | 85.43% | 85.80% | 92.60% | 0.856 | 0.852 |
| VGG-19 [19] | SVM | 82.1% | 82.1% | 82.2% | 0.821 | 0.781 |
| GoogleNet [20] | SVM | 80.55% | 80.51% | 88.60% | 0.805 | 0.803 |
| Inceptionv3 [21] | SVM | 81.10% | 76.44% | 86.30% | 0.787 | 0.761 |
| CoralNet | – | 91.1% | 96.3% | 94.0% | 0.940 | 0.952 |
| BoF | SVM | 98.1% | 98.0% | 98.11% | 0.985 | 0.972 |

| Technique’s Name | Classifier | Sensitivity | Specificity | Accuracy | F1-Score | Cohen’s Kappa |
|---|---|---|---|---|---|---|
| LBP [10] | SVM | 67.5% | 70.2% | 67.8% | 0.676 | 0.683 |
| HOG [11] | SVM | 75.37% | 61.15% | 76.35% | 0.665 | 0.634 |
| LETRIST [12] | SVM | 56.50% | 59.63% | 56.56% | 0.585 | 0.591 |
| GLCM [13] | SVM | 64.21% | 73.89% | 67.24% | 0.683 | 0.710 |
| CJLBP [14] | SVM | 72.54% | 78.65% | 73.45% | 0.752 | 0.753 |
| LTrP [15] | SVM | 46.39% | 49.89% | 48.45% | 0.476 | 0.503 |
| AlexNet [17] | SVM | 90.13% | 91.84% | 93.80% | 0.910 | 0.916 |
| ResNet-50 [18] | SVM | 88.53% | 93.90% | 93.23% | 0.893 | 0.891 |
| VGG-19 [19] | SVM | 85.70% | 85.70% | 85.80% | 0.858 | 0.803 |
| GoogleNet [20] | SVM | 90.85% | 90.61% | 94.30% | 0.907 | 0.901 |
| Inceptionv3 [21] | SVM | 86.52% | 83.39% | 90.81% | 0.865 | 0.878 |
| CoralNet | – | 91.1% | 96.3% | 94.0% | 0.940 | 0.952 |
| BoF | SVM | 98.07% | 98.10% | 98.09% | 0.983 | 0.970 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jamil, S.; Rahman, M.; Haider, A. Bag of Features (BoF) Based Deep Learning Framework for Bleached Corals Detection. Big Data Cogn. Comput. 2021, 5, 53. https://doi.org/10.3390/bdcc5040053
