1. Introduction

The Internet is a major source of health information [

1,

2,

3]. The public consumes health content and advice from a wide range of actors including public health agencies, corporations, healthcare professionals and increasingly influencers of all levels [

2,

4]. In the last decade, with the rise of social media, the volume and sources of health information have multiplied dramatically, with an associated increase in the rate of propagation. Health information on social media is not subject to the same degree of filtering and quality control by professional gatekeepers that is common in either public health or commercial sources, and it is particularly prone to being out of date, incomplete and inaccurate [

5]. Furthermore, there is extensive evidence that individuals and organisations promote health information that is contrary to accepted scientific evidence or public policy, and in extreme cases, is deceptive, unethical and misleading [

6,

7]. This is also true in the context of online communications relating to the COVID-19 pandemic [

8,

9]. Within two months of the disclosure of the first COVID-19 case in Wuhan, China, the Director General of the World Health Organisation (WHO) was prompted to declare: “

We’re not just fighting an epidemic; we’re fighting an infodemic” [

10]. During the first year of the pandemic, the WHO identified over 30 discrete topics that are the subject of misinformation in the COVID-19 discourse [

11].

During the first year of the pandemic, Brazil was one of the global epicentres of the COVID-19 pandemic, both in terms of infections and deaths. From 3 January 2020 to 9 February 2021, the WHO reported 9,524,640 confirmed cases of COVID-19 and 231,534 COVID-19-related deaths in Brazil, the third highest rate in the world after the USA and India [

12]. Like most governments worldwide, the Brazilian government was blindsided by the rapid transmission and impact of COVID-19. For much of 2020, COVID-19 was a pandemic characterised by uncertainty in transmission, pathogenicity and strain-specific control options [

13]. Against this backdrop, the Brazilian government had to balance disease mitigation through interventions such as social distancing, travel restrictions and closure of educational institutions and non-essential businesses with the effects they have on Brazilian society and economy. The success of such strategies depends on the effectiveness of government authorities executing both communication and enabling measures, and the response of individuals and communities [

14]. Unfortunately, research suggests significant incongruities between advice offered by Brazilian federal government officials and public health agencies [

15,

16,

17].

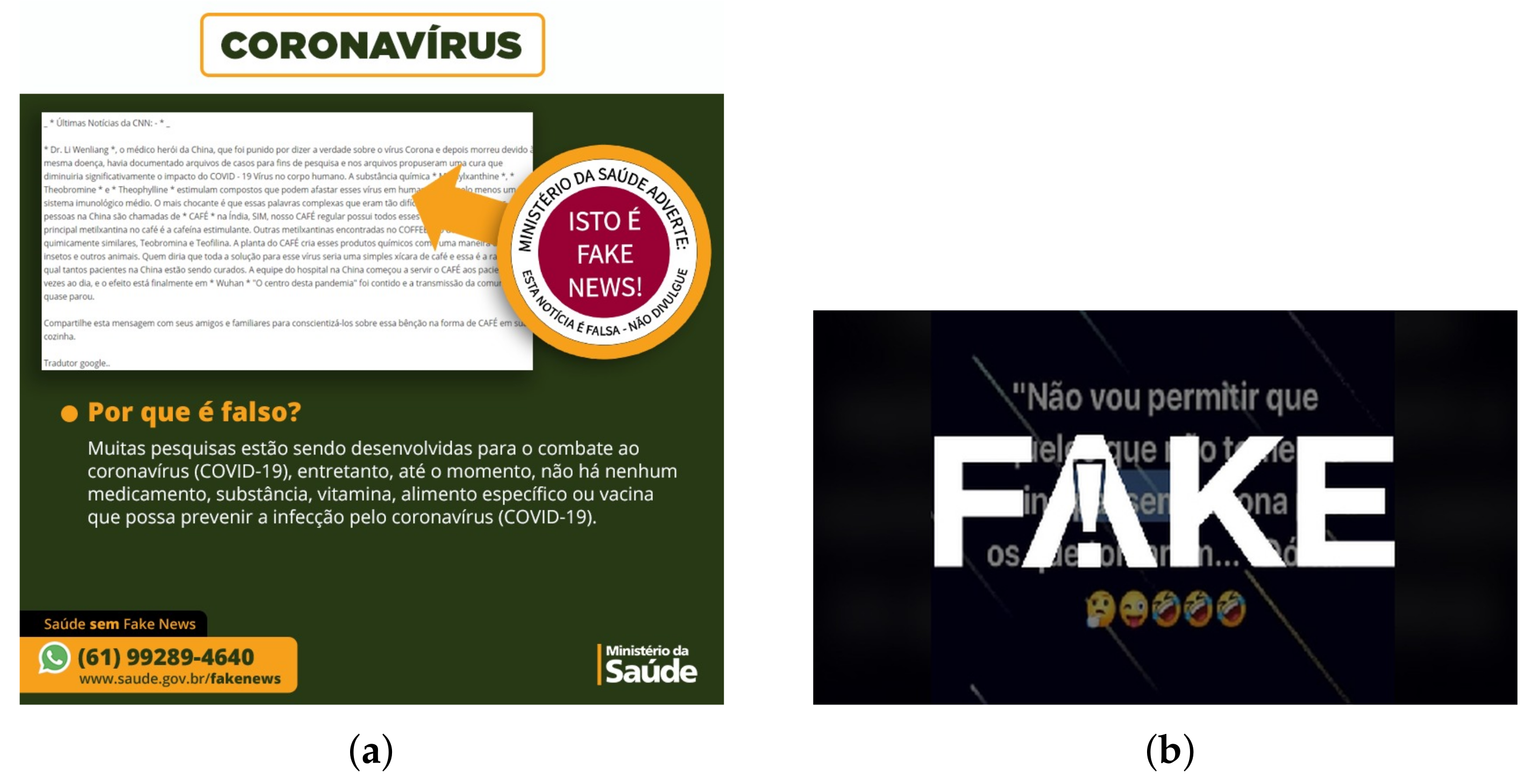

Even before the COVID-19 pandemic, Brazil faced challenges in health literacy levels [

18] and increasing distrust in vaccines and vaccination [

19]. It is established that humans can be both irrational and vulnerable when distinguishing between truth and falsehood [

20]. This situation is exacerbated where truths and falsehoods are repeated by traditional sources of trustworthy information, namely the news media and government, and then shared and amplified by peers via social media. In Brazil, the threat of fake COVID-19 news resulted in the Ministry of Health launching an initiative,

Saúde sem Fake News [

21], in an effort to identify and counteract the spread of fake news. There have been no updates since June 2020. In the absence of adequate countermeasures to stem the rise of fake news and against the backdrop of conflicting communications from federal government and public health agencies, Cardoso et al. [

18] described Brazil as “

… a fertile field for misinformation that hinders adequate measures taken to mitigate COVID-19”.

The complexity of dealing with communication during a health crisis is quite high, as social media, compared with traditional media, is more difficult to monitor, track and analyse [

22], and people can easily become misinformed [

23]. Given this context, it is important to develop mechanisms to monitor and mitigate the dissemination of online fake news at scale [

24]. To this end, machine learning and deep learning models provide a potential solution. However, most of the current literature relies on datasets in resource-rich languages, such as English, to train and test models, and studies of other languages, such as Portuguese, face many challenges in finding or producing benchmark datasets. Our focus is on fake news about COVID-19 in the Brazilian Portuguese language that circulated in Brazil during the period of January 2020 to February 2021. Portuguese is a pluricentric or polycentric language, in that it possesses more than one standard (national) variety, e.g., European Portuguese and Brazilian Portuguese, as well as African varieties. Furthermore, Brazilian Portuguese has been characterised as highly diglossic, i.e., it has a formal traditional form of the language, the so-called H-variant, and a vernacular form, the L-variant, as well as a wide range of dialects [

25,

26]. The COVID-19 pandemic introduced new terms and new public health concepts to the global linguistic repertoire, which in turn introduced a number of language challenges, not least problems related to the translation and use of multilingual terminology in public health information and medical research from dominant languages [

27]. Consequently, models built on English language translation that do not take into account the specific features of the Brazilian Portuguese language and the specific language challenges of COVID-19 are likely to be inadequate, which motivates this work.

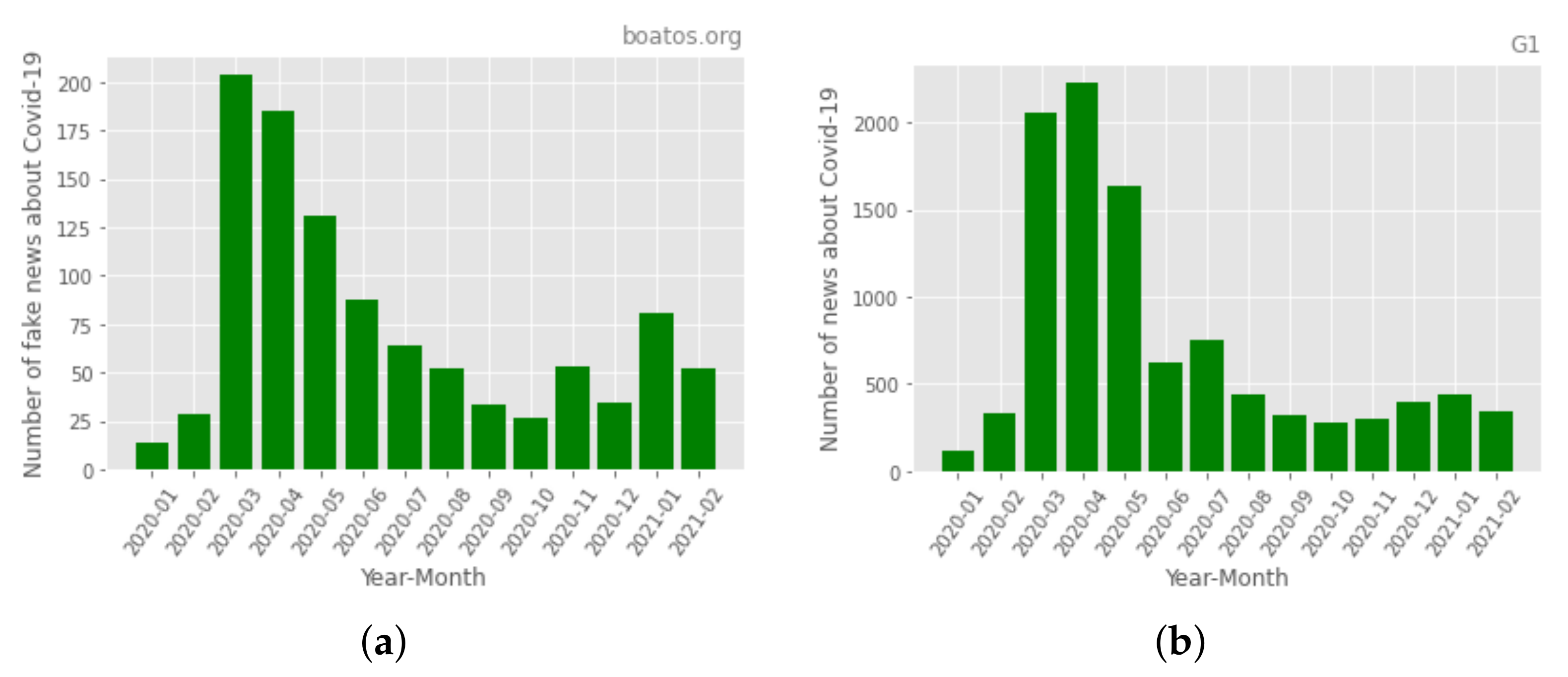

This article makes a number of contributions. Firstly, we provide a dataset composed of 11,382 articles in the Portuguese language comprising 10,285 articles labelled “true news” and 1047 articles labelled “fake news” in relation to COVID-19. Secondly, we present an exploratory data analysis on COVID-19 fake news that circulated in Brazil during the first year of the pandemic. Thirdly, we propose and compare machine learning and deep learning models to detect COVID-19 fake news in the Brazilian Portuguese language, and analyse the impact of removing stop words from the messages.

2. Background

Fake news has been defined both in broad and narrow terms and can be characterised by authenticity, intention and whether it is news at all [

28]. The broad definition includes non-factual content that misleads the public (e.g., deceptive and false news, disinformation and misinformation), rumour and satire, amongst others [

28]. The narrow definition focuses on intentionally false news published by a recognised news outlet [

28]. Extant research focuses on differentiating between fake news and true news, and on the types of actors that propagate fake news. This paper is focused on the former, i.e., the attributes of the fake news itself. As such, it is concerned with identifying fake news based on characteristics such as writing style and quality [

29], word counts [

30], sentiment [

31] and topic-agnostic features (e.g., a large number of ads or a frequency of morphological patterns in text) [

32].

As discussed in the Introduction, the Internet, and in particular social media, is transforming public health promotion, surveillance, public response to health crises, as well as tracking disease outbreaks, monitoring the spread of misinformation and identifying intervention opportunities [

33,

34]. The public benefits from improved and convenient access to easily available and tailored information in addition to the opportunity to potentially influence health policy [

33,

35]. It has had a liberating effect on individuals, enabling users to search for both health and vaccine-related content and exchange information, opinions and support [

36,

37]. Notwithstanding this, research suggests that there are significant concerns about information inaccuracy and potential risks associated with the use of inaccurate health information, amongst others [

38,

39,

40]. The consequences of misinformation, disinformation and misinterpretation of health information can interfere with attempts to mitigate disease outbreak, delay or result in failure to seek or continue legitimate medical treatment as well as interfere with sound public health policy and attempts to disseminate public health messages by undermining trust in health institutions [

23,

41].

Historically, the news media has played a significant role in Brazilian society [

42]. However, traditional media has been in steady decline in the last decade against the backdrop of media distrust (due to perceived media bias and corruption) and the rise of the Internet and social media [

43]. According to the Reuters Institute Digital News Report 2020 [

44], the Internet (including social media) is the main source of news in Brazil. It is noteworthy that Brazil is one of a handful of countries where across all media sources the public prefers partial news, a factor that can create a false sense of uniformity and validity and foster the propagation of misinformation [

44]. While Facebook is a source of misinformation concern in most countries worldwide, Brazil is relatively unique in that WhatsApp is a significant channel of news and misinformation [

44]. This preference for partial news sources and social media in Brazil has led to significant issues in the context of COVID-19.

From the beginning of the COVID-19 pandemic, the WHO has reported on a wide variety of misinformation related to COVID-19 [

11]. These include unsubstantiated claims and conspiracy theories related to hydroxychloroquine, reduced risk of infection, 5G mobile networks and sunny and hot weather, amongst others [

11]. What differs in the Brazilian context is that the Brazilian public has been exposed to statements from the political elite, including the Brazilian President, that have contradicted the Brazilian Ministry of Health, pharmaceutical companies and health experts. Indeed, the political elite in Brazil have actively promoted many of the misleading claims identified by the WHO. This has included statements promoting erroneous information on the effects of COVID-19, “cures” and treatments unsupported by scientific evidence and an end to social distancing, amongst others [

45]. When such statements by government officials are reported as news, the claims gain legitimacy. As vaccines and vaccination programmes to mitigate COVID-19 become available, such statements sow mistrust in health systems and provide additional legitimacy to anti-vaccination movements that focus on similar messaging strategies, e.g., questioning the safety and effectiveness of vaccines, sharing conspiracy theories, publishing general misinformation and rumours, claiming that Big Pharma and scientific experts are not to be trusted, stating that civil liberties and individual freedom of choice are endangered, questioning whether vaccinated individuals spread diseases and promoting alternative medicine [

46,

47,

48].

While vaccines and vaccinations are a central building block of efforts to control and reduce the impact of COVID-19, vaccination denial and misinformation propagated by the anti-vaccination movement represent a tension between freedom of speech and public health. Social network platforms have been reluctant to intervene on this topic and on misinformation in general [

49]; however, there have been indications that this attitude is changing, particularly in the context of COVID-19 [

50]. However, even where platforms wish to curb misinformation, the identification of fake news and misinformation in general is labour intensive, and moderation is particularly difficult on closed networks such as WhatsApp. Scaling such monitoring requires automation. While over 282 million people speak Portuguese worldwide, commercial tools and research have overwhelmingly focused on the most popular languages, namely English and Chinese. This may be due to the concentration of Portuguese speakers in a relatively small number of countries. Over 73% of native Portuguese speakers are located in Brazil and a further 24% in just three other countries: Angola, Mozambique and Portugal [

51]. As discussed earlier, it is important to note that Portuguese as a language is pluricentric and Brazilian Portuguese is highly diglossic, thus requiring native language datasets for accurate classification.

3. Related Works

Research on automated fake news detection typically falls into two main categories: approaches based on knowledge and those based on style [

20]. Style-based fake news detection, the focus of this article, attempts to analyse the writing style of the target article to identify whether there is an attempt to mislead the reader. These approaches typically rely on binary classification techniques to classify news as fake or not based on general textual features (lexicon, syntax, discourse, and semantic), latent textual features (word, sentence and document) and associated images [

20]. These are typically based on data mining and information retrieval, natural language processing (NLP) and machine learning techniques, amongst others [

20,

52]. This study compares machine learning and deep learning techniques for fake news detection.

There is well-established literature on the use of traditional machine learning for both knowledge-based and style-based detection. For example, naive Bayes [

53,

54], support vector machine (SVM) [

54,

55,

56,

57,

58], Random Forest [

59,

60], and XGBoost [

59,

61] are widely cited in the literature. Similarly, a wide variety of deep learning techniques have been used including convolutional neural networks (CNNs) [

62,

63,

64,

65], long short-term memory (LSTM) [

66,

67], recurrent neural network (RNN) and gated recurrent unit (GRU) models [

66,

67,

68], other deep learning neural networks architectures [

69,

70,

71] and ensemble approaches [

63,

72,

73].

While automated fake news detection has been explored in health and disease contexts, the volume of research has expanded rapidly since the commencement of the COVID-19 pandemic. While a comprehensive review of the literature is beyond the scope of this article, four significant trends are worthy of mention. Firstly, although some studies use a variety of news sources (e.g., [

74]) and multi-source datasets such as CoAID [

75], the majority of studies focus on data sets comprising social media data and specifically Twitter data, e.g., [

76,

77]. This is not wholly surprising, as the Twitter API is easily accessible and public datasets on the COVID-19 discourse have been made available, e.g., [

78,

79,

80]. Secondly, though a wide range of machine learning and deep learning techniques feature in studies including CNNs, LSTMs and others, there is a notable increase in the use of bidirectional encoder representations from transformers (BERT) [

74,

76,

77]. This can be explained by the relative recency and availability of BERT as a technique and early performance indicators. Thirdly, and related to the previous points, few of the datasets or studies identified use a Brazilian Portuguese language corpus and a Brazilian empirical context. For example, the COVID-19 Twitter Chatter dataset features English, French, Spanish and German language data [

79]. CoAID does not identify its language, but all sources and search queries identified are English language only. The Real Worry Dataset is English language only [

80]. The dataset described in [

78] does feature a significant portion of Portuguese tweets; however, none of the keywords used are in the Portuguese language and the data is Twitter only. Similarly, the MM-COVID dataset features 3981 fake news items and 7192 trustworthy items in six languages including Portuguese [

81]. While Brazilian Portuguese is included, it would appear both European and Brazilian Portuguese are labelled as one homogeneous language, and the total number of fake Portuguese language items is relatively small (371).

Notwithstanding the foregoing, there has been a small number of studies that explore fake news in the Brazilian context. Galhardi et al. [

82] used data collected from

Eu Fiscalizo, a crowdsourcing tool where users can send content that they believe is inappropriate or fake. Analysis suggests that fake news about COVID-19 is primarily related to homemade methods of COVID-19 prevention or cure (85%), largely disseminated via WhatsApp [

82]. While this study is consistent with other reports, e.g., [

44], it comprises a small sample (154 items) and classification is based on self-reports. In line with [

83,

84], Garcia Filho et al. [

85] examined temporal trends in COVID-19. Using Google Health Trends, they identified a sudden increase in interest in issues related to COVID-19 from March 2020 after the adoption of the first measures of social distance. Of specific interest to this paper is the suggestion by Garcia Filho et al. that unclear messaging between the President, State Governors and the Minister of Health may have resulted in a reduction in search volumes. Ceron et al. [

86] proposed a new Markov-inspired method for clustering COVID-19 topics based on evolution across a time series. Using a dataset of 5115 tweets published by two Brazilian fact-checking organisations,

Aos Fatos and

Agência Lupa, their analysis revealed a complex intertwining between politics and the health crisis during the period under study.

Fake news detection is a relatively new phenomenon. Monteiro et al. [

87] presented the first reference corpus in Portuguese focused on fake news, the Fake.Br corpus, in 2018. The Fake.Br corpus comprises 7200 true and fake news items and was used to evaluate an SVM approach to automatically classify fake news messages. The SVM model achieved 89% accuracy using five-fold cross validation. Subsequently, the Fake.Br corpus was used to evaluate other techniques to detect fake news. For example, Silva et al. [

88] compare the performance of six techniques to detect fake news, i.e., logistic regression, SVM, decision tree, Random Forest, bootstrap aggregating (bagging) and adaptive boosting (AdaBoost). The best F1 score, 97.1%, was achieved by logistic regression when stop words were not removed and the traditional bag-of-words (BoW) was applied to represent the text. Souza et al. [

89] proposed a linguistic-based method based on grammatical classification, sentiment analysis and emotions analysis, and evaluated five classifiers, i.e., naive Bayes, AdaBoost, SVM, gradient boost (GB) and K-nearest neighbours (KNN) using the Fake.Br corpus. GB presented the best accuracy, 92.53%, when using emotion lexicons as complementary information for classification. Faustini et al. [

90] also used the Fake.Br corpus and two other datasets, one comprising fake news disseminated via WhatsApp, as well as a dataset comprising tweets, to compare four different techniques in one-class classification (OCC)—SVM, document-class distance (DCD), EcoOCC (an algorithm based on k-means) and naive Bayes classifier for OCC. All algorithms performed similarly with the exception of the one-class SVM, which showed greater F-score variance.

More recently, the Digital Lighthouse project at the Universidade Federal do Ceara in Brazil has published a number of studies and datasets relating to misinformation on WhatsApp in Brazil. These include FakeWhatsApp.BR [

91] and COVID19.BR [

92,

93]. The FakeWhatsApp.BR dataset contains 282,601 WhatsApp messages from users and groups from all Brazilian states collected from 59 groups from July 2018 to November of 2018 [

91]. The FakeWhatsApp.BR corpus contains 2193 messages labelled misinformation and 3091 messages labelled non-misinformation [

91]. The COVID19.BR dataset contains messages from 236 open WhatsApp groups with at least 100 members collected from April 2020 to June 2020. The corpus contains 2043 messages, 865 labelled as misinformation and 1178 labelled as non-misinformation. Both datasets contain similar data, i.e., message text, time and date, phone number, Brazilian state, word count, character count and whether the message contained media [

91,

93]. Cabral et al. [

91] combined classic natural language processing approaches for feature extraction with nine different machine learning classification algorithms to detect fake news on WhatsApp, i.e., logistic regression, Bernoulli, complement naive Bayes, SVM with a linear kernel (LSVM), SVM trained with stochastic gradient descent (SGD), SVM trained with an RBF kernel, K-nearest neighbours, Random Forest (RF), gradient boosting and a multilayer perceptron neural network (MLP). The best performing results were generated by MLP, LSVM and SGD, with a best F1 score of 0.73; however, when short messages were removed, the best performing F1 score rose to 0.87. Using the COVID19.BR dataset, Martins et al. [

92] compared machine learning classifiers to detect COVID-19 misinformation on WhatsApp. Similar to their earlier work [

91], they tested LSVM and MLP models to detect misinformation in WhatsApp messages, in this case related to COVID-19. Here, they achieved a highest F1 score of 0.778; an error analysis indicated that misclassifications occurred primarily due to short message length. In Martins et al. [

93], they extend their work to detect COVID-19 misinformation in Brazilian Portuguese WhatsApp messages using bidirectional long–short term memory (BiLSTM) neural networks, pooling operations and an attention mechanism. This solution, called MIDeepBR, outperformed their previous proposal as reported in [

92] with an F1 score of 0.834.

In contrast with previous research, we build and present a new dataset comprising fake news in the Brazilian Portuguese language relating exclusively to COVID-19 in Brazil. In contrast with Martins et al. [

92] and Cabral et al. [

91], we do not use a WhatsApp dataset, which may, due to its nature, be dominated by L-variant Brazilian Portuguese. Furthermore, the dataset used in this study covers a longer period (12 months) compared with Martins et al. [

92,

93] and Cabral et al. [

91]. Furthermore, unlike Li et al. [

81], we specifically focus on the Brazilian Portuguese language as distinct from European or African variants. Moreover, the number of items in our dataset is significantly larger than, for example, the MM-COVID dataset. In addition to an exploratory data analysis of the content, we evaluate and compare machine learning and deep learning approaches for detecting fake news. In contrast with Martins et al. [

93], we include gated recurrent units (GRUs) and evaluate both unidirectional and bidirectional GRUs and LSTMs, as well as machine learning classifiers.

5. Detecting Fake News Using Machine Learning and Deep Learning

As discussed in

Section 3, machine learning and deep learning models have been widely applied in NLP. In this paper, we compare the performance of four supervised machine learning techniques as per [

59]—support vector machine (SVM), Random Forest, gradient boosting and naive Bayes—against four deep learning models—LSTM, Bi-LSTM, GRU and Bi-GRU as per [

102].

SVM is a non-parametric technique [

103] capable of performing data classification; it is commonly applied to image and text data [

104]. SVM is based on hyper-plane construction and its goal is to find the optimal separating hyper-plane where the separating margin between two classes should be maximised. Random Forest and gradient boosting are tree-based classifiers that perform well for text classification [

104,

105]. Random Forest unifies several decision trees, building them randomly from a set of possible trees with

K random characteristics in each node. By using an ensemble, gradient boosting increases the robustness of classifiers while decreasing their variances and biases [

105]. Naive Bayes is a traditional classifier based on the Bayes theorem. Due to its relatively low memory use, it is considered computationally inexpensive compared with other approaches [

104]. Naive Bayes assigns the most likely class to a given example described by its characteristic vector. In this study, we consider the naive Bayes algorithm for multinomially distributed data.
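To make the multinomial naive Bayes decision rule concrete, the following is a minimal sketch in plain Python. It is not the implementation used in this study: the Laplace smoothing constant `alpha`, the function names and the toy training documents are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict


def train_multinomial_nb(docs, labels, alpha=1.0):
    """Fit class priors and Laplace-smoothed token likelihoods."""
    vocab = {tok for doc in docs for tok in doc}
    class_docs = Counter(labels)
    token_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        token_counts[label].update(doc)

    model = {}
    for label, n_docs in class_docs.items():
        total = sum(token_counts[label].values())
        denom = total + alpha * len(vocab)
        model[label] = {
            "log_prior": math.log(n_docs / len(docs)),
            "log_lik": {
                t: math.log((token_counts[label][t] + alpha) / denom)
                for t in vocab
            },
        }
    return model


def predict(model, doc):
    """Return the class with the highest posterior log-probability."""
    def score(label):
        params = model[label]
        # Tokens that were never seen during training are ignored.
        return params["log_prior"] + sum(
            params["log_lik"][t] for t in doc if t in params["log_lik"]
        )
    return max(model, key=score)


# Toy, invented training data (not drawn from the dataset in this paper).
train_docs = [
    ["cura", "milagrosa", "chá"],
    ["vacina", "microchip", "cura"],
    ["ministério", "saúde", "casos"],
    ["vacina", "aprovada", "ministério"],
]
train_labels = ["fake", "fake", "true", "true"]
model = train_multinomial_nb(train_docs, train_labels)
print(predict(model, ["cura", "microchip"]))
```

Working in log space avoids numerical underflow when many token probabilities are multiplied together, which is why the sketch sums log-likelihoods rather than multiplying raw probabilities.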

As discussed, we evaluate four deep learning models: two unidirectional RNNs (LSTM and GRU) and two bidirectional RNNs (Bi-LSTM and Bi-GRU). Unidirectional RNNs process the input sequentially and ignore future context. In bidirectional RNNs (Bi-RNNs), by contrast, the input is presented forwards and backwards to two separate recurrent networks, both of which are connected to the same output layer [

106]. In this work, we use two types of bi-RNN: bidirectional long short-term memory (Bi-LSTM) and bidirectional gated recurrent unit (Bi-GRU) as per [

102], as they demonstrated a good performance in text classification tasks.
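The difference between unidirectional and bidirectional processing can be illustrated with a deliberately simplified sketch. The scalar `tanh` cell below is a stand-in for an LSTM or GRU cell, and the weights are arbitrary values chosen for illustration; none of this reflects the architecture or parameters of the models evaluated here.

```python
import math


def run_rnn(inputs, w_in, w_rec):
    """Run a toy scalar recurrent cell; return the hidden state at each step."""
    state = 0.0
    states = []
    for x in inputs:
        state = math.tanh(w_in * x + w_rec * state)
        states.append(state)
    return states


def run_bidirectional(inputs, fwd=(0.5, 0.8), bwd=(0.3, 0.6)):
    """Pair each step's forward state with the state of a separate backward pass."""
    forward = run_rnn(inputs, *fwd)
    # The backward network reads the reversed sequence; its states are
    # reversed again so that index t lines up with the forward pass.
    backward = run_rnn(inputs[::-1], *bwd)[::-1]
    return list(zip(forward, backward))


sequence = [1.0, 0.0, -1.0, 2.0]
for step, (f_state, b_state) in enumerate(run_bidirectional(sequence)):
    print(step, round(f_state, 3), round(b_state, 3))
```

At step 0 the forward state depends only on the first input, whereas the backward state has already seen every later input. This is the sense in which a bidirectional model has access to future context.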

To train and test the models, we use the dataset presented in

Section 4.1 containing 1047 fake news items and 10,285 true news items. We applied a random undersampling technique to balance the dataset, where the largest class is randomly trimmed until it is the same size as the smallest class. The final dataset used to train and test the models comprises 1047 fake news items and 1047 true news items, totalling 2094 items. A total of 80% of the dataset was allocated for training and 20% for testing.
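The balancing and splitting steps can be sketched as follows. Only the class sizes (1047 and 10,285) and the 80/20 ratio come from the text; the placeholder item identifiers, the fixed random seed and the non-stratified split are assumptions made for illustration.

```python
import random


def undersample(items, labels, seed=42):
    """Randomly trim every class to the size of the smallest class."""
    rng = random.Random(seed)
    by_class = {}
    for item, label in zip(items, labels):
        by_class.setdefault(label, []).append(item)
    smallest = min(len(group) for group in by_class.values())
    balanced = []
    for label, group in by_class.items():
        balanced.extend((item, label) for item in rng.sample(group, smallest))
    rng.shuffle(balanced)
    return balanced


def train_test_split(pairs, train_fraction=0.8):
    """Split an already shuffled list into training and test portions."""
    cut = int(len(pairs) * train_fraction)
    return pairs[:cut], pairs[cut:]


# Placeholder identifiers stand in for the actual news items.
items = [f"fake-{i}" for i in range(1047)] + [f"true-{i}" for i in range(10285)]
labels = ["fake"] * 1047 + ["true"] * 10285

balanced = undersample(items, labels)
train, test = train_test_split(balanced)
print(len(balanced), len(train), len(test))
```

Undersampling discards most of the majority class, so the particular true news items retained depend on the random seed.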

5.1. Evaluation Metrics

To evaluate the performance of the models, we consider the following metrics as per [

59]: accuracy, precision, recall, specificity and F1 score. These metrics are based on a confusion matrix, a cross table that records the number of occurrences between the true classification and the classification predicted by the model [

107]. It is composed of true positive (TP), true negative (TN), false positive (FP) and false negative (FN).

Accuracy is the percentage of correctly classified instances over the total number of instances [

108]. It is calculated as the sum of TP and TN divided by the total number of samples, as shown in Equation (1):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Precision is the number of class members classified correctly over the total number of instances classified as class members [

108]. It is calculated as the number of TP divided by the sum of TP and FP, as shown in Equation (2):

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

Recall (sensitivity or true positive rate) is the number of class members classified correctly over the total number of class members [

108]. It is calculated as the number of TP divided by the sum of TP and FN, as shown in Equation (3):

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

Specificity (or true negative rate) is the number of negative instances classified correctly over the total number of negative instances. It is calculated as the number of TN divided by the sum of TN and FP, as per Equation (4):

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{4}$$

To address vulnerabilities in machine learning systems designed to optimise precision and recall, weighted harmonic means can be used to balance between these metrics [

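109]. This is known as the F1 score. It is calculated as per Equation (5):

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{5}$$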
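The five metrics defined in this section can be computed directly from the confusion matrix counts. The sketch below assumes that fake news is the positive class and that no denominator is zero; the function names and the example labels are invented for illustration.

```python
def confusion_counts(y_true, y_pred, positive="fake"):
    """Count TP, TN, FP and FN for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, specificity and F1 score."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }


# Invented labels: 4 fake and 6 true items, with one error in each class.
y_true = ["fake"] * 4 + ["true"] * 6
y_pred = ["fake", "fake", "fake", "true"] + ["true"] * 5 + ["fake"]

counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(*counts)
print(counts, metrics)
```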

5.2. Experiments

In their evaluation of machine learning techniques for junk e-mail spam detection, Méndez et al. [

110] analysed the impact of stop-word removal, stemming and different tokenization schemes on the classification task. They argued that “

spammers often introduce “noise” in their messages using phrases like “MONEY!!”, “FREE!!!” or placing special characters into the words like “R-o-l-e?x””. As fake news can be viewed as a similar type of noise within a document, we define two experiments in order to evaluate the impact of removing such noise from news items: (a) applying text preprocessing techniques and (b) using the raw text of the news item.

For the first experiment, we remove the stop words, convert text to lower case and apply vectorization to convert text to a matrix of token counts. For the second experiment, we use the raw text without removing stop words and without converting text to lowercase. We also apply vectorization to convert text to a matrix of token counts, similar to the first experiment.
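The two input pipelines can be sketched in plain Python as follows. The stop-word list is a small illustrative set, not the list used in the experiments, and the regular-expression tokeniser is likewise an assumption.

```python
import re
from collections import Counter

# Small illustrative stop-word list (an assumption, not the list used in the study).
STOP_WORDS = {"a", "o", "e", "de", "do", "da", "para", "os", "as", "sem", "é", "na", "no"}
TOKEN = re.compile(r"\w+")


def token_counts(text, preprocess):
    """Count tokens, optionally lowercasing and removing stop words first."""
    if preprocess:
        tokens = [t for t in TOKEN.findall(text.lower()) if t not in STOP_WORDS]
    else:
        tokens = TOKEN.findall(text)
    return Counter(tokens)


def count_matrix(texts, preprocess):
    """Return the vocabulary and a document-by-token matrix of counts."""
    counts = [token_counts(text, preprocess) for text in texts]
    vocab = sorted(set().union(*counts))
    return vocab, [[c[tok] for tok in vocab] for c in counts]


text = ("Hospital de campanha do anhembi vazio sem colchões "
        "para os pacientes e sem atendimento")

vocab_pre, matrix_pre = count_matrix([text], preprocess=True)   # Experiment 1
vocab_raw, matrix_raw = count_matrix([text], preprocess=False)  # Experiment 2
print(vocab_pre)
print(vocab_raw)
```

With preprocessing, this short item is reduced to seven content tokens. The raw variant keeps capitalisation and stop words, so its vocabulary is larger and repeated function words such as "sem" contribute to the counts.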

Specifically for deep learning models, we used an embedding layer built using the FastText library (

https://fasttext.cc/, last accessed on 1 March 2022), which provides pre-trained word vectors for Portuguese. Padding was also performed so that all inputs had the same length. All deep learning models were trained for 20 epochs, with a dropout probability of 20% in the recurrent layers to mitigate overfitting [

111]. Grid search was used to optimise model hyper-parameters [

112].
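The padding step can be illustrated with a short sketch. It maps tokens to integer indices, as an embedding layer expects, and pads or truncates each sequence to a fixed length. The reserved indices, the choice to pad at the end of the sequence and the function names are assumptions; the text does not specify these details.

```python
PAD, UNK = 0, 1  # reserved indices for padding and out-of-vocabulary tokens


def build_index(tokenised_docs):
    """Assign each distinct token an integer index, starting at 2."""
    index = {}
    for doc in tokenised_docs:
        for tok in doc:
            index.setdefault(tok, len(index) + 2)
    return index


def encode_and_pad(tokenised_docs, index, max_len):
    """Convert tokens to indices, then truncate or right-pad to max_len."""
    padded = []
    for doc in tokenised_docs:
        ids = [index.get(tok, UNK) for tok in doc][:max_len]
        padded.append(ids + [PAD] * (max_len - len(ids)))
    return padded


docs = [["vacina", "microchip"], ["vacina"]]
index = build_index(docs)
print(encode_and_pad(docs, index, max_len=3))
```

In a full pipeline, each index would select a row of an embedding matrix initialised from the pre-trained word vectors.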

It is hard to make a direct comparison with extant studies due to challenges in reproducibility. For example, similar data may not be available. Notwithstanding this, to facilitate comparison we benchmarked against the study reported by Paixão et al. [

113]. They also propose classifiers for fake news detection although without a focus on COVID-19; they use fake news relating to politics, TV shows, daily news, technology, economy and religion. We replicated one of their deep learning models, a CNN (see

Table 2), and tested it with our dataset for comparison.

5.3. Evaluation

5.3.1. Experiment 1—Applying Text Preprocessing

Table 3 and

Table 4 present the parameters and levels used in the grid search for machine learning and deep learning models, respectively, when applying text preprocessing techniques (Experiment 1). The values in bold indicate the best configuration of each model based on the F1 score.
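An exhaustive grid search that selects the configuration with the highest F1 score can be sketched as below. The parameter names, their levels and the scoring function are hypothetical stand-ins. In practice, `evaluate` would train a model with the given parameters and return its F1 score on held-out data.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Evaluate every parameter combination and return the best one."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical grid and a stand-in scoring function for illustration only.
grid = {"units": [32, 64, 128], "dropout": [0.2, 0.5]}


def toy_f1(params):
    return 0.9 - abs(params["units"] - 64) / 1000 - abs(params["dropout"] - 0.2)


best_params, best_score = grid_search(grid, toy_f1)
print(best_params, best_score)
```

The number of evaluations grows multiplicatively with the number of levels per parameter, which is one reason to keep the grid small.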

Table 5 presents the classification results of ML and deep learning models using the configuration selected by the grid search technique (see

Table 3 and

Table 4). The test experiments were executed ten times; the reported metrics are the means and their respective standard deviations.
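Aggregating repeated runs into a mean and standard deviation is straightforward with the Python standard library. The scores below are invented, and the use of the sample (rather than population) standard deviation is an assumption, since the text does not state which was used.

```python
import statistics


def summarise_runs(scores):
    """Return the mean and sample standard deviation of repeated runs."""
    return statistics.mean(scores), statistics.stdev(scores)


# Invented F1 scores from ten hypothetical runs.
f1_runs = [0.91, 0.90, 0.92, 0.89, 0.91, 0.93, 0.90, 0.92, 0.91, 0.91]
mean_f1, std_f1 = summarise_runs(f1_runs)
print(f"{mean_f1:.4f} ± {std_f1:.4f}")
```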

For the machine learning models, the best accuracy, precision and F1 score were achieved by the SVM model (see Table 5). The best recall was achieved by naive Bayes, and the best specificity was achieved by Random Forest. Gradient boosting presented the worst machine learning performance. In general, the machine learning models achieved recall at or above the level obtained by the gradient boosting model, which had the lowest recall.

There was significantly more variation in the deep learning models. The CNN model proposed by Paixão et al. [

113] outperformed our proposed models in three metrics: accuracy (91.22%), recall (88.54%) and F1 score (90.80%). The F1 result follows from the relationship between the F1 score and the precision and recall metrics, i.e., the F1 score is the harmonic mean of precision and recall, and Paixão et al.’s CNN model obtained high levels of both. Our LSTM model presented the best precision and the best specificity (see Table 5). These metrics are very important in the context of fake news detection. Specificity assesses the method’s ability to detect true negatives, that is, to correctly identify true news as true. Precision, on the other hand, assesses how many of the items classified as fake are actually fake. A model that achieves good results in these metrics is therefore of paramount importance for automatic fake news classification. It is also important to mention that the bidirectional models (Bi-LSTM and Bi-GRU) presented results competitive with the other models but did not outperform any of the unidirectional models.

Figure 8 shows the receiver operating characteristic (ROC) curve and the area under the ROC curve (AUC) of all models when applying text preprocessing techniques. Gradient boosting presented the worst result with an AUC of 0.8552, which is expected since it presented the worst recall result, as shown in

Table 5.

Although naive Bayes presented the best recall, it also presented the highest false positive rate, resulting in an AUC of 0.8909. The Bi-GRU model presented the highest AUC value (0.8996), followed by Random Forest (0.8944) and Bi-LSTM (0.8941). It is important to note that the bidirectional models outperformed their respective unidirectional counterparts in terms of AUC. The Bi-GRU and Bi-LSTM presented AUC values of 0.8996 and 0.8941, respectively, while the GRU and LSTM presented AUC values of 0.8803 and 0.8647, respectively.
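For readers less familiar with ROC analysis, the sketch below shows one way to obtain ROC points by sweeping the decision threshold and to compute the AUC with the trapezoidal rule. The labels and scores are invented, with 1 denoting fake news, and the code assumes both classes are present. It is not the code used to produce the reported values.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs obtained by sweeping the decision threshold."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under a piecewise-linear curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Invented labels (1 = fake) and classifier scores.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

curve = roc_points(y_true, scores)
print(curve)
print(auc(curve))
```

The AUC equals the probability that a randomly chosen fake item receives a higher score than a randomly chosen true item. A model with a high false positive rate at a given recall therefore has a lower AUC.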

For illustration purposes, we selected some fake news items that were misclassified. We reproduce the complete text of each item, although the models process the sentences in lowercase and without stop words. “

Hospital de campanha do anhembi vazio sem colchões para os pacientes e sem atendimento” was misclassified by the SVM model and “

Agora é obrigatório o Microchip na Austrália! Muito protesto por lá.. Australianos vão às ruas para protestar contra o APP de rastreamento criado para controlar pessoas durante o Covid-19, contra exageros nas medidas de Lockdown, distanciamento social, uso de máscaras, Internet 5G, vacina do Bill Gates e microchip obrigatório. Dois líderes do protesto foram presos e multados.” was misclassified by the LSTM model. In addition, we noted that the fake news misclassified by the SVM model was also misclassified by the LSTM model. Consistent with Cabral et al. [

91] and Martins et al. [

92], misclassification would seem to be more likely where text is too short to be classified correctly by the models.
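The effect of preprocessing on a short item can be sketched as follows. The code lowercases the first misclassified example above and removes stop words. The stop-word list is a deliberately tiny, hypothetical one, and dropping punctuation during tokenisation is an assumption of this sketch; neither is the exact procedure used in our pipeline.

```python
import re

# Hypothetical, deliberately small Portuguese stop-word list (illustration only).
STOP_WORDS = {"a", "o", "as", "os", "de", "do", "da", "e", "para", "sem"}


def preprocess(text):
    """Lowercase the text, split it into word tokens and drop stop words."""
    # Assumption: punctuation is discarded by keeping only word characters.
    tokens = re.findall(r"\w+", text.lower())
    return " ".join(t for t in tokens if t not in STOP_WORDS)


sample = ("Hospital de campanha do anhembi vazio sem colchões "
          "para os pacientes e sem atendimento")
cleaned = preprocess(sample)
print(cleaned)
print(len(sample.split()), "tokens before,", len(cleaned.split()), "after")
```

Under these assumptions, the 14-word message shrinks to 7 tokens, which illustrates how little signal remains for the classifier once a short item has been preprocessed.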

5.3.2. Experiment 2: Using Raw Text

Table 6 and

Table 7 present the parameters and levels used in the grid search for machine learning and deep learning models, respectively, when we use raw text of the news items (Experiment 2). The parameters and levels are the same as those used in Experiment 1. Again, the values in bold indicate the best configuration of each model based on F1 score.
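The selection procedure can be sketched as an exhaustive search over every combination of parameter levels, keeping the configuration with the highest F1 score. The grid, the parameter names and the scoring function below are hypothetical stand-ins; in practice the scoring function would train a model with the given parameters and return its validation F1 score.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every combination of levels and keep the best-scoring one.

    param_grid: dict mapping parameter name -> list of levels.
    evaluate:   callable taking a dict of parameters and returning a score
                (here assumed to be an F1 score).
    """
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical grid; not the levels listed in Tables 6 and 7.
grid = {"n_estimators": [100, 200], "max_depth": [5, 10]}


def toy_f1(params):
    # Stand-in for "train the model and measure its F1 score".
    return 0.80 + 0.0002 * params["n_estimators"] + 0.005 * params["max_depth"]


best_params, best_score = grid_search(grid, toy_f1)
print(best_params, round(best_score, 4))
```

The cost of this procedure grows with the product of the number of levels per parameter, which is one reason why only a limited range of levels can be explored for each model.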

Table 8 presents the classification results of machine learning and deep learning models when using raw text of the messages and the configuration selected by the grid search technique (see

Table 6 and

Table 7). The test experiments were executed 10 times; the reported metrics are means with their respective standard deviations.
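Aggregating repeated runs in this way can be sketched as below. The ten F1 values are hypothetical, and the use of the sample standard deviation (rather than the population one) is an assumption of this sketch.

```python
from statistics import mean, stdev


def summarise_runs(scores):
    """Return the mean and sample standard deviation of repeated runs."""
    return mean(scores), stdev(scores)


# Hypothetical F1 scores from 10 repeated test runs (illustration only).
f1_runs = [0.93, 0.94, 0.92, 0.95, 0.93, 0.94, 0.93, 0.92, 0.94, 0.93]
avg, sd = summarise_runs(f1_runs)
print(f"F1 = {avg:.4f} ± {sd:.4f}")
```

Reporting the spread alongside the mean helps to judge whether small differences between models, such as those between closely ranked classifiers, exceed run-to-run variation.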

For the machine learning models, Random Forest performed best across four metrics: accuracy (), precision (), specificity () and F1 score (). The best recall () was achieved by the naive Bayes. In contrast with Experiment 1, the SVM did not perform the best in any metric, although its metrics were all above 90% and close to the Random Forest values. Similar to Experiment 1, gradient boosting performed the worst of the machine learning models evaluated.

Regarding the evaluation of deep learning models and in contrast with Experiment 1, the bidirectional models presented better results than their respective unidirectional models. The Bi-LSTM presented the best accuracy (94.34%) while the Bi-GRU presented the best levels of recall (93.13%) and F1 score (94.03%). The CNN model proposed by Paixão et al. [

113] obtained the best results for precision (98.20%) and specificity (98.46%); however, it presented a lower recall (87.61%), which also lowers its F1 score.

Figure 9 presents the ROC curve and AUC results for the models when using raw text. The results are close to those presented in

Figure 8, showing that the proposed models are able to obtain good results using either raw or preprocessed text. However, in contrast to the experiment with preprocessed text, the Random Forest outperformed all other models, since it presented the lowest false positive rate and a relatively good true positive rate. The second and third best performing models were, in order, the Bi-LSTM and Bi-GRU models with AUC values of 0.8948 and 0.8927, respectively. The LSTM and SVM models presented the same AUC result (0.8924), while the GRU and naive Bayes models had lower results than those shown in

Figure 8, with AUCs of 0.8909 and 0.8729, respectively. The gradient boosting model again presented the worst AUC (0.843), as it did when preprocessing techniques were applied.

Again, for illustration purposes, we selected samples of fake news that were misclassified by the models. “Ative sua conta grátis pelo PERÍODO DE ISOLAMENTO! NETFLIX-USA.NET Netflix Grátis contra COVID-19 Ative sua conta grátis pelo PERÍODO DE ISOLAMENTO!” was misclassified by the Random Forest model. It is interesting to note that this fake news is not about the direct impact of COVID-19, but about an indirect impact, i.e., the availability of free Netflix during the pandemic. “Não há como ser Ministro da Saúde num país onde o presidente coloca sua mediocridade e ignorância a frente da ciência e não sente pelas vítimas desta pandemia. Nelson Teich. URGENTE Nelson Teich pede exoneração do Ministério da Saúde por não aceitar imposições de um Presidente medíocre e ignorante.” is a message that was misclassified by the Bi-GRU model. Again, this is not directly about the pandemic, but rather the resignation of Brazil’s Minister of Health during the pandemic. In contrast to the misclassifications in Experiment 1, here we believe the news item is too long, thus compromising the model’s ability to classify it correctly.

5.4. Discussion, Challenges and Limitations

One would normally expect deep learning models to outperform machine learning models due to their ability to deal with more complex multidimensional problems. In our study, when applying text preprocessing techniques (

Table 5), machine learning presented better results than deep learning across all metrics analysed. In contrast to previous works that used the Fake.Br corpus [

87,

88,

89,

90], a dataset composed of 7200 messages, we used an equally balanced dataset composed of only 2094 messages, which could explain the relatively poor performance of the deep learning models. Deep learning models require large volumes of data for training [

114]. Since the dataset used was relatively small, we posit that the deep learning models were not able to adequately learn the fake news patterns from the dataset once stop words were removed. The creation of a sufficiently large dataset is a significant challenge in the context of COVID-19 fake news in Portuguese.

In general, the best results were obtained when using the raw text of the news items, i.e., without removing stop words or converting text to lowercase. Random Forest presented the best results for accuracy (

), precision (

) and specificity (

); the Bi-GRU model presented the best recall (

) and F1 score (

). The use of raw text had a significant impact on the performance of the deep learning models; all deep learning metrics in Experiment 2 were above 91% (

Table 8). The performance of the machine learning models was also impacted positively. This is consistent with the suggestion of Méndez et al. [

110] that noise removal may hamper the classification of non-traditional documents, such as junk e-mail and, in this case, fake news. In effect, pre-processing made the structure of fake news items closer to that of true news items, making them more difficult to distinguish. This is supported by the improvement in the recall metric in both machine learning and deep learning models. In our context, recall assesses the model’s performance in correctly classifying fake news. A considerable improvement was achieved in practically all models, except naive Bayes, which had already achieved a good result with the pre-processed base (92.57%). With the pre-processed base, the recall of most models was below 90% (disregarding naive Bayes); the best recall among the machine learning and deep learning models was achieved by SVM (89.69%) and Bi-GRU (86.85%), respectively. When using raw text, most models had a recall above 90%, with the exception of gradient boosting, which had the worst result (89.84%). Running both experiments makes the impact of pre-processing clear: keeping the stop words and the capital letters in the text improves the correct classification of fake news.

This study has a number of limitations. The grid search used to find the best configuration for each model covered only a limited range of parameters and levels; other configurations may yield better results. As discussed earlier, larger datasets prepared by specialist fact-checking organisations may yield different results. The evaluation of machine learning and deep learning models using accuracy, precision, recall, specificity and F1 score presents challenges in describing differences in results. Complementarity has been suggested as a potential solution, particularly where F1 scores are very close [

109].

6. Conclusions and Future Work

In February 2020, two months after the disclosure of the first COVID-19 case in Wuhan, China, the WHO published a report declaring that the pandemic was accompanied by an “infodemic”. An exceptional volume of information about the COVID-19 outbreak started to be produced, though not always from reliable sources, making it difficult to find adequate guidance on this new health threat. The spread of fake news represents a serious public health issue that can endanger lives around the world.

While machine learning does not usually require deep linguistic knowledge, pluricentric and highly diglossic languages, such as Brazilian Portuguese, require specific solutions to achieve accurate translation and classification, particularly where meaning may be ambiguous or misleading as in the case of fake news. Such challenges are further exacerbated when new terms evolve or are introduced into linguistic repertoires, as in the case of COVID-19. Given the scale and negative externalities of health misinformation due to COVID-19, it is not surprising that there has been an increase in recent efforts to produce fake news datasets in Brazilian Portuguese. We add to this effort by providing a new dataset composed of 1047 fake news items and 10,285 news items related to COVID-19 in Portuguese that circulated in Brazil in the last year. Based on the fake news items, we performed an exploratory data analysis, exploring the main themes and their textual attributes. Vaccines and vaccination were the central themes of the fake news that circulated in Brazil during the focal period.

We also proposed and evaluated machine learning and deep learning models to automatically classify COVID-19 fake news in Portuguese. The results show that, in general, SVM presented the best results, achieving 98.28% specificity. All metrics of all models improved when using the raw text of the messages; the only exception was the precision of SVM, where the difference was very small (0.08%).

As future work, we plan to extend the dataset with more fake news items annotated with specific L-variant and H-variant classifications and other dialectal features, together with the source of the fake news (websites, WhatsApp, Facebook, Twitter, etc.) and the type of multimedia used (video, audio and image). This extended dataset will not only aid in the refinement of fake news detection but also allow researchers to explore other aspects of the fake news phenomenon during COVID-19, including detection of pro-vaccination, anti-vaccination and vaccine-hesitant users, content, motivation, topics and targets of content and other features including stigma. Furthermore, future research should consider the diffusion and impact of fake news and explore the extent of propagation, the type of engagement and the actors involved, including the use of bots. The detection of new types of fake news, particularly in a public health context, can inform public health responses but also optimise platform moderation systems. To this end, research on the use of new transformer-based deep learning architectures such as BERT and GPT-3 may prove fruitful.