Artificial Intelligence in the Interpretation of Videofluoroscopic Swallow Studies: Implications and Advances for Speech–Language Pathologists

Abstract

1. Introduction

2. Background

2.1. Overview of Artificial Intelligence

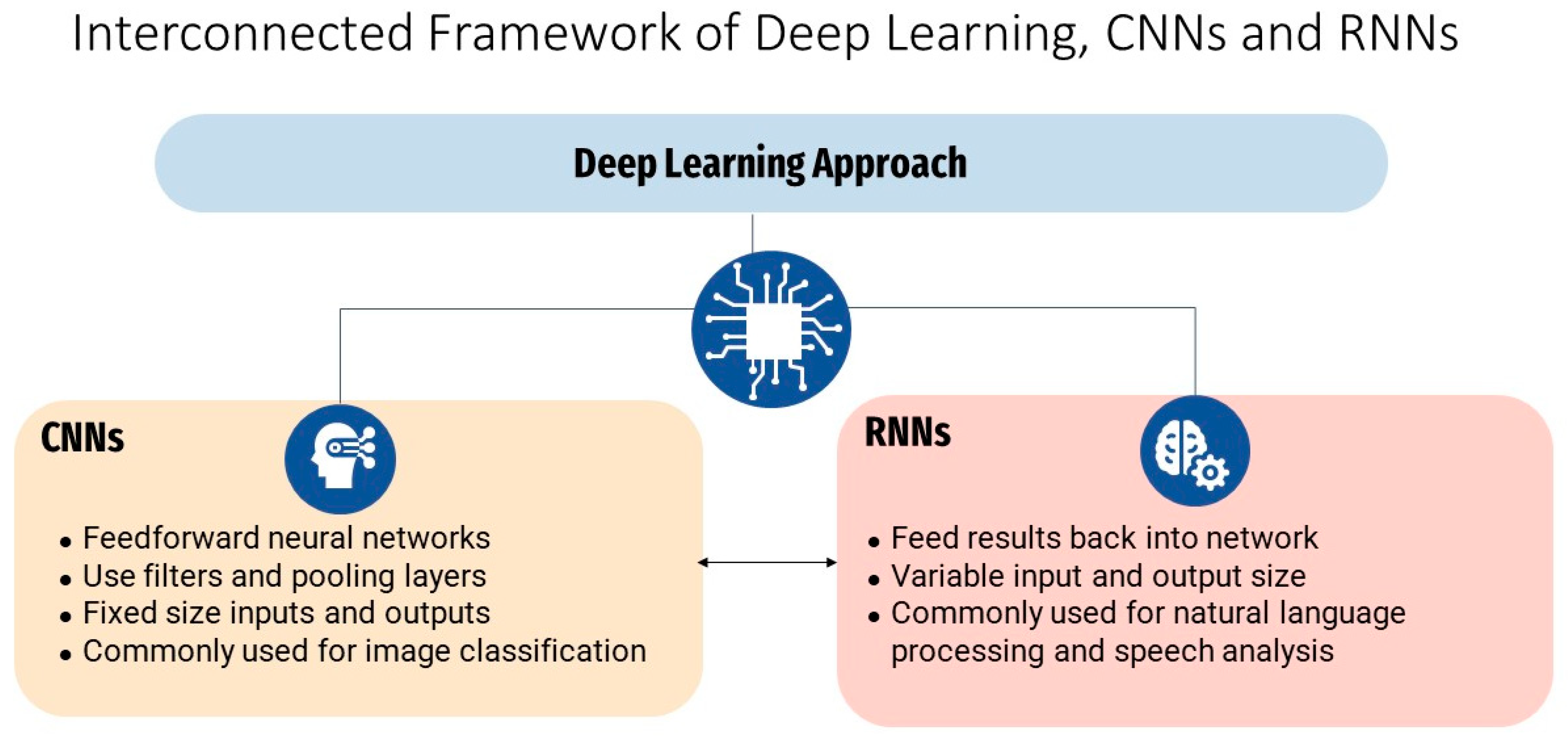

2.2. Overview of Machine Learning and Deep Learning

2.3. DL Applications for Medical Imaging

3. Aims and Methodology

Literature Selection

4. Results

4.1. Detection of Aspiration

4.2. Temporal Parameters of Swallowing Function

4.3. Hyoid Bone Movement

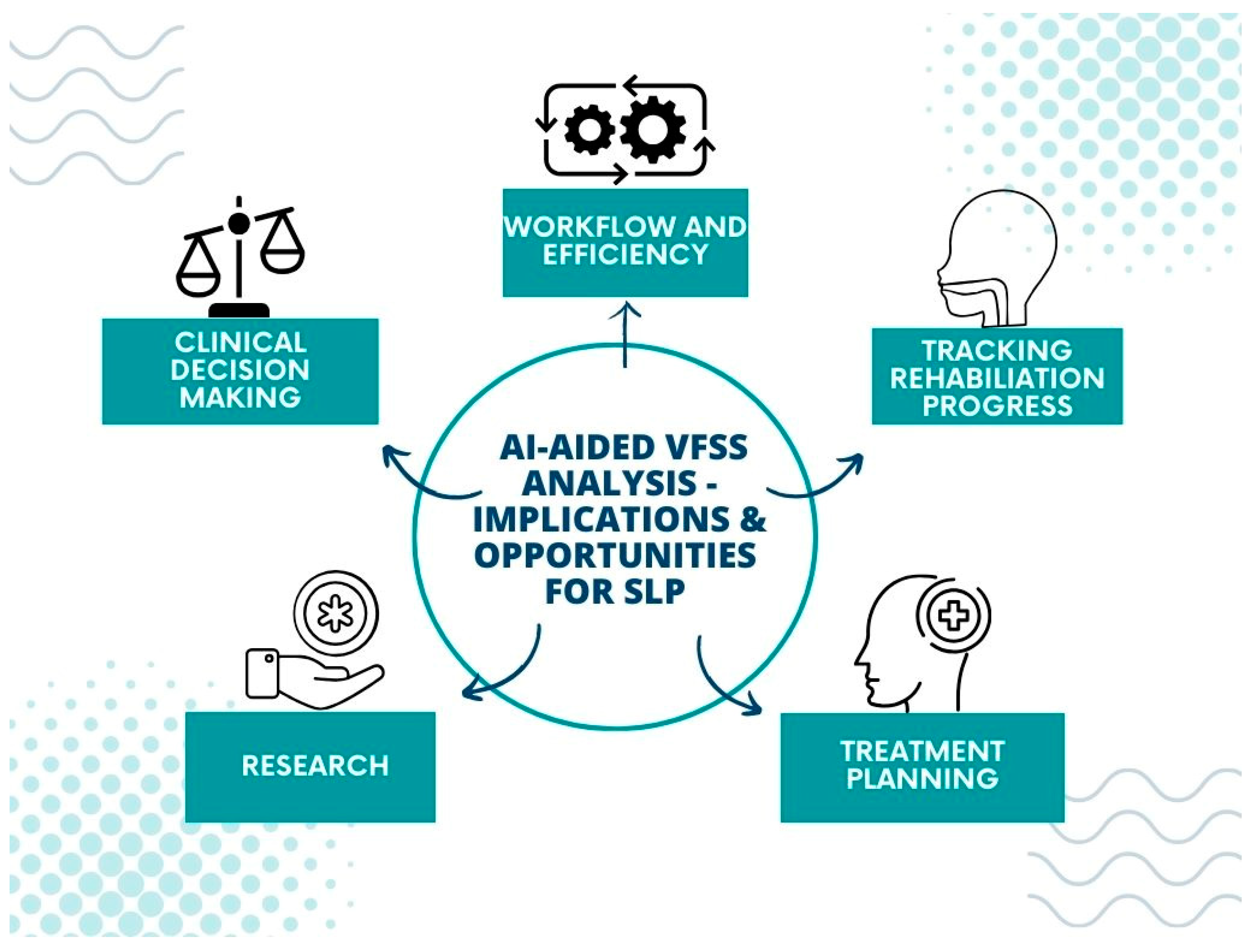

5. Implications for Speech–Language Pathology

5.1. Clinical Applications of AI Detection of Laryngeal Penetration and Aspiration

5.2. Clinical Applications of AI Measurement of Temporal Parameters of Swallowing

5.3. Clinical Applications of Hyoid Bone Movement Detection Using AI

6. Limitations and Future Directions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. O’Rourke, F.; Vickers, K.; Upton, C.; Chan, D. Swallowing and Oropharyngeal Dysphagia. Clin. Med. 2014, 14, 196–199.
2. Azer, S.A.; Kanugula, A.K.; Kshirsagar, R.K. Dysphagia. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2023.
3. Ekberg, O.; Hamdy, S.; Woisard, V.; Wuttge-Hannig, A.; Ortega, P. Social and Psychological Burden of Dysphagia: Its Impact on Diagnosis and Treatment. Dysphagia 2002, 17, 139–146.
4. Smith, R.; Bryant, L.; Hemsley, B. The True Cost of Dysphagia on Quality of Life: The Views of Adults with Swallowing Disability. Int. J. Lang. Commun. Disord. 2023, 58, 451–466.
5. Altman, K.W.; Yu, G.; Schaefer, S.D. Consequence of Dysphagia in the Hospitalized Patient: Impact on Prognosis and Hospital Resources. Arch. Otolaryngol. Head Neck Surg. 2010, 136, 784–789.
6. Speech Pathology Australia Clinical Guidelines. Available online: https://www.speechpathologyaustralia.org.au/SPAweb/Members/Clinical_Guidelines/spaweb/Members/Clinical_Guidelines/Clinical_Guidelines.aspx?hkey=f66634e4-825a-4f1a-910d-644553f59140 (accessed on 28 August 2023).
7. Scope of Practice in Speech-Language Pathology. Available online: https://www.asha.org/policy/sp2016-00343/ (accessed on 28 August 2023).
8. Carnaby-Mann, G.; Lenius, K. The Bedside Examination in Dysphagia. Phys. Med. Rehabil. Clin. N. Am. 2008, 19, 747–768.
9. Garand, K.L.F.; McCullough, G.; Crary, M.; Arvedson, J.C.; Dodrill, P. Assessment across the Life Span: The Clinical Swallow Evaluation. Available online: https://pubs.asha.org/doi/epdf/10.1044/2020_AJSLP-19-00063 (accessed on 23 August 2023).
10. Lynch, Y.T.; Clark, B.J.; Macht, M.; White, S.D.; Taylor, H.; Wimbish, T.; Moss, M. The Accuracy of the Bedside Swallowing Evaluation for Detecting Aspiration in Survivors of Acute Respiratory Failure. J. Crit. Care 2017, 39, 143–148.
11. DePippo, K.L.; Holas, M.A.; Reding, M.J. Validation of the 3-oz Water Swallow Test for Aspiration Following Stroke. Arch. Neurol. 1992, 49, 1259–1261.
12. McCullough, G.H.; Rosenbek, J.C.; Wertz, R.T.; McCoy, S.; Mann, G.; McCullough, K. Utility of Clinical Swallowing Examination Measures for Detecting Aspiration Post-Stroke. J. Speech Lang. Hear. Res. 2005, 48, 1280–1293.
13. Desai, R.V. Build a Case for Instrumental Swallowing Assessments in Long-Term Care. Available online: https://leader.pubs.asha.org/doi/10.1044/leader.OTP.24032019.38 (accessed on 23 August 2023).
14. Warner, H.; Coutinho, J.M.; Young, N. Utilization of Instrumentation in Swallowing Assessment of Surgical Patients during COVID-19. Life 2023, 13, 1471.
15. Costa, M.M.B. Videofluoroscopy: The Gold Standard Exam for Studying Swallowing and Its Dysfunction. Arq. Gastroenterol. 2010, 47, 327–328.
16. Kerrison, G.; Miles, A.; Allen, J.; Heron, M. Impact of Quantitative Videofluoroscopic Swallowing Measures on Clinical Interpretation and Recommendations by Speech-Language Pathologists. Dysphagia 2023, 38, 1528–1536.
17. Hossain, I.; Roberts-South, A.; Jog, M.; El-Sakka, M.R. Semi-Automatic Assessment of Hyoid Bone Motion in Digital Videofluoroscopic Images. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2014, 2, 25–37.
18. Natarajan, R.; Stavness, I.; Pearson, W. Semi-Automatic Tracking of Hyolaryngeal Coordinates in Videofluoroscopic Swallowing Studies. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2017, 5, 379–389.
19. Lee, W.H.; Chun, C.; Seo, H.G.; Lee, S.H.; Oh, B.-M. STAMPS: Development and Verification of Swallowing Kinematic Analysis Software. Biomed. Eng. OnLine 2017, 16, 120.
20. Kellen, P.M.; Becker, D.L.; Reinhardt, J.M.; Van Daele, D.J. Computer-Assisted Assessment of Hyoid Bone Motion from Videofluoroscopic Swallow Studies. Dysphagia 2010, 25, 298–306.
21. Kim, J.K.; Choo, Y.J.; Choi, G.S.; Shin, H.; Chang, M.C.; Chang, M.; Park, D. Deep Learning Analysis to Automatically Detect the Presence of Penetration or Aspiration in Videofluoroscopic Swallowing Study. J. Korean Med. Sci. 2022, 37, e42.
22. Lee, J.T.; Park, E.; Hwang, J.-M.; Jung, T.-D.; Park, D. Machine Learning Analysis to Automatically Measure Response Time of Pharyngeal Swallowing Reflex in Videofluoroscopic Swallowing Study. Sci. Rep. 2020, 10, 14735.
23. Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial Intelligence in Healthcare: Past, Present and Future. Stroke Vasc. Neurol. 2017, 2, e000101.
24. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88.
25. Janiesch, C.; Zschech, P.; Heinrich, K. Machine Learning and Deep Learning. Electron. Mark. 2021, 31, 685–695.
26. Jordan, M.I.; Mitchell, T.M. Machine Learning: Trends, Perspectives, and Prospects. Science 2015, 349, 255–260.
27. Busnatu, S.; Niculescu, A.G.; Bolocan, A.; Petrescu, G.E.D.; Păduraru, D.N.; Năstasă, I.; Lupușoru, M.; Geantă, M.; Andronic, O.; Grumezescu, A.M.; et al. Clinical Applications of Artificial Intelligence—An Updated Overview. J. Clin. Med. 2022, 11, 2265.
28. Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning. arXiv 2017, arXiv:1711.05225.
29. Paré, G.; Kitsiou, S. Chapter 9—Methods for Literature Reviews. In Handbook of eHealth Evaluation: An Evidence-Based Approach [Internet]; Lau, F., Kuziemsky, C., Eds.; University of Victoria: Victoria, BC, USA, 27 February 2017. Available online: https://www.ncbi.nlm.nih.gov/books/NBK481583/ (accessed on 1 October 2023).
30. Ferrari, R. Writing Narrative Style Literature Reviews. Med. Writ. 2015, 24, 4.
31. Langmore, S.E.; Terpenning, M.S.; Schork, A.; Chen, Y.; Murray, J.T.; Lopatin, D.; Loesche, W.J. Predictors of Aspiration Pneumonia: How Important Is Dysphagia? Dysphagia 1998, 13, 69–81.
32. Iida, Y.; Näppi, J.; Kitano, T.; Hironaka, T.; Katsumata, A.; Yoshida, H. Detection of Aspiration from Images of a Videofluoroscopic Swallowing Study Adopting Deep Learning. Oral Radiol. 2023, 39, 553–562.
33. Lee, S.J.; Ko, J.Y.; Kim, H.I.; Choi, S.I. Automatic Detection of Airway Invasion from Videofluoroscopy via Deep Learning Technology. Appl. Sci. 2020, 10, 6179.
34. Kim, Y.; Kim, H.I.; Park, G.S.; Kim, S.Y.; Choi, S.I.; Lee, S.J. Reliability of Machine and Human Examiners for Detection of Laryngeal Penetration or Aspiration in Videofluoroscopic Swallowing Studies. J. Clin. Med. 2021, 10, 2681.
35. Bandini, A.; Steele, C.M. The Effect of Time on the Automated Detection of the Pharyngeal Phase in Videofluoroscopic Swallowing Studies. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Mexico City, Mexico, 1–5 November 2021; pp. 3435–3438.
36. Jeong, S.Y.; Kim, J.M.; Park, J.E.; Baek, S.J.; Yang, S.N. Application of Deep Learning Technology for Temporal Analysis of Videofluoroscopic Swallowing Studies. Res. Sq. 2022.
37. Matsuo, K.; Palmer, J.B. Anatomy and Physiology of Feeding and Swallowing—Normal and Abnormal. Phys. Med. Rehabil. Clin. N. Am. 2008, 19, 691–707.
38. Cook, I.J.; Dodds, W.J.; Dantas, R.O.; Massey, B.; Kern, M.K.; Lang, I.M.; Brasseur, J.G.; Hogan, W.J. Opening Mechanisms of the Human Upper Esophageal Sphincter. Am. J. Physiol. 1989, 257, G748–G759.
39. Hsiao, M.Y.; Weng, C.H.; Wang, Y.C.; Cheng, S.H.; Wei, K.C.; Tung, P.Y.; Chen, J.Y.; Yeh, C.Y.; Wang, T.G. Deep Learning for Automatic Hyoid Tracking in Videofluoroscopic Swallow Studies. Dysphagia 2023, 38, 171–180.
40. Kim, H.I.; Kim, Y.; Kim, B.; Shin, D.Y.; Lee, S.J.; Choi, S.I. Hyoid Bone Tracking in a Videofluoroscopic Swallowing Study Using a Deep-Learning-Based Segmentation Network. Diagnostics 2021, 11, 1147.
41. Zhang, Z.; Coyle, J.L.; Sejdić, E. Automatic Hyoid Bone Detection in Fluoroscopic Images Using Deep Learning. Sci. Rep. 2018, 8, 12310.
42. Lee, D.; Lee, W.H.; Seo, H.G.; Oh, B.-M.; Lee, J.C.; Kim, H.C. Online Learning for the Hyoid Bone Tracking during Swallowing with Neck Movement Adjustment Using Semantic Segmentation. IEEE Access 2020, 8, 157451–157461.
43. Shin, D.; Lebovic, G.; Lin, R.J. In-Hospital Mortality for Aspiration Pneumonia in a Tertiary Teaching Hospital: A Retrospective Cohort Review from 2008 to 2018. J. Otolaryngol. Head Neck Surg. 2023, 52, 23.
44. Martin-Harris, B.; Brodsky, M.B.; Michel, Y.; Lee, F.-S.; Walters, B. Delayed Initiation of the Pharyngeal Swallow: Normal Variability in Adult Swallows. J. Speech Lang. Hear. Res. 2007, 50, 585–594.
45. Martin-Harris, B.; Jones, B. The Videofluorographic Swallowing Study. Phys. Med. Rehabil. Clin. N. Am. 2008, 19, 769–785.
46. Donohue, C.; Mao, S.; Sejdić, E.; Coyle, J.L. Tracking Hyoid Bone Displacement during Swallowing without Videofluoroscopy Using Machine Learning of Vibratory Signals. Dysphagia 2021, 36, 259–269.
47. Wei, K.C.; Hsiao, M.Y.; Wang, T.G. The Kinematic Features of Hyoid Bone Movement during Swallowing in Different Disease Populations: A Narrative Review. J. Formos. Med. Assoc. 2022, 121, 1892–1899.
48. Goisauf, M.; Abadía, M. Ethics of AI in Radiology: A Review of Ethical and Societal Implications. Front. Big Data 2022, 5, 850383.
49. Naik, N.; Hameed, B.M.Z.; Shetty, D.K.; Swain, D.; Shah, M.; Paul, R.; Aggarwal, K.; Ibrahim, S.; Patil, V.; Smriti, K.; et al. Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility? Front. Surg. 2022, 9, 266.

| Ref | Sample | Algorithm | Findings |
|---|---|---|---|
| [21] | 190 participants with dysphagia | CNN | The AUC of the validation dataset of the VFSS images for the CNN model was 0.942 for normal findings, 0.878 for penetration, and 1.000 for aspiration |
| [32] | 54 participants with aspiration, 75 participants without aspiration | Three CNNs; Simple-Layer, Multiple-Layer, and Modified LeNet | The AUC values at epoch 50 were 0.973, 0.890, and 0.950, respectively, with statistically significant differences between AUC values |
| [33] | 106 participants with dysphagia | Deep CNN using U-Net | Detected airway invasion with an overall accuracy of 97.2% in classifying image frames and 93.2% in classifying video files |
| [34] | 49 participants with dysphagia | Deep CNN using U-Net | Kappa coefficients indicate moderate to substantial interrater agreement between AI and human raters in identifying laryngeal penetration or aspiration |
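
The final row of the table above summarises AI-versus-human agreement as kappa coefficients. The following is a minimal Python sketch of how Cohen's kappa could be computed for two raters. It assumes Cohen's kappa specifically, since the kappa variant used in [34] is not stated here; the function name `cohen_kappa` and the example labels are hypothetical and are not study data.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items.

    Illustrative helper only; not taken from any of the cited studies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: proportion of items given the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels: 1 = penetration/aspiration present, 0 = absent.
ai_labels = [1, 1, 0, 0, 1, 0, 1, 0, 0, 1]
human_labels = [1, 1, 0, 0, 1, 0, 0, 0, 1, 1]
kappa = cohen_kappa(ai_labels, human_labels)
print(round(kappa, 3))
```

Under the commonly used Landis and Koch bands, values of 0.41 to 0.60 are usually described as moderate agreement and 0.61 to 0.80 as substantial agreement.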

| Ref | Sample | Algorithm | Findings |
|---|---|---|---|
| [22] | 27 participants with subjective dysphagia | 3D CNN | Average success rate of detection during the pharyngeal phase of 97.5% |
| [35] | 78 healthy participants | Compared multiple CNN algorithms | Pearson’s correlation coefficient of 0.951 for BPM and 0.996 for UESC |
| [36] | 547 VFSS video clips from patients with dysphagia | 3D CNN | Average accuracy of 0.864 to 0.981 |
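
The table above reports Pearson's correlation coefficients for [35]. As a rough illustration of that statistic, the sketch below computes Pearson's r between two sets of event timings, for example frame indices marked by a model and by a human rater. The function name `pearson_r` and the frame values are hypothetical, and the actual comparison procedure in [35] may differ.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("Need two sequences of equal length with at least 2 values.")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-deviations and sums of squared deviations.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("Correlation is undefined when one sequence is constant.")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical frame indices at which the same swallow event was marked
# by an automated model and by a human rater across six swallows.
model_frames = [42, 57, 63, 80, 95, 110]
human_frames = [41, 58, 65, 79, 97, 108]
r = pearson_r(model_frames, human_frames)
print(round(r, 3))
```

A high r shows that the two sets of timings rise and fall together; it does not by itself show that they agree in absolute terms, so a constant offset between model and rater would not lower it.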

| Ref | Sample | Algorithm | Findings |
|---|---|---|---|
| [39] | 44 participants with dysphagia | CNN; Cascaded Pyramid Network | Excellent inter-rater reliability for hyoid bone detection, good-to-excellent inter-rater reliability for displacement and the average velocity of the hyoid bone in horizontal or vertical directions, moderate-to-good reliability in calculating the average velocity in horizontal direction |
| [40] | 207 participants with dysphagia | CNN; U-Net | mAP of 91% for hyoid bone detection |
| [41] | 265 participants with dysphagia | CNN; SSD | mAP of 89.14% for hyoid bone detection |
| [42] | 77 participants; healthy individuals and individuals with Parkinson’s Disease and stroke | CNN; MDNet | DSC results for the proposed method were 0.87 for healthy individuals, 0.88 for patients with Parkinson’s Disease, 0.85 for patients with stroke, and a total of 0.87 |
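
The DSC values reported for [42] in the table above measure overlap between a predicted region and a reference region. A minimal sketch of the Dice similarity coefficient on binary masks follows, assuming masks are given as nested lists of 0/1 values. The function name `dice_coefficient` and the tiny masks are hypothetical and far smaller than a real VFSS frame.

```python
def dice_coefficient(pred_mask, true_mask):
    """Dice similarity coefficient: 2 * |A and B| / (|A| + |B|) for binary masks."""
    pred = [bool(v) for row in pred_mask for v in row]
    true = [bool(v) for row in true_mask for v in row]
    if len(pred) != len(true):
        raise ValueError("Masks must contain the same number of pixels.")
    # Pixels marked as foreground in both masks.
    intersection = sum(p and t for p, t in zip(pred, true))
    total = sum(pred) + sum(true)
    if total == 0:
        # Both masks are empty; treated here as perfect overlap (a convention).
        return 1.0
    return 2.0 * intersection / total


# Hypothetical 2x3 masks: 1 marks pixels labelled as hyoid bone.
predicted = [[0, 1, 1],
             [0, 1, 0]]
reference = [[0, 1, 0],
             [0, 1, 1]]
dsc = dice_coefficient(predicted, reference)
print(round(dsc, 3))
```

A DSC of 1 indicates identical masks and 0 indicates no overlap, which is how the 0.85 to 0.88 range in the table can be read.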

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Girardi, A.M.; Cardell, E.A.; Bird, S.P. Artificial Intelligence in the Interpretation of Videofluoroscopic Swallow Studies: Implications and Advances for Speech–Language Pathologists. Big Data Cogn. Comput. 2023, 7, 178. https://doi.org/10.3390/bdcc7040178
