Defending Against AI Threats with a User-Centric Trustworthiness Assessment Framework

Abstract

1. Introduction

2. Threats and Trustworthiness of AI Applications

2.1. Generative AI Threats

2.2. Real-World Examples

2.3. Trustworthiness and User Experience of AI Applications

3. Controlling the Risks of AI Apps

3.1. Technological Controls

3.2. Regulatory Controls

3.3. Trustworthiness Assessment

3.4. Limitations and the Need for User Involvement

- Technological defenses often become brittle over time. Malicious actors have repeatedly shown a remarkable ability to stay ahead of defensive mechanisms, continually developing new attacks that undermine AI models. This adaptability poses a persistent challenge, repeatedly testing the effectiveness of existing safeguards and requiring continual improvement of defensive measures.

- Regulations struggle to keep pace with rapid technological advancement. This regulatory lag can leave gaps in protection that emerging threats are able to exploit. Moreover, because regulatory updates are reactive, they may not match the speed and sophistication of new AI threats, further increasing the risk of insufficient safeguards and oversight.

- The technical nature of trustworthiness assessment frameworks limits their accessibility to the general public. Consequently, average users often remain unaware of such frameworks and lack specialized tools to independently evaluate the trustworthiness of the AI applications they use. The specialized terminology used within these frameworks widens this gap, making it even harder for users to familiarize themselves with important aspects, such as transparency and privacy, that they should expect from AI applications.

4. AI User Self-Assessment Framework: Research Design and Methodology

4.1. Conceptual Framework

- User Control. This dimension focuses on users’ ability to understand and manage the AI app’s actions and decisions. It assesses whether users can control the app’s operations and intervene or override decisions if needed. The aim is to ensure users maintain their autonomy and that the AI app supports their decision-making without undermining it. Key areas include awareness of AI-driven decisions, control over the app, and measures to avoid over-reliance on the technology. A detailed set of questions enabling users to assess the dimension of user control is available in Appendix A.1.

- Transparency. The questions related to the dimension of transparency (detailed in Appendix A.2) aim to enable users to assess how openly the AI app operates. They focus on the clarity and accessibility of information regarding the app’s data sources, decision-making processes, and purpose. They also evaluate how well the app communicates its benefits, limitations, and potential risks, ensuring users have a clear understanding of the AI’s operations.

- Security. This dimension aims to enable users to assess the robustness of AI apps against potential threats, as well as the mechanisms for handling security issues. The questions relevant to security, detailed in Appendix A.3, evaluate how confident users feel about the app’s safety measures and its ability to function without compromising data or causing harm. Important considerations include the app’s reliability, its use of high-quality information, and user awareness of security updates and risks.

- Privacy. This dimension examines how the AI app handles personal data, emphasizing data protection and user awareness of data use. It considers users’ ability to control their personal information and the app’s commitment to collecting only necessary data. Topics include respect for user privacy, transparency in data collection, and options for data deletion and consent withdrawal. The specific questions that enable users to evaluate the app with respect to their privacy are available in Appendix A.4.

- Ethics. A set of questions relevant to the dimension of ethics, as detailed in Appendix A.5, aims to address the ethical considerations of the AI app, such as avoiding unfair bias and ensuring inclusivity. It evaluates whether the app is designed to be accessible to all users and whether it includes mechanisms for reporting and correcting discrimination. Ethical design principles and fairness in data usage are central themes.

- Compliance. This dimension of the framework looks at the processes in place for addressing user concerns and ensuring the AI app adheres to relevant legal and ethical standards. Through targeted questions, as detailed in Appendix A.6, it evaluates the availability of reporting mechanisms, regular reviews of ethical guidelines, and ways for users to seek redress if negatively affected by the app. Ensuring compliance with legal frameworks and promoting accountability are key aspects.

4.2. Framework Development Process

- Literature Review. We began with a review of the existing literature on the threats posed by popular AI applications, using real-world incidents as case studies. This review highlighted how these threats undermine AI trustworthiness and how positive user experiences can sometimes obscure underlying trustworthiness issues. We also examined current countermeasures to these threats. Exploring existing solutions for assessing AI trustworthiness, we identified key dimensions and principles that became the foundation for the framework’s core elements.

- Framework Design. Based on the identified dimensions of AI trustworthiness, we designed the framework by adapting principles from the ALTAI framework and incorporating user-centric elements. We simplified questions from the ALTAI framework to make them relevant to users without technical expertise. This design process involved defining specific criteria and crafting user-friendly questions for each dimension. The aim was to create questions that users can understand regardless of their technical background, so that they can engage with the framework, improving the accessibility and effectiveness of the self-assessment tool.

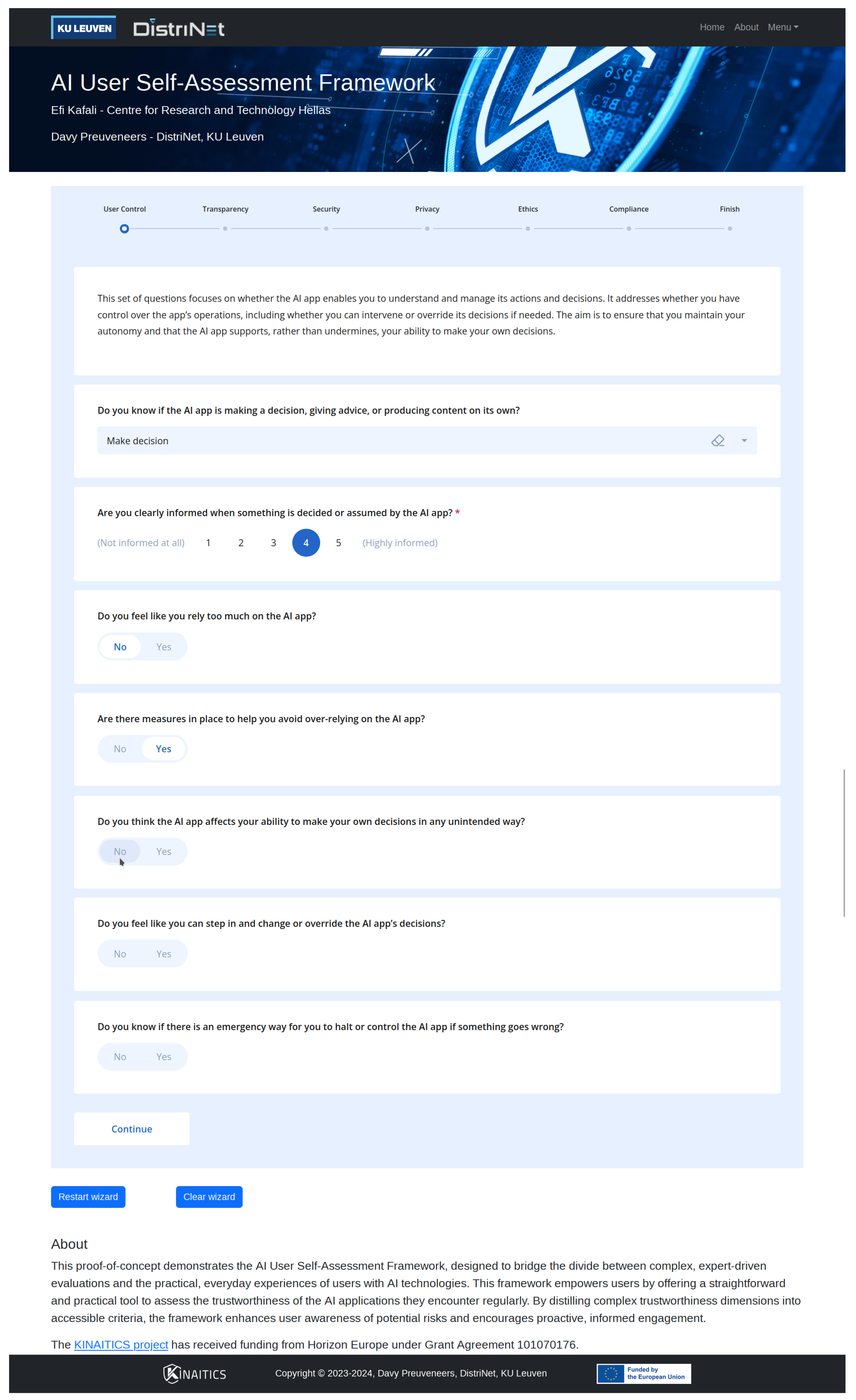

- Framework Implementation. Following the design of the framework, we developed a practical self-assessment tool that incorporates all identified questions. The tool features a user-friendly interface where each dimension is represented by a set of relevant questions. Users interact with the tool by answering these questions based on their experiences with the AI app they are assessing. Additionally, the tool includes a scoring system that quantifies user responses. Each question is associated with specific criteria and scoring options, allowing users to assign scores reflecting how well, in their perception, the AI app meets the standards for each dimension. The scores are aggregated to generate an overall trustworthiness rating for the app in question. The tool was developed to facilitate individual assessments and to enable comparative analysis across different AI applications. The self-assessment tool, the different dimensions, and a subset of the questions are shown in Figure 3.

- Framework Application. Users can access our interface, where they are presented with a list of popular AI applications, such as ChatGPT, for trustworthiness assessment. Upon selecting an app, users are guided through a series of questions covering the identified dimensions of AI trustworthiness. As they respond, their answers are scored against predefined criteria for each dimension. Once the user completes the assessment, the tool aggregates their individual scores. These scores are then combined with assessments from other users evaluating the same AI app, yielding an aggregated trustworthiness rating that represents a collective evaluation of the app.
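The scoring and aggregation steps described in the implementation and application stages can be illustrated with a short Python sketch. It is not the authors' implementation (their scoring process is the subject of Section 4.3). It assumes a hypothetical 1–5 answer scale, answers already oriented so that a higher value means more trustworthy, equal weighting of questions within a dimension, an unweighted mean across dimensions for the overall rating, and a hypothetical minimum number of assessments (`min_n`) before a community rating is published. The function names `normalize`, `score_assessment`, and `community_rating` are illustrative.

```python
from statistics import mean

# Hypothetical 5-point answer scale (assumption; not fixed by the framework text).
SCALE_MIN, SCALE_MAX = 1, 5


def normalize(answer: int) -> float:
    """Map an answer on [SCALE_MIN, SCALE_MAX] to [0, 1]."""
    if not SCALE_MIN <= answer <= SCALE_MAX:
        raise ValueError(f"answer must be in [{SCALE_MIN}, {SCALE_MAX}], got {answer}")
    return (answer - SCALE_MIN) / (SCALE_MAX - SCALE_MIN)


def score_assessment(answers: dict[str, list[int]]) -> dict[str, float]:
    """Score one user's assessment: dimension -> answers (higher = more trustworthy)."""
    scores = {dim: mean(normalize(a) for a in vals) for dim, vals in answers.items() if vals}
    # Overall = unweighted mean of the dimension scores, so a dimension with more
    # questions (e.g. Privacy, with eight) does not dominate the rating.
    scores["overall"] = mean(scores.values())
    return scores


def community_rating(assessments: list[dict[str, float]], min_n: int = 5) -> dict:
    """Combine several users' scored assessments of the same app."""
    if len(assessments) < min_n:
        # Hypothetical guard: too few assessments to publish a collective rating.
        return {"n": len(assessments), "rating": None}
    keys = sorted(set().union(*assessments))
    return {
        "n": len(assessments),
        # Average each dimension over the users who provided a score for it.
        "rating": {k: mean(a[k] for a in assessments if k in a) for k in keys},
    }


alice = score_assessment({"User Control": [4, 5], "Privacy": [3, 2]})
bob = score_assessment({"User Control": [2, 3], "Privacy": [5, 5]})
print(community_rating([alice, bob], min_n=2))
```

Averaging per dimension first keeps a dimension with many questions from outweighing one with few. Replacing `mean` with `statistics.median` in `community_rating` would make the collective rating less sensitive to a handful of extreme or coordinated assessments.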

4.3. User Scoring and Evaluation Process

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Questionnaire for the AI User Self-Assessment Framework

Appendix A.1. User Control

- Do you know if the AI app is making a decision, giving advice, or producing content on its own?

- Are you clearly informed when something is decided or assumed by the AI app?

- Do you feel like you rely too much on the AI app?

- Are there measures in place to help you avoid over-relying on the AI app?

- Do you think the AI app affects your ability to make your own decisions in any unintended way?

- Do you feel like you can step in and change or override the AI app’s decisions?

- Do you know if there is an emergency way for you to halt or control the AI app if something goes wrong?

Appendix A.2. Transparency

- Can you find out what data the app used to make a specific decision or recommendation?

- Does the app explain the rationale behind reaching its decisions or predictions?

- Does the app explain the purpose and criteria behind its decisions or recommendations?

- Are the benefits of using the app clearly communicated to you?

- Are the limitations and potential risks of using the app, such as its accuracy and error rates, clearly communicated to you?

- Does the app provide instructions or training on how to use it properly?

Appendix A.3. Security

- Do you think that the app could cause serious safety issues for users or society if it breaks or gets hacked?

- Are you informed about the app’s security updates and how long they will be provided?

- Does the app clearly explain any risks involved in using it and how it keeps you safe?

- Do you find the app reliable and free of frequent errors or malfunctions?

- Do you think the app uses up-to-date, accurate, and high-quality information for its operations?

- Does the app provide information about how accurate it is supposed to be and how it ensures this accuracy?

- Could the app’s unreliability cause significant issues for you or your safety?

Appendix A.4. Privacy

- Do you feel that the app respects your privacy?

- Are you aware of any ways to report privacy issues with the app?

- Do you know if and to what extent the app uses your personal data?

- Are you informed about what data is collected by the app and why?

- Does the app explain how your personal data is protected?

- Does the app allow you to delete your data or withdraw your consent?

- Are settings about deleting your data or withdrawing your consent visible and easily accessible?

- Do you feel that the app only collects data that are necessary for its functions?

Appendix A.5. Ethics

- Do you think that the app creators have a plan to avoid creating or reinforcing unfair bias in how the app works?

- Do you think that the app creators make sure the data used by the app includes a diverse range of people?

- Does the app allow you to report issues related to bias or discrimination easily?

- Do you think the app is designed to be usable by people of all ages, genders, and abilities?

- Do you consider the app easy to use for people with disabilities or those who might need extra help?

- Does the app follow principles that make it accessible and usable for as many people as possible?

Appendix A.6. Compliance

- Are there clear ways for you to report problems or concerns about the app?

- Are you informed about the app’s compliance with relevant legal frameworks?

- Are there processes indicating regular reviews and updates regarding the app’s adherence to ethical guidelines?

- Are there mechanisms for you to seek redress if you are negatively affected by the app?
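Across Appendix A, a few questions are worded so that a "yes" signals lower, not higher, trustworthiness (for example, "Do you feel like you rely too much on the AI app?"). If answers are collected on a numeric agreement scale, such items would need to be reverse-scored before being averaged with the others. The sketch below assumes a hypothetical 1–5 scale; the set `NEGATIVELY_WORDED` reflects one reading of the question wording, since the questionnaire does not state which items, if any, are reverse-scored, and `oriented_answer` is an illustrative name.

```python
# Hypothetical agreement scale (assumption; not specified in the questionnaire).
SCALE_MIN, SCALE_MAX = 1, 5

# Questions from Appendix A.1 and A.3 where agreement signals LOWER trustworthiness.
# This classification is an assumption based on the wording of each question.
NEGATIVELY_WORDED = frozenset({
    "Do you feel like you rely too much on the AI app?",
    "Do you think the AI app affects your ability to make your own decisions in any unintended way?",
    "Do you think that the app could cause serious safety issues for users or society if it breaks or gets hacked?",
    "Could the app’s unreliability cause significant issues for you or your safety?",
})


def oriented_answer(question: str, answer: int) -> int:
    """Return the answer on a scale where a higher value always means more trustworthy."""
    if not SCALE_MIN <= answer <= SCALE_MAX:
        raise ValueError(f"answer must be in [{SCALE_MIN}, {SCALE_MAX}], got {answer}")
    if question in NEGATIVELY_WORDED:
        # Reverse-score: 1 <-> 5, 2 <-> 4, 3 stays 3.
        return SCALE_MIN + SCALE_MAX - answer
    return answer


print(oriented_answer("Do you feel like you rely too much on the AI app?", 5))
print(oriented_answer("Do you feel that the app respects your privacy?", 5))
```

With a plain yes/no format the same flip applies: a "yes" to any of these items would count against the app rather than in its favor.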

References

1. Emmert-Streib, F. From the digital data revolution toward a digital society: Pervasiveness of artificial intelligence. Mach. Learn. Knowl. Extr. 2021, 3, 284–298.

2. Ait Baha, T.; El Hajji, M.; Es-Saady, Y.; Fadili, H. The power of personalization: A systematic review of personality-adaptive chatbots. SN Comput. Sci. 2023, 4, 661.

3. Allen, C.; Payne, B.R.; Abegaz, T.; Robertson, C. What You See Is Not What You Know: Studying Deception in Deepfake Video Manipulation. J. Cybersecur. Educ. Res. Pract. 2024, 2024, 9.

4. Bansal, G.; Chamola, V.; Hussain, A.; Guizani, M.; Niyato, D. Transforming conversations with AI—A comprehensive study of ChatGPT. Cogn. Comput. 2024, 16, 2487–2510.

5. Liu, H.; Wang, Y.; Fan, W.; Liu, X.; Li, Y.; Jain, S.; Liu, Y.; Jain, A.; Tang, J. Trustworthy AI: A computational perspective. ACM Trans. Intell. Syst. Technol. 2022, 14, 1–59.

6. Saeed, M.M.; Alsharidah, M. Security, privacy, and robustness for trustworthy AI systems: A review. Comput. Electr. Eng. 2024, 119, 109643.

7. Reinhardt, K. Trust and trustworthiness in AI ethics. AI Ethics 2023, 3, 735–744.

8. Casare, A.; Basso, T.; Moraes, R. User Experience and Trustworthiness Measurement: Challenges in the Context of e-Commerce Applications. In Proceedings of the Future Technologies Conference (FTC) 2021, Vancouver, BC, Canada, 28–29 November 2022; Springer: Berlin/Heidelberg, Germany, 2022; Volume 1, pp. 173–192.

9. Chander, B.; John, C.; Warrier, L.; Gopalakrishnan, K. Toward trustworthy artificial intelligence (TAI) in the context of explainability and robustness. ACM Comput. Surv. 2024.

10. Díaz-Rodríguez, N.; Del Ser, J.; Coeckelbergh, M.; de Prado, M.L.; Herrera-Viedma, E.; Herrera, F. Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Inf. Fusion 2023, 99, 101896.

11. Brunner, S.; Frischknecht-Gruber, C.; Reif, M.U.; Weng, J. A comprehensive framework for ensuring the trustworthiness of AI systems. In Proceedings of the 33rd European Safety and Reliability Conference (ESREL), Southampton, UK, 3–7 September 2023; Research Publishing: Singapore, 2023; pp. 2772–2779.

12. Weng, Y.; Wu, J. Leveraging Artificial Intelligence to Enhance Data Security and Combat Cyber Attacks. J. Artif. Intell. Gen. Sci. (JAIGS) 2024, 5, 392–399.

13. Durovic, M.; Corno, T. The Privacy of Emotions: From the GDPR to the AI Act, an Overview of Emotional AI Regulation and the Protection of Privacy and Personal Data. Privacy Data Prot.-Data-Driven Technol. 2025, 368–404.

14. Ala-Pietilä, P.; Bonnet, Y.; Bergmann, U.; Bielikova, M.; Bonefeld-Dahl, C.; Bauer, W.; Bouarfa, L.; Chatila, R.; Coeckelbergh, M.; Dignum, V.; et al. The Assessment List for Trustworthy Artificial Intelligence (ALTAI); European Commission: Brussels, Belgium, 2020.

15. Croce, F.; Andriushchenko, M.; Sehwag, V.; Debenedetti, E.; Flammarion, N.; Chiang, M.; Mittal, P.; Hein, M. RobustBench: A standardized adversarial robustness benchmark. In Proceedings of the Thirty-fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 2), Virtual Conference, 7–10 December 2021.

16. Baz, A.; Ahmed, R.; Khan, S.A.; Kumar, S. Security risk assessment framework for the healthcare industry 5.0. Sustainability 2023, 15, 16519.

17. Schwemer, S.F.; Tomada, L.; Pasini, T. Legal AI systems in the EU’s proposed Artificial Intelligence Act. In Proceedings of the Second International Workshop on AI and Intelligent Assistance for Legal Professionals in the Digital Workplace (LegalAIIA 2021), Held in Conjunction with ICAIL, Sao Paulo, Brazil, 21 June 2021.

18. Dasi, U.; Singla, N.; Balasubramanian, R.; Benadikar, S.; Shanbhag, R.R. Ethical implications of AI-driven personalization in digital media. J. Inform. Educ. Res. 2024, 4, 588–593.

19. Pa Pa, Y.M.; Tanizaki, S.; Kou, T.; Van Eeten, M.; Yoshioka, K.; Matsumoto, T. An attacker’s dream? Exploring the capabilities of ChatGPT for developing malware. In Proceedings of the 16th Cyber Security Experimentation and Test Workshop, Marina Del Rey, CA, USA, 7–8 August 2023; pp. 10–18.

20. Gill, S.S.; Kaur, R. ChatGPT: Vision and challenges. Internet Things-Cyber-Phys. Syst. 2023, 3, 262–271.

21. Li, J.; Yang, Y.; Wu, Z.; Vydiswaran, V.V.; Xiao, C. ChatGPT as an Attack Tool: Stealthy Textual Backdoor Attack via Blackbox Generative Model Trigger. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Mexico City, Mexico, 16–21 June 2024; Volume 1, pp. 2985–3004.

22. Roy, S.S.; Thota, P.; Naragam, K.V.; Nilizadeh, S. From Chatbots to Phishbots?: Phishing Scam Generation in Commercial Large Language Models. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 20–23 May 2024; IEEE Computer Society; p. 221.

23. Kshetri, N. ChatGPT in developing economies. IT Prof. 2023, 25, 16–19.

24. Chen, Y.; Kirshner, S.; Ovchinnikov, A.; Andiappan, M.; Jenkin, T. A Manager and an AI Walk into a Bar: Does ChatGPT Make Biased Decisions Like We Do? 2023. Available online: https://ssrn.com/abstract=4380365 (accessed on 20 October 2024).

25. Luccioni, S.; Akiki, C.; Mitchell, M.; Jernite, Y. Stable bias: Evaluating societal representations in diffusion models. In Proceedings of the 37th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; pp. 56338–56351.

26. Wang, Y.; Pan, Y.; Yan, M.; Su, Z.; Luan, T.H. A survey on ChatGPT: AI-generated contents, challenges, and solutions. IEEE Open J. Comput. Soc. 2023, 4, 280–302.

27. Qu, Y.; Shen, X.; He, X.; Backes, M.; Zannettou, S.; Zhang, Y. Unsafe diffusion: On the generation of unsafe images and hateful memes from text-to-image models. In Proceedings of the 2023 ACM SIGSAC Conference on Computer and Communications Security, Copenhagen, Denmark, 26–30 November 2023; pp. 3403–3417.

28. Li, J.; Cheng, X.; Zhao, W.X.; Nie, J.Y.; Wen, J.R. HaluEval: A large-scale hallucination evaluation benchmark for large language models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 6449–6464.

29. Hanley, H.W.; Durumeric, Z. Machine-made media: Monitoring the mobilization of machine-generated articles on misinformation and mainstream news websites. In Proceedings of the International AAAI Conference on Web and Social Media, Buffalo, NY, USA, 3–6 June 2024; Volume 18, pp. 542–556.

30. Vartiainen, H.; Tedre, M. Using artificial intelligence in craft education: Crafting with text-to-image generative models. Digit. Creat. 2023, 34, 1–21.

31. Malinka, K.; Peresíni, M.; Firc, A.; Hujnák, O.; Janus, F. On the educational impact of ChatGPT: Is artificial intelligence ready to obtain a university degree? In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 1, Turku, Finland, 10–12 July 2023; pp. 47–53.

32. Strickland, E. IBM Watson, heal thyself: How IBM overpromised and underdelivered on AI health care. IEEE Spectr. 2019, 56, 24–31.

33. Hunkenschroer, A.L.; Kriebitz, A. Is AI recruiting (un)ethical? A human rights perspective on the use of AI for hiring. AI Ethics 2023, 3, 199–213.

34. Rudolph, J.; Tan, S.; Tan, S. War of the chatbots: Bard, Bing Chat, ChatGPT, Ernie and beyond. The new AI gold rush and its impact on higher education. J. Appl. Learn. Teach. 2023, 6, 364–389.

35. Kaur, D.; Uslu, S.; Rittichier, K.J.; Durresi, A. Trustworthy artificial intelligence: A review. ACM Comput. Surv. (CSUR) 2022, 55, 1–38.

36. Shin, D. The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551.

37. Li, B.; Qi, P.; Liu, B.; Di, S.; Liu, J.; Pei, J.; Yi, J.; Zhou, B. Trustworthy AI: From principles to practices. ACM Comput. Surv. 2023, 55, 1–46.

38. Langford, T.; Payne, B. Phishing Faster: Implementing ChatGPT into Phishing Campaigns. In Proceedings of the Future Technologies Conference, Vancouver, BC, Canada, 19–20 October 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 174–187.

39. Xie, Y.; Yi, J.; Shao, J.; Curl, J.; Lyu, L.; Chen, Q.; Xie, X.; Wu, F. Defending ChatGPT against jailbreak attack via self-reminders. Nat. Mach. Intell. 2023, 5, 1–11.

40. Wan, Y.; Wang, W.; He, P.; Gu, J.; Bai, H.; Lyu, M.R. BiasAsker: Measuring the bias in conversational AI system. In Proceedings of the 31st ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, San Francisco, CA, USA, 3–9 December 2023; pp. 515–527.

41. Epstein, D.C.; Jain, I.; Wang, O.; Zhang, R. Online detection of AI-generated images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 382–392.

42. Chaka, C. Detecting AI content in responses generated by ChatGPT, YouChat, and Chatsonic: The case of five AI content detection tools. J. Appl. Learn. Teach. 2023, 6, 94–104.

43. Xu, H.; Zhu, T.; Zhang, L.; Zhou, W.; Yu, P.S. Machine unlearning: A survey. ACM Comput. Surv. 2023, 56, 1–36.

44. Dwivedi, R.; Dave, D.; Naik, H.; Singhal, S.; Omer, R.; Patel, P.; Qian, B.; Wen, Z.; Shah, T.; Morgan, G.; et al. Explainable AI (XAI): Core ideas, techniques, and solutions. ACM Comput. Surv. 2023, 55, 1–33.

45. Ali, S.; Abuhmed, T.; El-Sappagh, S.; Muhammad, K.; Alonso-Moral, J.M.; Confalonieri, R.; Guidotti, R.; Del Ser, J.; Díaz-Rodríguez, N.; Herrera, F. Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Inf. Fusion 2023, 99, 101805.

46. Casper, S.; Davies, X.; Shi, C.; Gilbert, T.K.; Scheurer, J.; Rando, J.; Freedman, R.; Korbak, T.; Lindner, D.; Freire, P.; et al. Open Problems and Fundamental Limitations of Reinforcement Learning from Human Feedback. arXiv 2023, arXiv:2307.15217.

47. Kieseberg, P.; Weippl, E.; Tjoa, A.M.; Cabitza, F.; Campagner, A.; Holzinger, A. Controllable AI-An Alternative to Trustworthiness in Complex AI Systems? In Proceedings of the International Cross-Domain Conference for Machine Learning and Knowledge Extraction, Benevento, Italy, 29 August–1 September 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 1–12.

48. Hacker, P.; Engel, A.; Mauer, M. Regulating ChatGPT and other large generative AI models. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 1112–1123.

49. Chamberlain, J. The risk-based approach of the European Union’s proposed artificial intelligence regulation: Some comments from a tort law perspective. Eur. J. Risk Regul. 2023, 14, 1–13.

50. Bengio, Y.; Hinton, G.; Yao, A.; Song, D.; Abbeel, P.; Darrell, T.; Harari, Y.N.; Zhang, Y.Q.; Xue, L.; Shalev-Shwartz, S.; et al. Managing extreme AI risks amid rapid progress. Science 2024, 384, 842–845.

51. Laux, J.; Wachter, S.; Mittelstadt, B. Trustworthy artificial intelligence and the European Union AI act: On the conflation of trustworthiness and acceptability of risk. Regul. Gov. 2023, 18, 3–32.

52. Hupont, I.; Micheli, M.; Delipetrev, B.; Gómez, E.; Garrido, J.S. Documenting high-risk AI: A European regulatory perspective. Computer 2023, 56, 18–27.

53. Lucaj, L.; Van Der Smagt, P.; Benbouzid, D. AI regulation is (not) all you need. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 1267–1279.

54. Meszaros, J.; Preuveneers, D.; Biasin, E.; Marquet, E.; Erdogan, I.; Rosal, I.M.; Vranckaert, K.; Belkadi, L.; Menende, N. European Union—ChatGPT, Are You Lawfully Processing My Personal Data? GDPR Compliance and Legal Basis for Processing Personal Data by OpenAI. J. AI Law Regul. 2024, 1, 233–239.

55. Fedele, A.; Punzi, C.; Tramacere, S. The ALTAI checklist as a tool to assess ethical and legal implications for a trustworthy AI development in education. Comput. Law Secur. Rev. 2024, 53, 105986.

56. Radclyffe, C.; Ribeiro, M.; Wortham, R.H. The assessment list for trustworthy artificial intelligence: A review and recommendations. Front. Artif. Intell. 2023, 6, 1020592.

57. Zicari, R.V.; Brodersen, J.; Brusseau, J.; Düdder, B.; Eichhorn, T.; Ivanov, T.; Kararigas, G.; Kringen, P.; McCullough, M.; Möslein, F.; et al. Z-Inspection®: A process to assess trustworthy AI. IEEE Trans. Technol. Soc. 2021, 2, 83–97.

58. Vetter, D.; Amann, J.; Bruneault, F.; Coffee, M.; Düdder, B.; Gallucci, A.; Gilbert, T.K.; Hagendorff, T.; van Halem, I.; Hickman, E.; et al. Lessons learned from assessing trustworthy AI in practice. Digit. Soc. 2023, 2, 35.

59. Vlachogianni, P.; Tselios, N. Perceived usability evaluation of educational technology using the System Usability Scale (SUS): A systematic review. J. Res. Technol. Educ. 2022, 54, 392–409.

| Control Level | Elements | References |
|---|---|---|
| Technological | Advanced methods and tools aimed at enhancing AI security, robustness, privacy, and interpretability, including vulnerability detection, response and recovery, and XAI for clearer decision-making. | [15,38,39,40,41,42,43,44,45,46,47] |
| Regulatory | Laws and guidelines established to govern the ethical use of AI technologies, ensuring compliance with standards for data privacy, transparency, and accountability. | [48,49,50,51,52,53,54] |
| Trustworthiness | Frameworks and guidelines designed to assess and ensure the ethical principles, transparency, fairness, and overall reliability of AI systems, guiding their responsible development and deployment. | [14,35,37,55,56,57,58] |

| ALTAI | AI User Self-Assessment Framework |
|---|---|
| Human Agency and Oversight | User Control |
| Transparency | Transparency |
| Technical Robustness and Safety | Security |
| Privacy and Data Governance | Privacy |
| Diversity, Non-discrimination, Fairness | Ethics |
| Accountability | Compliance |
| Societal and Environmental Well-being | Not explicitly mapped |

| Dimension | Example Question | On-App Criteria | Objective |
|---|---|---|---|
| User Control | Do you feel like you can step in and change or override the AI app’s decisions? | Options to override AI decisions, emergency halt functions. | Ensure users maintain control over the AI app’s operations, promoting autonomy and the ability to intervene. |
| Transparency | Can you find out what data the app used to make a specific decision or recommendation? | Clear explanations of data sources and decision-making processes. | Promote understanding and clarity regarding the AI app’s actions and decisions, encouraging users to value transparency. |
| Security | Do you think that the app could cause serious safety issues for users or society if it breaks or gets hacked? | Robust data encryption, regular security audits, and proactive threat detection. | Ensure users feel confident about the app’s safety measures and its ability to protect their data and functionality. |
| Privacy | Do you feel that the app respects your privacy? | Transparent data collection policies, easy-to-access consent and data deletion options. | Empower users to make informed decisions about their personal information and understand how their data are handled. |
| Ethics | Do you think that the app creators have a plan to avoid creating or reinforcing unfair bias in how the app works? | Bias detection and correction mechanisms, adherence to ethical guidelines. | Ensure fairness, inclusivity, and alignment with ethical standards. |
| Compliance | Are there clear ways for you to report problems or concerns about the app? | Accessible reporting mechanisms, regular ethical reviews, and compliance with legal standards. | Empower users to hold the app accountable and ensure it adheres to relevant laws and ethical guidelines. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kafali, E.; Preuveneers, D.; Semertzidis, T.; Daras, P. Defending Against AI Threats with a User-Centric Trustworthiness Assessment Framework. Big Data Cogn. Comput. 2024, 8, 142. https://doi.org/10.3390/bdcc8110142
