Recognize the Little Ones: UAS-Based In-Situ Fluorescent Tracer Detection

Abstract

1. Introduction

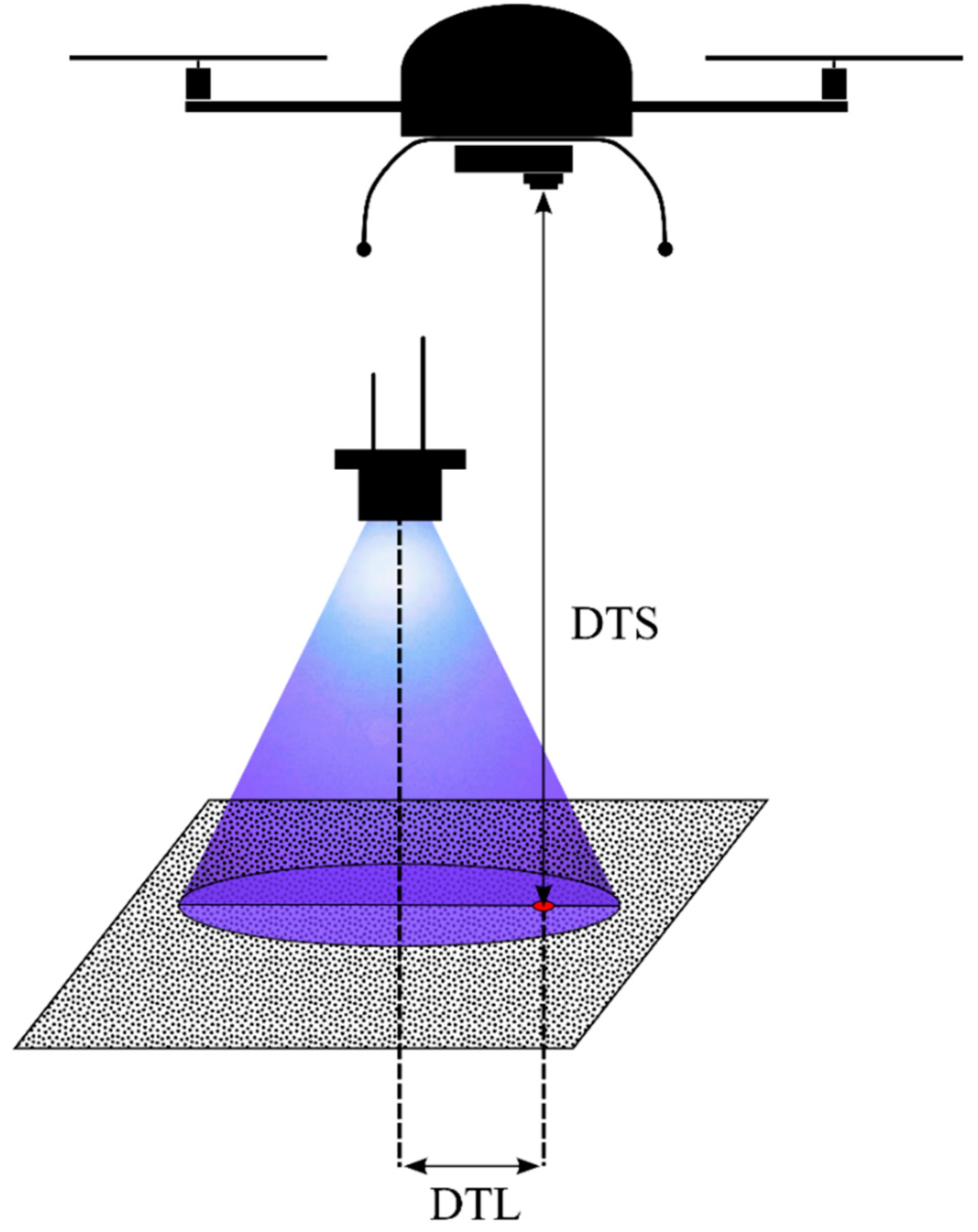

- detection probability decreases with increasing distance between the UAS camera sensor and the fluorescent tracer (DTS),

- detection probability decreases with increasing distance between the fluorescent tracer and the center of the UV spotlight's projection on the ground (DTL),

- detection probability increases with increasing size of the fluorescent tracer.
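The three expectations above can be restated as sign constraints on a logistic model. This is a sketch that assumes a logit link and the fixed-effects structure implied by the coefficient table later in this article: tracer size and DTS enter as two-level factors, DTL as a continuous covariate, plus a size × DTL interaction. Any random effects are left out.

$$
\operatorname{logit} P(\text{detected}) = \beta_0 + \beta_1 S_4 + \beta_2 D_2 + \beta_3\,\mathrm{DTL} + \beta_4 S_4\,\mathrm{DTL}
$$

Here $S_4 = 1$ for 4 mm² tracers and 0 for 1 mm² tracers, and $D_2 = 1$ for the high DTS level and 0 for the low level. The expectations then become $\beta_2 < 0$ (DTS) and $\beta_3 < 0$ (DTL). For tracer size, the expectation is $\beta_1 + \beta_4\,\mathrm{DTL} > 0$, meaning larger tracers are more likely to be detected at any given DTL.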

2. Materials and Methods

2.1. Experimental Design & Data Collection

2.2. Image Data Processing

2.3. Detection Success and Statistics

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Number of fluorescent tracers identified and not identified per image, by DTS level and tracer size.

| DTS level (m) | Tracer size (mm²) | Identified | Image 1 | Image 2 | Image 3 | Image 4 | Image 5 | Image 6 | Image 7 | Image 8 | Image 9 | Image 10 | Sum |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 (low): 2.2–2.7 | 1 | no | | | 62 | 59 | 74 | 76 | 57 | 73 | 66 | 73 | 540 |
| | 1 | yes | | | 42 | 45 | 30 | 28 | 47 | 31 | 38 | 31 | 292 |
| | 4 | no | | | 2 | 3 | 6 | 2 | 2 | 2 | 2 | 2 | 21 |
| | 4 | yes | | | 25 | 24 | 21 | 25 | 25 | 25 | 25 | 25 | 195 |
| 2 (high): 3.0–3.65 | 1 | no | 61 | 78 | | | | | | | | | 139 |
| | 1 | yes | 44 | 26 | | | | | | | | | 70 |
| | 4 | no | 4 | 4 | | | | | | | | | 8 |
| | 4 | yes | 20 | 23 | | | | | | | | | 43 |
| Sum | | | 129 | 131 | 131 | 131 | 131 | 131 | 131 | 131 | 131 | 131 | 1308 |
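The Sum column of the count table above can be converted into raw identification rates, where each rate is the number of identified tracers divided by all tracers in a stratum. The Python sketch below re-enters those sums by hand. It is purely descriptive, ignores the image-level grouping, and is not the statistical model used for inference. The names `COUNTS` and `identification_rate` are illustrative only.

```python
# Sum column of the count table, keyed by (DTS level, tracer size in mm²).
# Each value is (not identified, identified).
COUNTS = {
    (1, 1): (540, 292),
    (1, 4): (21, 195),
    (2, 1): (139, 70),
    (2, 4): (8, 43),
}


def identification_rate(dts_level: int, size_mm2: int) -> float:
    """Share of tracers identified in one DTS level x tracer size stratum."""
    missed, found = COUNTS[(dts_level, size_mm2)]
    return found / (missed + found)


if __name__ == "__main__":
    total = sum(missed + found for missed, found in COUNTS.values())
    print(f"total tracer observations: {total}")
    for dts, size in sorted(COUNTS):
        print(f"DTS level {dts}, {size} mm²: {identification_rate(dts, size):.3f}")
```

Run as a script, it reports 1308 observations, consistent with the table total. The rates are about 0.351 (1 mm²) and 0.903 (4 mm²) at DTS level 1, and 0.335 and 0.843 at DTS level 2.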

χ² tests of the model terms.

| Parameter | χ² | Df | p value |
|---|---|---|---|
| size | 210.373 | 1 | <0.001 |
| DTS | 17.262 | 1 | <0.001 |
| DTL | 130.279 | 1 | <0.001 |
| size × DTL | 9.243 | 1 | 0.002 |
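The χ² table does not say how its statistics were obtained, for example whether they come from Wald or likelihood-ratio tests. If each statistic is assumed to follow a χ² distribution with the listed single degree of freedom, the reported p values can be checked with the Python standard library alone. This works because, for one degree of freedom, the upper tail equals the two-sided normal tail of √χ². The names below are illustrative.

```python
import math

# Chi-square statistics copied from the table above (1 df each).
CHI_SQ = {"size": 210.373, "DTS": 17.262, "DTL": 130.279, "size x DTL": 9.243}


def p_value_chi2_df1(stat: float) -> float:
    """Upper-tail p value of a chi-square statistic with 1 degree of freedom.

    If Z is standard normal, Z**2 follows chi2(1), so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    if stat < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(stat / 2.0))


if __name__ == "__main__":
    for term, stat in CHI_SQ.items():
        p = p_value_chi2_df1(stat)
        shown = "<0.001" if p < 0.001 else f"{p:.3f}"
        print(f"{term:<12} chi2 = {stat:8.3f}  p = {shown}")
```

Under this assumption, the script reproduces the p value column: <0.001 for the three main terms and 0.002 for size × DTL.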

Fixed-effect estimates of the detection model (reference levels: tracer size 1 mm², DTS level 1).

| Parameter | Estimate | SE | z value | p value |
|---|---|---|---|---|
| Intercept (size 1 mm², DTS level 1) | 5.518 | 0.541 | 10.196 | <0.001 |
| size 4 mm² | 0.337 | 0.979 | 0.344 | 0.730 |
| DTS level 2 | −1.811 | 0.436 | −4.155 | <0.001 |
| DTL | −0.191 | 0.016 | −11.804 | <0.001 |
| size 4 mm² × DTL | 0.090 | 0.030 | 3.04 | 0.002 |
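The coefficient table above does not state the link function or the unit of DTL. Assuming a binomial model with a logit link, so that the estimates are log-odds, the fixed effects can be converted into predicted detection probabilities as sketched below, with any random effects set to zero. All constant and function names are hypothetical. DTL is used in whatever unit the original model was fitted with.

```python
import math
from typing import Tuple

# Fixed-effect estimates from the coefficient table (assumed to be on the logit scale).
B0 = 5.518           # intercept: size 1 mm², DTS level 1
B_SIZE4 = 0.337      # intercept shift for 4 mm² tracers
B_DTS2 = -1.811      # intercept shift for DTS level 2 (high)
B_DTL = -0.191       # DTL slope for 1 mm² tracers
B_SIZE4_DTL = 0.090  # change in DTL slope for 4 mm² tracers


def _intercept_and_slope(size_mm2: int, dts_level: int) -> Tuple[float, float]:
    """Return (intercept, DTL slope) of the linear predictor for one size/DTS combination."""
    if size_mm2 not in (1, 4) or dts_level not in (1, 2):
        raise ValueError("size_mm2 must be 1 or 4 and dts_level must be 1 or 2")
    size4 = 1.0 if size_mm2 == 4 else 0.0
    dts2 = 1.0 if dts_level == 2 else 0.0
    intercept = B0 + B_SIZE4 * size4 + B_DTS2 * dts2
    slope = B_DTL + B_SIZE4_DTL * size4
    return intercept, slope


def _inv_logit(eta: float) -> float:
    """Numerically stable inverse logit."""
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    z = math.exp(eta)
    return z / (1.0 + z)


def detection_probability(dtl: float, size_mm2: int = 1, dts_level: int = 1) -> float:
    """Predicted detection probability from the fixed effects only."""
    intercept, slope = _intercept_and_slope(size_mm2, dts_level)
    return _inv_logit(intercept + slope * dtl)


def dtl_at_half(size_mm2: int = 1, dts_level: int = 1) -> float:
    """DTL at which the predicted detection probability falls to 0.5 (linear predictor = 0)."""
    intercept, slope = _intercept_and_slope(size_mm2, dts_level)
    return -intercept / slope
```

At DTS level 1, `dtl_at_half(1)` is about 28.9 and `dtl_at_half(4)` is about 58.0 DTL units. The positive interaction gives 4 mm² tracers a DTL slope of −0.191 + 0.090 = −0.101. This roughly halves the rate at which their detection probability declines with DTL, compared with 1 mm² tracers.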

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Teickner, H.; Lehmann, J.R.K.; Guth, P.; Meinking, F.; Ott, D. Recognize the Little Ones: UAS-Based In-Situ Fluorescent Tracer Detection. Drones 2019, 3, 20. https://doi.org/10.3390/drones3010020
