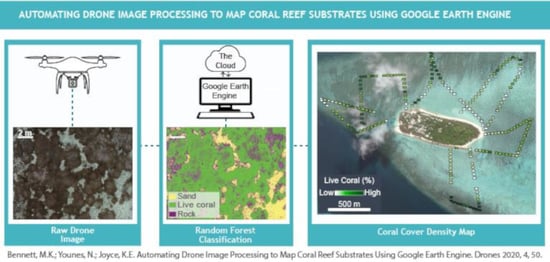

Automating Drone Image Processing to Map Coral Reef Substrates Using Google Earth Engine

Abstract

1. Introduction

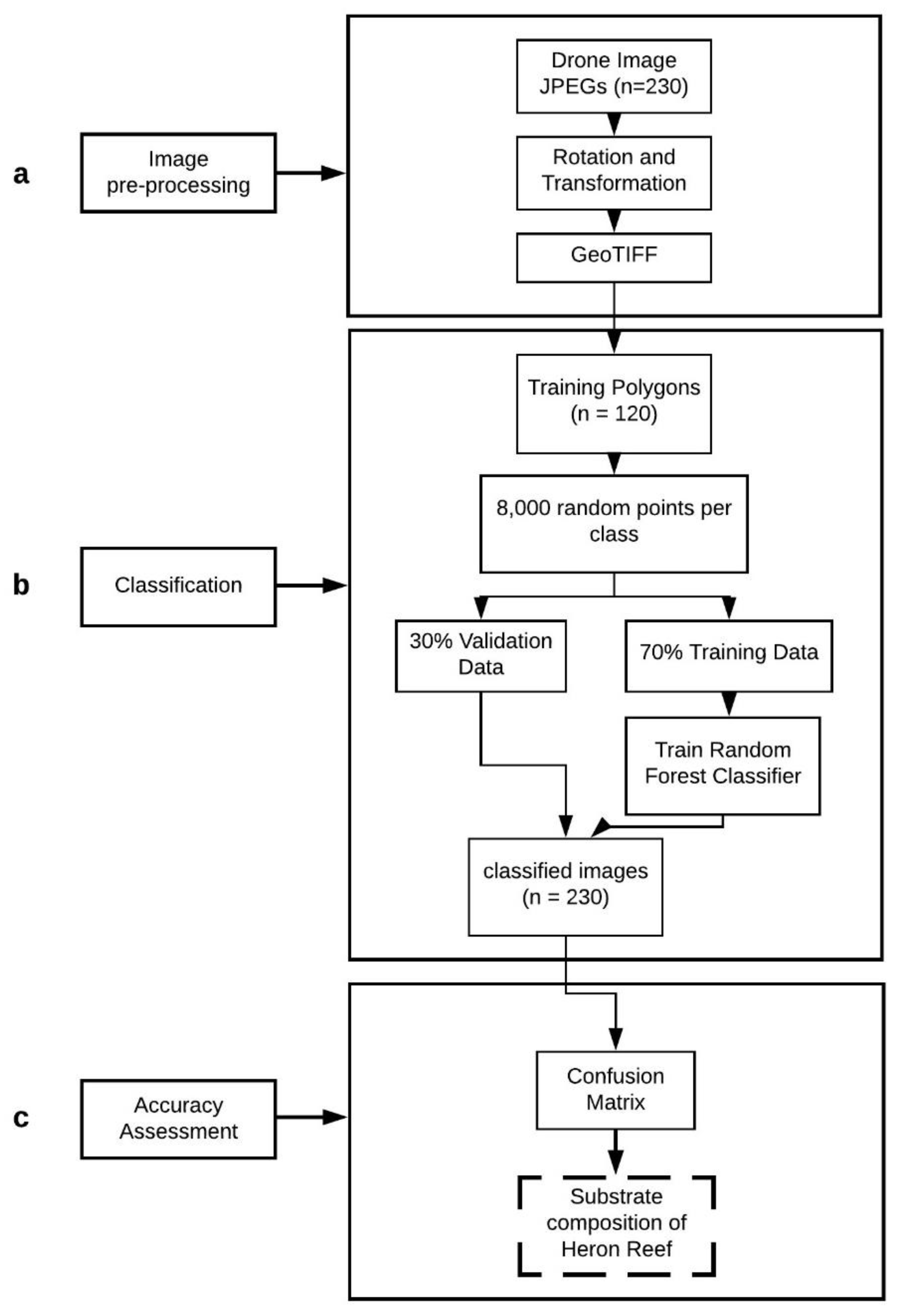

2. Methods

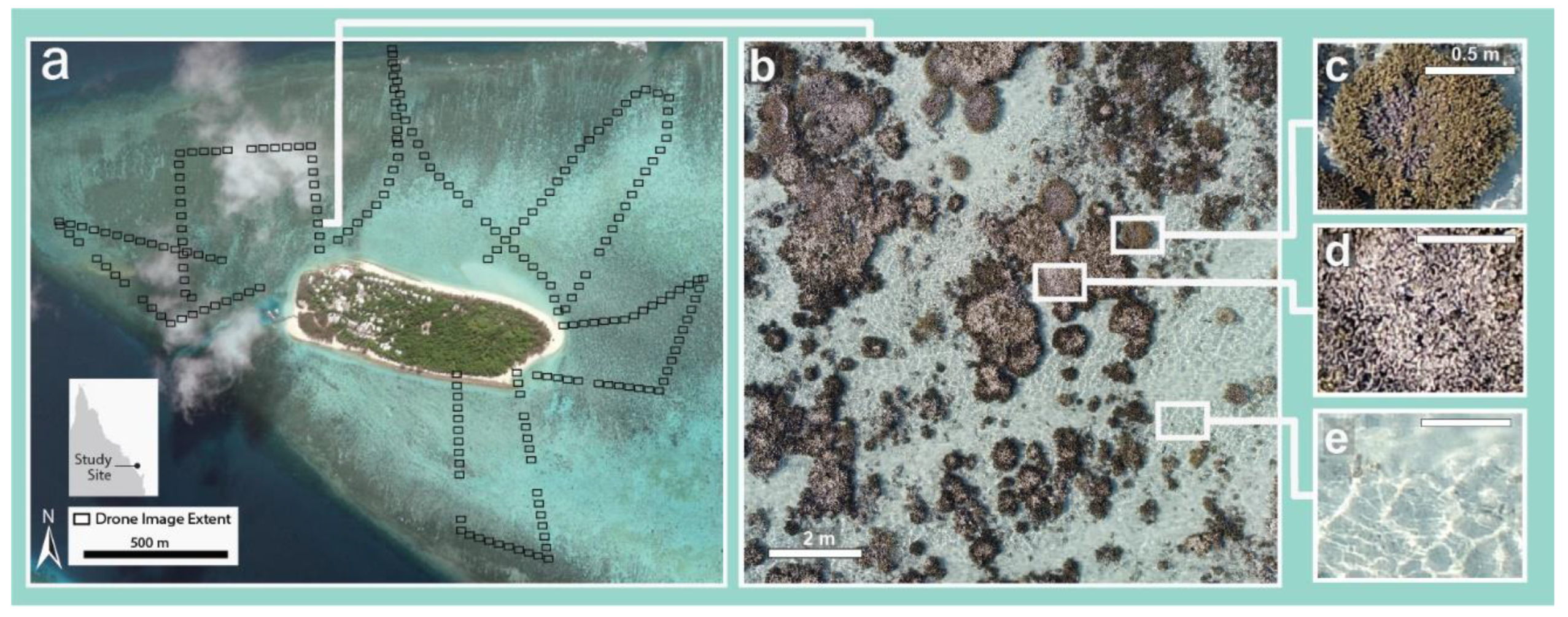

2.1. Study Site

2.2. Data Acquisition

2.3. Data Processing

2.4. Image Pre-Processing

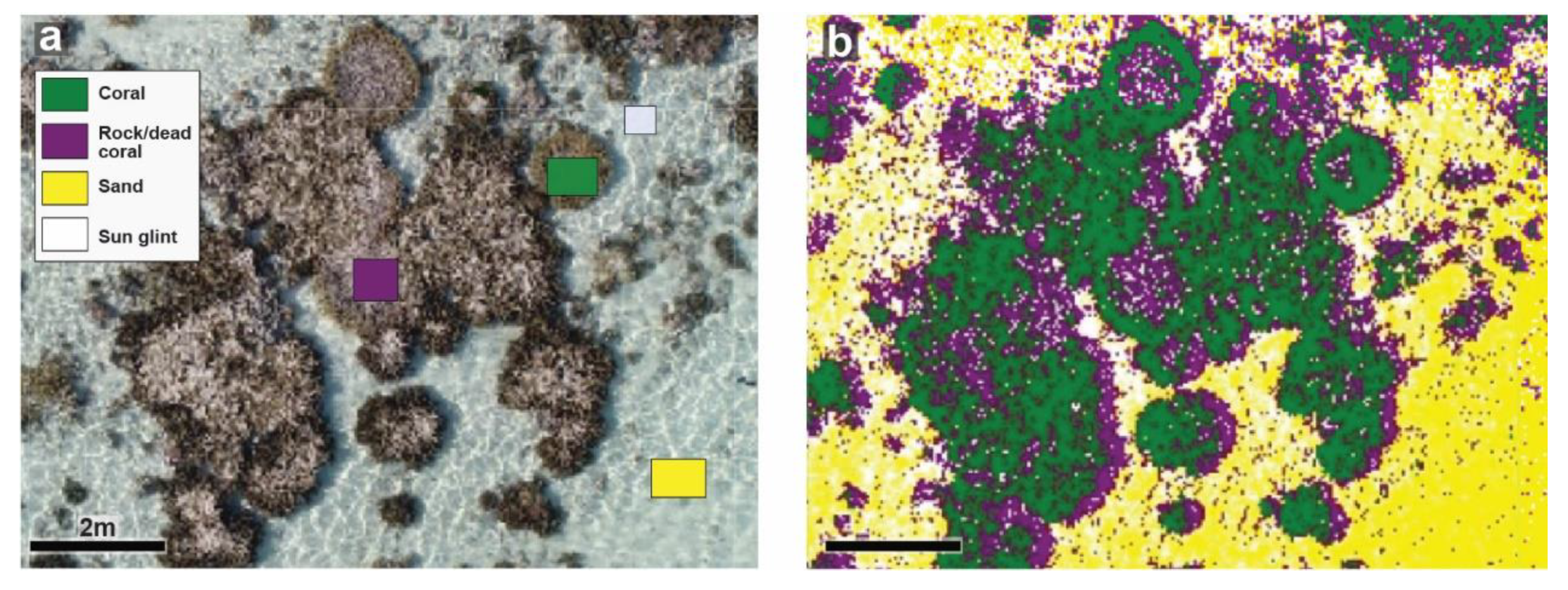

2.5. Image Classification

2.6. Accuracy Assessment

2.7. Code and Data Access

3. Results

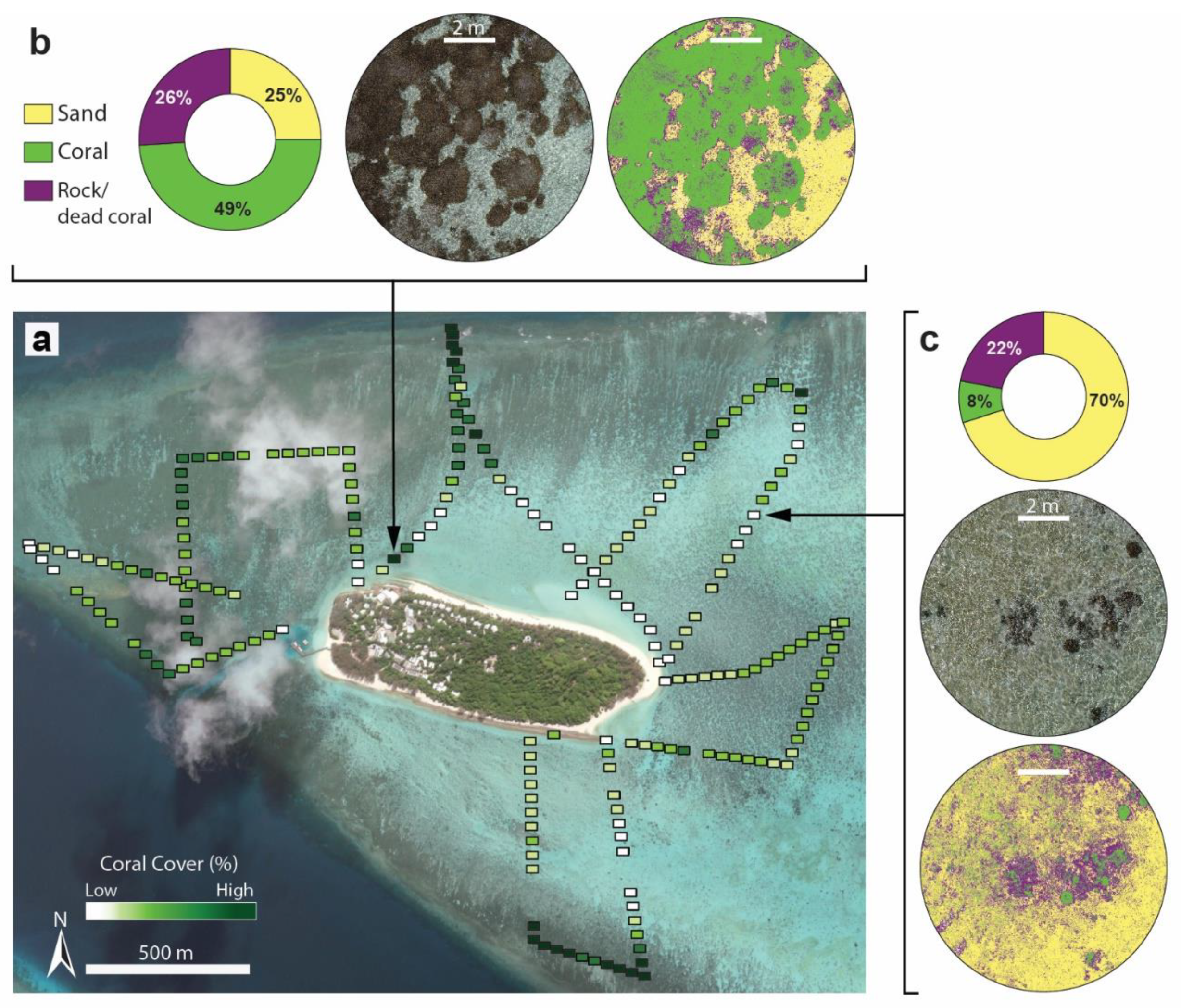

3.1. Image Classification

3.2. Accuracy Assessment

4. Discussion

4.1. Image Classification Accuracy

4.2. Workflow Assessment

5. Future Applications

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Overall map accuracy: 0.86

| Class | User's Accuracy | Producer's Accuracy |
|---|---|---|
| Live Coral | 0.86 | 0.92 |
| Rock/Dead Coral | 0.85 | 0.71 |
| Sand | 0.87 | 0.90 |
| Sun Glint | 0.86 | 0.91 |

| Class | Live Coral | Rock/Dead Coral | Sand | Sun Glint | Row Total |
|---|---|---|---|---|---|
| Live Coral | 2213 | 330 | 14 | 9 | 2566 |
| Rock/Dead Coral | 156 | 1664 | 54 | 84 | 1958 |
| Sand | 28 | 170 | 2160 | 119 | 2477 |
| Sun Glint | 10 | 186 | 168 | 2157 | 2521 |
| Column Total | 2407 | 2350 | 2396 | 2369 | 9522 |
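
The accuracy figures reported above can be recomputed from the confusion matrix. The following is a minimal Python sketch, not the authors' code. It assumes that rows hold the mapped (classified) classes and columns hold the reference classes, an orientation inferred from the fact that it reproduces the reported user's and producer's values. The function name `accuracy_metrics` and the variable names are hypothetical.

```python
# Confusion matrix copied from the table above.
# Assumption: rows = mapped (classified) class, columns = reference class.
classes = ["Live Coral", "Rock/Dead Coral", "Sand", "Sun Glint"]
matrix = [
    [2213, 330, 14, 9],
    [156, 1664, 54, 84],
    [28, 170, 2160, 119],
    [10, 186, 168, 2157],
]


def accuracy_metrics(matrix):
    """Return (overall, user's, producer's) accuracies for a square matrix."""
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    # Overall accuracy: correctly classified samples over all samples.
    overall = sum(diagonal) / sum(row_totals)
    # User's accuracy: correct samples over everything mapped as that class.
    users = [diagonal[i] / row_totals[i] for i in range(n)]
    # Producer's accuracy: correct samples over all reference samples of that class.
    producers = [diagonal[i] / col_totals[i] for i in range(n)]
    return overall, users, producers


overall, users, producers = accuracy_metrics(matrix)

print(f"Overall map accuracy: {overall:.2f}")
for name, user, producer in zip(classes, users, producers):
    print(f"{name}: user's = {user:.2f}, producer's = {producer:.2f}")
```

Under this orientation, the low producer's accuracy for Rock/Dead Coral follows from its column: 686 of the 2350 reference samples for that class were mapped as something else, most often as Live Coral (330).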

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bennett, M.K.; Younes, N.; Joyce, K. Automating Drone Image Processing to Map Coral Reef Substrates Using Google Earth Engine. Drones 2020, 4, 50. https://doi.org/10.3390/drones4030050
