Leveraging AI to Estimate Caribou Lichen in UAV Orthomosaics from Ground Photo Datasets

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets

2.1.1. UAV Image Preparation

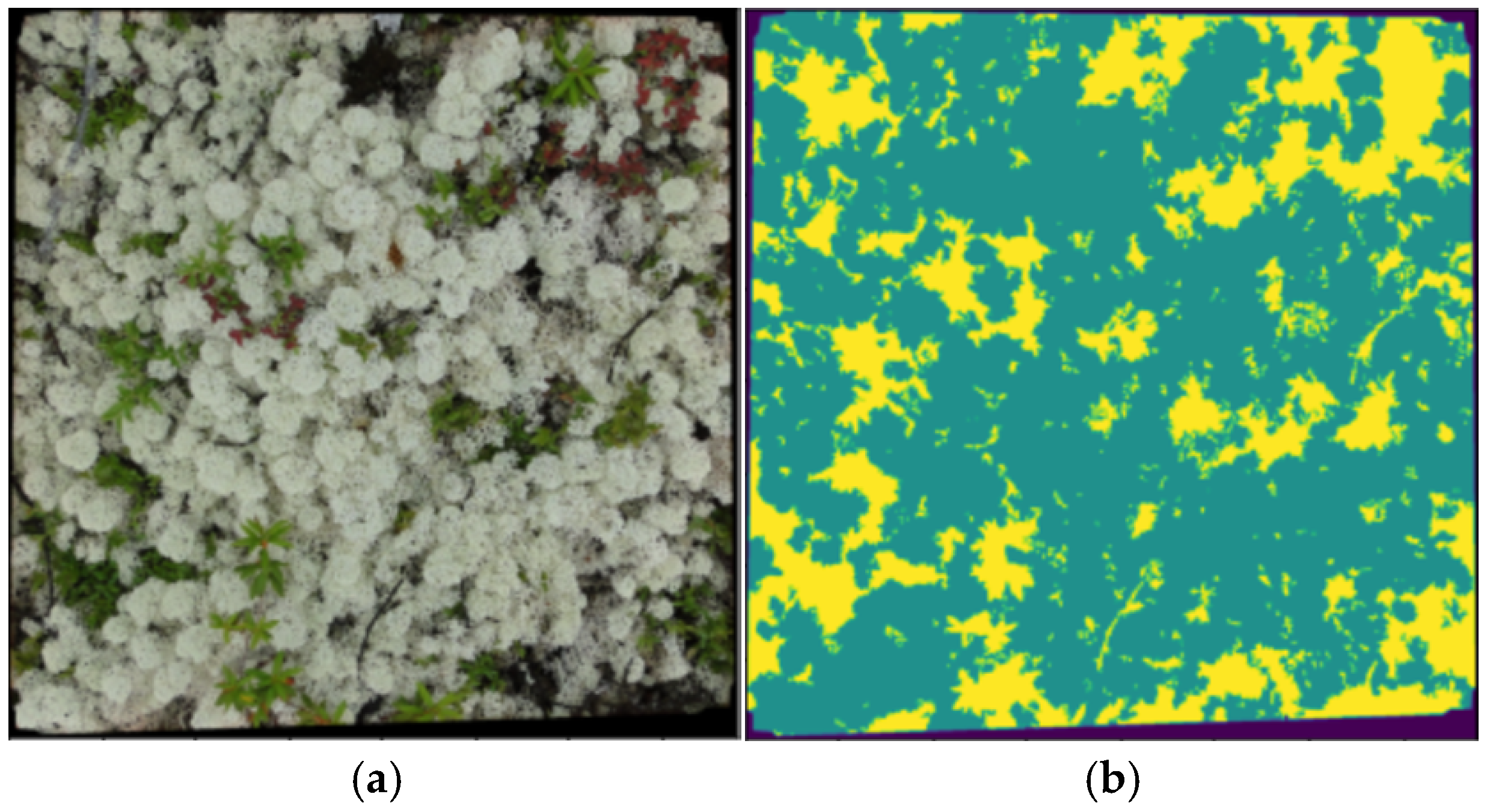

2.1.2. Neural Network Preparation

2.2. Neural Network Training

2.3. Neural Network Prediction and Post Processing

3. Results

3.1. UAV LiCNN Ground Photo Mosaic Test Results

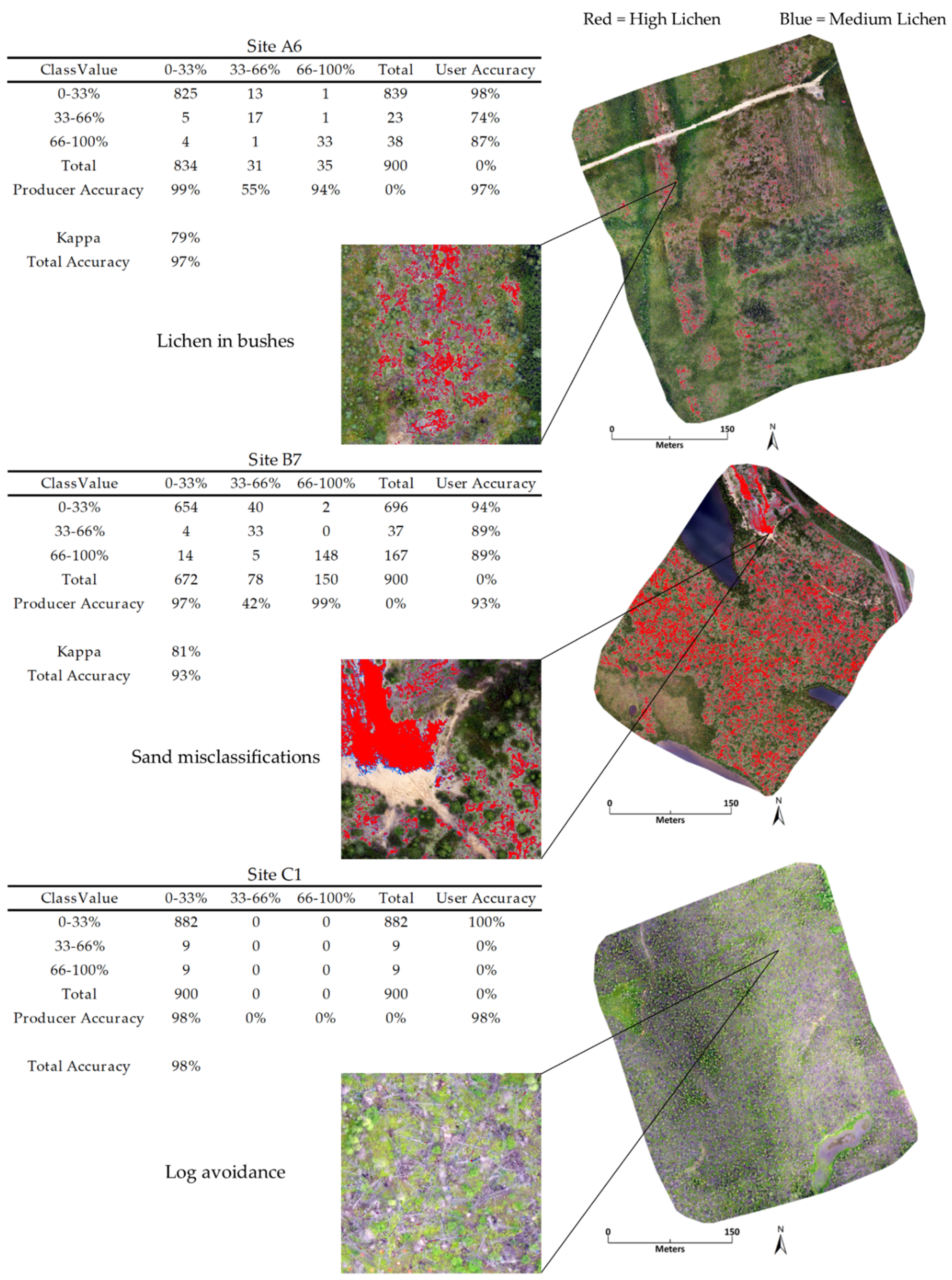

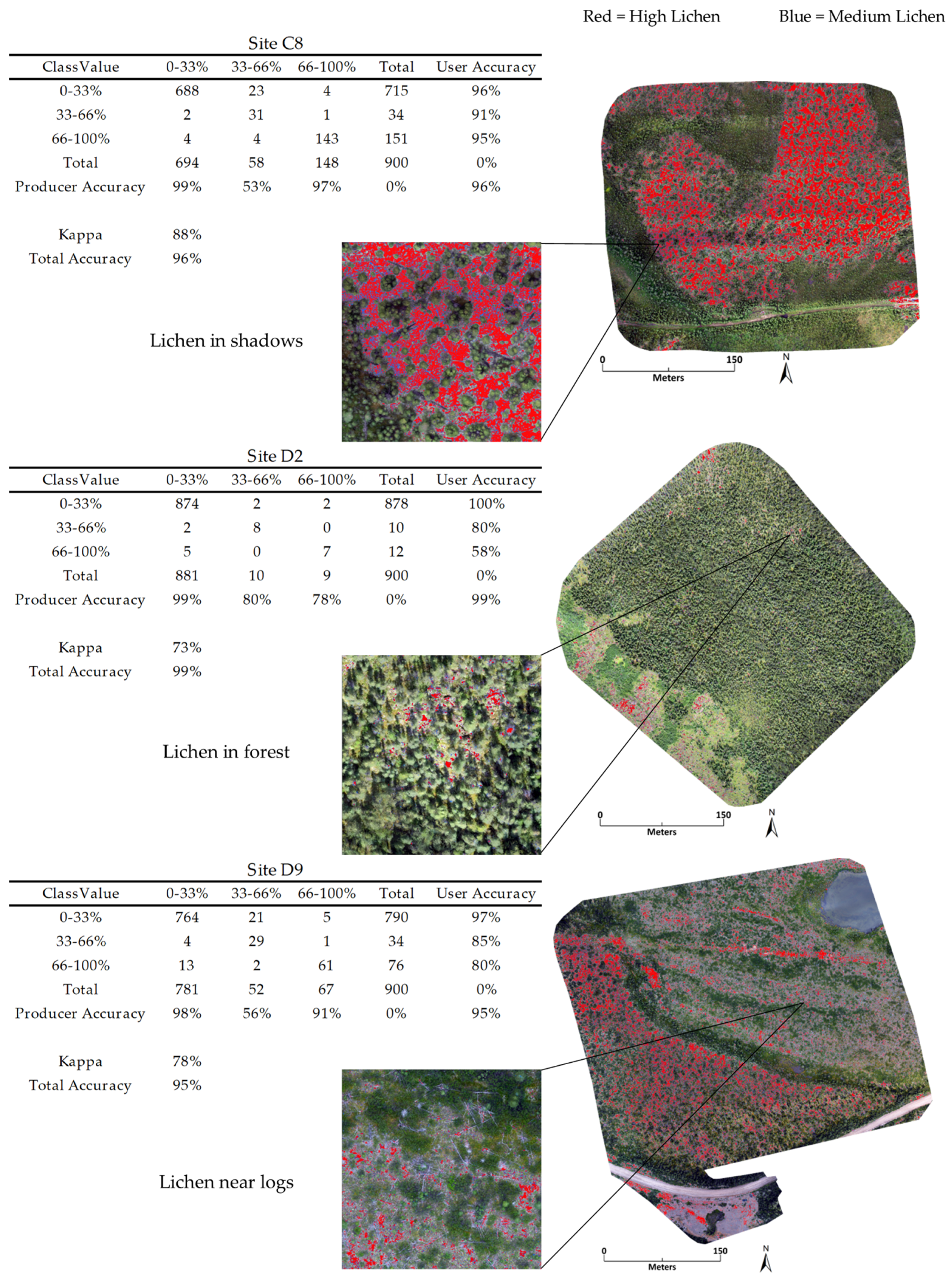

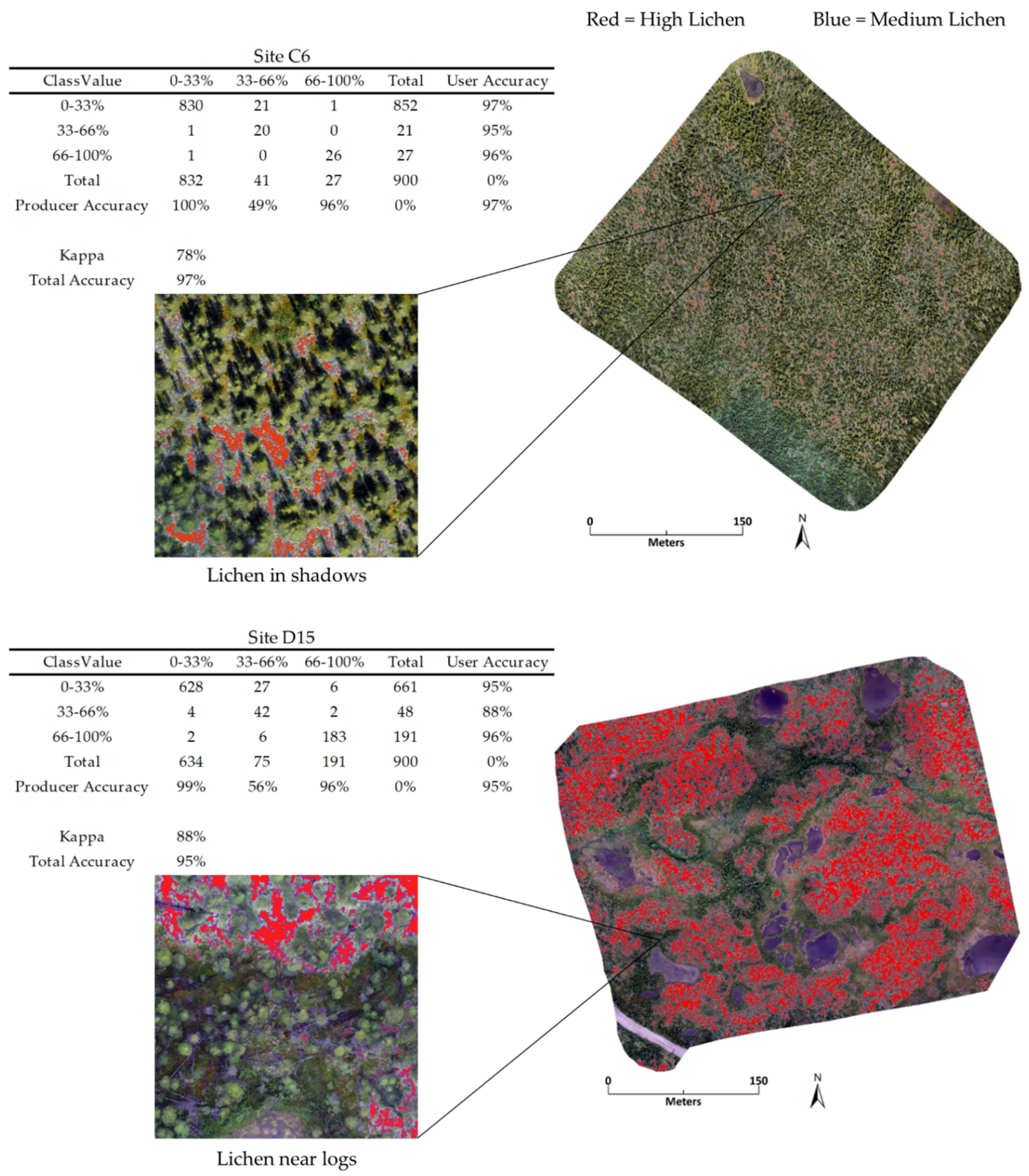

3.2. Manual Point Accuracy Assessment

3.3. UAV LiCNN Microplot Prediction and Ground Truth Comparison

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Statistic | Loss | Accuracy | IoU Coefficient |
|---|---|---|---|
| Sample Size | 200 | 200 | 200 |
| Mean | 0.4936 | 87.40% | 0.7050 |
| Standard Deviation | 0.0570 | 1.25% | 0.0157 |
| Min | 0.4119 | 83.56% | 0.6736 |
| Max | 0.7076 | 89.37% | 0.7365 |
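The IoU coefficient in the table above measures the overlap between predicted and reference lichen masks. The following is a minimal sketch, not the authors' implementation. It assumes binary masks flattened to equal-length sequences of 0/1 values and the plain intersection-over-union definition. Any smoothing term or soft, probability-based variant used in training is not specified here. The sketch also shows how the per-column summary statistics (sample size, mean, standard deviation, min, max) could be computed. The function names `iou_coefficient` and `summarize` are hypothetical, and the use of the sample (n-1) standard deviation is an assumption.

```python
from statistics import mean, stdev


def iou_coefficient(y_true, y_pred):
    """Intersection over union of two binary masks given as equal-length 0/1 sequences.

    Hypothetical helper: plain (hard) IoU, no smoothing term.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("masks must contain the same number of pixels")
    intersection = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    union = sum(1 for t, p in zip(y_true, y_pred) if t or p)
    # Two empty masks agree perfectly; return 1.0 instead of dividing by zero.
    return 1.0 if union == 0 else intersection / union


def summarize(values):
    """Summary statistics matching the table rows.

    Assumption: 'Standard Deviation' is the sample standard deviation (n-1).
    Requires at least two values.
    """
    return {
        "n": len(values),
        "mean": mean(values),
        "sd": stdev(values),
        "min": min(values),
        "max": max(values),
    }


# Hypothetical 8-pixel masks (1 = lichen), not data from the paper.
truth = [1, 1, 0, 0, 1, 0, 1, 0]
pred = [1, 0, 0, 0, 1, 1, 1, 0]
print(iou_coefficient(truth, pred))  # 3 shared lichen pixels / 5 in the union = 0.6
```

In practice the same ratio would usually be computed on arrays inside a deep learning framework. The pure-Python version only keeps the definition explicit.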

| Class | Mean User Accuracy | Mean Producer Accuracy |
|---|---|---|
| Low | 96.74% | 98.75% |
| Medium | 86.04% | 55.88% |
| High | 85.84% | 92.93% |
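User's and producer's accuracy, as reported per class above, are derived from a confusion matrix of mapped versus reference classes. The following is a minimal sketch under stated assumptions. Rows hold the mapped (predicted) class and columns the reference class. The counts are hypothetical. How the authors aggregated individual assessments into the reported means is not stated here. The function name `user_producer_accuracy` is hypothetical.

```python
CLASSES = ["Low", "Medium", "High"]


def user_producer_accuracy(matrix):
    """Per-class (user's accuracy, producer's accuracy) from a square confusion matrix.

    Assumed layout: matrix[i][j] = number of assessment points mapped as class i
    whose reference (ground) label is class j.
    """
    n = len(matrix)
    results = []
    for k in range(n):
        correct = matrix[k][k]
        mapped_total = sum(matrix[k])  # row total: points the map labels as class k
        reference_total = sum(matrix[i][k] for i in range(n))  # column total: points truly class k
        user = correct / mapped_total if mapped_total else float("nan")
        producer = correct / reference_total if reference_total else float("nan")
        results.append((user, producer))
    return results


# Hypothetical counts for illustration only, not the paper's data.
example = [
    [50, 2, 0],  # mapped Low
    [1, 10, 3],  # mapped Medium
    [0, 4, 30],  # mapped High
]
for name, (ua, pa) in zip(CLASSES, user_producer_accuracy(example)):
    print(f"{name}: user {ua:.2%}, producer {pa:.2%}")
```

User's accuracy is the complement of commission error, and producer's accuracy is the complement of omission error. A low producer's accuracy, such as the one reported for the Medium class, therefore means many reference points of that class were mapped as another class.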

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Richardson, G.; Leblanc, S.G.; Lovitt, J.; Rajaratnam, K.; Chen, W. Leveraging AI to Estimate Caribou Lichen in UAV Orthomosaics from Ground Photo Datasets. Drones 2021, 5, 99. https://doi.org/10.3390/drones5030099
