Large-Scale Reality Modeling of a University Campus Using Combined UAV and Terrestrial Photogrammetry for Historical Preservation and Practical Use

Abstract

1. Introduction

2. BYU History, UAV Photogrammetry, and Algorithms

2.1. Advancing UAV and Photogrammetry Technologies

2.2. Smart Campuses, Augmented Reality, and Virtual Reality

2.3. Algorithms and Machine Learning

2.3.1. A Priori Information

- NP: nondeterministic polynomial-time problems, for which a proposed solution can be verified in polynomial time but not necessarily found in polynomial time.

- NP-hard: problems at least as hard as every problem in NP; any problem in NP can be reduced to an NP-hard problem in polynomial time.

- NP-complete: problems that are both in NP and NP-hard. Because no polynomial-time exact algorithm is known for them, a practical solver is constrained to a feasible polynomial solve time and returns a solution that is practical but not necessarily optimal. This means that accuracy and precision may be sacrificed in order to reach a timely solution.
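The gap between verifying and solving can be illustrated with set cover, a classic NP-complete problem that is closely related to choosing camera views. The following is a minimal sketch, not the authors' method: the function names `verify_cover` and `solve_exact` and the toy `universe` and `subsets` are hypothetical and chosen only for illustration.

```python
from itertools import combinations


def verify_cover(universe, subsets, chosen, k):
    """Check a proposed cover in polynomial time (the NP 'certificate' check)."""
    if len(chosen) > k:
        return False
    covered = set()
    for idx in chosen:
        covered |= subsets[idx]
    return covered >= universe


def solve_exact(universe, subsets):
    """Find a smallest cover by exhaustive search.

    Every combination of subsets may be tried, so the cost grows
    exponentially with the number of subsets.
    """
    for k in range(1, len(subsets) + 1):
        for combo in combinations(range(len(subsets)), k):
            if verify_cover(universe, subsets, combo, k):
                return list(combo)
    return None


# Hypothetical toy instance: six points and four candidate views.
universe = set(range(1, 7))
subsets = [{1, 2, 3}, {3, 4}, {4, 5, 6}, {1, 6}]

best = solve_exact(universe, subsets)
print(best, verify_cover(universe, subsets, best, len(best)))
```

Checking a candidate touches each chosen subset once, while the exact search may enumerate every combination, which is why heuristics are usually preferred for large instances.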

2.3.2. A Posteriori Information

2.3.3. Specific Software (Machine Learning) Methodology

3. Methods

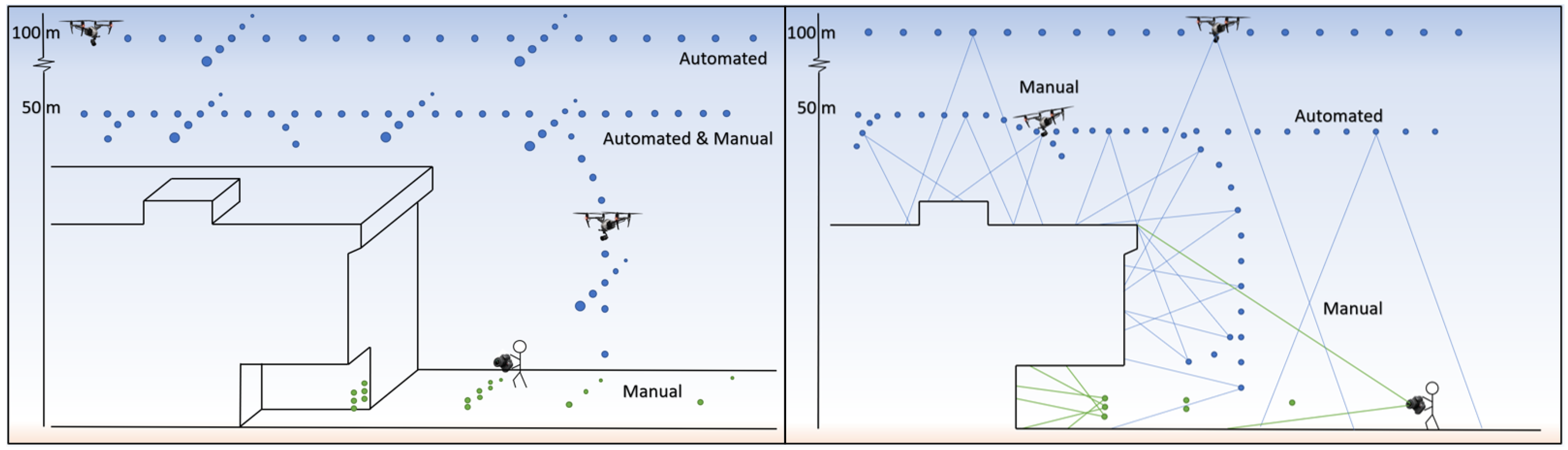

3.1. Data Acquisition

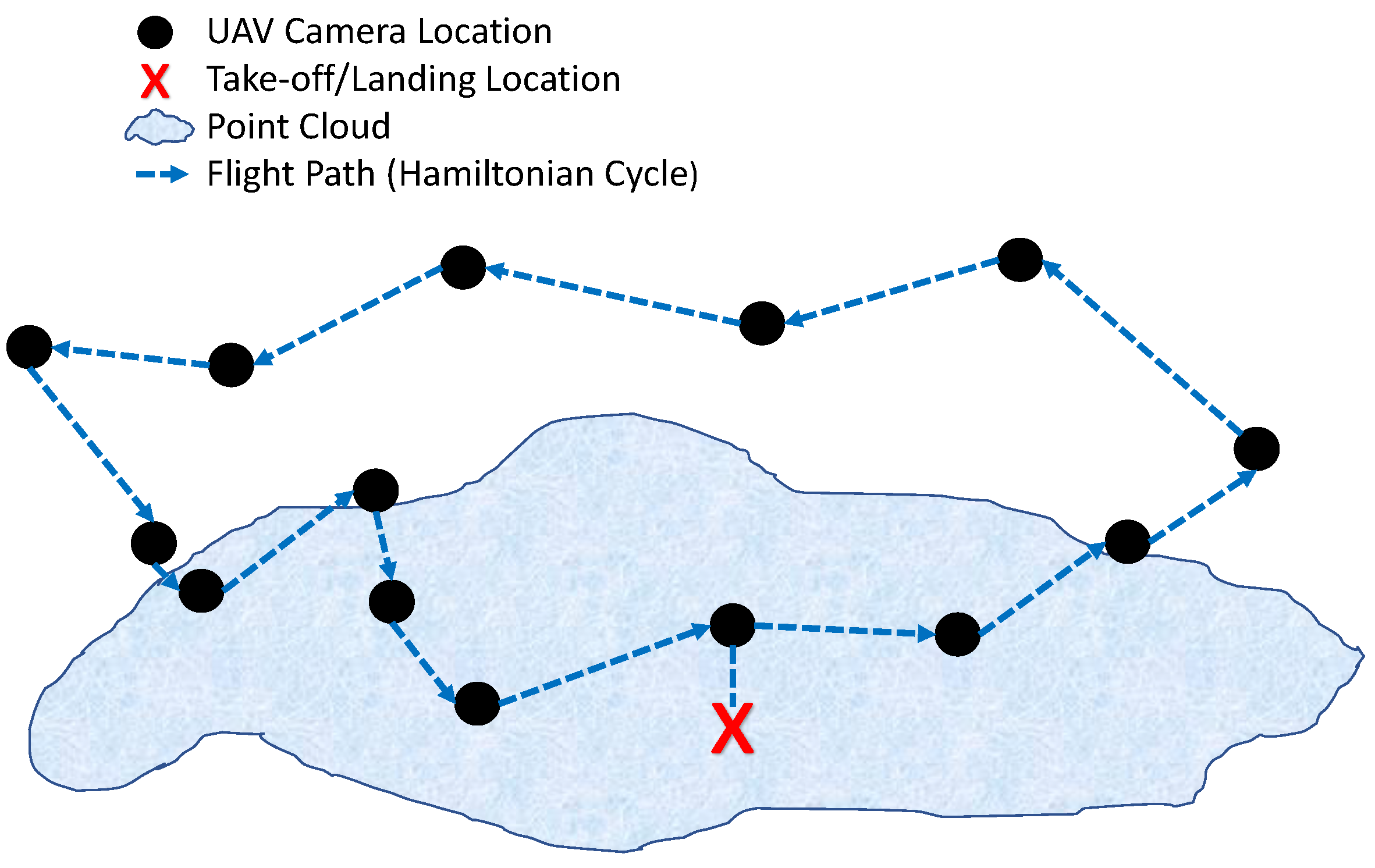

3.1.1. Flight Acquisition Techniques

3.1.2. Ground Control

3.2. Data Pre-Processing

3.3. Data Processing

3.4. Post-Processing

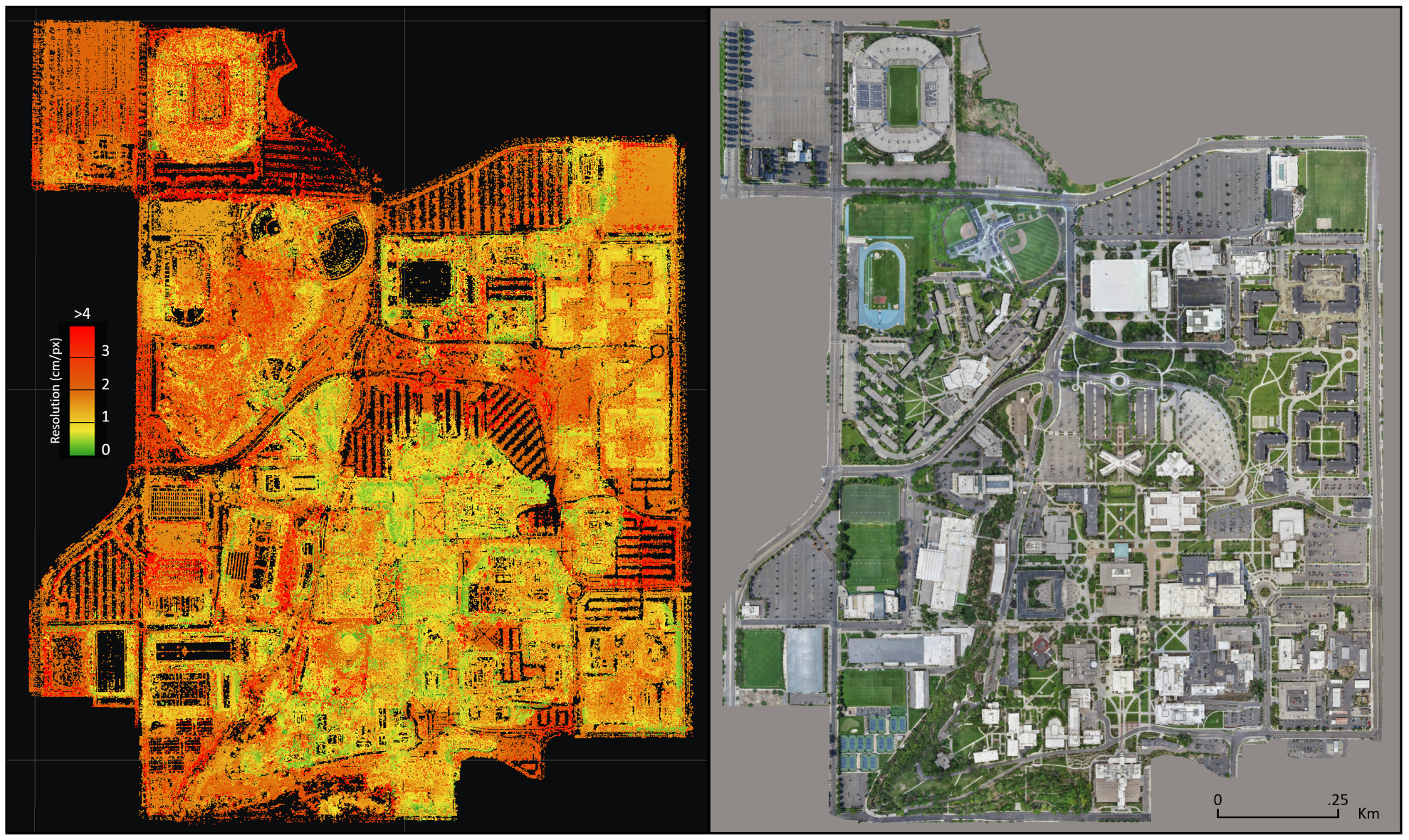

4. Results

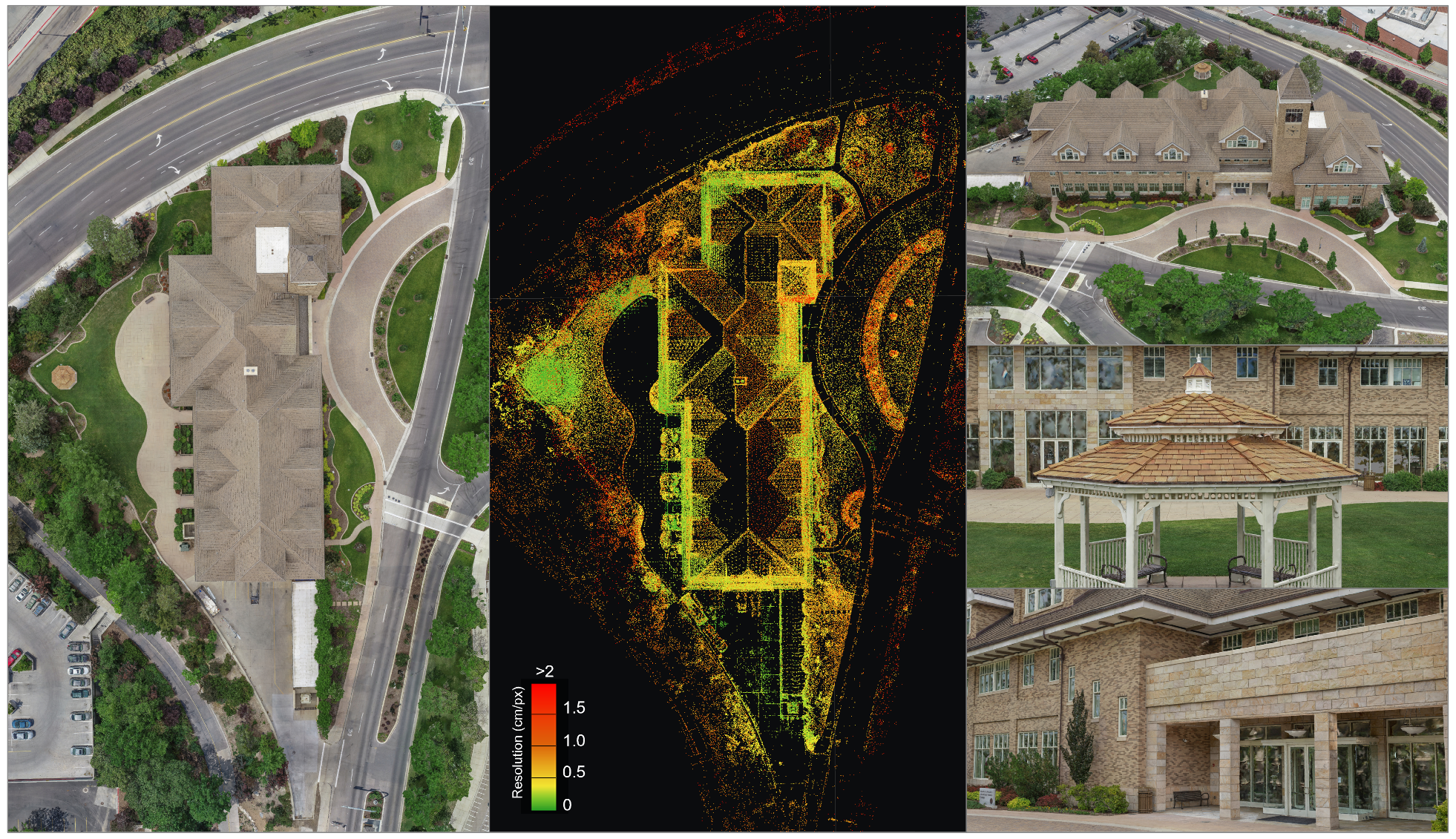

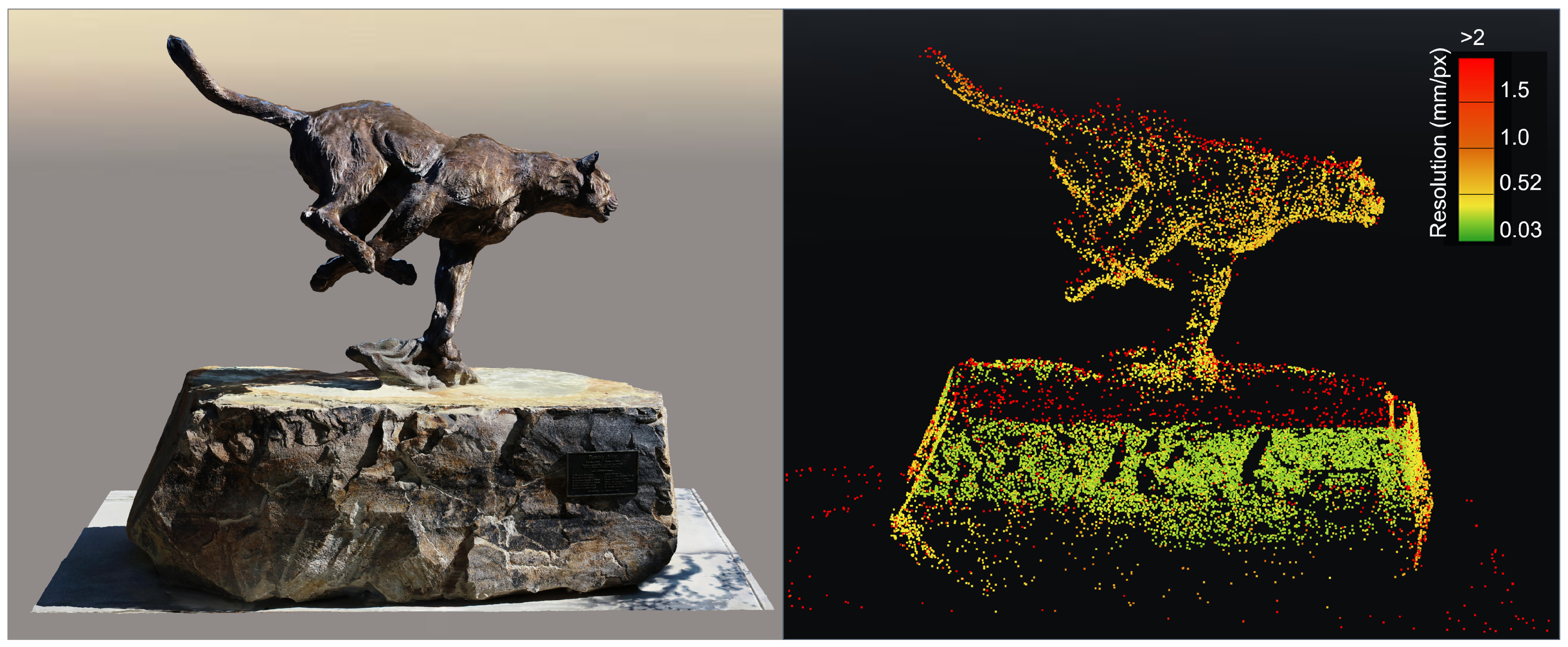

4.1. Model Resolution

4.2. Model Accuracy

4.2.1. Absolute Accuracy Assessment

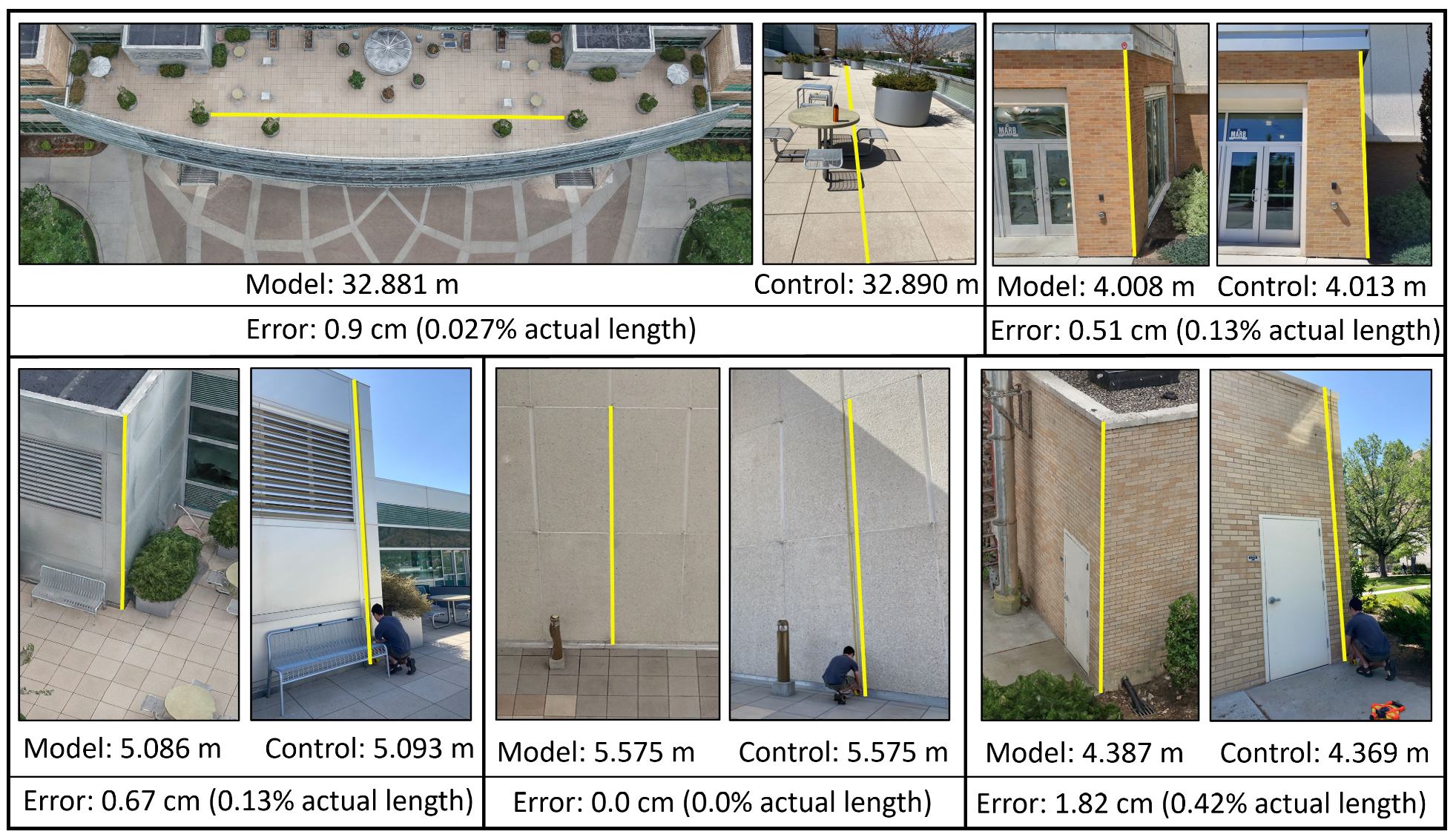

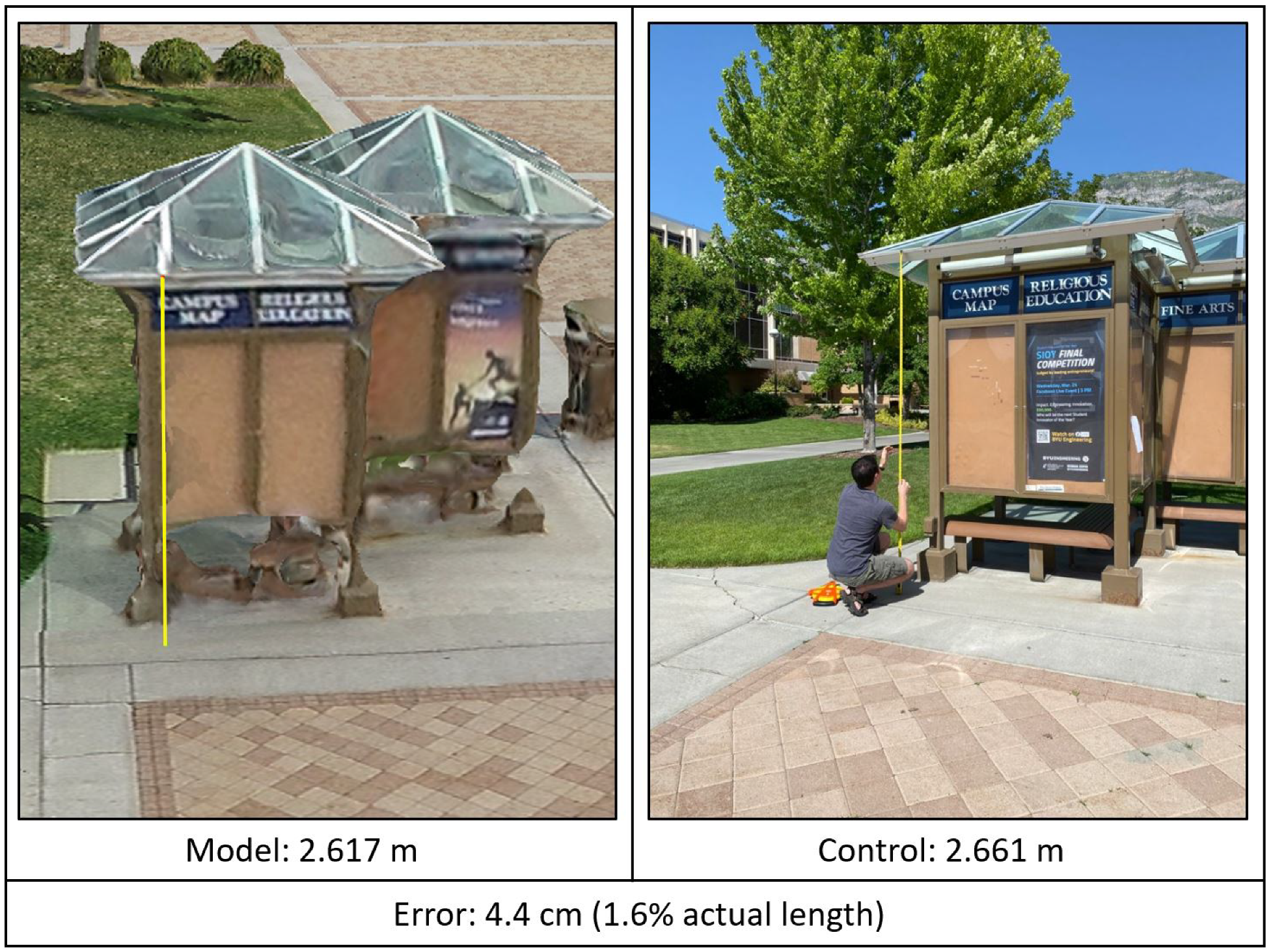

4.2.2. Relative Accuracy

4.2.3. SLAM-Based LIDAR Comparison

4.3. Optimized Flight Path Model Results

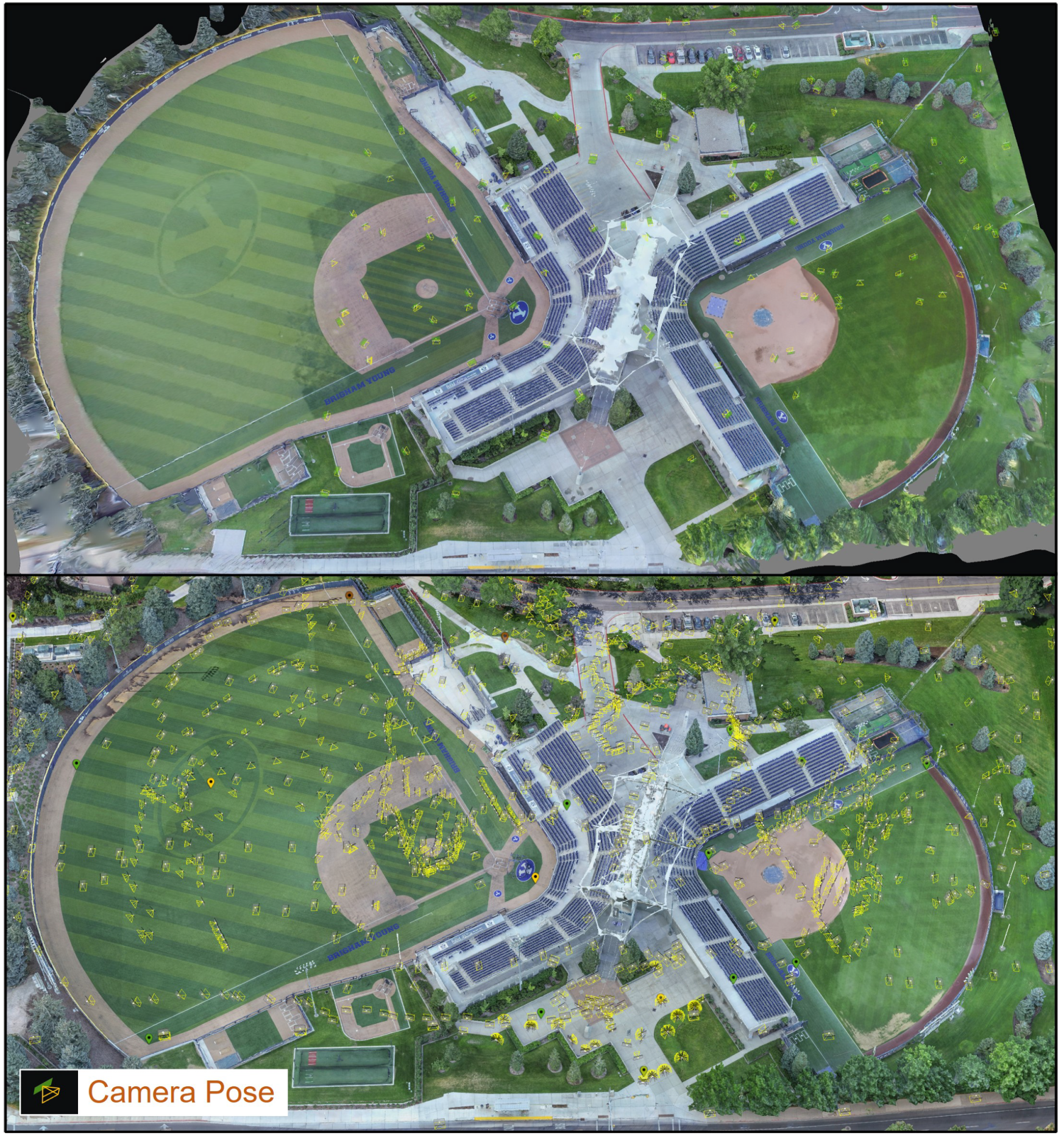

4.3.1. Miller Baseball and Softball Park Optimized Flight Model

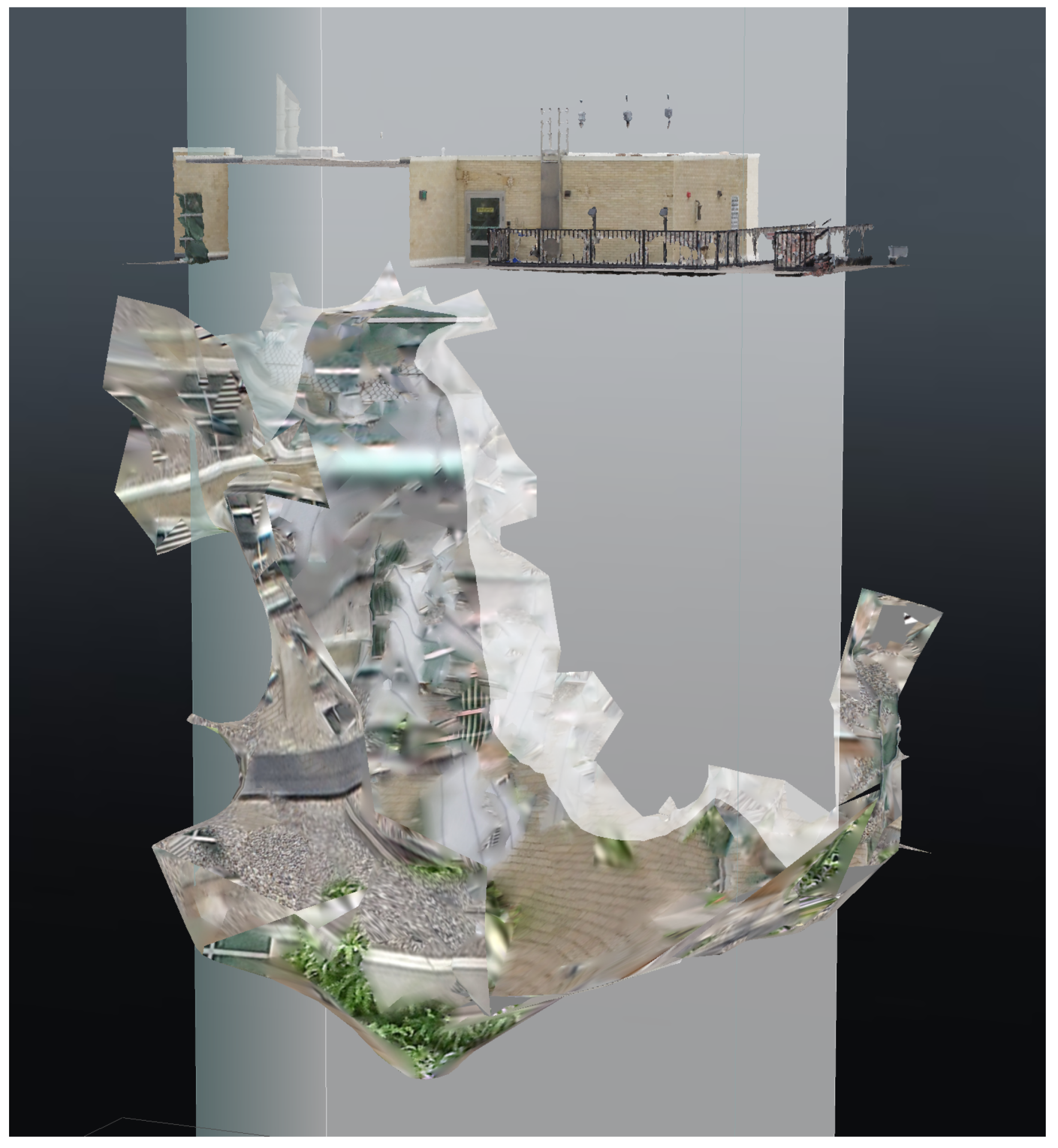

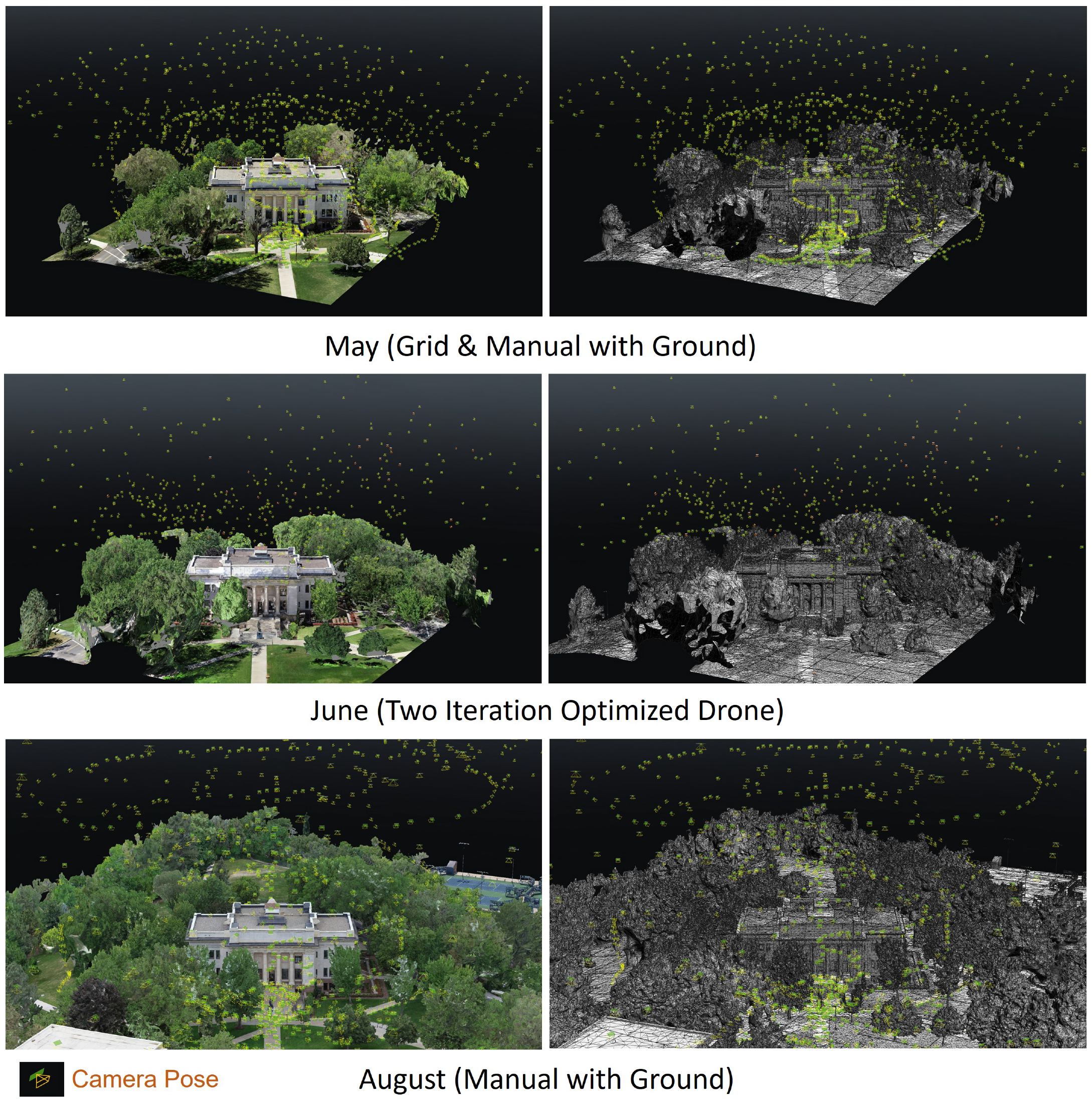

4.3.2. Karl G. Maeser Building Optimized Flight Model

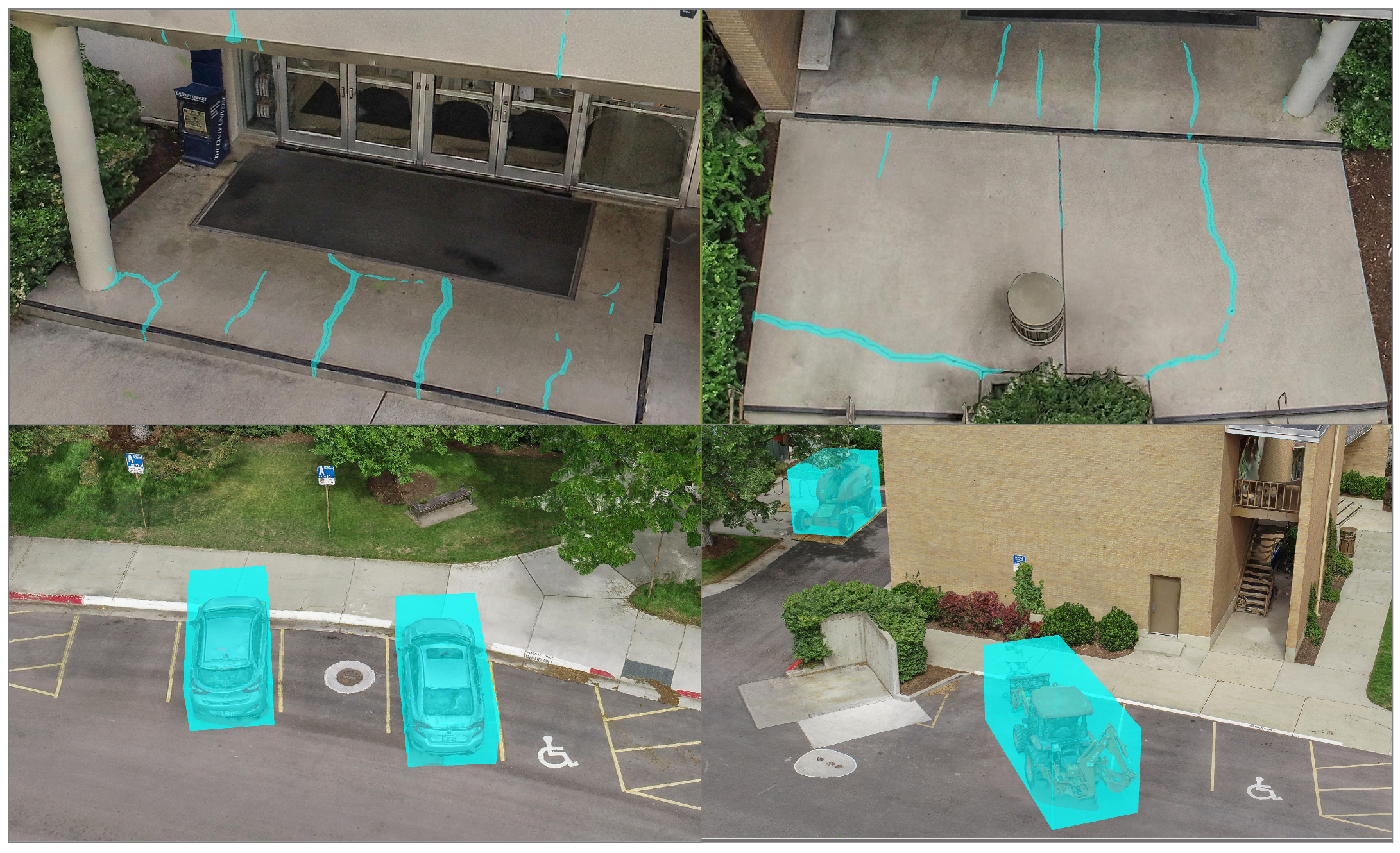

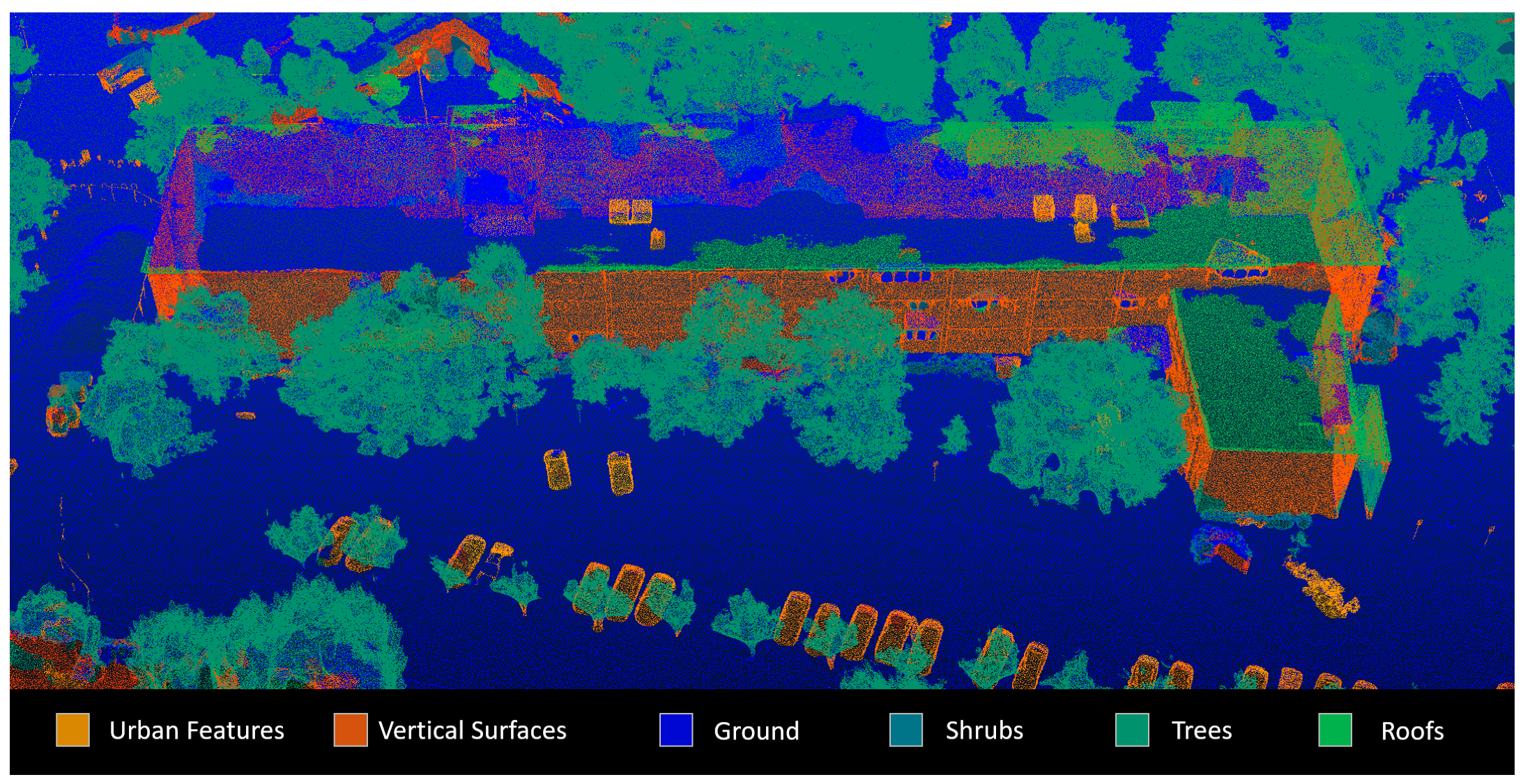

4.4. Machine Learning and Object Recognition of Final Model

4.5. Augmented and Virtual Reality and Realistic Visualization

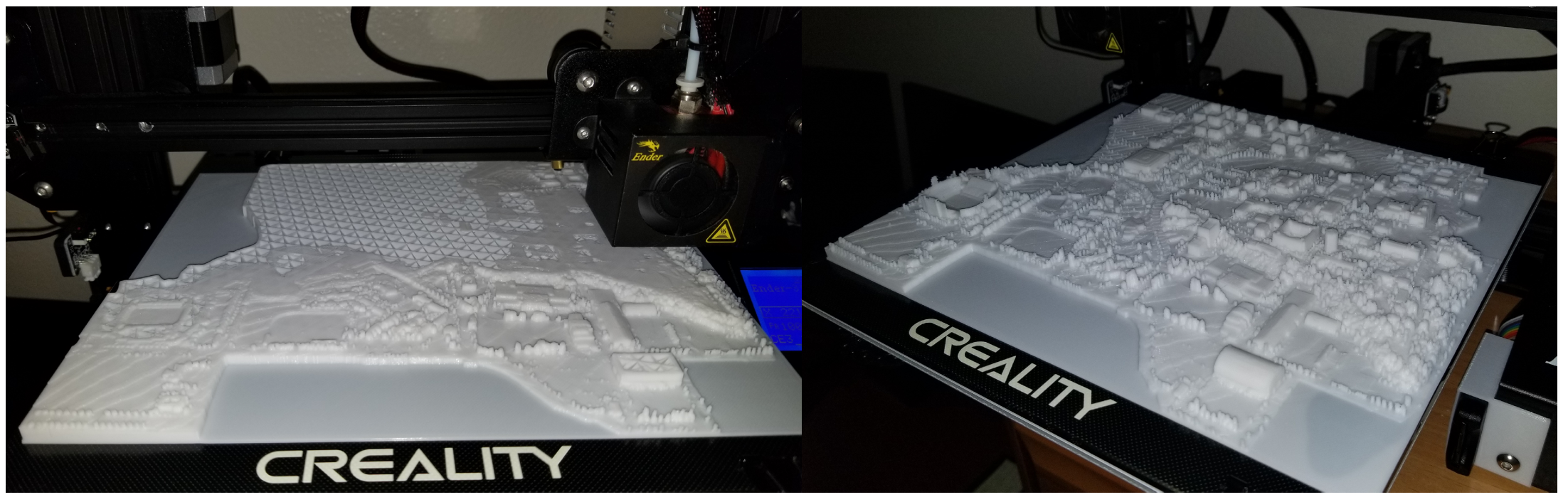

4.6. 3D Printing

5. Discussion and Analysis

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Photo | Points "Seen" | Selection Order |
|---|---|---|
| 1 | 1000 | Second |
| 2 | 300 | N/A |
| 3 | 700 | Third |
| 4 | 2000 | First |
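The selection order in this table is consistent with a greedy rule: repeatedly pick the photo that sees the most points not yet covered. The sketch below is one plausible reading under stated assumptions. The table gives only point counts, so the overlap between photos (Photo 2 lying entirely inside Photo 4's footprint) and the function name `greedy_select` are hypothetical.

```python
def greedy_select(photos):
    """Pick photos in order of how many still-uncovered points each one sees."""
    uncovered = set().union(*photos.values())
    order = []
    while uncovered:
        # Photo with the largest number of points not yet covered.
        best = max(photos, key=lambda name: len(photos[name] & uncovered))
        order.append(best)
        uncovered -= photos[best]
    return order


# Hypothetical point IDs sized to match the counts in the table.
photos = {
    1: set(range(2000, 3000)),  # 1000 points
    2: set(range(100, 400)),    # 300 points, all already seen by Photo 4
    3: set(range(3000, 3700)),  # 700 points
    4: set(range(0, 2000)),     # 2000 points
}

print(greedy_select(photos))
```

With these assumed footprints, Photo 2 adds nothing new once Photo 4 is chosen, so it is never selected, matching the N/A entry.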

| Stage | Photos | % of Total | % of Edited | % of Imported | % of Final Model |
|---|---|---|---|---|---|
| Total Photos Taken | 125,527 | 100% | | | |
| Lightroom Edited | 115,301 | 92% | 100% | | |
| Drone Photos | 77,685 | 62% | 67% | | |
| Terrestrial Photos | 37,616 | 30% | 33% | | |
| ContextCapture Imported | 102,818 | 82% | 89% | 100% | |
| Used in Final Model | 80,384 | 64% | 70% | 78% | 100% |
| Drone Photos | 64,857 | 52% | 56% | 63% | 81% |
| Terrestrial Photos | 15,527 | 12% | 13% | 15% | 19% |
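The percentage columns follow directly from the photo counts, with each count divided by the count of a stage at least as large. A short sketch of that arithmetic is given here; the dictionary keys and the helper names `pct` and `shares` are illustrative, and rounding to the nearest whole percent is an assumption that happens to reproduce the table.

```python
# Photo counts taken from the table above.
counts = {
    "total": 125_527,
    "edited": 115_301,
    "imported": 102_818,
    "final": 80_384,
    "final_drone": 64_857,
    "final_terrestrial": 15_527,
}


def pct(part, whole):
    """Share of `whole` represented by `part`, rounded to a whole percent."""
    return round(100 * part / whole)


def shares(part):
    """Percentages of `part` against every stage at least as large as it."""
    bases = ("total", "edited", "imported", "final")
    return [pct(part, counts[b]) for b in bases if counts[b] >= part]


for name in ("edited", "imported", "final", "final_drone", "final_terrestrial"):
    print(name, shares(counts[name]))
```

The drone and terrestrial counts in the final model sum to the 80,384 photos used, so the last two rows partition the "Used in Final Model" row.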

Full Model Error

| Statistic | X [cm] | Y [cm] | Z [cm] |
|---|---|---|---|
| Mean | −0.12 | −0.38 | −0.53 |
| Standard Deviation | 2.41 | 4.06 | 5.34 |
| Upper 95% Mean | 0.34 | 0.38 | 0.48 |
| Lower 95% Mean | −0.57 | −1.15 | −1.53 |

Average Model Error

| Statistic | RMS Reprojection [px] | 3D Distance [cm] | Horizontal [cm] | Vertical [cm] |
|---|---|---|---|---|
| Mean | 1.6 | 3.3 | 1.9 | 2.3 |
| Standard Deviation | 1.2 | 3.4 | 1.7 | 3.2 |
| Upper 95% Mean | 1.8 | 3.9 | 2.2 | 2.9 |
| Lower 95% Mean | 1.4 | 2.7 | 1.6 | 1.7 |

Maeser Building Model Comparisons

| Month | Acquisition | Photos Taken | Align Success | Ave GSD [mm/px] | Process Time [min] |
|---|---|---|---|---|---|
| May | Manual and Grid | 1859 | 98% | 3.88 | 30 |
| June | Optimized 75 ft | 264 | 90% | 6.94 | 4.5 |
| June | Optimized All | 434 | 93% | 8.34 | 11 |
| August | Manual | 1077 | 98% | 5.41 | 71 * |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Berrett, B.E.; Vernon, C.A.; Beckstrand, H.; Pollei, M.; Markert, K.; Franke, K.W.; Hedengren, J.D. Large-Scale Reality Modeling of a University Campus Using Combined UAV and Terrestrial Photogrammetry for Historical Preservation and Practical Use. Drones 2021, 5, 136. https://doi.org/10.3390/drones5040136