Computer Vision Based Path Following for Autonomous Unmanned Aerial Systems in Unburied Pipeline Onshore Inspection

Abstract

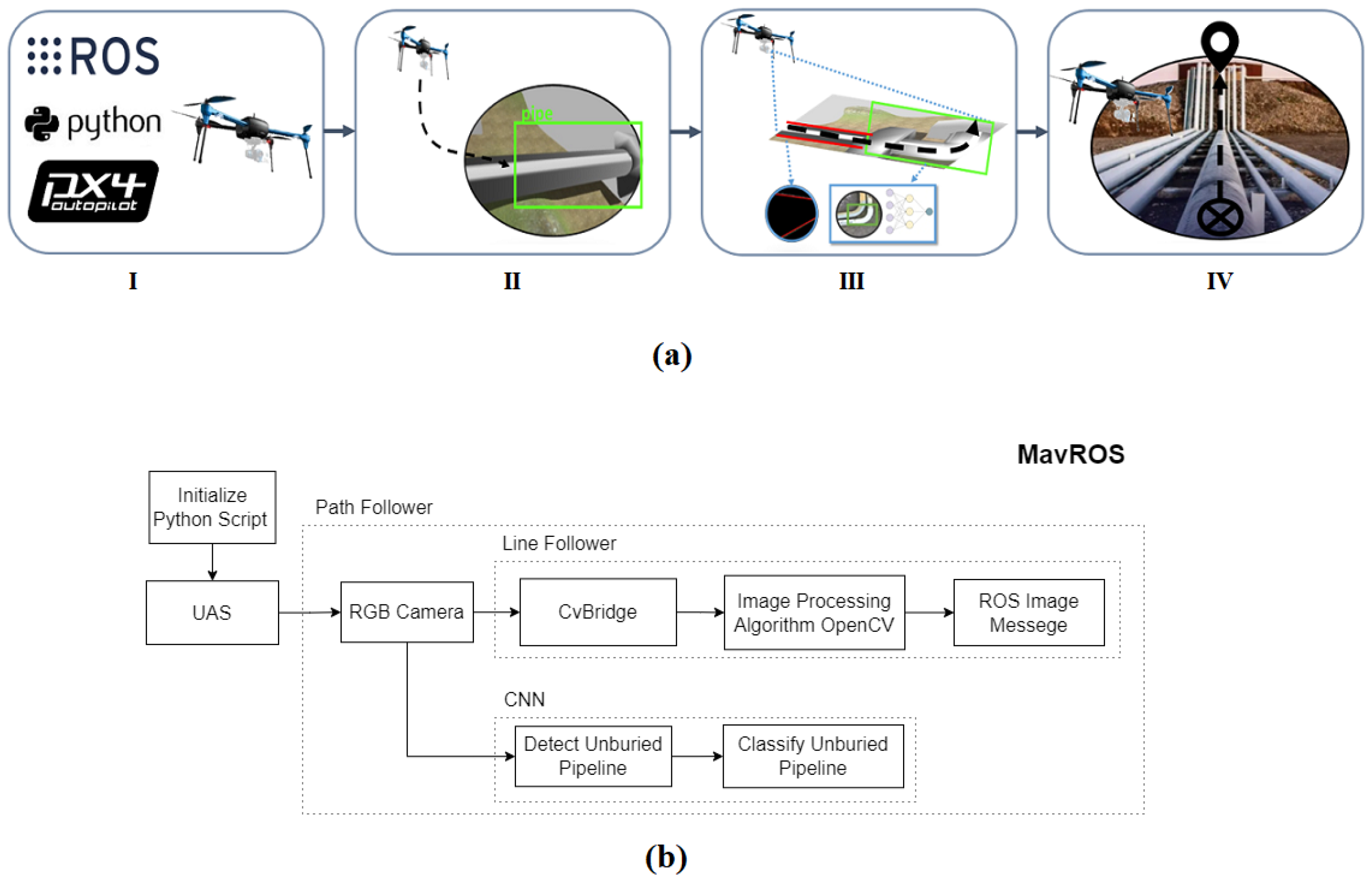

1. Introduction

- An object detection solution that combines image processing and Convolutional Neural Networks to detect different types of unburied pipes in onshore O&G installations;

- A path-following solution to navigate the UAS over the extensive structures of unburied pipelines;

- Implementation of the full solution using the Robot Operating System (ROS) and the PX4 flight control unit;

- A Software-in-the-Loop (SITL) simulation environment, built on Gazebo, to test similar solutions in a virtual O&G installation;

- Test and evaluation of the proposed solution with a real drone to prove its functionality in a real application.
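
The detection and path-following contributions listed above can be connected by a simple control loop. The following is a minimal sketch, not the controller implemented in this work: it assumes the detector returns a bounding box for the pipe in pixel coordinates and that a PID controller converts the horizontal offset of the box centre into a lateral velocity command. The class and function names, the gains, the 448-pixel image width, and the sign convention are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller with a clamped output (gains are hypothetical)."""
    kp: float
    ki: float
    kd: float
    limit: float                      # absolute bound on the command
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        """Advance the controller by one sample and return the clamped command."""
        self.integral += error * dt
        # No derivative term on the first sample, since there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.limit, min(self.limit, out))


def lateral_error(box_x_min: float, box_x_max: float, image_width: int) -> float:
    """Offset of the box centre from the image centre, normalised to [-1, 1].

    Positive values mean the pipe appears to the right of the image centre
    (an assumed convention).
    """
    centre = 0.5 * (box_x_min + box_x_max)
    half_width = image_width / 2
    return (centre - half_width) / half_width


# Hypothetical detection: a box spanning x = 300..400 px in a 448 px wide image.
pid = PID(kp=0.8, ki=0.0, kd=0.1, limit=0.5)
err = lateral_error(300, 400, image_width=448)
cmd = pid.step(err, dt=0.05)          # lateral velocity command, arbitrary units
print(f"error={err:.4f}, command={cmd:.4f}")
```

In a ROS and PX4 setup, a command of this kind would typically be sent to the flight controller as a velocity setpoint; that plumbing is omitted because it depends on the specific stack.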

2. Materials and Methods

2.1. Problem Formulation

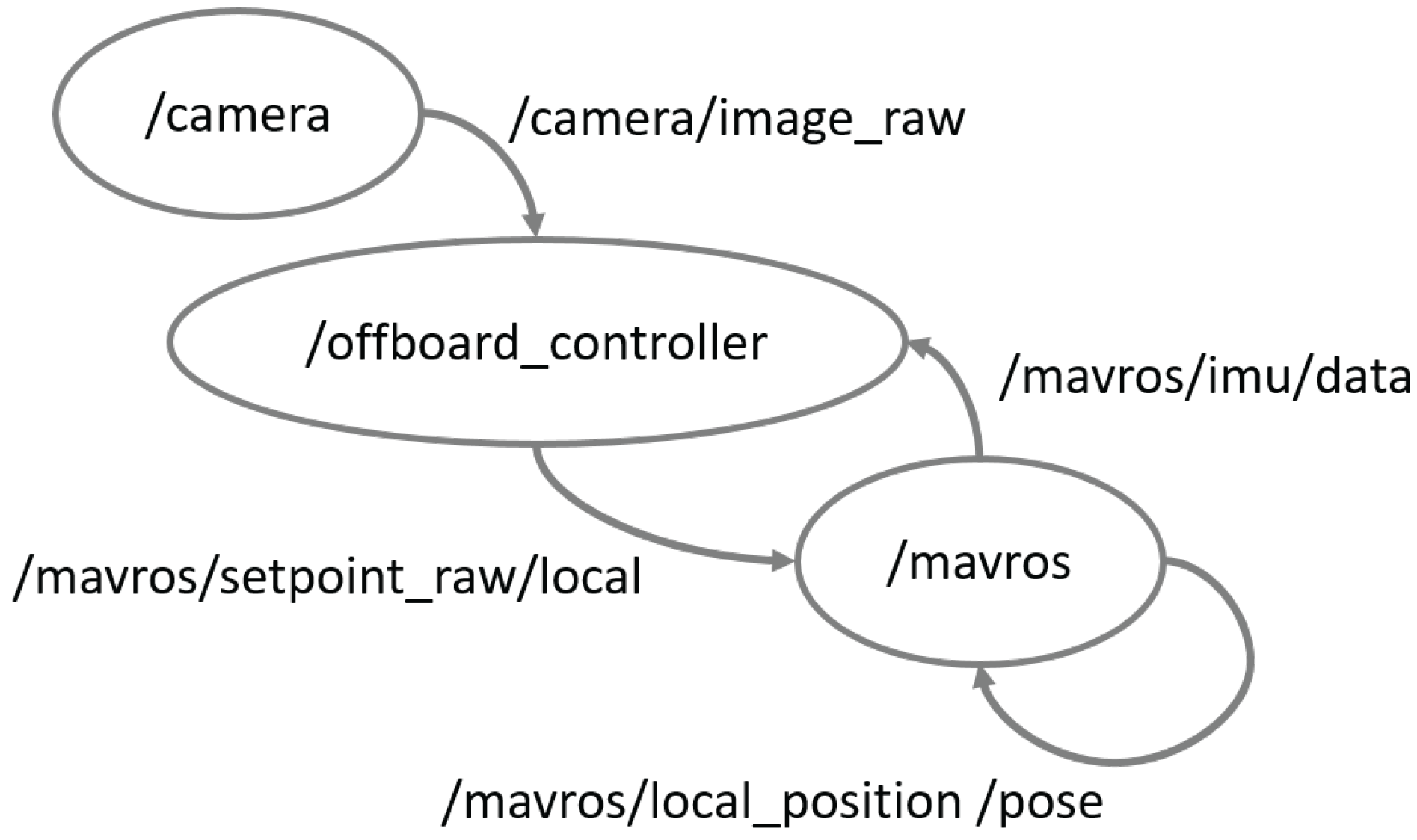

2.2. Solution Setup

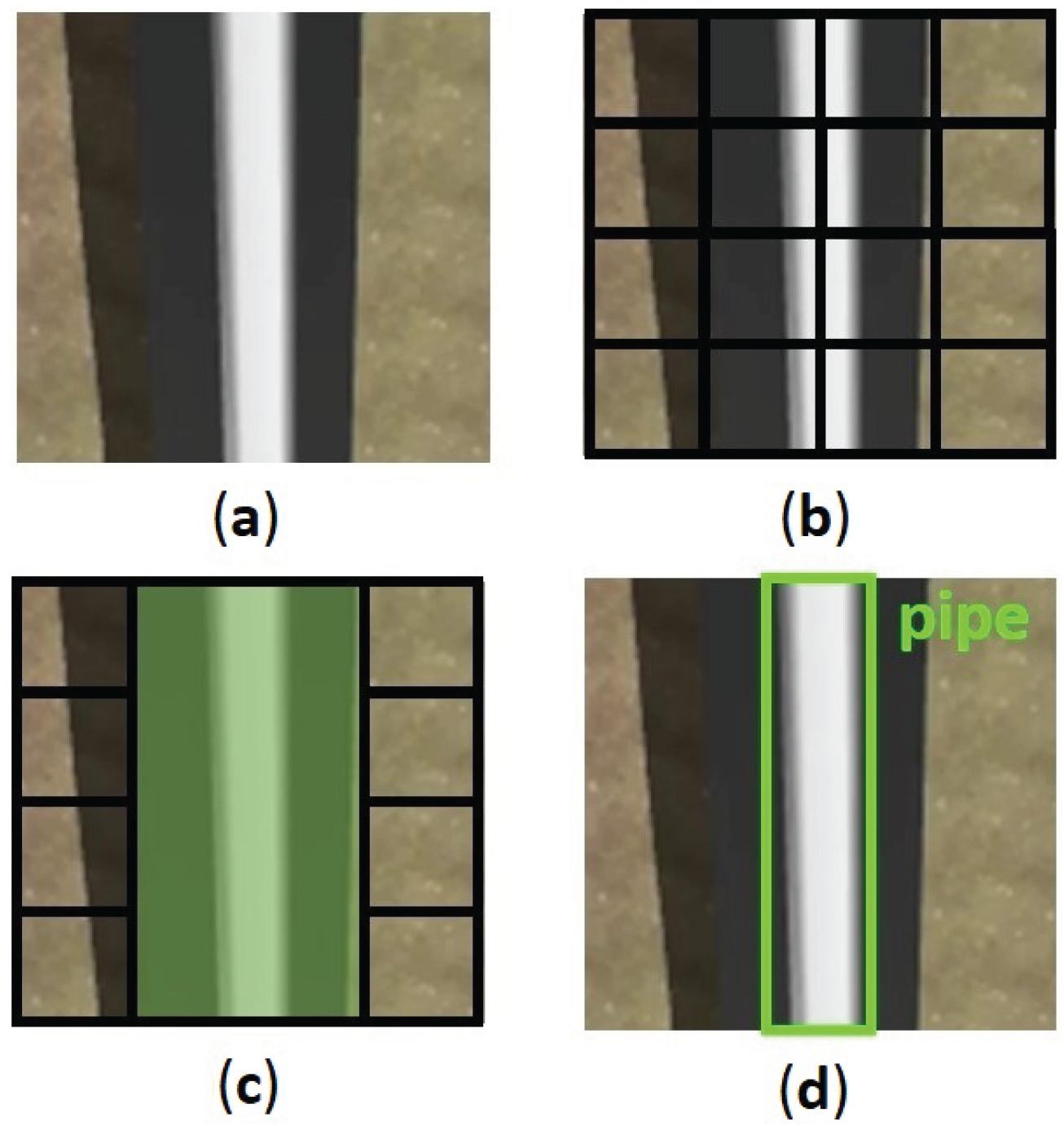

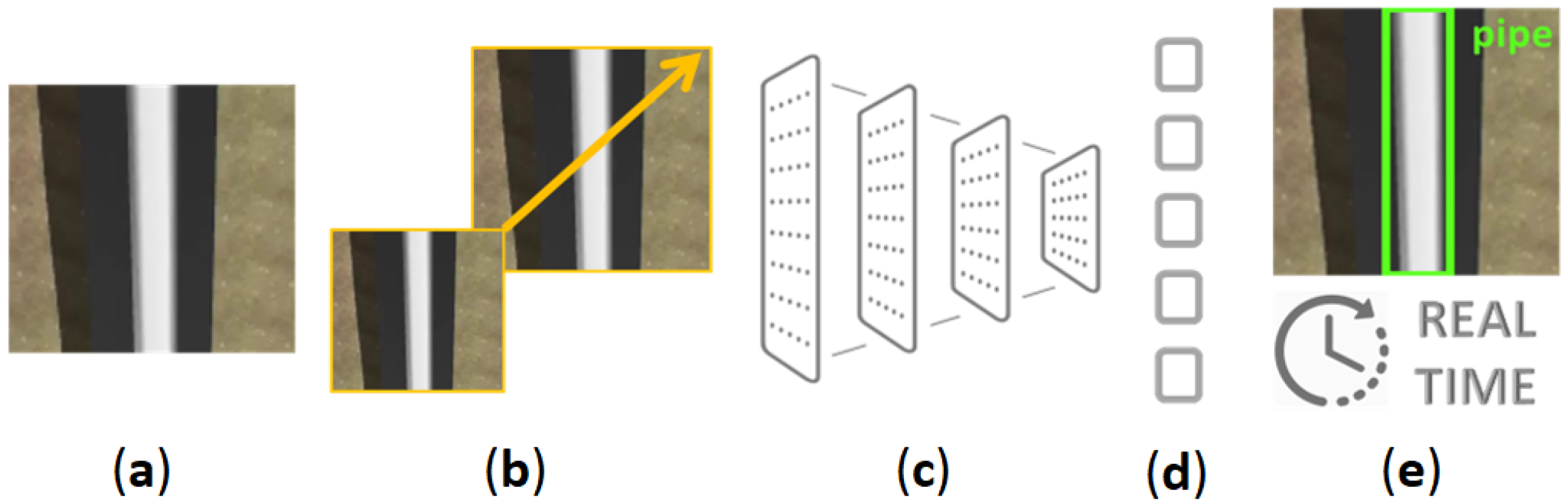

2.3. YOLOv4 Neural Network

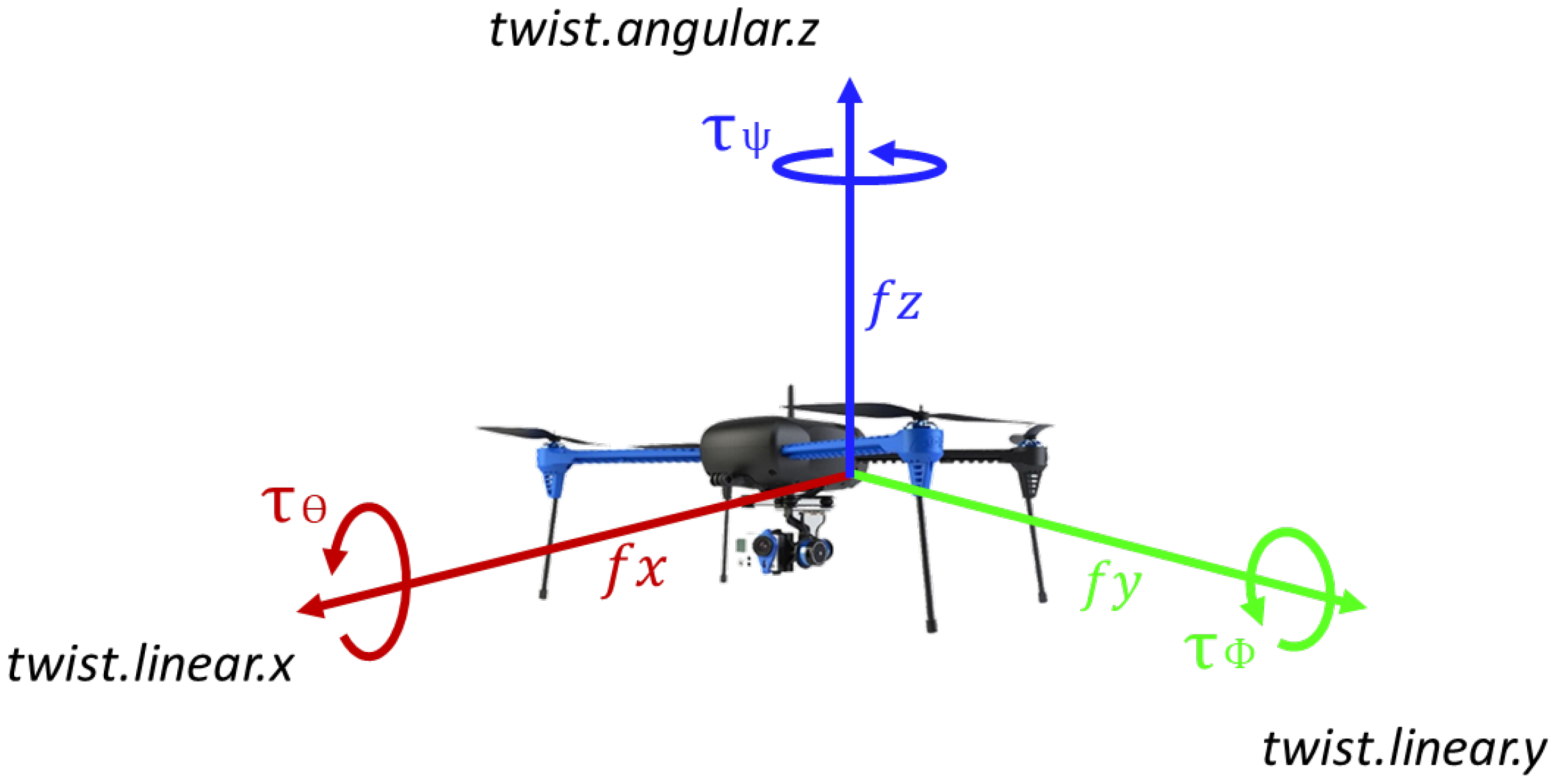

2.4. Quadrotor Model

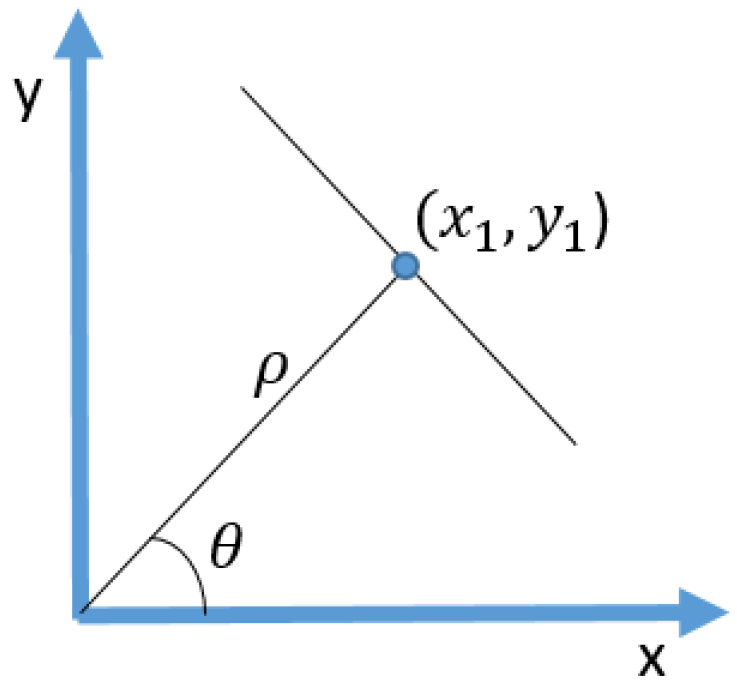

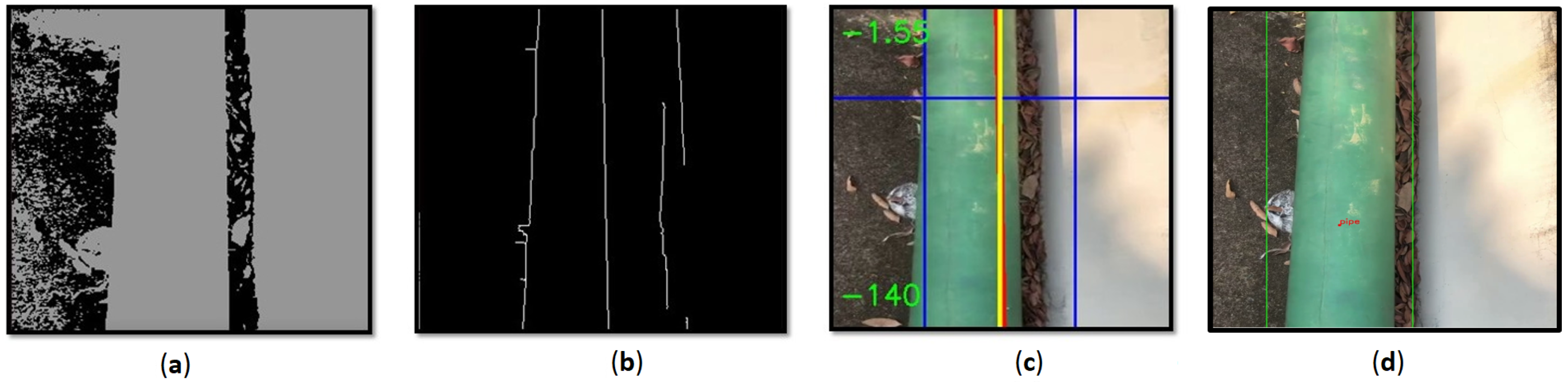

2.5. Pipe Follower

3. Results and Discussion

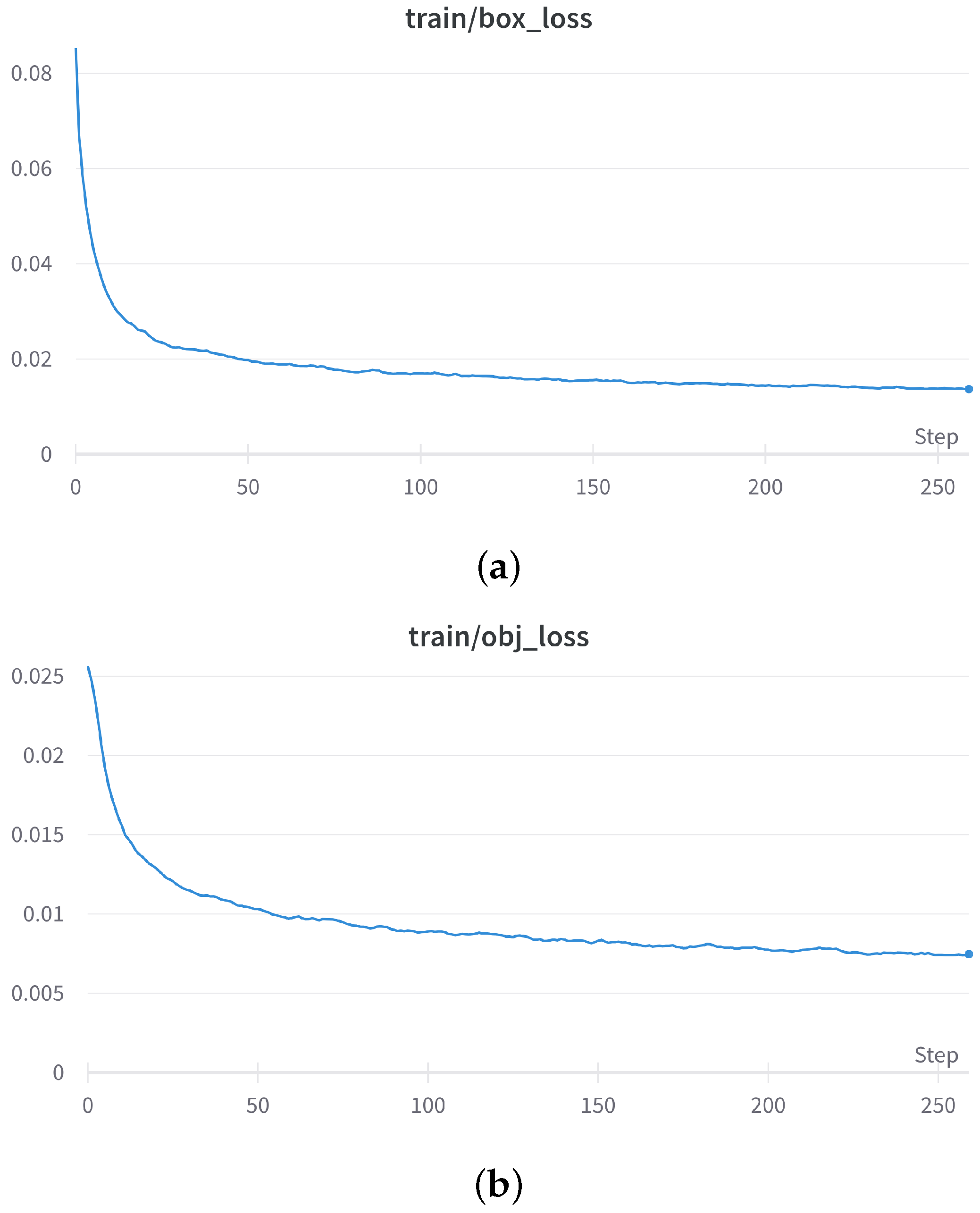

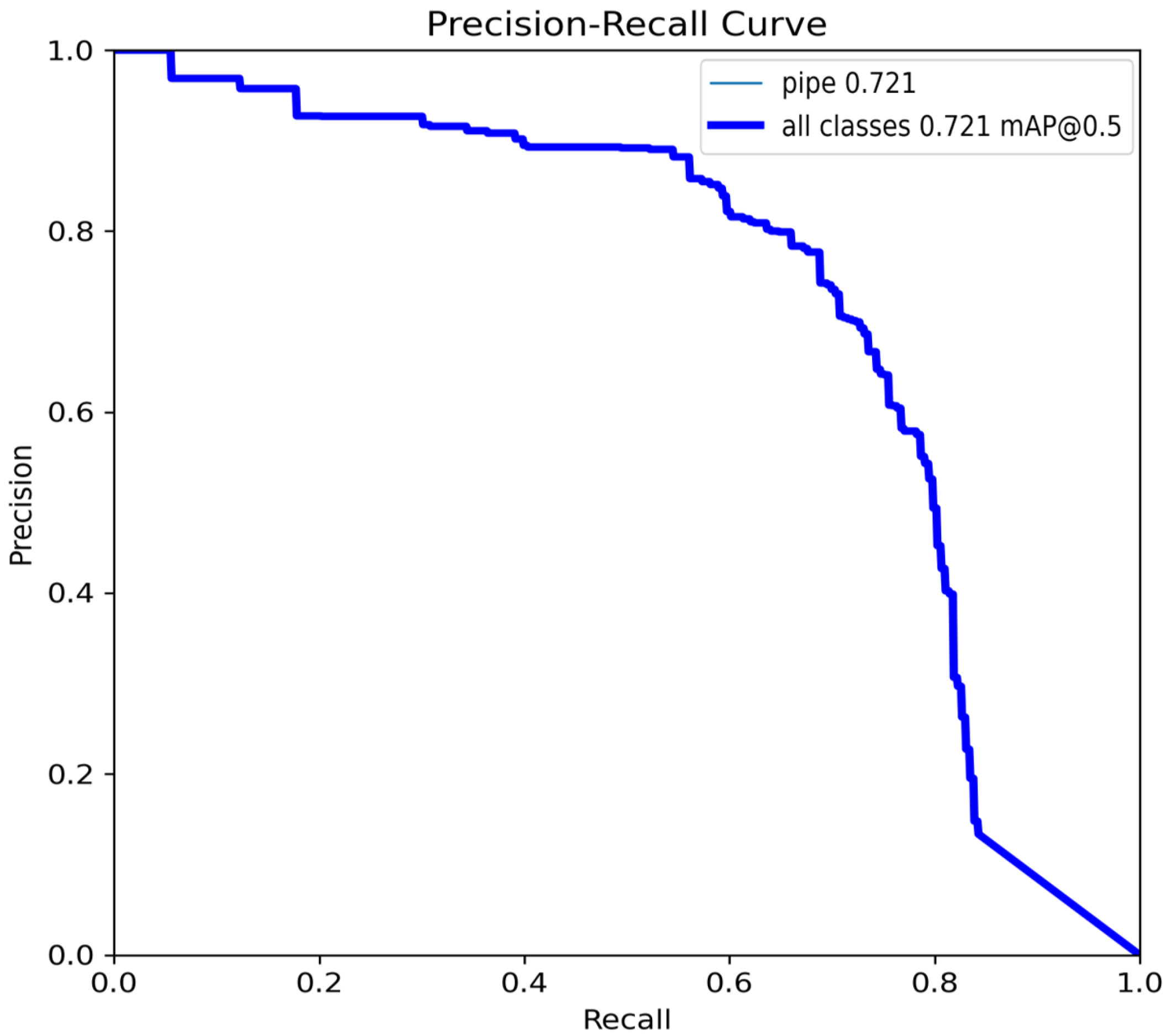

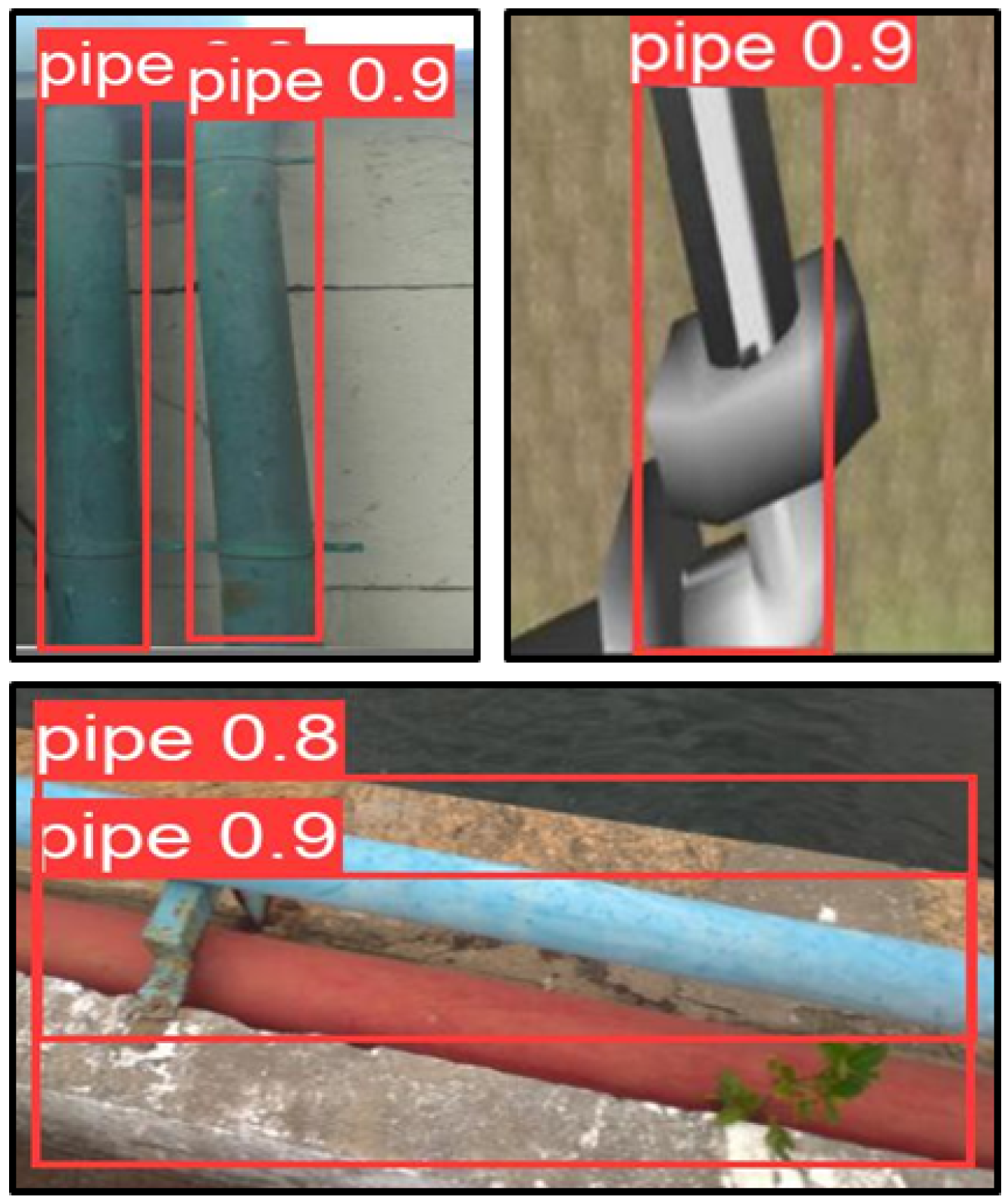

3.1. YOLO Training and Results

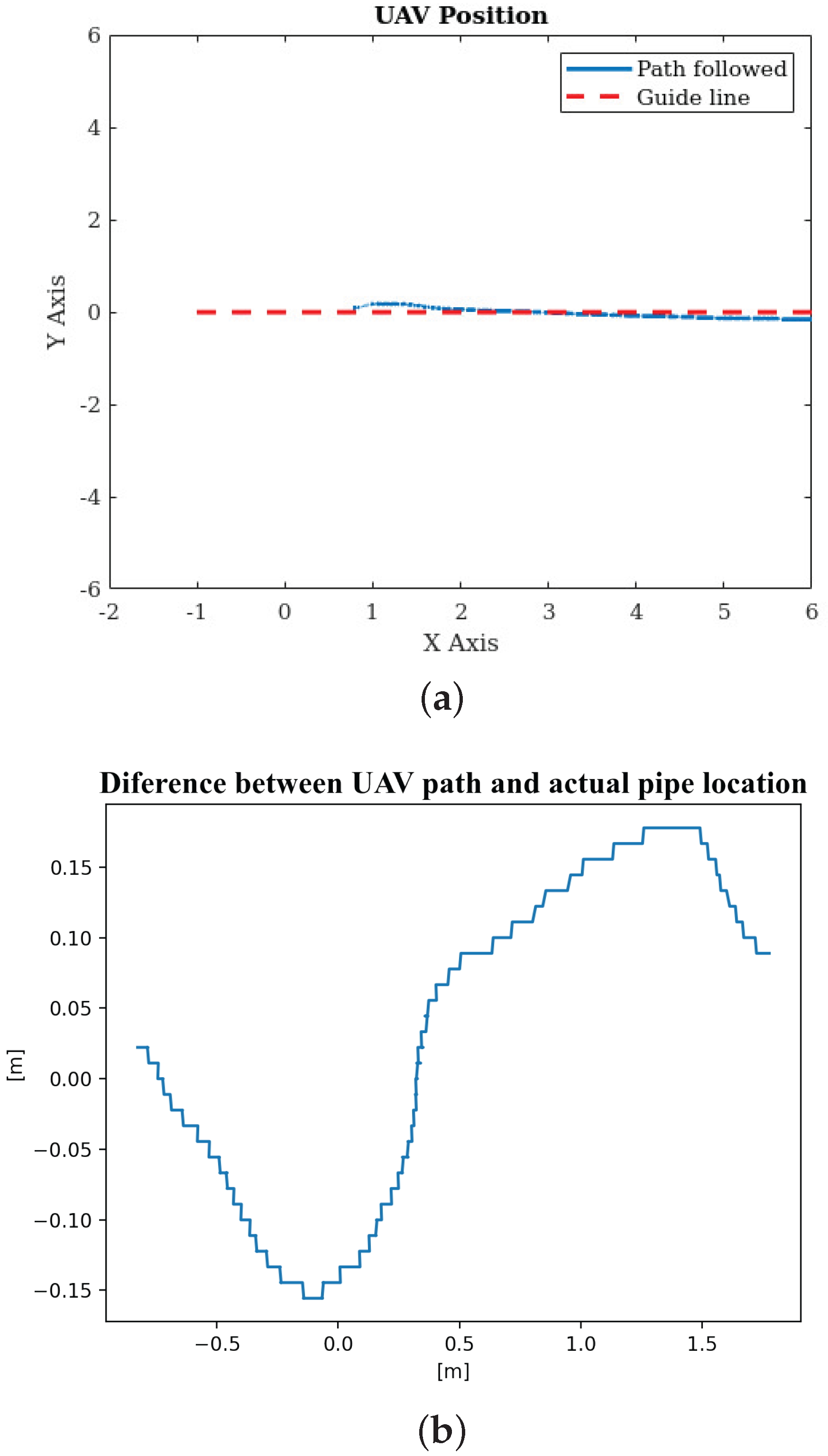

3.2. Simulation Tests

3.3. Real Flight Tests

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| AUV | Autonomous Underwater Vehicle |
| CED | Canny Edge Detector |
| CNN | Convolutional Neural Network |
| LFA | Line Follower Algorithm |
| PID | Proportional Integral Derivative |
| MS COCO | Microsoft Common Objects in Context |
| UAS | Unmanned Aerial System |
| UAV | Unmanned Aerial Vehicle |
| ROS | Robot Operating System |
| SITL | Software in the Loop |
| YOLO | You Only Look Once |

References

1. Pinto, M.F.; Melo, A.G.; Marcato, A.L.; Urdiales, C. Case-based reasoning approach applied to surveillance system using an autonomous unmanned aerial vehicle. In Proceedings of the 2017 IEEE 26th International Symposium on Industrial Electronics (ISIE), Edinburgh, UK, 19–21 June 2017; pp. 1324–1329.

2. Pinto, M.F.; Coelho, F.O.; De Souza, J.P.; Melo, A.G.; Marcato, A.L.; Urdiales, C. Ekf design for online trajectory prediction of a moving object detected onboard of a uav. In Proceedings of the 2018 13th APCA International Conference on Automatic Control and Soft Computing (CONTROLO), Ponta Delgada, Portugal, 4–6 June 2018; pp. 407–412.

3. Madridano, Á.; Al-Kaff, A.; Martín, D.; de la Escalera, A. 3d trajectory planning method for uavs swarm in building emergencies. Sensors 2020, 20, 642.

4. Melo, A.G.; Pinto, M.F.; Marcato, A.L.; Honório, L.M.; Coelho, F.O. Dynamic Optimization and Heuristics Based Online Coverage Path Planning in 3D Environment for UAVs. Sensors 2021, 21, 1108.

5. Melo, A.G.; Pinto, M.F.; Honorio, L.M.; Dias, F.M.; Masson, J.E. 3D Correspondence and Point Projection Method for Structures Deformation Analysis. IEEE Access 2020, 8, 177823–177836.

6. Pinto, M.F.; Honorio, L.M.; Melo, A.; Marcato, A.L. A Robotic Cognitive Architecture for Slope and Dam Inspections. Sensors 2020, 20, 4579.

7. Pinto, M.F.; Honório, L.M.; Marcato, A.L.; Dantas, M.A.; Melo, A.G.; Capretz, M.; Urdiales, C. ARCog: An Aerial Robotics Cognitive Architecture. Robotica 2020, 39, 483–502.

8. Dijkshoorn, N.; Visser, A. Integrating sensor and motion models to localize an autonomous ar. drone. Int. J. Micro Air Veh. 2011, 3, 183–200.

9. Tang, D.; Kou, K.; Tang, Y. Autolanding System of Shipborne UAV Based on Fusion of INS, CDGPS and Vision-Based Navigation. In Advances in Guidance, Navigation and Control; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1609–1618.

10. Kakaletsis, E.; Symeonidis, C.; Tzelepi, M.; Mademlis, I.; Tefas, A.; Nikolaidis, N.; Pitas, I. Computer Vision for Autonomous UAV Flight Safety: An Overview and a Vision-based Safe Landing Pipeline Example. ACM Comput. Surv. (CSUR) 2021, 54, 1–37.

11. Coelho, F.O.; Pinto, M.F.; Souza, J.P.C.; Marcato, A.L. Hybrid Methodology for Path Planning and Computational Vision Applied to Autonomous Mission: A New Approach. Robotica 2020, 38, 1000–1018.

12. Biundini, I.Z.; Melo, A.G.; Pinto, M.F.; Marins, G.M.; Marcato, A.L.; Honorio, L.M. Coverage path planning optimization for slopes and dams inspection. In Iberian Robotics Conference; Springer: Cham, Switzerland, 2019; pp. 513–523.

13. Coelho, F.O.; Carvalho, J.P.; Pinto, M.F.; Marcato, A.L. Ekf and computer vision for mobile robot localization. In Proceedings of the 2018 13th APCA International Conference on Automatic Control and Soft Computing (CONTROLO), Ponta Delgada, Portugal, 4–6 June 2018; pp. 148–153.

14. Ramos, G.S.; Pinto, M.F.; Coelho, F.O.; Honório, L.M.; Haddad, D.B. Hybrid methodology based on computational vision and sensor fusion for assisting autonomous UAV on offshore messenger cable transfer operation. Robotica 2022, 40, 1–29.

15. Khaloo, A.; Lattanzi, D.; Jachimowicz, A.; Devaney, C. Utilizing UAV and 3D computer vision for visual inspection of a large gravity dam. Front. Built Environ. 2018, 4, 31.

16. Van Dam, J.; Krasne, A.; Gabbard, J.L. Drone-based augmented reality platform for bridge inspection: Effect of ar cue design on visual search tasks. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 201–204.

17. Biundini, I.Z.; Pinto, M.F.; Melo, A.G.; Marcato, A.L.; Honorio, L.M. Coverage Path Planning Optimization Based on Point Cloud for Structural Inspection. In Frontiers in Nature-Inspired Industrial Optimization; Springer: Berlin/Heidelberg, Germany, 2022; pp. 141–156.

18. Kus, S.; Srinivasan, S. Remote, Visual Inspection and Digital Analysis for External Corrosion Characterization in Refinery Unit Applications. In Proceedings of the CORROSION 2021, Online, 19–30 April 2021.

19. Nikolic, J.; Burri, M.; Rehder, J.; Leutenegger, S.; Huerzeler, C.; Siegwart, R. A UAV system for inspection of industrial facilities. In Proceedings of the 2013 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2013; pp. 1–8.

20. Petillot, Y.R.; Antonelli, G.; Casalino, G.; Ferreira, F. Underwater Robots: From Remotely Operated Vehicles to Intervention-Autonomous Underwater Vehicles. IEEE Robot. Autom. Mag. 2019, 26, 94–101.

21. Yu, L.; Yang, E.; Ren, P.; Luo, C.; Dobie, G.; Gu, D.; Yan, X. Inspection robots in oil and gas industry: A review of current solutions and future trends. In Proceedings of the 2019 25th International Conference on Automation and Computing (ICAC), Lancaster, UK, 5–7 September 2019; pp. 1–6.

22. Ramos, G.; Pinto, M.; de Souza, E.; Machado, G.; de Castro, G. Technical and Economic Feasibility Study for Implementing a Novel Mooring-Assisting Methodology in Offloading Operations Using Autonomous Unmanned Aerial Vehicles. SPE Prod. Oper. 2022, 37, 72–87.

23. Frederiksen, M.; Knudsen, M. Drones for Offshore and Maritime Missions: Opportunities and Barriers; Center for Integrative Innovation Management, University of Southern Denmark, SDU: Odense, Denmark, 2018.

24. Durdevic, P.; Ortiz-Arroyo, D.; Li, S.; Yang, Z. Vision aided navigation of a quad-rotor for autonomous wind-farm inspection. IFAC-PapersOnLine 2019, 52, 61–66.

25. Wang, C.; Cui, L. The Implementation of Automatic Inspection Algorithm for Underwater Vehicles Based on Hough Transform. In Proceedings of the 2018 7th International Conference on Sustainable Energy and Environment Engineering (ICSEEE 2018); Atlantis Press: Paris, France, 2019; pp. 459–464.

26. Mazreah, A.A.; Alnaimi, F.B.I.; Sahari, K.S.M. Novel design for PIG to eliminate the effect of hydraulic transients in oil and gas pipelines. J. Pet. Sci. Eng. 2017, 156, 250–257.

27. Kakogawa, A.; Ma, S. Design of a multilink-articulated wheeled pipeline inspection robot using only passive elastic joints. Adv. Robot. 2018, 32, 37–50.

28. Kwon, Y.S.; Yi, B.J. Design and motion planning of a two-module collaborative indoor pipeline inspection robot. IEEE Trans. Robot. 2012, 28, 681–696.

29. Iwaszenko, S.; Kalisz, P.; Słota, M.; Rudzki, A. Detection of natural gas leakages using a laser-based methane sensor and uav. Remote Sens. 2021, 13, 510.

30. Gómez, C.; Green, D.R. Small unmanned airborne systems to support oil and gas pipeline monitoring and mapping. Arab. J. Geosci. 2017, 10, 1–17.

31. Bretschneider, T.R.; Shetti, K. UAV-based gas pipeline leak detection. In Proceedings of the ARCS 2015, Porto, Portugal, 24–27 March 2015.

32. Shukla, A.; Xiaoqian, H.; Karki, H. Autonomous tracking and navigation controller for an unmanned aerial vehicle based on visual data for inspection of oil and gas pipelines. In Proceedings of the 2016 16th International Conference on Control, Automation and Systems (ICCAS), Gyeongju, Republic of Korea, 16–19 October 2016; pp. 194–200.

33. Yan, Y.; Liang, Y.; Zhang, H.; Zhang, W.; Feng, H.; Wang, B.; Liao, Q. A two-stage optimization method for unmanned aerial vehicle inspection of an oil and gas pipeline network. Pet. Sci. 2019, 16, 458–468.

34. Mangayarkarasi, N.; Raghuraman, G.; Kavitha, S. Influence of computer vision and iot for pipeline inspection-a review. In Proceedings of the 2019 International Conference on Computational Intelligence in Data Science (ICCIDS), Chennai, India, 21–23 February 2019; pp. 1–6.

35. Motamedi, M.; Faramarzi, F.; Duran, O. New concept for corrosion inspection of urban pipeline networks by digital image processing. In Proceedings of the IECON 2012 - 38th Annual Conference on IEEE Industrial Electronics Society, Montreal, QC, Canada, 25–28 October 2012; pp. 1551–1556.

36. Bondada, V.; Pratihar, D.K.; Kumar, C.S. Detection and quantitative assessment of corrosion on pipelines through image analysis. Procedia Comput. Sci. 2018, 133, 804–811.

37. Prema Kirubakaran, A.; Murali Krishna, I. Pipeline crack detection using mathematical morphological operator. In Knowledge Computing and its Applications; Springer: Berlin/Heidelberg, Germany, 2018; pp. 29–46.

38. Su, T.C.; Yang, M.D. Application of morphological segmentation to leaking defect detection in sewer pipelines. Sensors 2014, 14, 8686–8704.

39. Sinha, S.K.; Fieguth, P.W. Morphological segmentation and classification of underground pipe images. Mach. Vis. Appl. 2006, 17, 21–31.

40. Sinha, S.K.; Fieguth, P.W. Segmentation of buried concrete pipe images. Autom. Constr. 2006, 15, 47–57.

41. Su, T.C. Segmentation of crack and open joint in sewer pipelines based on CCTV inspection images. In Proceedings of the 2015 AASRI International Conference on Circuits and Systems, Paris, France, 9–10 August 2015; Volume 2.

42. Ting, L.L.; Tey, J.Y.; Tan, A.C.; King, Y.J.; Abd Rahman, F. Water leak location based on improved dual-tree complex wavelet transform with soft thresholding de-noising. Appl. Acoust. 2021, 174, 107751.

43. Hawari, A.; Alamin, M.; Alkadour, F.; Elmasry, M.; Zayed, T. Automated defect detection tool for closed circuit television (cctv) inspected sewer pipelines. Autom. Constr. 2018, 89, 99–109.

44. Kumar, S.S.; Abraham, D.M.; Jahanshahi, M.R.; Iseley, T.; Starr, J. Automated defect classification in sewer closed circuit television inspections using deep convolutional neural networks. Autom. Constr. 2018, 91, 273–283.

45. Yin, X.; Chen, Y.; Bouferguene, A.; Zaman, H.; Al-Hussein, M.; Kurach, L. A deep learning-based framework for an automated defect detection system for sewer pipes. Autom. Constr. 2020, 109, 102967.

46. Xiaoqian, H.; Karki, H.; Shukla, A.; Xiaoxiong, Z. Variant PID controller design for autonomous visual tracking of oil and gas pipelines via an unmanned aerial vehicle. In Proceedings of the 2017 17th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 18–21 October 2017; pp. 368–372.

47. Basso, M.; Pignaton de Freitas, E. A UAV guidance system using crop row detection and line follower algorithms. J. Intell. Robot. Syst. 2020, 97, 605–621.

48. Okoli, J.; Ubochi, B. Autonomous Robot for Gas Pipeline Inspection and Leak Detection. Int. J. Comput. Digit. Syst. 2022, 11, 811–820.

49. Santa Maria, T.H.; Pusssente, G.A.N.; Marcato, A.L.M.; de Aguiar, E.P. NMPC controller applied to an UAV Path Following Problem. In Proceedings of the 2020 Latin American Robotics Symposium (LARS), 2020 Brazilian Symposium on Robotics (SBR) and 2020 Workshop on Robotics in Education (WRE), Natal, Brazil, 9–12 November 2020; pp. 1–6.

50. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 679–698.

51. Ballard, D.H. Generalizing the Hough transform to detect arbitrary shapes. Pattern Recognit. 1981, 13, 111–122.

52. Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

53. Ardupilot. APM Planner 2. 2020. Available online: https://ardupilot.org/planner2/ (accessed on 9 June 2020).

54. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

55. Silva, Y.; Sousa, L.; Souza, C. Pipeline Recognition for Drone Navegation. IEEE Dataport 2022.

56. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

57. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 22–24 June 2009; pp. 248–255.

58. Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The open images dataset v4. Int. J. Comput. Vis. 2020, 128, 1956–1981.

59. Community, B.O. Blender - A 3D Modelling and Rendering Package; Blender Foundation, Stichting Blender Foundation: Amsterdam, The Netherlands, 2018.

60. Tzutalin, D. LabelImg. GitHub Repos. 2015, 6. Available online: https://github.com/tzutalin/labelImg (accessed on 20 June 2022).

61. Hinton, G.E. A practical guide to training restricted Boltzmann machines. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 599–619.

62. Ćorović, A.; Ilić, V.; Ðurić, S.; Marijan, M.; Pavković, B. The Real-Time Detection of Traffic Participants Using YOLO Algorithm. In Proceedings of the 2018 26th Telecommunications Forum (TELFOR), Belgrade, Serbia, 20–21 November 2018; pp. 1–4.

63. Brandao, A.S.; Martins, F.N.; Soneguetti, H.B. A vision-based line following strategy for an autonomous UAV. In Proceedings of the 2015 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Colmar, France, 21–23 July 2015; Volume 2, pp. 314–319.

64. Ding, L.; Goshtasby, A. On the Canny edge detector. Pattern Recognit. 2001, 34, 721–725.

65. Duda, R.O.; Hart, P.E. Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 1972, 15, 11–15.

66. Mu, Z.; Li, Z. Intelligent tracking car path planning based on Hough transform and improved PID algorithm. In Proceedings of the 2018 5th International Conference on Systems and Informatics, Nanjing, China, 10–12 November 2018; pp. 24–28.

67. Bisong, E. Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Apress: Berkeley, CA, USA, 2019; pp. 59–64.

| Work | Robot | Path Planning: Segmentation | Path Planning: IR Sensor | Path Planning: Identification of Curves and Pipeline | O&G |
|---|---|---|---|---|---|
| Wang et al. [25] | AUV | Yes | No | No | Yes |
| Mazreah et al. [26] | Pipeline Inner Robot | No | No | No | Yes |
| Kakogawa et al. [27] | Wheeled Robot | No | No | No | Yes |
| Basso et al. [47] | UAS | Yes | No | No | No |
| Okoli et al. [48] | Wheeled Robot | No | Yes | No | Yes |
| Santa Maria et al. [49] | UAS | Yes | No | No | No |
| Proposed System | UAS | Yes | No | Yes | Yes |

| Hyperparameter | Value |
|---|---|
| Optimizer | SGD (learning rate = 0.01) |
| Epochs | 2000 |
| Batch size | 16 |
| Patience | 100 |
| Image size | 448 × 448 |
| Weight decay | 0.0004 |
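
The hyperparameters listed above can be read as a training configuration. The sketch below restates them as a Python dataclass and illustrates two of the entries under stated assumptions: that the optimizer is plain SGD with L2 weight decay and no momentum, and that "Patience" is the number of epochs without validation improvement before training stops early. Neither assumption is confirmed by the table, and the names `TrainConfig`, `sgd_step`, and `stop_epoch` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Values copied from the hyperparameter table; field names are illustrative."""
    optimizer: str = "SGD"
    learning_rate: float = 0.01
    epochs: int = 2000
    batch_size: int = 16
    patience: int = 100
    image_size: int = 448          # square input, 448 x 448 pixels
    weight_decay: float = 0.0004


def sgd_step(weight: float, grad: float, cfg: TrainConfig) -> float:
    """One plain SGD update with L2 weight decay (assumed: no momentum)."""
    return weight - cfg.learning_rate * (grad + cfg.weight_decay * weight)


def stop_epoch(val_scores: list[float], cfg: TrainConfig) -> int:
    """Index of the last epoch run under patience-based early stopping.

    Training stops once `patience` epochs have passed without the validation
    score exceeding its best value, or when the epoch budget is exhausted.
    """
    best, best_epoch = float("-inf"), 0
    for epoch, score in enumerate(val_scores[: cfg.epochs]):
        if score > best:
            best, best_epoch = score, epoch
        elif epoch - best_epoch >= cfg.patience:
            return epoch
    return min(len(val_scores), cfg.epochs) - 1


cfg = TrainConfig()
new_weight = sgd_step(weight=1.0, grad=0.5, cfg=cfg)

# Synthetic validation curve: improves twice, then plateaus for 150 epochs.
plateau = [0.1, 0.2] + [0.2] * 150
last_epoch = stop_epoch(plateau, cfg)
print(new_weight, last_epoch)
```

With these values the weight-decay term is small relative to the gradient term in a single step, and the plateau example stops 100 epochs after the last improvement rather than running the full 2000-epoch budget.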

| | Pipe | FP |
|---|---|---|
| Pipe | 0.64 | 1 |
| FN | 0.36 | 0 |
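
As a worked reading of this matrix, assume that each column is normalised by the number of ground-truth instances, which is the usual convention for YOLO confusion-matrix plots but is not stated in the table itself. Under that assumption, the recall for the Pipe class follows directly from the first column:

```latex
\mathrm{Recall}_{\text{Pipe}} = \frac{TP}{TP + FN} = \frac{0.64}{0.64 + 0.36} = 0.64
```

Under the same assumption, the FP column only states that every false positive on the background was labelled Pipe, which is unavoidable with a single class, so precision cannot be derived from these normalised values alone.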

| Parameter | Simulation | Real Environment |
|---|---|---|
| Error Deviation (m) | 0.0074 | 0.0111 |
| Mean CNN Confidence | 0.8774 | 0.9765 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

da Silva, Y.M.R.; Andrade, F.A.A.; Sousa, L.; de Castro, G.G.R.; Dias, J.T.; Berger, G.; Lima, J.; Pinto, M.F. Computer Vision Based Path Following for Autonomous Unmanned Aerial Systems in Unburied Pipeline Onshore Inspection. Drones 2022, 6, 410. https://doi.org/10.3390/drones6120410
