Abstract

In recent years, visual tracking has been employed in many fields. Siamese trackers formulate the tracking problem as a template-matching process, and most of them meet real-time requirements, making them well suited to UAV tracking. However, most existing Siamese trackers use only the first frame of a video sequence as the reference, so when the appearance of the tracked target changes because of occlusion, fast motion, or similar objects, tracking drift results. Once drift occurs, it is difficult to recover the tracking process. Therefore, we propose a motion-aware Siamese framework to assist Siamese trackers in detecting tracking drift over time. The base tracker first outputs the original tracking results, after which the drift detection module determines whether tracking drift has occurred. Finally, the corresponding tracking recovery strategies are applied. By combining the Kalman filter’s short-term prediction ability with effective tracking recovery strategies, more stable and reliable tracking results can be obtained. We use the Siamese region proposal network (SiamRPN), a typical representative of anchor-based algorithms, and Siamese classification and regression (SiamCAR), a typical representative of anchor-free algorithms, as the base trackers to test the effectiveness of the proposed method. Experiments were carried out on three public datasets: UAV123, UAV20L, and UAVDT. The modified trackers (MaSiamRPN and MaSiamCAR) both outperformed their respective base trackers.

1. Introduction

As one of the emerging industries, the unmanned aerial vehicle (UAV) industry has witnessed rapid progress in recent years [1,2]. Visual tracking, which is one of the core functions of UAVs, has been performed for air reconnaissance, remote sensing, patrol inspection, and other applications. Compared with traditional video sequences, aerial video scenes include more complex factors, such as occlusion, appearance change, scale change, etc. Although it has been studied for many years, visual tracking under the influence of these complex factors remains challenging.

With the development of artificial intelligence technology, deep learning (DL) has received increased research interest. Visual tracking technology also benefits from DL technology and has made great progress. In recent years, the Siamese tracker [3,4,5] has emerged in the field of video target tracking due to its concise structure and superior performance. The Siamese tracker simplifies the visual tracking problem into a matching problem by using a two-branch network and employs the cross-correlation operation to compare the similarity between the template image and the search image. To improve the tracking accuracy, extensive research has been conducted, including adding regression modules, using deeper networks, and obtaining more robust features by feature fusion. Because the performance of this matching-based method greatly depends on the quality of the template, in some simple scenarios, tracking can be realized only by relying on the information provided by the first frame. However, the appearance of the target often changes due to occlusion, rotation, and angle change, resulting in a decline in the tracking performance. To address this issue, some methods put forward the strategy of template update. Zhao et al. proposed using reinforcement learning to update the template online. The best template for the follow-up tracking process is selected during the tracking process. However, because such an online learning strategy necessitates a large amount of computing resources, the tracking frame rate is limited to 20 fps. Furthermore, due to the small number of samples used in training, it is prone to overfitting [6]. Yang et al. proposed a template-driven Siamese network that fuses features from multiple templates to better adapt to target appearance changes [7]. Xu et al. created the contour proposal template by incorporating the contour-detection network into the Siamese tracker to resolve the partial-occlusion problem [8]. 
These methods adopt multiple templates to adapt to complex situations and achieve better performance, but they consume more computing resources, and poorly timed template updates are likely to introduce interference, resulting in a drop in tracking performance.

As an optimal estimation algorithm for linear systems, the Kalman filter [9] algorithm is extensively used in the state estimation of moving targets. However, the motion state of the target often changes in the UAV’s field of view due to camera motion, making it difficult to obtain ideal results by directly combining the Kalman filter with the visual tracker. To fully utilize the prediction ability of the Kalman filter to avoid tracking drift and improve the tracking performance of the Siamese tracker, in this paper, we develop a motion-aware tracking framework by using the Kalman filter and a Siamese network. First, the proposed method predicts the target motion state using the Kalman filter according to the short-term historical trajectory information. Next, the tracking drift phenomenon is monitored based on the difference between the original Siamese tracker’s tracking results and the Kalman filter’s prediction results. When tracking drift occurs, the Kalman prediction results are used in time, and the corresponding tracking recovery strategies are combined for effective correction. In addition, a lightweight model (AlexNet) is adopted for faster tracking speed. We integrate the motion-aware Siamese framework described above into the base trackers SiamRPN and SiamCAR, and compare the performance with some traditional algorithms. The proposed algorithms (MaSiamRPN and MaSiamCAR) reached 160 and 192 fps in multiple datasets (UAV123, UAV20L, and UAVDT), respectively, which is slightly lower than the 180 and 220 fps of the base trackers SiamRPN and SiamCAR. However, the tracking accuracy of the proposed algorithms has been significantly improved, particularly when occlusion, rotation, and angle of view change are present. The accuracy and success rate of MaSiamCAR are increased by 9.4% and 11.2%, respectively, compared to the benchmark algorithm (SiamCAR) under the UAVDT dataset.

2. Related Works

With the rapid development of DL technology in recent years, the visual target tracking technology has witnessed an immense improvement. DL technology is being applied to various fields, and the field of UAV video target tracking is no exception. In this section, we briefly discuss the research progress of the UAV visual tracking and Siamese tracking algorithms.

2.1. UAV Visual Tracking Algorithms

Targets in aerial videos pose many problems, such as changeable appearance, high interference, and frequent occlusion. In addition, the load capacity of the UAV platform does not allow the mounting of large computing equipment. Most existing visual tracking algorithms are improvements over the traditional visual tracking algorithm to make them suitable for application in UAVs. Next, we briefly discuss the progress of UAV visual tracking.

Fu et al. located the interference source according to the local maximum value of the response map and adaptively changed the target of the regressor by punishing the response value of the interference source during the training process to improve the anti-interference ability of the discriminative correlation filter (DCF) tracker in UAVs [10]. Fan et al. introduced the peak to sidelobe ratio (PSR) index into the kernelized correlation filter (KCF) algorithm to evaluate the tracking quality and used the speeded up robust features (SURF) random sample consistency target retrieval matching strategy to rematch the candidate boxes in case the target was blocked or lost [11]. Li et al. used the lightweight convolutional feature to ensure high tracking speed and introduced the intermittent context learning strategy to improve the discrimination ability of the filter to ensure the tracking performance in long-term tracking tasks [12]. Zhang et al. used single exponential smoothing forecasting to predict the future state information of the target according to the time series. Then, more contextual information can be obtained to improve the robustness of the filter [13]. Deng et al. used the dynamic space regularization weight and multiparticle alternating direction technology to train the filter to make it focus more on reliable regions to suppress interference [14]. The AutoTrack algorithm adopted the spatial local response map for spatial regularization and determined the updated frequency of the filter from the change in the global response map [15]. Zhang et al. used a regularization term to learn the environmental residuals between two adjacent frames to enhance the resolution of the filter in complex and changeable UAV operating environments [16]. He et al. incorporated contextual attention, dimensional attention, and spatial attention in the training and reasoning stages of the filter to improve the tracking performance in complex scenes [17]. 
These studies approached the problem from different perspectives. For example, some studies [10,11,14] improved the robustness of the algorithm by suppressing interference, and [12] achieved better tracking speed through lightweight models. Others attempted to adapt to changes in target appearance by obtaining more useful information related to the target [13,15,16,17]. The motion state of the target evolves continuously. The motion information of the target is therefore critical for continuous tracking, but it is frequently ignored rather than fully utilized.

2.2. The Siamese Trackers

The visual tracking algorithm based on the Siamese network constitutes an important research topic. It transforms the tracking task into a matching problem between the template image and the search image. Unlike traditional matching methods, the original Siamese tracker uses a large amount of offline data to learn the embedding function $\varphi$, and the extracted features are matched using a cross-correlation operation. Finally, the maximum value in the response map is located to determine the target area. This process can be formulated as follows:

$$f(z, x) = \varphi(z) * \varphi(x) \tag{1}$$

where z is the template image, x is the search image, and * denotes the cross-correlation operation.
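The matching step can be illustrated with a small NumPy sketch. It is not the authors' implementation: the feature arrays stand in for the outputs of the embedding function, and the function names `cross_correlate` and `locate_peak` are hypothetical.

```python
import numpy as np

def cross_correlate(template_feat, search_feat):
    """Slide the template features over the search features (valid mode).

    template_feat: array of shape (C, h, w), standing in for the embedded template
    search_feat:   array of shape (C, H, W), standing in for the embedded search image
    Returns a response map of shape (H - h + 1, W - w + 1).
    """
    _, h, w = template_feat.shape
    _, H, W = search_feat.shape
    response = np.empty((H - h + 1, W - w + 1))
    for i in range(H - h + 1):
        for j in range(W - w + 1):
            window = search_feat[:, i:i + h, j:j + w]
            response[i, j] = np.sum(window * template_feat)
    return response

def locate_peak(response):
    """Return the (row, col) index of the maximum response."""
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)

# Toy data: a block of ones planted at row 4, column 2 of an empty search map.
search = np.zeros((2, 10, 10))
search[:, 4:7, 2:5] = 1.0
template = np.ones((2, 3, 3))

response = cross_correlate(template, search)
peak = locate_peak(response)
```

In this toy case the peak coincides with the planted location because every other window overlaps the block only partially. Real trackers compute the same operation with convolution layers on learned features.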

To overcome the problem of scale change, the Siamese fully convolutional (SiamFC) tracker performs scale estimation through multiscale matching. However, this increases the computational burden and makes it difficult to manually select the scale suitable for most cases. Subsequently, Li et al. integrated the region proposal network (RPN) into the Siamese tracker. They proposed the SiamRPN tracker to improve the tracking speed and accuracy of scale estimation by using predefined anchor boxes [3]. To study the performance gain provided by deep convolutional neural networks, Li et al. started with the training data and weakened the learning bias of deep networks by moving the targets randomly in the training data [18]. Furthermore, Zhang et al. performed experiments and analysis on the network structure and found that the padding operation in deep networks is the main reason affecting the tracking performance; they also proposed the deeper and wider Siamese networks (SiamDW) algorithm to effectively crop the area affected by the padding operation [19]. Zhu et al. introduced several strategies for the imbalance of training data to enhance the generalization ability of deep features and used a long-term tracking strategy to improve the tracking performance after the target disappeared [20].

In recent years, anchor-free tracking algorithms have become an important research topic in the field of visual tracking. Xu et al. proposed four guidelines for designing trackers, one of which mentions that “tracking should not provide prior information about the state of the target scale distribution” [21]. In contrast, anchor-based tracking algorithms define the anchor frame of a specific scale in advance, thus providing a priori scale information for the tracking algorithm. Guo et al. proposed the SiamCAR algorithm [4], which adds a center-ness branch based on the classification branch and a regression branch to reduce the effect of low-quality samples far away from the target during training. Chen et al. proposed the Siamese box adaptive network (SiamBAN) algorithm, which adopts a multilevel prediction network and a more refined discrimination method between the positive and negative samples and has achieved good results on multiple datasets [22]. To sum up, although anchor-free tracking algorithms reduce the use of prior information, they require more training data to obtain more ideal results compared with anchor-based tracking algorithms.

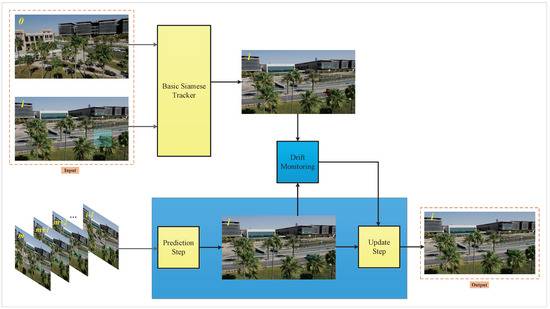

3. Motion-Aware Siamese Framework

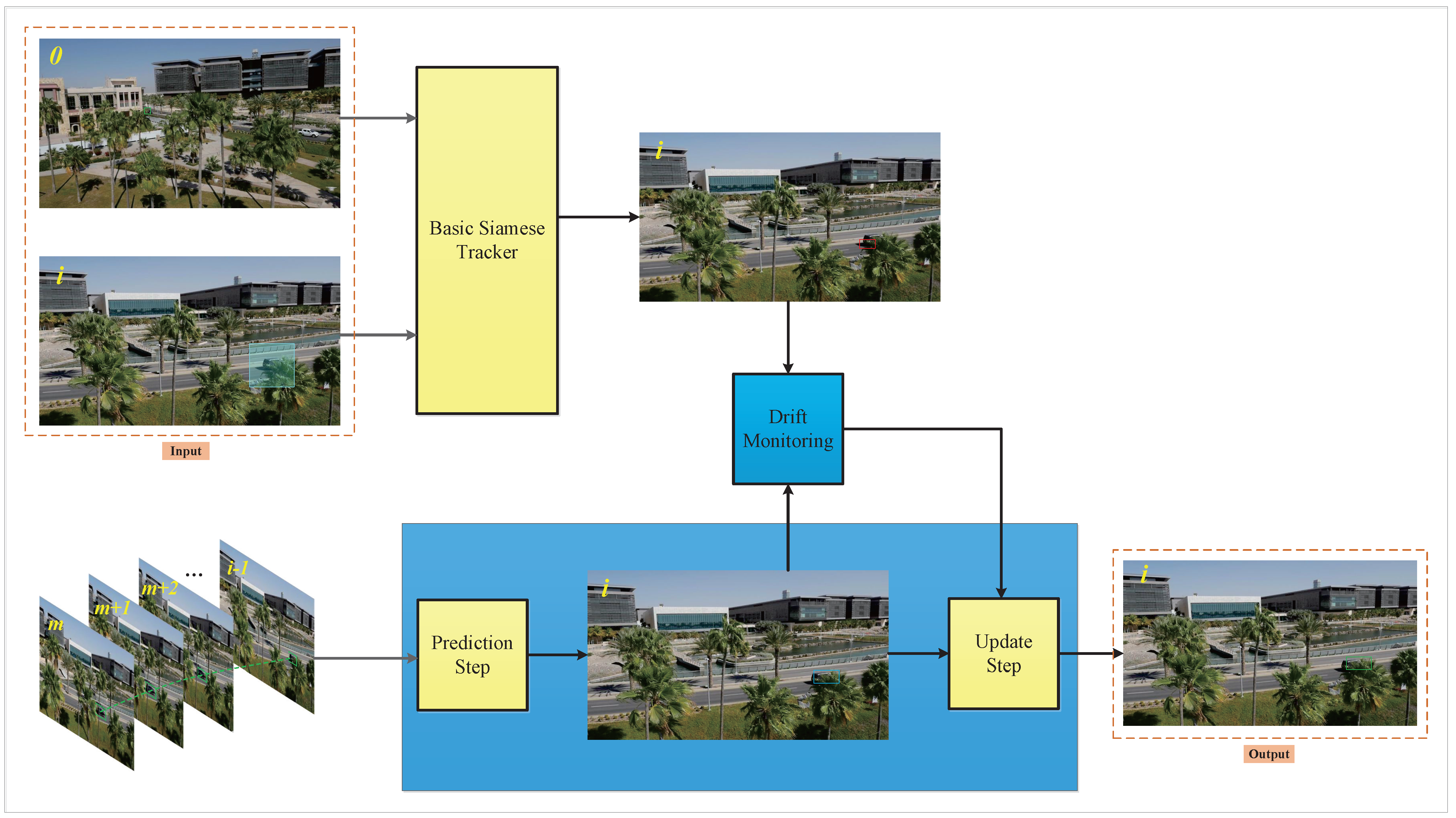

In this section, we describe the proposed motion-aware Siamese framework in detail. The proposed motion-aware Siamese framework, shown in Figure 1, includes three main parts: a basic Siamese tracker, a Kalman filter, and a drift detection module. First, video sequences are input into the Siamese tracker, and the original tracking results are obtained after classification and parametric regression. Next, the Kalman filter performs the prediction step according to the tracking trajectory information of the previous frames and predicts the motion state to obtain the prior estimation. The original tracking results and Kalman’s prior prediction results are then sent to the tracking drift detection module to detect the occurrence of tracking drift. Finally, corresponding tracking recovery strategies are adopted according to various situations.

Figure 1. The architecture of the motion-aware Siamese framework.

The input module is in the upper left corner of Figure 1, and the output module is in the lower right corner. The input module receives the video frame by frame and crops a fixed-size area based on the result of the previous frame (such as the solid rectangle in the frame image) to reduce the computational cost and the interference from distant distractors. When the target is near the image boundary and the crop extends beyond the image, the missing area is filled with the mean pixel value of the image. The output module receives the final tracking results, which are defined by four parameters (x, y, w, h). Here, x and y define the center position of the target, while w and h represent the width and height of the predicted target, respectively. The baseline tracker and the Kalman filter are discussed in Section 3.1 and Section 3.2, respectively, while the tracking drift monitoring mechanism and tracking recovery strategy are described in Section 3.3.
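The cropping and mean-value filling performed by the input module can be sketched as follows. This is a minimal illustration under assumptions: the function name `crop_with_mean_padding`, the square crop, and the per-channel mean are choices made here, not details confirmed by the paper.

```python
import numpy as np

def crop_with_mean_padding(image, center_xy, size):
    """Crop a size x size patch centered at center_xy from an (H, W, C) image.

    Pixels that fall outside the image are filled with the per-channel mean.
    """
    h, w = image.shape[:2]
    cx, cy = center_xy
    x1 = int(round(cx - size / 2))
    y1 = int(round(cy - size / 2))
    x2, y2 = x1 + size, y1 + size

    # Start from a patch filled with the mean colour, then paste the valid part.
    mean = image.mean(axis=(0, 1))
    patch = np.empty((size, size, image.shape[2]), dtype=image.dtype)
    patch[:] = mean.astype(image.dtype)

    sx1, sy1 = max(x1, 0), max(y1, 0)
    sx2, sy2 = min(x2, w), min(y2, h)
    if sx1 < sx2 and sy1 < sy2:
        patch[sy1 - y1:sy2 - y1, sx1 - x1:sx2 - x1] = image[sy1:sy2, sx1:sx2]
    return patch

# A target close to the top-left corner: most of the crop lies outside the image.
image = np.full((100, 120, 3), 50, dtype=np.uint8)
image[:, :, 0] = 200
patch = crop_with_mean_padding(image, center_xy=(5, 5), size=64)
```

The patch always has the requested size, so the network input shape stays fixed regardless of where the target sits in the frame.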

3.1. Basic Siamese Tracker

Existing Siamese visual trackers can be classified as anchor-based trackers and anchor-free trackers. This paper uses the anchor-based SiamRPN and the anchor-free SiamCAR algorithms for experiments.

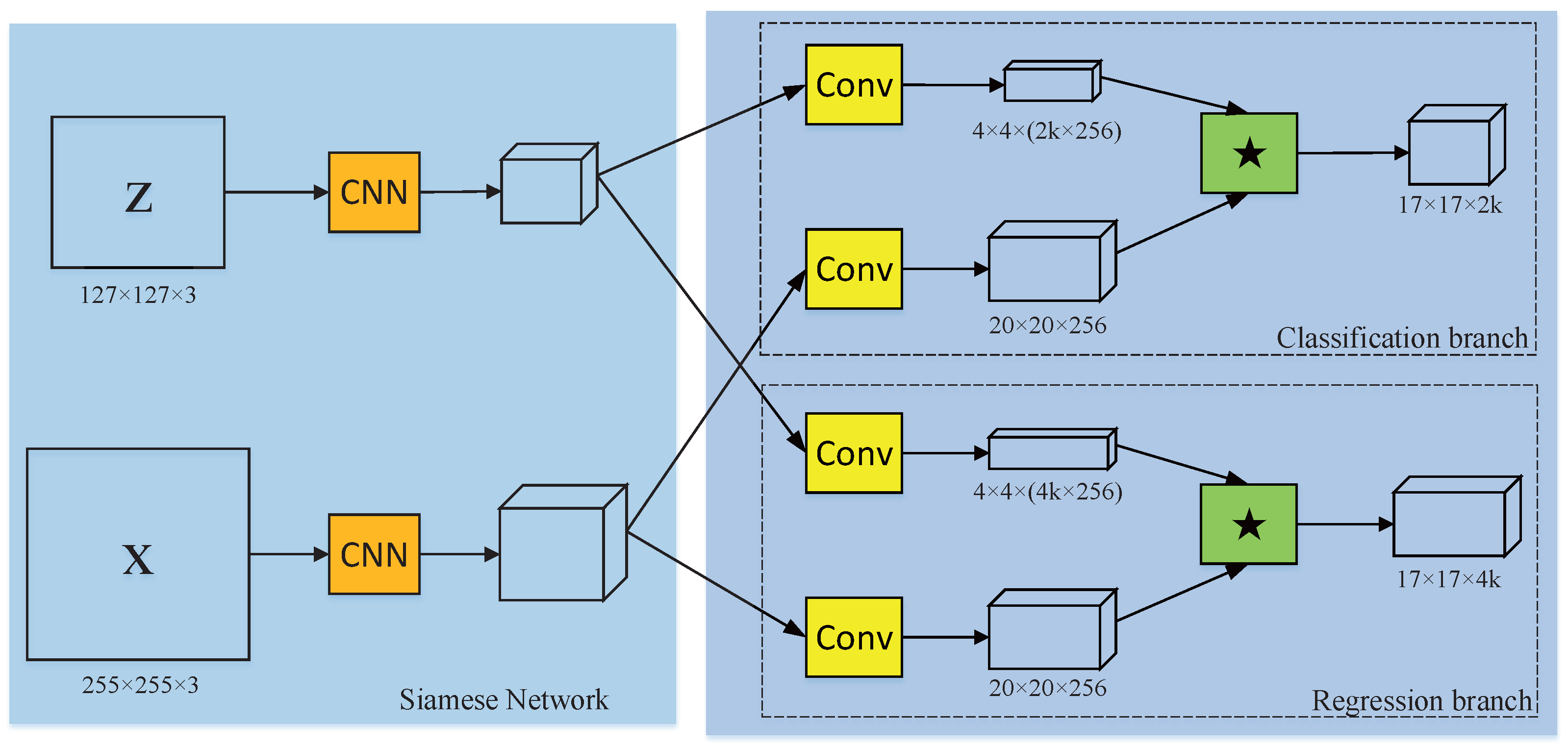

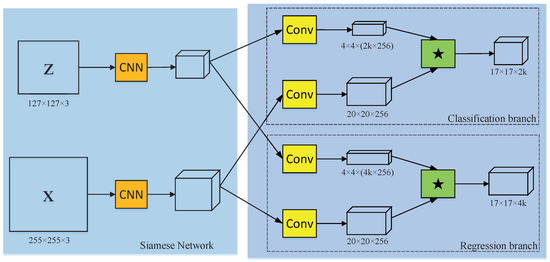

SiamRPN is a visual tracking algorithm based on the Siamese network and the RPN. Li et al. were the first to combine the RPN with a Siamese network effectively and conducted experiments to demonstrate the superiority of the algorithm [3]. The network structure of SiamRPN is illustrated in Figure 2. The left part is the Siamese feature extraction network, and the right part is the RPN. The Siamese network has two input branches, one for the template image and one for the search image; both branches share the same weights. The RPN has two branches: a classification subnetwork and a regression subnetwork. The classification branch determines whether each of the k predefined anchor boxes contains the target. The regression branch refines the anchor boxes containing the target to obtain more accurate bounding box parameters. At the inference stage, the template image and search area image are first input to the Siamese network for feature extraction, and the obtained features are then sent to the RPN. Next, according to the classification scores output by the classification branch, the anchor box most likely to contain the target is selected by non-maximum suppression. Finally, the parameters of this anchor box are refined by the regression branch to obtain more accurate tracking results.

Figure 2. The framework of the SiamRPN algorithm.

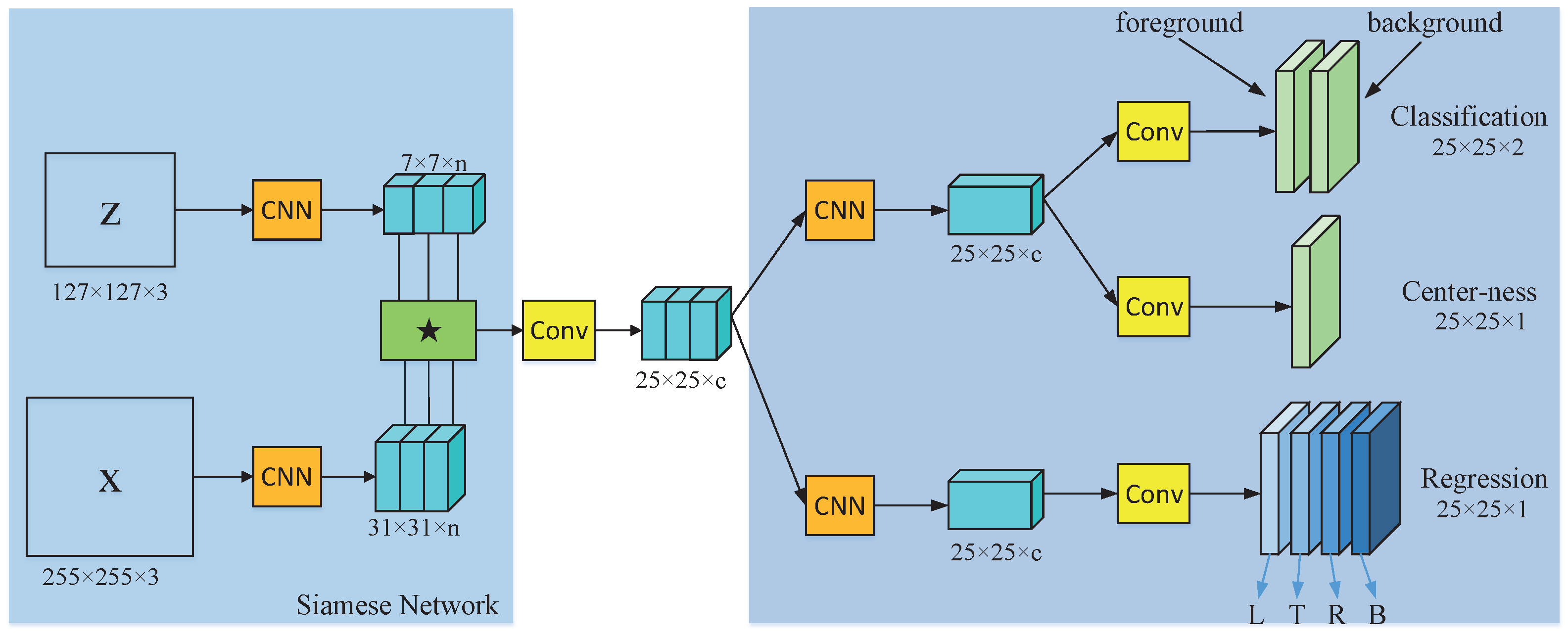

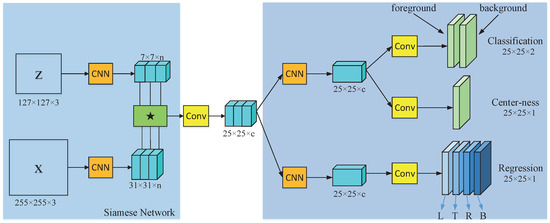

SiamCAR is a typical anchor-free Siamese tracking algorithm proposed by Guo et al. [4]. In contrast to SiamRPN, which classifies and regresses anchor boxes, SiamCAR does not rely on anchor boxes but directly classifies and regresses the original image area corresponding to each value in the classification response map. The network structure of SiamCAR is illustrated in Figure 3. The left side of the network is similar to that of SiamRPN: the template image and search image are sent to the Siamese network for feature extraction. The extracted features are then cross-correlated, and features more suitable for the tracking task are obtained via multilayer convolution. Since there are no anchor boxes, SiamCAR maps each response value in the classification branch to a corresponding area of the original image. Then, through the regression branch, it regresses the parameters of the bounding box mapped back to the original image to obtain more accurate scale estimation results. In addition, a center-ness branch is introduced to suppress low-quality samples that are far from the target.
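The anchor-free decoding described above can be sketched in a few lines. This is an illustration under assumptions, not SiamCAR's code: the candidate dictionaries and function names are hypothetical, and the center-ness value is computed here from the regressed distances (as is done for the training target), whereas at inference the network predicts it with a dedicated branch.

```python
import math

def centerness(l, t, r, b):
    """Center-ness of a location given its distances to the four box sides."""
    return math.sqrt((min(l, r) / max(l, r)) * (min(t, b) / max(t, b)))

def decode_box(px, py, l, t, r, b):
    """Turn a location (px, py) and its regressed distances into box corners."""
    return (px - l, py - t, px + r, py + b)

def best_location(candidates):
    """Pick the candidate with the highest classification x center-ness score."""
    return max(candidates, key=lambda c: c["cls"] * centerness(*c["ltrb"]))

candidates = [
    {"pos": (100, 100), "cls": 0.90, "ltrb": (20, 15, 20, 15)},  # near the box center
    {"pos": (118, 100), "cls": 0.95, "ltrb": (38, 15, 2, 15)},   # near the right edge
]
winner = best_location(candidates)
box = decode_box(*winner["pos"], *winner["ltrb"])
```

Both candidates describe the same box, but the off-center one is down-weighted even though its classification score is higher, which is the role the text assigns to the center-ness branch.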

Figure 3. The framework of the SiamCAR algorithm.

3.2. Motion Information Prediction by Using the Kalman Filter

Traditional Siamese trackers concentrate only on spatial feature information and ignore the importance of motion information for the continuous and stable tracking of the target. However, targets in the UAV’s view often drift due to occlusion and appearance changes, leading to tracking failure. The Kalman filter is an algorithm that uses the linear system state equation to optimally estimate the state by using the observed data. The motion state of the UAV changes due to maneuvering, and the camera motion also introduces noise. In addition, the frame rate of aerial videos obtained using a UAV is usually 30 fps or higher. Thus, the short-time trajectory of a target in a high-frame-rate video sequence can be simplified to linear motion processing; this process can be formulated as follows:

$$x_k = A x_{k-1} + w_{k-1} \tag{2}$$

$$z_k = H x_k + v_k \tag{3}$$

where k is the time index, $x_k$ denotes the state vector at k, A is the state transition matrix, $z_k$ represents the measurement vector at k, and H is the observation matrix. Here, $w_{k-1}$ is the process noise, which denotes the uncertainty of modeling the prediction process, and $v_k$ is the measurement noise, which represents the uncertainty of the observation data. Suppose that $w_k$ and $v_k$ follow zero-mean Gaussian distributions with covariance matrices Q and R, respectively:

$$w_k \sim \mathcal{N}(0, Q) \tag{4}$$

$$v_k \sim \mathcal{N}(0, R) \tag{5}$$

The Kalman filtering process mainly includes two stages: prediction and update. During the prediction process, the pre-established motion model is used to estimate the state of the object according to the historical measurement information:

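$$\hat{x}_{k|k-1} = A \hat{x}_{k-1|k-1} \tag{6}$$

$$P_{k|k-1} = A P_{k-1|k-1} A^{T} + Q \tag{7}$$

where $Z_{k-1}$ represents the measurement information before k, $\hat{x}_{k|k-1}$ denotes the conditional expectation of the random vector $x_k$ given $Z_{k-1}$, and $P_{k|k-1}$ is the covariance of the prediction error.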

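Combined with the newly received measurement data $z_k$, the updated state and covariance matrix can be obtained as follows:

$$\hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k \left( z_k - H \hat{x}_{k|k-1} \right) \tag{8}$$

$$P_{k|k} = \left( I - K_k H \right) P_{k|k-1} \tag{9}$$

where $K_k$ denotes the Kalman gain, which can be calculated as follows:

$$K_k = P_{k|k-1} H^{T} \left( H P_{k|k-1} H^{T} + R \right)^{-1} \tag{10}$$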
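The prediction and update stages of a standard linear Kalman filter can be sketched in NumPy as below. The function names and the toy one-dimensional model are assumptions made for illustration; the noise covariances are arbitrary example values, not the settings used in the paper.

```python
import numpy as np

def kf_predict(x, P, A, Q):
    """Prediction stage: propagate the state estimate and its covariance."""
    x_prior = A @ x
    P_prior = A @ P @ A.T + Q
    return x_prior, P_prior

def kf_update(x_prior, P_prior, z, H, R):
    """Update stage: correct the prior estimate with the measurement z."""
    S = H @ P_prior @ H.T + R                 # innovation covariance
    K = P_prior @ H.T @ np.linalg.inv(S)      # Kalman gain
    x_post = x_prior + K @ (z - H @ x_prior)  # corrected state
    P_post = (np.eye(len(x_prior)) - K @ H) @ P_prior
    return x_post, P_post

# Toy 1-D constant-velocity model: state = [position, velocity].
A = np.array([[1.0, 1.0], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = 1e-4 * np.eye(2)
R = np.array([[0.25]])

x, P = np.array([0.0, 0.0]), np.eye(2)
for k in range(1, 21):
    x, P = kf_predict(x, P, A, Q)
    # The simulated target moves 2 units per frame; only position is measured.
    x, P = kf_update(x, P, np.array([2.0 * k]), H, R)
```

After a handful of frames the filter recovers the unobserved velocity from position measurements alone, which is what makes the short-term prediction usable when the tracker output is unreliable.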

In this study, we regarded the output of the Siamese tracking algorithm as a Markov chain containing Gaussian noise. The Kalman filter can retrieve more accurate information from the observation data. The prediction process is given in Equations (6) and (7), and the update process is given by Equations (8)–(10). In this study, we define an eight-dimensional state space to describe the state information of the target:

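$$\mathbf{x} = \left[ x, y, r, h, \dot{x}, \dot{y}, \dot{r}, \dot{h} \right]^{T}$$

where x and y determine the center position of the target, r is the aspect ratio, h is the height of the target, and $\dot{x}$, $\dot{y}$, $\dot{r}$, and $\dot{h}$ denote the rates of change of x, y, r, and h with respect to time, respectively. Motion over a short duration can be regarded as constant velocity; thus, the whole motion process can be simplified into multiple consecutive constant-velocity segments. With the time step normalized to one frame, the state transition matrix A and observation matrix H are set as follows:

$$A = \begin{bmatrix} I_4 & I_4 \\ 0_4 & I_4 \end{bmatrix}, \qquad H = \begin{bmatrix} I_4 & 0_4 \end{bmatrix}$$

where $I_4$ and $0_4$ are the 4 × 4 identity and zero matrices.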
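A constant-velocity model over this eight-dimensional state can be built as in the sketch below. Two points are assumptions rather than statements from the paper: the time step `dt` defaults to one frame, and the aspect ratio r is taken as width divided by height. All function names are hypothetical.

```python
import numpy as np

def make_constant_velocity_model(dt=1.0):
    """Build A (8x8) and H (4x8) for the state [x, y, r, h, vx, vy, vr, vh]."""
    A = np.eye(8)
    A[:4, 4:] = dt * np.eye(4)  # each quantity advances by its rate times dt
    H = np.hstack([np.eye(4), np.zeros((4, 4))])  # only x, y, r, h are observed
    return A, H

def box_to_measurement(cx, cy, w, h):
    """Convert a (center x, center y, width, height) box to [x, y, r, h]."""
    return np.array([cx, cy, w / h, h], dtype=float)

def measurement_to_box(m):
    """Inverse of box_to_measurement."""
    x, y, r, h = m
    return float(x), float(y), float(r * h), float(h)

A, H = make_constant_velocity_model()
state = np.concatenate([box_to_measurement(100, 50, 40, 20), [3.0, -1.0, 0.0, 0.5]])
predicted = A @ state                          # one-frame-ahead state
predicted_box = measurement_to_box(H @ predicted)
```

Here the box center moves by its velocity and the height grows by its rate, while the aspect ratio stays fixed, so the predicted width scales with the height.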

3.3. Drift Monitoring and Tracking Recovery

Tracking drift can occur due to factors, such as appearance changes, occlusion, and interference from similar objects, and this phenomenon often lasts for multiple frames. The use of such low-quality measurement data in the updating process of the Kalman filter will have a significant impact on the estimation accuracy. To address this issue, we propose a novel tracking drift monitoring method in this paper. First, the difference between the prediction result obtained using the Kalman filter and the original tracking result obtained using the Siamese tracker is compared. Next, the appearance information is combined to detect whether tracking drift occurs. In case tracking drift occurs, different tracking recovery strategies are adopted according to the degree of drift to ensure stable tracking. Finally, more reliable position and scale estimation results are obtained through the update step of the Kalman filter. The specific operations involved in the proposed method are presented in detail here.

First, the intersection over union (IOU) between the prediction results obtained using the Kalman filter and the tracking results obtained using the original Siamese tracker is used to judge whether the tracking process is stable. An error in either the original tracking algorithm or the prediction step of the Kalman filter may lead to a rapid decline in the IOU between them. Although this indicator reflects well whether a tracking anomaly exists, it is not enough to determine whether the Siamese tracking algorithm has drifted or the Kalman filter has failed. Therefore, a second index, appearance similarity, is introduced to distinguish the two. The target features in the first m frames of the image sequence are extracted and compared with the regional features of the Kalman prediction and of the tracking result in the current frame, respectively. The procedure can be formulated as follows:

where z is the regional feature extracted from the bounding box, and represents the inner product operation. The feature similarity between the first m frame images and the Kalman prediction result is represented by . Accordingly, the feature similarity between the first m frame image and the Siamese tracking result can be represented by . indicates that the prediction area of the Kalman filter is similar to the appearance of the target. In contrast, indicates that the motion model is no longer applicable and needs to be reinitialized at the current position.
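The two indicators can be sketched as follows. The IOU function is standard. The similarity function is only one plausible reading of the description: it assumes that features are L2-normalized and that the inner products with the m stored features are averaged, neither of which is confirmed by the text. Function and variable names are hypothetical.

```python
import math

def iou(box_a, box_b):
    """IOU of two boxes given as (x1, y1, x2, y2) corners."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def normalize(v):
    """Scale a feature vector to unit length (assumed preprocessing)."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v] if norm > 0 else list(v)

def appearance_similarity(history_feats, candidate_feat):
    """Mean inner product between stored target features and one candidate."""
    cand = normalize(candidate_feat)
    sims = [sum(a * b for a, b in zip(normalize(f), cand)) for f in history_feats]
    return sum(sims) / len(sims)

# Features of the target in earlier frames, and of the two candidate regions.
history = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
s_kalman = appearance_similarity(history, [1.0, 0.05, 0.0])
s_siamese = appearance_similarity(history, [0.0, 1.0, 0.0])
overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))
```

In this toy case the Kalman-predicted region resembles the stored target features far more than the Siamese result does, which is the situation in which the tracker, rather than the motion model, would be judged to have drifted.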

The tracking process can be divided into three cases based on these two indicators (i.e., IOU and appearance similarity), as shown in Table 1. An IOU above the upper threshold indicates that both the Siamese tracking algorithm and the motion model are tracking the target consistently. In this case, the tracking results can be used as measurement data to update the Kalman filter. An IOU below the lower threshold indicates that either the Siamese tracking algorithm or the motion model is experiencing severe drift. In this event, further judgment is performed using the index S. If the Siamese algorithm is found to have drifted, the Kalman prediction results are used to extract a template and update the initial template. After updating, tracking is performed again to ensure effective tracking. An IOU between the two thresholds indicates that slight drift has occurred in either the Siamese tracking algorithm or the motion model. In this situation, the same template update strategy as in the severe-drift case is adopted. However, to maintain a high tracking speed, the re-tracking step need not be performed; instead, the updated results obtained using the Kalman filter can be directly used as the final estimation.
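The three-case logic can be summarized in a small decision function. This is a sketch under several assumptions: the threshold values 0.6 and 0.3 are those reported in Section 4.1, but their assignment to the upper and lower bounds, the rule that the Siamese tracker is judged to have drifted when the Kalman region has the higher appearance similarity, the use of the same check in the slight-drift case, and all function and label names are choices made here for illustration.

```python
def choose_recovery_action(iou_value, sim_kalman, sim_siamese,
                           upper_thr=0.6, lower_thr=0.3):
    """Map the two indicators to one of the recovery strategies.

    iou_value:   IOU between the Kalman prediction and the Siamese result
    sim_kalman:  appearance similarity of the Kalman-predicted region
    sim_siamese: appearance similarity of the Siamese tracking result
    """
    if iou_value >= upper_thr:
        # Both agree: feed the tracker output to the Kalman update step.
        return "update_kalman_with_tracker"

    # Assumed rule: the region that looks less like the target has failed.
    siamese_drifted = sim_kalman > sim_siamese
    if not siamese_drifted:
        # The motion model no longer applies: restart it at the current position.
        return "reinitialize_kalman"

    if iou_value < lower_thr:
        # Severe drift: refresh the template from the Kalman region and track again.
        return "update_template_and_retrack"
    # Slight drift: refresh the template but skip the second tracking pass.
    return "update_template_use_kalman_estimate"

actions = [
    choose_recovery_action(0.80, 0.9, 0.9),
    choose_recovery_action(0.10, 0.9, 0.2),
    choose_recovery_action(0.10, 0.2, 0.9),
    choose_recovery_action(0.45, 0.9, 0.2),
]
```

Keeping the decision in one pure function makes the thresholds easy to tune and the strategy easy to test independently of the tracker and the filter.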

Table 1. Drift monitoring and tracking recovery strategy.

In the template updating process, to retain more target appearance information, the method of linear weighting between the initial template and the current template is adopted. The process can be formulated as follows:
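A linear blend of this kind can be sketched as below. The weight name `lam` is hypothetical, and the sketch assumes that the weight multiplies the current template while its complement multiplies the initial template; the text does not state which of the two templates receives the smaller weight.

```python
import numpy as np

def update_template(initial_template, current_template, lam=0.1):
    """Blend two template feature maps: (1 - lam) * initial + lam * current."""
    return (1.0 - lam) * initial_template + lam * current_template

initial = np.zeros((3, 3))   # stand-in for the first-frame template features
current = np.ones((3, 3))    # stand-in for the template from the Kalman region
blended = update_template(initial, current)
```

With a small weight the blended template stays close to the first-frame appearance, which limits the damage if the region used for the update is itself slightly off target.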

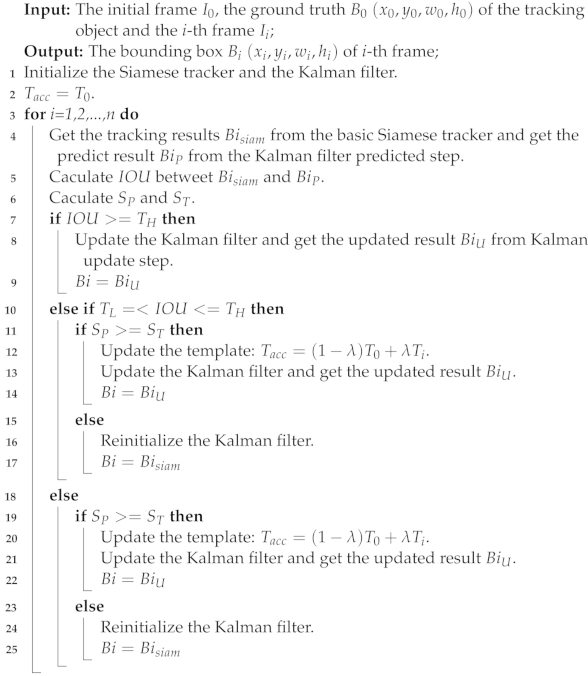

Algorithm 1 lists the complete procedure of the proposed motion-aware Siamese framework for unmanned aerial vehicle tracking.

Algorithm 1: The proposed motion-aware Siamese (MaSiam) framework algorithm

4. Experiments

UAV123 [23], UAV20L [23], and UAVDT [24] are typical representative datasets with diverse attributes and rich scenes in the field of UAV tracking. Some complex scenes, such as camera rotation (CR), out-of-view (OV), and full occlusion (FO), bring difficulties and challenges to fast and accurate UAV tracking. Therefore, they are widely adopted to verify the universality and effectiveness of the tracking algorithms [3,4,7,20,22,25,26,27]. To test the effectiveness of the proposed method, we performed quantitative and qualitative experiments on three typical UAV datasets: UAV123, UAV20L, and UAVDT. The quantitative experiment can be divided into overall performance evaluation and sub-attribute performance evaluation.

4.1. Experimental Platform and Parameters

During the training of the Siamese algorithm, samples with an IOU above 0.6 are defined as positive samples, and samples with an IOU below 0.3 are defined as negative samples. Therefore, we set the upper and lower IOU thresholds for drift detection to 0.6 and 0.3, respectively, and m to 5. In addition, the weighting hyperparameter in the template update process was set to 0.1. To facilitate the comparison of the experimental results, the same training datasets, VID [28] and GOT10K [29], were used for SiamRPN and SiamCAR. The stochastic gradient descent (SGD) optimization algorithm was used for 50 epochs of training, and the best result was selected as the benchmark algorithm. The training and testing hardware platform used in this experiment consisted of an Intel(R) Core(TM) i7-10750H CPU @ 2.60 GHz and an NVIDIA GeForce RTX 2060 GPU.

4.2. Quantitative Experiment

4.2.1. Experimental Analysis Using the UAV123 Dataset

The UAV123 dataset is an evaluation dataset for single-target tracking in the UAV’s field of view. It contains 123 video sequences, and the frame rate of each video sequence is 30 fps or higher. The sequences are annotated with 12 challenging attributes: aspect ratio change (ARC), background clutter (BC), camera motion (CM), fast motion (FM), full occlusion (FO), illumination variation (IV), low resolution (LR), out of view (OV), partial occlusion (PO), scale variation (SV), similar object (SO), and viewpoint change (VC).

- (1)

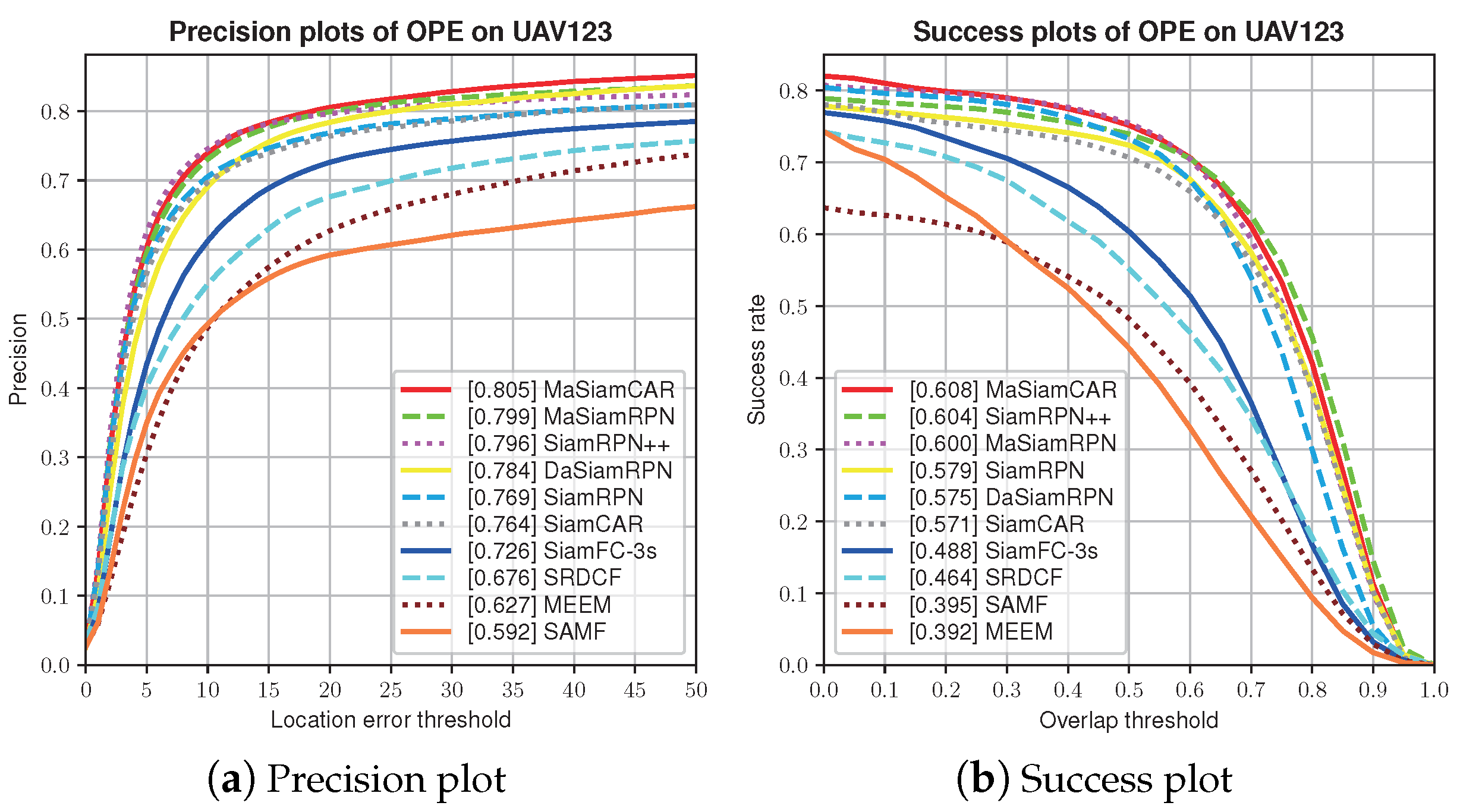

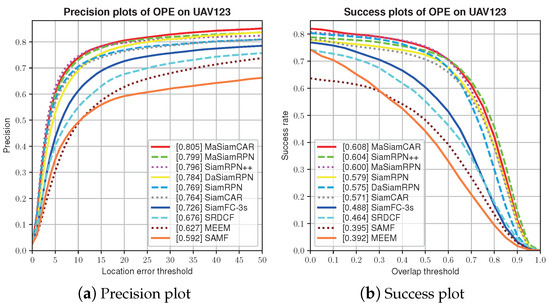

- Overall evaluation

The overall evaluation results of the proposed MaSiamRPN algorithm on the UAV123 dataset are shown in Figure 4. The precision score and success score are commonly used in the tracking field to quantify the performance of an algorithm. The precision in Figure 4a represents the percentage of video frames in which the distance between the center of the predicted bounding box and that of the ground truth is less than a given threshold. Different distance thresholds are represented on the horizontal axis. A precision value is obtained for each threshold, which yields a curve. The value listed in front of each algorithm is the precision rate, that is, the precision when the threshold is set to 20 pixels. The overlap in Figure 4b refers to the overlap rate between the bounding box (marked as A) obtained by the tracking algorithm and the box (marked as B) given by the ground truth. It is defined as $|A \cap B| / |A \cup B|$, where $|\cdot|$ represents the number of pixels in the region. When the overlap of a frame exceeds the threshold, the frame is considered successful. The success rate is defined as the percentage of successful frames among all frames. Because the overlap threshold ranges from 0 to 1, a success curve can be drawn. Using the success rate at a single threshold (e.g., 0.8) for tracker evaluation may not be fair or representative [30]. Therefore, the area under the curve (AUC) of each success plot is used to rank the tracking algorithms.
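The two scores can be computed from per-frame center errors and overlaps as in the sketch below. The function names are hypothetical, and approximating the AUC by averaging the success rate over 21 evenly spaced thresholds is a common convention assumed here, not a detail given in the text.

```python
def precision_at(center_errors, threshold=20.0):
    """Fraction of frames whose center error (in pixels) is below the threshold."""
    return sum(e < threshold for e in center_errors) / len(center_errors)

def success_rate(overlaps, threshold):
    """Fraction of frames whose overlap exceeds the threshold."""
    return sum(o > threshold for o in overlaps) / len(overlaps)

def success_auc(overlaps, num_thresholds=21):
    """Approximate the area under the success curve on [0, 1]."""
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    rates = [success_rate(overlaps, t) for t in thresholds]
    return sum(rates) / len(rates)

# Per-frame results for a four-frame toy sequence.
center_errors = [5.0, 12.0, 30.0, 18.0]
overlaps = [0.9, 0.6, 0.1, 0.7]

precision = precision_at(center_errors)
success_half = success_rate(overlaps, 0.5)
auc = success_auc(overlaps)
```

The single-threshold success rate treats an overlap of 0.6 and one of 0.9 identically, whereas the AUC rewards the tighter box, which is why it is preferred for ranking.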

Figure 4. Overall evaluation results of the UAV123 dataset.

The precision and success rate of the proposed MaSiamRPN algorithm were 0.799 and 0.600, an increase of 3.9% and 4.3% compared with the baseline tracker (SiamRPN). Thus, the performance of the proposed algorithm is close to that of SiamRPN++, whose backbone is ResNet50. The precision and success rate of the MaSiamCAR algorithm were 0.764 and 0.571, an increase of 5.4% and 6.5% compared with the baseline tracker (SiamCAR), surpassing the SiamRPN++ algorithm. Thus, the addition of the Kalman filter not only improves the prediction accuracy of the target position but also contributes to the scale estimation of the target.

- (2)

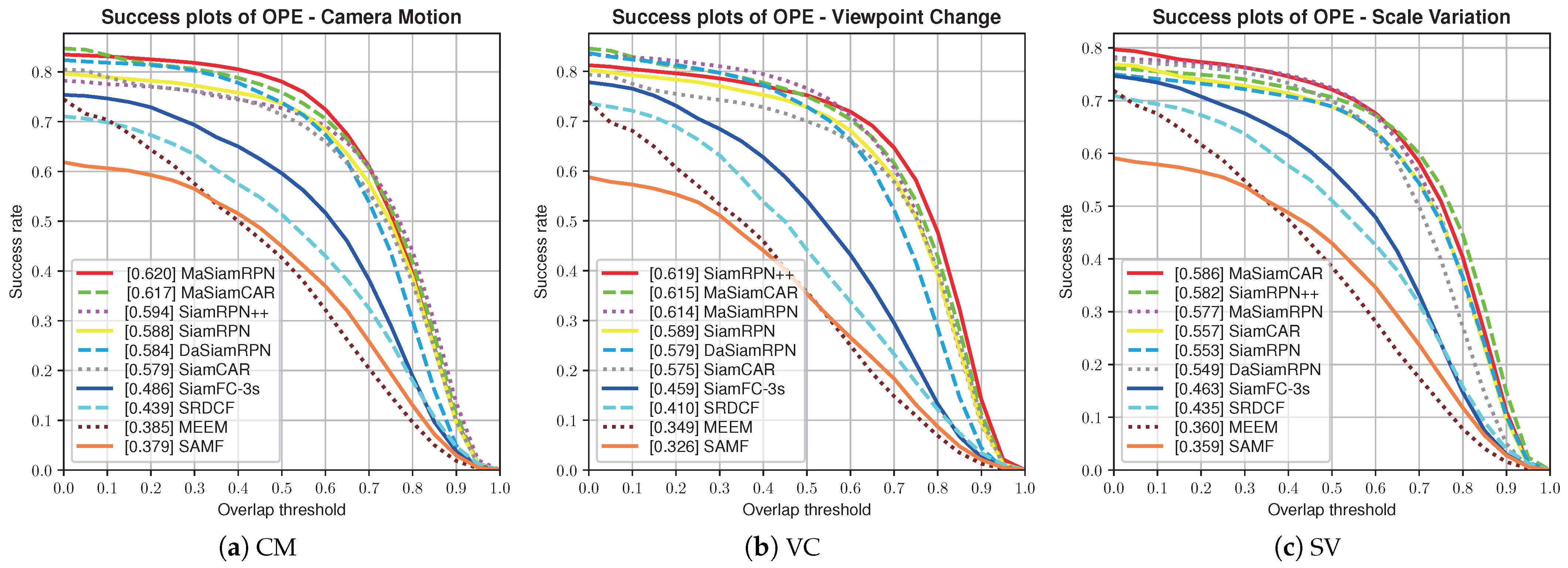

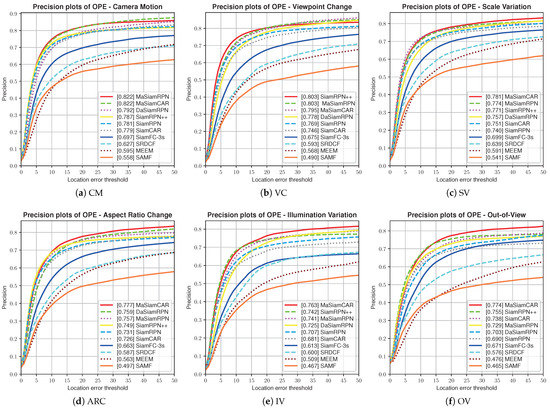

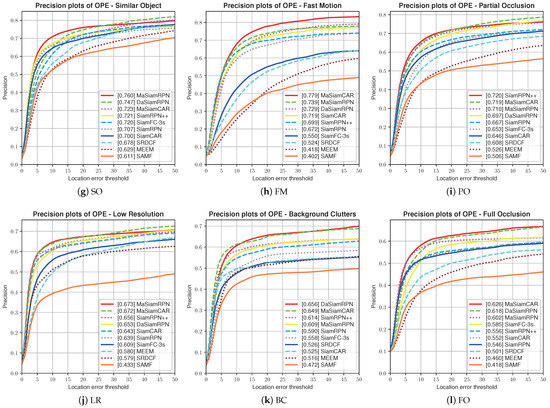

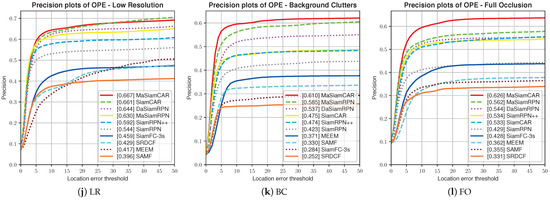

- Attribute evaluation

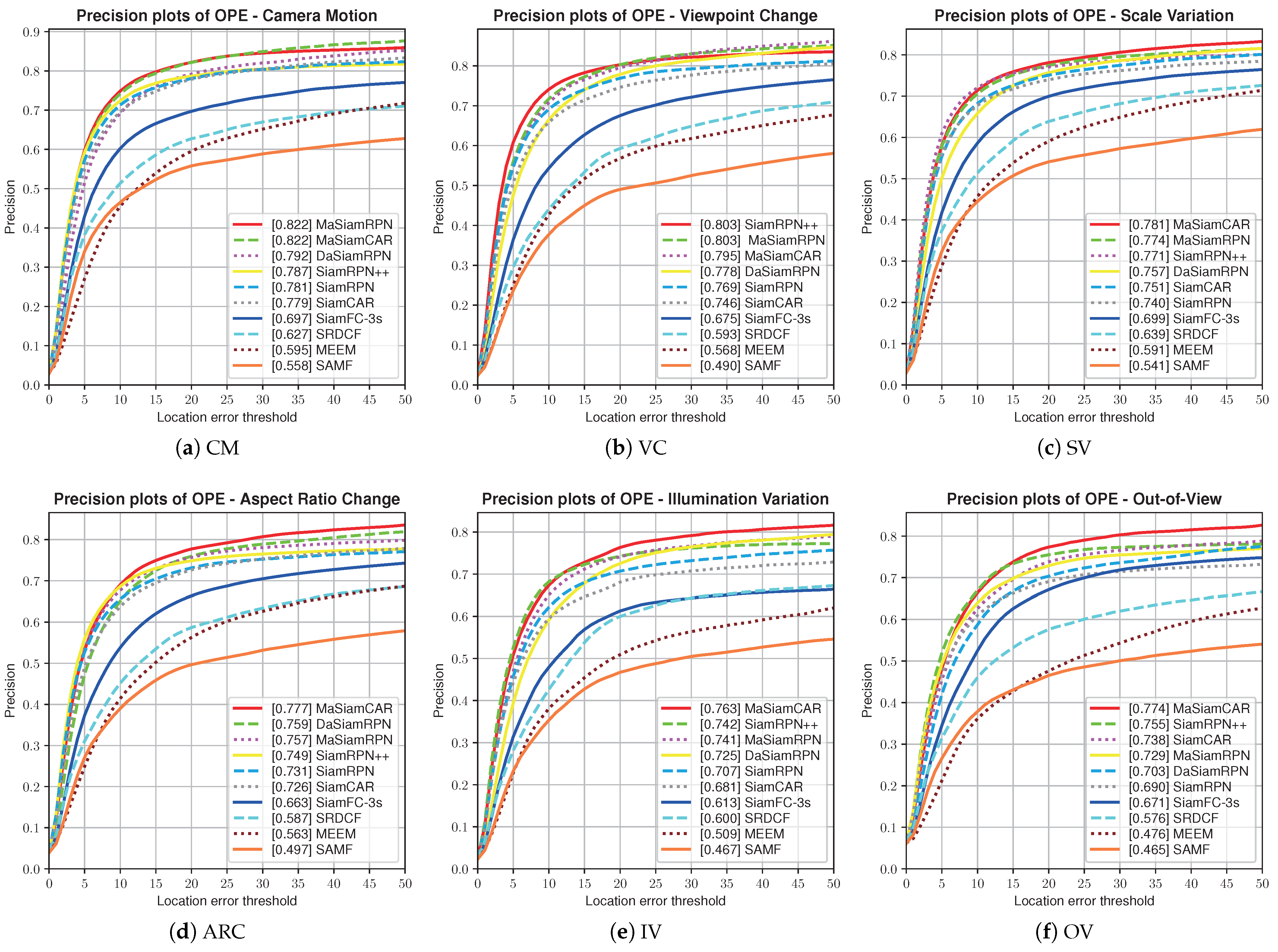

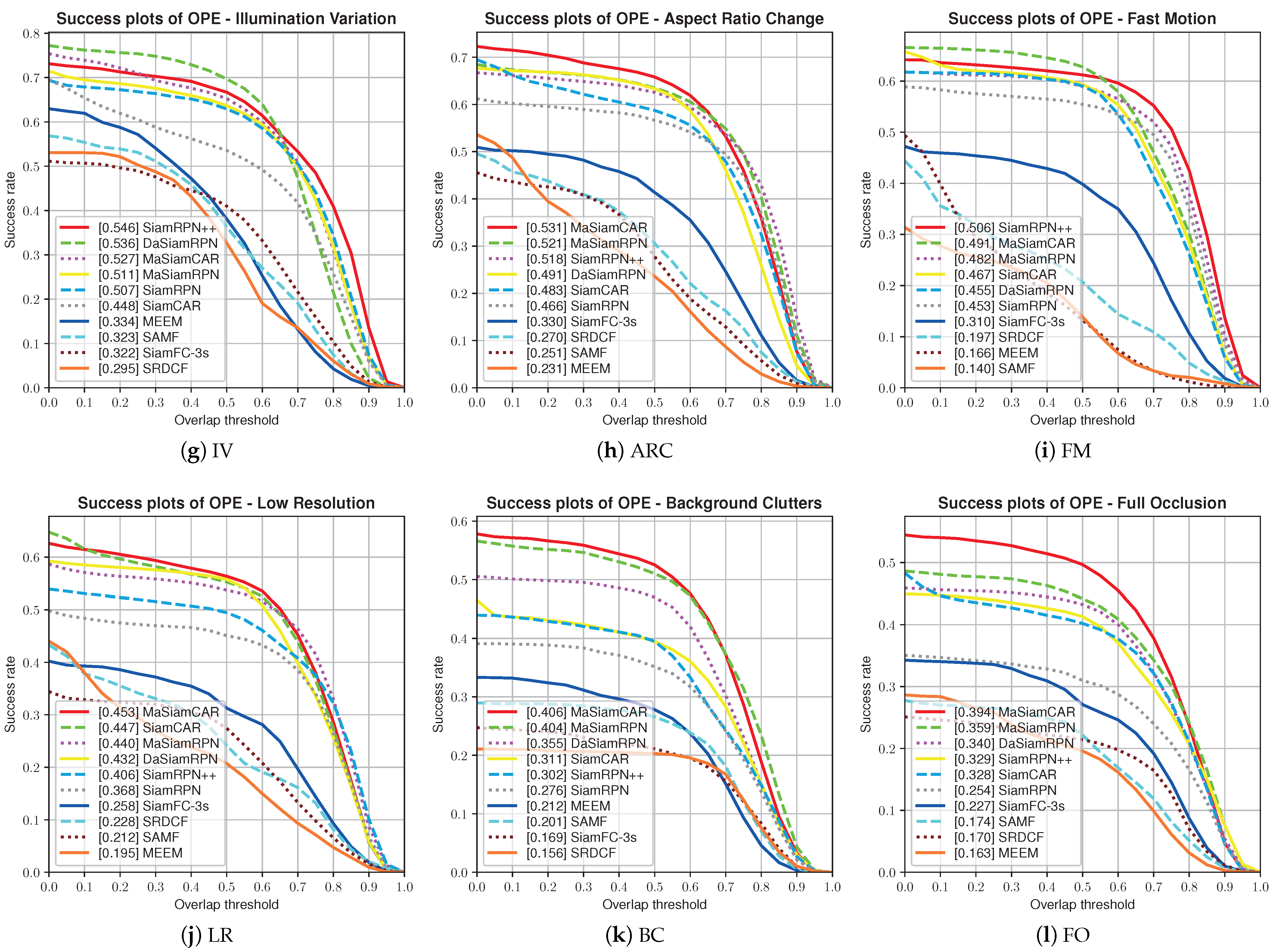

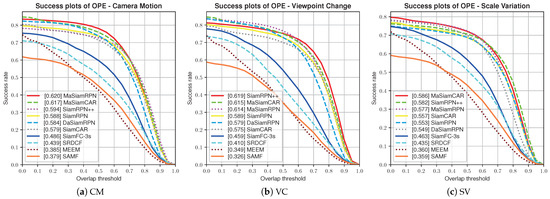

The UAV123 dataset contains 12 different attributes. The evaluation results in terms of these 12 attributes are shown in Figure 5 and Figure 6. The performance of MaSiamRPN in terms of all 12 attributes was better than that of the benchmark algorithm (SiamRPN). The success rates were CM (0.62), VC (0.614), SV (0.577), ARC (0.557), IV (0.546), OV (0.544), SO (0.543), FM (0.539), PO (0.512), LR (0.441), BC (0.418), and FO (0.372). The precision rates were CM (0.822), VC (0.803), SV (0.774), ARC (0.757), IV (0.741), OV (0.729), SO (0.76), FM (0.739), PO (0.71), LR (0.673), BC (0.609), and FO (0.602).

Figure 5. Precision comparison of 12 attributes on the UAV123 dataset.

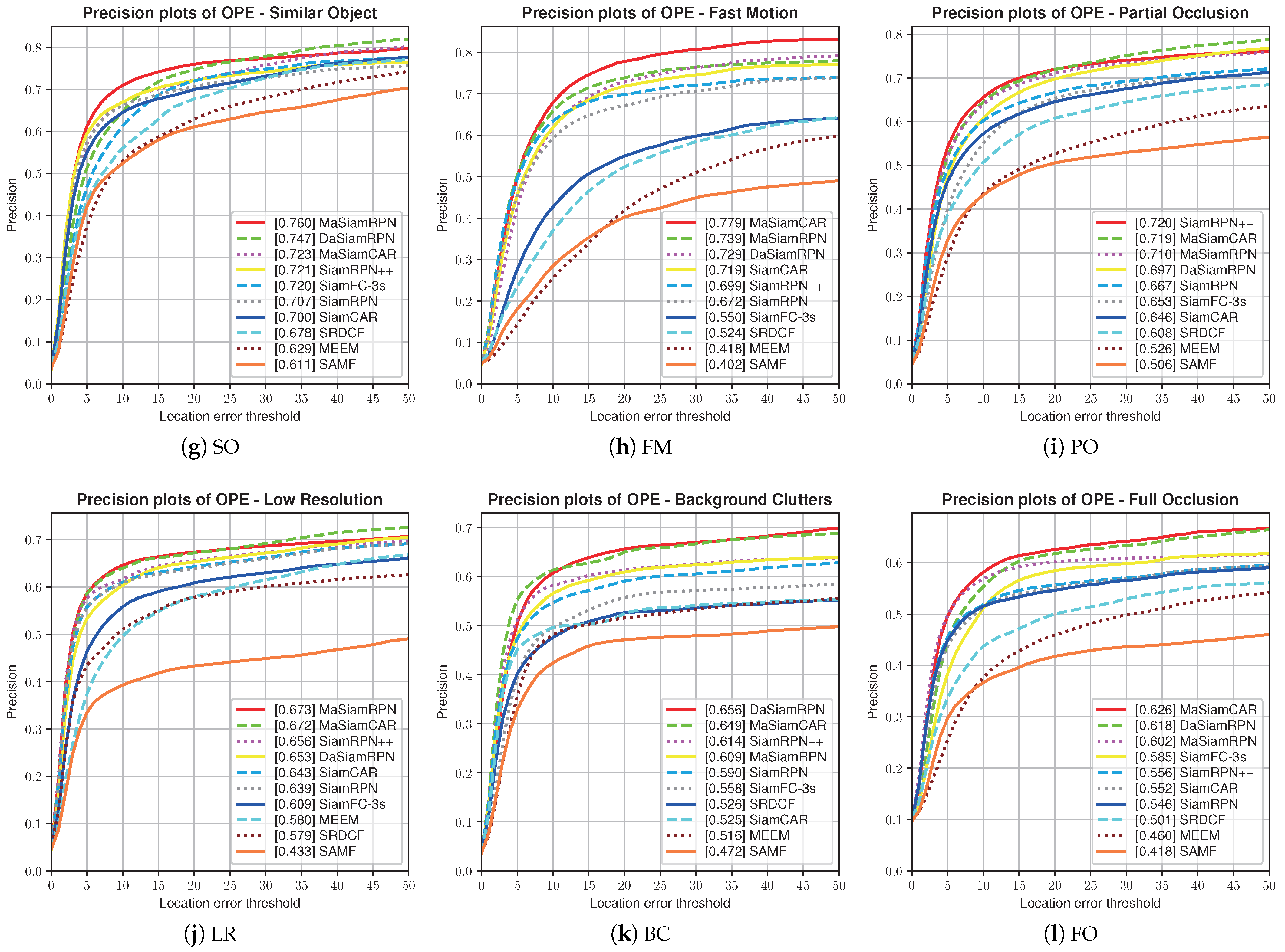

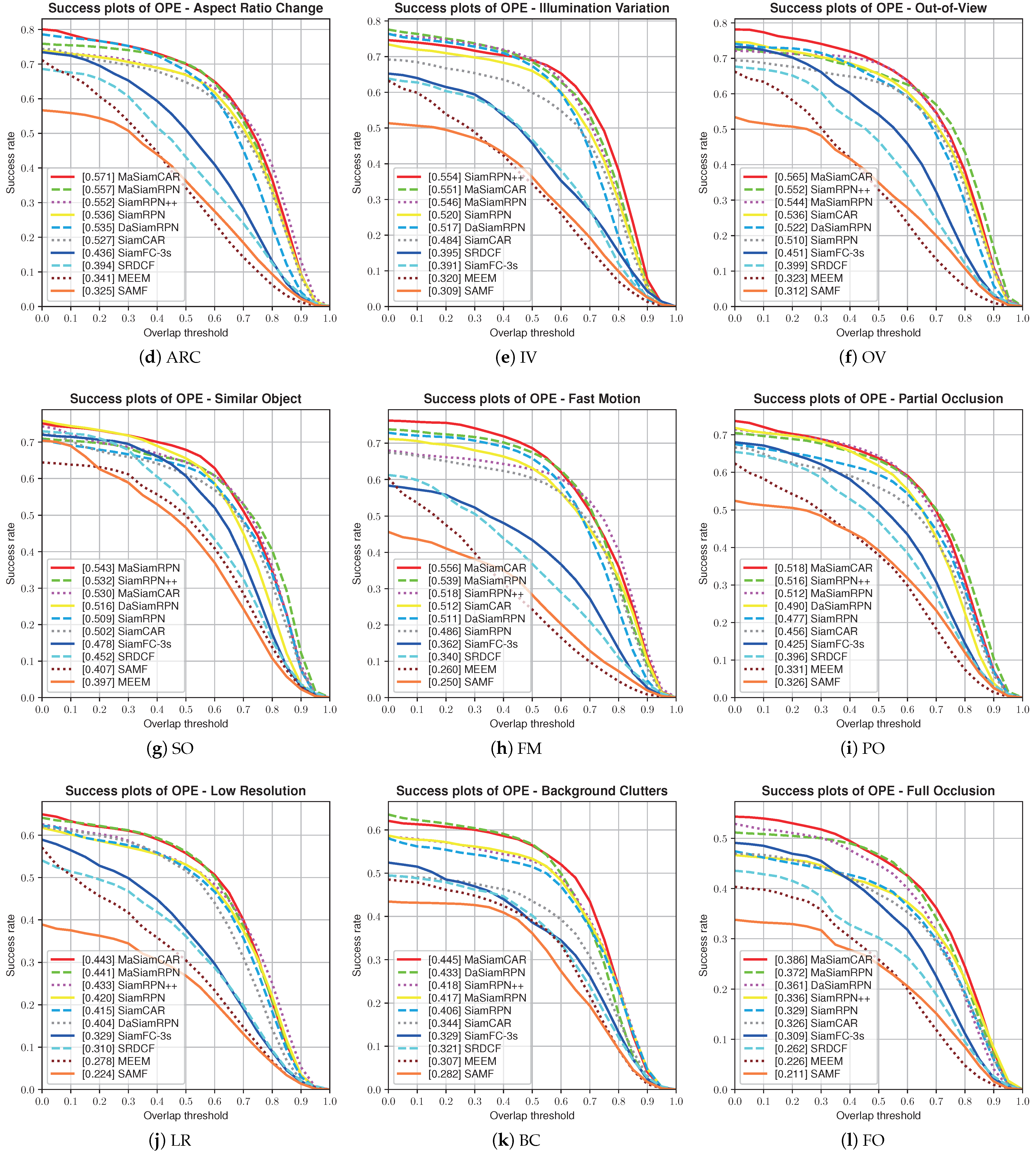

Figure 6. Success comparison of 12 attributes on the UAV123 dataset.

Among them, the improvements in FO, FM, SO, and PO were more obvious. The success rates under these four attributes increased by 13.1%, 10.9%, 6.7%, and 7.3%, respectively, and the precision rates increased by 10.3%, 10%, 7.5%, and 6.4%, respectively. For MaSiamCAR, the success rates were CM (0.617), VC (0.615), SV (0.586), ARC (0.571), IV (0.551), OV (0.565), SO (0.53), FM (0.556), PO (0.518), LR (0.443), BC (0.445), and FO (0.386), and the precision rates were CM (0.822), VC (0.795), SV (0.781), ARC (0.777), IV (0.763), OV (0.774), SO (0.723), FM (0.779), PO (0.719), LR (0.672), BC (0.649), and FO (0.626).

In addition, compared with the SiamRPN++ algorithm, whose backbone is ResNet50, the proposed MaSiamCAR exhibited superior performance in terms of three attributes, namely FO, FM, and SO, yielding an increased precision rate of 8.3%, 5.7%, and 5.4%, respectively. Thus, the introduction of the Kalman filter improves the adaptability of the algorithm to occlusion and interference from similar objects.

4.2.2. Experimental Analysis Using the UAV20L Dataset

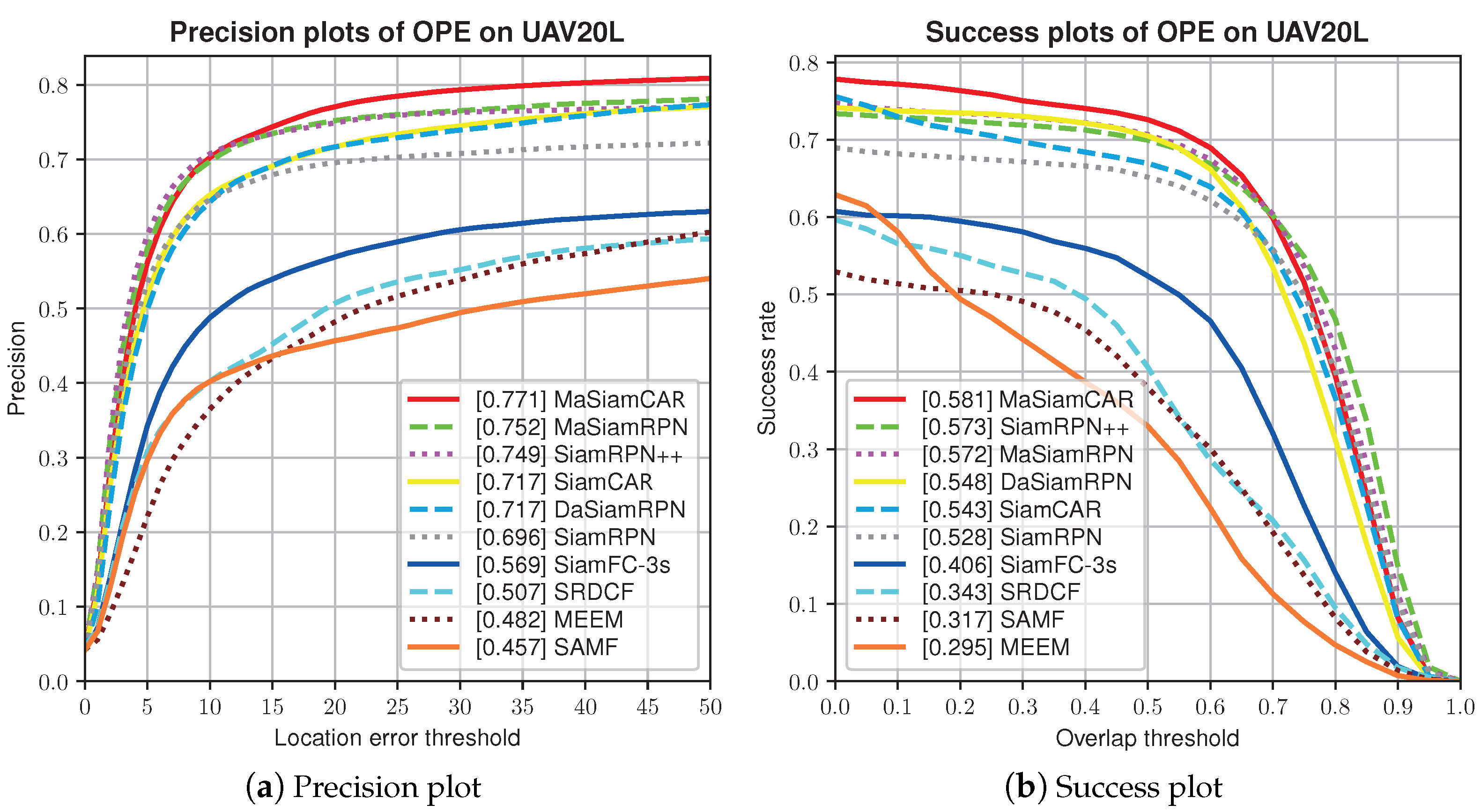

The UAV20L dataset contains 20 long-term video sequences with a frame rate of more than 30 fps. It was used to evaluate the long-term tracking performance of the proposed algorithm. The complex attributes contained in the video sequence and the evaluation methods employed are consistent with those for the UAV123 dataset.

- (1) Overall evaluation

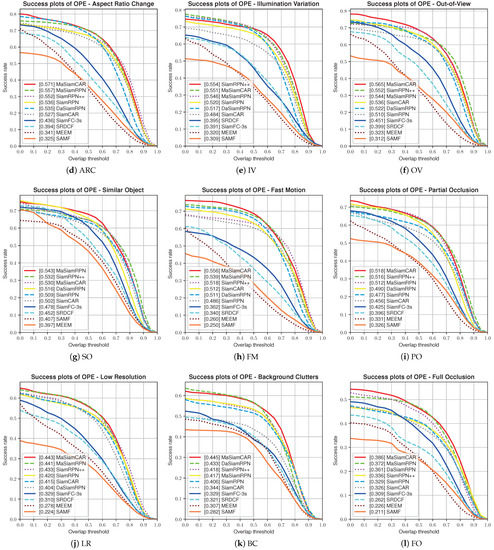

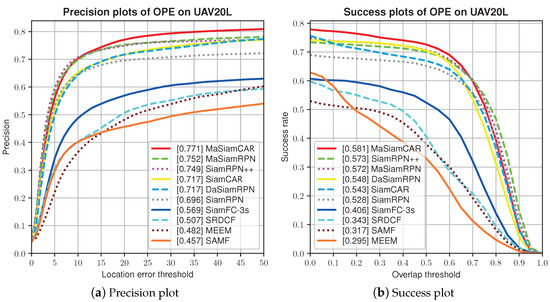

The overall evaluation results of the proposed MaSiamRPN algorithm on the UAV20L dataset are shown in Figure 7. The precision and success rate were 0.752 and 0.572, an increase of 8.0% and 8.3% compared with the SiamRPN algorithm. Thus, the performance of the proposed algorithm is similar to that of the more advanced SiamRPN++ algorithm. For the MaSiamCAR algorithm, the precision and success rate were 0.771 and 0.581, the highest among the algorithms included for the comparison analysis. Moreover, compared with the benchmark algorithm (SiamCAR), the precision and success rate were improved by 7.5% and 7.0%, respectively. Therefore, the addition of the Kalman filter can greatly improve the long-term tracking performance of the algorithm.

Figure 7. Overall evaluation results on the UAV20L dataset.
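The precision and success rates reported for these benchmarks are computed per sequence from the predicted and ground-truth boxes. The sketch below assumes the common OTB-style protocol: precision is the fraction of frames whose center location error is within 20 pixels, and success is the area under the overlap-threshold curve. The function names, the `(x, y, w, h)` box layout, and the 21 evenly spaced thresholds are illustrative assumptions, not details taken from this paper.

```python
import numpy as np


def iou(pred, gt):
    """Per-frame intersection over union for boxes stored as (x, y, w, h) rows."""
    a = np.asarray(pred, dtype=float)
    b = np.asarray(gt, dtype=float)
    x1 = np.maximum(a[:, 0], b[:, 0])
    y1 = np.maximum(a[:, 1], b[:, 1])
    x2 = np.minimum(a[:, 0] + a[:, 2], b[:, 0] + b[:, 2])
    y2 = np.minimum(a[:, 1] + a[:, 3], b[:, 1] + b[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = a[:, 2] * a[:, 3] + b[:, 2] * b[:, 3] - inter
    safe_union = np.where(union > 0, union, 1.0)  # avoid division by zero
    return np.where(union > 0, inter / safe_union, 0.0)


def center_error(pred, gt):
    """Per-frame Euclidean distance between box centers, in pixels."""
    a = np.asarray(pred, dtype=float)
    b = np.asarray(gt, dtype=float)
    return np.linalg.norm((a[:, :2] + a[:, 2:] / 2) - (b[:, :2] + b[:, 2:] / 2), axis=1)


def precision_score(pred, gt, pixel_threshold=20.0):
    """Fraction of frames whose center error is within the pixel threshold (assumed 20 px)."""
    return float((center_error(pred, gt) <= pixel_threshold).mean())


def success_score(pred, gt, n_thresholds=21):
    """Area under the success curve: mean success rate over evenly spaced IoU thresholds."""
    overlaps = iou(pred, gt)
    thresholds = np.linspace(0.0, 1.0, n_thresholds)
    return float(np.mean([(overlaps > t).mean() for t in thresholds]))


if __name__ == "__main__":
    # Toy two-frame sequence: one perfect prediction and one complete miss.
    gt = [[0, 0, 10, 10], [0, 0, 10, 10]]
    pred = [[0, 0, 10, 10], [100, 100, 10, 10]]
    print(precision_score(pred, gt), success_score(pred, gt))
```

A dataset-level score is then typically the average of the per-sequence curves, which is how a single precision value and a single success value are obtained for each tracker.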

- (2)

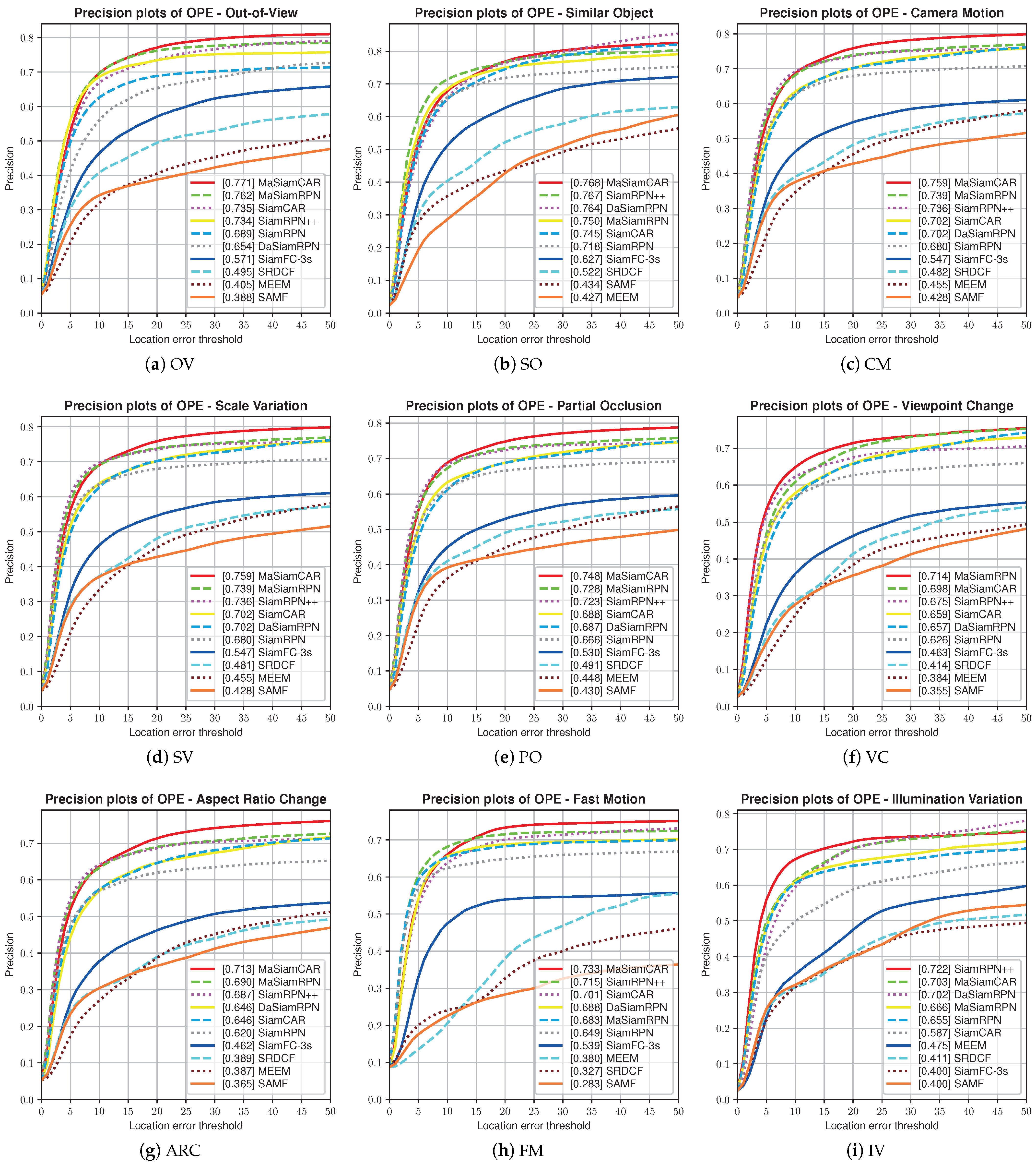

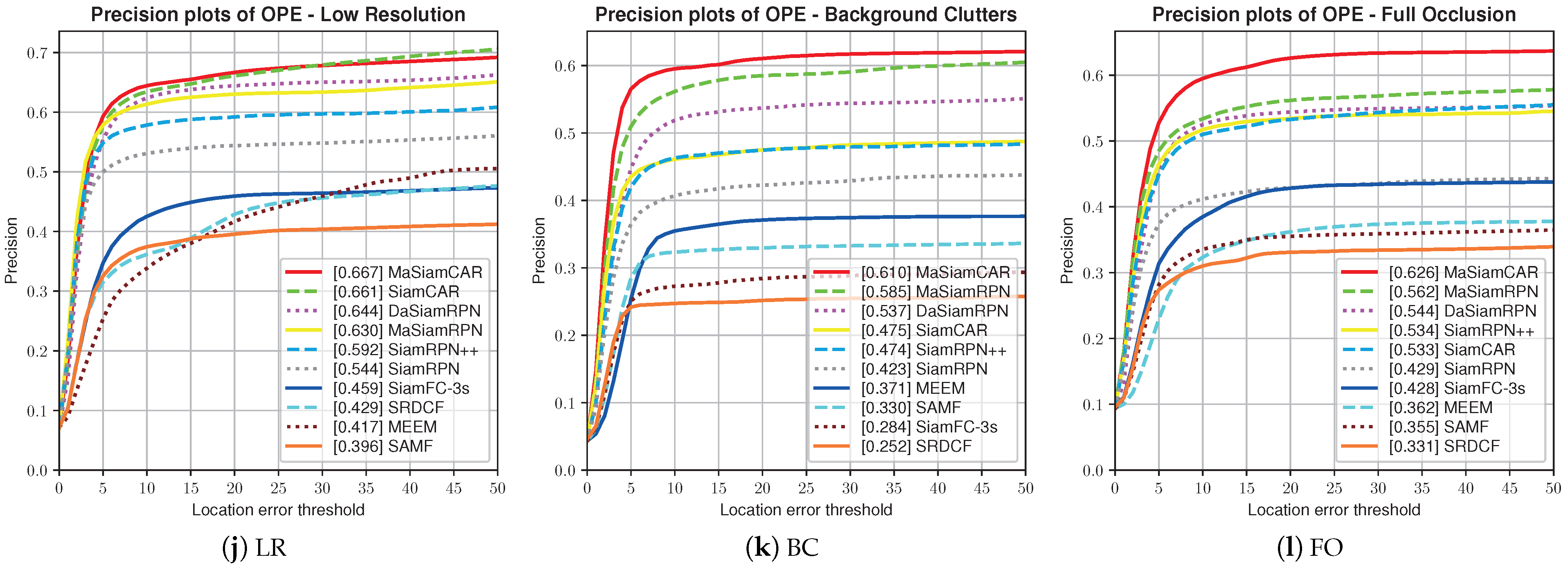

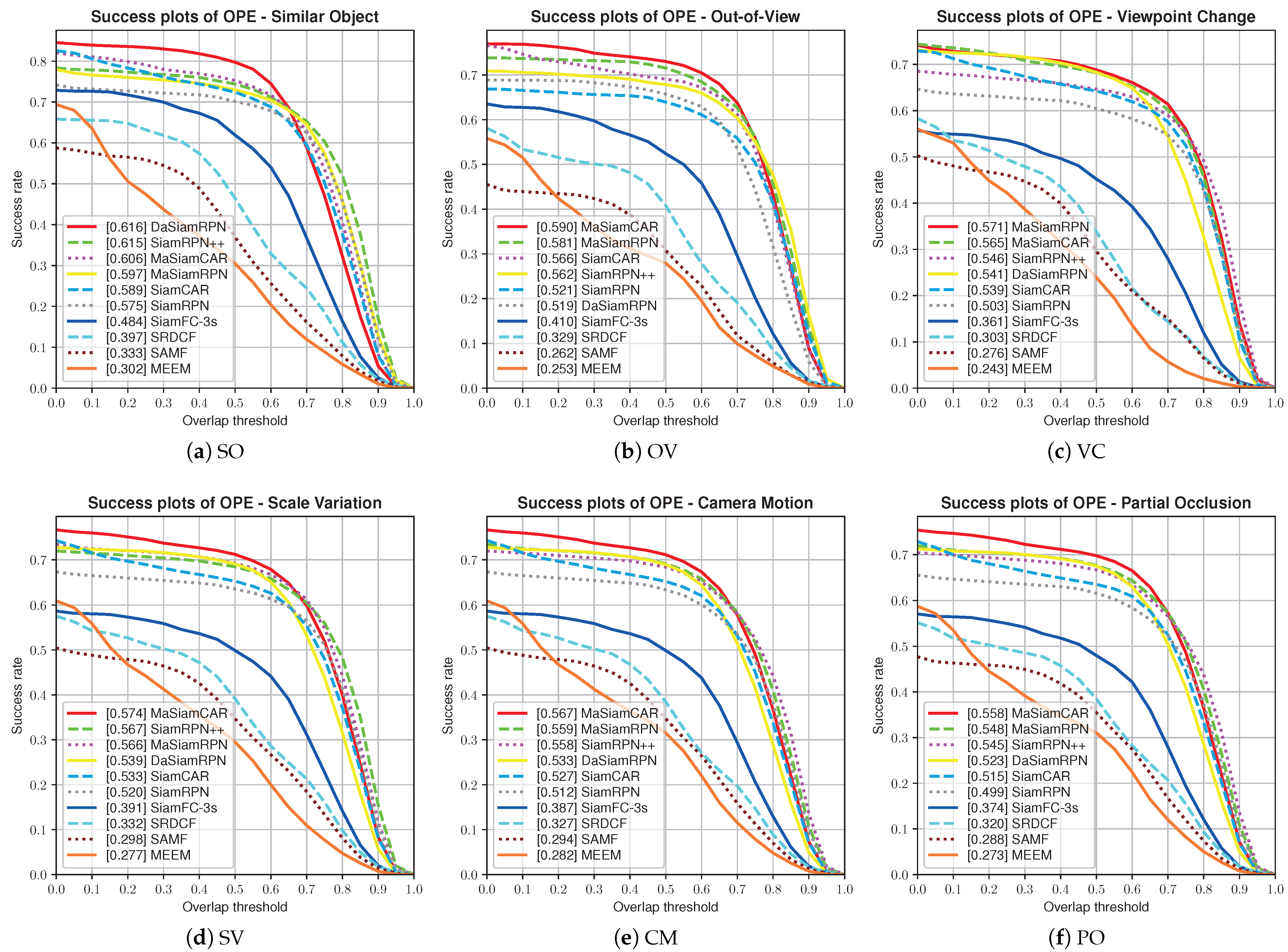

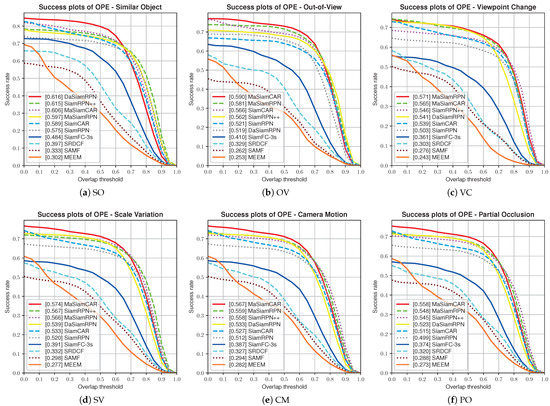

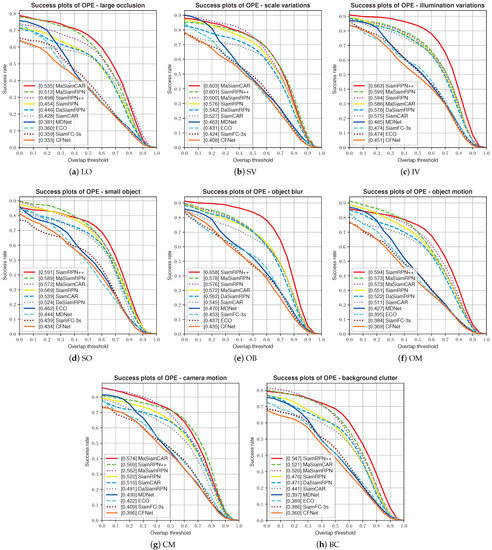

- Attribute evaluation

To further verify the performance gain realized by the proposed algorithm, we conducted attribute evaluation experiments on the UAV20L dataset. As shown in Figure 8 and Figure 9, the proposed MaSiamRPN algorithm improved over the benchmark algorithm (SiamRPN) to varying degrees on all 12 attributes. The precision scores were OV (0.762), SO (0.75), CM (0.739), SV (0.739), PO (0.728), VC (0.714), ARC (0.69), FM (0.688), IV (0.666), LR (0.63), BC (0.585), and FO (0.562), and the success scores were SO (0.597), OV (0.581), VC (0.571), SV (0.566), CM (0.559), PO (0.548), IV (0.511), ARC (0.491), FM (0.482), LR (0.44), BC (0.404), and FO (0.359). Compared with the benchmark algorithm, the performance in terms of the BC and FO attributes improved by 0.162 and 0.128, respectively, and the precision scores for the LR, VC, ARC, and OV attributes increased by more than 10%. Furthermore, the performance of MaSiamRPN in terms of the BC, LR, VC, and FO attributes surpassed that of SiamRPN++ by more than 5%.

Figure 8. Precision comparison of 12 attributes on the UAV20L dataset.

Figure 9. Success comparison of 12 attributes on the UAV20L dataset.

Compared with the benchmark algorithm (SiamCAR), the performance of MaSiamCAR improved to varying degrees on all 12 attributes. The precision scores were OV (0.771), SO (0.768), CM (0.759), SV (0.759), PO (0.748), FM (0.733), ARC (0.713), IV (0.703), VC (0.698), LR (0.667), FO (0.626), and BC (0.61), and the success scores were SO (0.606), OV (0.59), SV (0.574), CM (0.567), VC (0.565), PO (0.558), ARC (0.531), IV (0.527), FM (0.491), LR (0.453), BC (0.406), and FO (0.394). In particular, the precision and success scores under the BC, FO, IV, and ARC attributes improved by more than 10% and 9%, respectively.
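Attribute evaluation of this kind restricts scoring to the sequences annotated with a given attribute and averages over them. The following is a minimal sketch of that grouping step; the function name, the sequence names, and the scores are hypothetical placeholders, not values from the datasets used here.

```python
from collections import defaultdict


def attribute_scores(per_sequence_score, sequence_attributes):
    """Average a per-sequence score over all sequences tagged with each attribute.

    per_sequence_score: dict mapping sequence name -> score (e.g., success AUC).
    sequence_attributes: dict mapping sequence name -> iterable of attribute tags.
    """
    buckets = defaultdict(list)
    for name, score in per_sequence_score.items():
        # A sequence contributes to every attribute it is annotated with.
        for attr in sequence_attributes.get(name, ()):
            buckets[attr].append(score)
    return {attr: sum(values) / len(values) for attr, values in buckets.items()}


if __name__ == "__main__":
    # Hypothetical scores and annotations, for illustration only.
    scores = {"seq_a": 0.6, "seq_b": 0.4, "seq_c": 0.8}
    attrs = {"seq_a": ["FO", "SV"], "seq_b": ["FO"], "seq_c": ["SV"]}
    print(attribute_scores(scores, attrs))
```

Because one sequence usually carries several attribute tags, the per-attribute averages overlap and do not sum to the overall score.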

4.2.3. Experimental Analysis Using the UAVDT Dataset

The UAVDT dataset contains 50 UAV aerial video sequences with a frame rate of 30 fps or higher. This dataset was used for evaluating single-target tracking and detection. The UAVDT dataset includes ten attributes: background clutter (BC), small object (SO), scale variation (SV), object motion (OM), object blur (OB), long-term tracking (LT), large occlusion (LO), illumination variation (IV), camera rotation (CR), and camera motion (CM).

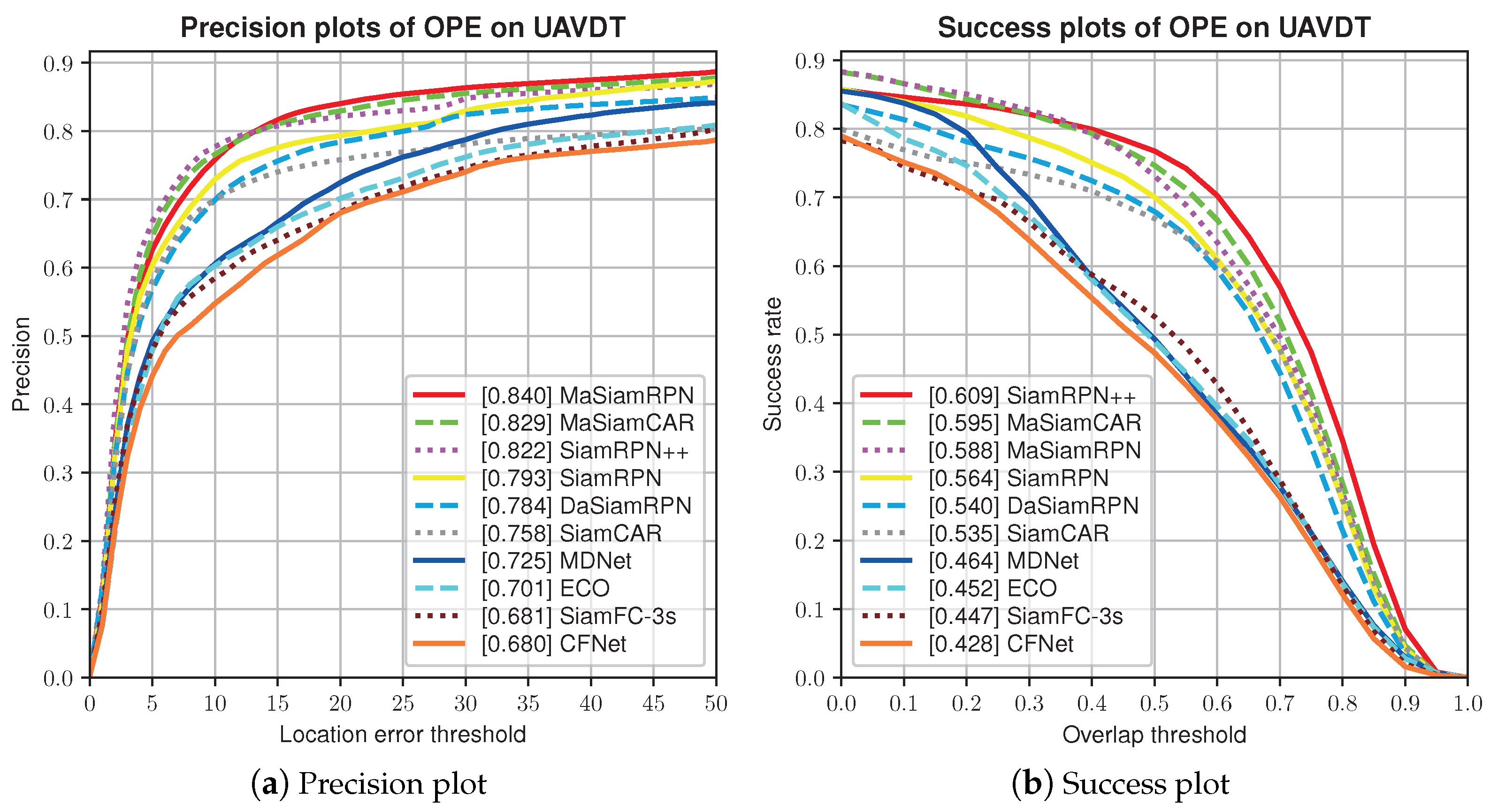

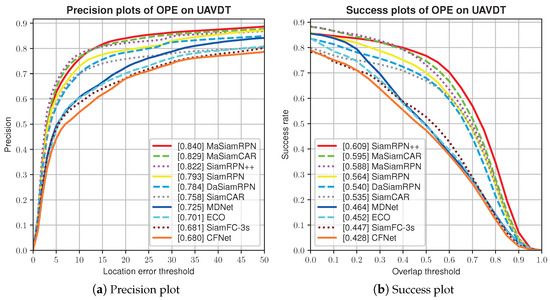

- (1) Overall evaluation

The overall evaluation results of the proposed MaSiamRPN algorithm on the UAVDT dataset are shown in Figure 10. Its precision and success scores were 0.840 and 0.588, increases of 5.9% and 4.3%, respectively, over the SiamRPN algorithm. Moreover, its precision score was 2.2% higher than that of the more advanced SiamRPN++ algorithm. Because SiamRPN++ uses a deeper network (ResNet50) and a multilevel RPN, the success score of MaSiamRPN is lower than that of SiamRPN++. For MaSiamCAR, the precision and success scores were 0.829 and 0.595, increases of 9.4% and 11.2%, respectively, over the benchmark algorithm (SiamCAR). In addition, its precision score was higher than that of SiamRPN++. However, its success score was slightly lower than that of SiamRPN++ because its shallow network limits its scale estimation capability.

Figure 10. Overall evaluation results on the UAVDT dataset.

- (2)

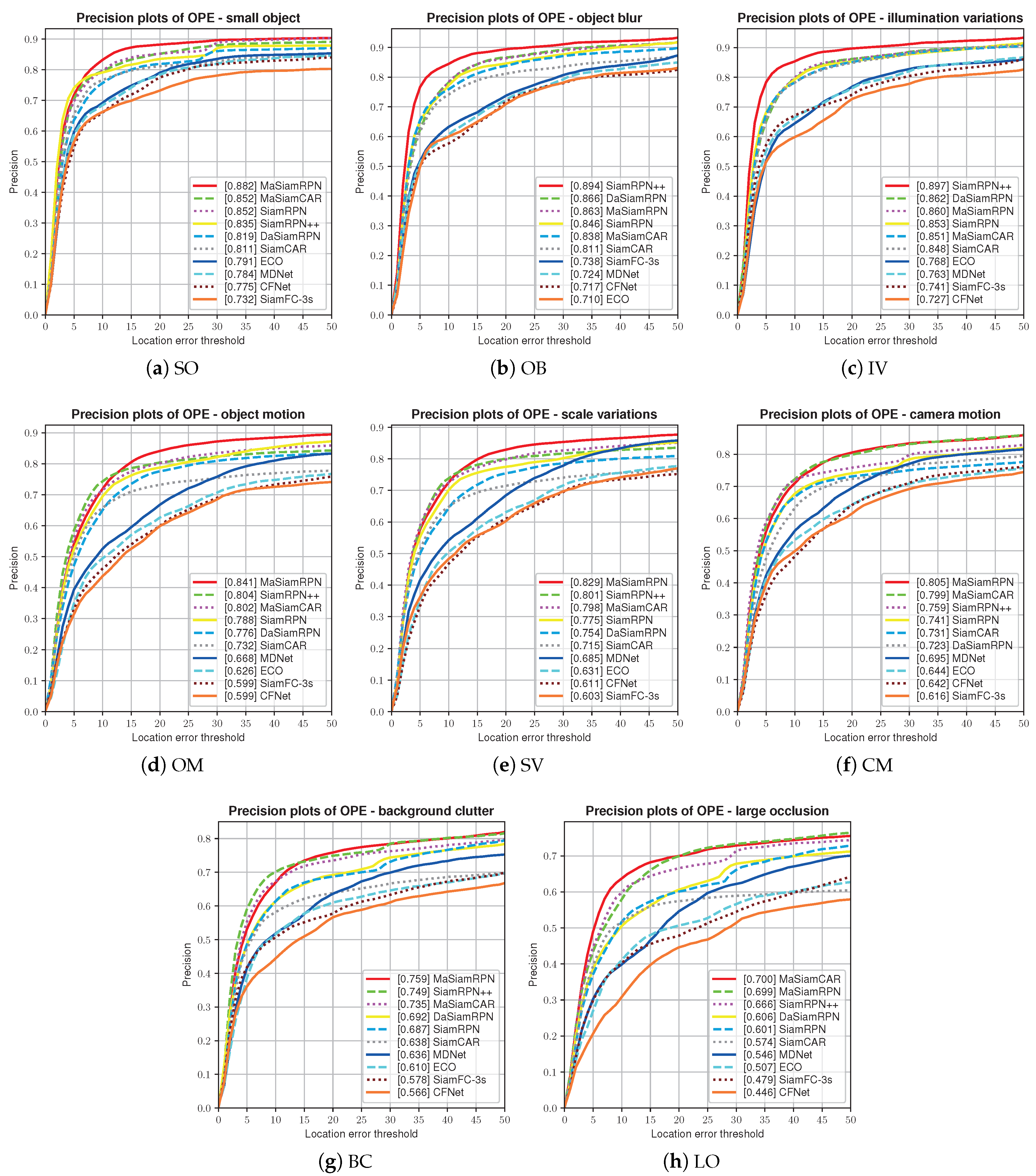

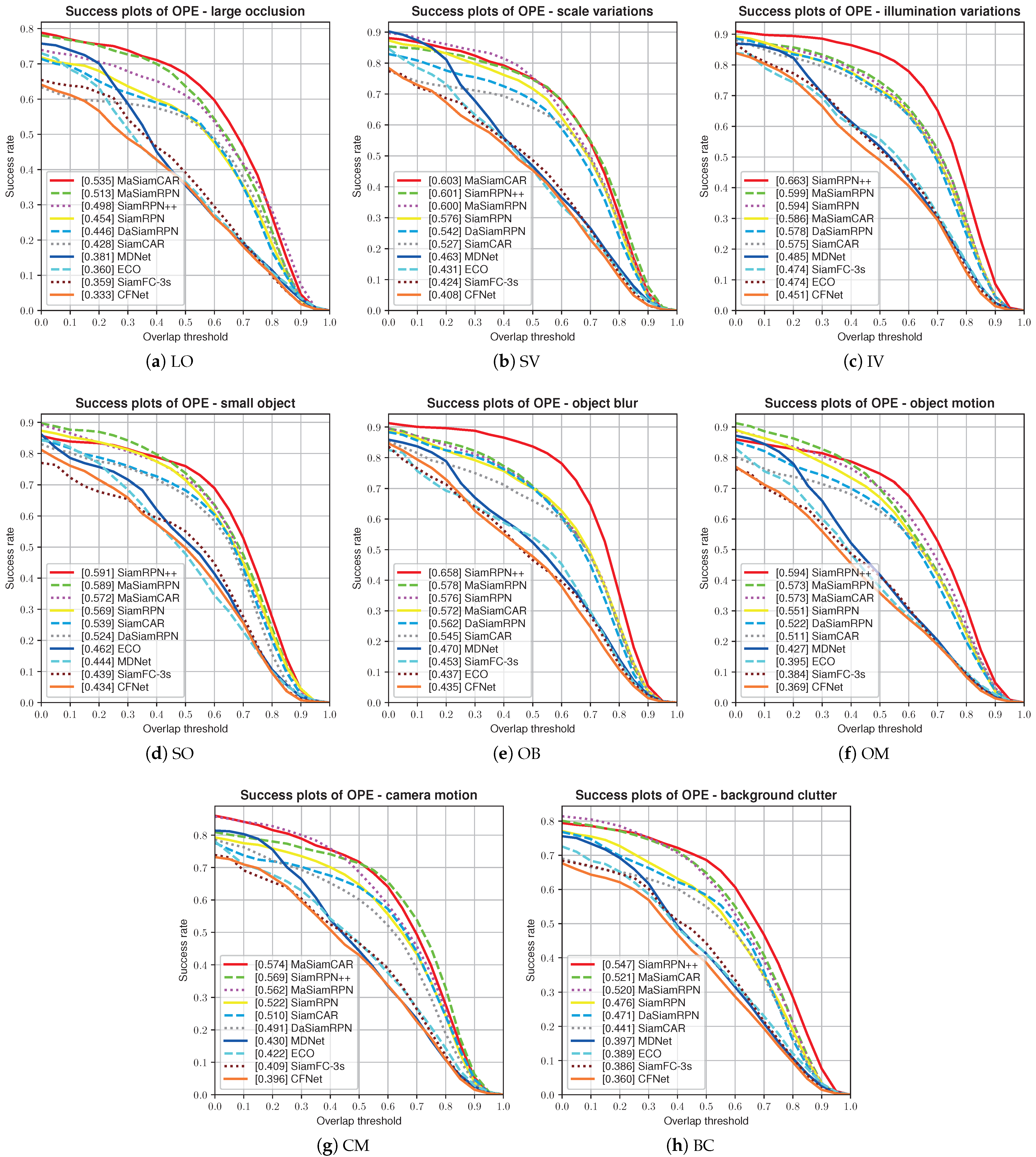

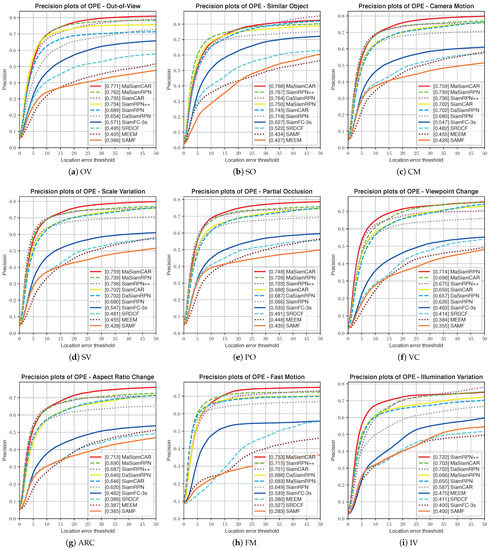

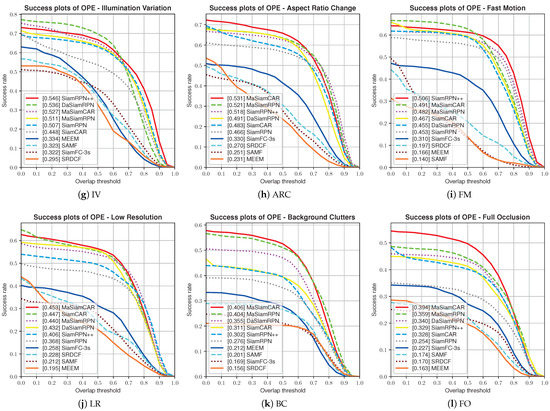

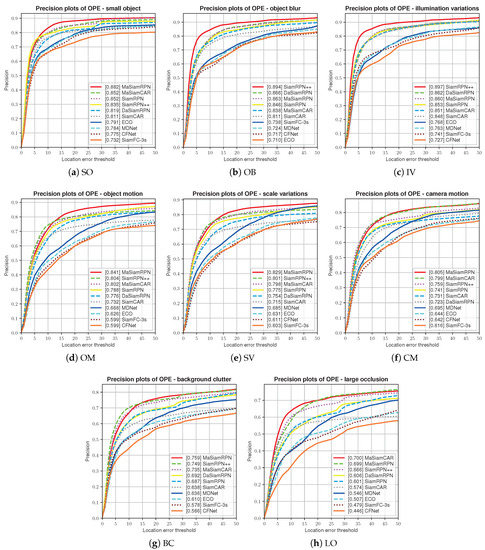

- Attribute evaluation

As shown in Figure 11 and Figure 12, the proposed MaSiamRPN algorithm improved over the benchmark algorithm (SiamRPN) to varying degrees on eight attributes. The precision scores were SO (0.882), OB (0.863), IV (0.86), OM (0.841), SV (0.829), CM (0.805), BC (0.759), and LO (0.699), and the success scores were LO (0.687), SV (0.6), IV (0.599), SO (0.589), OB (0.578), OM (0.573), CM (0.562), and BC (0.52). Among them, the precision scores under the LO, BC, and CM attributes increased by 16.3%, 10.5%, and 8.6%, and the success scores increased by 13%, 9.2%, and 7.7%, respectively. Compared with SiamRPN++, the proposed MaSiamRPN algorithm improved by 6.1%, 5.6%, and 5.0% under the CM, SO, and LO attributes, respectively. The performance of the MaSiamCAR algorithm also improved to varying degrees under the eight attributes; the precision scores were SO (0.852), OB (0.838), IV (0.86), OM (0.802), SV (0.798), CM (0.799), BC (0.735), and LO (0.700), and the success scores were SO (0.572), OB (0.572), IV (0.586), OM (0.573), SV (0.603), CM (0.574), BC (0.521), and LO (0.535). In particular, the precision and success rate improved by more than 10% under the LO, BC, and SV attributes.

Figure 11. Precision comparison of 8 attributes on the UAVDT dataset.

Figure 12. Success comparison of 8 attributes on the UAVDT dataset.

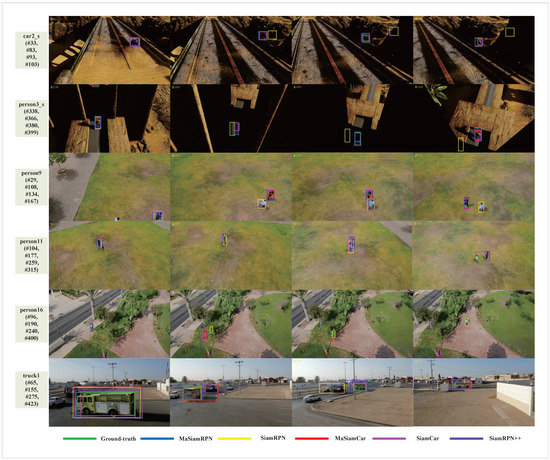

4.3. Qualitative Experimental Analysis

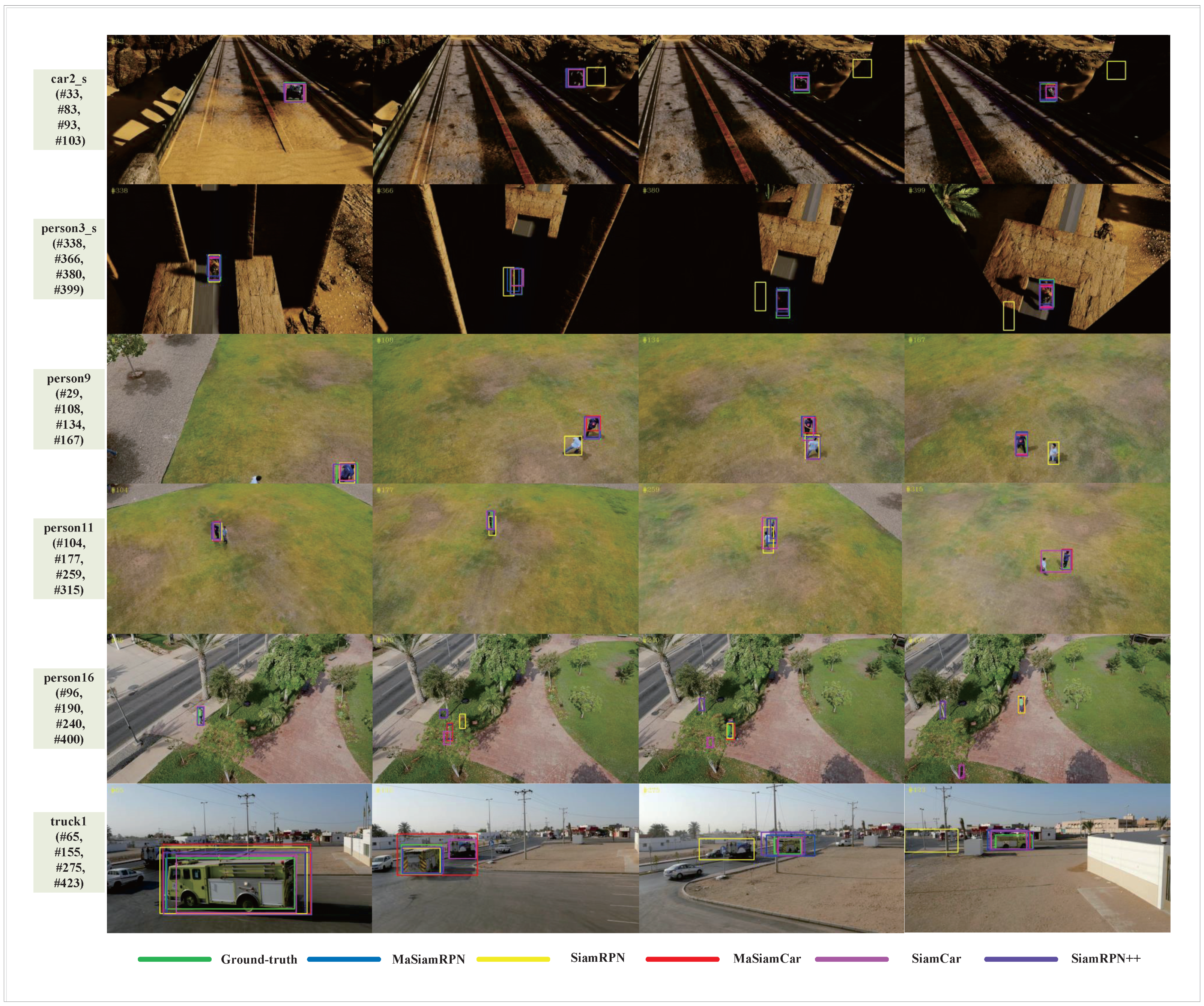

To analyze the effectiveness of the proposed algorithm more intuitively, six typical video sequences from the UAV123 dataset were selected, as shown in Figure 13 (from top to bottom): car2_s, person3_s, person9, person11, person16, and truck1. The following algorithms were included in the comparison: the baseline trackers (SiamRPN and SiamCAR), the proposed trackers (MaSiamRPN and MaSiamCAR), and a similar algorithm (SiamRPN++).

Figure 13. Visualization of tracking results.

Video sequence car2_s: This video sequence includes complex scenarios, such as rotation, illumination change, and occlusion. In frame 33, it can be seen that all the algorithms tracked the target normally. However, in frame 83, the appearance of the target changed greatly due to rotation and illumination changes, and the tracking drift phenomenon was observed in SiamRPN, resulting in the tracking of background distractors. Under the action of the Kalman filter, MaSiamRPN suppressed tracking drift and realized continuous and stable tracking.

Video sequence person3_s: This video sequence contains scenes with extreme changes in lighting. All of the tracking algorithms successfully tracked the target in frame 338. However, in frame 366, the light quickly dimmed, reducing target visibility. The SiamRPN algorithm displayed a trend of tracking drift in this case. The tracking result of the SiamRPN algorithm completely deviated from the true position in frame 380. However, with the help of the Kalman prediction result, MaSiamRPN could still stably complete the tracking process.

Video sequence person9: This video sequence includes factors such as camera motion and interference from similar objects. In frame 29, all of the algorithms successfully tracked the target. Tracking drift occurred in SiamRPN in frame 108 due to interference from similar objects. In frame 134, the SiamRPN++ algorithm also experienced tracking drift due to cross-occlusion between targets but gradually recovered as the distractors moved away. By virtue of the Kalman filter, the proposed MaSiamRPN algorithm never experienced tracking drift in this sequence.

Video sequence person11: This video sequence includes many complex factors, such as viewpoint change, camera motion, and occlusion. In frame 104, all the algorithms tracked normally. In frame 177, tracking drift occurred in SiamRPN due to target occlusion. In frames 259–315, SiamCAR also exhibited tracking drift. In contrast, MaSiamRPN and MaSiamCAR detected the onset of tracking drift and suppressed it in time, and both performed well over the whole video sequence.

Video sequence person16: This video sequence includes factors such as occlusion and complex backgrounds with similar appearance. Before frame 96, all the algorithms could track the target effectively. However, in frame 190, the target was completely occluded; only MaSiamRPN and MaSiamCAR continued tracking the target, and tracking loss occurred in the other algorithms. In frames 240–400, the SiamRPN algorithm recovered tracking, whereas the SiamCAR and SiamRPN++ algorithms failed to track.

Video sequence truck1: This video sequence includes complex situations, such as angle of view change, scale change, and interference from similar objects. In frame 65, all the algorithms tracked the target normally. In frames 155–275, SiamCAR and SiamRPN exhibited tracking drift due to interference from similar objects. In frame 423, SiamCAR recovered tracking; however, SiamRPN could not perform continuous tracking. Only MaSiamRPN and MaSiamCAR accomplished tracking during the whole video sequence.

According to the quantitative and qualitative experimental analysis on multiple datasets (UAV123, UAV20L, and UAVDT), the proposed algorithms (MaSiamRPN and MaSiamCAR) significantly improve performance in a variety of complex scenarios, such as full occlusion (FO), fast motion (FM), similar object (SO), background clutter (BC), large occlusion (LO), scale variation (SV), viewpoint change (VC), and aspect ratio change (ARC). In particular, the precision and success rates of the proposed algorithms improved by more than 10% when the target moves quickly, is occluded for an extended period of time, or is even completely occluded. This is primarily because the proposed motion-aware Siamese framework fully utilizes historical trajectory information to detect tracking drift in time and then applies the corresponding recovery strategies once drift occurs, ensuring the continuity of the whole tracking process.
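The interplay between the base tracker, the Kalman prediction, and drift recovery can be illustrated with a simplified sketch. It assumes a constant-velocity model over the target center and a plain distance gate as the drift criterion, with the Kalman prediction used as the fallback output. The class name, the function name, the noise variances, and the 30-pixel gate are all illustrative assumptions; the drift detection module and recovery strategies of the proposed framework are more elaborate than this.

```python
import numpy as np


class ConstantVelocityKalman:
    """Kalman filter over the state [cx, cy, vx, vy] with position-only measurements."""

    def __init__(self, cx, cy, process_var=1.0, meas_var=10.0):
        self.x = np.array([cx, cy, 0.0, 0.0], dtype=float)
        self.P = np.eye(4) * 100.0                       # large initial uncertainty
        self.F = np.array([[1, 0, 1, 0],
                           [0, 1, 0, 1],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)   # one-frame time step
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        self.Q = np.eye(4) * process_var                 # assumed process noise
        self.R = np.eye(2) * meas_var                    # assumed measurement noise

    def predict(self):
        """Propagate the state one frame ahead and return the predicted center."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()

    def update(self, z):
        """Correct the state with a measured center z = (cx, cy)."""
        residual = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ residual
        self.P = (np.eye(4) - K @ self.H) @ self.P


def track_with_drift_check(tracker_centers, gate=30.0):
    """Flag frames where the tracker output jumps away from the motion prediction.

    On a flagged frame the Kalman prediction replaces the tracker output and the
    filter is not updated with the suspicious measurement (assumed recovery rule).
    """
    kf = ConstantVelocityKalman(*tracker_centers[0])
    outputs, drift_flags = [tuple(tracker_centers[0])], [False]
    for z in tracker_centers[1:]:
        predicted = kf.predict()
        drifted = bool(np.linalg.norm(np.asarray(z, dtype=float) - predicted) > gate)
        if drifted:
            outputs.append(tuple(predicted))
        else:
            kf.update(z)
            outputs.append(tuple(z))
        drift_flags.append(drifted)
    return outputs, drift_flags


if __name__ == "__main__":
    # Hypothetical tracker centers with one jump to a distractor in the fourth frame.
    centers = [(100, 100), (102, 100), (104, 100), (300, 250), (108, 100)]
    outputs, flags = track_with_drift_check(centers)
    print(flags)
```

A fixed pixel gate is the simplest possible choice; in practice the threshold would need to scale with target size and could be combined with the tracker's confidence score.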

5. Conclusions

To address the tendency of Siamese trackers to drift under interference, we integrated a tracking drift detection module into a Siamese tracker and proposed a motion-aware Siamese framework for visual tracking. In addition, a lightweight AlexNet model was used to obtain faster tracking speed. To test the effectiveness of the proposed method, we chose the typical SiamRPN and SiamCAR algorithms as representatives of anchor-based and anchor-free algorithms, respectively. The experiments were carried out on three popular UAV video datasets: UAV123, UAV20L, and UAVDT. The results show that the proposed method makes better use of the motion trajectory information and historical appearance information of the target in the video sequence, especially in typical scenarios such as occlusion, fast motion, and similar objects. In summary, the proposed algorithms (MaSiamRPN and MaSiamCAR) outperform their base trackers.

The proposed approaches still have room for further development. In future work, we will focus on reducing the computational cost and on proposing more effective tracking algorithms. For example, we will consider designing a lightweight network that achieves better performance and can be easily deployed on UAV tracking platforms. Furthermore, while the basic Kalman filter predicts linear systems very well, video sequences still contain many nonlinear motion scenes. We will therefore consider integrating nonlinear filters (e.g., EKF, UKF, CKF) into the proposed tracking framework. Meanwhile, improving the mathematical modeling of the system by introducing more rigorous definitions should also be taken into account.
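As a point of reference for the nonlinear extension mentioned above, the standard EKF prediction step replaces the constant transition matrix of the linear filter with the Jacobian of a nonlinear motion model $f$, evaluated at the current estimate. The notation below is generic textbook notation and is assumed for illustration; it is not taken from the formulation used earlier in this paper.

$$
\hat{x}_{k|k-1} = f\left(\hat{x}_{k-1|k-1}\right), \qquad
P_{k|k-1} = F_k P_{k-1|k-1} F_k^{\top} + Q_k, \qquad
F_k = \left.\frac{\partial f}{\partial x}\right|_{x=\hat{x}_{k-1|k-1}}
$$

When $f$ is linear, $F_k$ reduces to a constant matrix and the step coincides with the ordinary Kalman prediction.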

Author Contributions

L.S. and J.Z. conceived of the idea and developed the proposed approaches. Z.Y. advised the research and helped edit the paper. B.F. improved the quality of the manuscript and completed the revision. All authors have read and agreed to the published version of the manuscript.

Funding

The research was funded by the National Natural Science Foundation of China (No. 62271193), the Aeronautical Science Foundation of China (No. 20185142003), the Natural Science Foundation of Henan Province, China (No. 222300420433), the Science and Technology Innovative Talents in Universities of Henan Province, China (No. 21HASTIT030), and Young Backbone Teachers in Universities of Henan Province, China (No. 2020GGJS073).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tullu, A.; Hassanalian, M.; Hwang, H.Y. Design and Implementation of Sensor Platform for UAV-Based Target Tracking and Obstacle Avoidance. Drones 2022, 6, 89.

- Wang, C.; Shi, Z.; Meng, L.; Wang, J.; Wang, T.; Gao, Q.; Wang, E. Anti-Occlusion UAV Tracking Algorithm with a Low-Altitude Complex Background by Integrating Attention Mechanism. Drones 2022, 6, 149.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese Fully Convolutional Classification and Regression for Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6269–6277.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional Siamese Networks for Object Tracking. In Proceedings of the Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 850–865.

- Zhao, F.; Zhang, T.; Song, Y.; Tang, M.; Wang, X.; Wang, J. Siamese Regression Tracking with Reinforced Template Updating. IEEE Trans. Image Process. 2020, 30, 628–640.

- Sun, L.; Yang, Z.; Zhang, J.; Fu, Z.; He, Z. Visual Object Tracking for Unmanned Aerial Vehicles Based on the Template-Driven Siamese Network. Remote Sens. 2022, 14, 1584.

- Xu, Z.; Luo, H.; Hui, B.; Chang, Z.; Ju, M. Siamese Tracking with Adaptive Template-updating Strategy. Appl. Sci. 2019, 9, 3725.

- Kalman, R.E. A New Approach To Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82D, 35–45.

- Fu, C.; Ding, F.; Li, Y.; Jin, J.; Feng, C. DR2Track: Towards Real-time Visual Tracking for UAV Via Distractor Repressed Dynamic Regression. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 1597–1604.

- Fan, J.; Yang, X.; Lu, R.; Li, W.; Huang, Y. Long-term Visual Tracking Algorithm for UAVs Based on Kernel Correlation Filtering and SURF Features. Vis. Comput. 2022, 39, 319–333.

- Li, Y.; Fu, C.; Huang, Z.; Zhang, Y.; Pan, J. Intermittent Contextual Learning for Keyfilter-aware UAV Object Tracking Using Deep Convolutional Feature. IEEE Trans. Multimed. 2020, 23, 810–822.

- Zhang, F.; Ma, S.; Yu, L.; Zhang, Y.; Qiu, Z.; Li, Z. Learning Future-Aware Correlation Filters for Efficient UAV Tracking. Remote Sens. 2021, 13, 4111.

- Deng, C.; He, S.; Han, Y.; Zhao, B. Learning Dynamic Spatial-temporal Regularization for UAV Object Tracking. IEEE Signal Process. Lett. 2021, 28, 1230–1234.

- Li, Y.; Fu, C.; Ding, F.; Huang, Z.; Lu, G. AutoTrack: Towards High-performance Visual Tracking for UAV with Automatic Spatio-temporal Regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11923–11932.

- Zhang, F.; Ma, S.; Zhang, Y.; Qiu, Z. Perceiving Temporal Environment for Correlation Filters in Real-Time UAV Tracking. IEEE Signal Process. Lett. 2021, 29, 6–10.

- He, Y.; Fu, C.; Lin, F.; Li, Y.; Lu, P. Towards Robust Visual Tracking for Unmanned Aerial Vehicle with Tri-attentional Correlation Filters. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 1575–1582.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 16–20.

- Zhang, Z.; Peng, H. Deeper and Wider Siamese Networks for Real-time Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 4591–4600.

- Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware Siamese Networks for Visual Object Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 101–117.

- Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. Siamfc++: Towards Robust and Accurate Visual Tracking with Target Estimation Guidelines. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12549–12556.

- Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese Box Adaptive Network for Visual Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 6668–6677.

- Mueller, M.; Smith, N.; Ghanem, B. A Benchmark and Simulator for UAV Tracking. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 445–461.

- Du, D.; Qi, Y.; Yu, H.; Yang, Y.; Duan, K.; Li, G.; Zhang, W.; Huang, Q.; Tian, Q. The Unmanned Aerial Vehicle Benchmark: Object Detection and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 370–386.

- Li, D.; Wen, G.; Kuai, Y.; Porikli, F. End-to-end feature integration for correlation filter tracking with channel attention. IEEE Signal Process. Lett. 2018, 25, 1815–1819.

- Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. Eco: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

- Guo, D.; Shao, Y.; Cui, Y.; Wang, Z.; Zhang, L.; Shen, C. Graph attention tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9543–9552.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Huang, L.; Zhao, X.; Huang, K. Got-10k: A Large High-diversity Benchmark for Generic Object Tracking in the Wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.

- Wu, Y.; Lim, J.; Yang, M.H. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).