Feasibility Study of Detection of Ochre Spot on Almonds Aimed at Very Low-Cost Cameras Onboard a Drone

Abstract

1. Introduction

2. Materials and Method

2.1. System Overview and Materials

- The RGB camera used to photograph the plants had to be inexpensive and as small and light as possible. The cameras used in other agricultural studies cost more than $1000. For this work, we chose the Victure AC700 action camera: its low price (under $55), light weight (61 g), small size (8 cm × 6 cm × 2.5 cm), and 16 MP resolution made it suitable for our application. The camera also had an electronic image stabilization mode, which reduces the effect of the vibration caused by the drone in flight. We tested the camera both in the laboratory, with single leaves, and in an almond orchard, at different distances from the object of interest. We concluded that the drone should photograph the tree from a distance of about 1 to 1.5 m for the symptoms of the disease to be distinguishable. In general, the camera's ISO setting (its sensitivity to light) should also be kept as low as possible (for example, ISO 100).
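One rough way to see why the shooting distance matters is the footprint of a single pixel on the leaf surface, which in a pinhole-camera model grows linearly with distance. The sketch below is illustrative only. It assumes a flat target facing the camera, an image width of 4608 pixels (one common 4:3 layout for a 16 MP sensor), and a 90° horizontal field of view. Neither number is a published AC700 specification, and the wide-angle lens of an action camera adds distortion that this model ignores. The function name `pixel_footprint_mm` is hypothetical.

```python
import math


def pixel_footprint_mm(distance_m: float, hfov_deg: float, width_px: int) -> float:
    """Approximate width (mm) of the target imaged by one pixel.

    Pinhole model: a camera with horizontal field of view hfov_deg, placed
    distance_m from a flat target, images a strip 2 * d * tan(hfov / 2) wide,
    which is shared among width_px pixels.
    """
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return scene_width_m * 1000.0 / width_px


# Illustrative assumptions only, not AC700 datasheet values.
WIDTH_PX = 4608   # hypothetical image width for a 16 MP, 4:3 sensor
HFOV_DEG = 90.0   # hypothetical horizontal field of view

for distance in (1.0, 1.5, 3.0, 5.0):
    mm = pixel_footprint_mm(distance, HFOV_DEG, WIDTH_PX)
    print(f"{distance:.1f} m -> {mm:.2f} mm per pixel")
```

Because the footprint is linear in distance, doubling the distance doubles the width covered by each pixel, so a spot of a given size covers only a quarter as many pixels.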

2.2. Study of Color Carried out for Ochre Spot

2.3. Algorithm

2.3.1. Shape Recognition

2.3.2. Object RGB Reconstruction

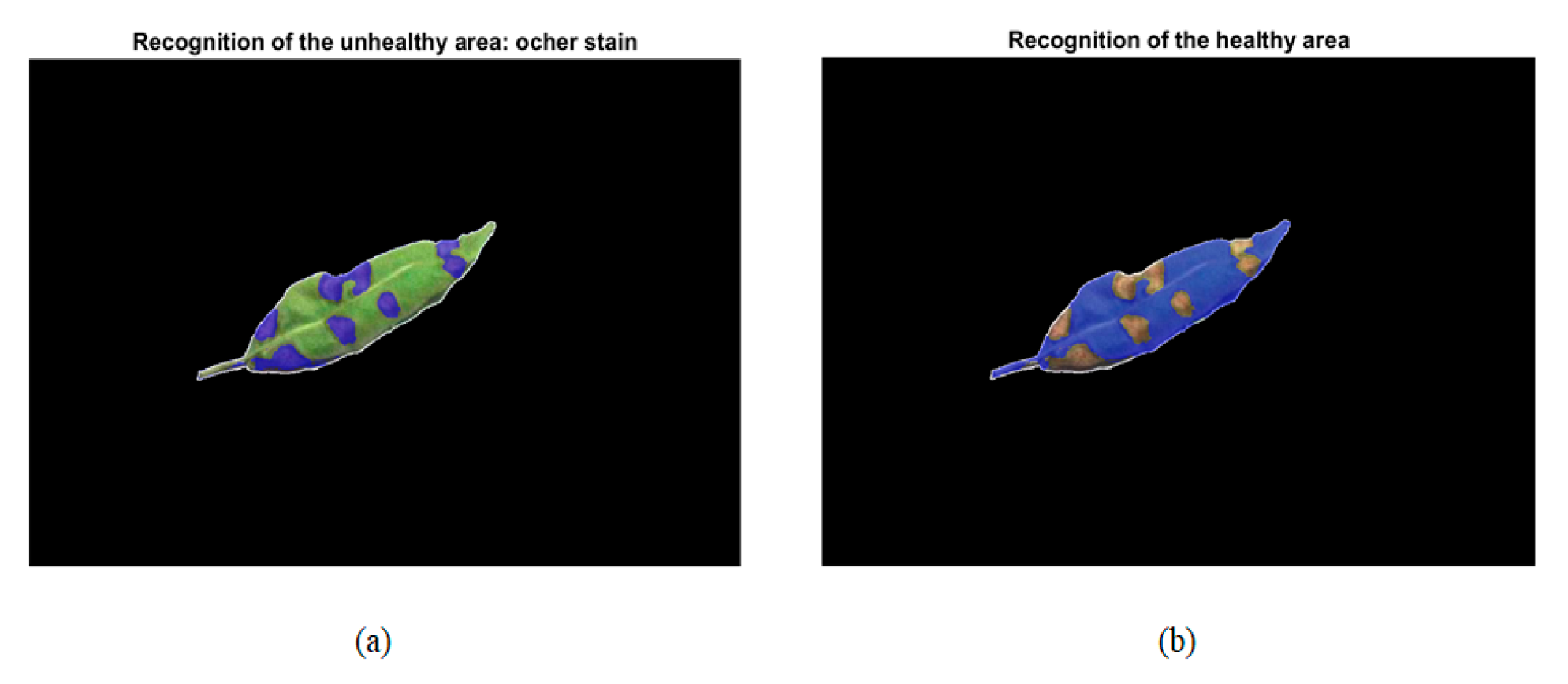

2.3.3. Disease Recognition

2.3.4. Damage Quantification

2.4. Operation of the Algorithm with Simple Photographs

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Healthy (green) R | Healthy (green) G | Healthy (green) B | Healthy (green) H (°) | Ochre spot R | Ochre spot G | Ochre spot B | Ochre spot H (°) |
|---|---|---|---|---|---|---|---|
| 142 | 170 | 68 | 76 | 144 | 146 | 84 | 62 |
| 150 | 172 | 104 | 79 | 196 | 166 | 124 | 35 |
| 114 | 144 | 62 | 82 | 212 | 188 | 136 | 41 |
| 90 | 114 | 66 | 90 | 108 | 84 | 56 | 32 |
| 96 | 126 | 60 | 87 | 178 | 136 | 102 | 27 |
| 124 | 140 | 66 | 73 | 92 | 62 | 52 | 15 |
| 184 | 196 | 104 | 68 | 90 | 66 | 51 | 23 |
| 138 | 146 | 100 | 70 | 164 | 126 | 92 | 28 |
| 180 | 206 | 114 | 77 | 112 | 102 | 58 | 50 |
| 156 | 192 | 96 | 82 | 74 | 74 | 58 | 60 |
| 192 | 210 | 120 | 72 | 80 | 62 | 52 | 21 |
| 90 | 110 | 62 | 85 | 70 | 62 | 56 | 26 |
| 150 | 180 | 94 | 81 | 110 | 78 | 78 | 0 |
| 134 | 178 | 86 | 89 | 98 | 70 | 64 | 11 |
| 85 | 130 | 57 | 97 | 92 | 56 | 52 | 6 |
| 42 | 60 | 36 | 105 | 146 | 100 | 88 | 12 |
| 170 | 184 | 80 | 68 | 92 | 64 | 62 | 4 |
| 190 | 218 | 129 | 77 | 76 | 60 | 54 | 16 |
| 182 | 192 | 84 | 66 | 66 | 51 | 51 | 0 |
| 67 | 89 | 43 | 89 | 110 | 112 | 51 | 62 |
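The H column can be checked against the RGB columns of the same table. The sketch below assumes that H is the standard HSV/HSL hue angle in degrees, rounded to an integer. It recomputes the hue of every sample and reports rows where the tabulated value differs by more than 0.5°, that is, by more than rounding alone would explain. The names `hue_degrees`, `mismatches`, `HEALTHY` and `OCHRE` are illustrative and are not taken from the paper.

```python
"""Recompute the hue (H) column of the healthy / ochre-spot colour table.

Assumption: H is the standard HSV/HSL hue angle in degrees, rounded.
"""


def hue_degrees(r: int, g: int, b: int) -> float:
    """Hue angle in [0, 360) from 8-bit RGB (hexcone formula)."""
    mx, mn = max(r, g, b), min(r, g, b)
    d = mx - mn
    if d == 0:                  # grey: hue is undefined, report 0 by convention
        return 0.0
    if mx == r:
        h = ((g - b) / d) % 6   # red sector; % 6 wraps negative values
    elif mx == g:
        h = (b - r) / d + 2     # green sector
    else:
        h = (r - g) / d + 4     # blue sector
    return 60.0 * h


# (R, G, B, H) rows transcribed from the table above.
HEALTHY = [
    (142, 170, 68, 76), (150, 172, 104, 79), (114, 144, 62, 82), (90, 114, 66, 90),
    (96, 126, 60, 87), (124, 140, 66, 73), (184, 196, 104, 68), (138, 146, 100, 70),
    (180, 206, 114, 77), (156, 192, 96, 82), (192, 210, 120, 72), (90, 110, 62, 85),
    (150, 180, 94, 81), (134, 178, 86, 89), (85, 130, 57, 97), (42, 60, 36, 105),
    (170, 184, 80, 68), (190, 218, 129, 77), (182, 192, 84, 66), (67, 89, 43, 89),
]
OCHRE = [
    (144, 146, 84, 62), (196, 166, 124, 35), (212, 188, 136, 41), (108, 84, 56, 32),
    (178, 136, 102, 27), (92, 62, 52, 15), (90, 66, 51, 23), (164, 126, 92, 28),
    (112, 102, 58, 50), (74, 74, 58, 60), (80, 62, 52, 21), (70, 62, 56, 26),
    (110, 78, 78, 0), (98, 70, 64, 11), (92, 56, 52, 6), (146, 100, 88, 12),
    (92, 64, 62, 4), (76, 60, 54, 16), (66, 51, 51, 0), (110, 112, 51, 62),
]


def mismatches(rows, tol=0.5):
    """Return (index, row, recomputed hue) where |tabulated H - hue| > tol degrees."""
    out = []
    for i, (r, g, b, h_tab) in enumerate(rows):
        h = hue_degrees(r, g, b)
        if abs(h - h_tab) > tol:
            out.append((i, (r, g, b, h_tab), round(h, 1)))
    return out


for name, rows in (("healthy", HEALTHY), ("ochre spot", OCHRE)):
    hues = [hue_degrees(r, g, b) for r, g, b, _ in rows]
    print(f"{name}: hue {min(hues):.1f}-{max(hues):.1f} deg; "
          f"mismatches: {mismatches(rows)}")
```

With the values as tabulated, all but two samples match the H column to within 0.5°. The healthy sample (190, 218, 129) recomputes to about 78.9° (tabulated 77), and the ochre-spot sample (112, 102, 58) recomputes to about 48.9° (tabulated 50). For these 40 samples, the recomputed hues of the two groups do not overlap: ochre-spot hues reach at most about 62°, and healthy hues start at about 65.6°. The sketch does not reproduce the authors' detection threshold.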

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Martínez-Heredia, J.M.; Gálvez, A.I.; Colodro, F.; Mora-Jiménez, J.L.; Sassi, O.E. Feasibility Study of Detection of Ochre Spot on Almonds Aimed at Very Low-Cost Cameras Onboard a Drone. Drones 2023, 7, 186. https://doi.org/10.3390/drones7030186


Chicago/Turabian style: Martínez-Heredia, Juana M., Ana I. Gálvez, Francisco Colodro, José Luis Mora-Jiménez, and Ons E. Sassi. 2023. "Feasibility Study of Detection of Ochre Spot on Almonds Aimed at Very Low-Cost Cameras Onboard a Drone." Drones 7, no. 3: 186. https://doi.org/10.3390/drones7030186
