Identifying Tree Species in a Warm-Temperate Deciduous Forest by Combining Multi-Rotor and Fixed-Wing Unmanned Aerial Vehicles

Abstract

1. Introduction

2. Materials and Methods

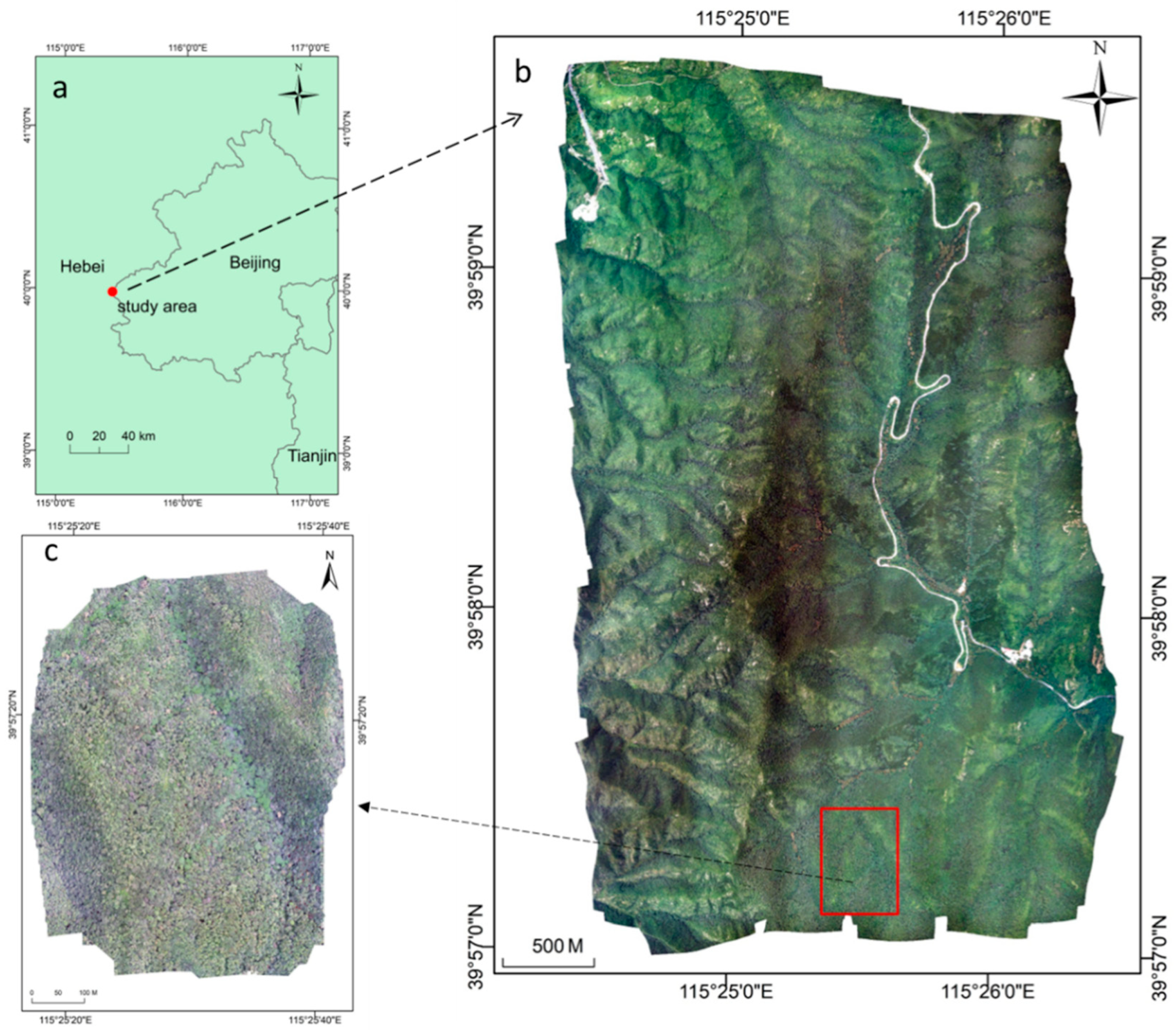

2.1. Study Area

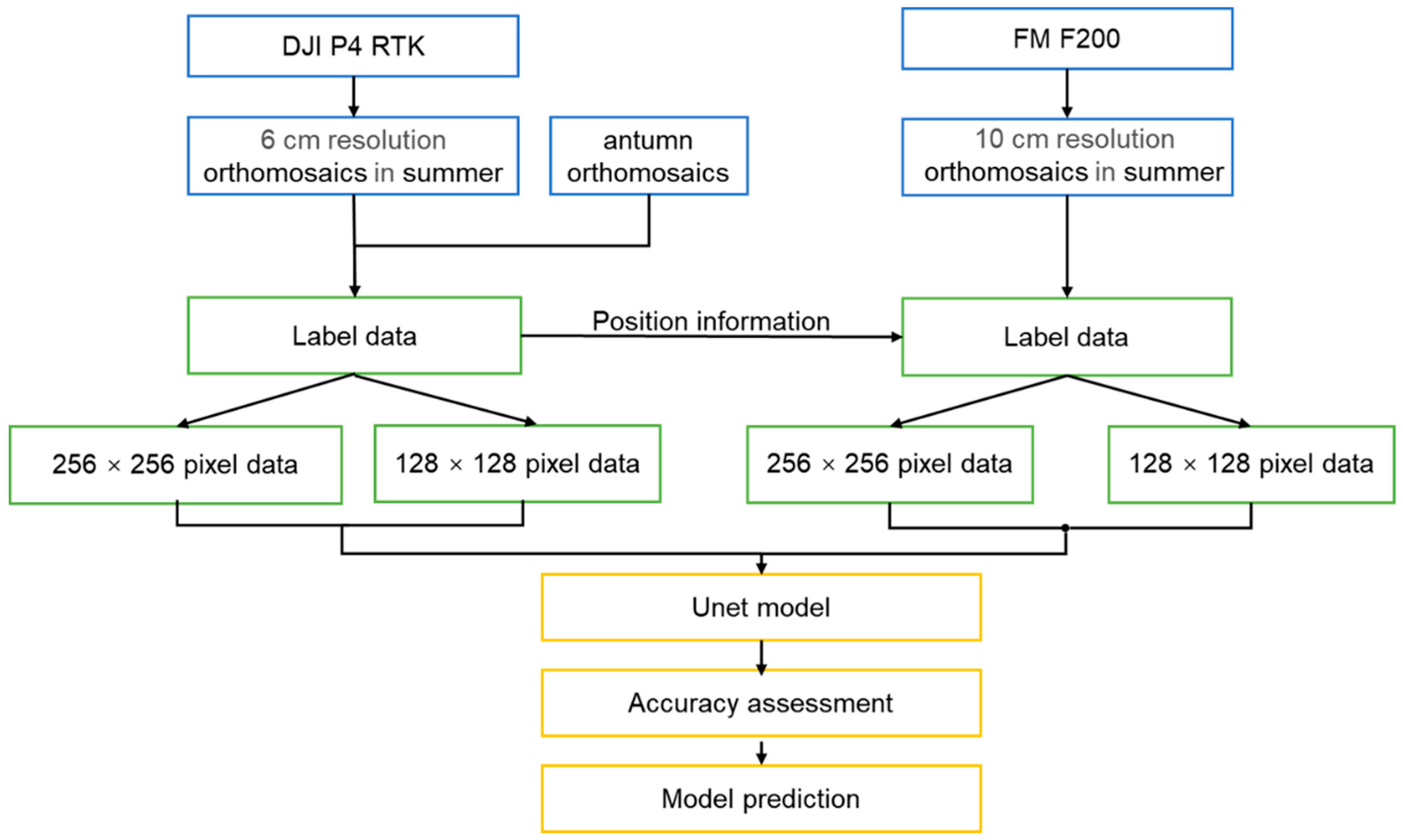

2.2. Workflow Description

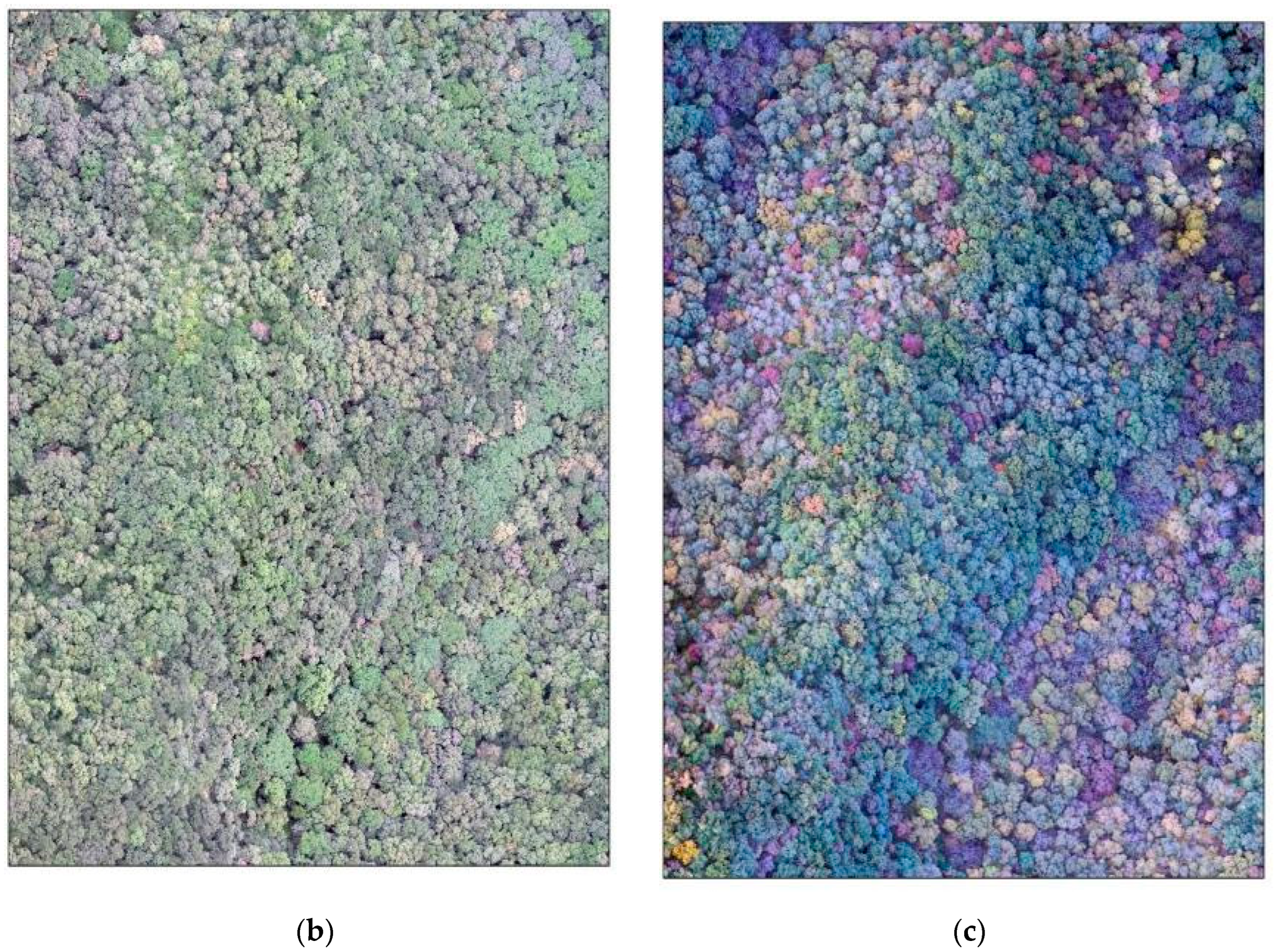

2.3. Data Acquisition

2.3.1. Fixed-Wing UAV Platform and Data Acquisition

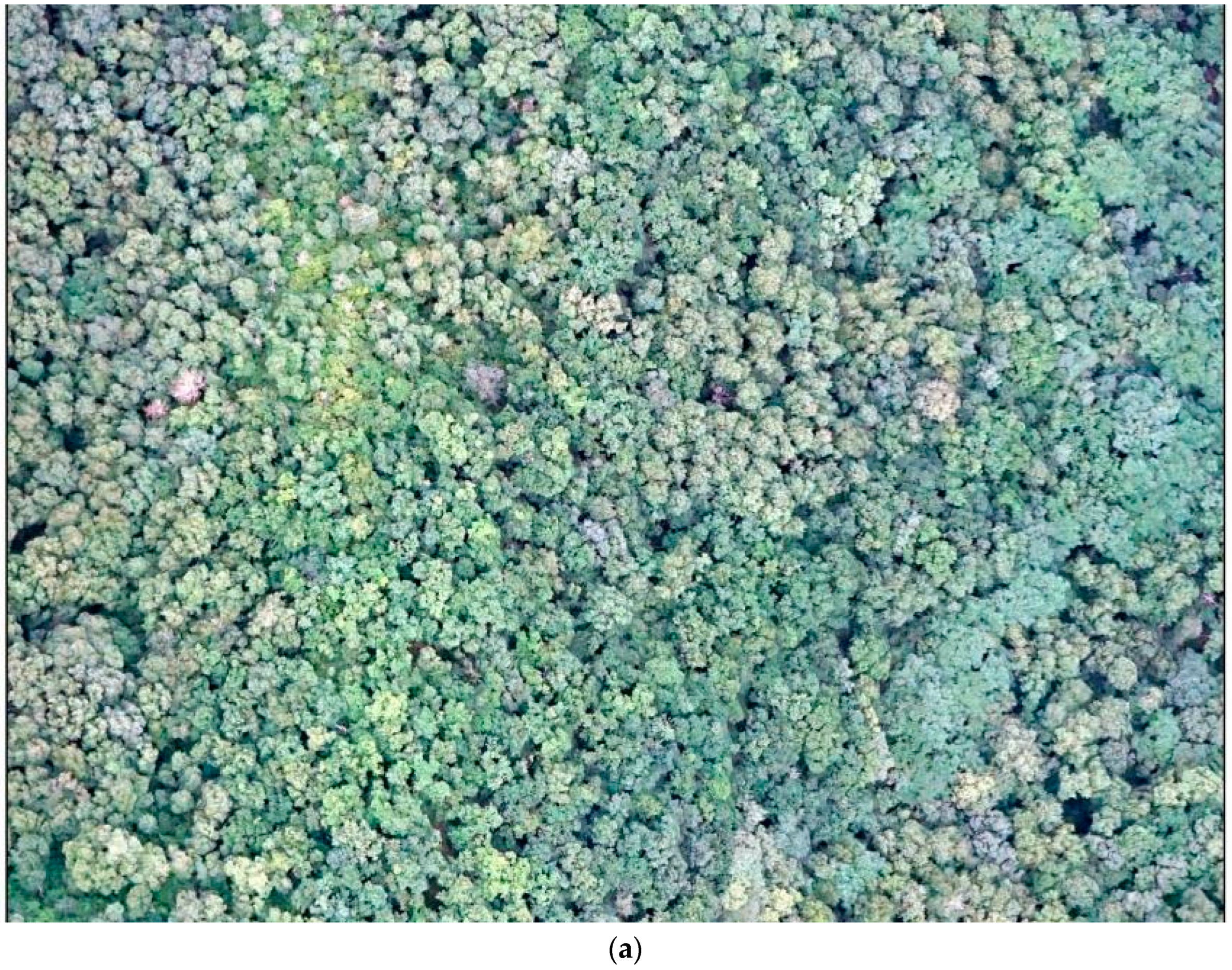

2.3.2. Multi-Rotor UAV Platform and Data Acquisition

2.4. Reference Data Extraction

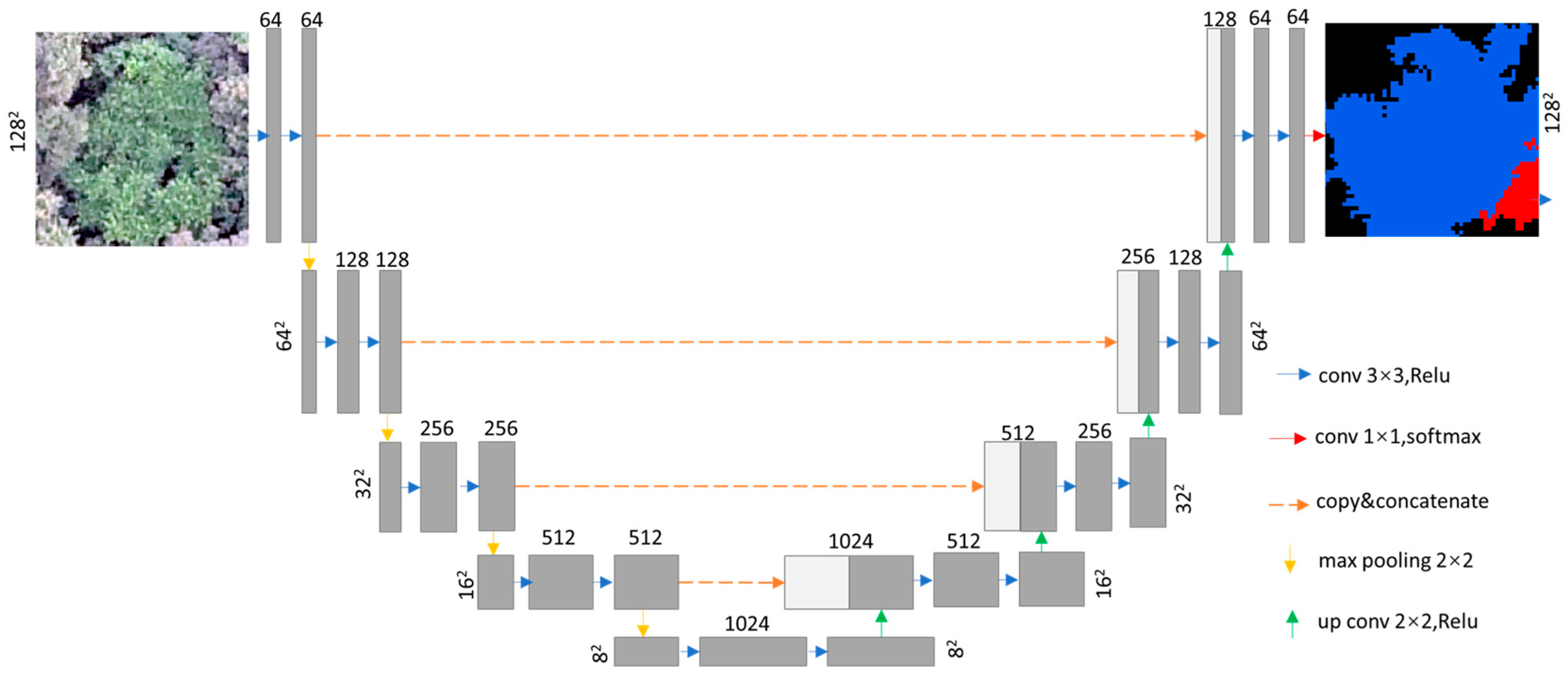

2.5. Classification Method

2.6. Classification Accuracy Assessment

3. Results

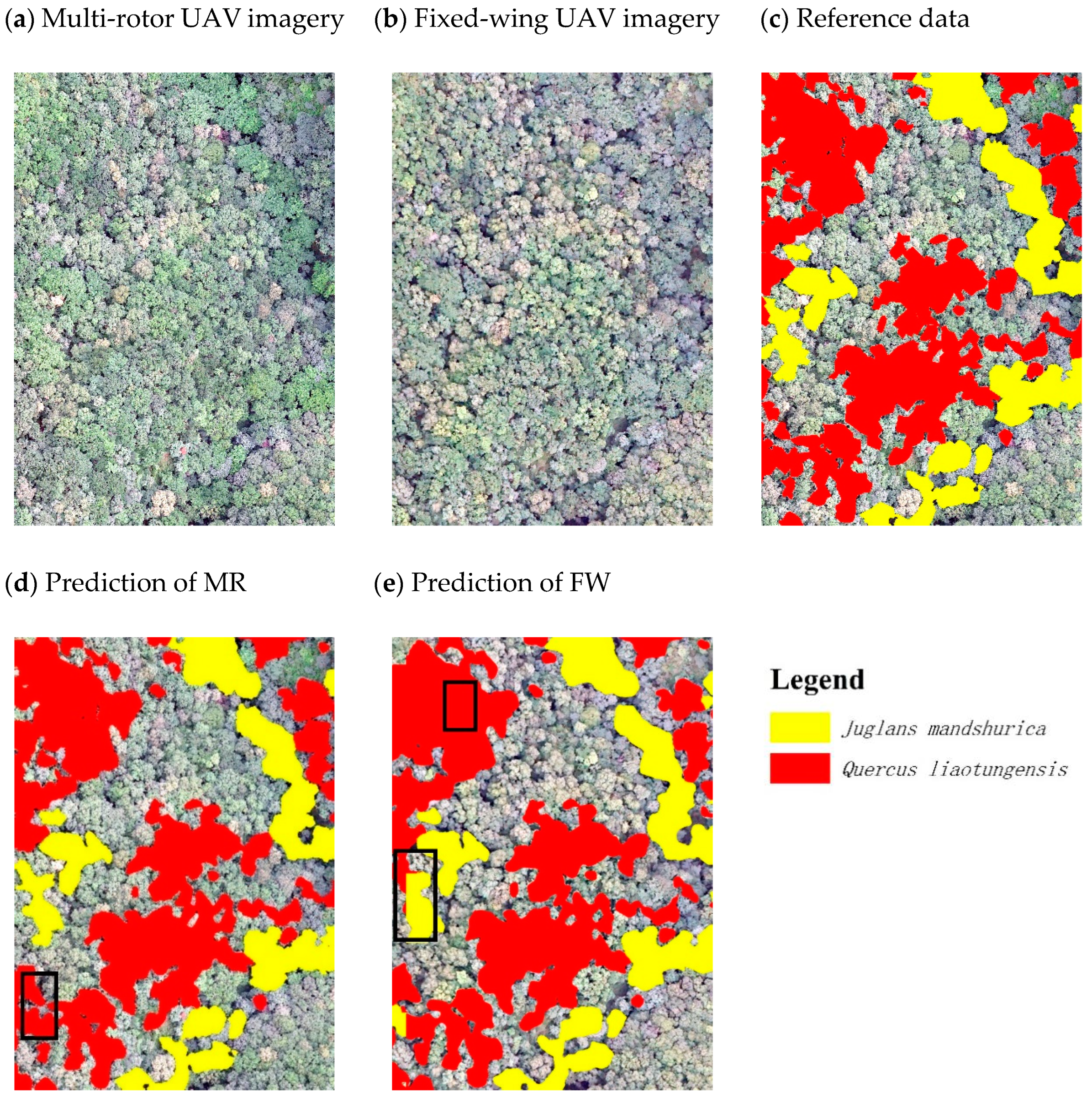

3.1. Model Accuracy Using Multi-Rotor UAV Imagery and Fixed-Wing UAV Imagery

3.2. Effect of Size on the UAV Image Model

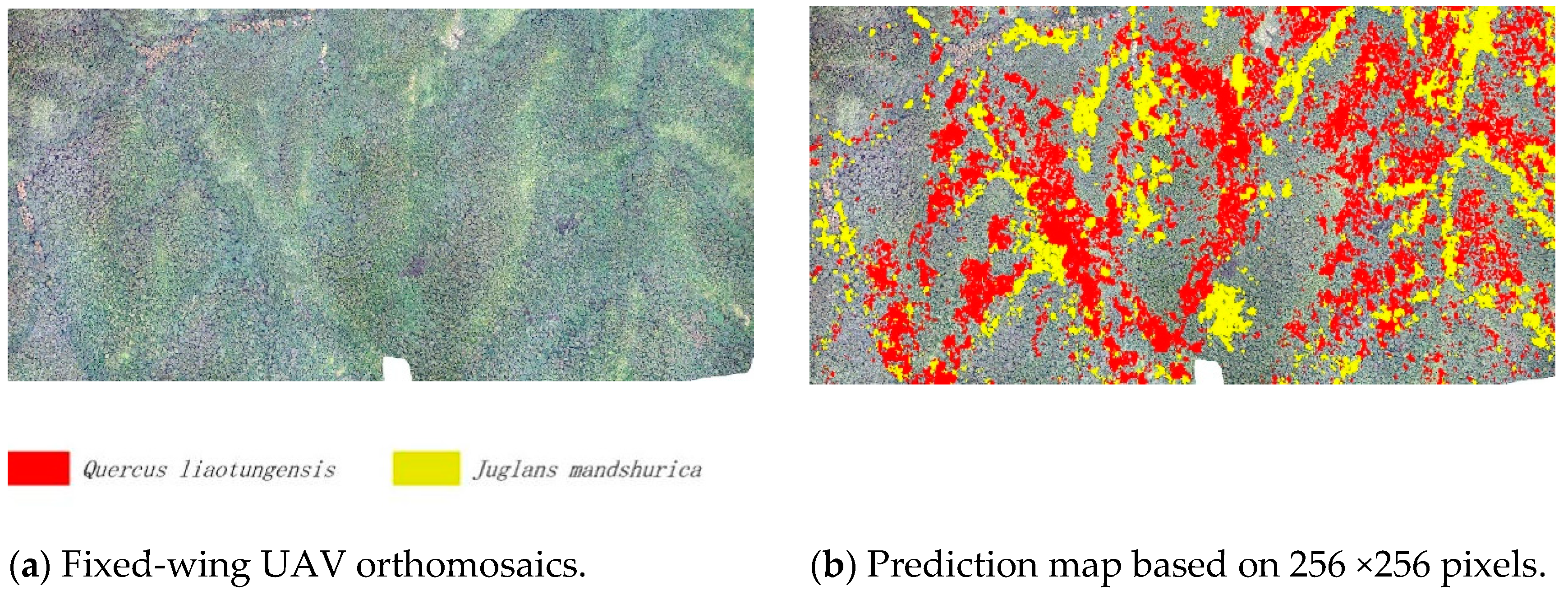
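The two tile sizes compared in this study (256 and 128 pixels) correspond to different ground footprints and tile counts. The sketch below illustrates that relationship. It assumes non-overlapping square tiles with partial edge tiles dropped, which may differ from the tiling actually used; `tile_windows`, `tile_footprint_m`, and the 1024 × 768 image size are hypothetical names and values chosen for illustration. The 6 cm and 10 cm spatial resolutions are those reported for the DJI Phantom 4 RTK and FEIMA F200 flights.

```python
def tile_windows(height, width, tile_size):
    """Return (top, left, bottom, right) pixel windows of non-overlapping square tiles.

    Partial tiles at the right and bottom edges are dropped (an assumption).
    """
    return [
        (top, left, top + tile_size, left + tile_size)
        for top in range(0, height - tile_size + 1, tile_size)
        for left in range(0, width - tile_size + 1, tile_size)
    ]


def tile_footprint_m(tile_size_px, resolution_m):
    """Ground side length of one square tile in metres."""
    return tile_size_px * resolution_m


# Hypothetical 1024 x 768 pixel image, used only to count tiles
windows_256 = tile_windows(1024, 768, 256)
windows_128 = tile_windows(1024, 768, 128)
print(len(windows_256), len(windows_128))

# Reported spatial resolutions: 6 cm (DJI Phantom 4 RTK), 10 cm (FEIMA F200)
for name, resolution in [("DJI Phantom 4 RTK", 0.06), ("FEIMA F200", 0.10)]:
    for size in (256, 128):
        print(name, size, "px:", round(tile_footprint_m(size, resolution), 2), "m")
```

Under these assumptions, halving the tile size quadruples the number of tiles while each tile covers a quarter of the ground area, so a 128-pixel tile carries less canopy context per sample than a 256-pixel tile.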

3.3. Model Prediction

4. Discussion

4.1. Comparison of Multi-Rotor and Fixed-Wing Model Capabilities

4.2. Combined Multi-Rotor and Fixed-Wing UAV for Large-Area Mapping

4.3. Tile Size

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Perera, A.H.; Peterson, U.; Pastur, G.M.; Iverson, L.R. Ecosystem Services from Forest Landscapes: Where We Are and Where We Go. Ecosyst. Serv. For. Landsc. 2018, 249–258.

2. Pan, Y.; Birdsey, R.A.; Fang, J.; Houghton, R.; Kauppi, P.E.; Kurz, W.A.; Phillips, O.L.; Shvidenko, A.; Lewis, S.L.; Canadell, J.G.; et al. A Large and Persistent Carbon Sink in the World’s Forests. Science 2011, 333, 988–993.

3. Bonan, G.B. Forests and climate change: Forcings, feedbacks, and the climate benefits of forests. Science 2008, 320, 1444–1449.

4. Liu, H.; Li, L.; Sang, W. Species composition and community structure of the Donglingshan forest dynamic plot in a warm temperate deciduous broad-leaved secondary forest, China. Biodivers. Sci. 2011, 19, 232–242.

5. Zhu, Y.; Bai, F.; Liu, H.; Li, W.; Li, L.; Li, G.; Wang, S.; Sang, W. Population distribution patterns and interspecific spatial associations in warm temperate secondary forests, Beijing. Biodivers. Sci. 2011, 19, 252–259.

6. Bai, F.; Zhang, W.; Wang, Y. A dataset of seasonal dynamics of the litter fall production of deciduous broad-leaf forest in the warm temperate zone of Beijing Dongling Mountain (2005–2015). China Sci. Data 2020, 5, 1-8–8-8.

7. Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of studies on tree species classification from remotely sensed data. Remote Sens. Environ. 2016, 186, 64–87.

8. Pu, R. Mapping Tree Species Using Advanced Remote Sensing Technologies: A State-of-the-Art Review and Perspective. J. Remote Sens. 2021, 2021, 9812624.

9. Stoffels, J.; Hill, J.; Sachtleber, T.; Mader, S.; Buddenbaum, H.; Stern, O.; Langshausen, J.; Dietz, J.; Ontrup, G. Satellite-Based Derivation of High-Resolution Forest Information Layers for Operational Forest Management. Forests 2015, 6, 1982–2013.

10. Ke, Y.; Quackenbush, L.J.; Im, J. Synergistic use of QuickBird multispectral imagery and LIDAR data for object-based forest species classification. Remote Sens. Environ. 2010, 114, 1141–1154.

11. Immitzer, M.; Atzberger, C.; Koukal, T. Tree Species Classification with Random Forest Using Very High Spatial Resolution 8-Band WorldView-2 Satellite Data. Remote Sens. 2012, 4, 2661–2693.

12. Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

13. Zhang, J.; Hu, J.; Lian, J.; Fan, Z.; Ouyang, X.; Ye, W. Seeing the forest from drones: Testing the potential of lightweight drones as a tool for long-term forest monitoring. Biol. Conserv. 2016, 198, 60–69.

14. Pajares, G. Overview and Current Status of Remote Sensing Applications Based on Unmanned Aerial Vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–329.

15. Liao, X.; Xiao, Q.; Zhang, H. UAV remote sensing: Popularization and expand application development trend. J. Remote Sens. 2019, 23, 1046–1052.

16. Liao, X.; Zhou, C.; Su, F.; Lu, H.; Yue, H.; Gou, J. The Mass Innovation Era of UAV Remote Sensing. J. Geo-Inf. Sci. 2016, 18, 1439–1447.

17. Kiyak, E.; Unal, G. Small aircraft detection using deep learning. Aircr. Eng. Aerosp. Technol. 2021, 93, 671–681.

18. He, C.; Zhang, S.; Yao, S. Forest Fires Locating Technology Based on Rotor UAV. Bull. Surv. Mapp. 2014, 12, 24–27.

19. Zheng, J.; Fu, H.; Li, W.; Wu, W.; Yu, L.; Yuan, S.; Tao, W.Y.W.; Pang, T.K.; Kanniah, K.D. Growing status observation for oil palm trees using Unmanned Aerial Vehicle (UAV) images. ISPRS J. Photogramm. Remote Sens. 2021, 173, 95–121.

20. Yu, J.-W.; Yoon, Y.-W.; Baek, W.-K.; Jung, H.-S. Forest Vertical Structure Mapping Using Two-Seasonal Optic Images and LiDAR DSM Acquired from UAV Platform through Random Forest, XGBoost, and Support Vector Machine Approaches. Remote Sens. 2021, 13, 4282.

21. Liu, J.; Liao, X.; Ni, W.; Wang, Y.; Ye, H.; Yue, H. Individual Tree Recognition Algorithm of UAV Stereo Imagery Considering Three-dimensional Morphology of Tree. J. Geo-Inf. Sci. 2021, 23, 1861–1872.

22. Onwudinjo, K.C.; Smit, J. Estimating the performance of multi-rotor unmanned aerial vehicle structure-from-motion (UAVsfm) imagery in assessing homogeneous and heterogeneous forest structures: A comparison to airborne and terrestrial laser scanning. S. Afr. J. Geomat. 2022, 11, 1.

23. Chandrasekaran, A.; Shao, G.; Fei, S.; Miller, Z.; Hupy, J. Automated Inventory of Broadleaf Tree Plantations with UAS Imagery. Remote Sens. 2022, 14, 1931.

24. Feng, Q.; Yang, J.; Liu, Y.; Ou, C.; Zhu, D.; Niu, B.; Liu, J.; Li, B. Multi-Temporal Unmanned Aerial Vehicle Remote Sensing for Vegetable Mapping Using an Attention-Based Recurrent Convolutional Neural Network. Remote Sens. 2020, 12, 1668.

25. Shi, W.; Liao, X.; Sun, J.; Zhang, Z.; Wang, D.; Wang, S.; Qu, W.; He, H.; Ye, H.; Yue, H.; et al. Optimizing Observation Plans for Identifying Faxon Fir (Abies fargesii var. Faxoniana) Using Monthly Unmanned Aerial Vehicle Imagery. Remote Sens. 2023, 15, 2205.

26. Saarinen, N.; Vastaranta, M.; Näsi, R.; Rosnell, T.; Hakala, T.; Honkavaara, E.; Wulder, M.; Luoma, V.; Tommaselli, A.; Imai, N.; et al. Assessing Biodiversity in Boreal Forests with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2018, 10, 338.

27. Zhang, J.; Sun, Q.; Ye, Z.; Yang, M.; Zhao, X.; Ju, Y.; Hu, T.; Guo, Q. New Technology for Ecological Remote Sensing: Light, Small Unmanned Aerial Vehicles (UAV). Trop. Geogr. 2019, 39, 604–615.

28. Guo, Q.; Wu, F.; Hu, T.; Chen, L.; Liu, J.; Zhao, X.; Gao, S.; Pang, S. Perspectives and prospects of unmanned aerial vehicle in remote sensing monitoring of biodiversity. Biodivers. Sci. 2016, 24, 1267–1278.

29. Jayathunga, S.; Owari, T.; Tsuyuki, S. Evaluating the Performance of Photogrammetric Products Using Fixed-Wing UAV Imagery over a Mixed Conifer–Broadleaf Forest: Comparison with Airborne Laser Scanning. Remote Sens. 2018, 10, 187.

30. Zhou, M.; Zhou, Z.; Liu, L.; Huang, J.; Lyu, Z. Review of vertical take-off and landing fixed-wing UAV and its application prospect in precision agriculture. Int. J. Precis. Agric. Aviat. 2020, 3, 8–17.

31. Gonçalves, G.; Gonçalves, D.; Gómez-Gutiérrez, Á.; Andriolo, U.; Pérez-Alvárez, J.A. 3D Reconstruction of Coastal Cliffs from Fixed-Wing and Multi-Rotor UAS: Impact of SfM-MVS Processing Parameters, Image Redundancy and Acquisition Geometry. Remote Sens. 2021, 13, 1222.

32. Wandrie, L.J.; Klug, P.E.; Clark, M.E. Evaluation of two unmanned aircraft systems as tools for protecting crops from blackbird damage. Crop Prot. 2019, 117, 15–19.

33. Boon, M.A.; Drijfhout, A.P.; Tesfamichael, S. Comparison of a Fixed-Wing and Multi-Rotor UAV for Environmental Mapping Applications: A Case Study. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W6, 47–54.

34. Zhang, Q.; Zhang, J.; Zhang, B.; Cheng, J.; Tian, S.; Suriguga. Pattern of Larix principis-rupprechtii Plantation and Its Environmental Interpretation in Dongling Mountain. J. Wuhan Bot. Res. 2010, 28, 577–582.

35. Li, Z.; Chen, W.; Wei, J.; Maierdang, K.; Zhang, Y.; Zhang, S.; Wang, X. Tree-ring growth responses of Liaodong Oak (Quercus wutaishanica) to climate in the Beijing Dongling Mountain of China. Acta Ecol. Sin. 2021, 41, 27–37.

36. Wu, K.; Sun, X.; Zhang, J.; Chen, F. Terrain Following Method of Plant Protection UAV Based on Height Fusion. Trans. Chin. Soc. Agric. Mach. 2018, 49, 17–23.

37. Ma, F.; Wang, S.; Feng, J.; Sang, W. The study of the effect of tree death on spatial pattern and habitat associations in dominant populations of Dongling Mountains in Beijing. Acta Ecol. Sin. 2018, 38, 7669–7678.

38. Liu, H.; Sang, W.; Xue, D. Topographical habitat variability of dominant species populations in a warm temperate forest. Chin. J. Ecol. 2013, 32, 795–801.

39. Tan, W.; Wang, K.L.; Luo, X.; Wang, Z.J. Positioning precision with handset GPS receiver in different stands. Beijing Linye Daxue Xuebao/J. Beijing For. Univ. 2008, 30, 163–167.

40. Kaartinen, H.; Hyyppä, J.; Vastaranta, M.; Kukko, A.; Jaakkola, A.; Yu, X.; Pyörälä, J.; Liang, X.; Liu, J.; Wang, Y.; et al. Accuracy of Kinematic Positioning Using Global Satellite Navigation Systems under Forest Canopies. Forests 2015, 6, 3218–3236.

41. Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215.

42. Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

43. Zhang, C.; Atkinson, P.M.; George, C.; Wen, Z.; Diazgranados, M.; Gerard, F. Identifying and mapping individual plants in a highly diverse high-elevation ecosystem using UAV imagery and deep learning. ISPRS J. Photogramm. Remote Sens. 2020, 169, 280–291.

44. Wang, M.; Sang, W. The change of phenology of tree and shrub in warm temperate zone and their relationships with climate change. Ecol. Sci. 2020, 39, 164–175.

45. Liu, H.; Xue, D.; Sang, W. Effect of topographic factors on the relationship between species richness and aboveground biomass in a warm temperate forest. Ecol. Environ. Sci. 2012, 21, 1403–1407.

46. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015.

47. Revuelto, J.; Alonso-Gonzalez, E.; Vidaller-Gayan, I.; Lacroix, E.; Izagirre, E.; Rodríguez-López, G.; López-Moreno, J.I. Intercomparison of UAV platforms for mapping snow depth distribution in complex alpine terrain. Cold Reg. Sci. Technol. 2021, 190, 103344.

48. Liu, K.; Gong, H.; Cao, J.; Zhu, Y. Comparison of Mangrove Remote Sensing Classification Based on Multi-type UAV Data. Trop. Geogr. 2019, 39, 492–501.

49. Lin, Z.; Ding, Q.; Huang, J.; Tu, W.; Hu, D.; Liu, J. Study on Tree Species Classification of UAV Optical Image based on DenseNet. Remote Sens. Technol. Appl. 2019, 34, 704–711.

50. Alvarez-Vanhard, E.; Corpetti, T.; Houet, T. UAV & satellite synergies for optical remote sensing applications: A literature review. Sci. Remote Sens. 2021, 3, 100019.

| Drone Type | Multi-Rotor | Multi-Rotor | Fixed-Wing |
|---|---|---|---|
| Drone model | DJI Phantom 4 RTK | DJI Mavic 2 Pro | FEIMA F200 |
| Takeoff weight | 1391 g | 907 g | 3193 g |
| Flight speed | 50 km/h (max speed) | 50 km/h (max speed) | 60 km/h (mid-speed) |
| Max flight time | 30 min | 30 min | 1 h 30 min |
| Flight altitude | 0–500 m | 0–500 m | 150–1500 m |
| Operating temperature | 0 to 40 °C | 0 to 40 °C | Above −10 °C |
| Camera model | SONY FC6310 | Hasselblad L1D-20c | SONY DSC-RX1R II |
| Sensor size | 1” CMOS | 1” CMOS | 35.9 × 24.0 mm |
| Effective pixels | 20 million | 20 million | 42.4 million |

| UAV Model | DJI Phantom 4 RTK | DJI Mavic 2 Pro | FEIMA F200 |
|---|---|---|---|
| Flight date | 22 July | 1 October | 16 August |
| Flight time | 9:15 | 16:12 | 15:12 |
| Flight height | 200 m | 150 m | 800 m |
| Flight strategy | Terrain following | Zonal flights | Fixed height |
| Total images | 270 | 552 | 238 |
| Spatial resolution | 6 cm | 4 cm | 10 cm |
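The reported spatial resolutions can be sanity-checked against the flight heights with the usual nadir ground sampling distance (GSD) relation, GSD = flight height × pixel pitch / focal length. The sketch below does this for the FEIMA F200 flight only. The 35.9 mm sensor width and 800 m flight height come from the tables above; the 7952-pixel image width and 35 mm focal length are assumptions taken from the SONY DSC-RX1R II's published specifications, not from this article, and `ground_sampling_distance` is a hypothetical helper name.

```python
def ground_sampling_distance(height_m, sensor_width_mm, image_width_px, focal_length_mm):
    """Approximate nadir GSD in metres per pixel over flat terrain."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return height_m * pixel_pitch_mm / focal_length_mm


# FEIMA F200 carrying a SONY DSC-RX1R II
# From the tables: 800 m flight height, 35.9 mm sensor width
# ASSUMED (camera specifications, not stated in the article):
#   7952 px image width, 35 mm focal length
gsd_f200 = ground_sampling_distance(
    height_m=800,
    sensor_width_mm=35.9,
    image_width_px=7952,
    focal_length_mm=35.0,
)
print(f"Estimated GSD: {gsd_f200 * 100:.1f} cm per pixel")
```

Under these assumptions the estimate is close to the 10 cm reported for the fixed-wing flight. Over mountainous terrain the true GSD varies with the height above ground, so this is only a rough check.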

| Literature | Vegetation Condition |
|---|---|
| Liu et al. [4], Ma et al. [37] | The dominant species in the canopy layer are mainly Quercus wutaishanica, Betula dahurica, Populus davidiana, Juglans mandshurica, and other tall, light-demanding trees. |
| Liu et al. [38], Liu et al. [45] | Distribution of Quercus wutaishanica and Juglans mandshurica in relation to topography. |

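| UAV Type | Tile Size | Species | P | R | F1 |
|---|---|---|---|---|---|
| DJI Phantom 4 RTK | 256 pixels | Quercus liaotungensis | 95.23% | 95.80% | 95.52% |
| DJI Phantom 4 RTK | 256 pixels | Juglans mandshurica | 96.29% | 96.90% | 96.59% |
| DJI Phantom 4 RTK | 256 pixels | Mean F1 | | | 96.06% |
| DJI Phantom 4 RTK | 128 pixels | Quercus liaotungensis | 84.16% | 82.65% | 83.40% |
| DJI Phantom 4 RTK | 128 pixels | Juglans mandshurica | 90.20% | 92.92% | 91.54% |
| DJI Phantom 4 RTK | 128 pixels | Mean F1 | | | 87.47% |
| FEIMA F200 | 256 pixels | Quercus liaotungensis | 91.73% | 95.11% | 92.00% |
| FEIMA F200 | 256 pixels | Juglans mandshurica | 92.65% | 95.10% | 93.86% |
| FEIMA F200 | 256 pixels | Mean F1 | | | 92.93% |
| FEIMA F200 | 128 pixels | Quercus liaotungensis | 83.68% | 83.63% | 83.65% |
| FEIMA F200 | 128 pixels | Juglans mandshurica | 87.47% | 90.84% | 89.12% |
| FEIMA F200 | 128 pixels | Mean F1 | | | 86.39% |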
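The P, R, and F1 columns can be related through the standard definition of the F1 score as the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below assumes that definition and recomputes the DJI Phantom 4 RTK, 256-pixel values from the tabulated P and R; `f1_score` is a hypothetical helper name. It also assumes the mean F1 is the arithmetic mean of the two per-species F1 values, which agrees with the tabulated figure to within rounding.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# (P, R) in percent for DJI Phantom 4 RTK imagery with 256-pixel tiles
scores = {
    "Quercus liaotungensis": (95.23, 95.80),
    "Juglans mandshurica": (96.29, 96.90),
}

f1 = {species: f1_score(p, r) for species, (p, r) in scores.items()}
mean_f1 = sum(f1.values()) / len(f1)  # assumed: unweighted mean over species

for species, value in f1.items():
    print(f"{species}: F1 = {value:.2f}%")
print(f"Mean F1 = {mean_f1:.2f}%")
```

Because the tabulated P and R are already rounded to two decimals, recomputed values can differ from the table in the last digit.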

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shi, W.; Wang, S.; Yue, H.; Wang, D.; Ye, H.; Sun, L.; Sun, J.; Liu, J.; Deng, Z.; Rao, Y.; Hu, Z.; Sun, X. Identifying Tree Species in a Warm-Temperate Deciduous Forest by Combining Multi-Rotor and Fixed-Wing Unmanned Aerial Vehicles. Drones 2023, 7, 353. https://doi.org/10.3390/drones7060353