Flight Test Analysis of UTM Conflict Detection Based on a Network Remote ID Using a Random Forest Algorithm

Abstract

1. Introduction

1.1. Vehicle-to-Ground (V2G) Communication

1.2. Vehicle-to-Vehicle (V2V) Communication

1.3. Mixed V2G and V2V Communication

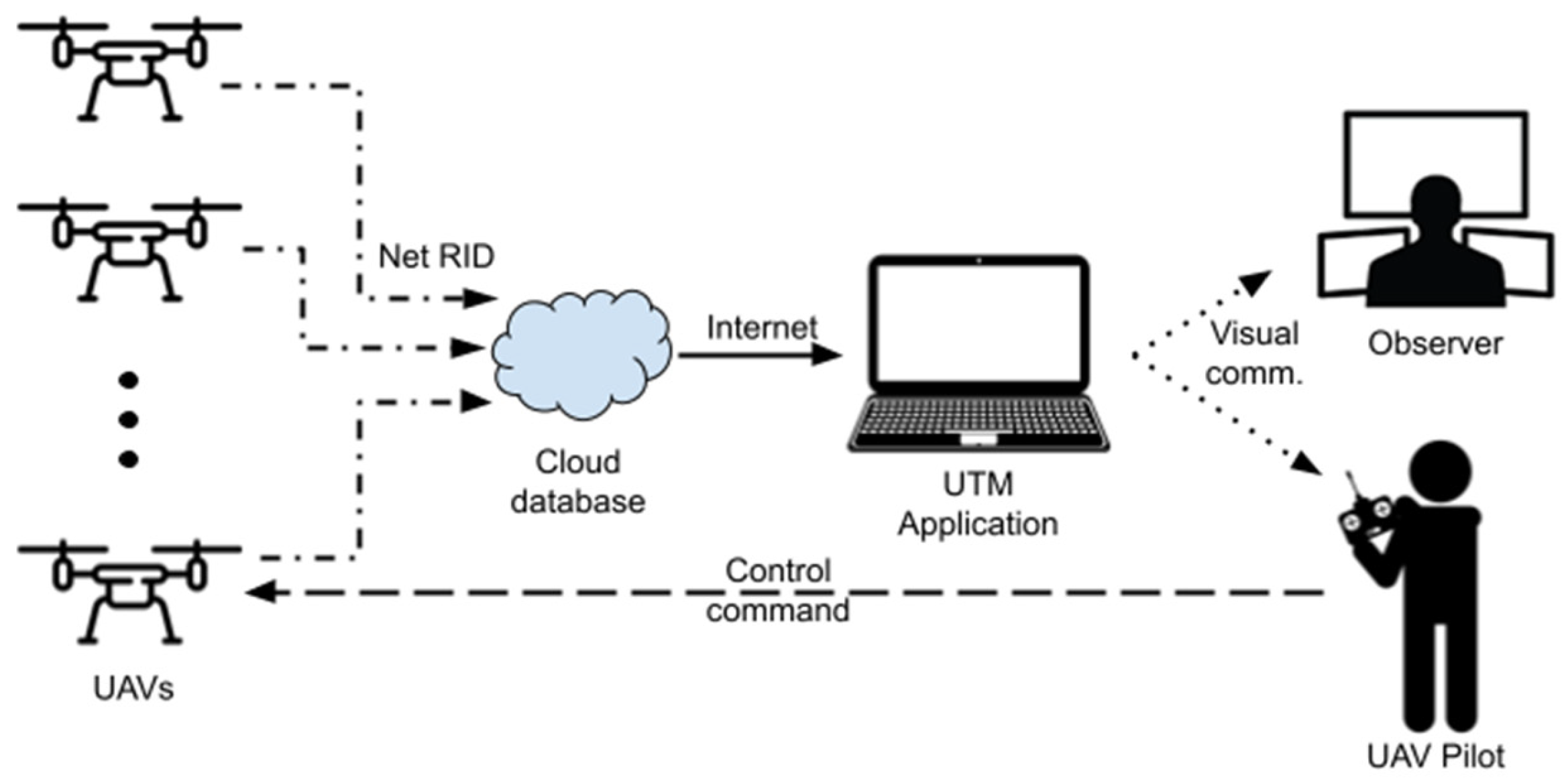

2. UTM Monitoring Framework

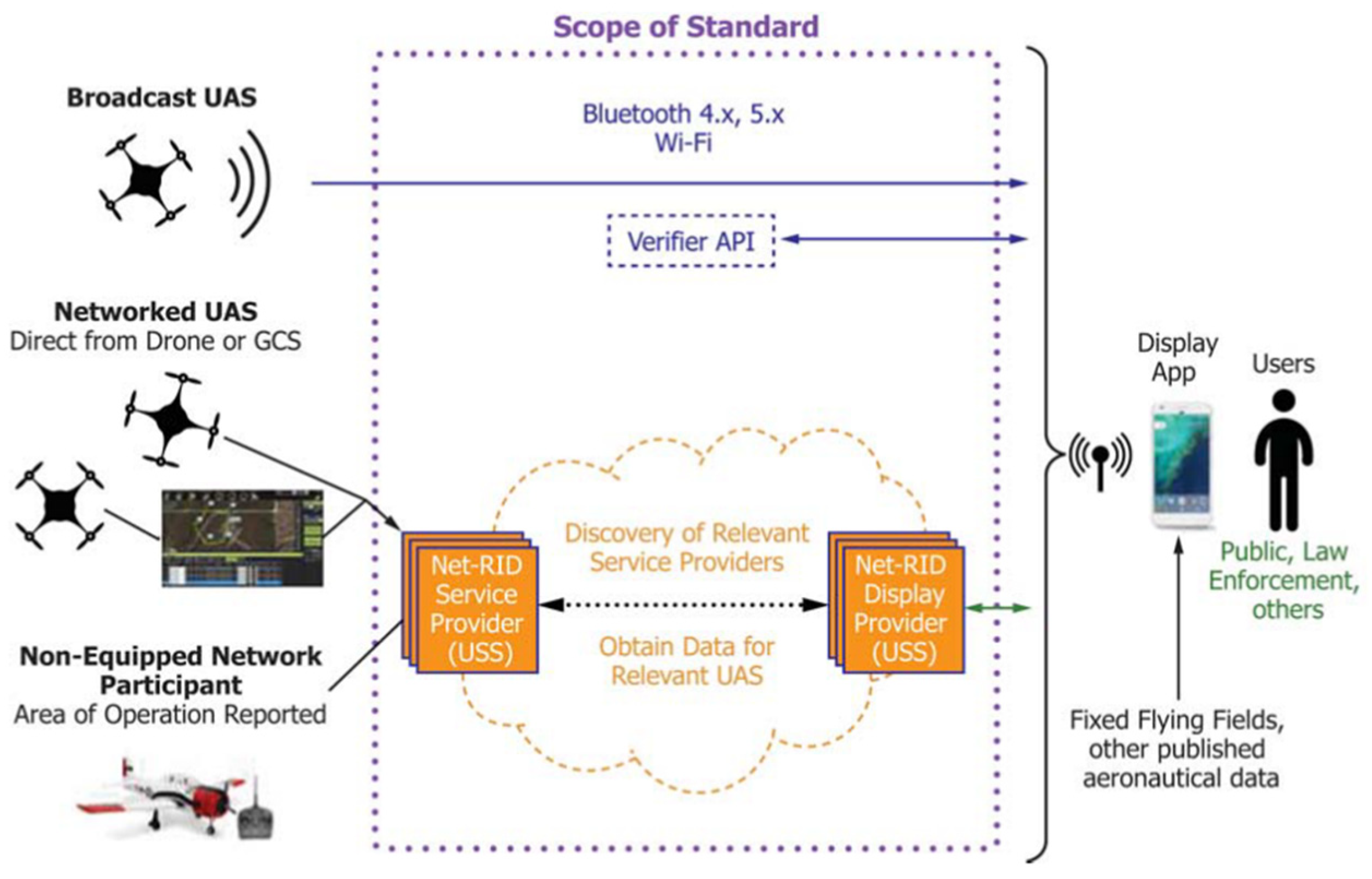

2.1. Network Remote ID

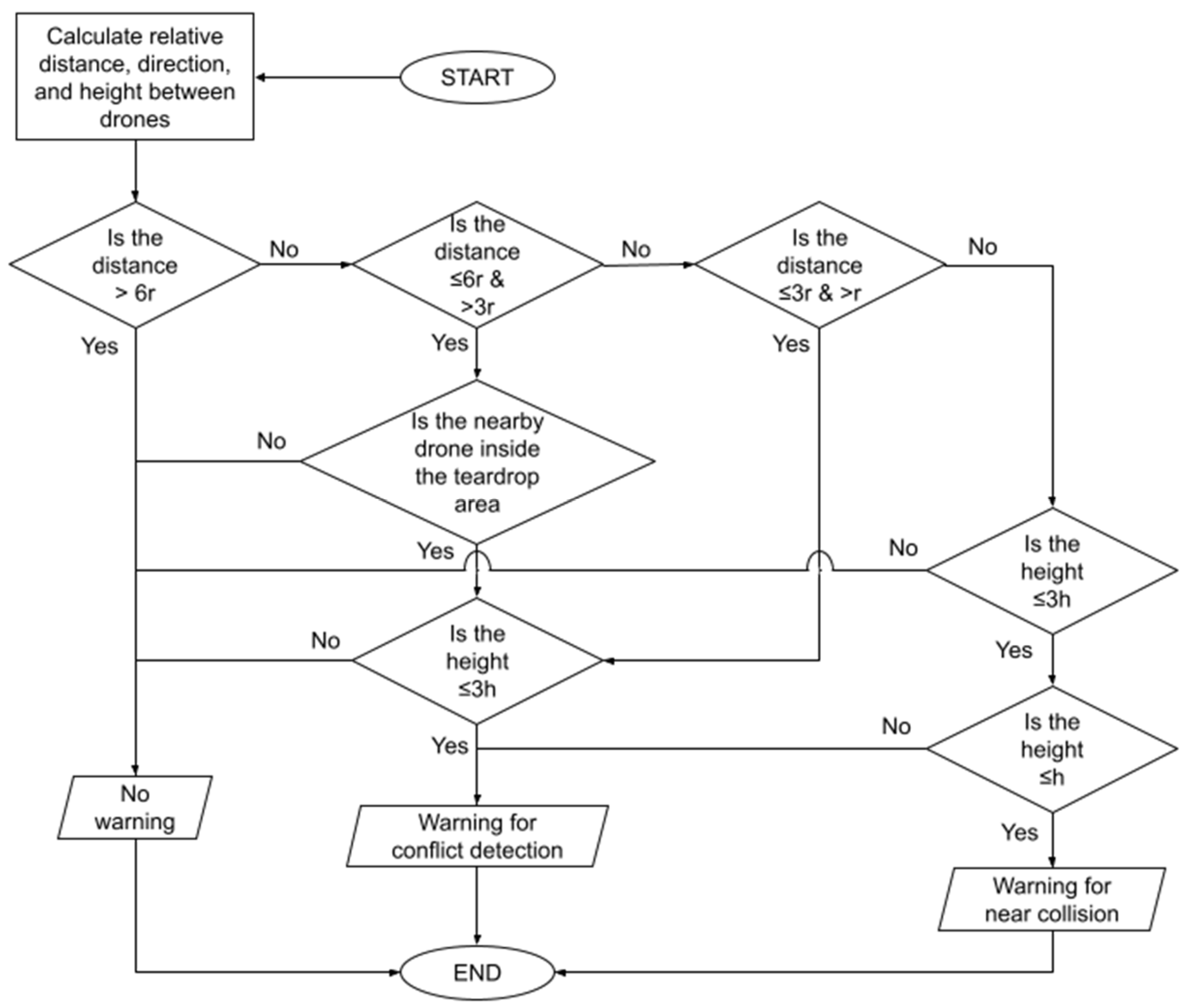

2.2. Conflict Detection Algorithm

2.3. Flight Data Measurement

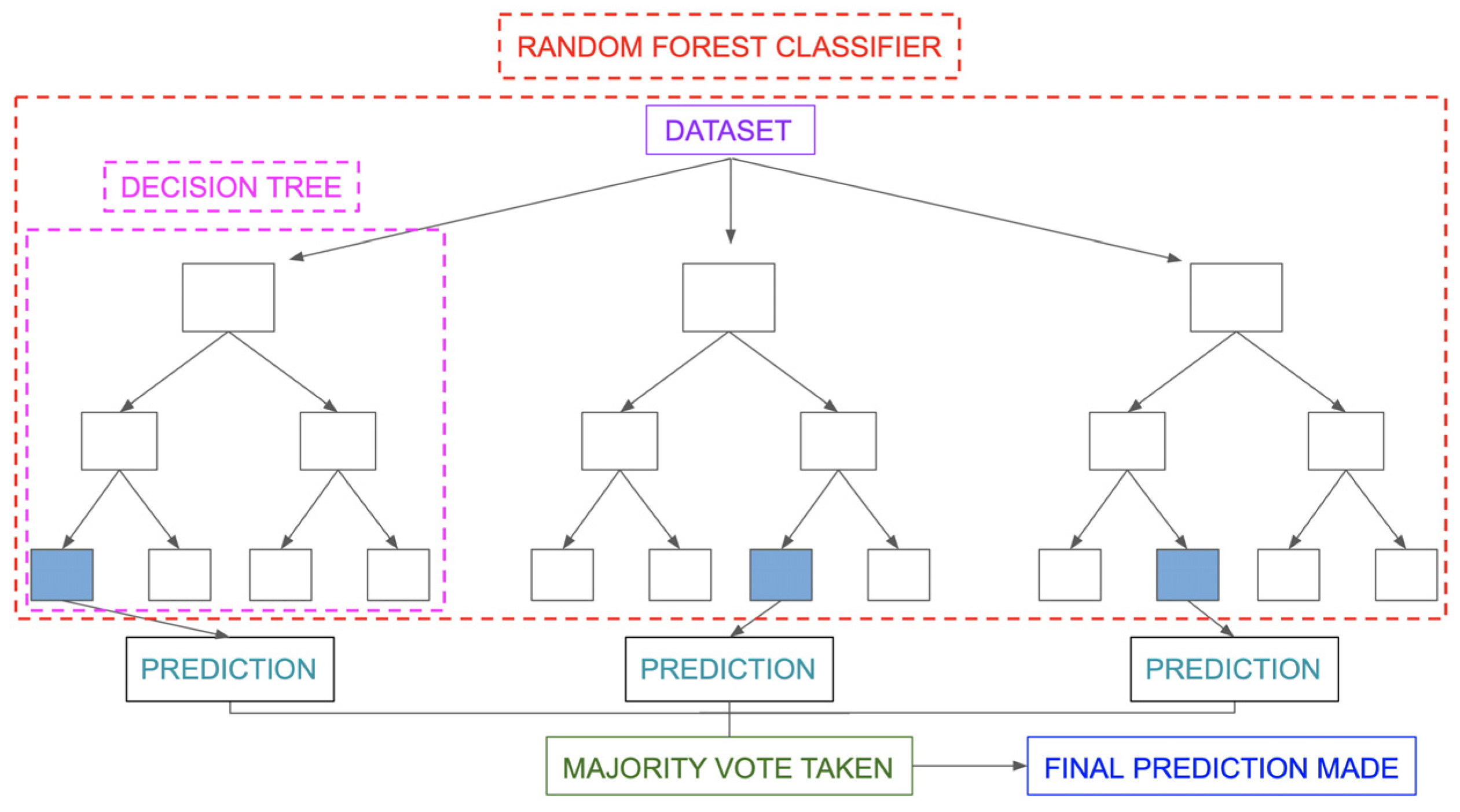

2.4. Machine Learning Algorithm

- Take a bootstrap sample [] of size N from [x, y];

- Use [] as the training data to train the t-th decision nodes by using a binary recursive function;

- Repeat the following steps recursively for each unsplit node until the stopping criteria are met.
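The bootstrap-and-recursive-split procedure listed above can be sketched in a few lines of plain Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the Gini impurity criterion, the square-root feature subsampling, the stopping criteria (`max_depth`, `min_samples`), and all function names are hypothetical choices that the text does not specify.

```python
import random
from collections import Counter


def bootstrap_sample(x, y, rng):
    """Draw N rows with replacement from (x, y), where N = len(x)."""
    n = len(x)
    idx = [rng.randrange(n) for _ in range(n)]
    return [x[i] for i in idx], [y[i] for i in idx]


def gini(labels):
    """Gini impurity of a list of class labels (assumed split criterion)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(x, y, features):
    """Return the (feature, threshold) that lowers weighted Gini most, or None."""
    best, best_score = None, gini(y)
    for f in features:
        for t in sorted({row[f] for row in x}):
            left = [lab for row, lab in zip(x, y) if row[f] <= t]
            right = [lab for row, lab in zip(x, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def grow_tree(x, y, rng, depth=0, max_depth=5, min_samples=2):
    """Binary recursive splitting of each unsplit node until a stopping criterion is met."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(y) < min_samples or len(set(y)) == 1:
        return majority  # leaf node: predict the majority class
    n_features = len(x[0])
    k = max(1, int(n_features ** 0.5))  # assumed random feature subset size
    split = best_split(x, y, rng.sample(range(n_features), k))
    if split is None:
        return majority  # no split reduces impurity
    f, t = split
    left = [(r, lab) for r, lab in zip(x, y) if r[f] <= t]
    right = [(r, lab) for r, lab in zip(x, y) if r[f] > t]
    return (
        f,
        t,
        grow_tree([r for r, _ in left], [lab for _, lab in left],
                  rng, depth + 1, max_depth, min_samples),
        grow_tree([r for r, _ in right], [lab for _, lab in right],
                  rng, depth + 1, max_depth, min_samples),
    )


def random_forest(x, y, n_trees=25, seed=0):
    """Train n_trees trees, each on its own bootstrap sample of (x, y)."""
    rng = random.Random(seed)
    return [grow_tree(*bootstrap_sample(x, y, rng), rng) for _ in range(n_trees)]


def predict_tree(node, row):
    """Walk from the root to a leaf and return the leaf's class label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node


def predict_forest(trees, row):
    """Majority vote over all trees in the forest."""
    return Counter(predict_tree(tree, row) for tree in trees).most_common(1)[0][0]
```

In practice a library implementation such as scikit-learn's `RandomForestClassifier` would normally be preferred; the sketch only makes the bootstrap, recursive-split, and voting steps explicit.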

3. Methodology

3.1. UTM Monitoring Application Setup

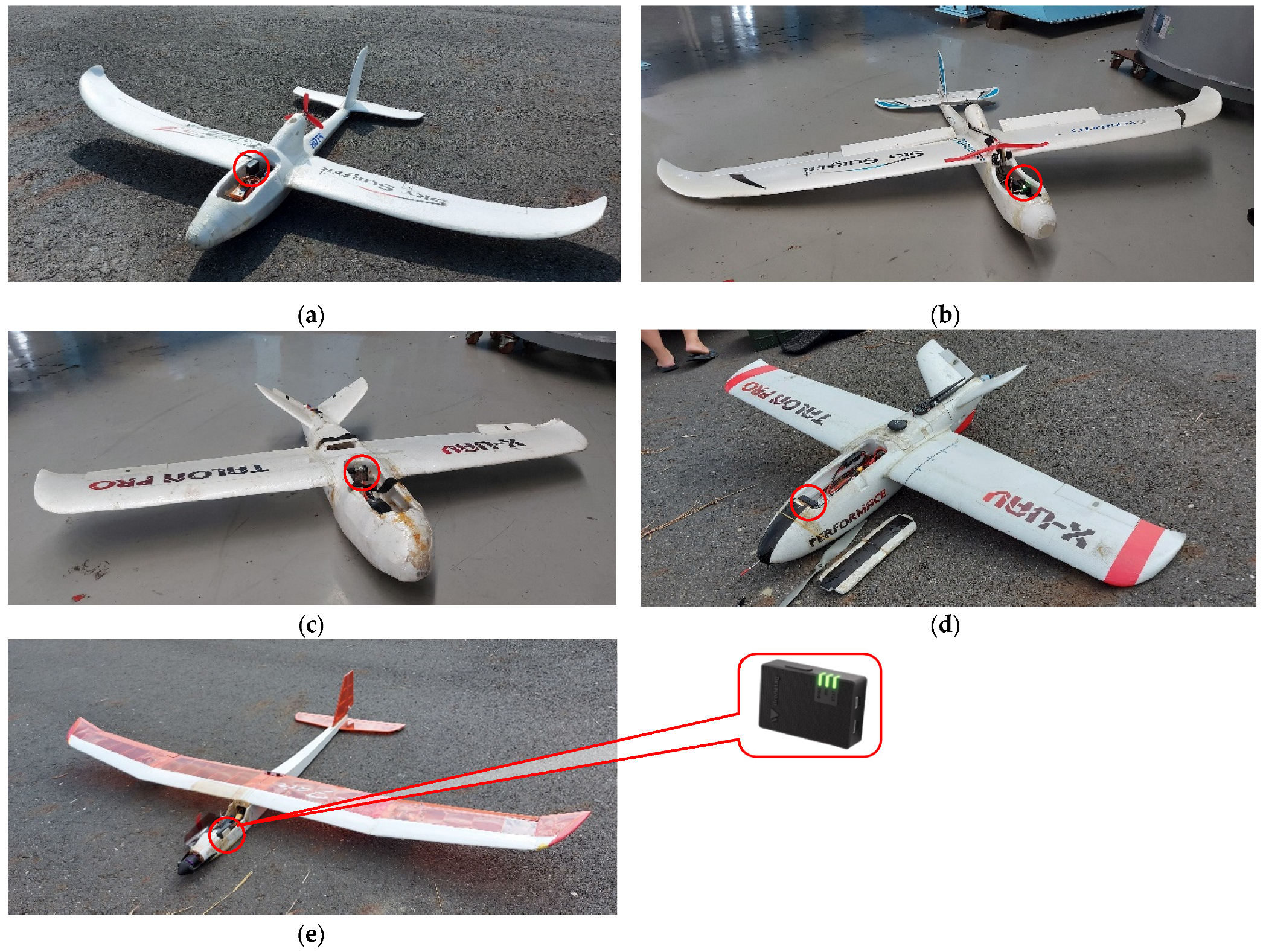

3.2. Remote ID and UAV Hardware

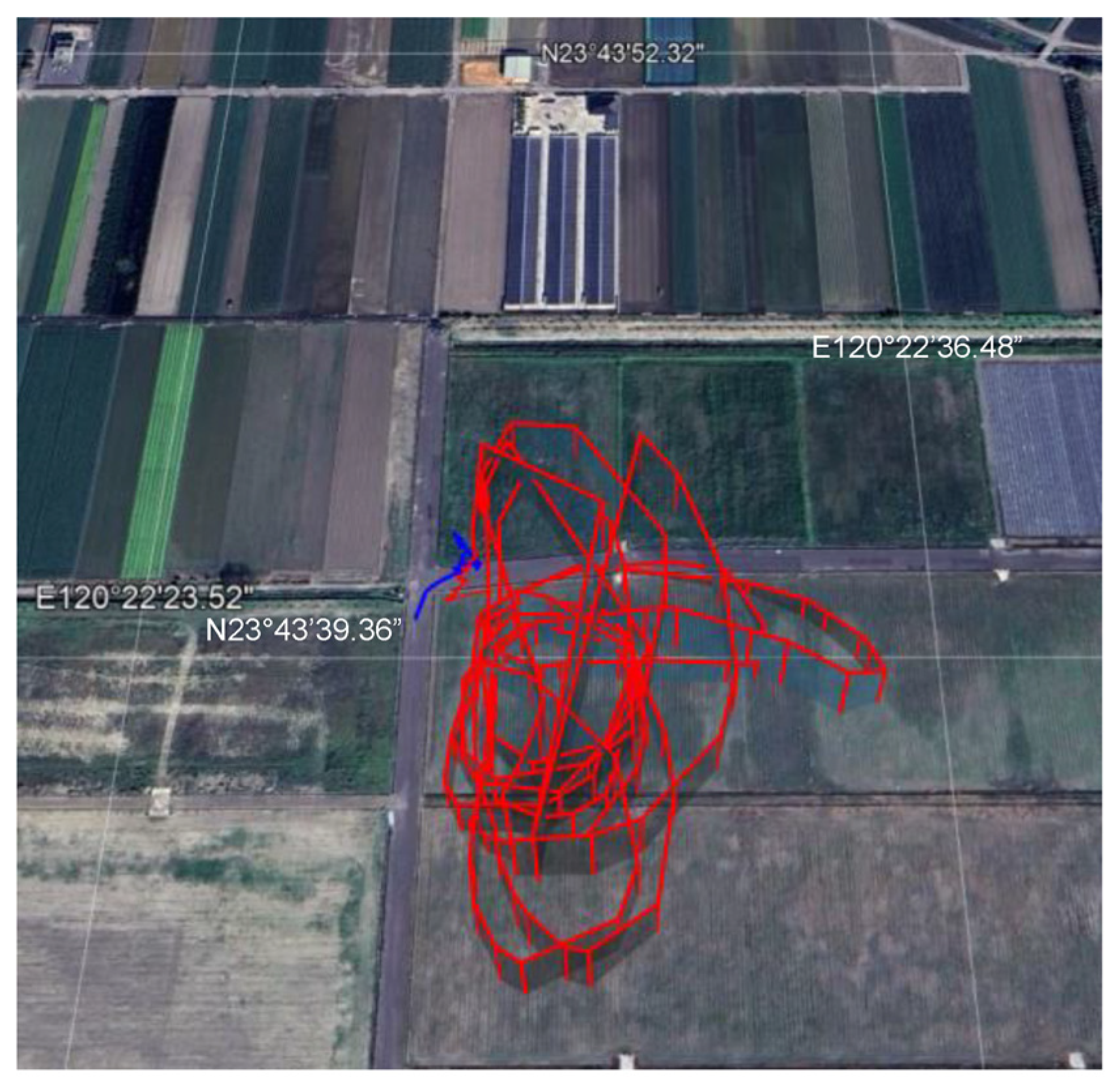

3.3. Location and Flight Test Scenarios

4. Flight Test Results and Discussion

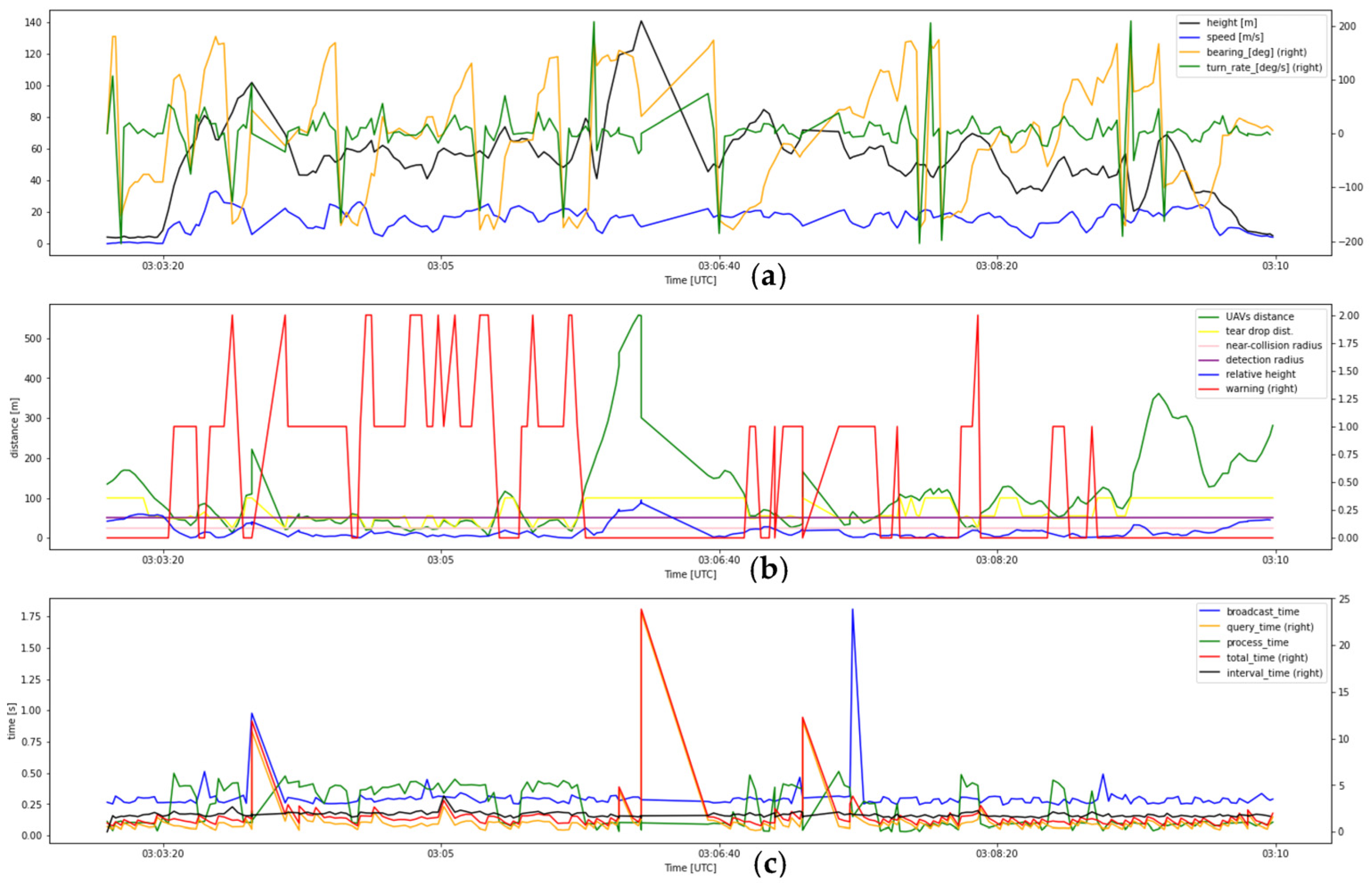

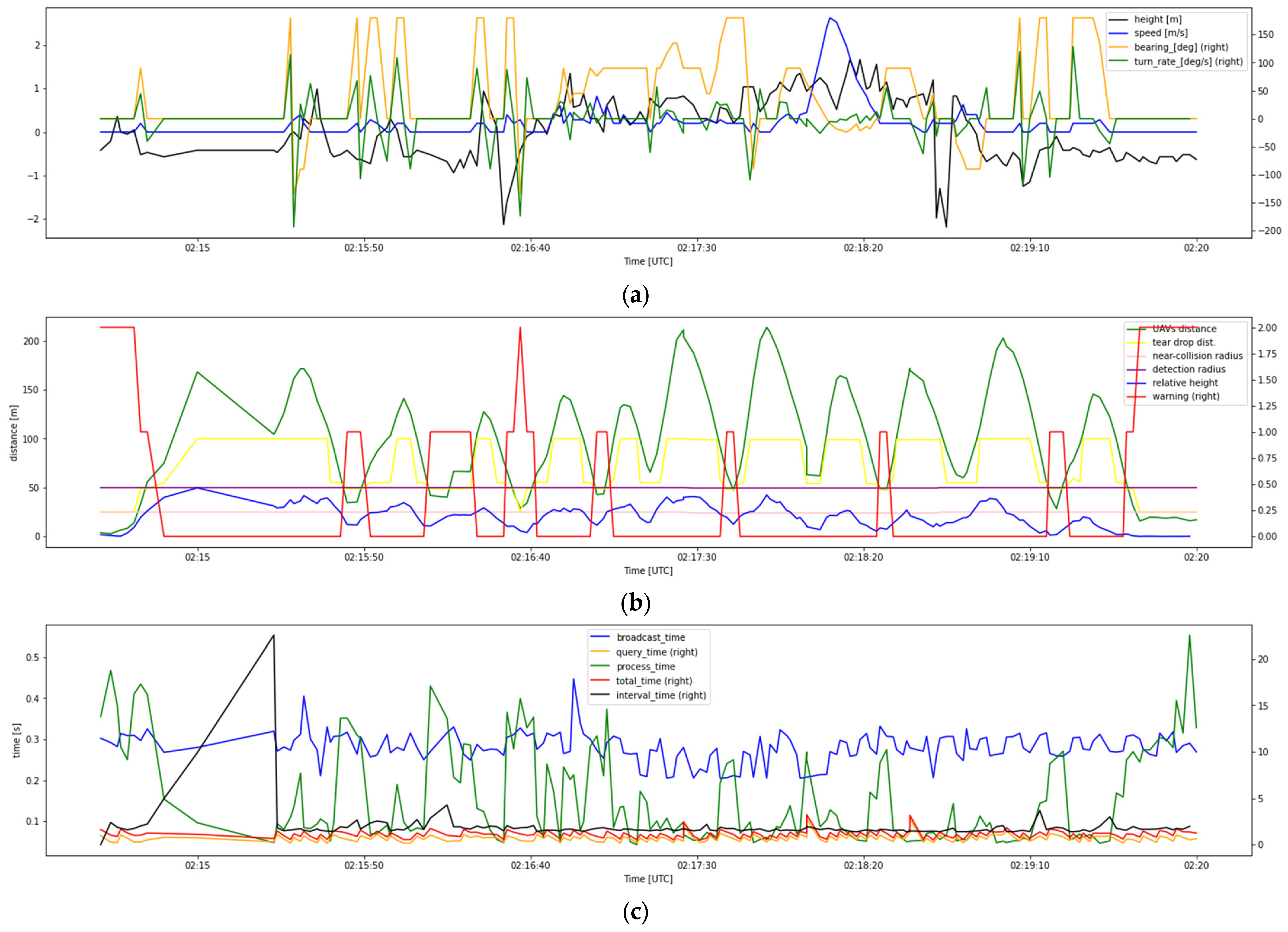

4.1. Flight Test Records

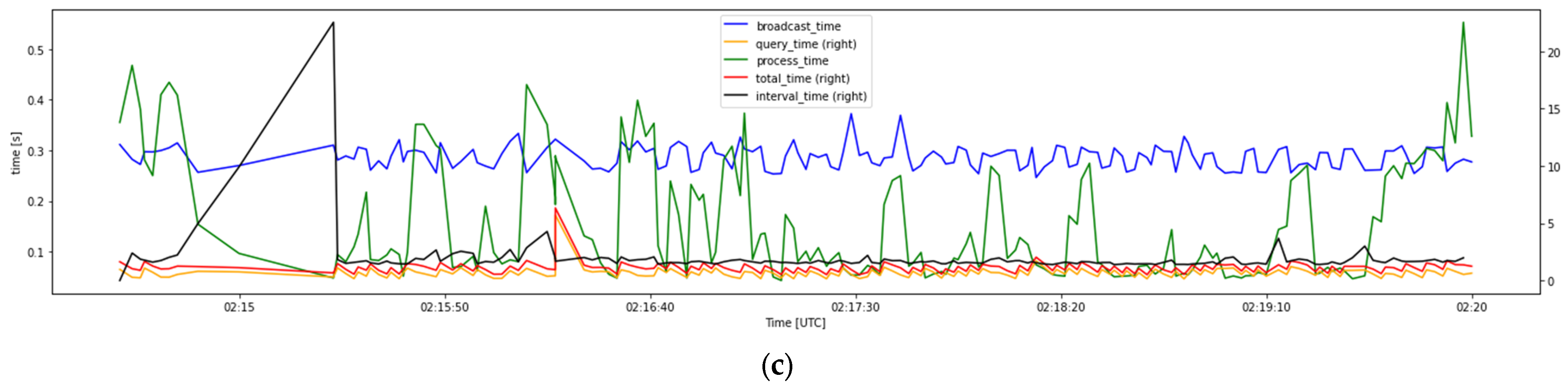

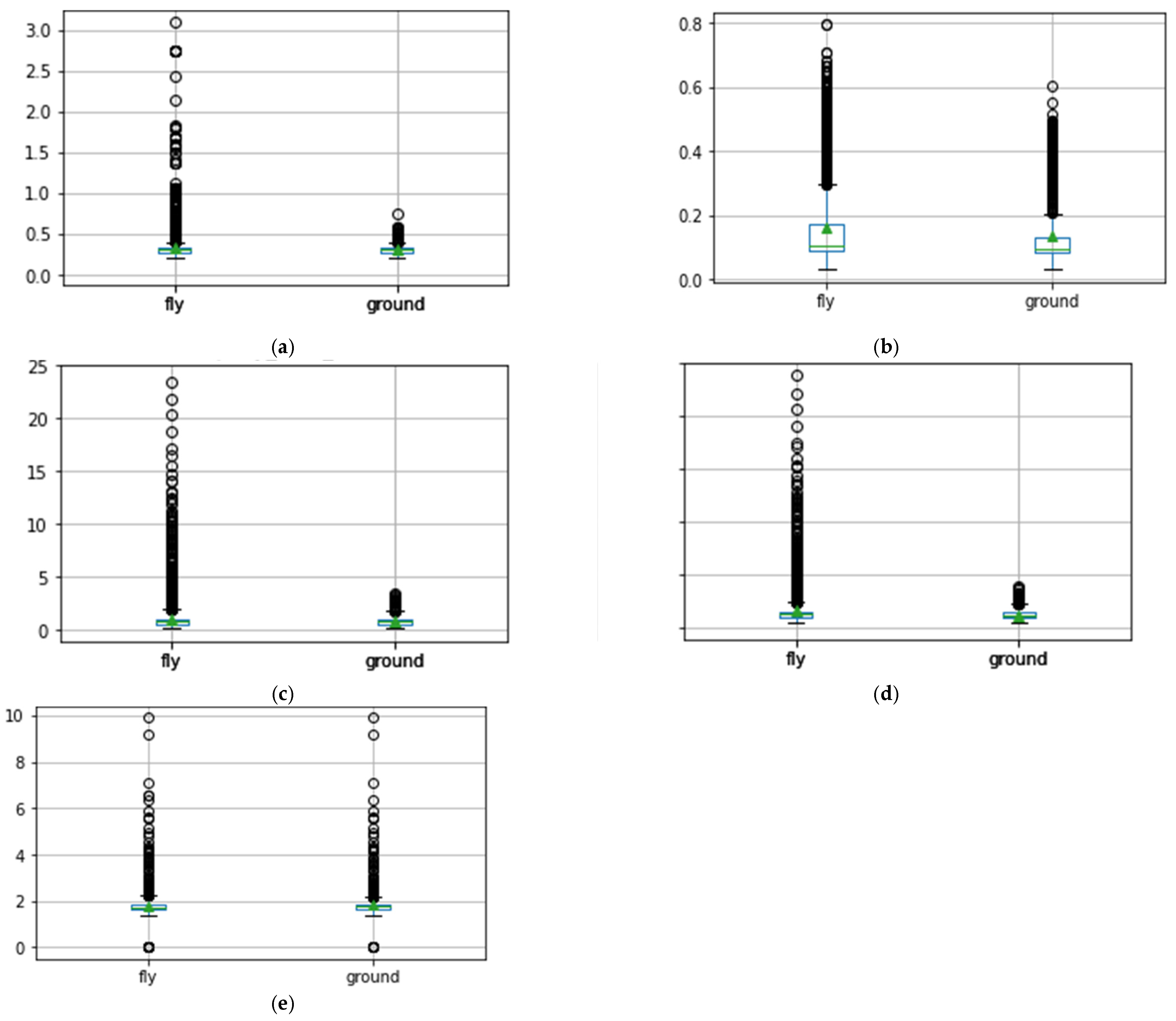

4.2. Latency Time Analysis

4.2.1. Livelihood of Location Analysis

4.2.2. Flying Condition Analysis

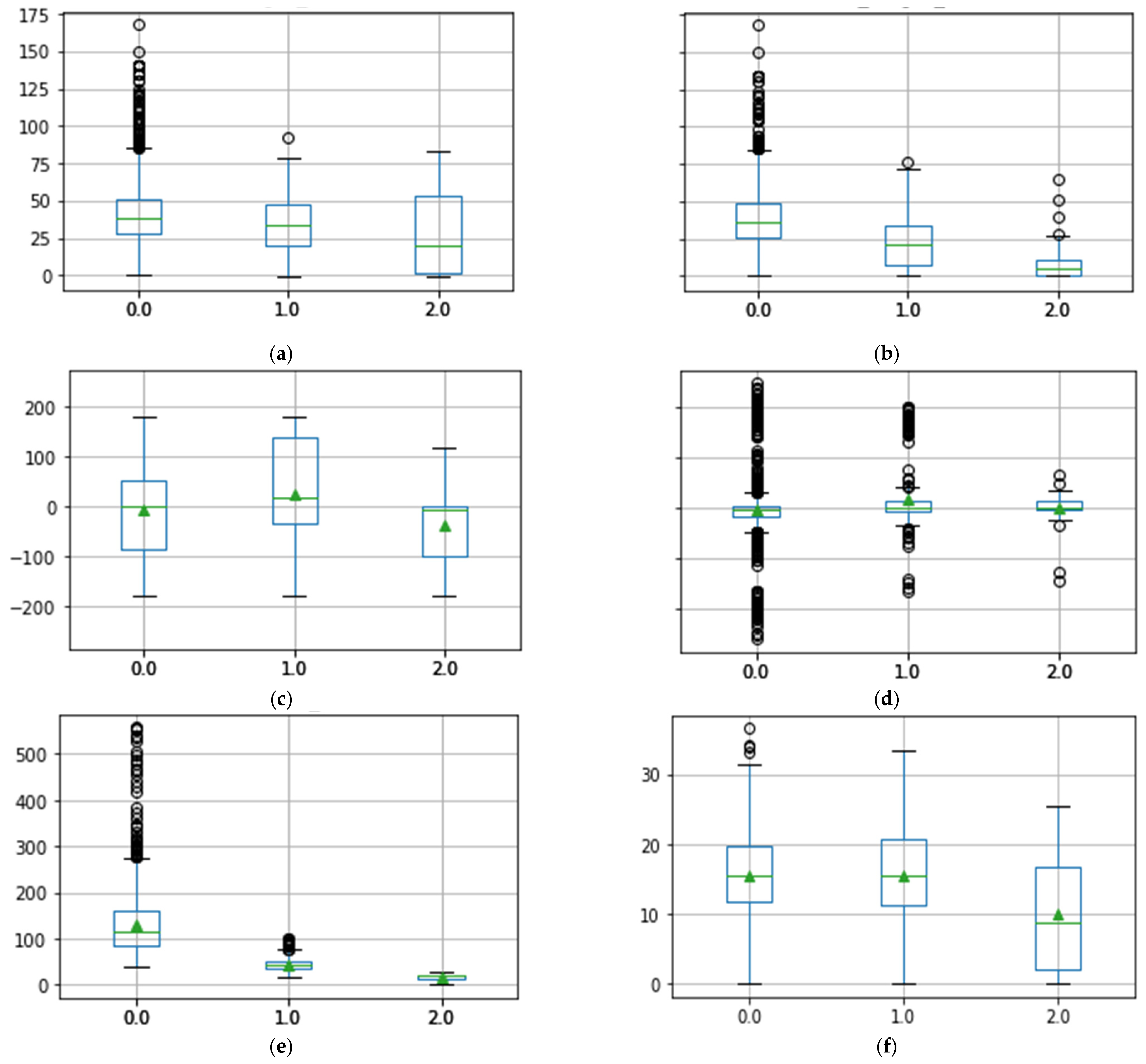

4.3. Detection Warning Analysis

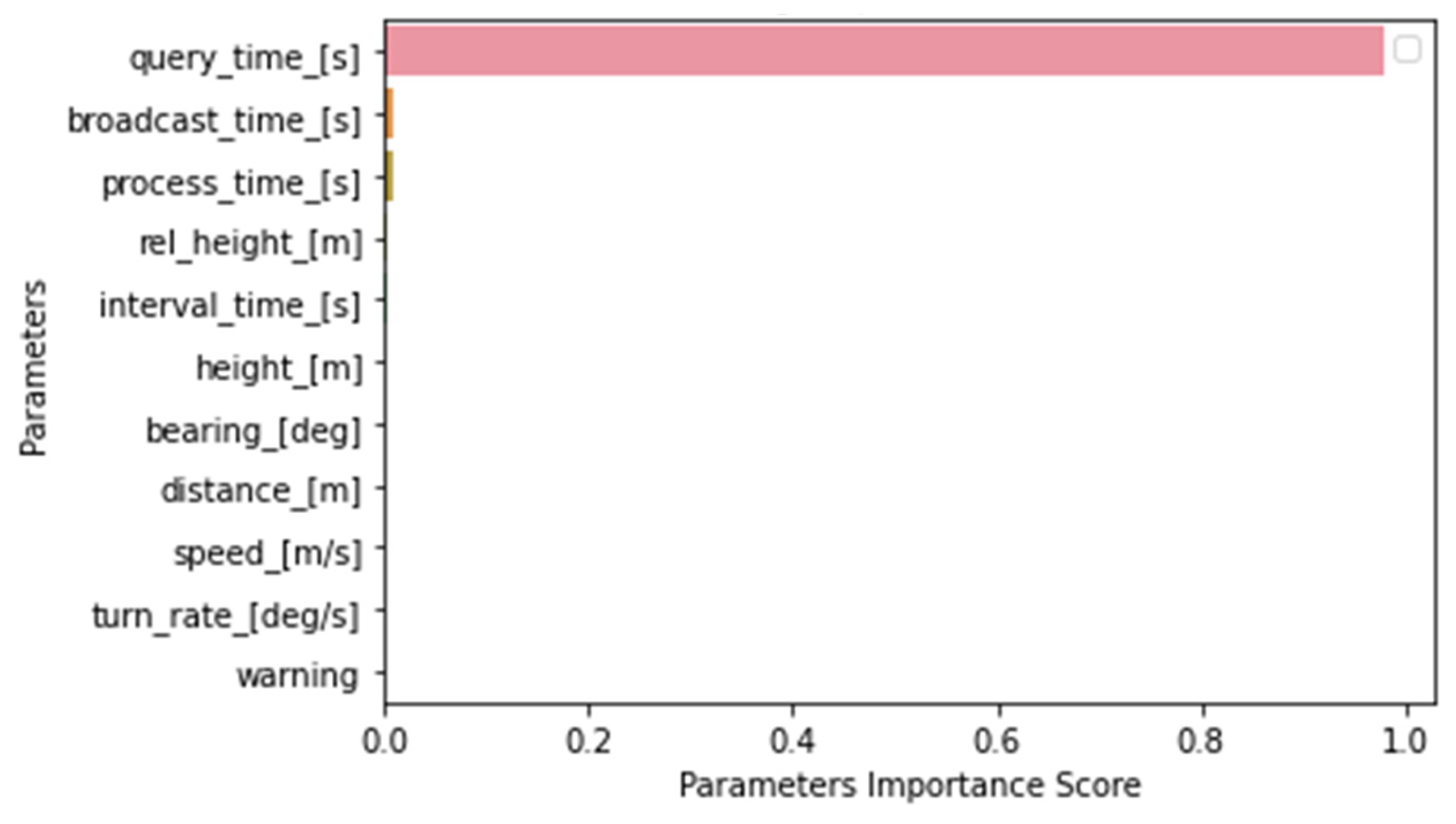

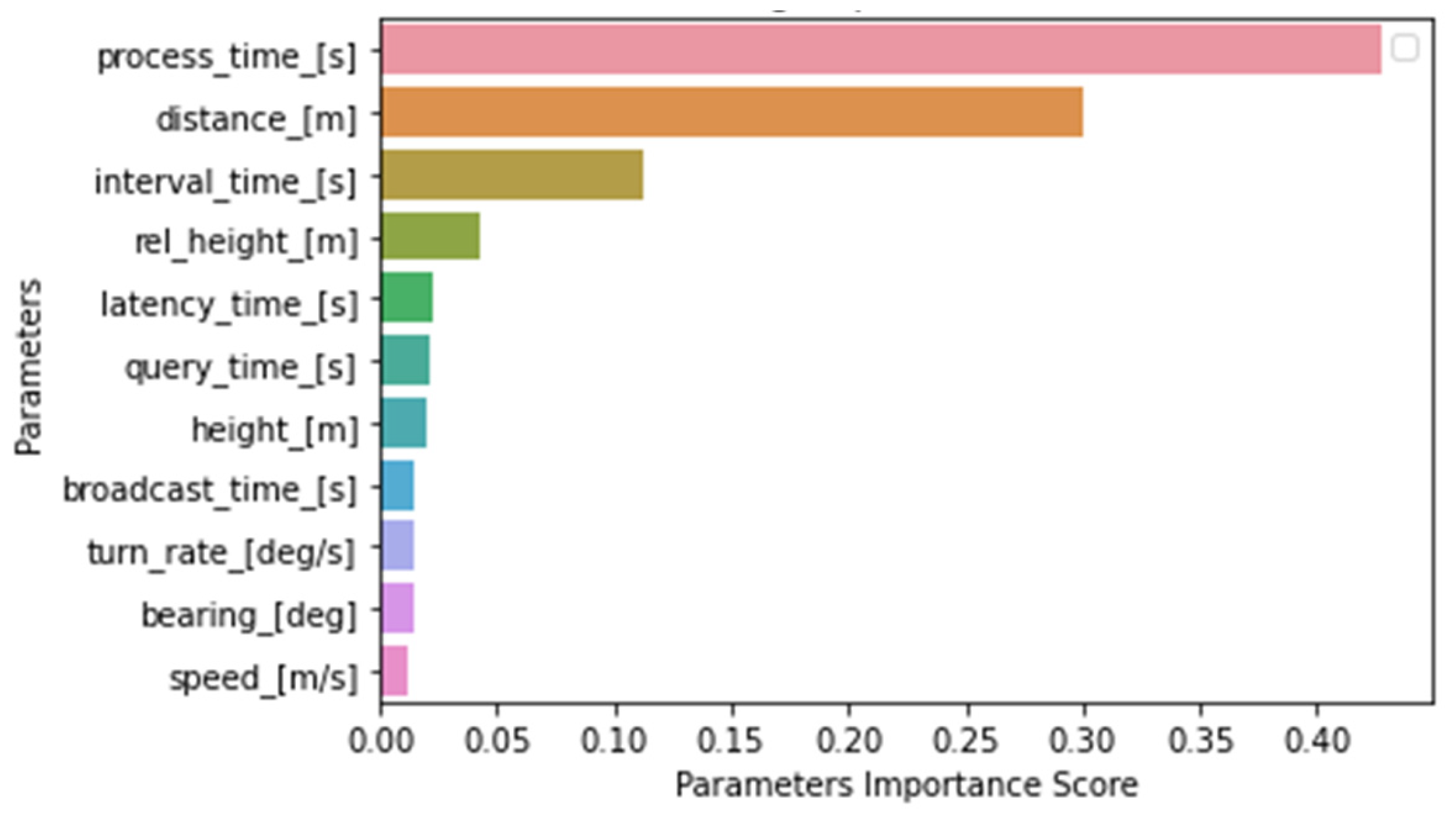

4.4. Random Forest Analysis

4.4.1. Latency Time Analysis

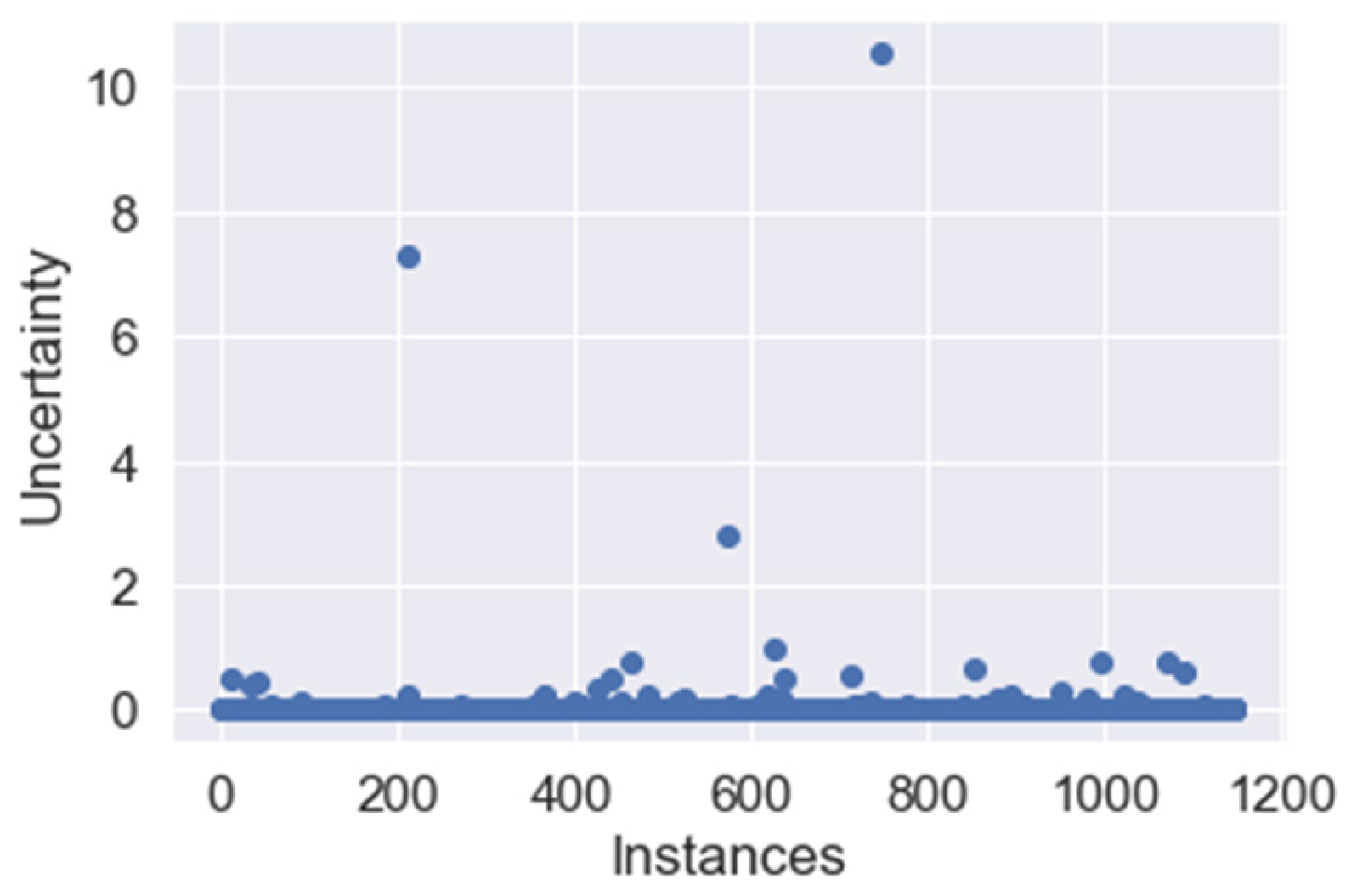

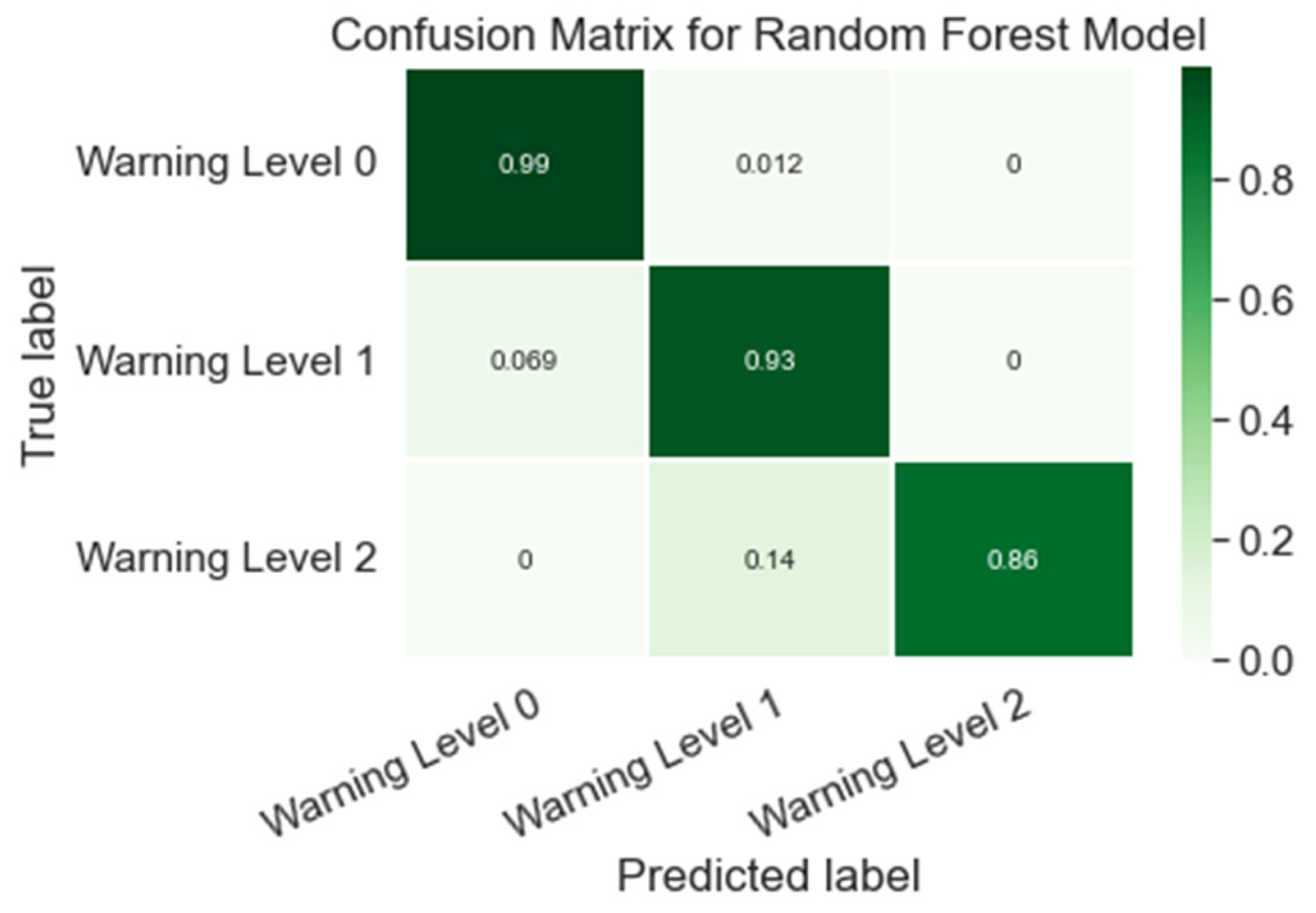

4.4.2. Detection Warning Analysis

5. Conclusions and Recommendations

5.1. Conclusions

5.2. Recommendations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

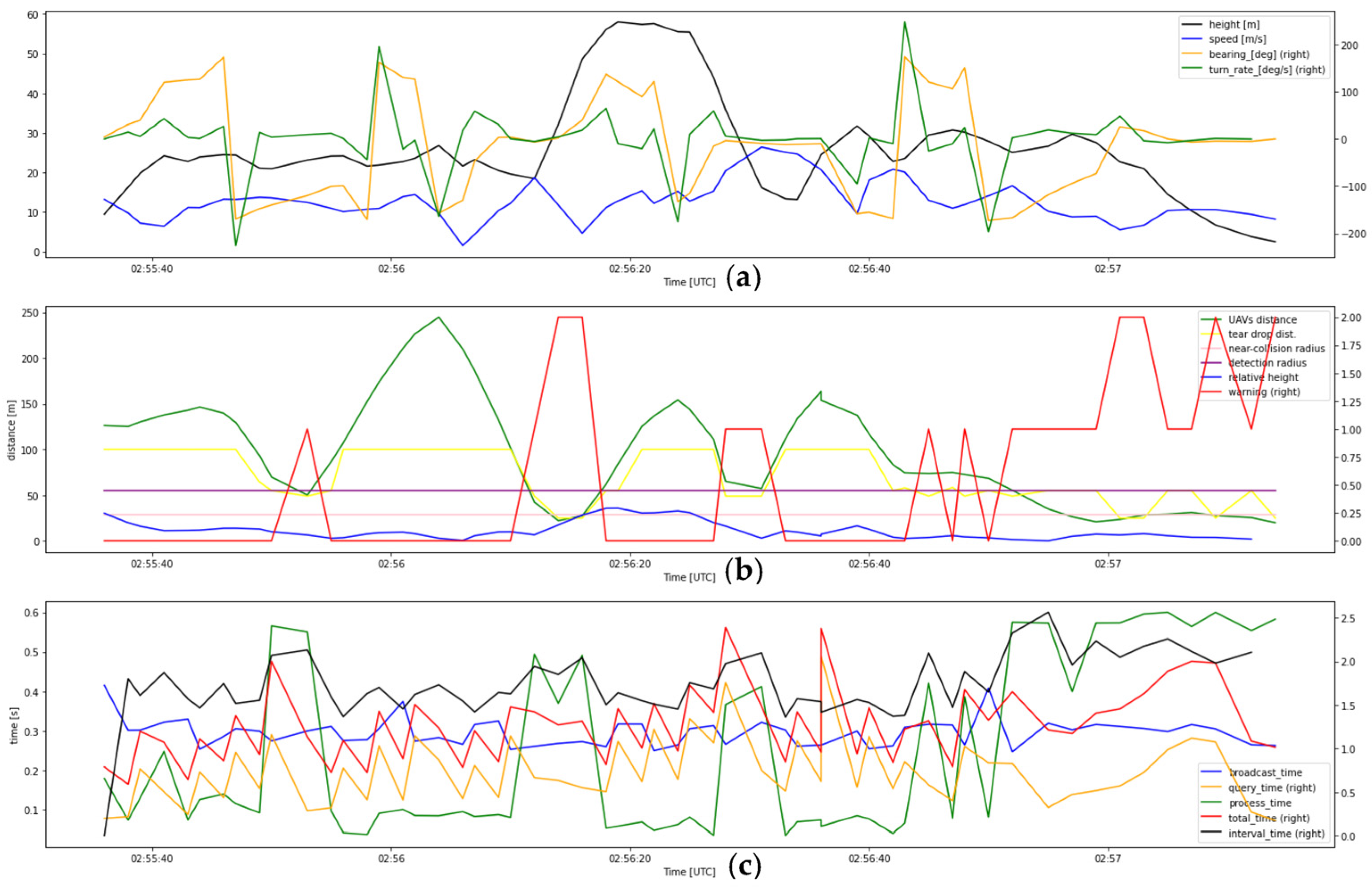

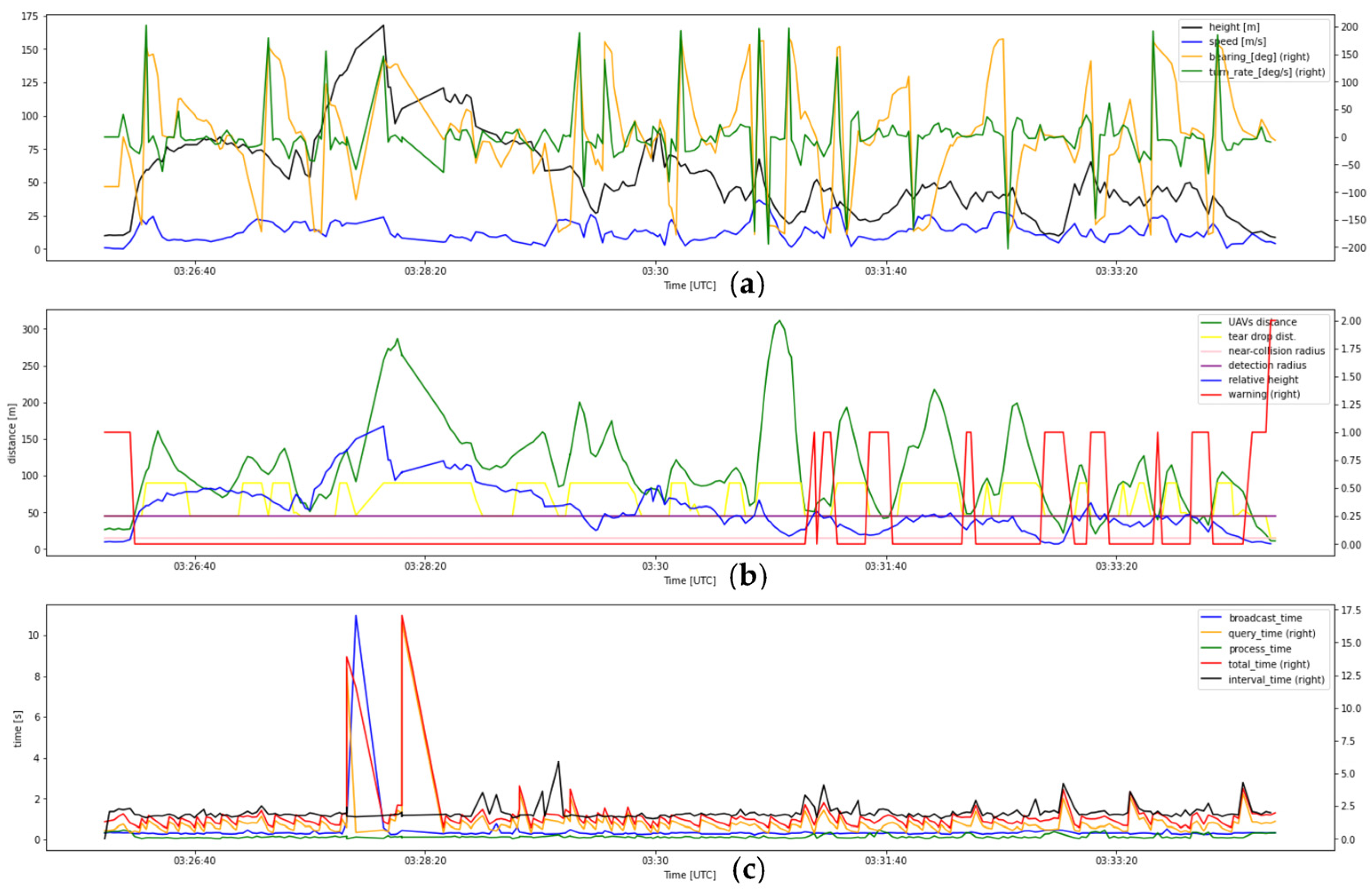

- Flight test data of record number 1

- Flight test data of record number 2

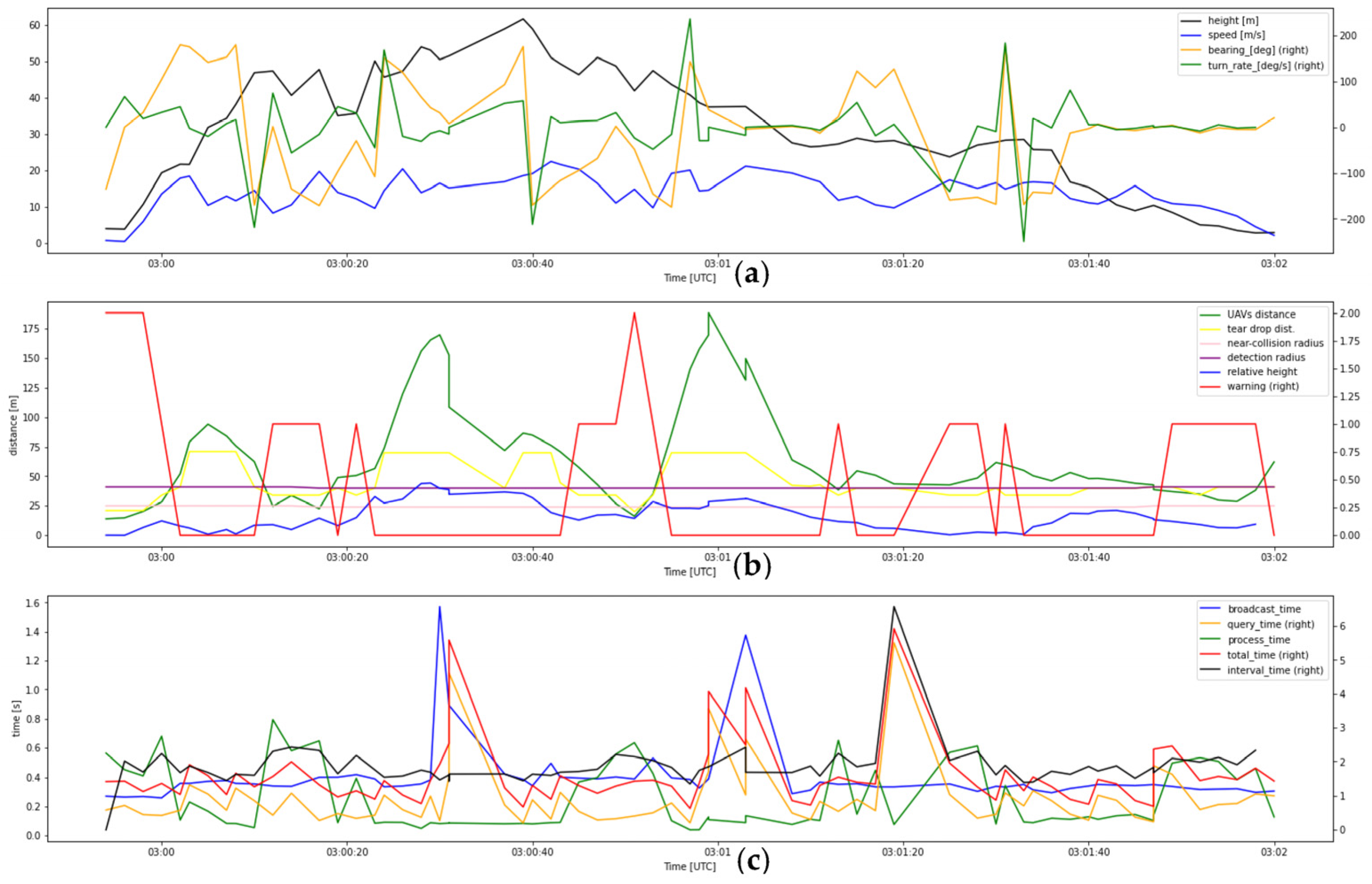

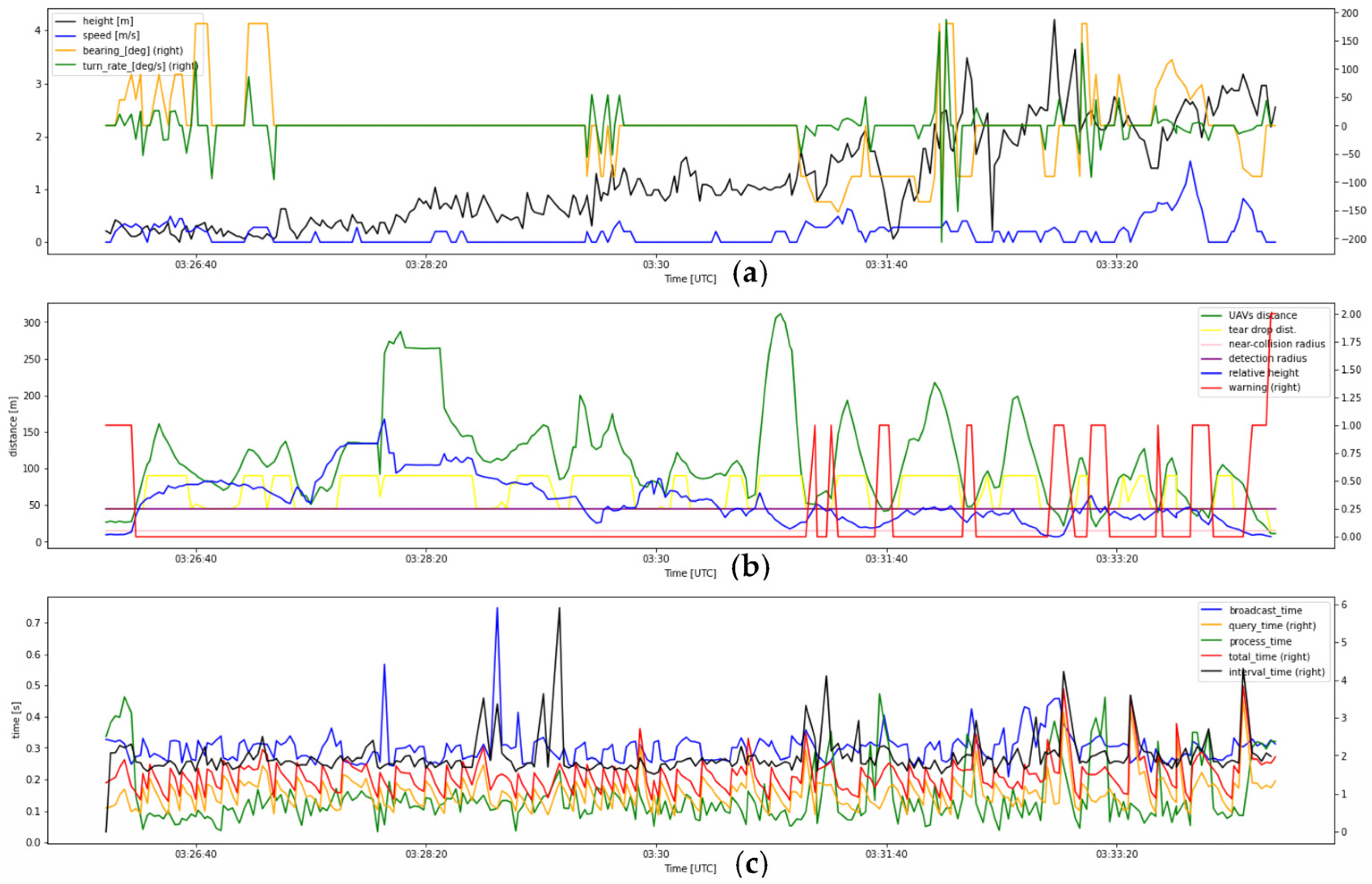

- Flight test data of record number 3

- Flight test data of record number 4

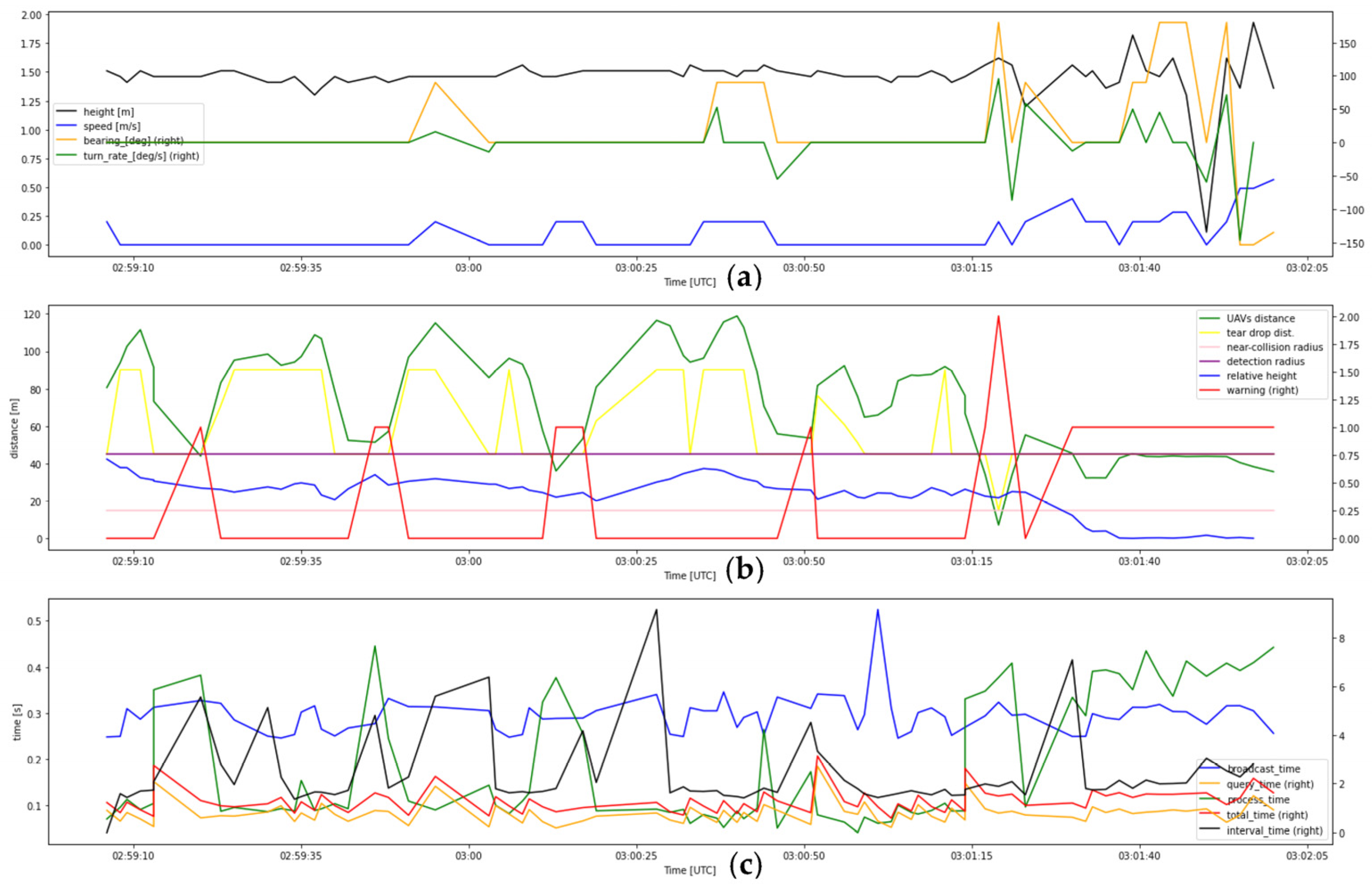

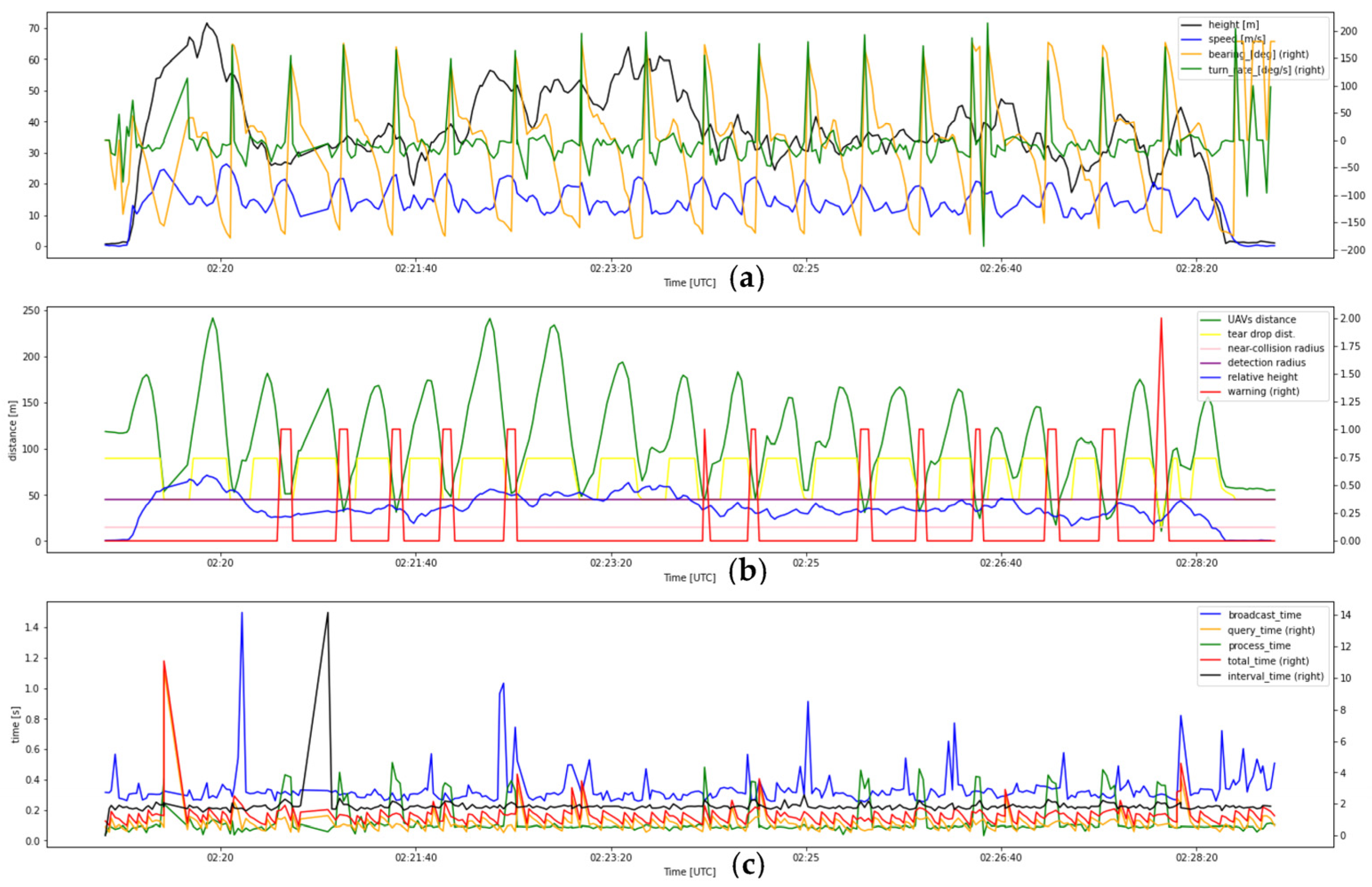

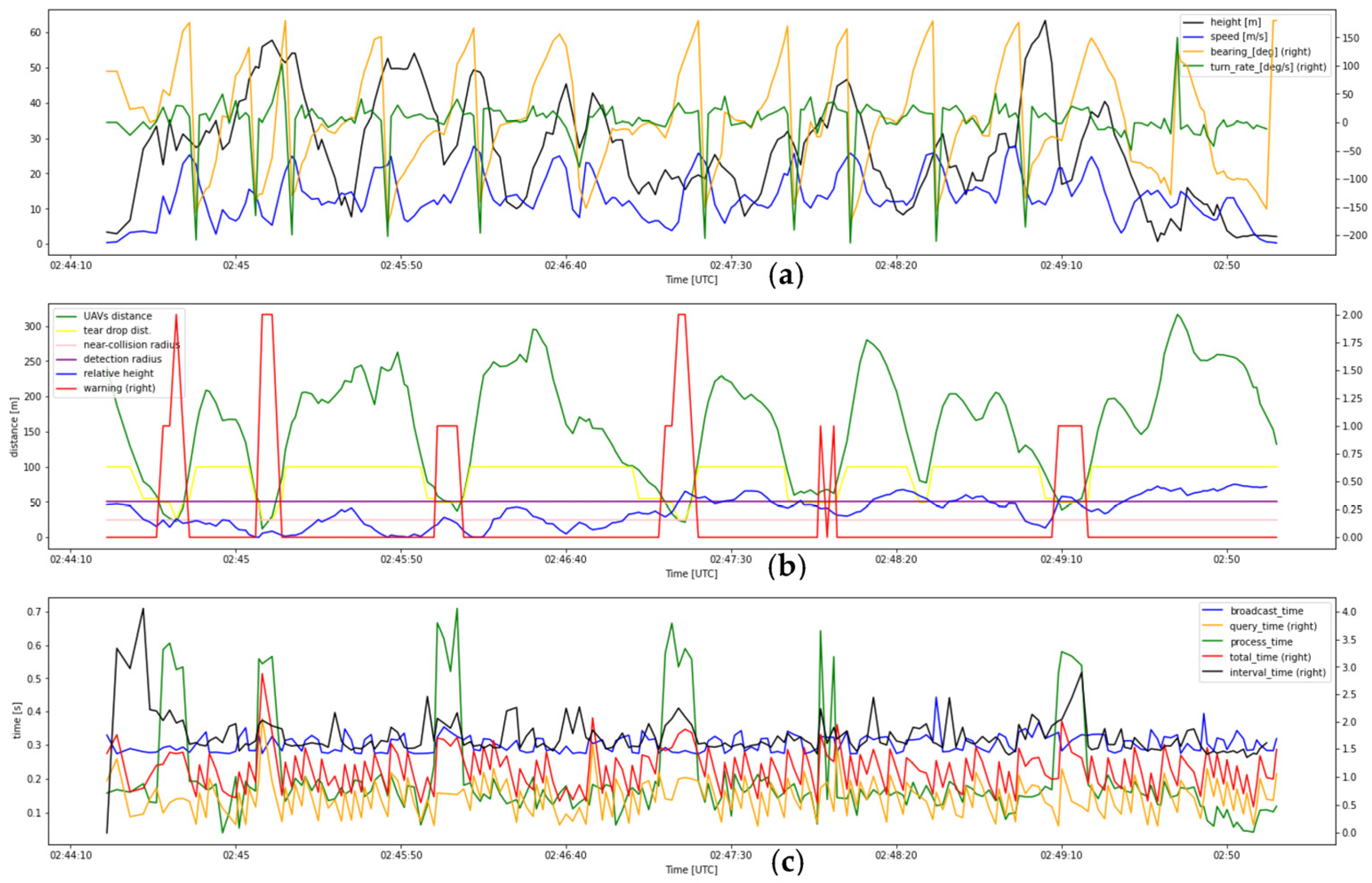

- Flight test data of record number 5

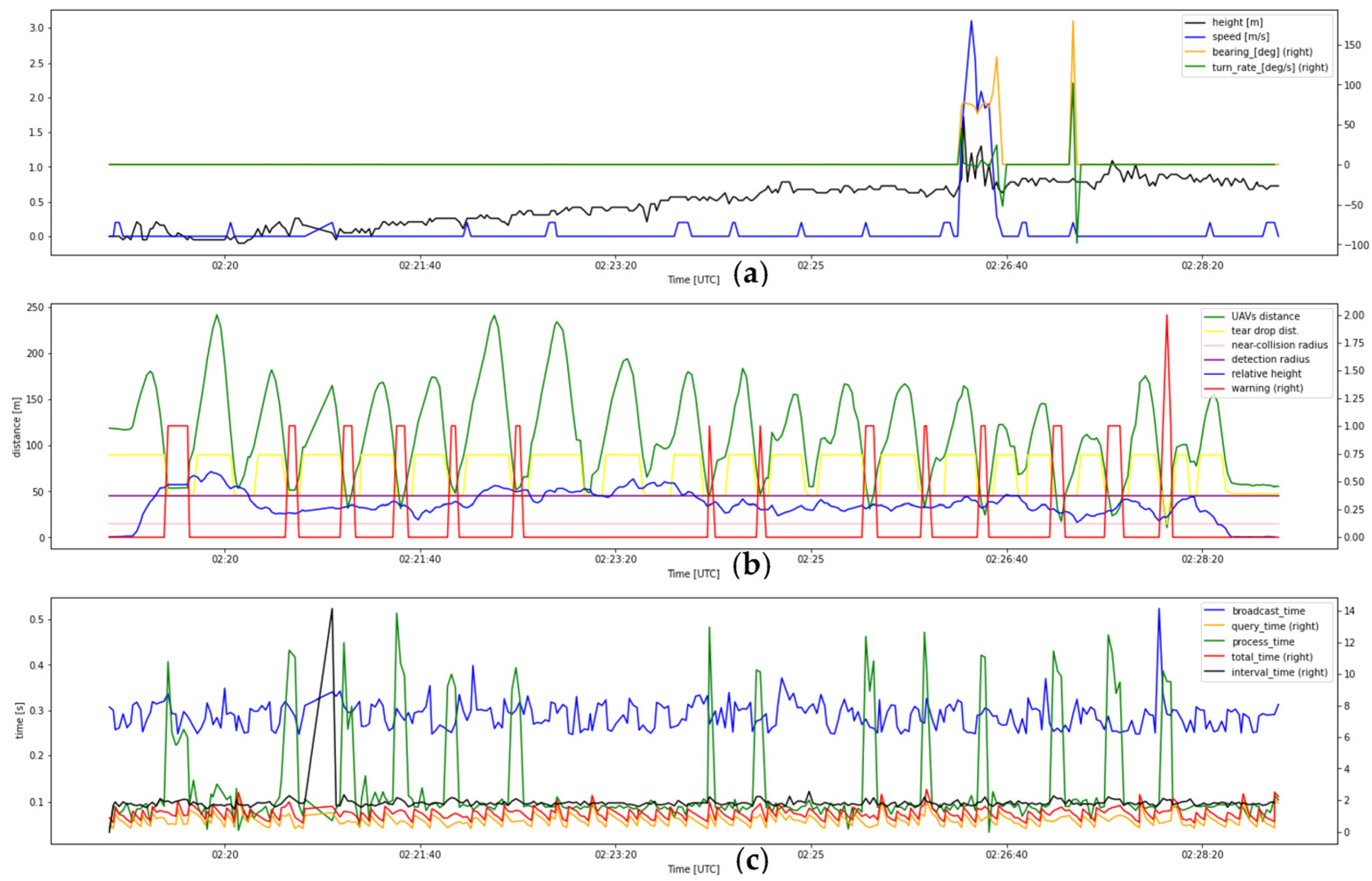

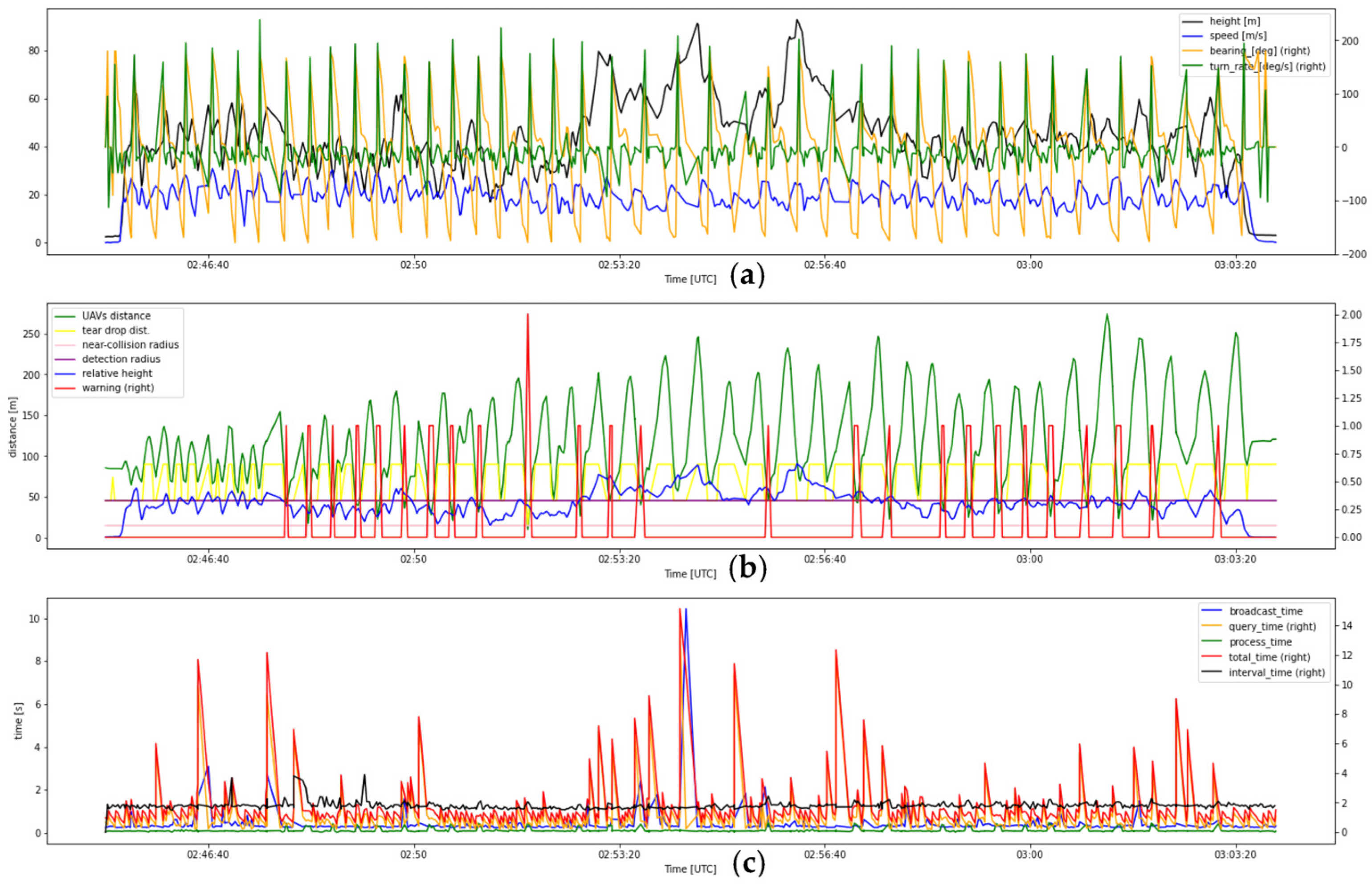

- Flight test data of record number 6

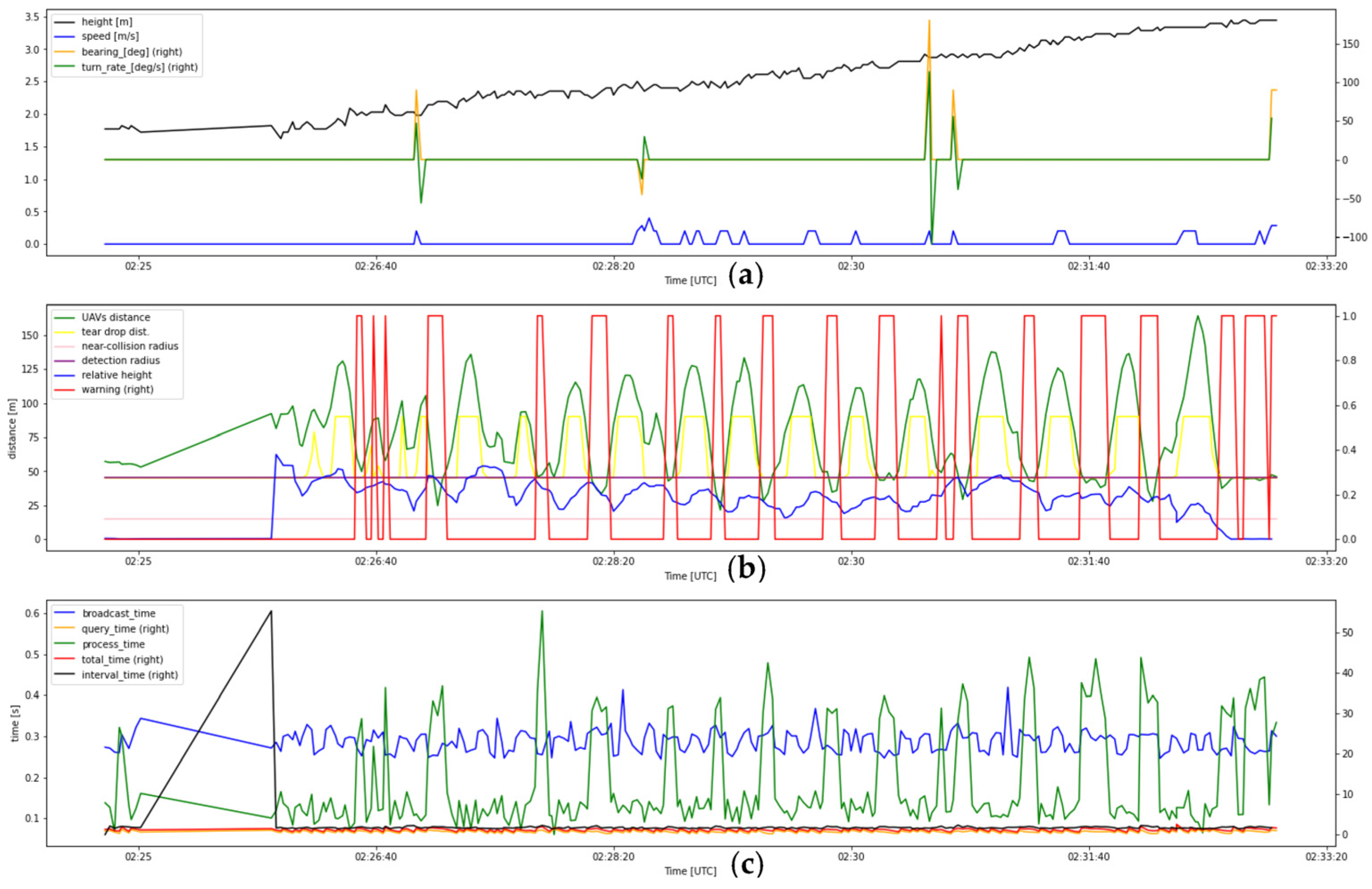

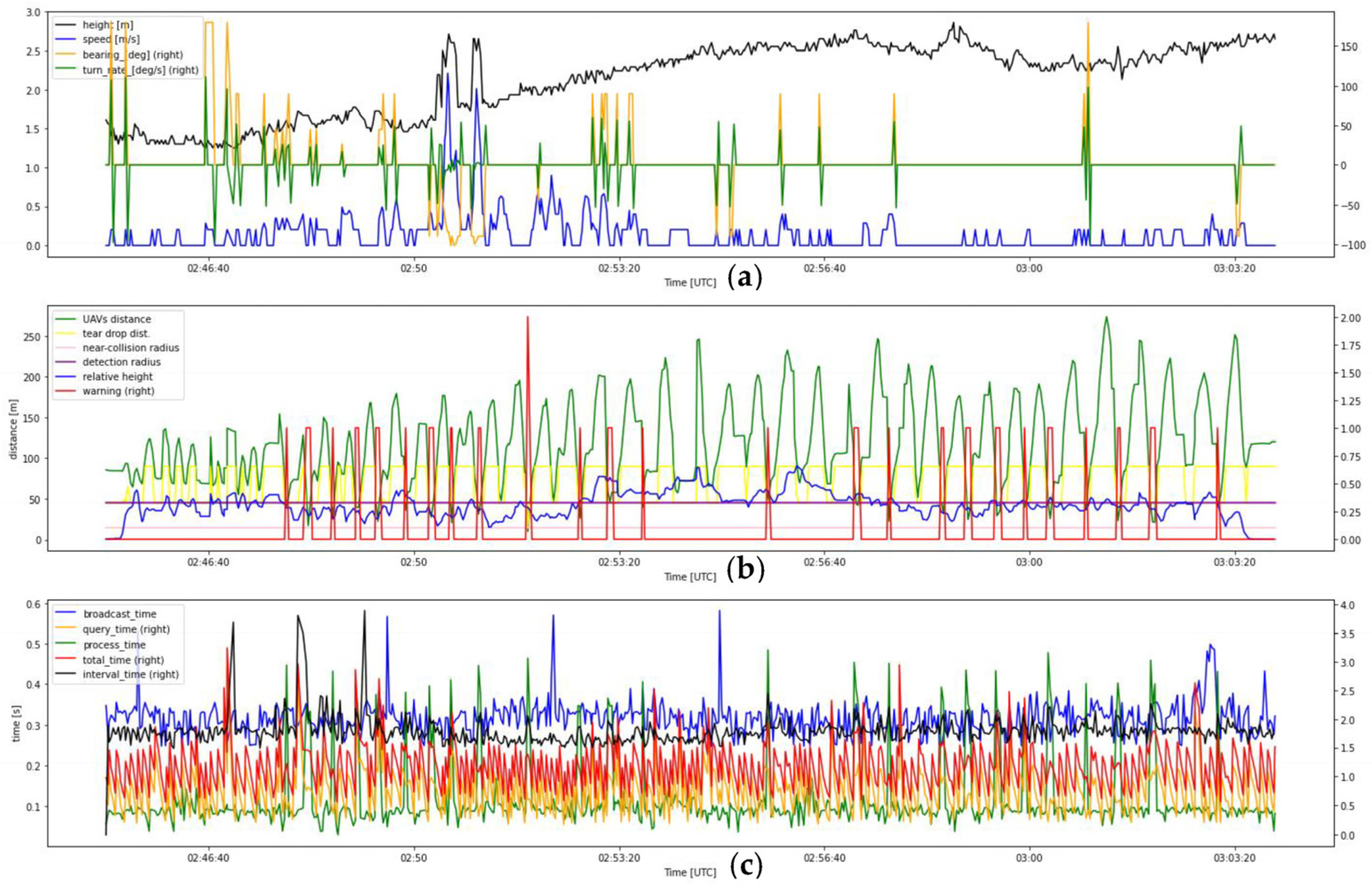

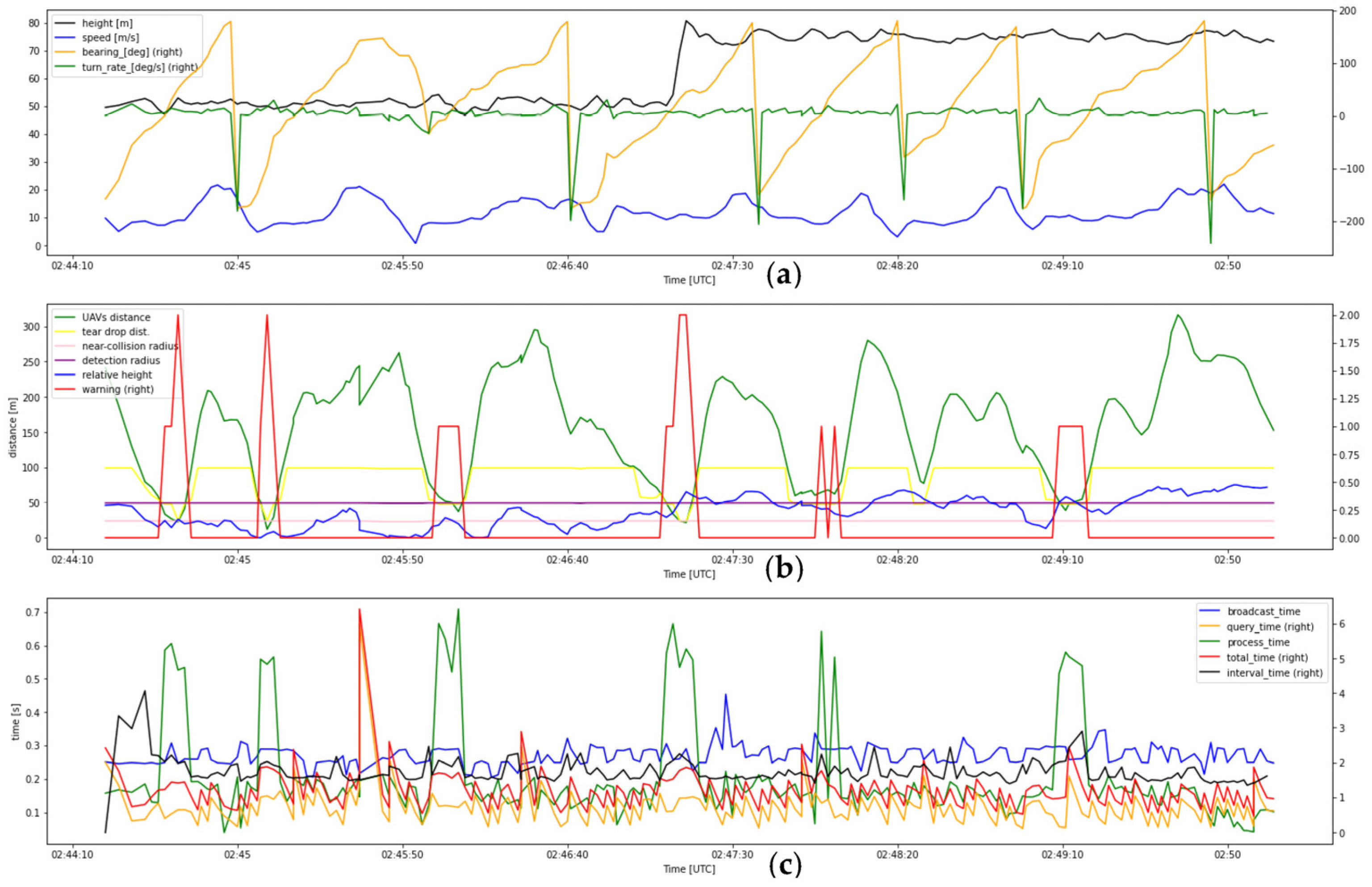

- Flight test data of record number 7

- Flight test data of record number 9

- Flight test data of record number 10

References

- Moshref-Javadi, M.; Winkenbach, M. Applications and Research avenues for drone-based models in logistics: A classification and review. Expert Syst. Appl. 2021, 177, 114854.

- Gallacher, D. Drone Applications for Environmental Management in Urban Spaces: A Review. Int. J. Sustain. Land Use Urban Plan. 2017, 3, 1–14.

- Orusa, T.; Viani, A.; Moyo, B.; Cammareri, D.; Borgogno-Mondino, E. Risk Assessment of Rising Temperatures Using Landsat 4–9 LST Time Series and Meta® Population Dataset: An Application in Aosta Valley, NW Italy. Remote Sens. 2023, 15, 2348.

- Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-based remote sensing technique to detect citrus canker disease utilizing hyperspectral imaging and machine learning. Remote Sens. 2019, 11, 1373.

- Dilshad, N.; Hwang, J.Y.; Song, J.S.; Sung, N.M. Applications and Challenges in Video Surveillance via Drone: A Brief Survey. In Proceedings of the International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 21–23 October 2020; pp. 728–732.

- Simic Milas, A.; Cracknell, A.P.; Warner, T.A. Drones—The third generation source of remote sensing data. Int. J. Remote Sens. 2018, 39, 7125–7137.

- Kuchar, J.K.; Yang, L.C. A Review of Conflict Detection and Resolution Modeling Methods. IEEE Trans. Intell. Transp. Syst. 2000, 1, 179–189.

- Baum, M.S. Unmanned Aircraft Systems Traffic Management: UTM; CRC Press: Boca Raton, FL, USA, 2022; ISBN 9780367644734.

- Hu, J.; Yang, X.; Wang, W.; Wei, P.; Ying, L.; Liu, Y. UAS Conflict Resolution in Continuous Action Space using Deep Reinforcement Learning. In Proceedings of the AIAA Aviation 2020 Forum, Virtual, 15–19 June 2020.

- Guo, Q.; Zhang, J.; Guo, S.; Ye, Z.; Deng, H.; Hou, X.; Zhang, H. Urban Tree Classification Based on Object-Oriented Approach and Random Forest Algorithm Using Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2022, 14, 3885.

- Arteaga, R.; Dandachy, M.; Truong, H.; Aruljothi, A.; Vedantam, M.; Epperson, K.; Mccartney, R.; Systems, V.A.; City, O.; Aerospace, V.; et al. µADS-B Detect and Avoid Flight Tests on Phantom 4 Unmanned Aircraft System. In Proceedings of the AIAA Infotech @ Aerospace Conference, Kissimmee, FL, USA, 8–12 January 2018.

- Mitchell, T.; Hartman, M.; Jacob, J.D. Testing and Evaluation of UTM Systems in a BVLOS Environment. In Proceedings of the AIAA Aviation Forum, Virtual, 15–19 June 2020.

- Schelle, A.; Völk, F.; Schwarz, R.T.; Knopp, A.; Stütz, P. Evaluation of a Multi-Mode-Transceiver for Enhanced UAV Visibility and Connectivity in Mixed ATM/UTM Contexts. Drones 2022, 6, 80.

- Rymer, N.; Moore, A.J.; Young, S.; Glaab, L.; Smalling, K.; Consiglio, M. Demonstration of Two Extended Visual Line of Sight Methods for Urban UAV Operations. In Proceedings of the AIAA Aviation Forum, Virtual, 15–19 June 2020; pp. 1–14.

- Wang, C.H.J.; Ng, E.M.; Low, K.H. Investigation and Modeling of Flight Technical Error (FTE) Associated With UAS Operating With and Without Pilot Guidance. IEEE Trans. Veh. Technol. 2021, 70, 12389–12401.

- Dolph, C.; Glaab, L.; Allen, B.; Consiglio, M.; Iftekharuddin, K. An Improved Far-Field Small Unmanned Aerial System Optical Detection Algorithm. In Proceedings of the IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), San Diego, CA, USA, 8–12 September 2019.

- Huang, Z.; Lai, Y. Image-Based Sense and Avoid of Small Scale UAV Using Deep Learning Approach. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020.

- Loffi, J.M.; Vance, S.M.; Jacob, J.; Spaulding, L.; Dunlap, J.C. Evaluation of Onboard Detect-and-Avoid System for sUAS BVLOS Operations. Int. J. Aviat. Aeronaut. Aerosp. 2022, 9, 9.

- Consiglio, M.; Duffy, B.; Balachandran, S.; Glaab, L.; Muñoz, C. Sense and avoid characterization of the independent configurable architecture for reliable operations of unmanned systems. In Proceedings of the 13th USA/Europe Air Traffic Management Research and Development Seminar (ATM2019), Vienna, Austria, 17–21 June 2019.

- Szatkowski, G.N.; Kriz, A.; Ticatch, L.A.; Briggs, R.; Coggin, J.; Morris, C.M. Airborne Radar for sUAS Sense and Avoid. In Proceedings of the IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), San Diego, CA, USA, 8–12 September 2019.

- Duffy, B.; Balachandran, S.; Peters, A.; Smalling, K.; Consiglio, M.; Glaab, L.; Moore, A.; Muñoz, C. Onboard Autonomous Sense and Avoid of Non-Conforming Unmanned Aerial Systems. In Proceedings of the AIAA/IEEE Digital Avionics Systems Conference, San Antonio, TX, USA, 11–15 October 2020.

- Marques, M.; Brum, A.; Antunes, S. Sense and Avoid implementation in a small Unmanned Aerial Vehicle. In Proceedings of the 13th APCA International Conference on Control and Soft Computing, CONTROLO 2018, Ponta Delgada, Portugal, 4–6 June 2018; pp. 395–400.

- Murrell, E.; Walker, Z.; King, E.; Namuduri, K. Remote ID and Vehicle-to-Vehicle Communications for Unmanned Aircraft System Traffic Management. In Proceedings of the International Workshop on Communication Technologies for Vehicles, Bordeaux, France, 16–17 November 2020; pp. 194–202.

- Peters, A.; Balachandran, S.; Duffy, B.; Smalling, K.; Consiglio, M.; Munoz, C. Flight test results of a distributed merging algorithm for autonomous UAS operations. In Proceedings of the AIAA/IEEE 39th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 11–15 October 2020.

- Lopez, J.G.; Ren, L.; Meng, B.; Fisher, R.; Markham, J.; Figard, M.; Evans, R.; Spoelhof, R.; Edwards, S. Integration and Flight Test of Small UAS Detect and Avoid on A Miniaturized Avionics Platform. In Proceedings of the IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), San Diego, CA, USA, 8–12 September 2019; pp. 1–5.

- Moore, A.J.; Balachandran, S.; Young, S.; Dill, E.; Logan, M.J.; Glaab, L.; Munoz, C.; Consiglio, M. Testing enabling technologies for safe UAS urban operations. In Proceedings of the Aviation Technology, Integration, and Operations Conference, Atlanta, GA, USA, 25–29 June 2018; pp. 1–12.

- F3411-19; Standard Specification for Remote ID and Tracking. ASTM: West Conshohocken, PA, USA, 2019; pp. 1–67.

- FAA. CFR 14 Part 89: Remote Identification of Unmanned Aircraft; FAA: Washington, DC, USA, 2021.

- Weinert, A.; Alvarez, L.; Owen, M.; Zintak, B. Near Midair Collision Analog for Drones Based on Unmitigated Collision Risk. J. Air Transp. 2022, 30, 37–48.

- Weinert, A.; Campbell, S.; Vela, A.; Schuldt, D.; Kurucar, J. Well-clear recommendation for small unmanned aircraft systems based on unmitigated collision risk. J. Air Transp. 2018, 26, 113–122.

- Raheb, R.; James, S.; Hudak, A.; Lacher, A. Impact of Communications Quality of Service (QoS) on Remote ID as an Unmanned Aircraft (UA) Coordination Mechanism. In Proceedings of the IEEE/AIAA 40th Digital Avionics Systems Conference (DASC), San Antonio, TX, USA, 3–7 November 2021.

- Wikipedia. Field of View. Available online: https://en.wikipedia.org/wiki/Field_of_view (accessed on 20 January 2023).

- Tan, C.Y.; Huang, S.; Tan, K.K.; Teo, R.S.H.; Liu, W.Q.; Lin, F. Collision Avoidance Design on Unmanned Aerial Vehicle in 3D Space. Unmanned Syst. 2018, 6, 277–295.

- Community, E. Distance on a Sphere: The Haversine Formula. 2017. Available online: https://community.esri.com/t5/coordinate-reference-systems-blog/distance-on-a-sphere-the-haversine-formula/ba-p/902128 (accessed on 16 July 2022).

- DroneTag. All-in-One Solution for Safe Drone Flights. 2020. Available online: https://dronetag.cz/en/product/ (accessed on 7 March 2022).

- Wu, Q.; Sun, P.; Boukerche, A. Unmanned Aerial Vehicle-Assisted Energy-Efficient Data Collection Scheme for Sustainable Wireless Sensor Networks. Comput. Netw. 2019, 165, 106927.

- Lee, H.K.; Madar, S.; Sairam, S.; Puranik, T.G.; Payan, A.P.; Kirby, M.; Pinon, O.J.; Mavris, D.N. Critical parameter identification for safety events in commercial aviation using machine learning. Aerospace 2020, 7, 73.

- Mbaabu, O. Introduction to Random Forest in Machine Learning. Section. Available online: https://www.section.io/engineering-education/introduction-to-random-forest-in-machine-learning/#:~:text=Arandomforestisamachinelearningtechniquethat’sused,consistsofmanydecisiontrees (accessed on 20 June 2023).

- Duangsuwan, S.; Maw, M.M. Comparison of path loss prediction models for UAV and IoT air-to-ground communication system in rural precision farming environment. J. Commun. 2021, 16, 60–66.

- Schott, M. Random Forest Algorithm for Machine Learning. Capital One Tech. 2019. Available online: https://medium.com/capital-one-tech/random-forest-algorithm-for-machine-learning-c4b2c8cc9feb (accessed on 24 January 2023).

- AliExpress. SKYSURFER X9-II 1400mm Wingspan FPV RC Plane Glider. Available online: https://www.aliexpress.com/i/33027340643.html (accessed on 17 January 2023).

- AliExpress. 2018 Most New 2000mm Wingspan FPV SkySurfer RC Glider Plane. Available online: https://www.aliexpress.com/item/32869734504.html (accessed on 17 January 2023).

- AliExpress. X-UAV Upgraded Fat Soldier Talon Pro 1350mm Wingspan EPO Fixed Wing Aerial Survey FPV Carrier Model Building RC Airplane Drone. Available online: https://www.aliexpress.com/item/1005001870155187.html (accessed on 17 January 2023).

- AliExpress. Balsa Radio Controlled Airplane Lanyu 1540 mm E-Fair P5B RC Glider Avion Model. Available online: https://www.aliexpress.com/item/1005002417625454.html (accessed on 17 January 2023).

- Akselrod, M.; Becker, N.; Fidler, M.; Lübben, R. 4G LTE on the road—What impacts download speeds most? In Proceedings of the IEEE 86th Vehicular Technology Conference (VTC-Fall), Toronto, ON, Canada, 24–27 September 2017; pp. 1–6.

- Mohd Kamal, N.L.; Sahwee, Z.; Norhashim, N.; Lott, N.; Abdul Hamid, S.; Hashim, W. Throughput Performance of 4G-based UAV in a Sub-Urban Environment in Malaysia. In Proceedings of the IEEE International Conference on Wireless for Space and Extreme Environments (WiSEE), Vicenza, Italy, 12–14 October 2020; pp. 49–53.

| UAV Parameters | Sky Surfer 1 [41] | Sky Surfer 2 [42] | Mini Talon 1 [43] | Mini Talon 2 [43] | Lanyu E-Fair [44] |
|---|---|---|---|---|---|
| Type | Glider | FPV glider | V-tail | V-tail | Glider |
| Wingspan | 1420 mm | 2000 mm | 1350 mm | 1350 mm | 1540 mm |
| Length | 960 mm | 1350 mm | 828 mm | 828 mm | 980 mm |
| Kit weight | 690 g | 1350 g | 400 g | 400 g | 545 g |
| Material | EPO | EPO | EPO | EPO | Balsa wood |

| No | Date [YYYY-MM-DD] | Time [UTC] | Location | Scenario | UAV 1 | UAV 2 |
|---|---|---|---|---|---|---|
| 1 | 2022-10-29 | 02:55:35–02:57:15 | NFU Agriculture | 2 | Sky Surfer 1 | Lanyu E-Fair |
| 2 | 2022-10-29 | 02:59:00–03:02:00 | NFU Agriculture | 2 | Sky Surfer 1 | Lanyu E-Fair |
| 3 | 2022-11-11 | 02:59:00–03:02:00 | Yunlin HSR | 1 | Mini Talon 1 | On ground |
| 4 | 2022-11-21 | 02:24:45–02:33:00 | Yunlin HSR | 1 | Mini Talon 1 | On ground |
| 5 | 2022-11-24 | 02:19:00–02:29:00 | Yunlin HSR | 1 | Mini Talon 1 | On ground |
| 6 | 2022-11-24 | 02:45:00–03:04:00 | Yunlin HSR | 1 | Mini Talon 1 | On ground |
| 7 | 2022-12-02 | 03:26:00–03:34:30 | NFU Agriculture | 1 | Sky Surfer 2 | On ground |
| 8 | 2022-12-09 | 02:14:30–02:20:00 | NFU Agriculture | 1 | Sky Surfer 1 | On ground |
| 9 | 2022-12-09 | 02:44:15–02:50:15 | NFU Agriculture | 2 | Lanyu E-Fair | Mini Talon 2 |
| 10 | 2022-12-23 | 03:03:00–03:10:00 | NFU Agriculture | 2 | Sky Surfer 1 | Mini Talon 2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ruseno, N.; Lin, C.-Y.; Guan, W.-L. Flight Test Analysis of UTM Conflict Detection Based on a Network Remote ID Using a Random Forest Algorithm. Drones 2023, 7, 436. https://doi.org/10.3390/drones7070436