Abstract

In this paper, we introduce a fault-tolerant multi-agent reinforcement learning framework called SERT-DQN to optimize the operations of UAVs with UGV central control in coverage path planning missions. Our approach leverages dual learning systems that combine individual agent autonomy with centralized strategic planning, thus enhancing the efficiency of cooperative path planning missions. This framework is designed for high performance in environments with fault uncertainty detected and operational challenges such as interruptions in connectivity and compromised sensor reliability. With the integration of an innovative communication system between agents, our system appropriately handles both static and dynamic environments. Also, we introduce similarity-based shared experience replay to attain faster convergence and sample efficiency in the multi-agent system. The architecture is specially designed to respond adaptively to such irregularities by effectively showing enhanced resilience in scenarios where data integrity is impaired due to faults or the UAV faces disruptions. Simulation results indicate that our fault tolerance algorithms are very resilient and do indeed improve mission outcomes, especially under dynamic and highly uncertain operating conditions. This approach becomes critical for the most recent sensor-based research in autonomous systems.

1. Introduction

Exploration of Mars presents myriad opportunities for advancement in fields such as innovation, culture, and technology. The endeavor to decipher the geology, climate, and potential for harboring life, either in the past or present, on Mars has catalyzed considerable interest and investment, resulting in the deployment of various robotic systems with specialized capabilities and applications [1,2]. Owing to its potential for previous life, Mars has been a significant focus of astrobiological studies, positioning it as an ideal target for human exploration and potential settlement [3]. For many years, researchers have continuously sought to address the complex challenges associated with extraplanetary missions, particularly focusing on key issues such as planetary landing techniques [4,5] and stable hovering operations [6]. Historically, the exploration of Mars commenced with the utilization of rovers and unmanned ground vehicles (UGVs) [7] tasked with navigating the Martian terrain [8], collecting data, and performing scientific experiments. Nevertheless, the slow operational pace and limited sensing range of these rovers have constrained their utility [9]. For example, the Spirit rover, which represents one of the most successful missions to date, traversed a mere 7.73 km over a six-year span [10]. This limitation underscores the imperative to re-engineer exploration systems to enhance efficiency and expand operational coverage [11].

Recent technological advancements have introduced unmanned aerial vehicles (UAVs) [12] as a promising solution to enhancing the efficiency and scope of Mars missions. UAVs provide a significant leap in capability over traditional rovers due to their ability to cover vast areas quickly, access challenging terrains, and gather high-resolution data from multiple perspectives [13]. UAVs are advantageous for planetary exploration due to their ability to move over the surface and through the atmosphere, enhancing cost-effectiveness and efficiency compared to wheeled rovers or static explorers. Their applications include surface exploration, determining potential rover paths, identifying human landing sites, and hazard detection [14]. The inclusion of UAVs in Mars missions, such as NASA’s Perseverance rover mission with the deployment of the Ingenuity drone, exemplifies this technological shift and the potential for UAVs to revolutionize planetary exploration [15].

The transition from single robotic systems to integrated multi-robot systems, which combine UGVs and UAVs, marks an advancement in Mars exploration strategies. Multi-robot systems are valued for their ability to enhance mission efficiency and accuracy through task division [16], collaborative area coverage, and real-time data sharing among multiple agents. By distributing tasks and coordinating efforts, these systems accelerate coverage and reduce mission duration and introduce redundancy; if one UAV fails, others can seamlessly continue the mission, minimizing the risk of overall failure [17]. In addition, the UGV can function as a central computational hub, managing and synchronizing the entire system. In a related study [18], an integrated UAV-UGV system was developed for data collection in cluttered environments, where the UGV functions as the central controller for autonomous navigation, while the UAV supports the UGV by surveying areas inaccessible to the UGV, thus improving data collection efficiency. The benefits of employing multi-agent systems extend beyond just reliability. They enable simultaneous coverage of larger areas while aggregating substantial environmental data [19], which is essential in exploration missions [20]. Furthermore, the cooperative nature of these systems allows UAVs to learn from and build on the experiences of their peers, increasing learning speed and reducing redundant explorations [21]. This cooperative behavior is important in dynamic environments like Mars, where real-time adaptability is critical for mission success. The Mars 2020 mission [11], featuring both the Mars Helicopter Scout and the Mars Rover, exemplifies this shift toward coordinated multi-robot systems. By enabling complex operations such as coordinated mapping and sampling, which are difficult for a single unit to perform, multi-agent systems improve the robustness and resilience of missions, especially in unpredictable environments. Additionally, cooperative UAV-UGV planning maximizes mission efficiency by allowing UAVs to rely on UGVs as mobile charging stations and operational bases, addressing the energy constraints that are critical for long-duration Mars exploration [22]. Furthermore, UAV-UGV cooperation greatly improves navigation and mapping in GPS-denied environments like Mars, as demonstrated through SLAM-based technologies [23]. In another work, tethered UAV-UGV systems have been shown to optimize path and trajectory planning, ensuring synchronized movements across Mars’ challenging terrains [24]. Similarly, UAV-UGV cooperation has been demonstrated to optimize pathfinding and data collection, even in cluttered environments like Mars. This system architecture enables automated path planning and navigation using SLAM, improving mission efficiency by enhancing both 2D and 3D data collection capabilities [25].

A key challenge in Mars exploration is the coverage path planning (CPP) problem. CPP [26] seeks to determine optimal paths to ensure full area coverage with minimal overlap and efficient time management. The goal is to cover the entire target area while addressing factors such as obstacles, limited battery life, and communication constraints. Given Mars’s vast and varied landscape, optimal routes must maximize coverage while reducing time and resource usage [27]. Recent studies aim to improve CPP algorithms to address the unique challenges of the Martian environment, including large obstacles, harsh weather conditions, and potential communication loss during missions [28]. In many cases, CPP methods operate UAVs independently, leading to inefficiencies. Without proper coordination, UAVs may follow overlapping paths, miss certain areas, or even collide, which increases mission time and decreases coverage efficiency [26]. Furthermore, few CPP methods incorporate fault tolerance, a critical feature for Mars exploration given the harsh environment and the high likelihood of communication loss among UAVs. Such disruptions can jeopardize the mission and cause significant failures [29].

Recent research focuses on developing CPP methods capable of operating in model-free environments and enabling collaboration among UAVs to explore uncertain terrains in real-time. These methods enhance system robustness and adaptability. Many approaches use neural networks, ant colony algorithms, and other advanced technologies for multi-agent online CPP in dynamic settings. However, most do not consider the observations and states of other UAVs, limiting their effectiveness. A CPP method has been proposed based on an information map that tracks joint coverage records, but it did not account for the entire coverage process to achieve a globally optimal solution. It is crucial that these methods function autonomously and remain fault-tolerant due to the challenging conditions on Mars [30].

In recent years, reinforcement learning (RL) methods have gained prominence in autonomous decision making include UAV path planning, especially for CPP problems in both known and unknown environments [31,32,33]. RL-based approaches are particularly suitable for Mars exploration due to the uncertain and dynamic nature of the environment. Autonomous agents must make real-time decisions with limited prior knowledge, and RL enables them to learn optimal policies through interactions with the environment [34]. Q-learning, a widely used RL algorithm for path planning and coverage optimization in discrete spaces, reliably converges to optimal solutions but is susceptible to the curse of dimensionality in more complex environments [12]. To address this limitation, deep neural networks (DNNs) are used to approximate Q-values in large state-action spaces, leading to deep Q-learning (DQN) [35].

Multi-agent systems like [36,37] present new opportunities and challenges when applying RL. One major advantage of RL in multi-agent systems is the ability for agents to learn from their interactions, adapt to dynamic environments, and improve coordination. However, multi-agent RL (MARL) also introduces complexities, such as the need to coordinate actions, divide tasks optimally, manage shared resources, and maintain adaptability in changing environments [38]. In multi-agent cooperative coverage scenarios [39], achieving real-time, fault-tolerant planning [40] remains particularly challenging. Centralized RL algorithms generally offer better communication and faster convergence by allowing agents to learn as a unified system. However, they are vulnerable to single points of failure and scalability issues, especially when the number of agents increases [41]. Decentralized RL algorithms, on the other hand, provide greater robustness against individual agent failures and allow for more flexible and scalable systems. Yet, they require higher computational resources for each agent and face challenges in achieving global coordination. An optimal approach would combine centralized exploration, which ensures comprehensive learning, with decentralized exploitation, which reduces resource demands on each agent while retaining flexibility [42]. A popular framework that combines both approaches is centralized training with decentralized execution (CTDE). In this model, agents learn collectively during training but operate independently during execution [43]. Furthermore, cooperative exploration methods, where agents share a common exploration objective, help to avoid redundancy and improve overall performance. However, coordinating these efforts requires careful design to avoid conflicts [44]. Strategies like role assignment and hierarchical coordination can optimize policies by improving agent-level interactions and cooperation in complex environments [45,46]. Regarding the specific MARL methods, actor–critic approaches, such as asynchronous advantage actor–critic (A3C), have shown promise by combining the strengths of value-based and policy-based techniques, enhancing both stability and exploration. However, these methods require significant computational resources and can be difficult to scale efficiently in multi-agent settings [47]. Similarly, the QMIX algorithm is widely used in MARL tasks because it combines individual Q-values into a joint Q-value using a mixing network, while ensuring monotonicity. However, QMIX is fully centralized during training, making it susceptible to single-agent failures, which can disrupt the entire system and reduce its effectiveness in fault-tolerant scenarios. While methods like QMIX have advanced multi-agent coordination through CTDE frameworks, they face challenges in scaling to larger systems or operating under unreliable communication—conditions commonly encountered in Mars exploration. The dependence on centralized value functions during training creates bottlenecks and vulnerabilities; if the central entity fails or communication is interrupted, system performance can degrade. Additionally, the monotonic value function constraint of QMIX can restrict its ability to handle more complex tasks [48].

To address these challenges in Mars exploration, we utilize a UGV system as a central computational hub, with UAVs dedicated to coverage path planning. To achieve this, we propose a novel method called SERT-DQN (similarity-based experience replay enhanced target deep Q-network), which utilizes the simplicity of DQN with the coordination capabilities of QMIX. In SERT-DQN, primary Q-networks for each UAV, implemented using Multi-layer perceptron (MLPs), handle decentralized decision-making locally within each UAV. Meanwhile, the intermediate Q-network, responsible for joint Q-value calculations, is centralized and computed within the UGV. This structure facilitates cooperation within the UAV-UGV system, reduces conflicts, and enhances overall performance. By integrating centralized strategic oversight with decentralized autonomous actions, SERT-DQN optimizes both exploration and exploitation. The algorithm aligns individual training with joint training, ensuring that individual decision-making is consistent with both local and global objectives. This alignment helps maintain coordination among agents, leading to more cohesive decision-making across the system. As a result, the method improves sampling efficiency and enables multi-agent systems to benefit from shared knowledge, leading to higher success rates and faster convergence. This framework is particularly well-suited for environments characterized by uncertainty and operational challenges. To further enhance performance, SERT-DQN incorporates a similarity-based experience replay mechanism that prioritizes experiences based on state similarity, focusing on relevant data while minimizing the influence of less pertinent or misleading information. This selective experience replay promotes balanced exploration, faster convergence, and improved stability. Additionally, SERT-DQN is designed with fault tolerance in mind. The enhanced version, SERT-DQN+, ensures that UAVs maintain effective path planning and mitigate overlap issues during communication disruptions until the connection is restored, which is critical for ensuring mission efficiency in Mars exploration.

This paper proposes a multi-agent fault-tolerant online cooperative CPP method aimed at optimizing fault-tolerant coverage paths and minimizing task completion time for Mars exploration. The main contributions are as follows:

- Enhances UAV cooperation by expanding local observations into global observations, improving decision-making and coordination.

- Introduces a fault-tolerant algorithm for online coverage path optimization that handles connection losses while avoiding overlaps, uncovered areas, and collisions. The approach maintains low computational demands on each UAV, enabling fast online coverage path planning.

- Improves sample efficiency and accelerates convergence by prioritizing relevant experiences, optimizing the learning process.

The structure of this paper is organized as follows: Section 2 covers the essential preliminaries; Section 3 explains the system model and problem formulation; Section 4 describes the proposed algorithm; Section 5 presents simulation results and discussions; and Section 6 concludes the paper while outlining potential future research directions.

2. Preliminary

2.1. Multi-Agent DQN

In multi-agent systems, agents must learn to make decisions that balance individual objectives with overall group goals. The DQN algorithm, which integrates Q-learning [49] with deep neural networks, has proven effective in large state-action spaces. However, extending DQN to multi-agent environments introduces challenges such as coordinating multiple agents, managing shared resources, and addressing non-stationarity due to the dynamic nature of interactions among agents.

DQN uses deep neural networks to approximate the Q-value function , defined as follows [50]:

where and represent the current and next states, and are the current and next actions, is the reward, is the discount factor, and represents the network parameters. The agent learns by minimizing the loss function:

where denotes the parameters of the target network, periodically updated for stability.

In multi-agent DQN frameworks, each agent learns its own Q-value function , where and represent the agent’s local state and action. The challenge lies in combining these individual Q-values into a joint value function that facilitates global coordination. Formulations like QMIX achieve this by aggregating individual Q-values using a mixing function:

where represents the joint action of all agents, and f is constrained to be monotonic with respect to each .

Approaches like CTDE and QMIX offer solutions for multi-agent DQN settings. However, these methods have limitations, such as centralization vulnerabilities and difficulties in managing non-stationarity. To address these issues, this paper proposes SERT-DQN, which combines decentralized decision-making with centralized coordination for improved CPP in Mars exploration.

2.2. Markov Game

In multi-agent systems, the Markov game [51], or stochastic game, extends the classical Markov decision process (MDP) [52] to multiple agents. Formally, it is defined by the tuple , where represents the number of agents (UAVs in our case), denotes the global state space encompassing the environment and all agent states, defines each agent’s action space, is the state transition function determining the probability distribution over the next state given the current state and joint actions, and represents the reward functions for each agent, mapping the global state and joint actions to real-valued rewards.

In this framework, each agent independently selects actions based on the observed global state. The environment transitions to a new state based on the collective actions, and agents receive rewards according to their individual reward functions. The objective of each agent is to maximize its cumulative reward while considering the actions and goals of other agents.

3. System Framework

In this section, we discuss the method of autonomous CPP by UAVs and a central UGV. In this work, we introduce coverage maps for each UAV. These maps are designed not only to enable accurate modeling of the environment but also to allow each UAV to access observations collected by other UAVs. This modeling aids in better organization and coordination within the UAV-UGV system. The problem of coverage path planning for multiple UAVs is then formulated as an optimization problem, with the objective of minimizing mission completion time and reducing area overlap. To ensure effective online collaboration among the UAVs, each UAV operates autonomously while coordinating with the central UGV. This collaboration aims to gather and share environmental information, resulting in the development and expansion of environmental observations.

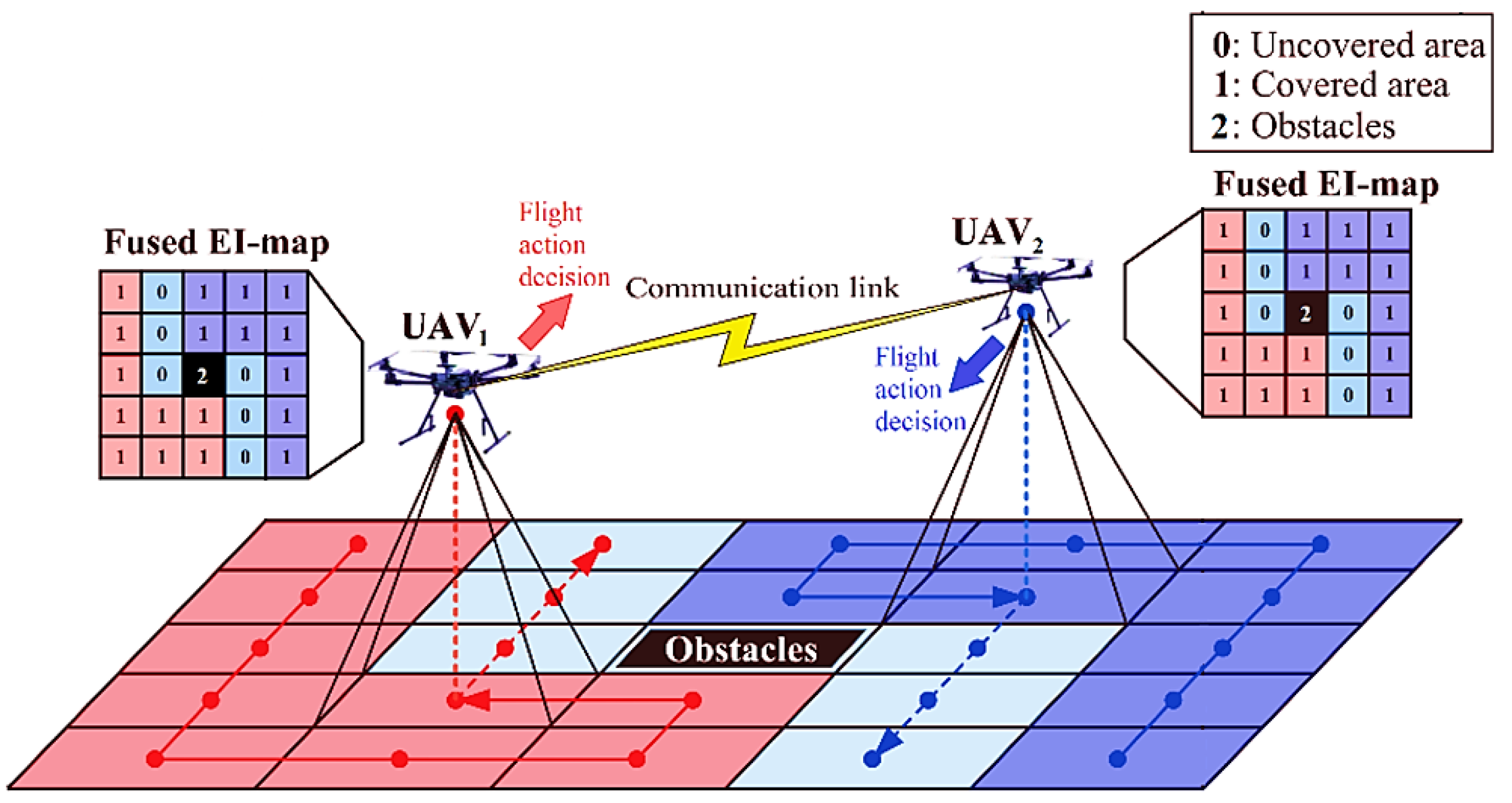

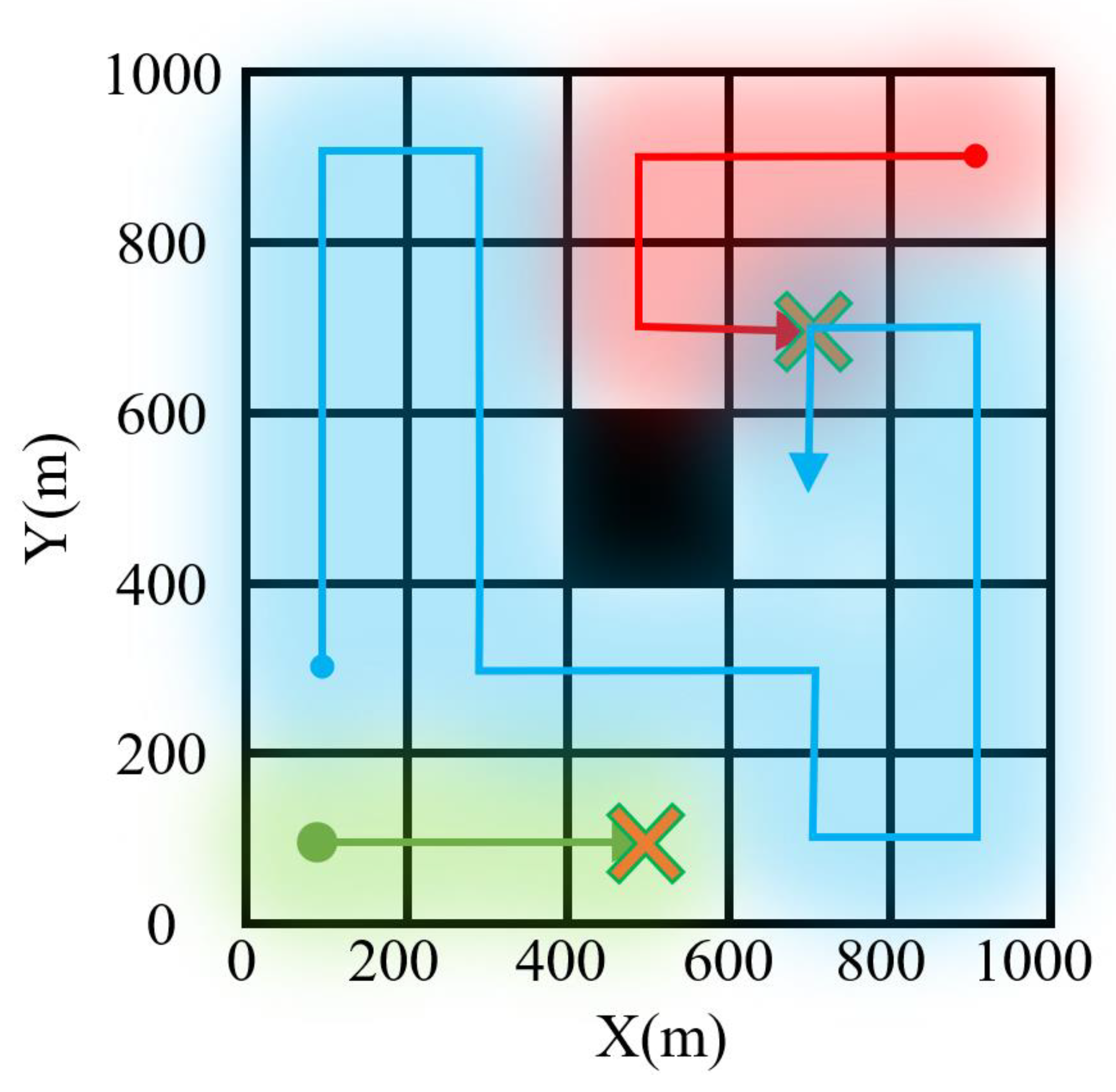

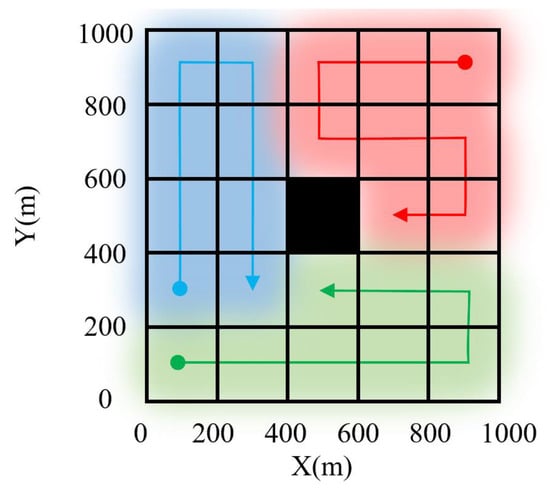

We have configured a fleet of UAVs comprising UAVs, represented by the set . The target area is defined as an rectangle. Figure 1 illustrates the online coverage path planning process by the UAVs. The map presents a two-dimensional grid representation of the three-dimensional environment used.

Figure 1.

Schematic diagraph of agent communication.

In the introduced system, UAVs use aerial cameras to cover the target area. The environment for these UAVs is unknown and contains a number of obstacles. The UAVs start their activity from initial positions and proceed step-by-step with the goal of minimizing mission completion time while full coverage path planning. Each UAV’s coverage path consists of a sequence of points defined over multiple moves. At the beginning of each move, each UAV makes its flight decision online at the grid point it occupies, moving toward one of its neighboring points. As shown in Figure 1, each position in the grid has four neighbors. Therefore, each UAV’s coverage path consists of multiple connected grid points. Additionally, UAVs can utilize information obtained from their environment during decision-making, which results from the collaboration among multiple UAVs.

The UAVs are homogeneous and do not differ in performance. They have the same flight speed and operational space. Moreover, the camera detection radius projected onto the ground and the communication radius for each UAV are identical. The target area’s environment remains constant during the mission and contains fixed, unknown obstacles. The initial path position of a UAV can be at the edge or inside the target area, while the final position must be on the edge for easier retrieval. The camera used for ground coverage is mounted underneath the UAV. The field of view of this camera is circular, referred to as the UAV’s detection area. The detection radius depends on the UAV’s fixed flight height and the aerial photography angle , which can be calculated using the following formula:

3.1. UAV Mathematical Model

3.1.1. Environment Modeling Using the Communication Data Matrix

For each UAV, the obtained images are modeled frame by frame. Therefore, the target area is modeled using a grid based on the camera’s field of view for each UAV. To ensure accurate coverage, the grid map units are divided according to the inner rectangle of the UAV’s circular detection area. Since the inner square covers the largest area among rectangles inscribed in a circle, we propose a grid where cells are set as inscribed squares within the detection range to fully utilize the detected environmental information by the UAV.

To achieve better communication between UAVs and the central UGV, we introduce a communication system. For this purpose, we propose a communication data matrix (CDM), which employs a value-based representation method to track coverage records and identified obstacles within the improved grid structure. The CDM is designed to allow each UAV to share its data with the central UGV and neighboring UAVs. The UGV collects all the maps, updates them, and shares the information with all UAVs. Additionally, each UAV can exchange its CDM with neighboring UAVs. This ensures that UAVs consistently move toward uncovered grid cells during the mission while avoiding obstacles and updating others on their locations via the CDM. This collaborative approach enhances the UAVs’ ability to support each other in path planning, ultimately reducing task duration.

By mapping the relationship from the 3D environment to a 2D representation, the target area is divided into an improved grid map consisting of rectangular regions, where is calculated as and . Here, and represent the actual width and length of the target area, respectively. The geometric center of each grid cell, referred to as the grid point or -point, is represented by the grid map coordinates , where and . This definition ensures that when is positioned above the -point of a grid cell, that cell is fully covered. The current grid points for is denoted as , and its target grid point is denoted as . The position of is given by

where is the position index function, and the position of is determined similarly. We define as the grid centered at so that the entire grid map can be represented as the set , with denoting the set of grid cells located at the edges of the grid map. If has been covered, then ; if not, . Additionally, if the grid contains obstacles, then . Based on these values, the CDM perceived by is represented by a matrix CDM:

in which represents the environmental information value for grid as perceived by . The value at the current grid location is denoted as . The process of merging and updating environmental information is defined as follows:

3.1.2. Coverage Time

In a defined system, the overall task completion time is determined by the UAV that takes the longest to complete its assigned mission. UAVs commence their tasks from distinct starting positions and move iteratively to cover their designated target areas. Each UAV is responsible for a specific segment of the area, referred to as a subtask, and the union of these subtasks ensures complete coverage of the target area. Due to differences in initial positions, obstacle distribution, and other environmental factors, the workload for each UAV may vary. Consequently, the time taken by the last UAV to complete its subtask dictates the total task completion time for the system.

According to the updated CDM, the time for to complete its subtask is expressed as follows:

where represents the total number of steps required by to complete its subtask, is the final step in each episode for each UAV, denotes the set of target positions for each movement, is the Euclidean distance between the starting grid point and the target grid point for during the th movement. Moreover, is the UAV’s flight speed. The overall task completion time for the UAVs is equal to the maximum time taken by any single UAV to cover its assigned path.

3.1.3. Target Area Coverage

To quantify the coverage of the target area achieved by the UAVs, we introduce the concept of the effective coverage area for . This area is defined as the difference between the total coverage area and the overlap area. Specifically, the effective coverage area, , which is the coverage area, , minus the overlap area, , is represented as follows:

In this formulation, is the set of all the grids point which covers or has overlaps. can represent either coverage or overlap. The function calculates the number of elements in the set, and represents the area of each grid cell.

3.1.4. Optimization-Based Problem Modeling

Maximizing coverage efficiency is equivalent to minimizing task completion time and reducing overlap in coverage. To achieve this goal, the UAVs must collaboratively optimize their paths, including the optimization of both the number of movements and the target positions for each UAV. Consequently, the multi-UAV CPP optimization problem, based on the defined system framework, is formulated as follows:

These constraints ensure that (1) no UAV operates beyond its endurance limit; (2) the current and target positions of each UAV remain within the designated grid map of the target area; (3) collisions between UAVs are prevented; (4) UAVs effectively avoid grid cells containing obstacles; (5) each UAV terminates its subtask at a boundary grid point; and (6) the fleet of UAVs collectively achieves complete and discrete coverage of the target area. It should be noted that the effect of Mars’s curvature on the calculation is not taken into account.

3.2. Mathematical Modeling of Proposed Method

In this section, we utilize a Markov game to formulate the CPP problem for a multiple UAVs-UGV system. In this model, the state space, action space, and reward function for system are defined. Although the Markov game framework typically includes a transition function, in this work, the transition function is not explicitly modeled due to the use of model-free RL.

3.2.1. State Space

In reinforcement learning methods for CPP, each UAV defines its state space based solely on perceived environmental information, disregarding information from other agents. These issues can negatively impact the task completion time and coverage efficiency in the multi-agent system.

To avoid these problems, each UAV needs to cooperate with others to gain a comprehensive observation of the environment, which includes the latest records of covered areas and the positions of detected obstacles. Additionally, each UAV must consider not only its own target location but also the target locations of other UAVs to avoid collisions. Ultimately, UAVs can make flight decisions based on these factors to reduce task completion time and minimize overlap, thereby improving coverage efficiency.

At each step, collaborates with other UAVs to obtain their movement maps and target positions. It then updates and combines its map based on the mentioned equations. After that, makes its decision based on the local state space , defined for each at movement as follows: , where represents the UAV’s position and is the communication matrix of UAVs and each respective target positions . This state is individual for each UAV.

The global states responsible for joint states, includes the positions of each , their respective target positions , and the :

3.2.2. Action Space

The action space for each is discrete, denoted as , while the joint action space for all agents is represented by A, which includes the set of actions of each at step k. The action set is identical for all UAVs. When is in state , its available target grid points are the four adjacent locations: , , , . If is positioned at the edge or corner of the target area, actions that would lead it outside the boundary are excluded.

The action set for each can be represented as The joint action space A for all UAVs is the Cartesian product of the individual action spaces: , where N is the number of UAVs.

3.2.3. Reward Function

The reward function should be designed to incentivize each UAV to fully cover all obstacle-free grid points with the minimum number of movements. On the one hand, actions that lead to exploring unknown areas should be rewarded to ensure complete coverage of the target area without missing any grids. On the other hand, actions that lead to overlapping or collisions should be penalized, as they may result in redundant movements or task failure. The reward function for each UAV is defined as follows:

Thus, the reward function is defined to encourage actions leading to exploration and full area coverage while penalizing actions that cause overlap or collisions, with the goal of minimizing task completion time.

The joint reward function for all UAVs at step k is the sum of the individual rewards of each UAV:

4. Proposed Algorithm for CPP Problem

This section presents our proposed algorithm, the similarity experience replay enhanced target deep Q-network (SERT-DQN). First, we outline the key details of the SERT-DQN algorithm, followed by a discussion of the similarity-based experience replay mechanism. Next, we introduce an enhanced version, SERT-DQN+, designed to handle fault-tolerant scenarios. Lastly, we model the environment using a Markov game framework to further support the algorithm’s applicability.

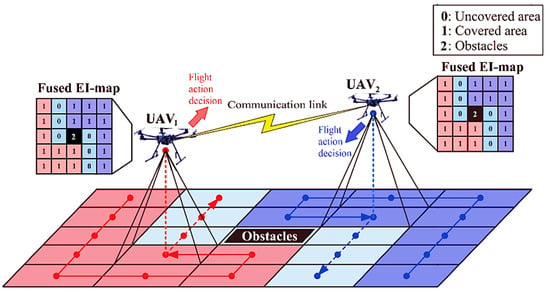

4.1. Overview of the SERT-DQN

The SERT-DQN algorithm integrates reinforcement learning principles with our proposed coordination strategies, utilizing two Q-networks to optimize UAV path planning. In this section, we delve into the details of the SERT-DQN algorithm, explaining how it can work with two Q-networks and take advantage of both global and individual learning. The SERT-DQN algorithm enables each UAV to operate autonomously based on local information while synchronizing its actions through a global evaluation framework. This global evaluation is achieved by integrating a primary network for each UAV with an additional intermediate network shared for all UAVs to the DQN framework which facilitates coordination among all UAVs. This combined approach is designed to enhance coverage efficiency and reduce task completion time by leveraging both local and global data. The algorithm is centered around a UGV, which serves as a central control system. Unlike traditional CTDE approaches, where centralized coordination is limited to training, our method extends the UGV’s role into the execution phase.

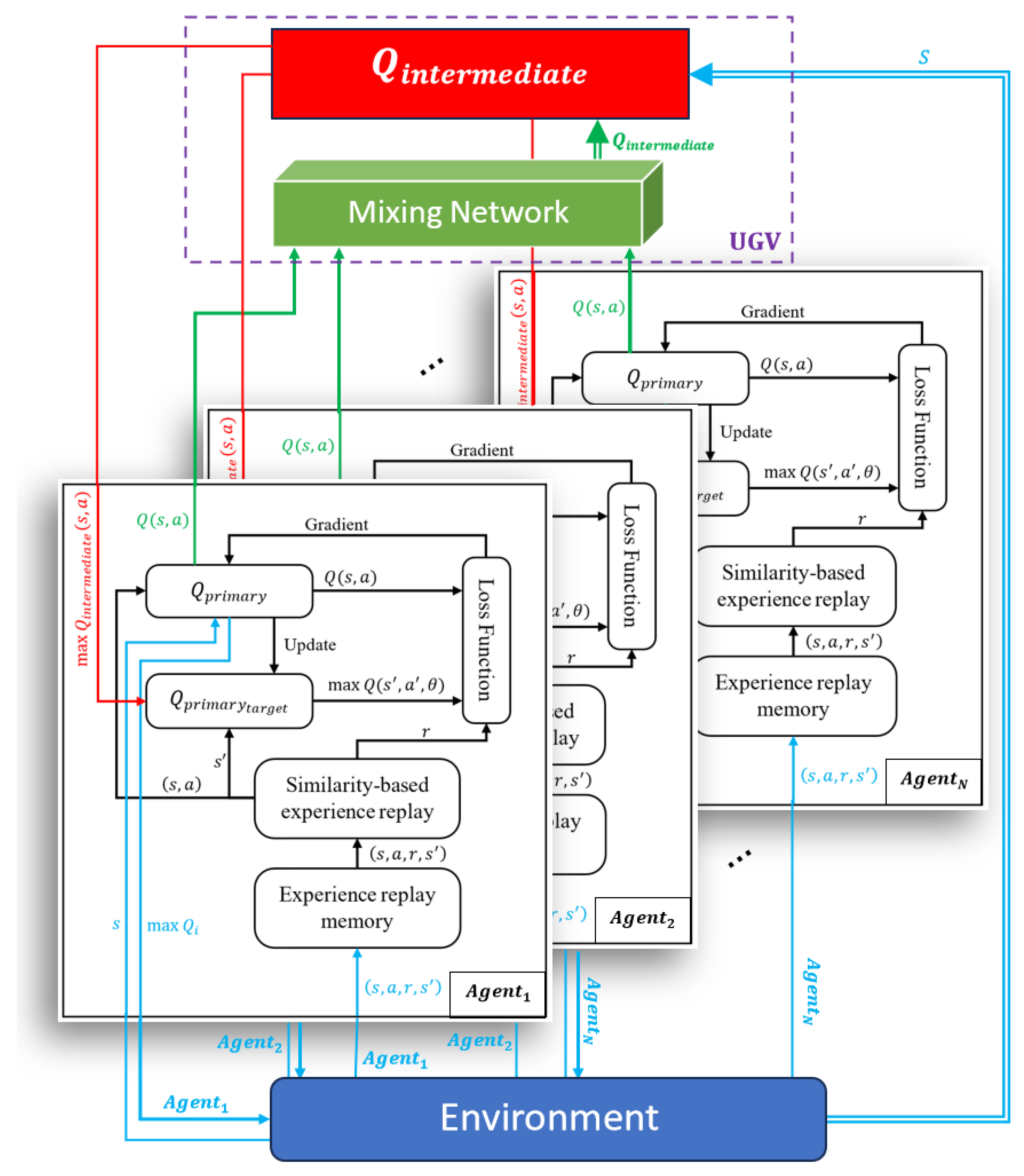

The SERT-DQN consists of two Q-networks. One is called the intermediate Q-network, which is responsible for calculating the joint value using joint shared experience replay. The other is the primary Q-network for each individual agent, using individual experience replay for each agent and shared similarity-based experience replay. Each primary Q-network’s loss function is updated based on both individual and joint Q-network parameters. This ensures that each UAV’s decision is based on both individual and global states. The action selection is based on each primary Q-value, allowing each UAV to decide individually while considering both global and individual states. This configuration makes SERT-DQN scalable and computationally efficient, allowing it to support larger UAV fleets and more complex environments without performance loss, making it a robust solution for multi-agent reinforcement learning in UAV networks. The schematic diagram of the method is shown in Figure 2.

Figure 2.

The schematic diagram of the SERT-DQN algorithm.

Knowledge transfer in MARL aims to enhance learning efficiency by sharing policies or experiences among agents or across tasks [53,54], facilitating inter-agent knowledge transfer and accelerating convergence. While these approaches focus on transferring knowledge to new tasks or between agents, our SERT-DQN algorithm differs by enhancing coordination within the same task through a similarity-based experience replay mechanism. Rather than transferring learned policies, we prioritize relevant experiences based on state similarity, improving sample efficiency and cooperation among agents without the overhead of transferring knowledge across different domains. This distinction is crucial for Mars exploration, where agents operate collaboratively in the same environment and must adapt to dynamic conditions in real-time.

The summary of the SERT-DQN elements is as follows:

- Individual primary Q-networks: These networks guide local decision-making for each UAV based on immediate environmental data.

- Mutual intermediate Q-network: This network facilitates collaboration by aggregating and processing data across multiple UAVs.

- Target network for primary Q-network: This target network provides stable Q-values for primary which are calculated based on the mixed of the primary q-network and intermediate Q-network.

- Target network for intermediate Q-network: This ensures stable training by periodically updating towards the intermediate network’s weights.

4.1.1. Intermediate Q-Network

The intermediate Q-network in the SERT-DQN framework plays a pivotal role as it calculates joint Q-values that account for dependencies among the individual Q-values of each UAV. It updates at a constant interval shorter than the primary Q-network, offering a global perspective that enhances coordination and aligns individual UAV actions with overall mission objectives. This alignment reduces conflicts and improves system performance. Additionally, the less-frequent updates balance timely global feedback and computational efficiency, enabling the system to scale effectively while ensuring coordinated operations across the UAV fleet.

The calculation of the is based on the QMIX. QMIX is a method in multi-agent reinforcement learning that ensures the shared Q-value is a monotonic function of the individual Q-values of each agent. This is achieved through a mixing network that combines the individual Q-values using a function constrained to monotonicity. This constraint ensures that increasing the Q-value of any agent cannot decrease the shared Q-value. In other words, if one UAV decides to improve its performance and increase its Q-value, this change is applied in a way that always increases or maintains the shared Q-value and never decreases it. This is important because it ensures that cooperation between UAVs is maintained and there is no competition that undermines overall performance. The monotonicity constraints defined as . The primary Q-values for each UAV are combined using a mixing function to calculate . The weights of this function are dynamically generated by a hypernetwork () based on the global state S. The input to the hypernetwork is the global state S. This global state is fed into a fully connected layer that extracts relevant features from the global state. In our proposed method, the hypernetwork includes two hidden layers, each fully connected. These layers are used to process state information and transform them into a more abstract representation. To introduce non-linearity into the network and learn complex patterns, we have used ReLU activation functions. The output layer of the hypernetwork generates the weights used in the mixing function. To ensure these weights are non-negative, ReLU activation functions are used. Finally, the mixing network combines the weighted individual Q-values into . It consists of a single fully connected layer that sums the contributions from each agent, using weights provided by the hypernetworks:

where the weights from the hypernetwork are given by

Thus, the is formulated as

where , , and are parameters for each , mixing network, and , respectively. In addition, in this architecture, the first and second layers use non-negative weight matrices, and , along with bias vectors, and , which help refine the output and ensure monotonicity while optimizing the learning process.

4.1.2. Intermediate Target Network

To ensure stability in the , we use a target network called intermediate target network. The parameters of the target network, denoted by , are periodically updated to provide stable learning targets. This periodic update mechanism is crucial for maintaining stability in the learning process and ensuring coherent convergence of Q-values. It updates as follows:

The loss function for updating the parameters of the intermediate Q-network is designed to minimize the squared error between the predicted intermediate Q-values and the target values. The loss function is given by

where represents the target value, defined by the following Bellman equation:

4.1.3. Primary Q-Network

Each UAV has its primary Q-network responsible for local decision-making. The primary Q-network for each UAV is implemented using a multi-layer perceptron (MLP) [55]. This architecture ensures that each UAV can process its local state information and make informed decisions. The simplicity of the MLP architecture also reduces computational load for each UAV. The input to the primary Q-network consists of a local state vector for at movement k and a corresponding action . The primary Q-network for each UAV is designed with two hidden layers. The first hidden layer applies a ReLU activation function to the weighted input; the second hidden layer processes the output with a similar ReLU activation. The final output layer computes the Q-value for the given state-action pair, helping the UAV make informed decisions. Further details about the specific hyperparameters and network architecture are provided in the experiment section of the paper.

4.1.4. Q-Primary Target Network

In this algorithm, the network is responsible to evaluate and stabilize the value. Its parameter introduces based on each parameter and parameter, to help balance between global and local information. Unlike the intermediate target network, which is updated based solely on collective behaviors, this serves as a balance point between individual optimizations from primary Q-networks and collective insights from the intermediate target network.

The network in the SERT-DQN framework ensures cohesive fleet behavior by balancing local and global information, preventing UAVs from acting solely in self-interest and reducing conflicts such as overlapping coverage or redundant paths. This network also enhances adaptability, allowing UAVs to adjust strategies based on dynamic environments and shared experiences. The integration of individual and collective insights stabilizes the learning process by minimizing policy fluctuations. Furthermore, it aligns individual actions with group objectives improves overall mission efficiency, ensuring optimal resource allocation and comprehensive coverage. The Q target value at movement k is calculated using a combination of Q values from the and the :

where includes the states of each after the next action. Each agent’s primary Q-network updates its parameters by minimizing the mean squared error between the predicted Q-values and the target Q-values provided by the :

4.1.5. Updating Intermediate and Primary Network Parameters

Stochastic gradient descent (SGD) [56] adjusts the parameters of each primary Q-network, , and the intermediate Q-network, , to minimize their respective error functions. The update process proceeds as follows:

represent the learning rates of the primary network and intermediate network, which determine the extent of parameter adjustments at each update step. Using an appropriate learning rate can help achieve faster and more stable convergence of the algorithm. The SGD method optimizes parameters by calculating the gradient of the loss function concerning the parameters and making small adjustments towards minimizing the loss. This iterative process continues until the loss function reaches its minimum possible value, and the parameters reach their optimal values.

4.2. Action Selection

During action selection, each UAV chooses its action using an epsilon-greedy policy [57] and the Q-value function computed by its primary Q-network. This method ensures that action selection is based on local evaluations updated in line with common actions. In other words, each UAV selects its action as follows:

In the epsilon-greedy method, UAVs select a random action with probability and select the best action based on Q-values with probability . In the early stages of learning, there is a higher probability of selecting random actions to better explore the environment and gather more experiences. Over time, as learning improves, the value of decreases, and UAVs increasingly select the best action based on Q-values.

4.3. Experience Mechanism for the Q-Intermediate

The experience of the multi-UAV system at a given time step is captured in a shared tuple , where all parameters are considered jointly across the UAVs. The global state represents the aggregated states of all UAVs at time , reflecting the overall condition of the system. The joint action consists of the combined decisions made by all UAVs from their respective action spaces at time . Upon executing these actions, the system receives a collective reward , which quantifies the immediate outcome of the actions and guides the learning process by highlighting favorable or unfavorable behaviors across the entire system. The tuple concludes with the next global state , which captures the updated state of the system after the joint actions have been executed. These shared experiences are stored in a joint replay buffer , which is accessible to all UAVs. During the training phase, data are randomly sampled from this joint buffer in mini batches, enabling the function to learn in a more stable and coordinated manner. All these shared parameters, , , and , are equivalent to the set of individual parameters for each UAV.

4.4. Similarity Experienced Replay for the Q-Primary

For improved exploration, we propose a shared experience replay mechanism that allows each UAV to randomly sample not only from its own experiences but also from the experiences of other UAVs. However, despite its advantages, using a shared experience replay buffer can introduce non-stationarity issues. This occurs when agents encounter states and actions they have not personally experienced, leading to difficulties in learning appropriate policies. To address this challenge, we propose a cosine similarity-based state sampling mechanism to enhance the relevance of sampled experiences for networks, thereby stabilizing the learning process and promoting effective exploration. To improve the relevance of sampled experiences, we introduce a cosine similarity-based sampling method. This method prioritizes experiences that state are more relevant to the current state of each UAV; it guarantees more stable and effective learning. For each , the cosine similarity between its current state and the states stored in the shared experience replay buffer is measured. Cosine similarity is defined as

To reduce computational demand, a subset of experiences (100 sample) is randomly selected from the shared experience replay buffer before applying the cosine similarity measurement. Within this subset, experiences are tiered based on their similarity scores into three categories: similar tier (top 10% most similar); less-similar tier (next 20% moderately similar); and non-similar tier (bottom 70% least similar).

The experience selection process is based on a tiered sampling mechanism that prioritizes experiences from the most relevant tier while still incorporating less-similar and non-similar experiences to encourage exploration. The similar tier, which comprises experiences most relevant to the current state of the UAV, is sampled with the highest probability (80% of the time). To form a mini-batch for training, we determine the number of experiences to sample from each tier based on the desired mini-batch size. For each sampled experience from the shared buffer, a comparison is made against a corresponding experience sampled from the UAV’s individual replay buffer. The comparison is based on the temporal difference (TD) error; the experience with the highest TD error among the samples is selected for training. It calculated using the following formula:

We compute the TD error for both the shared experience and the local experience. The experience with the higher TD error is selected for inclusion in the final training mini-batch. For the less-similar tier, which contains experiences moderately relevant to the current state, sampling occurs less frequently (15% of the time). For each selected experience from this tier, a comparison is made against a sample from the similar tier and a sample from each agent’s individual replay buffer. The experience with the highest TD error is selected for training. This approach allows the model to explore slightly different experiences while still maintaining relevance, contributing to more comprehensive learning.

The non-similar tier is sampled occasionally (5% of the time) to introduce more diverse and exploratory experiences into the learning process. For each selected experience from this tier, the same comparison process as with the less-similar tier is used. However, if the TD error of the non-similar experience exceeds a predefined threshold, indicating that it is too different and potentially harmful to the learning process, it is discarded. Otherwise, the experience with the highest TD error is chosen for training. This threshold ensures that while exploration is encouraged, experiences that are too far from the UAV’s current state do not destabilize the learning process. The pseudo code of the proposed method is presented as Algorithm 1.

| Algorithm 1: SERT-DQN Algorithm |

| Initialize parameters For to do: Initialize environment and obtain initial local states for each and global state For time step to do: For each do: With probability : Select random action from action space With probability : Select action Collect joint action Execute joint action in the environment Observe next local states and individual rewards for each Update CDM and global state Compute joint reward Store individual experience in End For Store shared experience in Training Updates: For each do: Sample mini-batch from using Similarity-Based Experience Replay: For each experience in do: Compute target and loss Update End For End For Sample mini-batch from For each experience in do: Compute target and loss Update End For Update Target Networks Periodically: If then: For each do: End For End If Update States: For each do: End For Adjust Exploration Rate by End For (time steps) End For (episodes) |

4.5. Fault-Tolerant Version of the SERT-DQN

This section presents a fault-tolerant extension of the SERT-DQN algorithm. The primary objective of the proposed method is to enhance the robustness and fault tolerance of UAV operations in scenarios where individual UAVs may lose connection to the central controller. In this part, we introduce mechanisms to handle scenarios where one or more UAVs lose connection to the central UGV. The fault-tolerant extension, SERT-DQN+, is designed to handle communication losses that may occur during the execution phase of the mission.

4.5.1. Fault-Tolerant Q-Intermediate Approximation for Lost UAV

When a UAV loses connection to the central UGV, it needs to approximate the values to continue making informed decisions. The proposed method achieves this through a combination of the last known QMIX values from the central UGV and an averaging mechanism involving the lost UAV’s . When connected, each UAV updates its based on the standard SERT-DQN approach. Also, the values are calculated centrally and disseminated to all UAVs. Upon losing connection, a UAV approximates its values using the following formula:

This balances the UAV’s own with the last known QMIX values to approximate the intermediate Q-values based on the concept of the value decomposition method [58].

4.5.2. Centralized Q-Intermediate Approximation for Disconnected UAVs

For the central system in the UGV, when a UAV is disconnected, as the update is less frequent, it uses last values of the lost UAV to calculate the central to let other UAVs know about the prediction of the next states of the lost-UAV to avoid overlap. The proposed fault-tolerant method enhances the robustness of UAV operations in case of a lost connection. If the connection did not connect after considered time, the lost UAV will go back to the UGV, and calculation will continue without it.

5. Experiments and Results

This section presents the numerical evaluation of the proposed multi-UAV system for CPP. The performance of the SERT-DQN algorithm is assessed through a series of simulations conducted using MATLAB 2023. The simulation environment is configured within MATLAB 2023 and executed on a system running Windows 10. To ensure the robustness and adaptability of the algorithm, various simulation scenarios are explored with different initial configurations and five random seeds. This comprehensive approach allows for a thorough analysis of the algorithm’s performance under diverse conditions, providing insights into its effectiveness and scalability. A summary of the key hyperparameters, derived from multiple simulation runs, is presented in Table 1.

Table 1.

Network structures and parameters.

5.1. Experiment Setting

The simulations are designed to address the coverage CPP problem in a two-dimensional plane. The UAVs are assumed to maintain a constant altitude throughout the mission. The environment is modeled as a square grid with varying dimensions to assess the algorithm’s adaptability across different coverage areas. Obstacles are incorporated within the environment to evaluate the UAVs’ ability to navigate and maintain coverage efficiency in the presence of operational challenges. Each grid cell within the environment has a side length of 200 m, corresponding to the UAVs’ detection radius. During the coverage mission, each UAV moves at a constant speed of 20 m per second, with a maximum flight duration of 30 min. The UAVs aim to maximize the area covered while avoiding obstacles and maintaining coordinated movement. The communication range is configured so that all UAVs maintain a connection with the central UGV, with the central UGV’s coverage extending to each UAV across different simulation areas.

The simulations run for 3000 episodes. At the start of each episode, the UAVs are randomly positioned within the grid and must autonomously explore and cover the environment using the learned policy. The performance of the SERT-DQN algorithm is compared against a baseline multi-agent DQN method, which is commonly employed approaches for discrete coverage problems. The evaluation metrics are based on mission reward function, convergence speed, completion time, coverage rate, and the system’s resilience to UAV failures. Simulation results indicate that the proposed algorithm outperforms baselines.

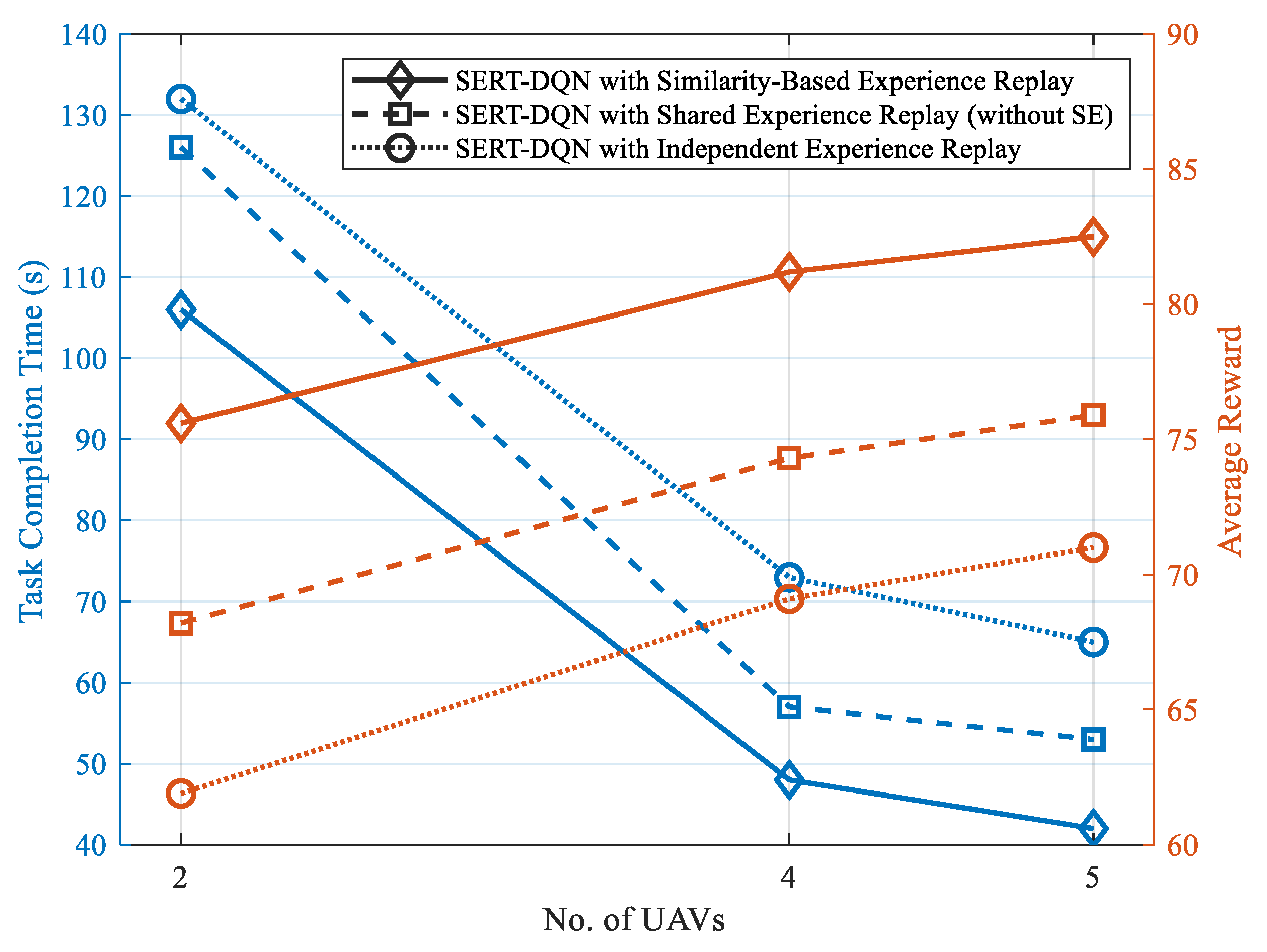

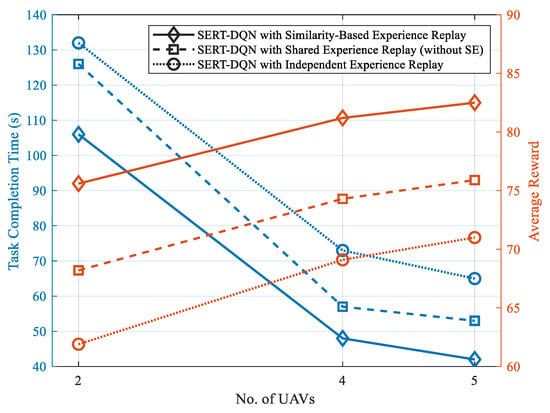

5.2. Evaluation of the Similarity-Based Experience Replay

In this study, we first evaluate the impact of similarity-based experience replay on the learning process. The evaluation focuses on how the prioritization of relevant experiences based on similarity improves task efficiency and reward outcomes across multiple UAVs and balances computational overhead. For this evaluation, different number of UAVs are selected as representative samples to simulate their path planning processes using three configurations are compared: (1) SERT-DQN with similarity-based experience replay (baseline): This setup filters shared experiences by similarity to prioritize relevant samples; (2) SERT-DQN with shared experience replay: This setup samples experiences uniformly from the shared buffer without prioritization; (3) SERT-DQN with independent experience replay (non-shared): Here, each UAV maintains its own experience buffer without sharing data. Simulations were conducted in a 1000 × 1000-m grid environment with different numbers of UAVs (2, 4, and 5). Also, consider a single obstacle in the environment, randomly positioned in each episode. The primary metrics used to evaluate performance include task completion time and the average reward function. Figure 3 presents the results obtained for each method. The results, averaged over five random seeds and after 2000 episodes, indicate that the similarity-based replay configuration consistently leads to faster task completion and higher average rewards, particularly with an increasing number of UAVs. Although the similarity-based approach introduces a slight computational overhead, this is offset by faster convergence and more efficient task execution.

Figure 3.

Task completion time and average reward vs. number of UAVs.

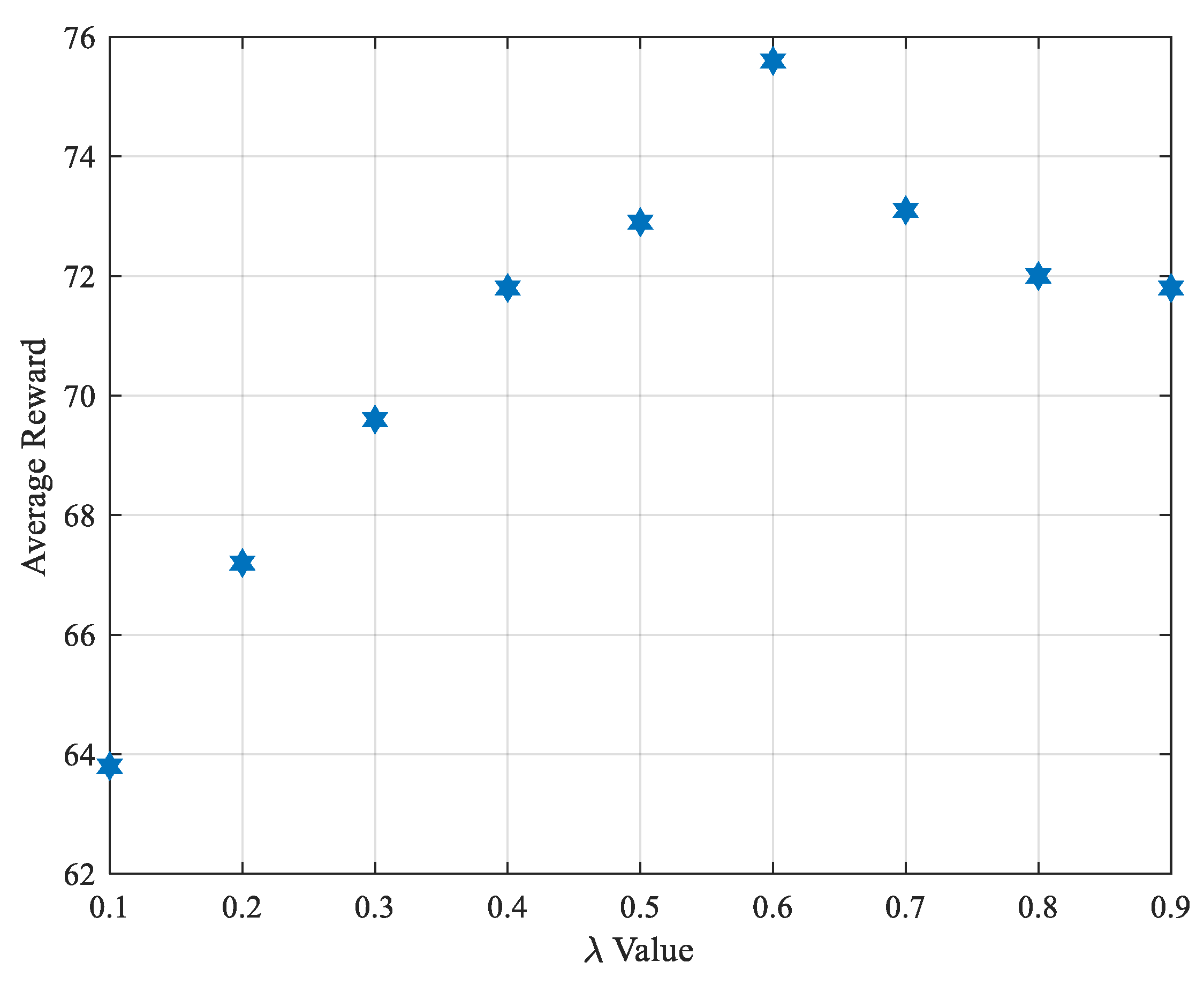

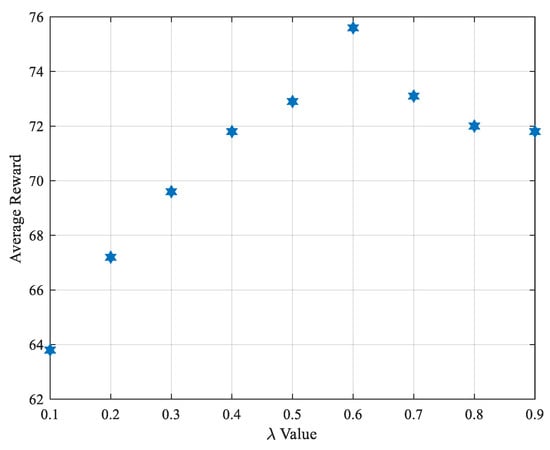

5.3. Sensitivity Analysis of λ in the SERT-DQN Algorithm

This section investigates the sensitivity of the parameter in the SERT-DQN algorithm and its impact on training performance. The parameter controls the balance between local (primary Q-networks) and global (intermediate Q-network) learning; it can influence the coordination among UAVs and their adaptability to local conditions directly. Simulations are conducted with varying values ranging from 0.1 to 0.9. These values are tested with different numbers of UAVs in the CPP environment.

5.3.1. Impact of λ during Different Phases of Training

- Early training phase: In the initial stages, exploration is crucial, and prioritizing local learning can be advantageous. A higher (0.6–0.9) focuses more on individual UAV exploration and decision-making, allowing each agent to gather unique environmental information without excessive influence from global strategies. This approach accelerates the discovery of diverse paths and regions, avoiding premature convergence on global coordination strategies. However, too high a can lead to fragmented paths if global coordination is underemphasized.

- Late training phase: In the final stages, the focus shifts to fine-tuning and stabilizing the learned policies. Here, a slightly lower (around 0.3 to 0.5) emphasizes global coordination, ensuring the UAVs operate in unison and follow optimized paths for the final coverage. This setup aligns the agents’ actions with the overall mission objective, leading to stable and robust policy convergence.

5.3.2. Scalability and Coordination Across Multiple UAVs

Scalability refers to the system’s capacity to maintain performance as the number of UAVs increases.

- : Leads to poor scalability as the UAVs operate too independently. It causes inefficient paths and frequent overlaps in larger fleets.

- : Over-coordination can cause rigidity, slowing down response times and making the system less scalable due to excessive reliance on global strategies.

- : The best scalability is observed with this range. This ensures consistent performance across different scales, from small to large fleets.

Figure 4 illustrates the result for 2 UAVs. The experimental results indicate that values around 0.6 offer the best balance between local autonomy and global coordination, leading to faster convergence, higher rewards, and more-stable learning across all UAV configurations.

Figure 4.

The variation of the average reward with respect to λ.

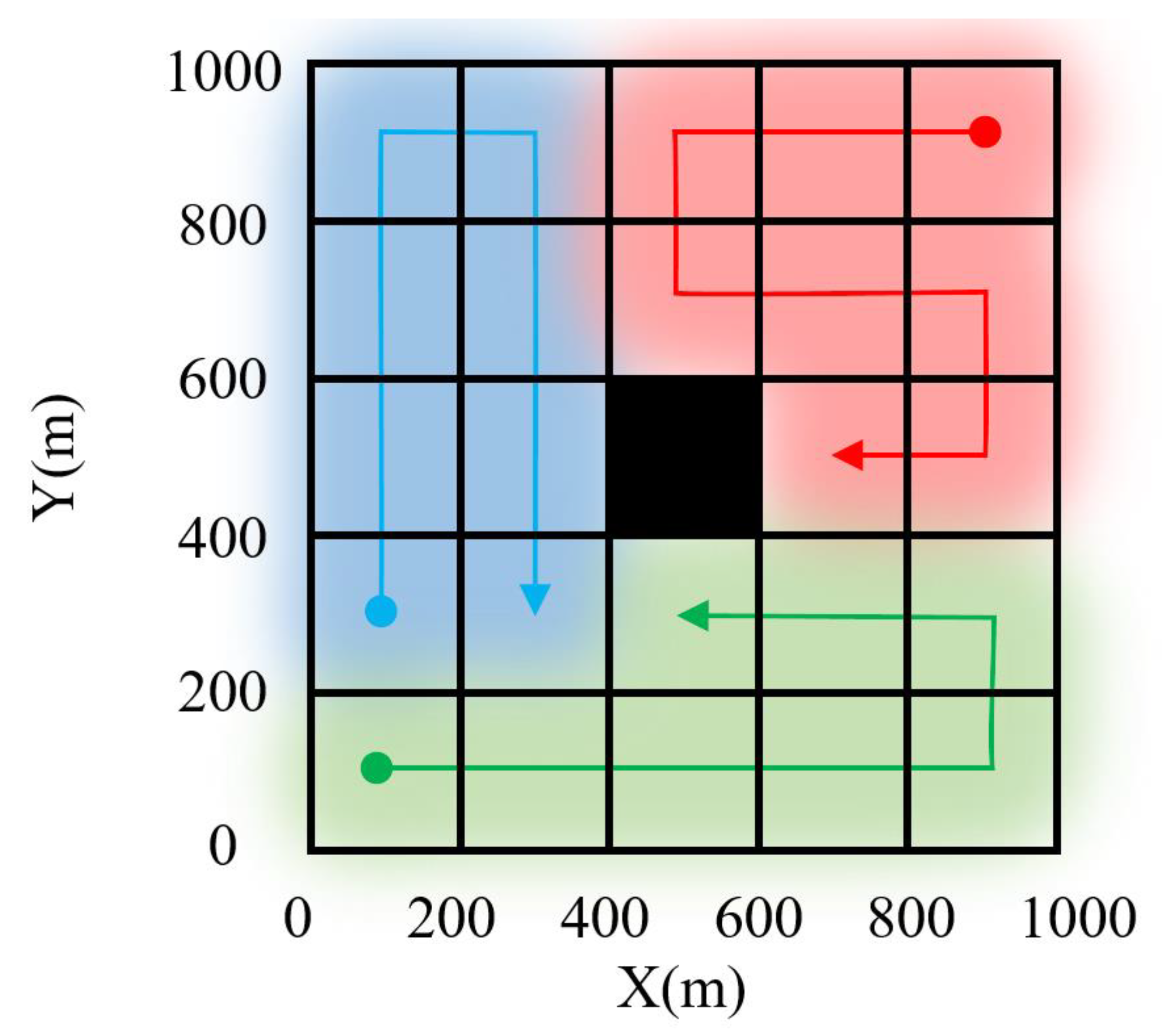

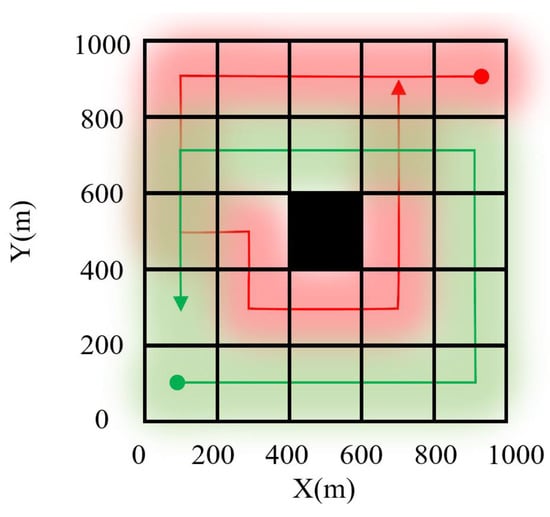

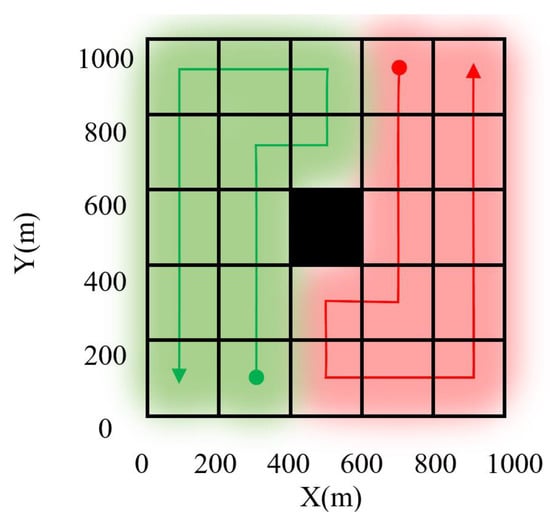

5.4. Evaluation of the Proposed Method Regarding the MADQN

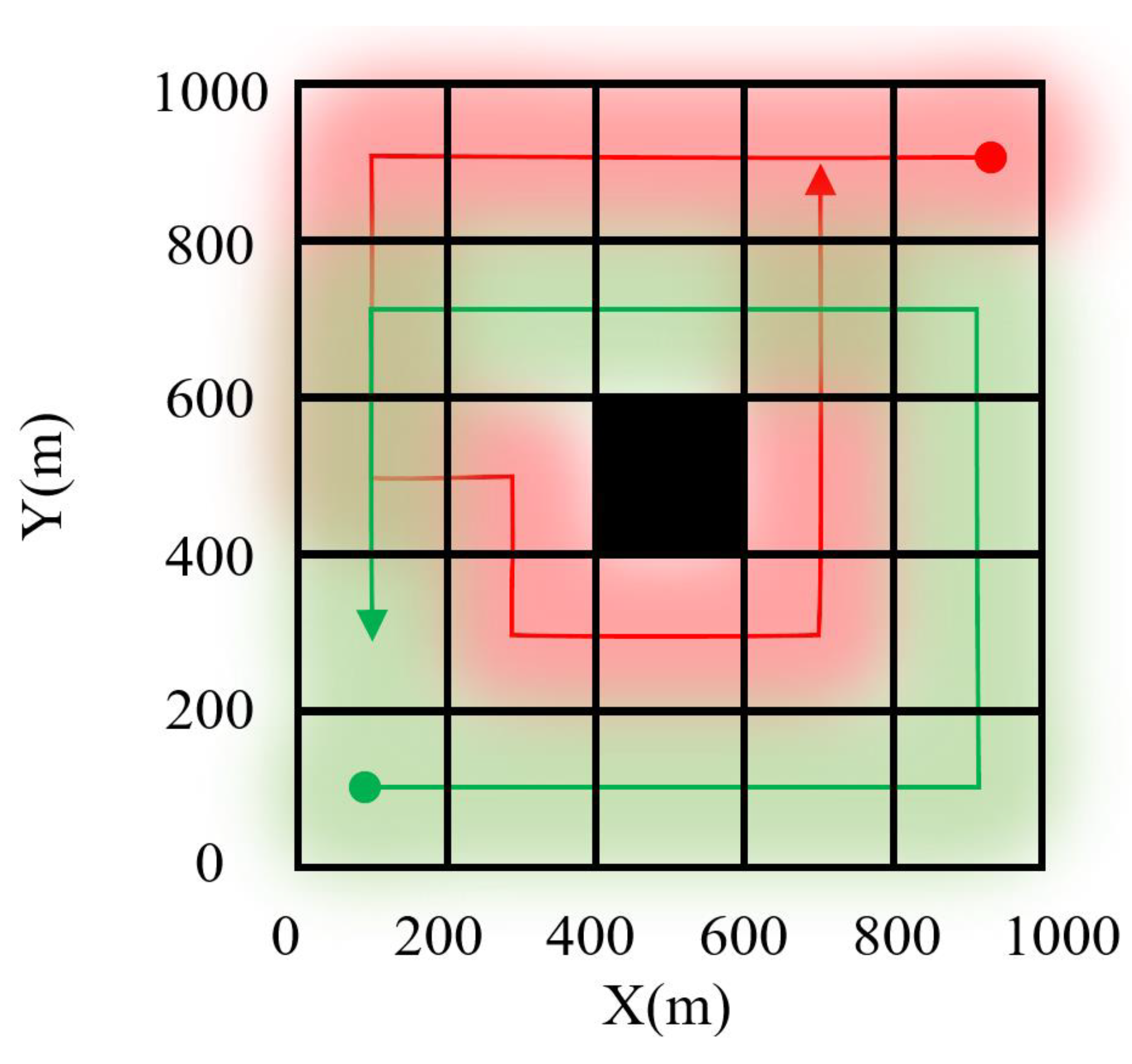

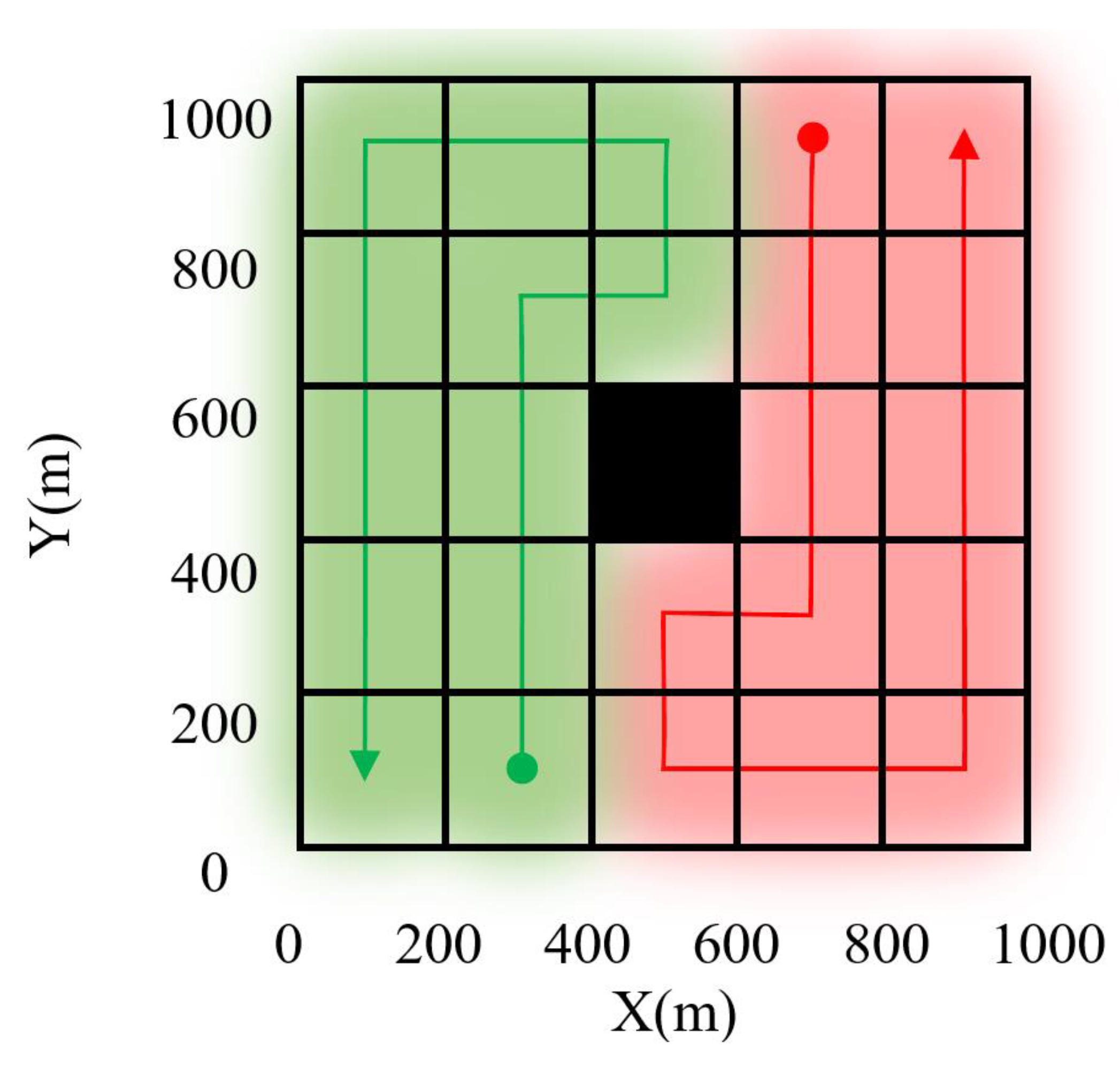

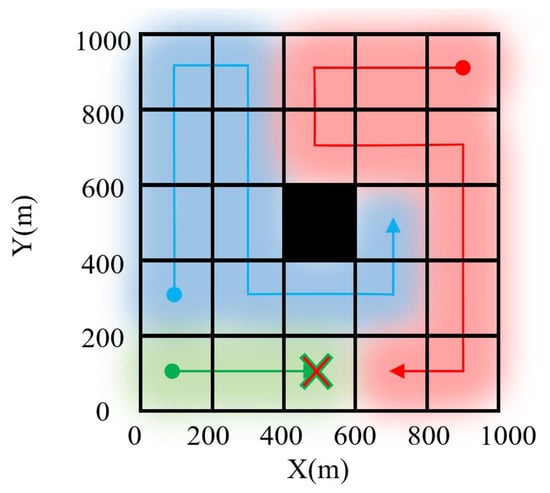

Figure 5 and Figure 6 illustrate the coverage paths of the UAVs using the proposed method with similarity-based experience replay and the benchmark MADQN method. The comparison indicates that the DQN-based approach proves less effective in the CPP problem. The paths generated by the MADQN method contain more overlap, which reduces coverage efficiency. In the DQN-based method, each UAV bases its flight decisions solely on limited local observations, which causes suboptimal paths with frequent overlaps. This redundancy results in prolonged mission completion times due to repetitive coverage tasks. On the other hand, the proposed method minimizes path overlap by allowing each UAV to access shared information, such as the overlap map, while planning its coverage path in real-time. As a result, the UAVs achieve a more uniform and non-overlapping division of the coverage area, thereby optimizing task completion time.

Figure 5.

Coverage path planning with benchmark algorithm (DQN) using two UAVs.

Figure 6.

Coverage path planning with proposed algorithm using Two UAVs.

5.5. Performance Analysis of the Proposed Method

To evaluate the performance of the SERT-DQN method, experiments assess mission metrics across multi-UAV systems with different scales and target area sizes. The evaluation criteria include mission completion time and overlap area. The results are compared with those of a standard multi-agent DQN approach, with multiple runs ensuring consistent and representative outcomes.

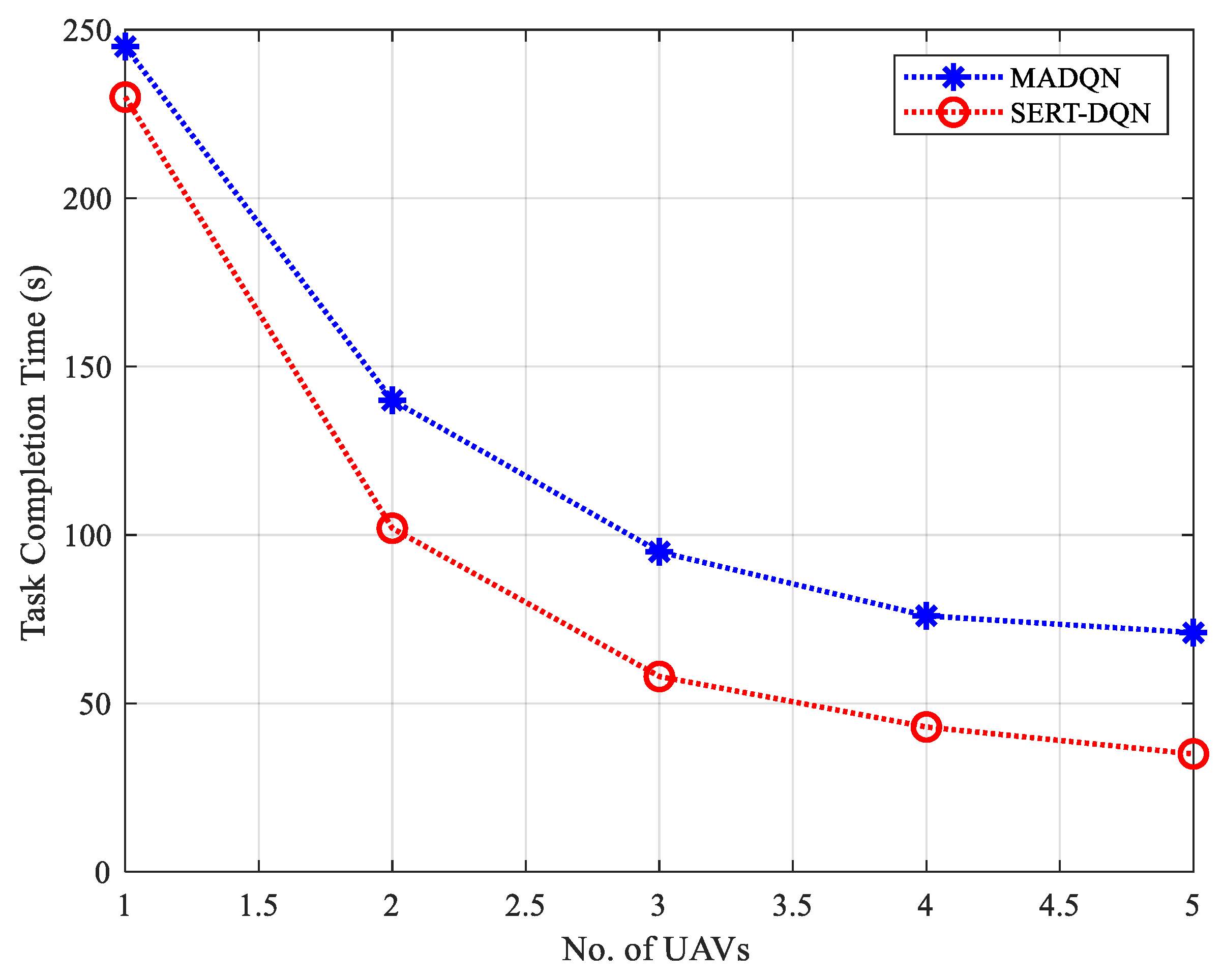

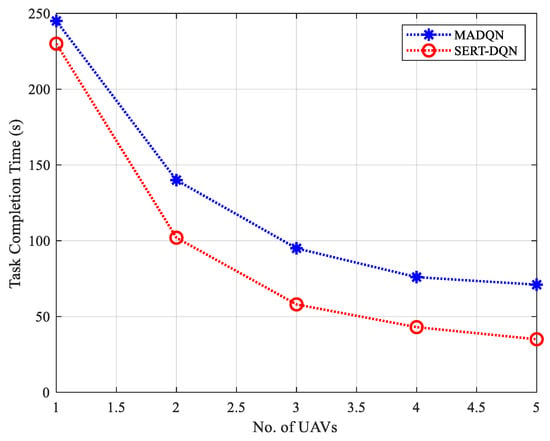

Figure 7 compares the mission completion times of the MADQN method and our proposed SERT-DQN method for varying numbers of UAVs over a target area of 1000 m by 1000 m. Even with a single UAV, SERT-DQN outperforms MADQN due to its similarity-based experience replay, which prioritizes relevant states and improves sampling quality. As the number of UAVs increases, the cooperative learning mechanism in SERT-DQN further enhances performance by integrating both individual and shared observations, leading to better coordination and reduced overlap. The algorithm’s scalability is evident in the consistently shorter completion times as the number of UAVs grows.

Figure 7.

Comparison of mission completion time with increasing number of UAVs.

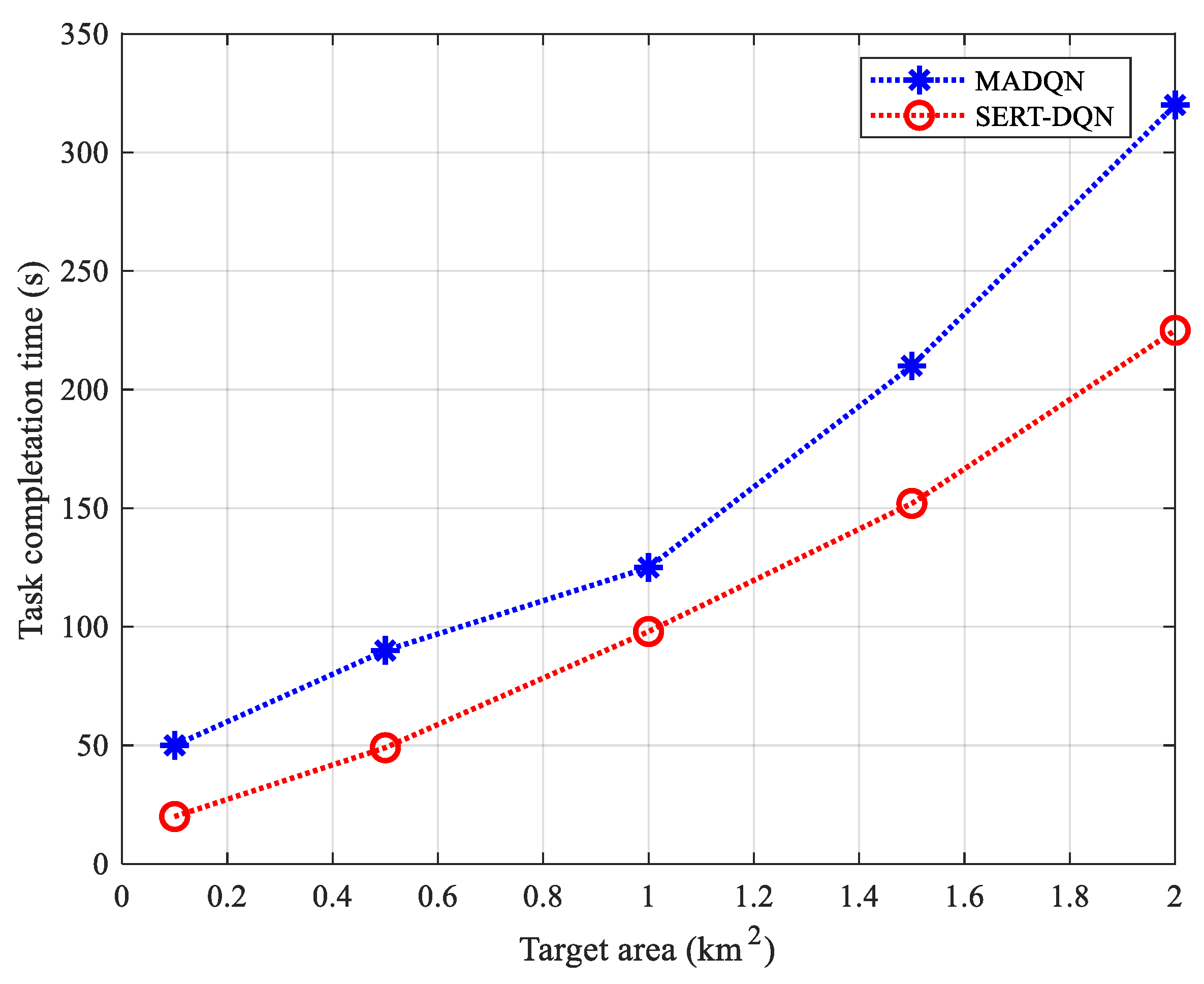

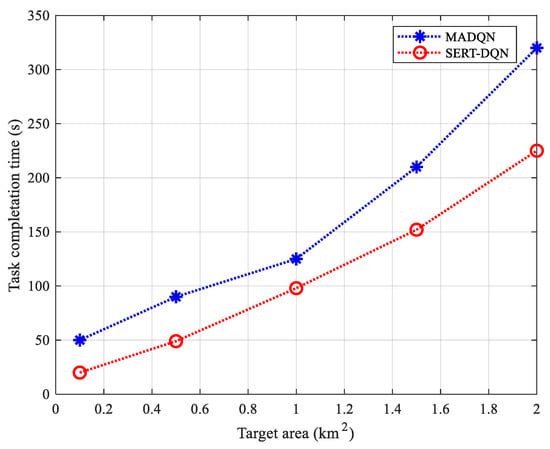

Figure 8 presents the results for completion time as the target area size increases, where our method shows better performance. Analysis indicates that SERT-DQN outperforms MADQN in overlap area and mission completion time across various target area sizes. In larger environments, individual UAVs have fewer observations. SERT-DQN addresses this limitation through UAVs sharing exploration data and learning experiences, effectively expanding their observational scope.

Figure 8.

Comparison of mission completion time with increasing target area size.

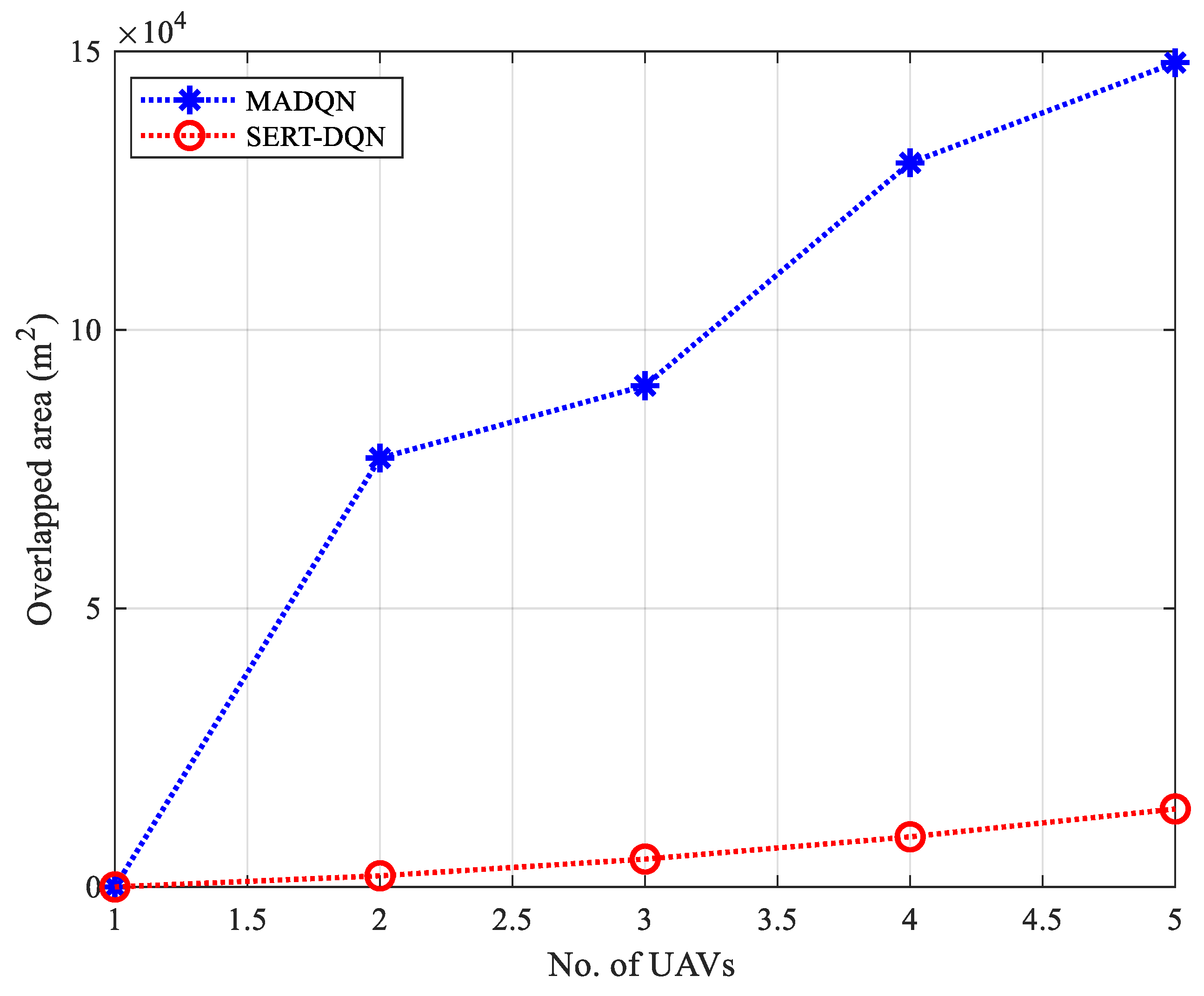

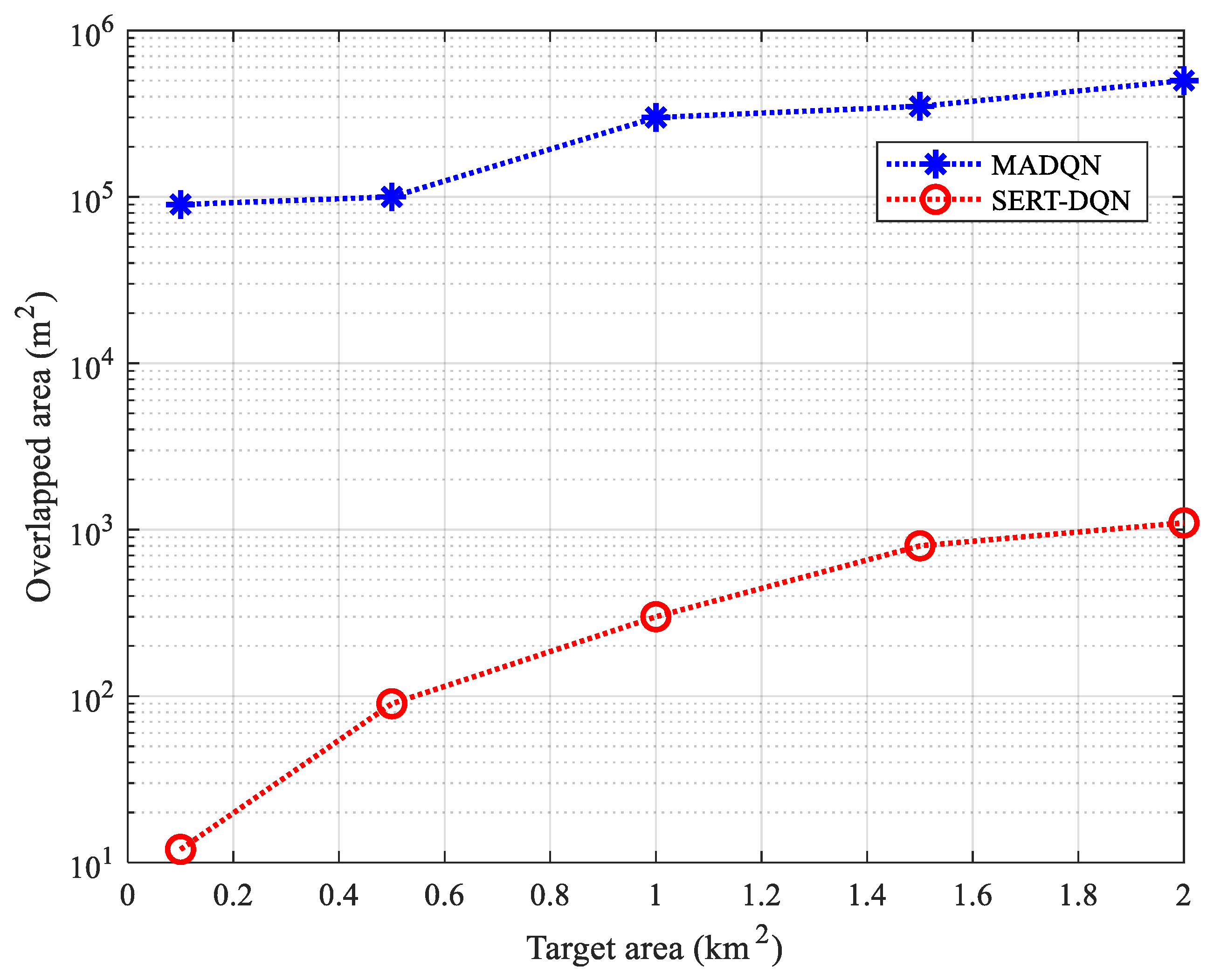

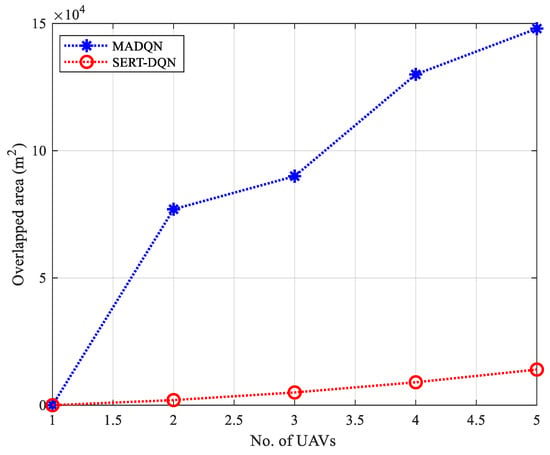

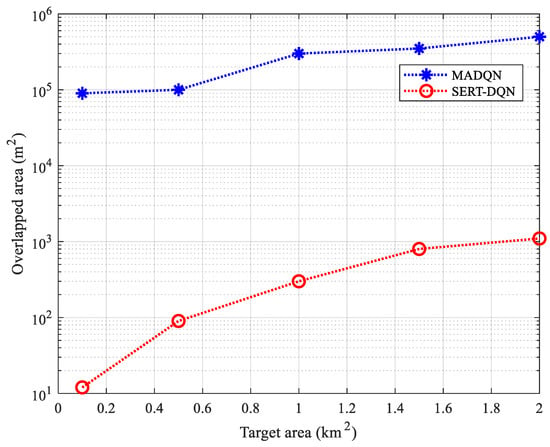

The comparison of overlap areas between our proposed method and MADQN appears in Figure 9 and Figure 10. As the number of UAVs increases, the overlap area in the SERT-DQN method remains lower compared to the MADQN approach. Additionally, even as the target area expands, our proposed method maintains a lower overlap than MADQN.

Figure 9.

Comparison of overlap areas with increasing number of UAVs.

Figure 10.

Comparison of overlap areas with increasing target area size.

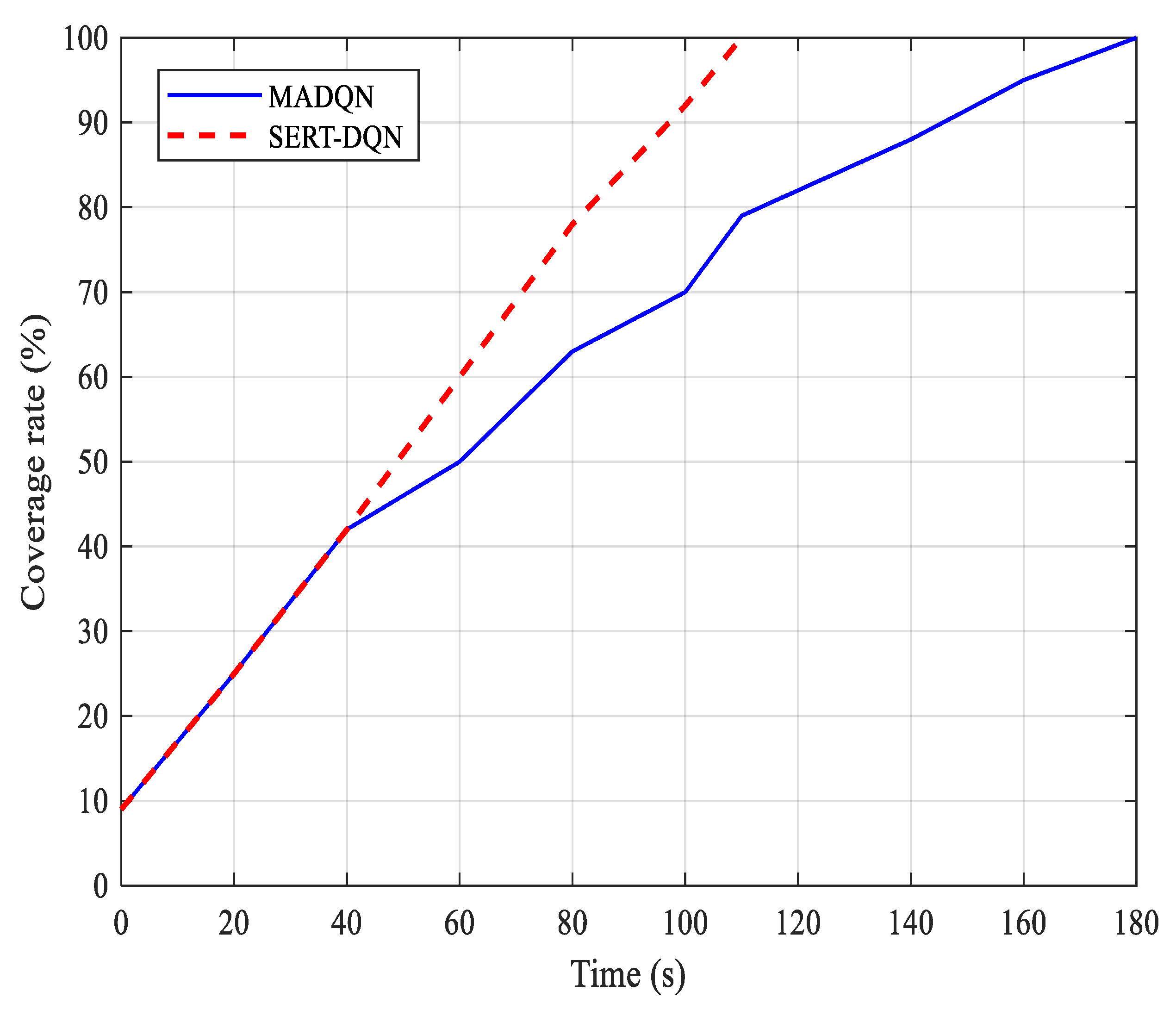

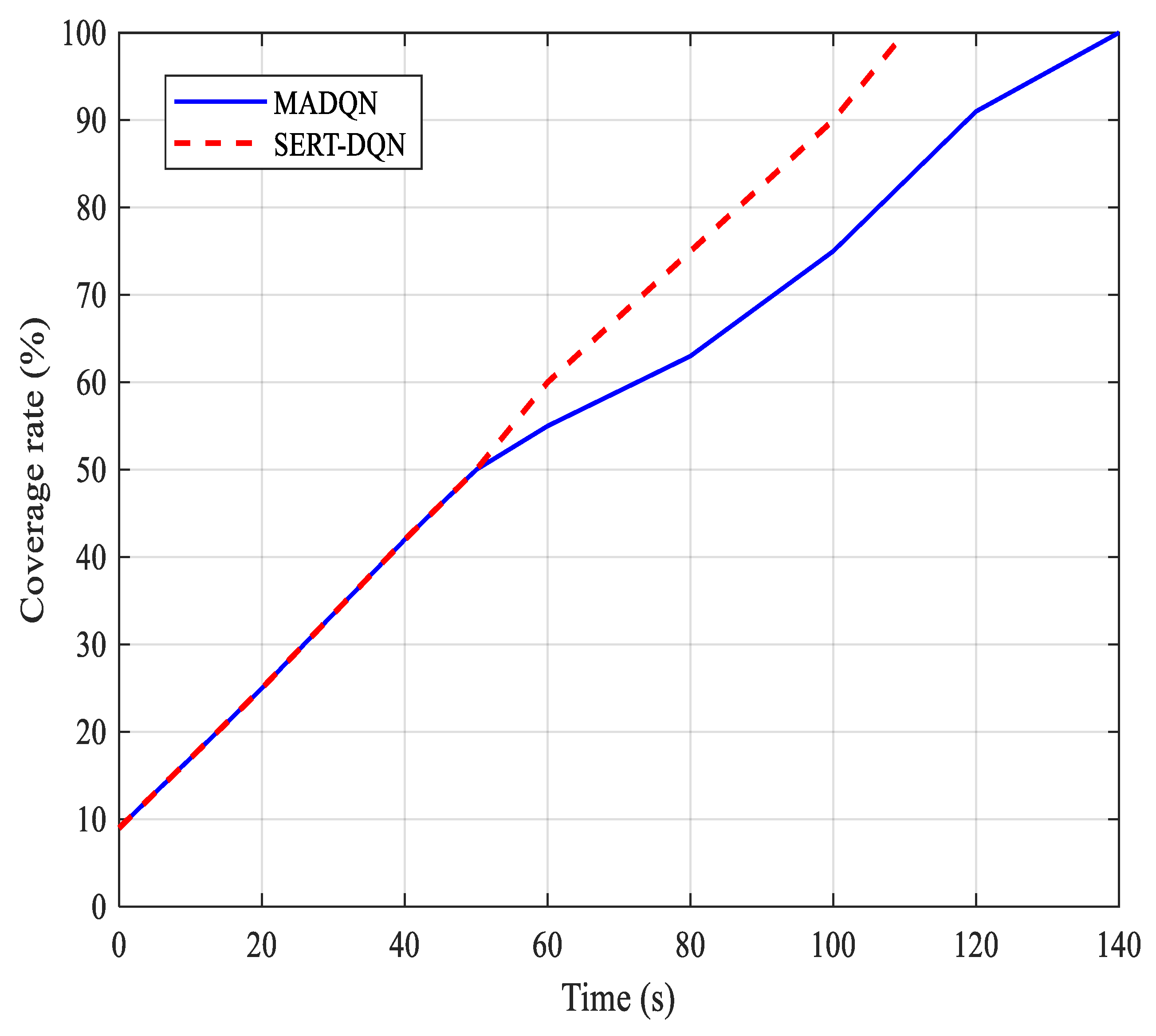

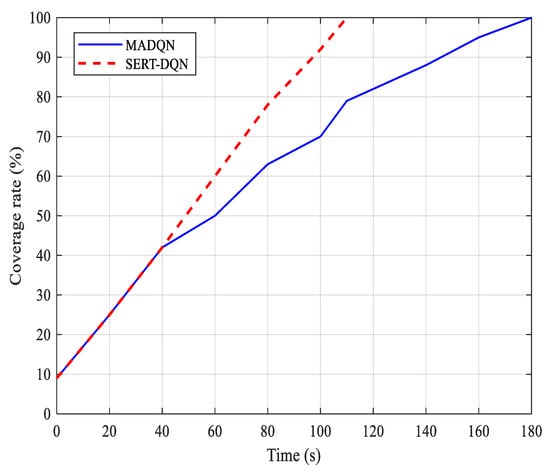

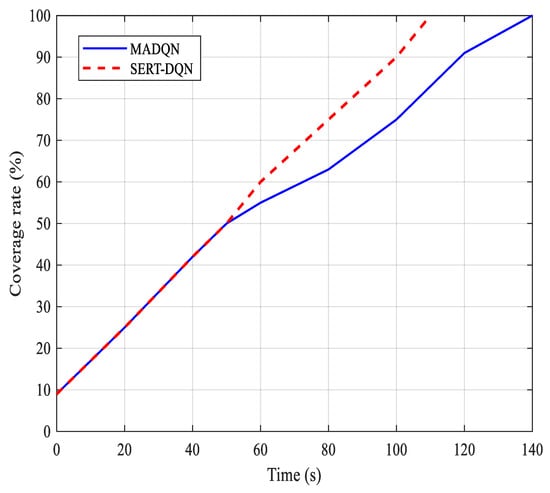

Figure 11 and Figure 12 present the results of coverage rate comparisons using two UAVs and three UAVs, respectively. The data show that the SERT-DQN method consistently outperforms MADQN in both scenarios. With two UAVs, the difference in coverage rate is clear, demonstrating the advantage of the similarity-based experience replay in optimizing coordination. As the number of UAVs increases to three, the performance gap widens, showing the strength of the SERT-DQN approach in managing multi-agent coverage tasks more effectively. The results confirm that our method scales well with an increasing number of agents, leading to more efficient and comprehensive area coverage.

Figure 11.

Comparison of coverage rate with two UAVs.

Figure 12.

Comparison of coverage rate with three UAVs.

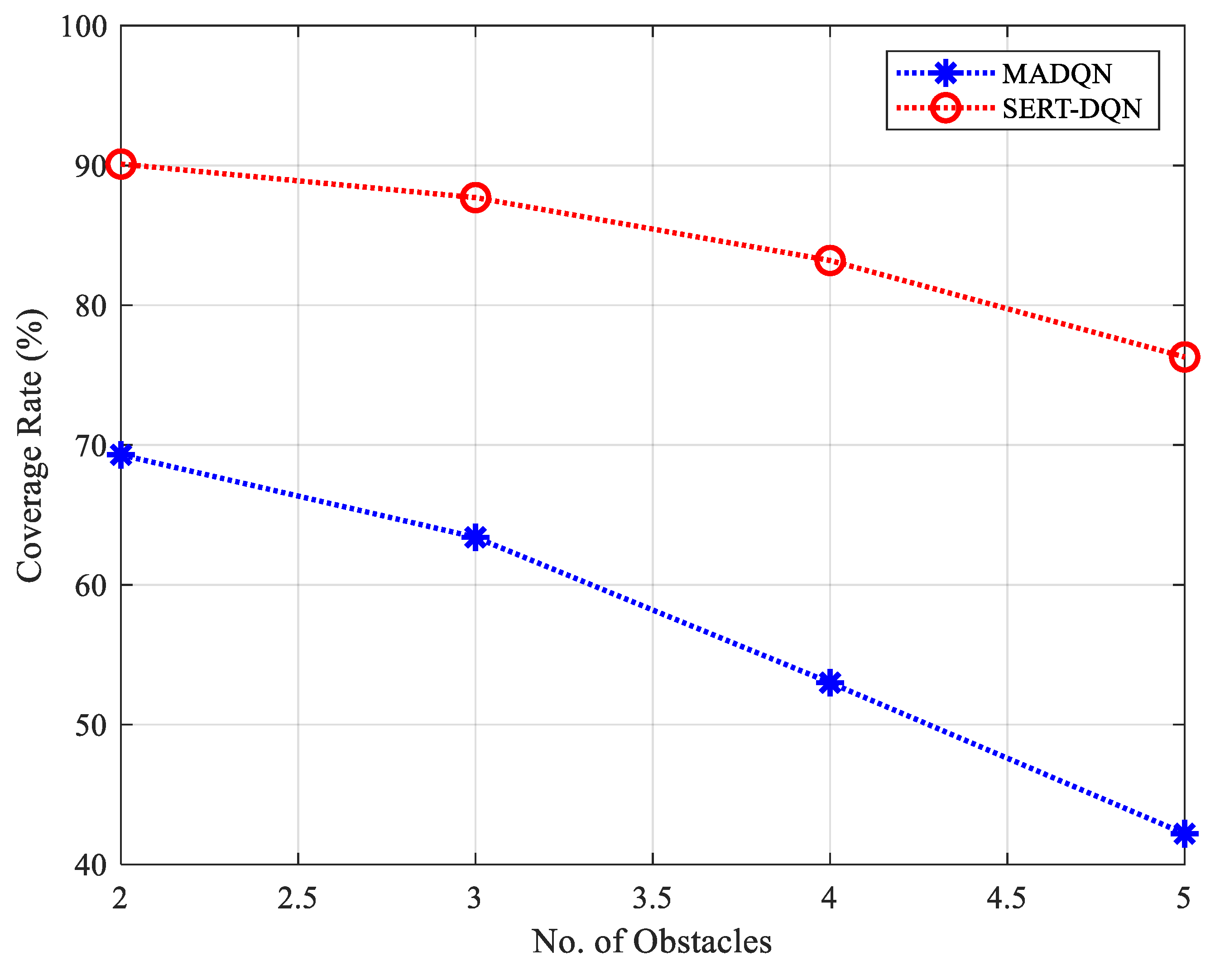

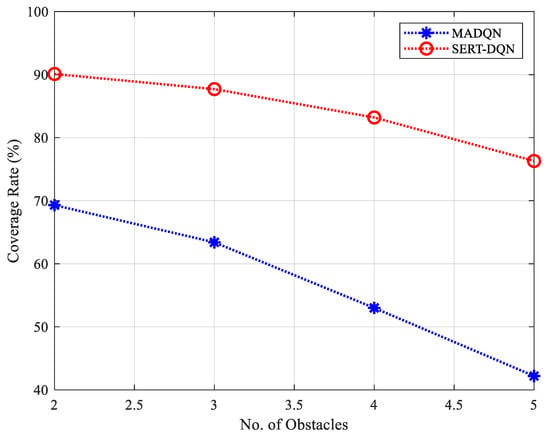

In this experiment, the number of obstacles varied from two to five, and the coverage rate for both SERT-DQN and MADQN is recorded after 100 s with two UAVs. The target area is fixed. The inclusion of obstacles increases complexity and poses challenges for UAVs to navigate while maintaining effective coverage. Figure 13 illustrates the coverage rates as the number of obstacles increases, with each obstacle occupying a single grid cell. As shown, our proposed approach demonstrates superior adaptability in obstacle-dense environments compared to MADQN. This improved performance is primarily due to the similarity-based experience replay mechanism and the decentralized training capability of each UAV, which allows for more-efficient navigation and coverage despite the presence of obstacles.

Figure 13.

Comparison of the coverage rate of the proposed method with MADQN under an increasing number of obstacles.

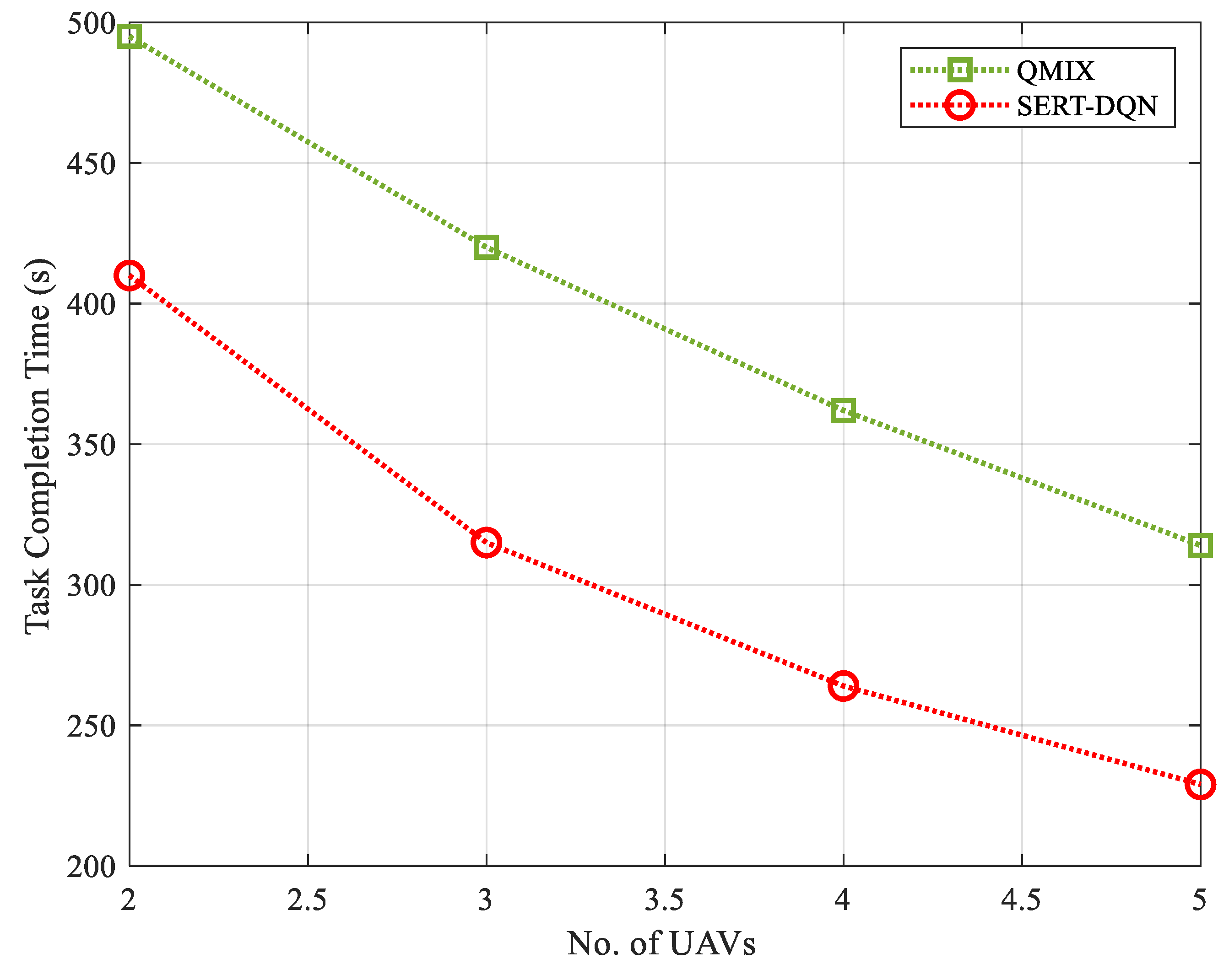

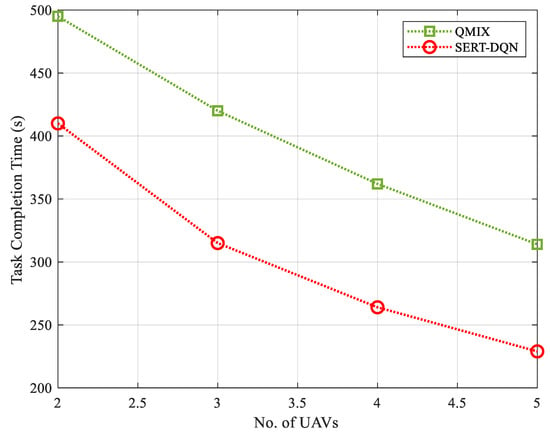

5.6. Comparative Analysis of SERT-DQN with QMIX

For further evaluation, we conducted a comparative analysis between our proposed method, SERT-DQN, and the QMIX algorithm, with a focus on scalability as the primary performance metric. The experimental environment consisted of a 3000 × 3000 m grid, featuring five obstacles randomly placed in each episode. Additionally, the starting positions of the UAVs were randomized at the beginning of each episode to ensure diverse exploration patterns. To ensure the scalability of our results, each metric was measured across varying numbers of UAVs, with the outcomes averaged over 10 simulation runs. Both algorithms were trained for 3000 episodes to allow for sufficient convergence and performance optimization. The comparative evaluation highlights the differences in scalability between the two methods, particularly in large-scale multi-agent scenarios. Figure 14 illustrates the result.

Figure 14.

Task completion time comparison between SERT-DQN and QMIX.

The comparison between SERT-DQN and QMIX reveals that SERT-DQN handles scalability more effectively, especially as the number of UAVs increases. With fewer UAVs, SERT-DQN completes tasks faster than QMIX, which shows that QMIX faces some initial coordination challenges. As more UAVs are added, SERT-DQN continues to perform efficiently, completing tasks quicker than QMIX. This difference in performance highlights that QMIX struggles with the complexity of coordinating a larger number of UAVs, which results in slower task completion. In contrast, SERT-DQN’s approach, which does not rely heavily on central coordination, adapts better to an increase in UAVs and proves to be more suitable for large-scale multi-agent tasks. It is observed from Figure 14 that while the number of UAVs has more than doubled (from 2 to 5), the task completion time is reduced by only approximately 40%, which deviates from the results previously observed in Figure 7. This discrepancy can be attributed to the differences in environmental conditions between the two scenarios. In Figure 7, the environment was smaller and featured fewer obstacles. In contrast, the current scenario involves a greater number of obstacles, leading to increased overlap between UAVs and a consequent reduction in coverage efficiency. As a result, the expected reduction in completion time does not scale proportionally with the increase in the number of UAVs.

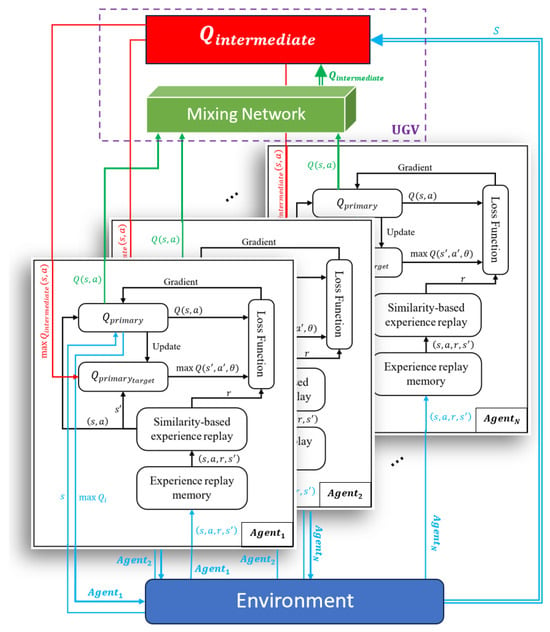

5.7. Fault Tolerance Analysis

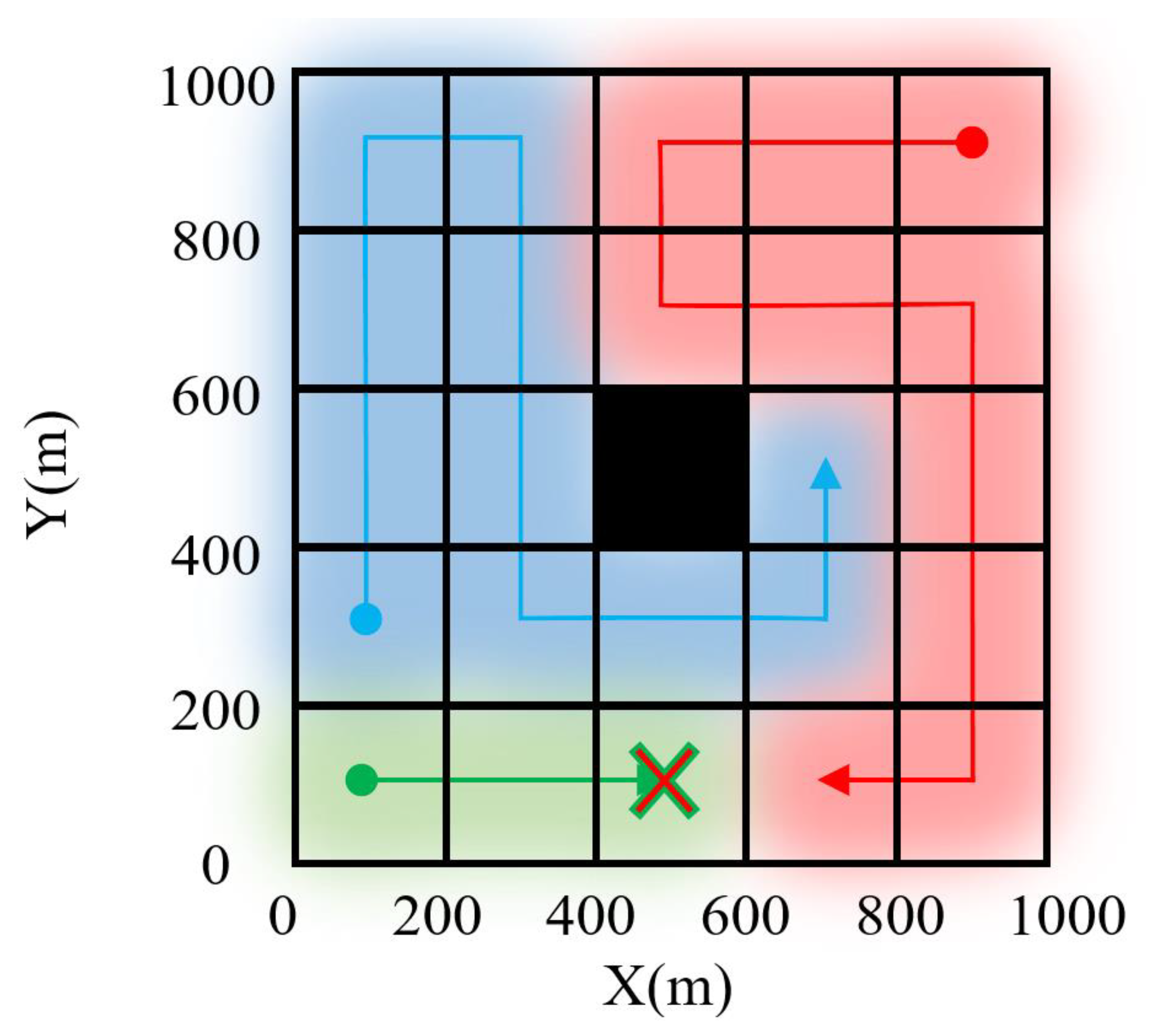

A key feature of the proposed method, especially in its enhanced version (SERT-DQN+), is its fault tolerance capability. In multi-UAV systems, two critical faults must typically be addressed: communication loss between UAVs, which can lead to a UAV becoming uncoordinated; and the failure of a UAV. To assess the fault tolerance of the proposed method, we simulate scenarios involving both UAV failures and communication interruptions.

5.7.1. UAV Failure Scenario

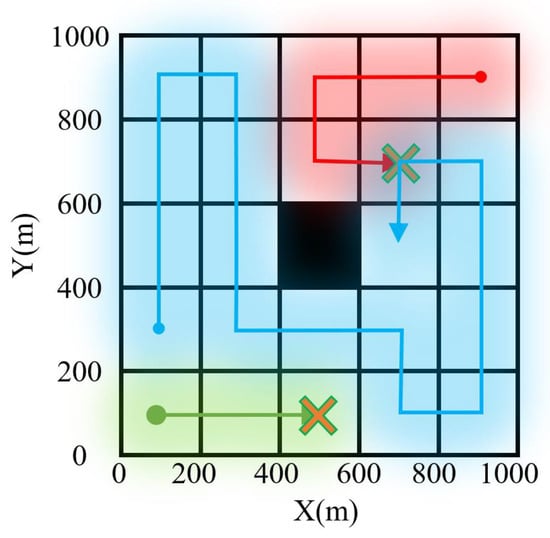

A challenge arises when a UAV fails due to external or internal factors, such as energy depletion or sensor malfunction, which can potentially compromise the stability of the system. To assess this, we simulated a scenario in which one of three UAVs fails unexpectedly and examined how the remaining UAVs adapt to continue the mission. Figure 15 illustrates the system before the failure, and as shown in Figure 16, UAV 3 fails after covering only three grid cells. The remaining two UAVs successfully complete the coverage of the target area. The algorithm was trained for both two- and three-UAV configurations. Upon the failure of UAV 3, UAVs 1 and 2 promptly adjust to the two-UAV configuration, continuing to make real-time flight decisions. This demonstrates the fault tolerance of the proposed method.

Figure 15.

Coverage path planning with three UAVs.

Figure 16.

Coverage path planning with three UAVs facing failure of one UAV.

Furthermore, we considered a more complex scenario where, after several steps following the failure of UAV 3, UAV 2 also fails after covering five additional grid cells. The mission then proceeds with just one UAV, as shown in Figure 17. The continuation of the mission after the second failure is managed using the last available simulation data from the previous configuration. As can be seen, the mission was successfully completed with a single UAV after the failure of the others. However, this resulted in some overlap of previously covered regions, contributing to the extended completion time.

Figure 17.

Coverage path planning with three UAVs facing failure of two UAVs.

5.7.2. UAV Communication Loss Scenario

In Mars exploration, communication interruptions caused by adverse weather conditions or terrain obstructions are frequent challenges. These interruptions require robust, fault-tolerant solutions that maintain performance during communication failures. UAVs rely on onboard sensors, such as cameras, to monitor their surroundings, detect obstacles, and identify other UAVs. When a UAV loses communication with the central UGV, it transitions seamlessly to autonomous operation, relying on its local RL policy for safe navigation.

Since obstacle detection and collision avoidance are handled locally, the loss of communication does not impair the UAV’s ability to avoid obstacles or collisions. While the central UGV cannot update the CDM, each UAV compensates by using its onboard detection systems, ensuring consistent collision avoidance in both normal and communication loss scenarios. However, because the CDM is not updated during communication loss, each UAV must detect and respond to obstacles directly, which may result in longer task completion times.

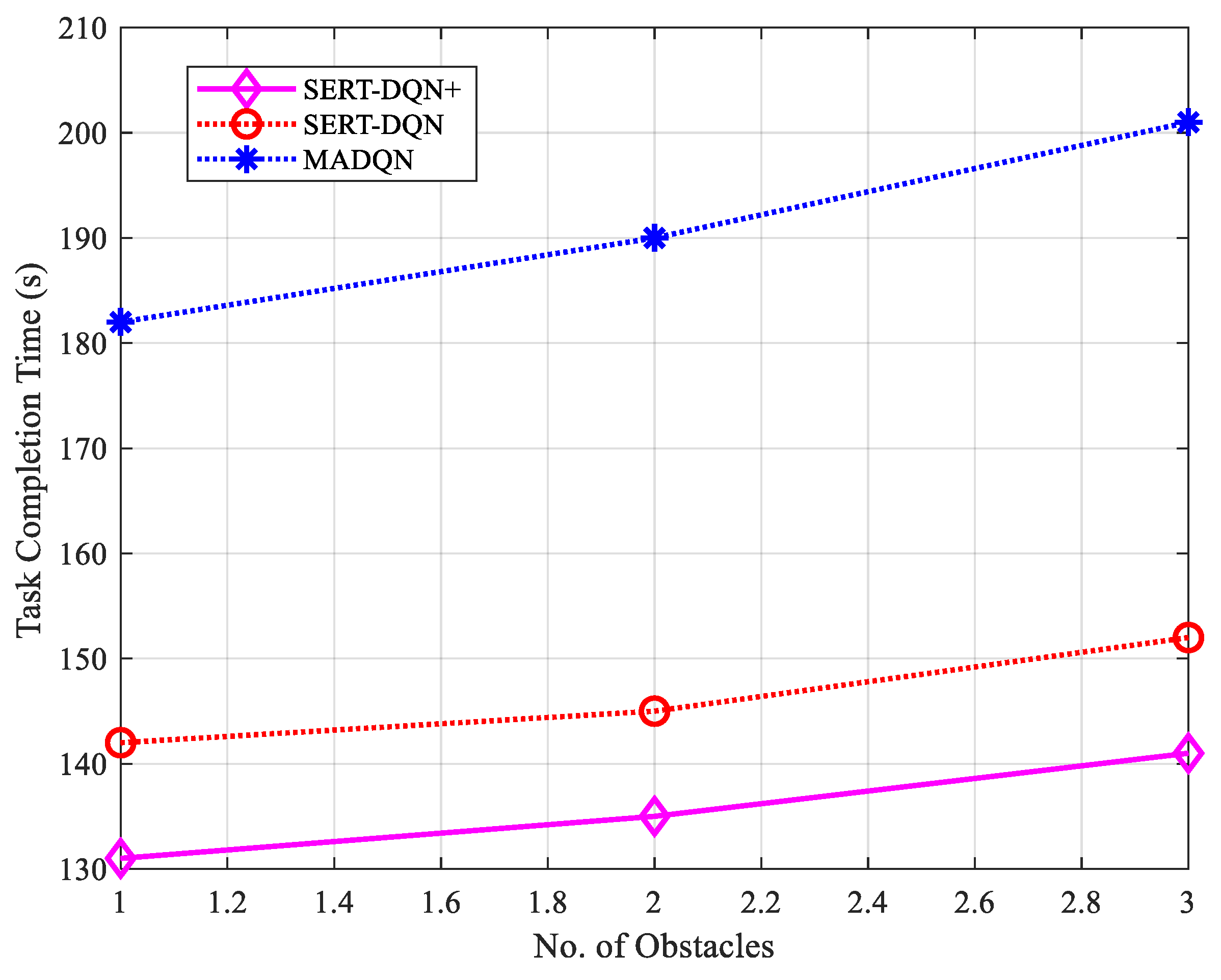

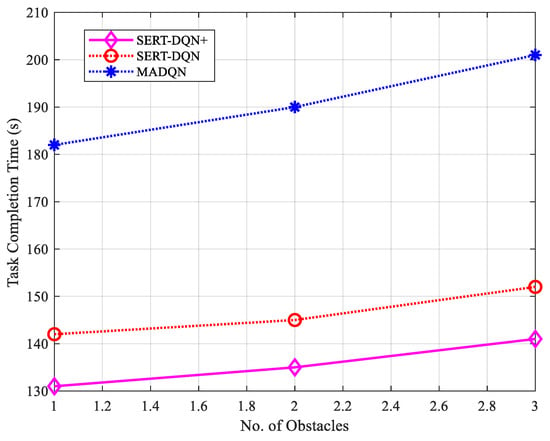

To evaluate the performance of SERT-DQN+ during communication loss, we conducted simulations with varying numbers of obstacles. The environment featured one to three obstacles, each occupying a grid cell, and two UAVs were deployed. During each simulation, communication loss occurred three times for UAV 2, with each loss happening at different stages of the mission. After losing communication, UAV 2 continued autonomously without input from the UGV. Results were compared to the SERT-DQN algorithm and MADQN. Table 2 summarizes the results, comparing task completion time and the number of overlapped grids under a single-obstacle configuration.

Table 2.

Comparison of SERT-DQN, SERT-DQN+, and MADQN in UAV communication loss scenarios.

SERT-DQN+ demonstrated superior performance over both SERT-DQN and MADQN in communication loss scenarios, with shorter task completion times, fewer overlapped grids, and more efficient coverage. Its simple method for estimating the position of the disconnected UAV, combined with low computational requirements, enables effective decision-making. Although the estimation method is basic, it has a noticeable positive impact on task completion time. In contrast, MADQN exhibited more frequent overlaps, lower coverage efficiency, and higher collision rates as the number of obstacles increased. The use of similarity-based experience replay in SERT-DQN and SERT-DQN+ improved performance, particularly in communication loss scenarios and environments with multiple obstacles.

Figure 18 illustrates the effect of increasing the number of obstacles on task completion time. As the number of obstacles rises, task completion times increase for all algorithms. However, SERT-DQN+ shows a smaller increase, reflecting its ability to handle complex environments more efficiently compared to MADQN.

Figure 18.

The effect of increasing the number of obstacles on task completion time.

As the number of obstacles increases, UAVs must navigate more complex paths to avoid collisions, leading to longer flight paths and higher task completion times. Frequent route adjustments around obstacles can result in less efficient coverage patterns, causing UAVs to revisit surveyed areas unintentionally, thereby increasing overlap. SERT-DQN+ effectively manages these challenges due to its superior path planning and adaptability, while MADQN’s less sophisticated approach leads to greater increases in both task completion time and overlap.

6. Conclusions

In this paper, the algorithm SERT-DQN has been developed for enhancing coverage path planning in multi-agent systems, particularly for Mars exploration. The algorithm has the capability to optimize task completion time, reduce path overlap, and improve coordination among UAVs and UGVs. SERT-DQN is composed of decentralized decision-making mechanisms combined with centralized coordination, incorporating similarity-based experience replay to prioritize relevant experiences and enhance learning efficiency.

Performance evaluation of SERT-DQN occurs through simulations conducted in the MATLAB environment, exploring various scenarios to assess robustness and scalability. Results indicate that SERT-DQN consistently outperforms traditional multi-agent DQN approaches, with improvements in mission completion time, coverage rate, and resilience to UAV failures.

The fault-tolerant extension, SERT-DQN+, shows reliable performance during communication disruptions and UAV failures, maintaining operational stability under conditions typical of Mars exploration. Comparative analysis with QMIX further validates SERT-DQN’s effectiveness in handling scalability and coordinating multiple agents efficiently.

Author Contributions

Conceptualization, M.R., M.A.A.A. and A.R.; Methodology, M.R.; Validation, M.R.; Formal analysis, M.R.; Investigation, M.R.; Writing—original draft, M.R.; Writing—review & editing, M.A.A.A. and A.R.; Supervision, M.A.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the main author due to ramezani.mahya@ut.ac.ir.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Acronym | Full Form |

| A3C | Asynchronous advantage actor–critic |

| CDM | Communication data matrix |

| CPP | Coverage path planning |

| CTDE | Centralized training with decentralized execution |

| DNN | Deep neural networks |

| DRL | Deep reinforcement learning |

| DQN | Deep Q-network |

| MADQN | Multi-agent deep Q-network |

| MARL | Multi-agent reinforcement learning |

| MDP | Markov decision process |

| MLP | Multi-layer perceptron |

| PPO | Proximal policy optimization |

| QMIX | Q-value mixing network |

| RL | Reinforcement learning |

| SERT-DQN | Similarity-based experience replay enhanced target DQN |

| SGD | Stochastic gradient descent |

| UAV | Unmanned aerial vehicle |

| UGV | Unmanned ground vehicle |

References

- Zubrin, R. How to Live on Mars: A Trusty Guidebook to Surviving and Thriving on the Red Planet; Crown: New York, NY, USA, 2008. [Google Scholar]

- Islam, M.R.; Chowdhury, F.H.; Rezwan, S.; Ishaque, M.J.; Akanda, J.U.; Tuhel, A.S.; Riddhe, B.B. Novel design and performance analysis of a Mars exploration robot: Mars rover mongol pothik. In Proceedings of the 2017 Third International Conference on Research in Computational Intelligence and Communication Networks (ICRCICN), Kolkata, India, 3–5 November 2017; pp. 132–136. [Google Scholar]

- Fairén, A.G. A cold and wet Mars. Icarus 2010, 208, 165–175. [Google Scholar] [CrossRef]

- AlandiHallaj, M.; Assadian, N. Soft landing on an irregular shape asteroid using multiple-horizon multiple-model predictive control. Acta Astronaut. 2017, 140, 225–234. [Google Scholar] [CrossRef]

- AlandiHallaj, M.; Assadian, N. Asteroid precision landing via probabilistic multiple-horizon multiple-model predictive control. Acta Astronaut. 2019, 161, 531–541. [Google Scholar] [CrossRef]

- Alandihallaj, M.A.; Assadian, N.; Varatharajoo, R. Finite-time asteroid hovering via multiple-overlapping-horizon multiple-model predictive control. Adv. Space Res. 2023, 71, 645–653. [Google Scholar] [CrossRef]

- Dinelli, C.; Racette, J.; Escarcega, M.; Lotero, S.; Gordon, J.; Montoya, J.; Dunaway, C.; Androulakis, V.; Khaniani, H.; Shao, S. Configurations and applications of multi-agent hybrid drone/unmanned ground vehicle for underground environments: A review. Drones 2023, 7, 136. [Google Scholar] [CrossRef]

- Crisp, J.A.; Adler, M.; Matijevic, J.R.; Squyres, S.W.; Arvidson, R.E.; Kass, D.M. Mars exploration rover mission. J. Geophys. Res. Planets 2003, 108, 8061. [Google Scholar] [CrossRef]

- Lamarre, O.; Kelly, J. Overcoming the challenges of solar rover autonomy: Enabling long-duration planetary navigation. arXiv 2018, arXiv:1805.05451. [Google Scholar]

- Arvidson, R.E.; Bell III, J.F.; Bellutta, P.; Cabrol, N.A.; Catalano, J.; Cohen, J.; Crumpler, L.S.; Des Marais, D.; Estlin, T.; Farrand, W. Spirit Mars Rover Mission: Overview and selected results from the northern Home Plate Winter Haven to the side of Scamander crater. J. Geophys. Res. Planets 2010, 115. [Google Scholar] [CrossRef]

- Farley, K.A.; Williford, K.H.; Stack, K.M.; Bhartia, R.; Chen, A.; de la Torre, M.; Hand, K.; Goreva, Y.; Herd, C.D.; Hueso, R. Mars 2020 mission overview. Space Sci. Rev. 2020, 216, 1–41. [Google Scholar] [CrossRef]

- Ramezani, M.; Atashgah, M.; Sanchez-Lopez, J.L.; Voos, H. Human-Centric Aware UAV Trajectory Planning in Search and Rescue Missions Employing Multi-Objective Reinforcement Learning with AHP and Similarity-Based Experience Replay. In Proceedings of the 2024 International Conference on Unmanned Aircraft Systems (ICUAS), Chania, Crete, 4–7 June 2024; pp. 177–184. [Google Scholar]

- Mohsan, S.A.H.; Othman, N.Q.H.; Li, Y.; Alsharif, M.H.; Khan, M.A. Unmanned aerial vehicles (UAVs): Practical aspects, applications, open challenges, security issues, and future trends. Intell. Serv. Robot. 2023, 16, 109–137. [Google Scholar] [CrossRef] [PubMed]

- Muchiri, G.; Kimathi, S. A review of applications and potential applications of UAV. In Proceedings of the Annual Conference on Sustainable Research and Innovation Conference, Nairobi, Kenya, 4–6 May 2016. [Google Scholar]

- Serna, J.G.; Vanegas, F.; Gonzalez, F.; Flannery, D. A review of current approaches for UAV autonomous mission planning for Mars biosignatures detection. In 2020 IEEE Aerospace Conference; IEEE: NewYork, NY, USA, 2020; pp. 1–15. [Google Scholar]

- Rizk, Y.; Awad, M.; Tunstel, E.W. Cooperative heterogeneous multi-robot systems: A survey. ACM Comput. Surv. (CSUR) 2019, 52, 1–31. [Google Scholar] [CrossRef]

- Xing, L.; Johnson, B.W. Reliability theory and practice for unmanned aerial vehicles. IEEE Internet Things J. 2022, 10, 3548–3566. [Google Scholar] [CrossRef]

- Asadi, K.; Suresh, A.K.; Ender, A.; Gotad, S.; Maniyar, S.; Anand, S.; Noghabaei, M.; Han, K.; Lobaton, E.; Wu, T. An integrated UGV-UAV system for construction site data collection. Autom. Constr. 2020, 112, 103068. [Google Scholar] [CrossRef]

- Emami, M.R.; Alandihallaj, M.A. Performance Enhancement of Fractionated Spacecraft for Earth Observation. In Proceedings of the 4th COSPAR Scientific Assembly, Athens, Greece, 16–24 July 2022; Volume 44, p. 57. [Google Scholar]

- Ohradzansky, M.T.; Rush, E.R.; Riley, D.G.; Mills, A.B.; Ahmad, S.; McGuire, S.; Biggie, H.; Harlow, K.; Miles, M.J.; Frew, E.W. Multi-agent autonomy: Advancements and challenges in subterranean exploration. arXiv 2021, arXiv:2110.04390. [Google Scholar] [CrossRef]

- Bartolomei, L.; Teixeira, L.; Chli, M. Fast multi-UAV decentralized exploration of forests. IEEE Robot. Autom. Lett. 2023, 8, 5576–5583. [Google Scholar] [CrossRef]

- Ropero, F.; Muñoz, P.; R-Moreno, M.D. A Strategical Path Planner for UGV-UAV Cooperation in Mars Terrains. In Proceedings of the International Conference on Innovative Techniques and Applications of Artificial Intelligence, Tokyo, Japan, 16–18 March 2024; pp. 106–118. [Google Scholar]

- Nowak, S.; Krüger, T.; Matthaei, J.; Bestmann, U. Martian swarm exploration and mapping using laser SLAM. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 40, 299–303. [Google Scholar] [CrossRef]

- Martinez-Rozas, S.; Alejo, D.; Caballero, F.; Merino, L. Path and trajectory planning of a tethered UAV-UGV marsupial robotic system. IEEE Robot. Autom. Lett. 2023, 8, 6475–6482. [Google Scholar] [CrossRef]

- Hu, D.; Gan, V.J.; Wang, T.; Ma, L. Multi-agent robotic system (MARS) for UAV-UGV path planning and automatic sensory data collection in cluttered environments. Build. Environ. 2022, 221, 109349. [Google Scholar] [CrossRef]

- Cabreira, T.M.; Brisolara, L.B.; Ferreira, P.R., Jr. Survey on coverage path planning with unmanned aerial vehicles. Drones 2019, 3, 4. [Google Scholar] [CrossRef]