Thermal Image Tracking for Search and Rescue Missions with a Drone

Abstract

1. Introduction

2. YOLOv5 for Thermal Videos

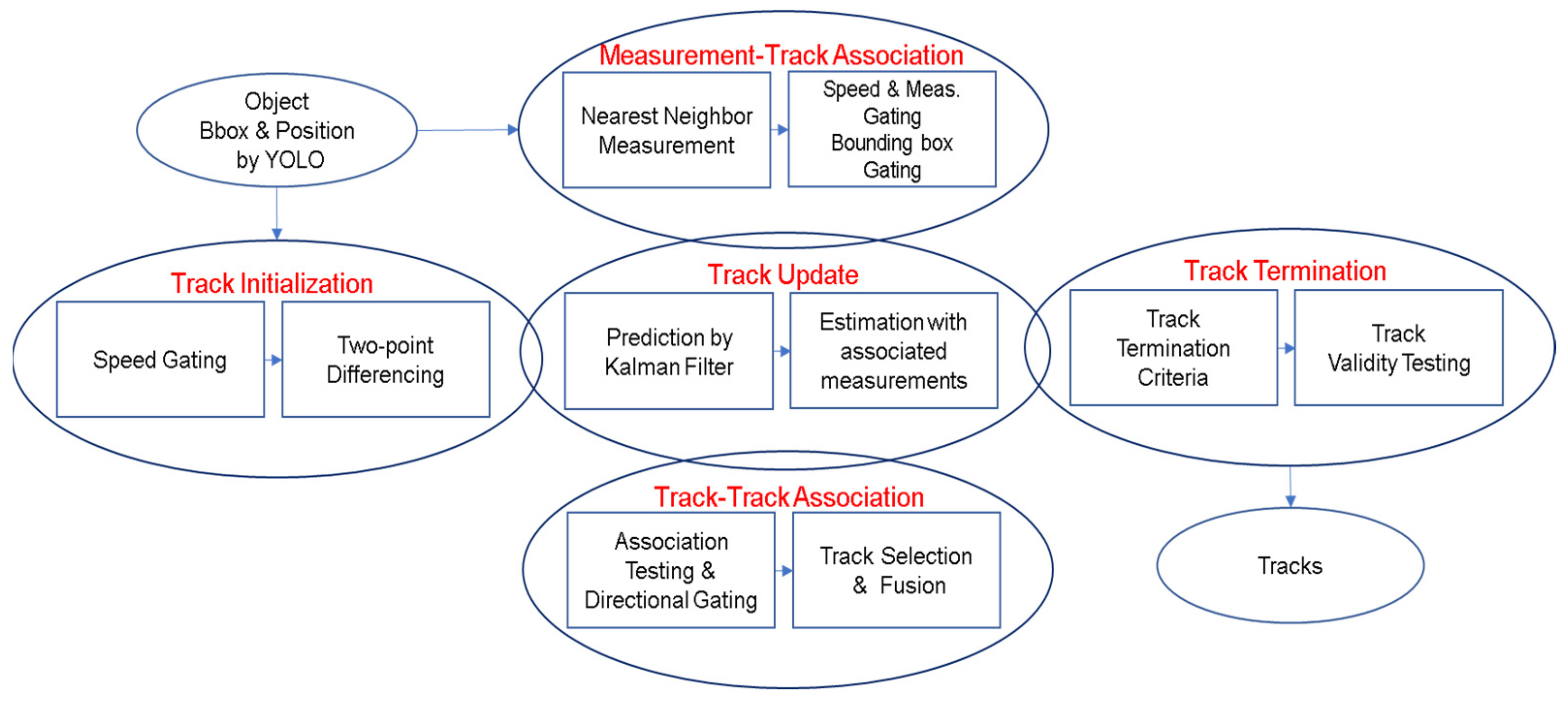

3. Multiple-Target Tracking

3.1. System Modeling

3.2. Two-Point Initialization
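
As an illustration of the standard technique (a sketch, not the paper's code), two-point differencing initialization for a nearly constant velocity model works as follows. The first state takes its position from the second measurement and its velocity from the difference of the two measurements divided by the sampling time T. The sketch rests on these assumptions:

- Positions are 2-D in metres.
- The state is ordered as [x, y, vx, vy].
- The measurement noise standard deviation r is the same on both axes.
- A measurement pair is discarded when its implied speed exceeds the maximum initial target speed Vmax.

The function name `two_point_init` is hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def two_point_init(z1: Point, z2: Point, T: float, r: float, v_max: float
                   ) -> Optional[Tuple[List[float], List[List[float]]]]:
    """Initialize a track from two consecutive position measurements.

    Returns (state, cov): state = [x, y, vx, vy] and cov is the 2x2
    (position, velocity) covariance shared by the x and y axes.
    Returns None if the implied speed exceeds v_max (assumed use of Vmax).
    """
    vx = (z2[0] - z1[0]) / T
    vy = (z2[1] - z1[1]) / T
    # Reject pairs whose implied speed is implausible for the target.
    if math.hypot(vx, vy) > v_max:
        return None
    r2 = r * r
    # Two-point differencing with independent measurement errors:
    # var(pos) = r^2, cov(pos, vel) = r^2 / T, var(vel) = 2 r^2 / T^2.
    cov = [[r2, r2 / T], [r2 / T, 2.0 * r2 / (T * T)]]
    return [z2[0], z2[1], vx, vy], cov
```

Take T = 1/30 s, r = 0.5 m and Vmax = 3 m/s, values taken from the parameter table. A 0.05 m displacement between frames implies 1.5 m/s and is accepted. A 0.2 m displacement implies 6 m/s and is rejected.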

3.3. Prediction and Filter Gain

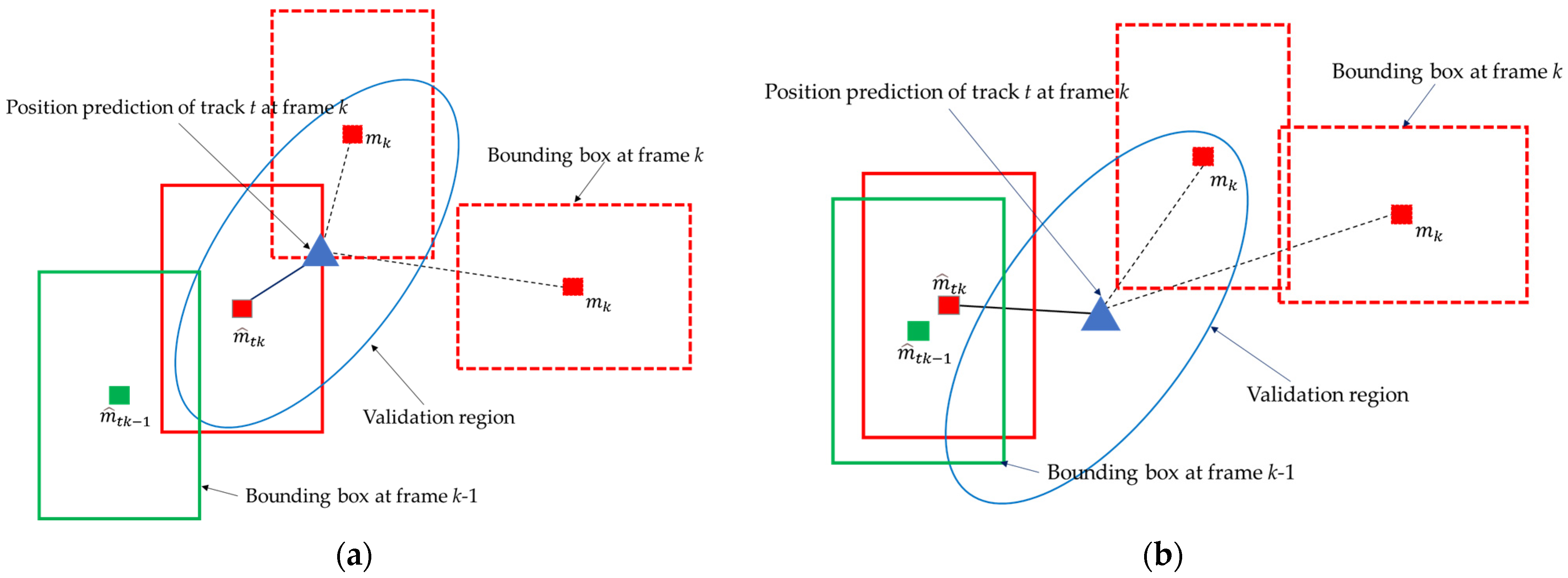
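
For illustration, the following Python sketch shows a generic nearly constant velocity (NCV) Kalman prediction and gain computation. It is not taken from the paper.

- **Decoupled axes.** The x and y axes are assumed independent, with block-diagonal process noise and diagonal measurement noise. Each axis can therefore be handled as a two-state filter with state [position, velocity] and a position-only measurement.
- **Process noise.** This follows the discrete white-noise-acceleration model with standard deviation q in m/s², which matches the unit listed for the process noise in the parameter table.

The function names are hypothetical.

```python
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]


def predict_axis(x: Vec, P: Mat, T: float, q: float) -> Tuple[Vec, Mat]:
    """Predict one axis of an NCV model.

    x = [position, velocity], P = 2x2 covariance, T = sampling time (s),
    q = process-noise (acceleration) std. in m/s^2.
    """
    # x_pred = F x with F = [[1, T], [0, 1]]
    xp = [x[0] + T * x[1], x[1]]
    # F P F^T written out for the 2x2 case
    p00 = P[0][0] + T * (P[0][1] + P[1][0]) + T * T * P[1][1]
    p01 = P[0][1] + T * P[1][1]
    p10 = P[1][0] + T * P[1][1]
    p11 = P[1][1]
    # Discrete white-noise acceleration: Q = q^2 [[T^4/4, T^3/2], [T^3/2, T^2]]
    q2 = q * q
    Pp = [[p00 + q2 * T**4 / 4, p01 + q2 * T**3 / 2],
          [p10 + q2 * T**3 / 2, p11 + q2 * T**2]]
    return xp, Pp


def gain_axis(Pp: Mat, r: float) -> Tuple[float, Vec]:
    """Innovation variance S = H Pp H^T + r^2 and gain K = Pp H^T / S, with H = [1, 0]."""
    S = Pp[0][0] + r * r
    return S, [Pp[0][0] / S, Pp[1][0] / S]
```

Call `predict_axis` and then `gain_axis` once per axis per frame, for example with T = 1/30 s and q = r = 0.5. This yields the predicted state, the innovation variance S used for gating, and the gain used in the update.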

3.4. Measurement-to-Track Association with Bounding Box Gating
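
The Python sketch below illustrates one plausible form of combined gating. A measurement is accepted only if it passes two tests:

- **Statistical gate.** The squared Mahalanobis distance between the measured and predicted positions is compared with the gate threshold (4 in the parameter table).
- **Bounding-box gate.** The intersection over union (IoU) between the detected box and the box last associated with the track must reach the bbox threshold (0.6 or 0.4).

Both interpretations, and the function names, are assumptions made for illustration. The maximum target speed Smax from the table is not modeled here.

```python
from typing import Sequence

Box = Sequence[float]  # (x_min, y_min, x_max, y_max), e.g. in pixels


def mahalanobis_sq(z: Sequence[float], z_pred: Sequence[float],
                   S: Sequence[float]) -> float:
    """Squared Mahalanobis distance with independent axes (S = per-axis innovation variances)."""
    return sum((zi - p) ** 2 / si for zi, p, si in zip(z, z_pred, S))


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def in_gate(z: Sequence[float], z_pred: Sequence[float], S: Sequence[float],
            box: Box, box_prev: Box, gamma: float = 4.0, box_thr: float = 0.6) -> bool:
    """Accept a measurement only if it passes both gates:
    squared distance <= gamma (assumed) and IoU >= box_thr (assumed)."""
    return mahalanobis_sq(z, z_pred, S) <= gamma and iou(box, box_prev) >= box_thr
```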

3.5. State Estimate and Covariance Update
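
A minimal Python sketch of the standard Kalman state and covariance update for one axis follows. It covers an NCV model with a position-only measurement (H = [1, 0]), under these assumptions:

- Each axis is treated as a decoupled two-state [position, velocity] filter.
- When no measurement falls inside the gate, the predicted state and covariance are kept unchanged. This is a common convention, not a confirmed detail of the paper.

The function names are hypothetical.

```python
from typing import List, Optional, Tuple

Vec = List[float]
Mat = List[List[float]]


def update_axis(xp: Vec, Pp: Mat, z: float, r: float) -> Tuple[Vec, Mat]:
    """Kalman update of one axis.

    xp, Pp: predicted [position, velocity] state and 2x2 covariance;
    z: measured position; r: measurement-noise std.
    """
    S = Pp[0][0] + r * r                  # innovation variance (H = [1, 0])
    k0, k1 = Pp[0][0] / S, Pp[1][0] / S   # Kalman gain
    nu = z - xp[0]                        # innovation
    x = [xp[0] + k0 * nu, xp[1] + k1 * nu]
    # P = (I - K H) Pp
    P = [[(1.0 - k0) * Pp[0][0], (1.0 - k0) * Pp[0][1]],
         [Pp[1][0] - k1 * Pp[0][0], Pp[1][1] - k1 * Pp[0][1]]]
    return x, P


def update_or_coast(xp: Vec, Pp: Mat, z: Optional[float], r: float) -> Tuple[Vec, Mat]:
    """Keep the prediction when no measurement passed the gate (assumed convention)."""
    if z is None:
        return xp, Pp
    return update_axis(xp, Pp, z, r)
```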

3.6. Track-to-Track Association
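
Track-to-track association is illustrated by the following Python sketch, which decides whether a newer track could continue an older one. It assumes:

- The older track's last state is extrapolated over the gap at constant velocity.
- The track-association gate threshold (10 or 20 in the parameter table) is a Euclidean distance in metres.
- The angular threshold (45° or 60°) bounds the difference between the two tracks' headings.

These choices, and the function names, are hypothetical and may differ from the paper's actual criteria.

```python
import math
from typing import Sequence


def heading_diff_deg(v1: Sequence[float], v2: Sequence[float]) -> float:
    """Smallest absolute angle (degrees) between two 2-D velocity vectors."""
    a = math.degrees(math.atan2(v1[1], v1[0]) - math.atan2(v2[1], v2[0]))
    return abs((a + 180.0) % 360.0 - 180.0)


def may_associate(old: Sequence[float], new: Sequence[float], gap_s: float,
                  gate: float = 10.0, max_angle_deg: float = 45.0) -> bool:
    """Test whether a newer track could continue an older one.

    old: last state [x, y, vx, vy] of the earlier track.
    new: first state [x, y, vx, vy] of the later track.
    gap_s: time between the two states, in seconds.
    """
    # Constant-velocity extrapolation of the earlier track over the gap.
    px = old[0] + gap_s * old[2]
    py = old[1] + gap_s * old[3]
    close = math.hypot(new[0] - px, new[1] - py) <= gate
    aligned = heading_diff_deg(old[2:], new[2:]) <= max_angle_deg
    return close and aligned
```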

3.7. Track Termination and Validation Testing
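
Track termination and validity testing can be sketched in Python as follows, under two assumptions:

- A track is terminated once it reaches the maximum searching number (20 frames in the parameter table) of consecutive frames without an associated measurement.
- A track counts as valid only if its life, taken here as the time between its first and last updates, is at least the minimum track life length (2 s).

The class name `TrackLife` and the life-length convention are assumptions.

```python
from dataclasses import dataclass


@dataclass
class TrackLife:
    first_frame: int
    last_update_frame: int
    misses: int = 0           # consecutive frames without an associated measurement
    terminated: bool = False

    def step(self, frame: int, associated: bool, max_misses: int = 20) -> None:
        """Advance one frame; terminate after max_misses consecutive misses."""
        if self.terminated:
            return
        if associated:
            self.last_update_frame = frame
            self.misses = 0
        else:
            self.misses += 1
            if self.misses >= max_misses:
                self.terminated = True

    def is_valid(self, T: float, min_life_s: float = 2.0) -> bool:
        """Valid if the first-to-last-update duration is at least min_life_s seconds."""
        return (self.last_update_frame - self.first_frame) * T >= min_life_s
```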

3.8. Performance Evaluation
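
The metrics reported in the results tables (TTL, MTL and TP) can be computed as in the following Python sketch. It assumes the following definitions, which are an interpretation rather than a quotation from the paper:

- Each track is assigned to the target that supplies most of its measurements.
- A target's TTL is the summed length of its assigned tracks divided by the target's life length.
- A target's MTL is that TTL divided by the number of tracks assigned to it.
- A track's TP is the fraction of its frames whose measurement comes from its assigned target.

Under these definitions MTL equals TTL whenever each target is covered by a single track, which is consistent with the first results table. The input format and function name are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def track_metrics(tracks: List[List[Optional[int]]],
                  target_life: Dict[int, int]) -> Tuple[float, float, float]:
    """Return (avg TTL over targets, avg MTL over targets, avg TP over tracks).

    tracks: one list per track, one entry per frame of the track's life,
        holding the ID of the target whose measurement was used in that
        frame, or None if no true-target measurement was used.
    target_life: target ID -> number of frames in which the target is present.
    """
    length: Counter = Counter()   # summed track length per assigned target
    count: Counter = Counter()    # number of tracks per assigned target
    purities = []
    for ids in tracks:
        real = Counter(i for i in ids if i is not None)
        if not real:              # track never used a true-target measurement
            purities.append(0.0)
            continue
        major, n_major = real.most_common(1)[0]
        purities.append(n_major / len(ids))
        length[major] += len(ids)
        count[major] += 1
    ttl = {t: length[t] / life for t, life in target_life.items()}
    mtl = {t: (ttl[t] / count[t]) if count[t] else 0.0 for t in target_life}
    n_targets = len(target_life)
    return (sum(ttl.values()) / n_targets,
            sum(mtl.values()) / n_targets,
            sum(purities) / len(purities))
```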

4. Results

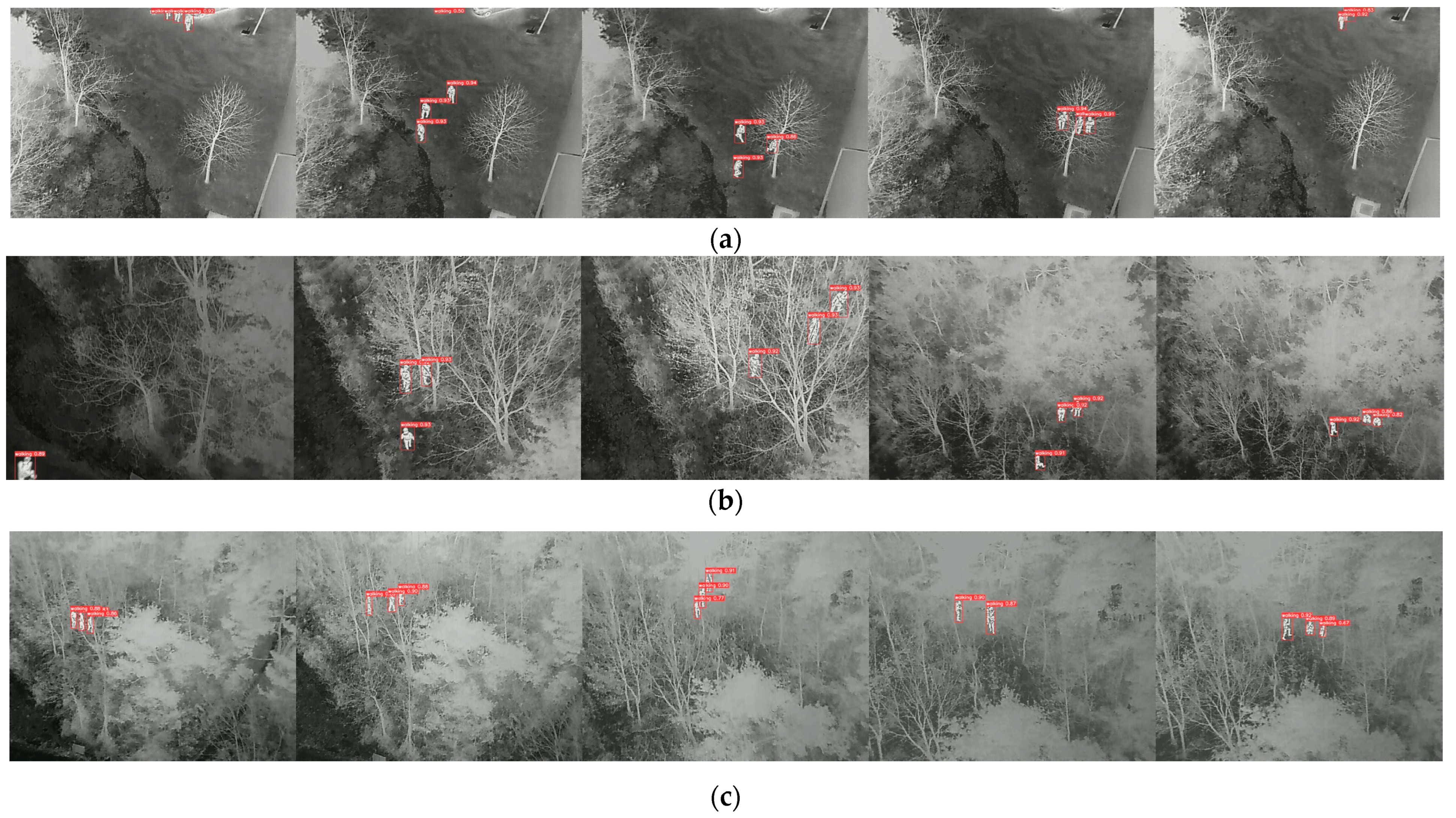

4.1. Video Description

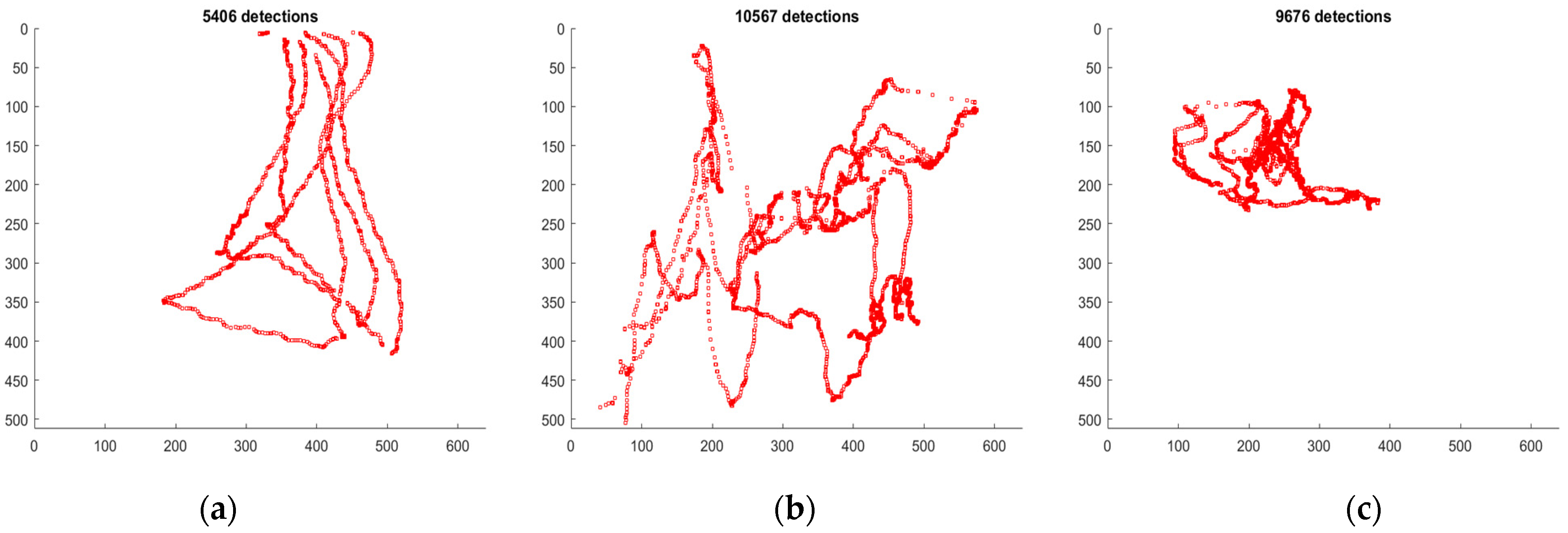

4.2. People Detection by YOLOv5

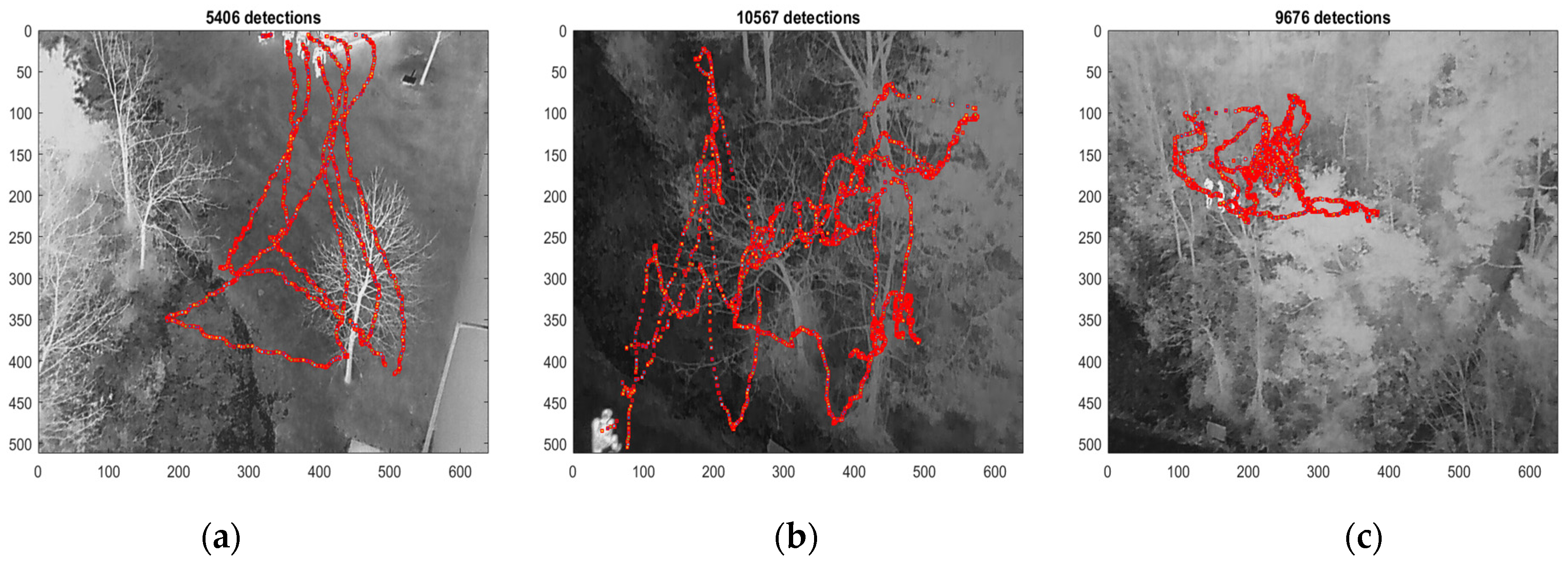

4.3. Multiple-Target Tracking

4.3.1. Parameter Set-Up

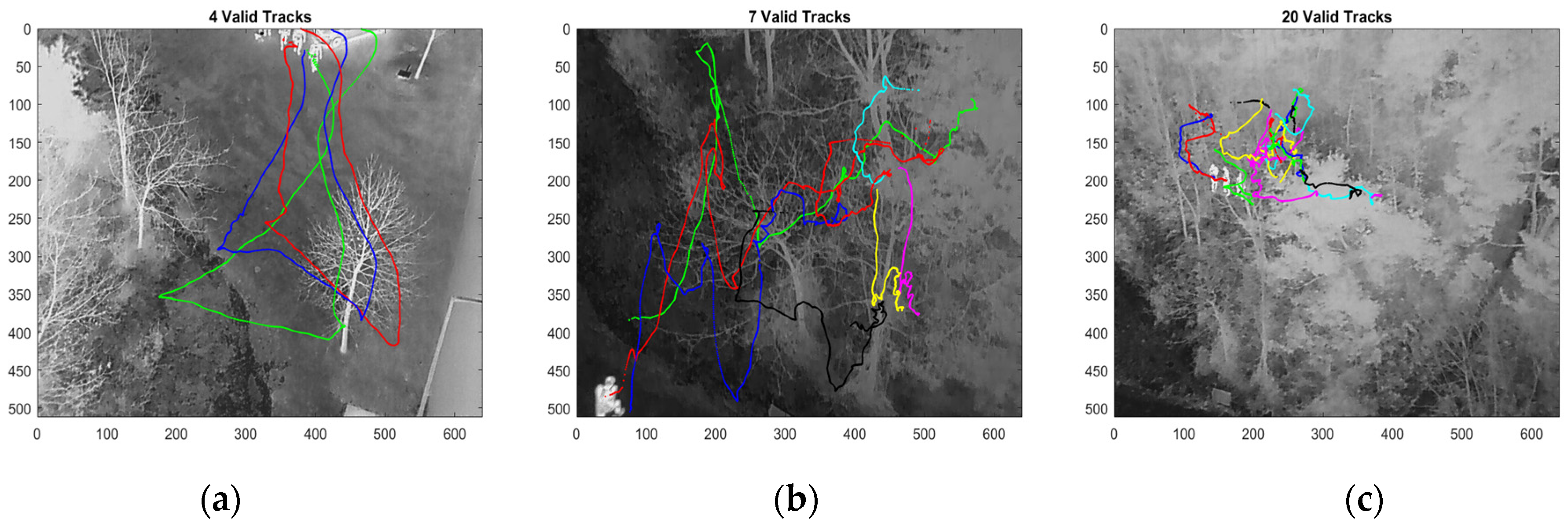

4.3.2. Target Tracking Results

5. Discussion

6. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Alzahrani, B.; Oubbati, O.S.; Barnawi, A.; Atiquzzaman, M.; Alghazzawi, D. UAV assistance paradigm: State-of-the-art in applications and challenges. J. Netw. Comput. Appl. 2020, 166, 102706.

- Vollmer, M.; Mollmann, K.-P. Infrared Thermal Imaging: Fundamentals, Research and Applications; Wiley-VCH: Weinheim, Germany, 2010.

- Havens, K.J.; Sharp, E.J. Thermal Imaging Techniques to Survey and Monitor Animals in the Wild; Academic Press: Cambridge, MA, USA, 2016; ISBN 9780128033845.

- Rudol, P.; Doherty, P. Human Body Detection and Geolocalization for UAV Search and Rescue Missions Using Color and Thermal Imagery. In Proceedings of the 2008 IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2008; pp. 1–8.

- Jamjoum, M.; Siouf, S.; Alzubi, S.; AbdelSalam, E.; Almomani, F.; Salameh, T.; Al Swailmeen, Y. DRONA: A Novel Design of a Drone for Search and Rescue Operations. In Proceedings of the 2023 Advances in Science and Engineering Technology International Conferences (ASET), Dubai, United Arab Emirates, 20–23 February 2023; pp. 1–5.

- Thermal Camera-Equipped UAVs Spot Hard-to-Find Subjects. Available online: https://www.photonics.com/Articles/Thermal_Camera-Equipped_UAVs_Spot_Hard-to-Find/a63435 (accessed on 30 January 2024).

- Gonzalez, L.F.; Montes, H.G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned Aerial Vehicles (UAVs) and Artificial Intelligence Revolutionizing Wildlife Monitoring and Conservation. Sensors 2016, 16, 97.

- Messina, G.; Modica, G. Applications of UAV Thermal Imagery in Precision Agriculture: State of the Art and Future Research Outlook. Remote Sens. 2020, 12, 1491.

- Krišto, M.; Ivasic-Kos, M.; Pobar, M. Thermal Object Detection in Difficult Weather Conditions Using YOLO. IEEE Access 2020, 8, 125459–125476.

- Jiang, C.; Ren, H.; Ye, X.; Zhu, J.; Zeng, H.; Nan, Y.; Sun, M.; Ren, X.; Huo, H. Object detection from UAV thermal infrared images and videos using YOLO models. J. Appl. Earth Obs. Geoinf. 2022, 112, 102912.

- Kannadaguli, P. YOLO v4 Based Human Detection System Using Aerial Thermal Imaging for UAV Based Surveillance Applications. In Proceedings of the 2020 International Conference on Decision Aid Sciences and Application (DASA), Sakheer, Bahrain, 8–9 November 2020; pp. 1213–1219.

- Levin, E.; Zarnowski, A.; McCarty, J.L.; Bialas, J.; Banaszek, A.; Banaszek, S. Feasibility Study of Inexpensive Thermal Sensor and Small UAS Deployment for Living Human Detection in Rescue Missions Application Scenario. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLI-B8, 2016 XXIII ISPRS Congress, Prague, Czech Republic, 12–19 July 2016.

- Teutsch, M.; Mueller, T.; Huber, M.; Beyerer, J. Low Resolution Person Detection with a Moving Thermal Infrared Camera by Hot Spot Classification. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 209–216.

- Giitsidis, T.; Karakasis, E.G.; Gasteratos, A.; Sirakoulis, G.C. Human and Fire Detection from High Altitude UAV Images. In Proceedings of the 2015 23rd Euromicro International Conference on Parallel, Distributed, and Network-Based Processing, Turku, Finland, 4–6 March 2015; pp. 309–315.

- Yeom, S. Moving People Tracking and False Track Removing with Infrared Thermal Imaging by a Multirotor. Drones 2021, 5, 65.

- Leira, F.S.; Helgesen, H.H.; Johansen, T.A.; Fossen, T.I. Object detection, recognition, and tracking from UAVs using a thermal camera. J. Field Robot. 2021, 38, 242–267.

- Helgesen, H.H.; Leira, F.S.; Johansen, T.A. Colored-Noise Tracking of Floating Objects using UAVs with Thermal Cameras. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; pp. 651–660.

- Davis, J.W.; Sharma, V. Background-Subtraction in Thermal Imagery Using Contour Saliency. Int. J. Comput. Vis. 2007, 71, 161–181.

- Soundrapandiyan, R. Adaptive Pedestrian Detection in Infrared Images Using Background Subtraction and Local Thresholding. Procedia Comput. Sci. 2015, 58, 706–713.

- Portmann, J.; Lynen, S.; Chli, M.; Siegwart, R. People detection and tracking from aerial thermal views. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1794–1800.

- He, Y.-J.; Li, M.; Zhang, J.; Yao, J.-P. Infrared target tracking via weighted correlation filter. Infrared Phys. Technol. 2015, 73, 103–114.

- Yu, T.; Mo, B.; Liu, F.; Qi, H.; Liu, Y. Robust thermal infrared object tracking with continuous correlation filters and adaptive feature fusion. Infrared Phys. Technol. 2019, 98, 69–81.

- Yuan, D.; Shu, X.; Liu, Q.; Zhang, X.; He, Z. Robust thermal infrared tracking via an adaptively multi-feature fusion model. Neural Comput. Appl. 2022, 35, 3423–3434.

- Gade, R.; Moeslund, T.B. Thermal Tracking of Sports Players. Sensors 2014, 14, 13679–13691.

- El Ahmar, W.A.; Kolhatkar, D.; Nowruzi, F.E.; AlGhamdi, H.; Hou, J.; Laganiere, R. Multiple Object Detection and Tracking in the Thermal Spectrum. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–24 June 2022; pp. 276–284.

- Liu, Q.; Lu, X.; He, Z.; Zhang, C.; Chen, W.-S. Deep convolutional neural networks for thermal infrared object tracking. Knowl.-Based Syst. 2017, 134, 189–198.

- Available online: https://github.com/ultralytics/yolov5 (accessed on 30 January 2024).

- Yeom, S.; Nam, D.-H. Moving Vehicle Tracking with a Moving Drone Based on Track Association. Appl. Sci. 2021, 11, 4046.

- Yeom, S. Long Distance Moving Vehicle Tracking with a Multirotor Based on IMM-Directional Track Association. Appl. Sci. 2021, 11, 11234.

- Yeom, S. Long Distance Ground Target Tracking with Aerial Image-to-Position Conversion and Improved Track Association. Drones 2022, 6, 55.

- Available online: https://github.com/HumanSignal/labelImg (accessed on 30 January 2024).

- Bar-Shalom, Y.; Li, X.R. Multitarget-Multisensor Tracking: Principles and Techniques; YBS Publishing: Storrs, CT, USA, 1995.

- Yeom, S.-W.; Kirubarajan, T.; Bar-Shalom, Y. Track segment association, fine-step IMM and initialization with Doppler for improved track performance. IEEE Trans. Aerosp. Electron. Syst. 2004, 40, 293–309.

- Available online: https://github.com/ultralytics/ultralytics (accessed on 30 January 2024).

| | | Video 1 | Video 2 | Video 3 |
|---|---|---|---|---|
| Descriptions | Num. of frames (duration) | 1801 (1 min) | 3601 (2 min) | 3601 (2 min) |
| | Num. of objects (people) | 4 | 3 | 3 |
| | Num. of instances | 6000 | 10,652 | 10,800 |
| YOLOv5s | Num. of detections | 5760 | 10,492 | 9143 |
| | Num. of detections over 0.5 conf. level | 5176 | 10,283 | 8425 |
| | Recall over 0.5 conf. level | 0.863 | 0.965 | 0.780 |
| YOLOv5l | Num. of detections | 6634 | 10,610 | 9693 |
| | Num. of detections over 0.5 conf. level | 5811 | 10,474 | 9248 |
| | Recall over 0.5 conf. level | 0.969 | 0.983 | 0.856 |
| YOLOv5x | Num. of detections | 5734 | 10,699 | 9947 |
| | Num. of detections over 0.5 conf. level | 5406 | 10,567 | 9676 |
| | Recall over 0.5 conf. level | 0.901 | 0.992 | 0.862 |
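
The recall entries in the detection table are consistent with dividing the number of detections above the 0.5 confidence level by the number of annotated instances. The short Python check below reproduces the YOLOv5s row under that assumption, which also treats every confident detection as a true positive. The function name is hypothetical.

```python
def recall(n_confident: int, n_instances: int) -> float:
    """Recall at a confidence threshold, assuming each detection above the
    threshold corresponds to a distinct annotated instance."""
    return n_confident / n_instances


# YOLOv5s counts from the detection table: (detections over 0.5 conf., instances)
yolov5s = {"Video 1": (5176, 6000), "Video 2": (10283, 10652), "Video 3": (8425, 10800)}
for video, (n_conf, n_inst) in yolov5s.items():
    print(video, f"{recall(n_conf, n_inst):.3f}")
# Video 1 0.863
# Video 2 0.965
# Video 3 0.780
```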

| | Parameters (Unit) | Video 1 | Video 2 | Video 3 |
|---|---|---|---|---|
| | Sampling Time (second) | 1/15 | 1/30 | 1/30 |
| | Max. initial target speed, Vmax (m/s) | 3 | 3 | 3 |
| | Process noise std. (m/s²) | 0.5 | 2.5 | 2.5 |
| | Measurement noise std. (m) | 0.5 | 0.5 | 0.5 |
| Measurement association | Max. target speed, Smax (m/s) | 10 | 20 | 10 |
| | Gate threshold | 4 | 4 | 4 |
| | Bbox threshold | 0.6 | 0.4 | 0.4 |
| Track association | Gate threshold | 10 | 20 | 10 |
| | Angular threshold (degree) | 45° | 60° | 45° |
| Track termination | Maximum searching number (frame) | 20 | 20 | 20 |
| | Min. track life length for track validity (second) | 2 | 2 | 2 |

| | Case 1 | Case 2 | Case 3 |
|---|---|---|---|
| Num. of Tracks | 3 | 3 | 3 |
| Avg. TTL | 0.987 | 0.982 | 1 |
| Avg. MTL | 0.987 | 0.982 | 1 |
| Avg. TP | 1 | 0.991 | 1 |

| | Case 1 | Case 2 | Case 3 |
|---|---|---|---|
| Num. of Tracks | 7 | 7 | 18 |
| Avg. TTL | 0.993 | 0.945 | 0.943 |
| Avg. MTL | 0.442 | 0.417 | 0.193 |
| Avg. TP | 0.999 | 0.954 | 0.963 |

| | Case 1 | Case 2 | Case 3 |
|---|---|---|---|
| Num. of Tracks | 20 | 22 | 29 |
| Avg. TTL | 0.894 | 0.878 | 0.890 |
| Avg. MTL | 0.151 | 0.130 | 0.097 |
| Avg. TP | 0.995 | 0.995 | 0.986 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yeom, S. Thermal Image Tracking for Search and Rescue Missions with a Drone. Drones 2024, 8, 53. https://doi.org/10.3390/drones8020053
