Multiple-Target Matching Algorithm for SAR and Visible Light Image Data Captured by Multiple Unmanned Aerial Vehicles

Abstract

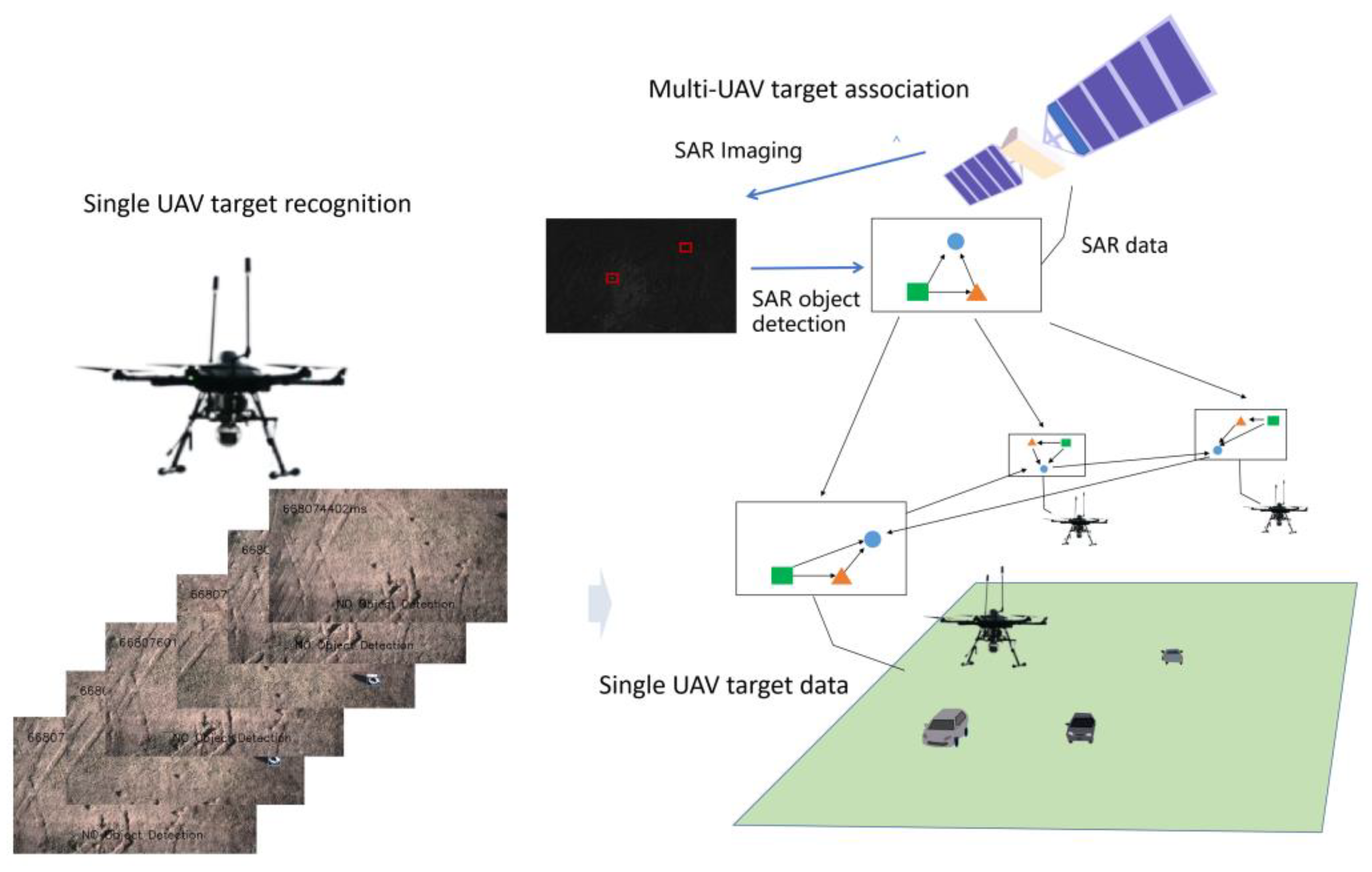

1. Introduction

- An improved triplet loss function was constructed to effectively assess the similarity of targets detected by multiple UAVs.

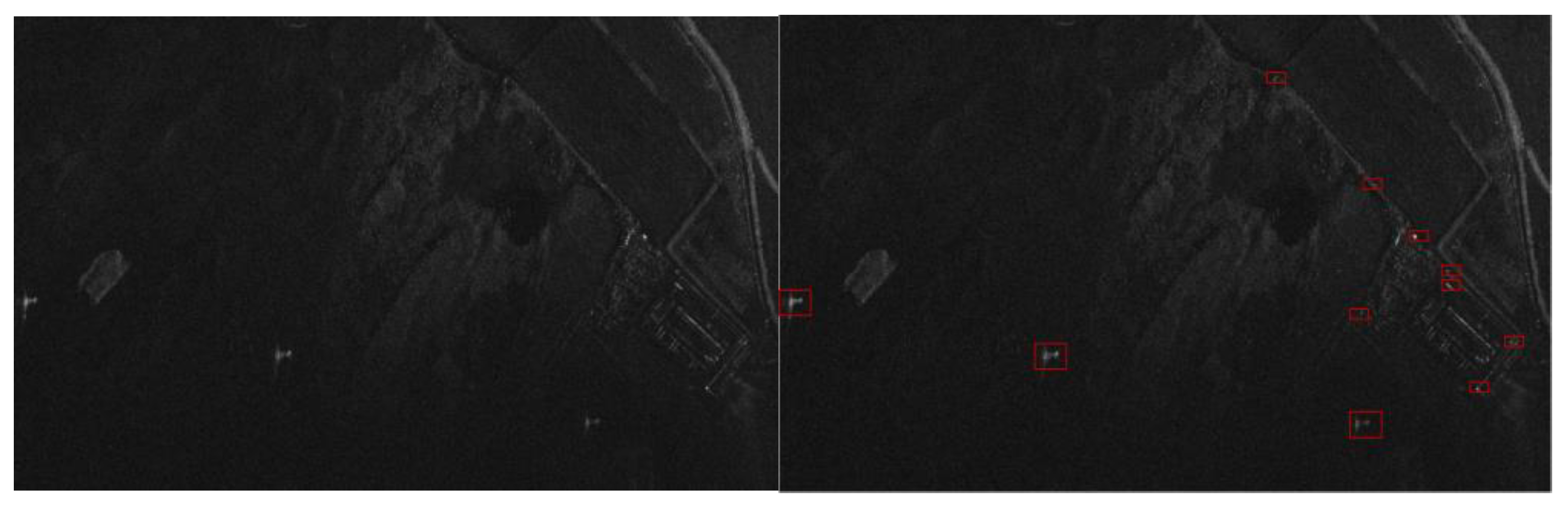

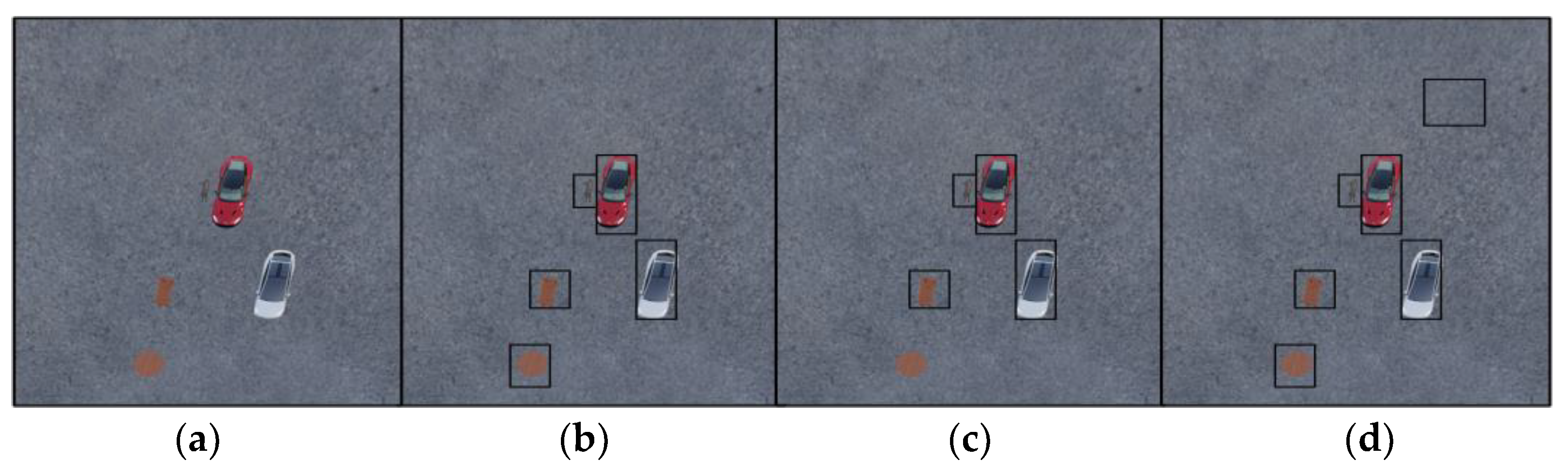

- A consistency discrimination algorithm based on distributed computing was proposed for targets observed from multiple perspectives. On UAVs equipped with optical sensors, the algorithm uses optical image features and the relative relationships between targets to discriminate consistent targets even when the false alarm rate is high. On UAVs equipped with SAR sensors, it combines local situational information from SAR detection with optical image detections, so that consistency is judged from a global perspective.

- A multi-UAV multi-target detection database was established and the core code was released as open source, supporting the training and validation of algorithms for this scenario.
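The first contribution mentions an improved triplet loss, but this section does not describe how it was modified. For orientation only, the sketch below implements the standard triplet margin loss, max(d(a, p) - d(a, n) + m, 0) averaged over a batch with Euclidean distance d. The function names and the margin m = 0.3 are illustrative assumptions, not the authors' settings.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def euclidean(u: Vector, v: Vector) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchors, positives, negatives, margin: float = 0.3) -> float:
    """Standard triplet margin loss averaged over a batch (not the paper's variant).

    Each triplet contributes max(d(a, p) - d(a, n) + margin, 0): the anchor
    should be closer to the positive (the same target seen by another UAV)
    than to the negative (a different target) by at least `margin`.
    """
    if not (len(anchors) == len(positives) == len(negatives)) or not anchors:
        raise ValueError("need equally sized, non-empty batches")
    total = 0.0
    for a, p, n in zip(anchors, positives, negatives):
        total += max(euclidean(a, p) - euclidean(a, n) + margin, 0.0)
    return total / len(anchors)


if __name__ == "__main__":
    # Toy 2-D embeddings: the first triplet is well separated,
    # the second violates the margin.
    a = [[0.0, 0.0], [0.0, 0.0]]
    p = [[0.1, 0.0], [1.0, 0.0]]
    n = [[1.0, 0.0], [0.5, 0.0]]
    print(triplet_loss(a, p, n))  # about 0.4: only the second triplet contributes
```

Triplets that already satisfy the margin contribute zero, so training effort concentrates on pairs the embedding still confuses.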

2. Related Work

2.1. Object Detection Algorithms

2.2. Data Association for Multi-Target and Multi-Camera Tracking

3. Proposed Algorithm

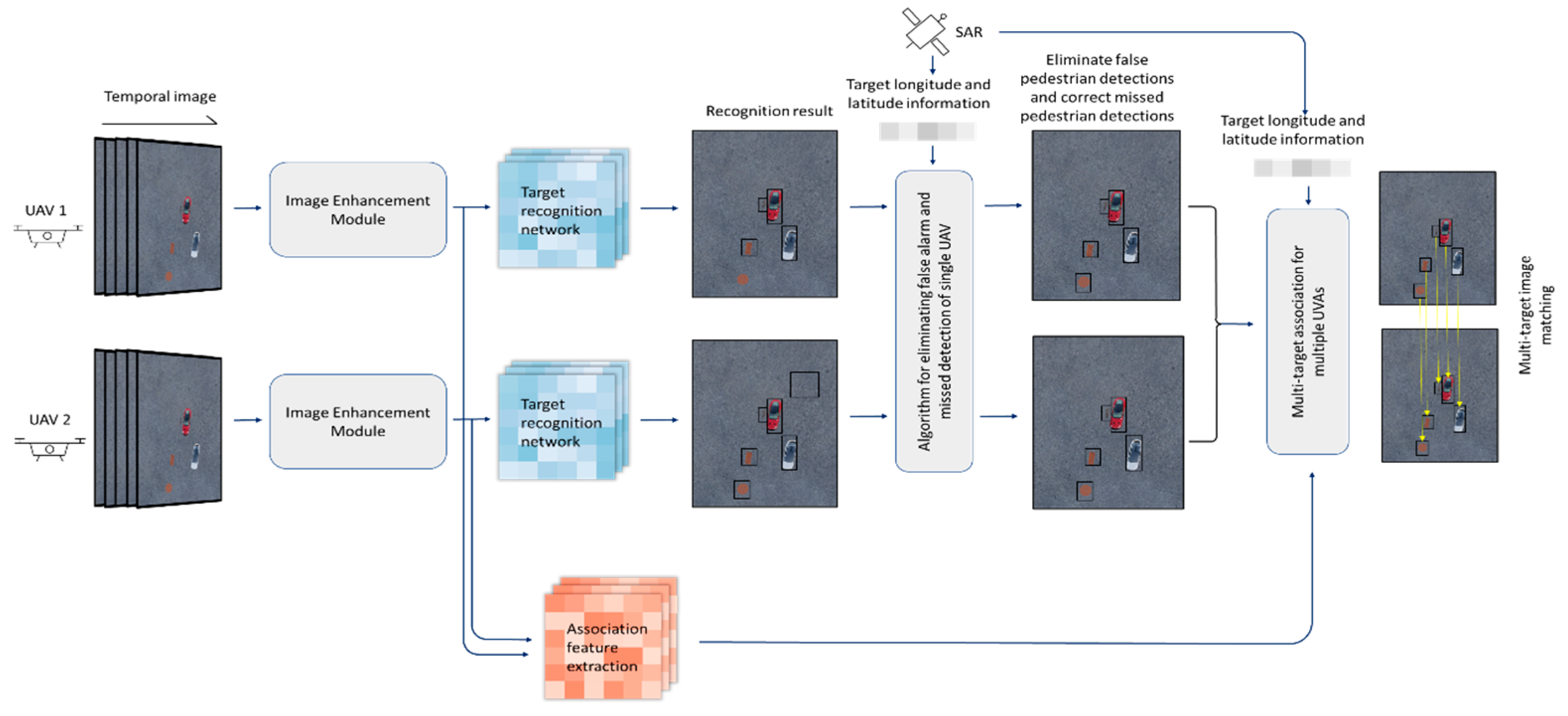

3.1. Image Enhancement

3.2. Target Recognition

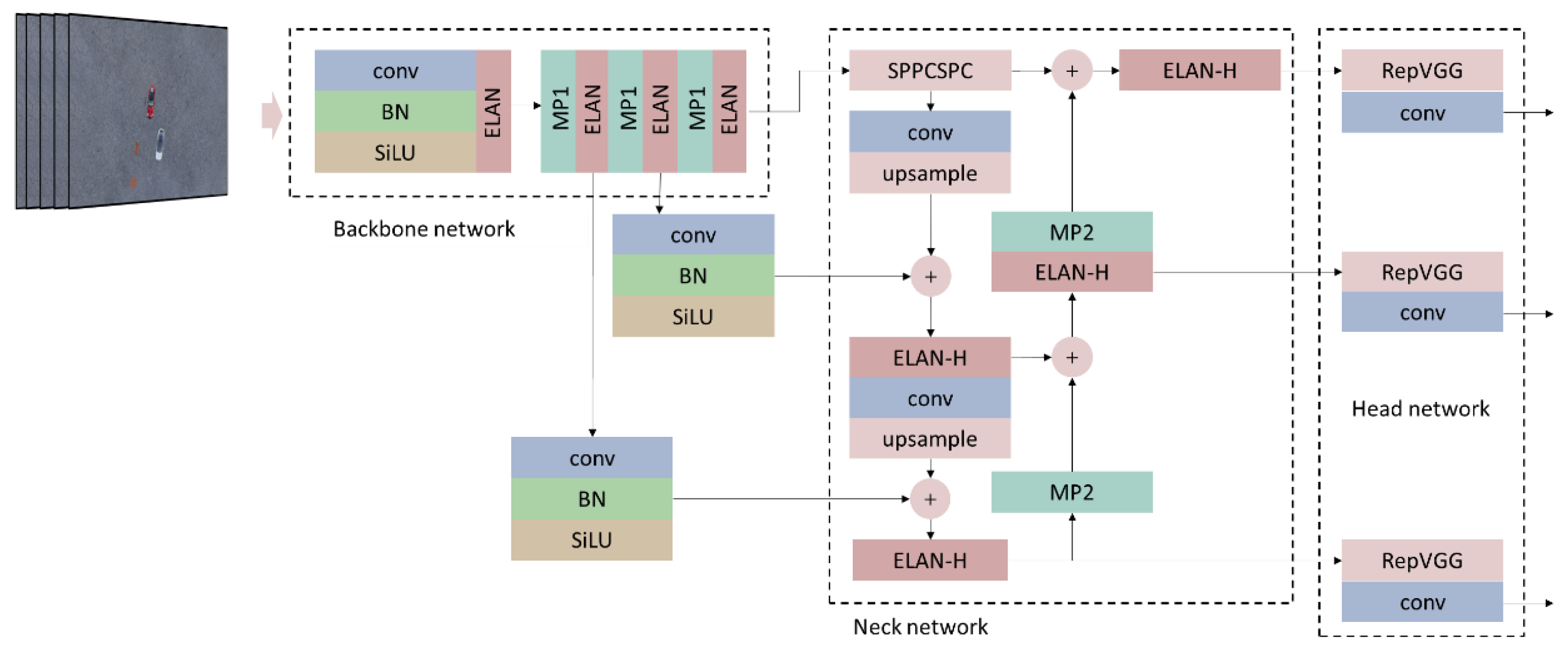

3.2.1. Backbone Network

3.2.2. Neck Network

3.2.3. Head Network

3.3. Association Feature Extraction

3.4. Matching Based on Individual UAVs

Algorithm 1 Multi-target matching process for individual UAVs that eliminates false pedestrian detections and corrects missed pedestrian detections

1. Input: multi-target recognition images, and features extracted from the target recognition network and corresponding SAR data
2. Apply input to define a global array with all pertinent multi-target information
3. While not the last video frame captured by the i-th UAV
4. Define array with information pertaining to a given target detected by the i-th UAV in each frame t (t = 1, 2, 3, …)
5. Calculate the IoU value of the target border information stored in and the target border information stored in according to Equation (1)
6. If IoU > α
7. Take the largest calculated IoU value corresponding to the target in
8. Increment for the corresponding target in by 1
9. If the confidence of the target classification in is less than or equal to the corresponding confidence in
10. Update the target information in with the information in
11. Else if IoU ≤ α
12. Add target information in as an element
13. For each target in
14. If ≥ 3 in 5 consecutive frames
15. Set this target as the subject of query
16. Calculate the relative position relationships between this target and the remaining n targets in
17. Compare these relative positions with the corresponding relative positions of the points in the SAR data based on the defined in Equation (2)
18. If < β
19. The target in SAR data is a false detection
20. Else
21. Find the target in the SAR data with the largest and use it as the final matching result of the target to be associated with the UAV image data
22. Else
23. Remove the target from
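Steps 5 to 14 of Algorithm 1 associate detections across frames by IoU, keep the more confident observation of each target, and retain a target only if it recurs often enough. Equation (1) is not reproduced in this section, so the sketch assumes it is the standard IoU of axis-aligned (x1, y1, x2, y2) boxes. The `Track`, `update`, and `prune` names, the value α = 0.5, and the sliding-window reading of "≥ 3 in 5 consecutive frames" are hypothetical choices for illustration. The SAR comparison in steps 16 to 21 is not covered.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): assumed box layout


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (assumed form of Eq. (1))."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    """Hypothetical entry of the global target array."""
    box: Box
    label: str
    conf: float
    hits: Deque[bool] = field(default_factory=lambda: deque(maxlen=5))

    def confirmed(self) -> bool:
        # Sliding-window reading of "detected in >= 3 of 5 consecutive frames".
        return sum(self.hits) >= 3


def update(tracks: List[Track], detections: List[Track], alpha: float = 0.5) -> None:
    """Merge one frame of detections into `tracks` in place."""
    n_old = len(tracks)
    seen = [False] * n_old
    new_tracks = []
    for det in detections:
        scores = [iou(tracks[i].box, det.box) for i in range(n_old)]
        best = max(range(n_old), key=scores.__getitem__, default=None)
        if best is not None and scores[best] > alpha:
            seen[best] = True
            t = tracks[best]
            if t.conf <= det.conf:  # keep the more confident observation
                t.box, t.label, t.conf = det.box, det.label, det.conf
        else:  # IoU <= alpha against every stored target: start a new entry
            det.hits.append(True)
            new_tracks.append(det)
    for t, hit in zip(tracks, seen):  # record hit/miss for pre-existing targets
        t.hits.append(hit)
    tracks.extend(new_tracks)


def prune(tracks: List[Track]) -> List[Track]:
    """Drop targets that failed the 3-of-5 test once 5 frames have been seen."""
    return [t for t in tracks if t.confirmed() or len(t.hits) < 5]


if __name__ == "__main__":
    tracks: List[Track] = []
    for frame in range(5):
        dets = [Track((10, 10, 50, 50), "van", 0.8)]
        if frame == 0:  # a one-off detection, i.e. a likely false alarm
            dets.append(Track((200, 200, 240, 240), "bus", 0.4))
        update(tracks, dets)
    print([t.label for t in prune(tracks)])  # ['van']
```

Comparing each new detection only against targets that existed before the current frame prevents two detections in the same frame from merging with each other.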

3.5. Matching Based on Multiple UAVs

Algorithm 2 Multi-target association algorithm for multiple UAVs

0. Input: multi-target association features obtained from the association feature extraction network and corresponding SAR data
1. Set = number of targets detected by UAV 1
2. Set = number of targets detected by UAV 2
3. If ≤ 1
4. No positional relationship matching possible
5. If > 1
6. Set between the apparent features of all targets detected by UAV 1 and UAV 2
7. If S > α, matching successful
8. Set the target category according to the category with the greatest confidence among the two detection results
9. Match the relative positions of UAV 2 targets with the corresponding targets in the SAR data one by one
10. Else
11. No action taken
12. If = 1
13. between the apparent features of all targets detected by UAV 1 and UAV 2
14. If S > α, matching successful
15. Set the target category according to the category with the greatest confidence among the two detection results
16. Match the relative positions of UAV 1 targets with the corresponding targets in the SAR data one by one
17. Else
18. No action taken
19. If > 1
20. Set between the apparent features of all targets detected by UAV 1 and UAV 2
21.
22. If > γ
23. Set the target category according to the category with the greatest confidence among the two detection results
24. If < δ, matching has failed
25. Discard all target information
26. If δ < γ
27. Verification based on the value of calculated for the relative position vector obtained between the selected target and the other targets of the two UAVs
28. If > ε
29. Two targets are matched. Set the target category according to the category with the greatest confidence among the two detection results
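Steps 19 to 29 of Algorithm 2 accept a cross-UAV match when the best appearance similarity exceeds γ, reject it below δ, and fall back to a relative-position check against ε in between. The symbols for the similarity measures were lost from this section, so the sketch below assumes cosine similarity for both the appearance features and the relative-position vectors. The function names, the threshold defaults, and the nearest-direction pairing of position vectors are hypothetical choices for illustration, and the comparison assumes both UAV images share roughly the same orientation.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec = Sequence[float]


def cosine(u: Vec, v: Vec) -> float:
    """Cosine similarity; 0.0 when either vector has zero length."""
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0 or nv == 0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def best_pair(feats1: List[Vec], feats2: List[Vec]) -> Tuple[int, int, float]:
    """(i, j, S): the UAV 1 / UAV 2 target pair with the largest appearance similarity."""
    return max(((i, j, cosine(f, g)) for i, f in enumerate(feats1)
                for j, g in enumerate(feats2)), key=lambda t: t[2])


def relative_score(c1: List[Vec], i: int, c2: List[Vec], j: int) -> float:
    """Agreement of relative position vectors around target i (UAV 1) and j (UAV 2).

    Hypothetical measure: each vector from target i to another UAV 1 target is
    scored against its most similar counterpart around target j, then averaged.
    """
    rel1 = [(p[0] - c1[i][0], p[1] - c1[i][1]) for k, p in enumerate(c1) if k != i]
    rel2 = [(p[0] - c2[j][0], p[1] - c2[j][1]) for k, p in enumerate(c2) if k != j]
    if not rel1 or not rel2:
        return 0.0  # fewer than two targets: no positional relationship to check
    return sum(max(cosine(r, s) for s in rel2) for r in rel1) / len(rel1)


def match(feats1, centers1, feats2, centers2,
          gamma: float = 0.8, delta: float = 0.5,
          eps: float = 0.9) -> Optional[Tuple[int, int]]:
    """Return the matched (i, j) pair, or None when matching fails.

    Threshold defaults are placeholders, not values from the paper.
    """
    i, j, s = best_pair(feats1, feats2)
    if s > gamma:   # confident appearance match
        return i, j
    if s < delta:   # too dissimilar: discard
        return None
    # Ambiguous band: verify using the layout of the surrounding targets.
    return (i, j) if relative_score(centers1, i, centers2, j) > eps else None


if __name__ == "__main__":
    f1 = [[1.0, 0.0], [0.0, -1.0]]
    f2 = [[1.0, 1.0], [-1.0, 0.0]]  # best appearance score is about 0.71
    c1 = [(0.0, 0.0), (10.0, 0.0)]
    print(match(f1, c1, f2, [(100.0, 100.0), (110.0, 100.0)]))  # (0, 0): same layout
    print(match(f1, c1, f2, [(100.0, 100.0), (100.0, 110.0)]))  # None: layout differs
```

The appearance score alone decides clear cases; the layout check is spent only on ambiguous pairs, which keeps the common path cheap.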

4. Experiments

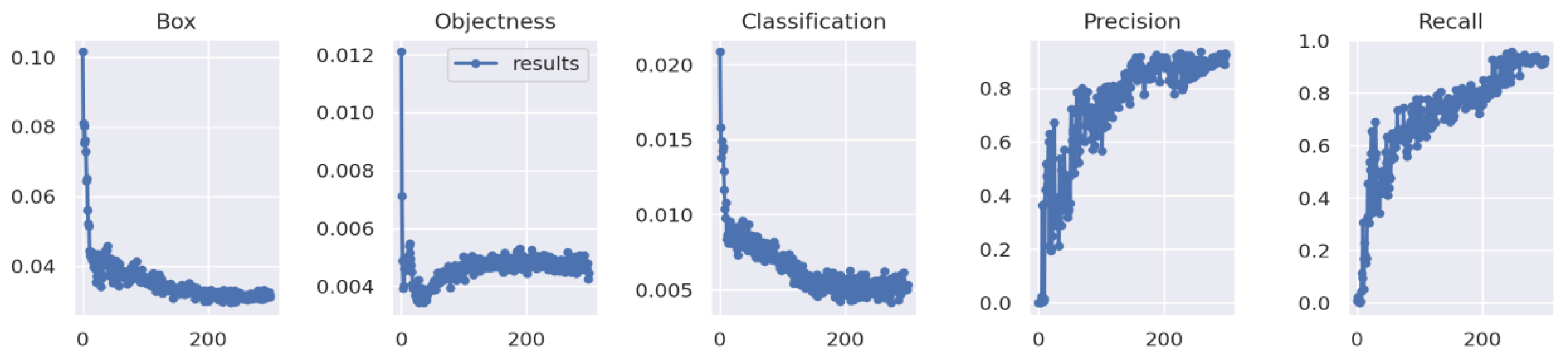

4.1. Training the Model

4.2. Datasets

4.3. Experimental Conditions

4.4. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Case | UAV 1 FP Rate | UAV 1 FN Rate | UAV 2 FP Rate | UAV 2 FN Rate |
|---|---|---|---|---|
| 1 | 24.00 | 0.00 | 25.17 | 0.00 |
| 2 | 24.00 | 16.67 | 25.17 | 16.67 |
| 3 | 33.50 | 0.00 | 31.33 | 0.00 |
| 4 | 33.50 | 16.67 | 31.33 | 16.67 |

| Case | UAV 1 Large-Range Random | UAV 1 Small-Range Random | UAV 2 Large-Range Random | UAV 2 Small-Range Random |
|---|---|---|---|---|
| 1 | 30.54 | 69.46 | 33.77 | 66.23 |
| 2 | 30.54 | 69.46 | 33.77 | 16.67 |
| 3 | 50.24 | 49.76 | 30.54 | 49.76 |
| 4 | 50.24 | 49.76 | 50.24 | 49.76 |

| Category | Abscissa Fluctuation of Center Point | Longitudinal Coordinate Fluctuation of Center Point | Width Fluctuation | Height Fluctuation | |
|---|---|---|---|---|---|
| Large fluctuation | 0–4 | 10–300 | 10–100 | 5–40 | 5–40 |
| Small fluctuation | 3 | 20–23 | 100–105 | 35–40 | 35–40 |

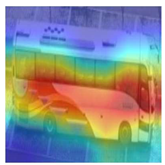

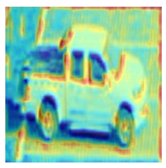

Image table (columns: Bus, Box Truck, Pickup Truck, Van)

| Model | mAP | Rank-1 | Rank-5 | Rank-10 |
|---|---|---|---|---|
| Proposed | 0.384 | 0.609 | 0.74 | 0.87 |
| MobileNetV3 | 0.245 | 0.174 | 0.348 | 0.566 |
| ShuffleNetV2 | 0.196 | 0.174 | 0.304 | 0.435 |
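The table reports mAP and Rank-1/5/10, the usual re-identification metrics. The sketch below shows how these are commonly computed from a query-by-gallery distance matrix. It is a minimal version that assumes every query has at least one correct gallery match and omits protocol details such as same-camera filtering that benchmarks often apply, so it should not be read as the evaluation code behind the table.

```python
from typing import Dict, List, Sequence, Tuple


def rank_k_and_map(dist: List[List[float]], q_ids: Sequence[int],
                   g_ids: Sequence[int],
                   ks: Tuple[int, ...] = (1, 5, 10)) -> Tuple[Dict[str, float], float]:
    """CMC Rank-k accuracy and mean average precision (mAP).

    dist[q][g] is the distance between query q and gallery item g (smaller
    means more similar). Every query must have at least one true match.
    """
    hits = {k: 0 for k in ks}
    ap_sum = 0.0
    for q, row in enumerate(dist):
        order = sorted(range(len(row)), key=row.__getitem__)  # nearest first
        matches = [g_ids[g] == q_ids[q] for g in order]
        first = matches.index(True)  # 0-based rank of the first correct match
        for k in ks:
            if first < k:
                hits[k] += 1
        # Average precision: mean of precision@r over every rank r holding a match.
        correct, precisions = 0, []
        for r, is_match in enumerate(matches, start=1):
            if is_match:
                correct += 1
                precisions.append(correct / r)
        ap_sum += sum(precisions) / len(precisions)
    n = len(dist)
    return {f"Rank-{k}": hits[k] / n for k in ks}, ap_sum / n


if __name__ == "__main__":
    dist = [[0.1, 0.5, 0.9],   # query of identity 1
            [0.2, 0.3, 0.4]]   # query of identity 2
    ranks, m_ap = rank_k_and_map(dist, q_ids=[1, 2], g_ids=[1, 2, 1])
    print(ranks, round(m_ap, 3))  # {'Rank-1': 0.5, 'Rank-5': 1.0, 'Rank-10': 1.0} 0.667
```

Rank-k only asks whether the first correct match appears in the top k, while mAP also penalizes correct matches that are ranked low, which is why the two columns can order models differently.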

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, H.; Zheng, J.; Song, C. Multiple-Target Matching Algorithm for SAR and Visible Light Image Data Captured by Multiple Unmanned Aerial Vehicles. Drones 2024, 8, 83. https://doi.org/10.3390/drones8030083
