Evaluation of Mosaic Image Quality and Analysis of Influencing Factors Based on UAVs

Abstract

1. Introduction

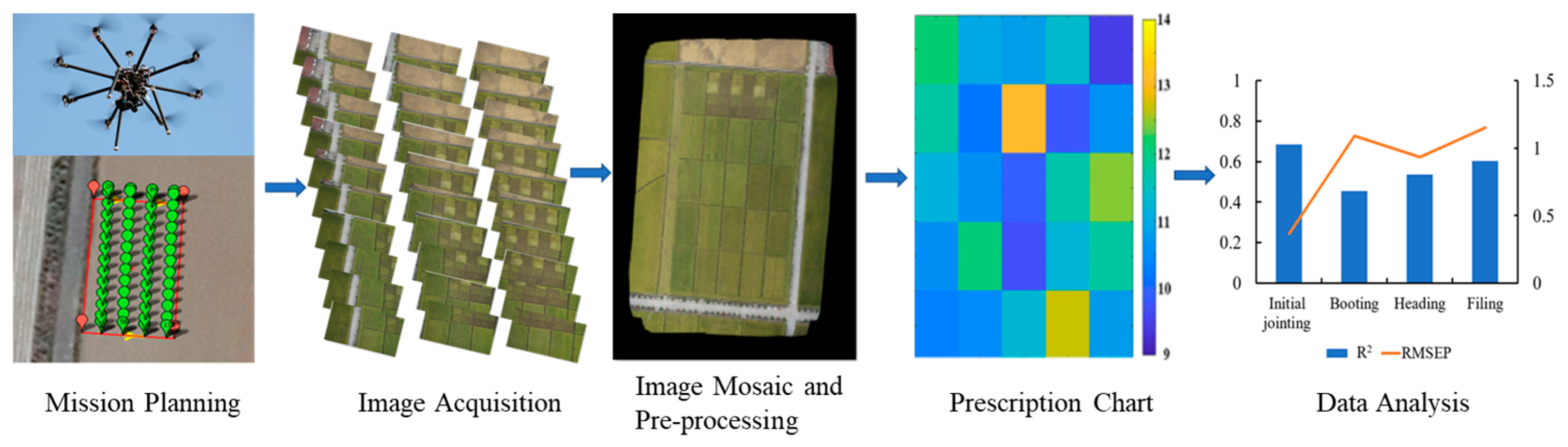

2. Materials and Methods

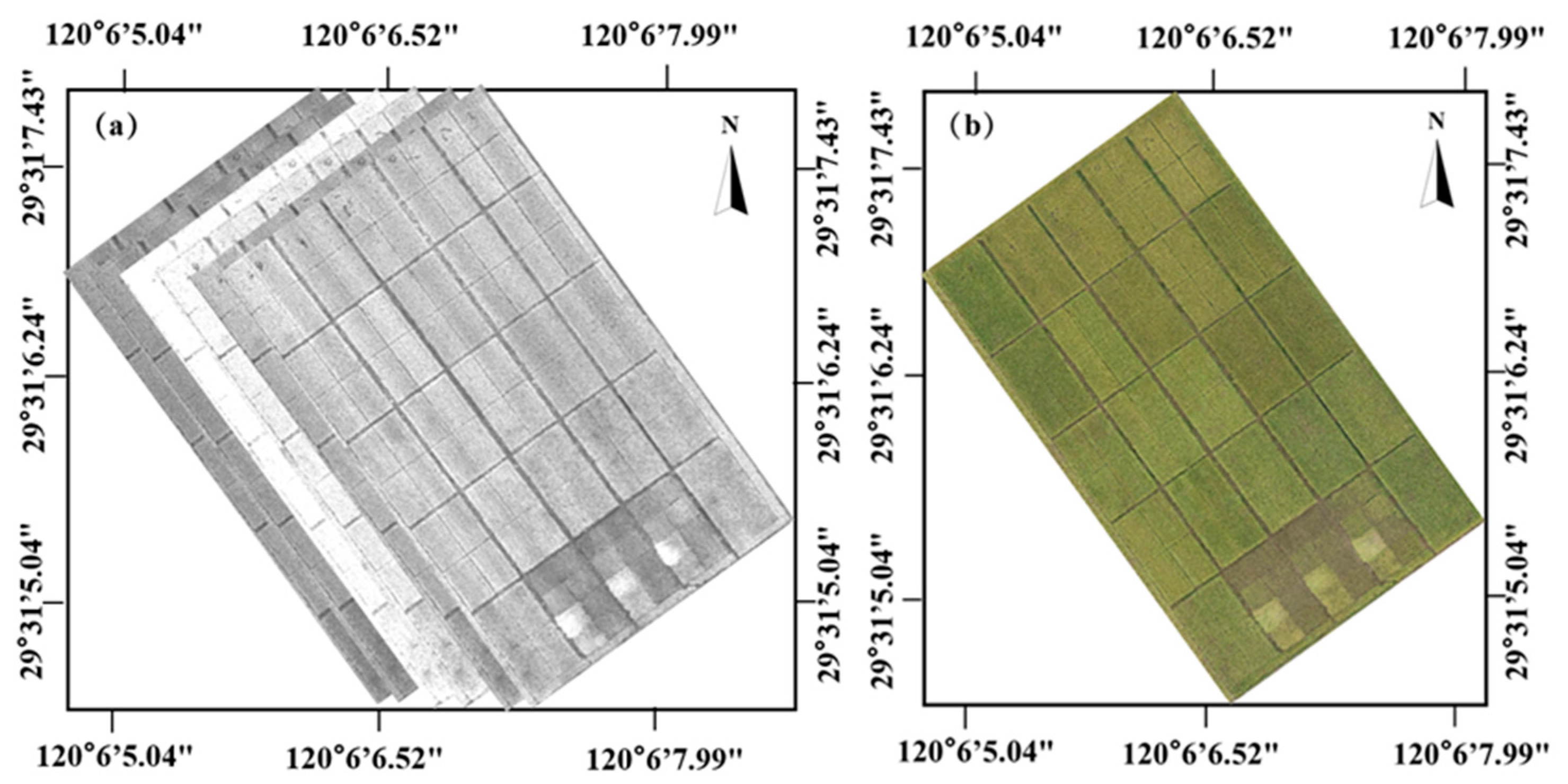

2.1. Image Acquisition

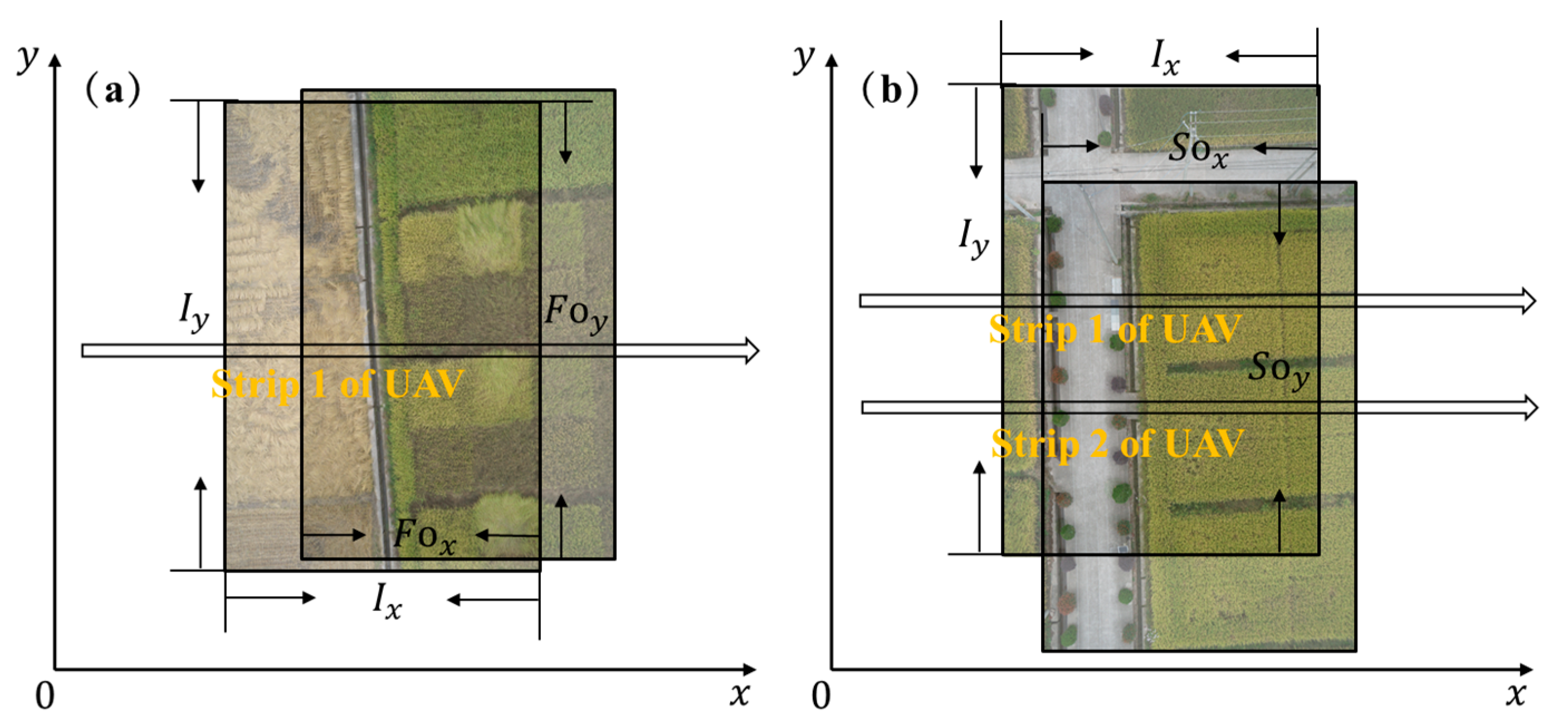

2.2. Overlap Calculation

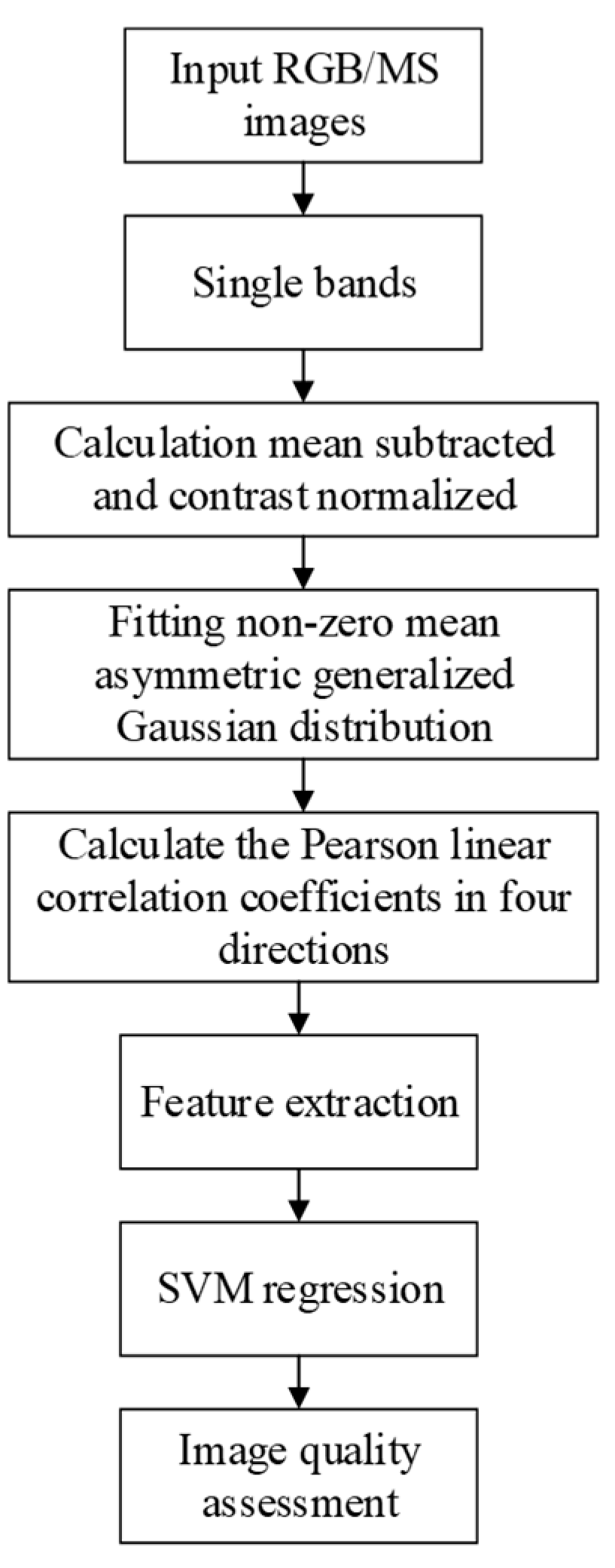
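
As a point of reference for this subsection, a common first-order way to relate overlap to flight geometry is sketched below. It is not necessarily the procedure used in this study. It assumes a nadir-looking pinhole camera over flat terrain. One image covers a ground length of sensor size × altitude / focal length along each axis. Two images whose centers are a distance B apart then overlap by 1 − B / footprint. The 50 m altitude and the choice of the sensor's short side as the along-track axis are hypothetical. Only the RGB sensor size, focal length and the 75 %/60 % overlap setting come from the tables in this paper.

```python
def ground_footprint_m(sensor_mm, focal_mm, altitude_m):
    """Ground length covered by one sensor dimension (nadir view, flat terrain)."""
    return sensor_mm * altitude_m / focal_mm


def spacing_for_overlap(footprint_m, overlap):
    """Distance between exposures (or flight lines) that yields the given overlap fraction."""
    return footprint_m * (1.0 - overlap)


def overlap_from_spacing(footprint_m, spacing_m):
    """Overlap fraction of two images whose centers are spacing_m apart."""
    return max(0.0, 1.0 - spacing_m / footprint_m)


ALTITUDE_M = 50.0  # hypothetical flight altitude, for illustration only
# Assumption: the 15.6 mm sensor side is along track and the 23.4 mm side across track.
along_track = ground_footprint_m(15.6, 16.0, ALTITUDE_M)
across_track = ground_footprint_m(23.4, 16.0, ALTITUDE_M)

trigger_spacing = spacing_for_overlap(along_track, 0.75)  # 75 % forward overlap
line_spacing = spacing_for_overlap(across_track, 0.60)    # 60 % side overlap
print(f"trigger every {trigger_spacing:.2f} m, flight lines {line_spacing:.2f} m apart")
```

Inverting the relation with `overlap_from_spacing` is the kind of step needed when the actual overlap is estimated from the recorded positions of consecutive images rather than from the planned values.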

2.3. Image Evaluation

2.3.1. Conventional Image Quality Evaluation

2.3.2. BRISQUE Algorithm
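
BRISQUE (Mittal, Moorthy and Bovik, 2012) builds its features on mean-subtracted contrast-normalized (MSCN) coefficients. Each pixel has its Gaussian-weighted local mean removed and is then divided by its local standard deviation plus a stabilizing constant C. The pure-Python sketch below computes only this first step, using the commonly used settings (7×7 window, σ = 7/6, C = 1 for a 0 to 255 intensity scale). The generalized Gaussian fits and the support vector regressor of the full model are omitted. Handling borders by edge replication is an assumption of this sketch. It illustrates the technique and is not the implementation used in this study.

```python
import math


def gaussian_window(size=7, sigma=7 / 6):
    """Normalized 2-D Gaussian weights (7x7, sigma = 7/6 as commonly used for BRISQUE)."""
    half = size // 2
    w = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
          for x in range(-half, half + 1)]
         for y in range(-half, half + 1)]
    total = sum(sum(row) for row in w)
    return [[v / total for v in row] for row in w]


def local_mean(img, window):
    """Gaussian-weighted local mean; borders use edge replication (an assumption)."""
    h, w = len(img), len(img[0])
    half = len(window) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-half, half + 1):
                ii = min(max(i + di, 0), h - 1)
                for dj in range(-half, half + 1):
                    jj = min(max(j + dj, 0), w - 1)
                    acc += window[di + half][dj + half] * img[ii][jj]
            out[i][j] = acc
    return out


def mscn(img, c=1.0):
    """MSCN coefficients (I - mu) / (sigma + C) of a grayscale image given as nested lists."""
    window = gaussian_window()
    mu = local_mean(img, window)
    mu_sq = local_mean([[v * v for v in row] for row in img], window)
    coeffs = []
    for i, row in enumerate(img):
        out_row = []
        for j, value in enumerate(row):
            # Weighted local std: sqrt(E_w[I^2] - mu^2); abs() absorbs round-off below zero.
            sigma = math.sqrt(abs(mu_sq[i][j] - mu[i][j] ** 2))
            out_row.append((value - mu[i][j]) / (sigma + c))
        coeffs.append(out_row)
    return coeffs


# Usage: an 11x11 checkerboard of 0/255 values gives positive coefficients on
# bright pixels and negative coefficients on dark pixels.
board = [[255.0 if (i + j) % 2 else 0.0 for j in range(11)] for i in range(11)]
coeffs = mscn(board)
```

For undistorted natural images, these coefficients tend toward a unit-variance, near-Gaussian distribution. BRISQUE quantifies quality loss through deviations from that shape.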

2.4. Methods for Removing Redundancy

3. Results and Discussion

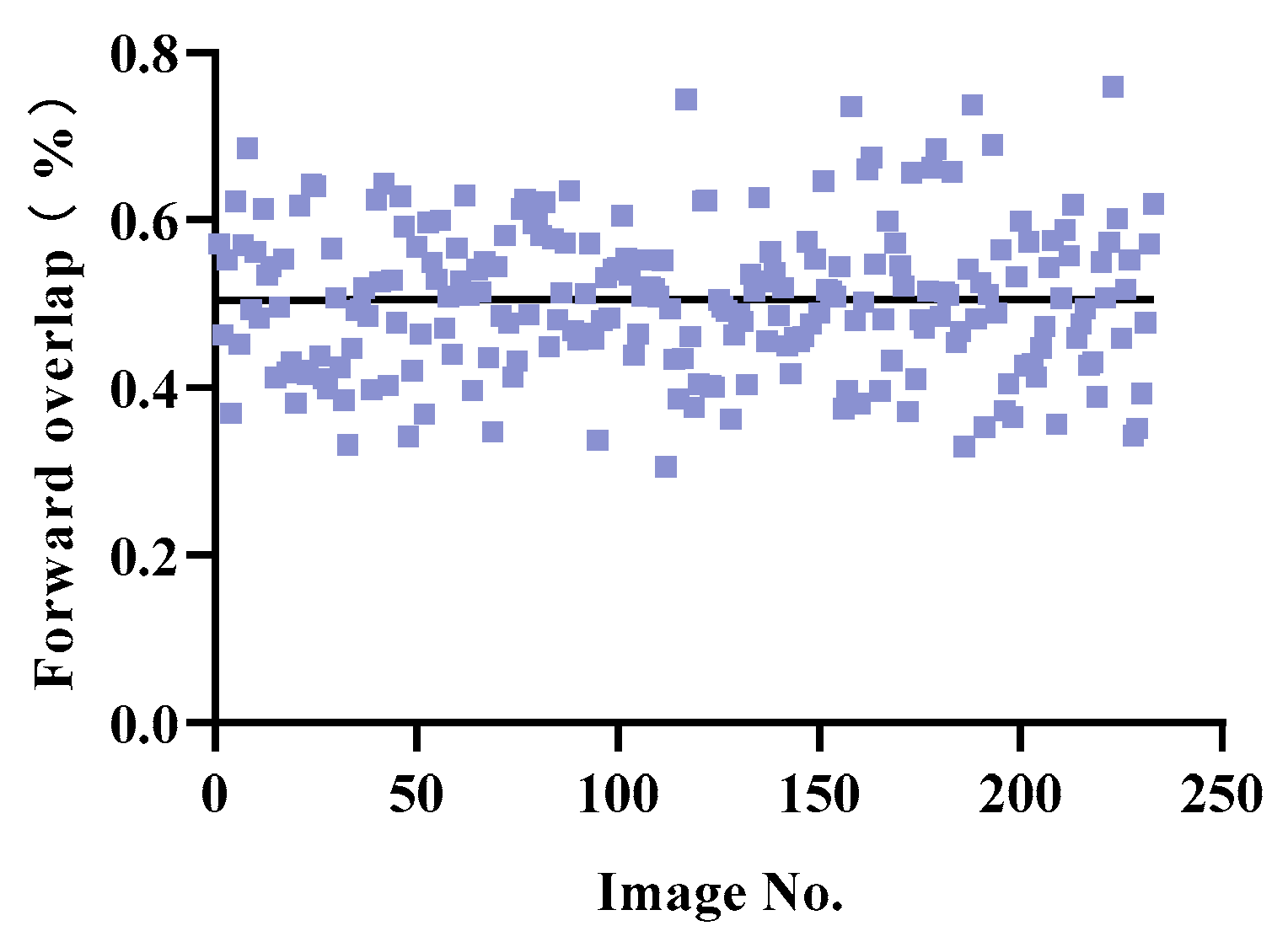

3.1. Calculation of Actual Overlap

3.2. UAV Image Quality Evaluation

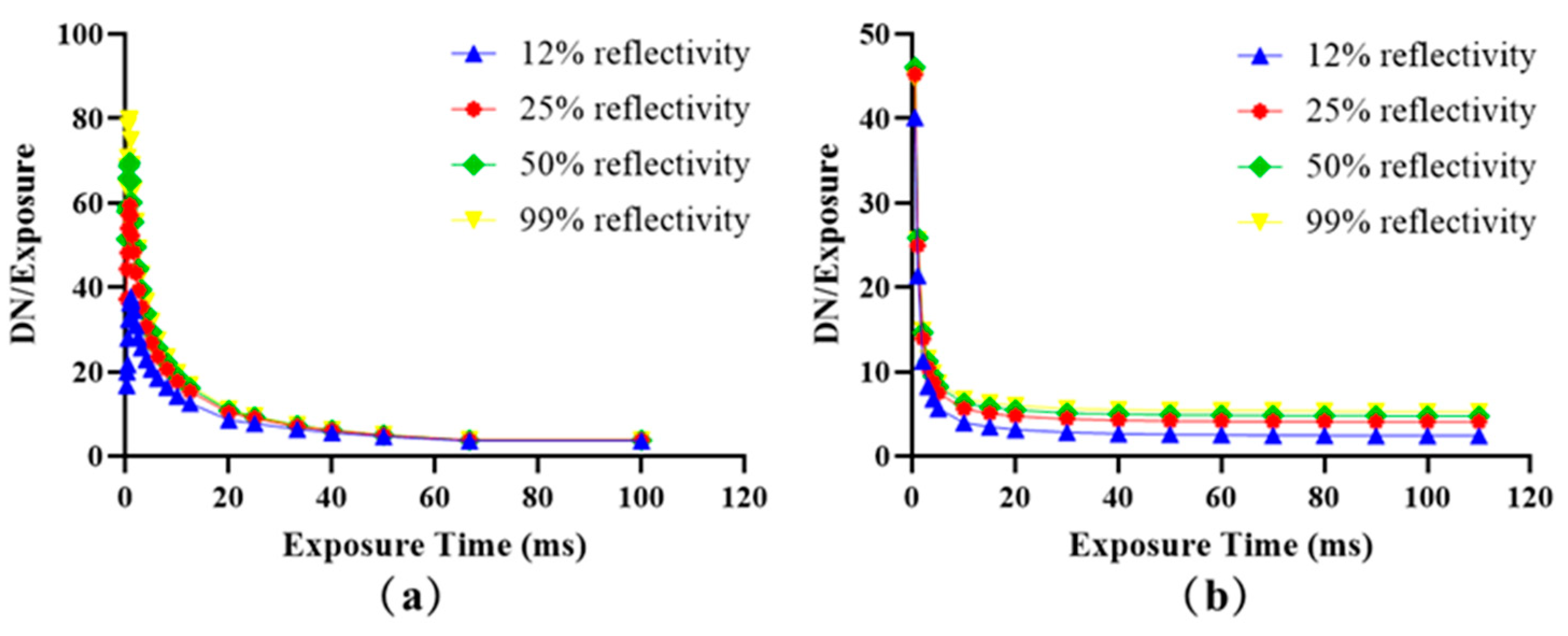

3.2.1. Influence of Exposure Time on Image Quality Evaluation

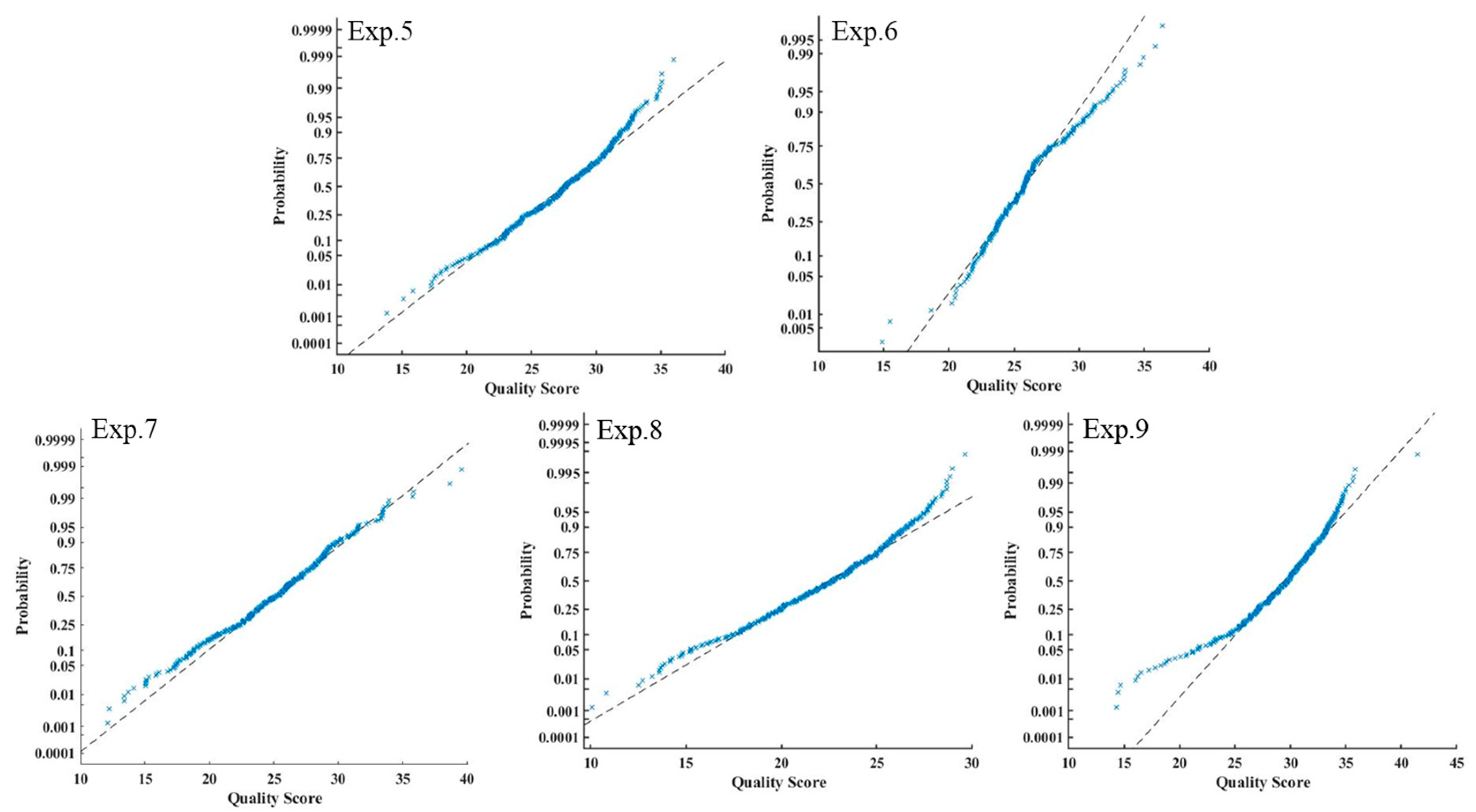

3.2.2. Image Quality Evaluation of a Single Experiment

3.2.3. Image Quality Evaluation at Different Flight Altitudes

3.3. Image Mosaic and Redundancy Reduction

4. Discussion of Flight Strategy

5. Conclusions

6. Patents

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Camera | Parameter | Value |
|---|---|---|
| RGB | Weight | 358 g |
| | Sensor size | 23.4 mm × 15.6 mm |
| | Resolution | 6000 × 4000 pixels |
| | Focal length | 16 mm (fixed) |
| | Field of view | 83° |
| MS | Weight | 123 g |
| | Sensor size | 11.27 mm × 6 mm |
| | Resolution | 409 × 216 pixels |
| | Focal length | 16 mm (fixed) |
| | Field of view | 43.6° |
| | Spectral range | 600–1000 nm |
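
The field-of-view entries in the camera table can be cross-checked against the sensor sizes and focal length. This assumes the listed values are diagonal angles of an ideal pinhole camera, FOV = 2·arctan(d / 2f), where d is the sensor diagonal and f the focal length. Under that assumption the formula reproduces both listed values to within half a degree. The small residual likely comes from rounding or lens distortion.

```python
import math


def diagonal_fov_deg(sensor_w_mm, sensor_h_mm, focal_mm):
    """Diagonal field of view, in degrees, of an ideal (pinhole) camera."""
    diagonal_mm = math.hypot(sensor_w_mm, sensor_h_mm)
    return math.degrees(2.0 * math.atan(diagonal_mm / (2.0 * focal_mm)))


rgb_fov = diagonal_fov_deg(23.4, 15.6, 16.0)  # table lists 83°
ms_fov = diagonal_fov_deg(11.27, 6.0, 16.0)   # table lists 43.6°
print(f"RGB: {rgb_fov:.1f}°, MS: {ms_fov:.1f}°")
```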

| Camera | Experiments | Exposure Time (ms) | Forward Overlap (%) | Side Overlap (%) | Number of Images |
|---|---|---|---|---|---|
| RGB | Exp. 1 | 1 | 65 | 55 | 221 |
| | Exp. 2 | 1 | 80 | 65 | 577 |
| | Exp. 3 | 1 | 75 | 60 | 387 |
| | Exp. 4 | 1.25 | 75 | 60 | 388 |
| MS | Exp. 5 | 5 | 75 | 60 | 387 |
| | Exp. 6 | 6 | 65 | 55 | 211 |
| | Exp. 7 | 7 | 80 | 65 | 577 |
| | Exp. 8 | 16 | 75 | 60 | 387 |
| | Exp. 9 | 20 | 75 | 60 | 388 |

| Camera | Experiments | Completion Time Before (h) | Completion Time After (h) | Improvement |
|---|---|---|---|---|
| RGB | Exp. 1 | 1.02 | 0.75 | 26% |
| | Exp. 2 | 2.16 | 1.5 | 30% |
| | Exp. 3 | 2.4 | 1.75 | 27% |
| | Exp. 4 | 19.4 | 10 | 48% |
| MS | Exp. 5 | 0.08 | 0.07 | 13% |
| | Exp. 6 | 0.05 | 0.05 | 0% |
| | Exp. 7 | 0.5 | 0.08 | 84% |
| | Exp. 8 | 0.09 | 0.08 | 11% |
| | Exp. 9 | 0.09 | 0.08 | 11% |
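
The improvement column in the completion-time table is consistent with the relative reduction in completion time, (before − after) / before. The short check below recomputes it from the listed hours. The recomputed values agree with the reported percentages to within one percentage point; the remaining differences (for example 30.6% versus 30% for Exp. 2) likely stem from rounding of the tabulated hours.

```python
# Completion times copied from the table above:
# (camera, experiment, before in h, after in h, reported improvement in %)
ROWS = [
    ("RGB", "Exp. 1", 1.02, 0.75, 26),
    ("RGB", "Exp. 2", 2.16, 1.5, 30),
    ("RGB", "Exp. 3", 2.4, 1.75, 27),
    ("RGB", "Exp. 4", 19.4, 10, 48),
    ("MS", "Exp. 5", 0.08, 0.07, 13),
    ("MS", "Exp. 6", 0.05, 0.05, 0),
    ("MS", "Exp. 7", 0.5, 0.08, 84),
    ("MS", "Exp. 8", 0.09, 0.08, 11),
    ("MS", "Exp. 9", 0.09, 0.08, 11),
]


def improvement_pct(before_h, after_h):
    """Relative reduction in completion time, as a percentage of the original time."""
    return (before_h - after_h) / before_h * 100.0


for camera, exp, before, after, reported in ROWS:
    recomputed = improvement_pct(before, after)
    print(f"{camera} {exp}: recomputed {recomputed:.1f}%, reported {reported}%")
```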

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Du, X.; Zheng, L.; Zhu, J.; Cen, H.; He, Y. Evaluation of Mosaic Image Quality and Analysis of Influencing Factors Based on UAVs. Drones 2024, 8, 143. https://doi.org/10.3390/drones8040143