UAV-Embedded Sensors and Deep Learning for Pathology Identification in Building Façades: A Review

Abstract

1. Introduction

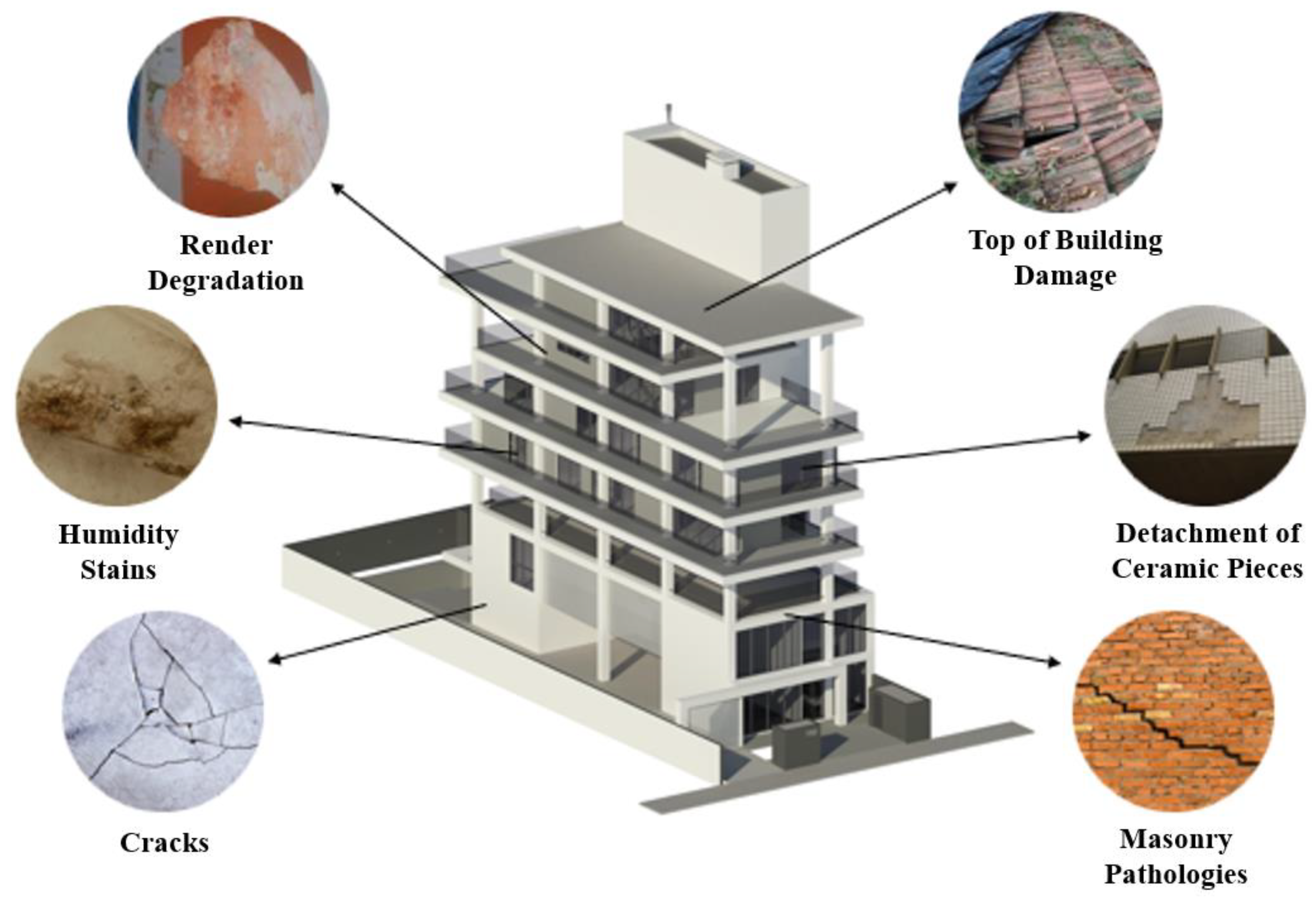

2. Building Pathology

- Cracks—characterized by the opening of structural and sealing elements, cracks can result from movements caused by thermal expansion, hygroscopic variation, overload, excessive deformation, foundation settlement, material shrinkage, and chemical alterations. In general, these openings are an important indication of structural damage and can be classified by the size of the opening: fissure, up to 1 mm; crack, 1–3 mm; fracture, above 3 mm [42].

- Humidity stains—characterized by excess dampness at a specific point or over an area of a building. In general, this manifestation is associated with a lack of waterproofing or with deficiencies in drainage and plumbing elements. Regarding humidity stains on façades, Ref. [41] highlighted that, aside from the causes previously mentioned, they can also result from accumulated dirt, the growth of micro-organisms, the deposition of calcium carbonate on surfaces, and vandalism.

- Detachment of ceramic and render—characterized by the separation of a coating from its substrate. For ceramic pieces, this type of detachment occurs when the adhesive strength of the system is lower than the stress acting on it. For render, the causes can be associated with external agents, execution problems, and the end of the service life of the material [43].

- Degradation of paint covering—a pathology commonly associated with the end of the service life of the material and with problems related to paint dosage [40].

- Damage in opening elements (windows and doors)—manifestations associated with damage to elements such as windows and doors, mainly involving degradation of their constituent materials and of the opening in which they are installed [41].

- Damage to the top of the building (exposed slab and roof)—characterized by the deterioration of slabs and roof tiles, which mainly results in infiltration. These pathologies arise from cracks, broken tiles, problems in the installation of rainwater drainage elements and in the waterproofing system, and the action of damaging agents [16].
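As a minimal sketch of the size-based classification of openings given in the list above (fissure, up to 1 mm; crack, 1–3 mm; fracture, above 3 mm [42]), the following Python function maps a measured opening width to its class. The function name `classify_opening` is hypothetical, and the handling of boundary values (exactly 1 mm treated as a fissure, exactly 3 mm treated as a crack) is an assumption made for illustration, since the classification itself does not state how boundaries are assigned.

```python
def classify_opening(width_mm: float) -> str:
    """Return the size class of an opening from its width in millimetres.

    Thresholds follow the classification cited in the text [42]:
    fissure (up to 1 mm), crack (1-3 mm), fracture (above 3 mm).
    Boundary handling (1 mm -> fissure, 3 mm -> crack) is an assumption.
    """
    if width_mm <= 0:
        raise ValueError("width_mm must be positive")
    if width_mm <= 1.0:
        return "fissure"
    if width_mm <= 3.0:
        return "crack"
    return "fracture"


if __name__ == "__main__":
    # Illustrative widths only; these are not measurements from any study.
    for width in (0.4, 2.0, 5.5):
        print(f"{width} mm -> {classify_opening(width)}")
```

A rule of this kind could be applied to widths estimated from inspection images, provided the image scale is known well enough to resolve openings below 1 mm.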

3. Theory of Building Pathologies

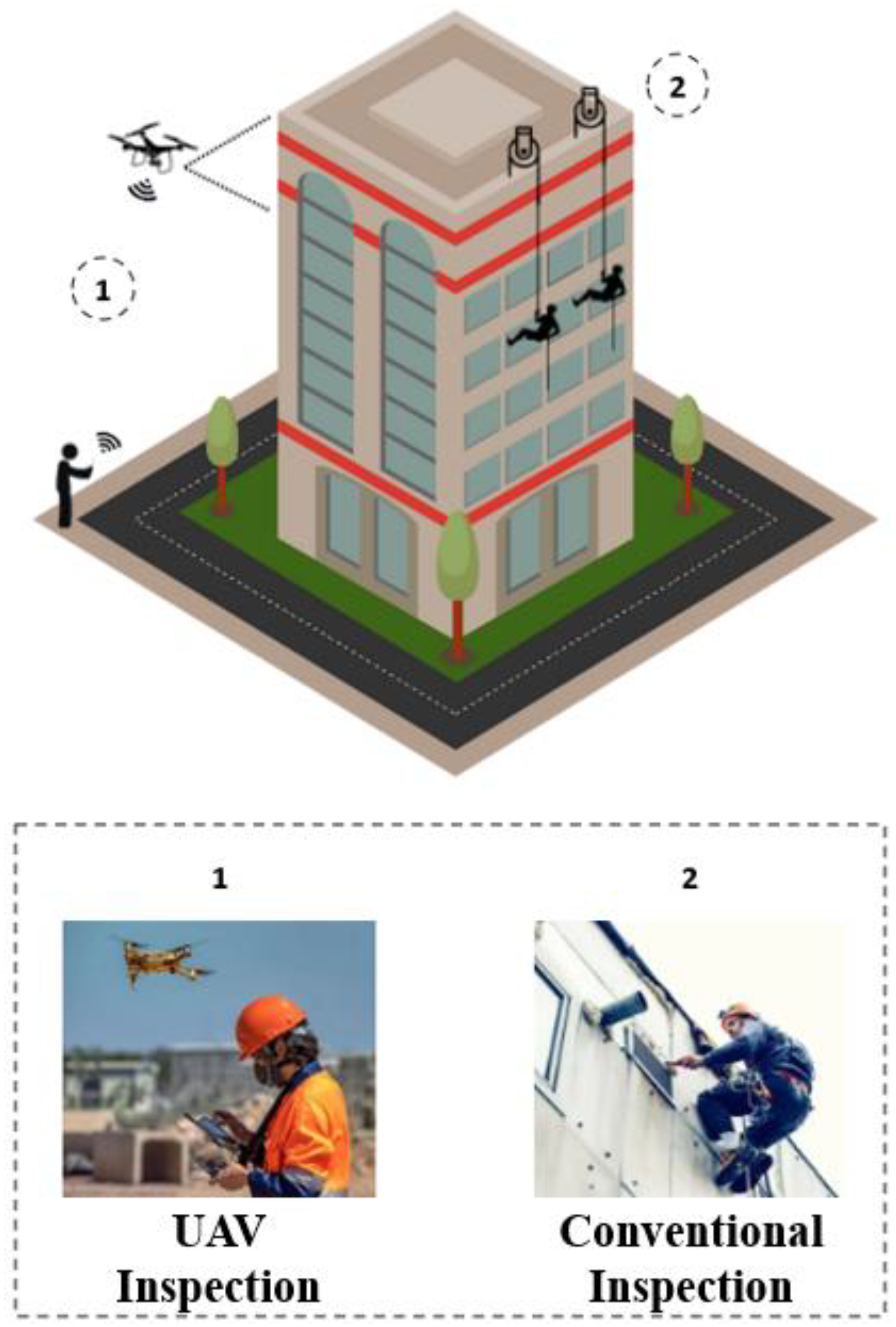

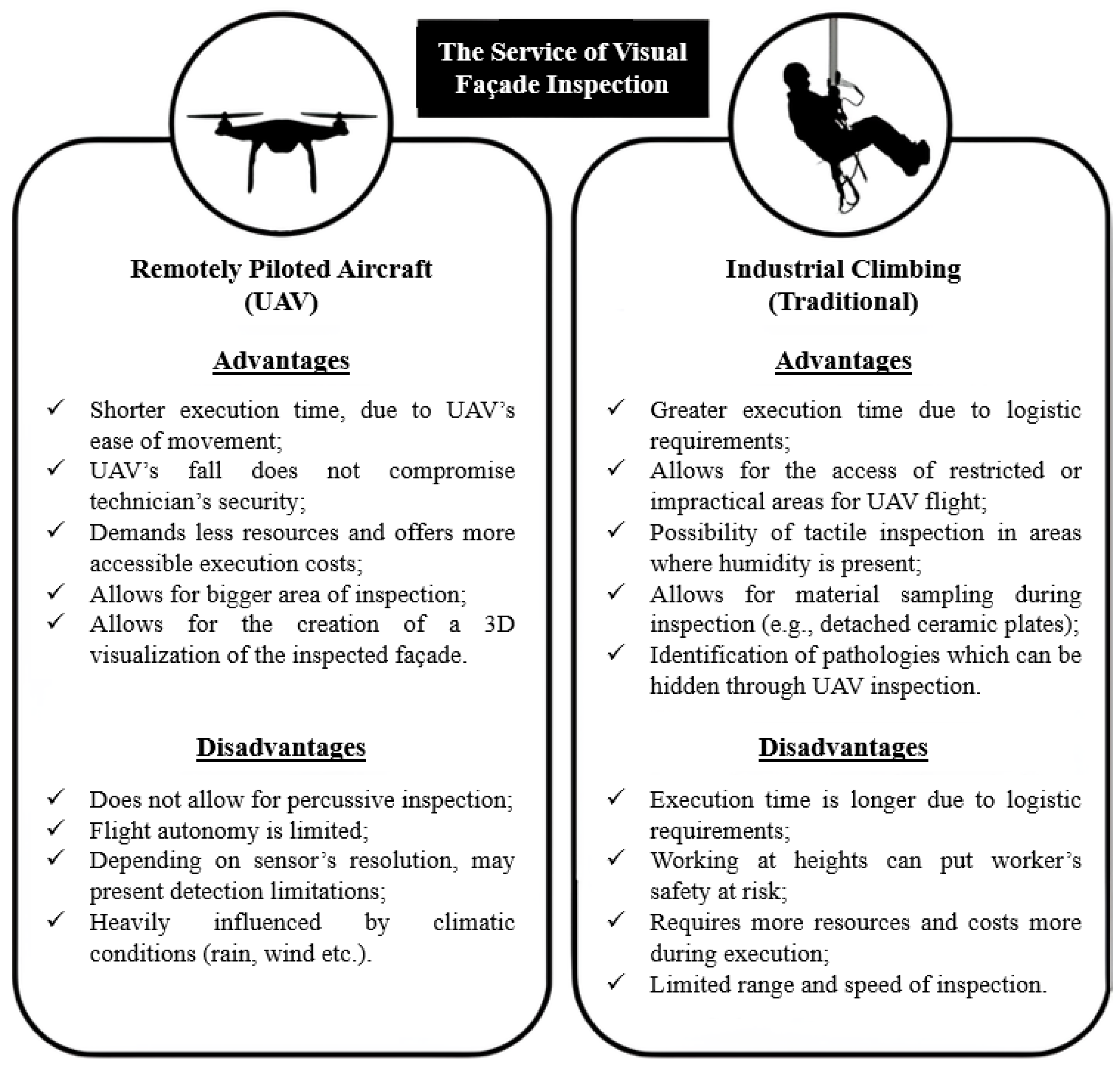

4. Inspection of Building Façades

- High risk—significant damage to the health and safety of users and the environment, leading to substantial loss of performance and functionality, possible shutdowns, high maintenance and recovery costs, and noticeable compromise in service life.

- Moderate risk—partial loss of performance and functionality in the structure without directly impacting system operations, accompanied by early signs of deterioration.

- Low risk—potential for minor, aesthetic impact or disruption to planned activities, with minimal likelihood of high or moderate risks occurring, as well as little to no impact on real estate value.

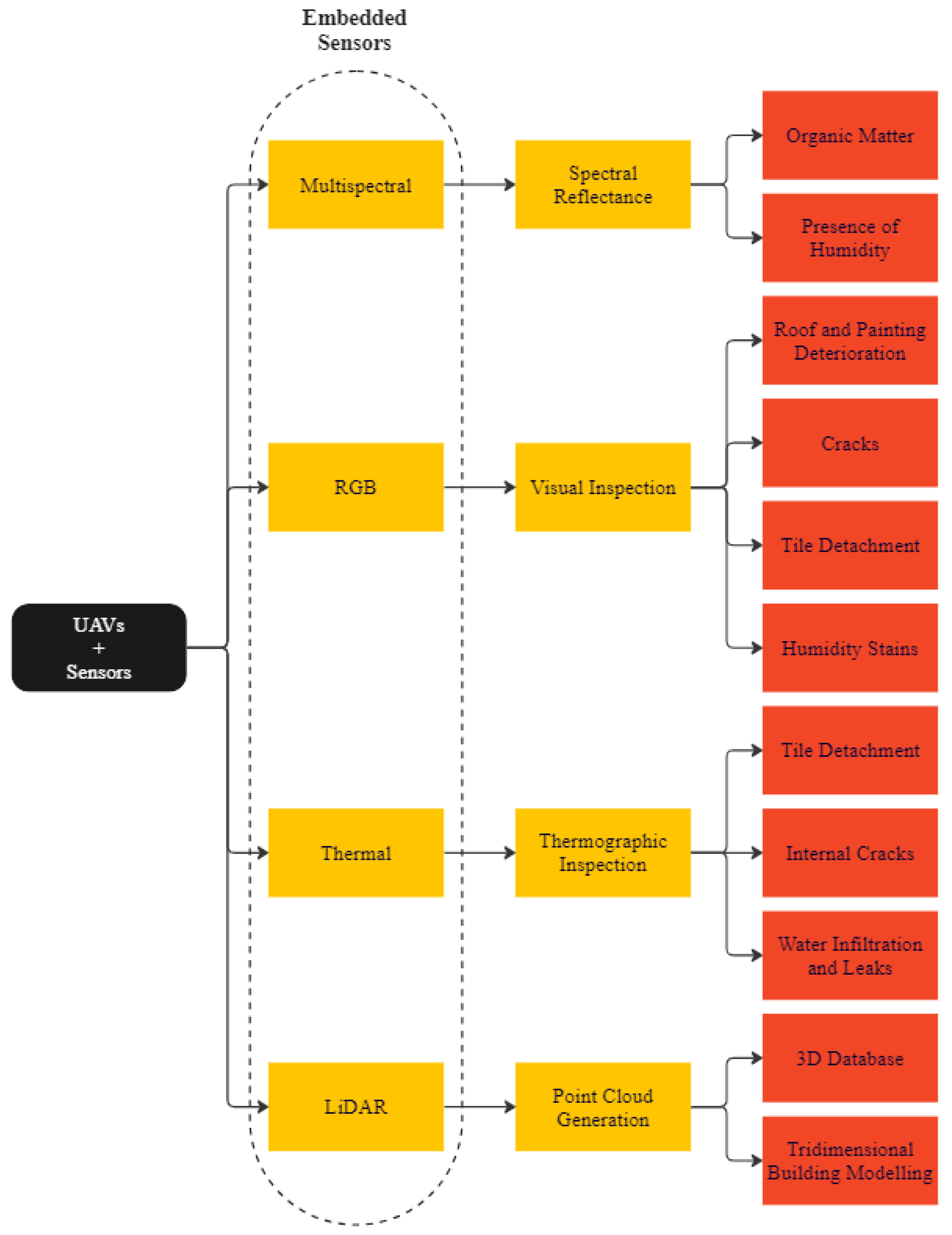

5. The Use of Embedded Sensors in UAVs for Façade Inspections

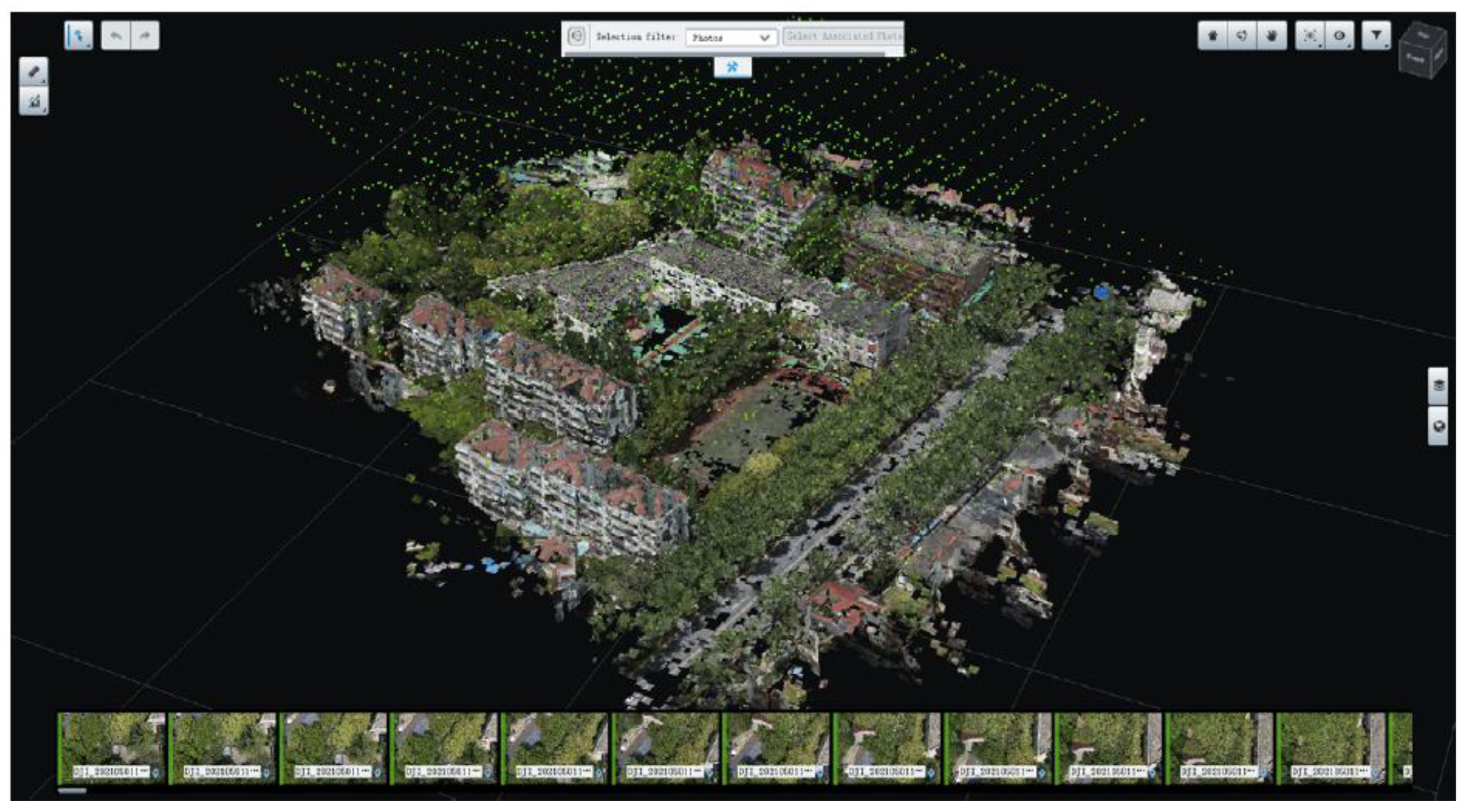

5.1. Tridimensional Mapping of Buildings

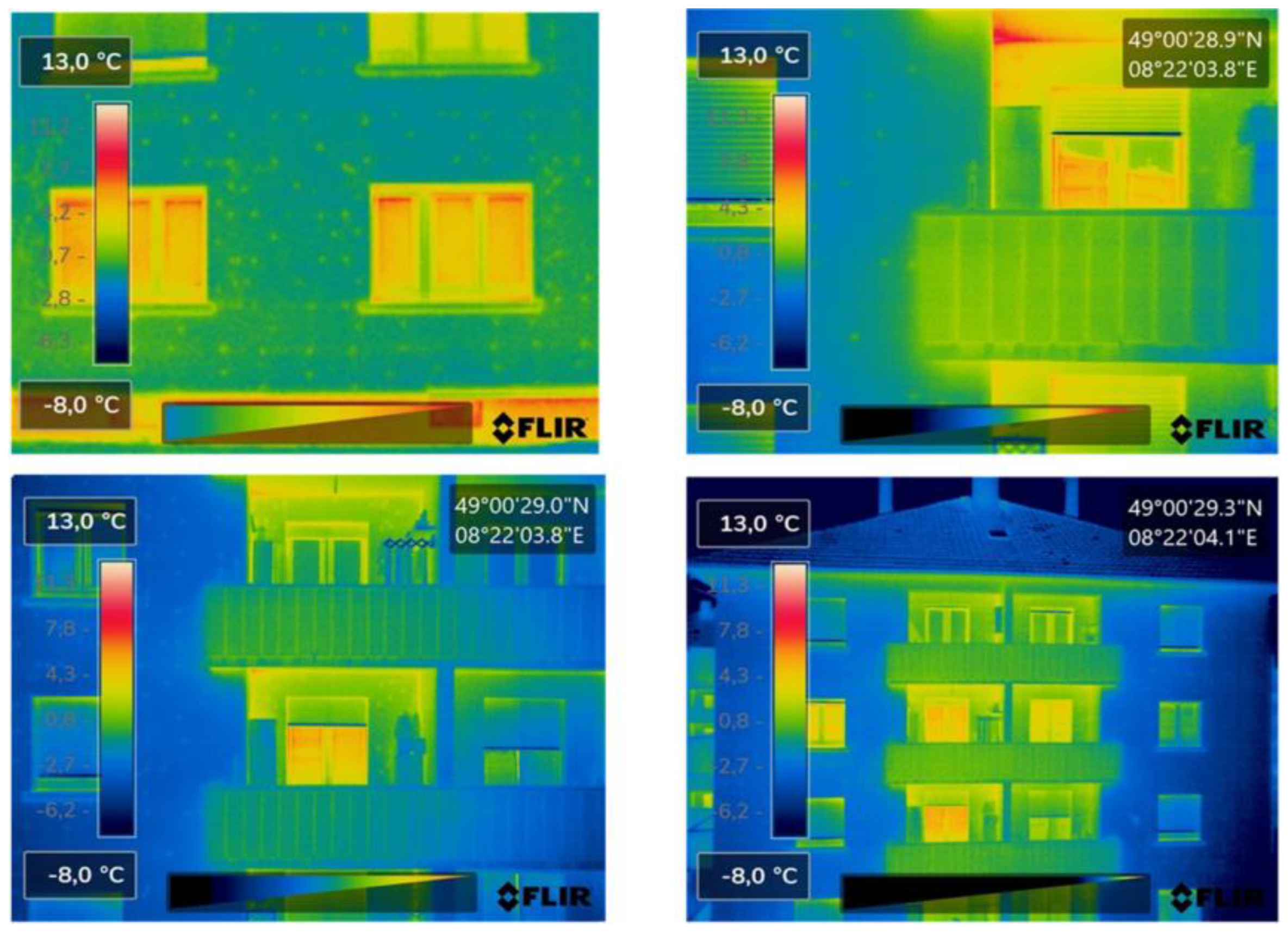

5.2. Thermal Inspection

5.3. Inspection with RGB Sensors

5.4. Inspection with Multispectral and Hyperspectral Sensors

6. Cost Comparison between Conventional and UAV Inspections

7. Artificial Intelligence

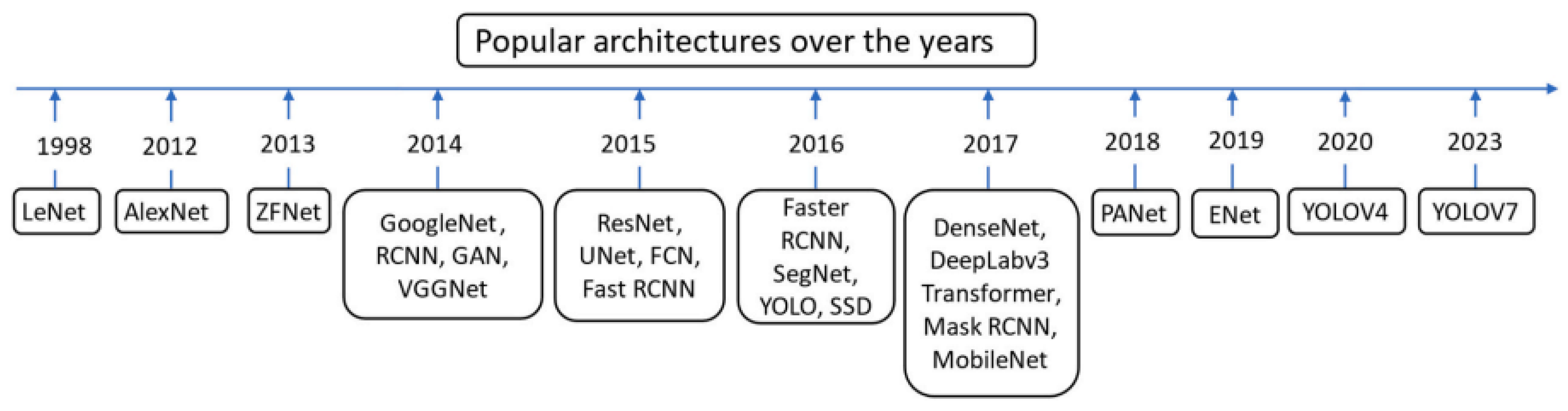

8. Deep Learning

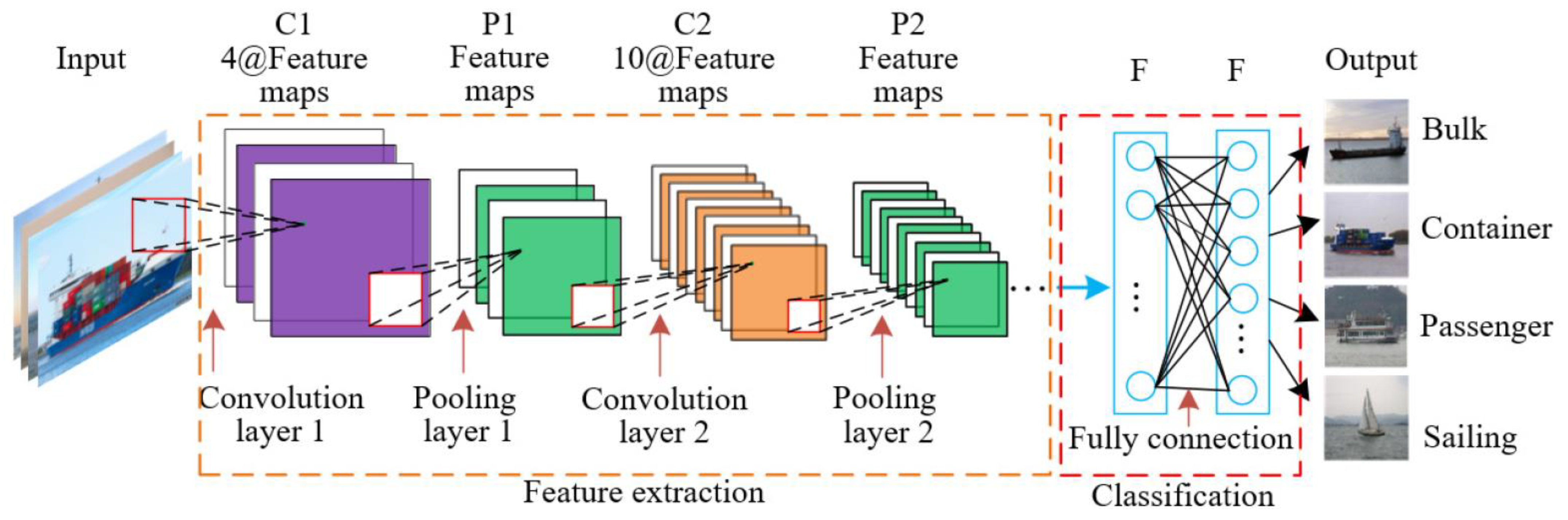

9. Convolutional Neural Network (CNN)

10. Deep Learning Applied to Pathology of Building Façades

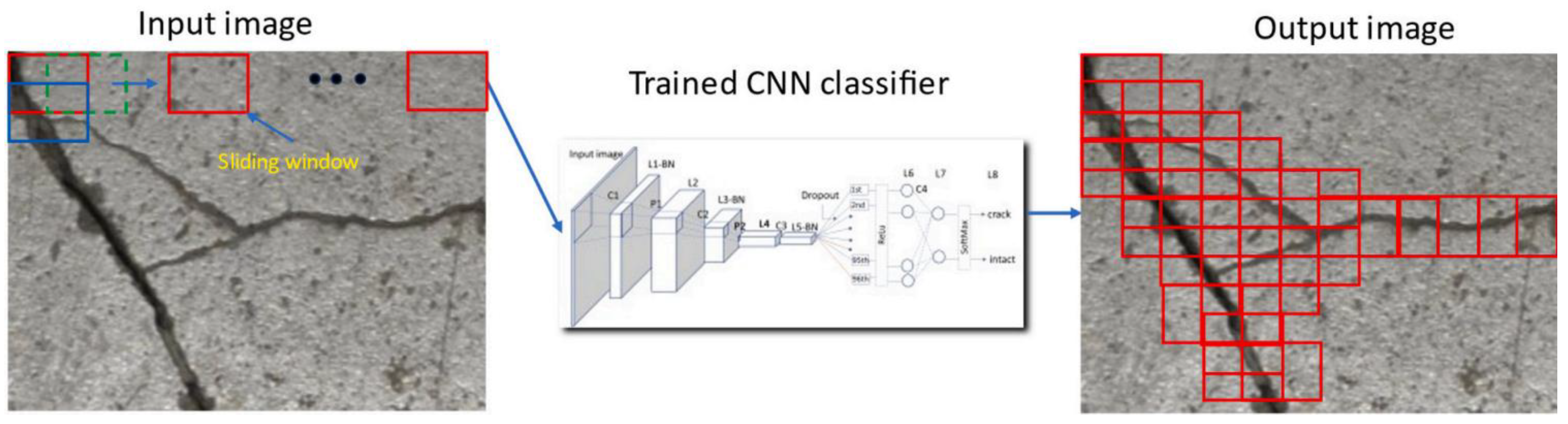

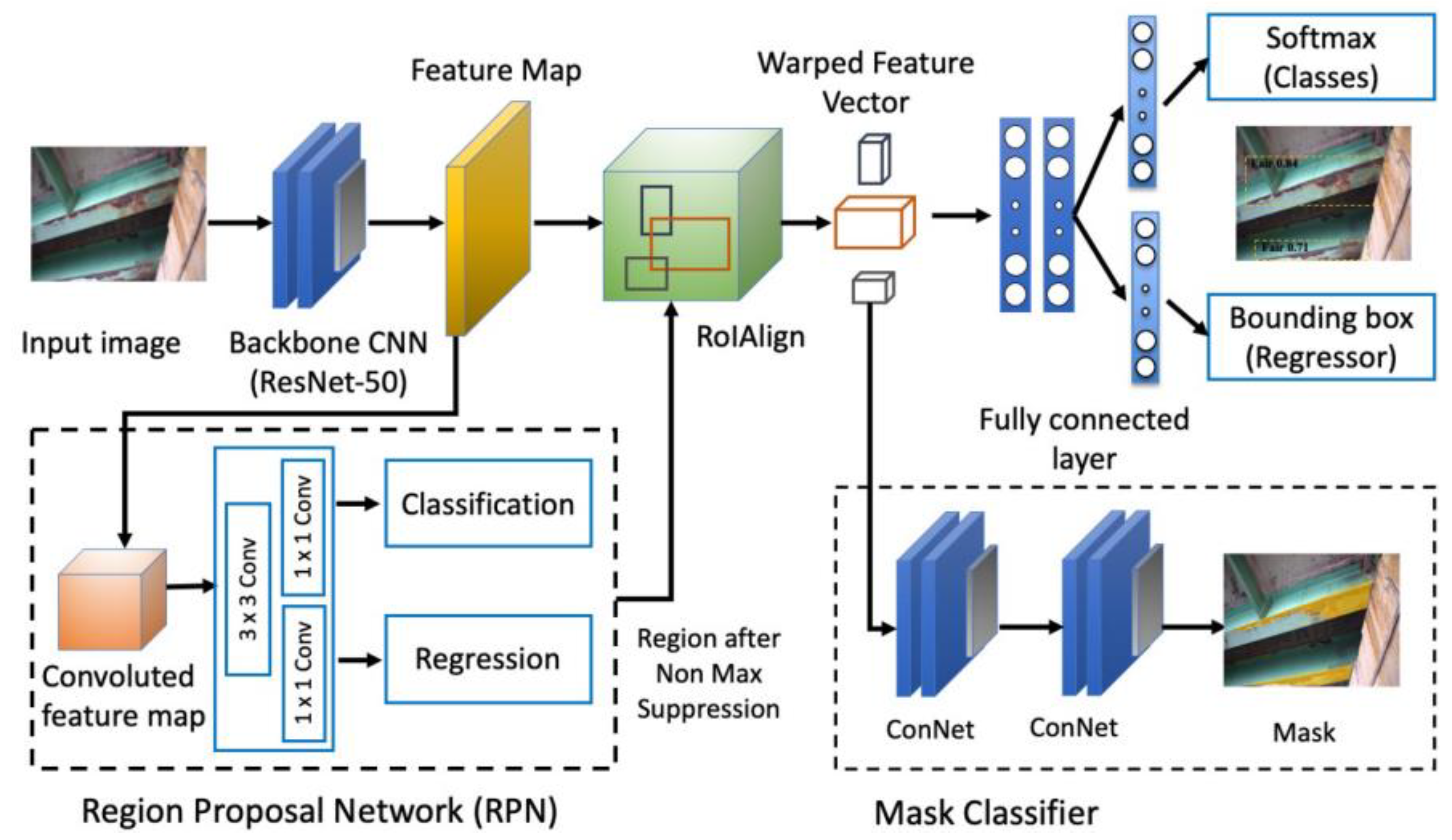

10.1. Deep Learning for Crack Detection

10.2. Deep Learning for Corrosion Detection

10.3. Deep Learning for Detachment of Ceramic Pieces

11. Conclusions and Recommendations for Future Research

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

References

- Kim, H.; Lee, J.; Ahn, E.; Cho, S.; Shin, M.; Sim, S. Concrete Crack Identification Using a UAV Incorporating Hybrid Image Processing. Sensors 2017, 17, 2052.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Sreenath, S.; Malik, H.; Husnu, N.; Kalaichelavan, K. Assessment and Use of Unmanned Aerial Vehicle for Civil Structural Health Monitoring. Procedia Comput. Sci. 2020, 170, 656–663.

- Silva, W.P.A.; Lordsleem Júnior, A.C.; Ruiz, R.D.B.; Rocha, J.H.A. Inspection of pathological manifestations in buildings by using a thermal imaging camera integrated with an Unmanned Aerial Vehicle (UAV): A documental research. Rev. ALCONPAT 2021, 11, 123–139.

- Mandirola, M.; Casarotti, C.; Peloso, S.; Lanese, I.; Brunesi, E.; Senaldi, I. Use of UAS for damage inspection and assessment of bridge infrastructures. Int. J. Disaster Risk Reduct. 2022, 72, 21.

- Mayer, Z.; Epperlein, A.; Vollmer, E.; Volk, R.; Schultmann, F. Investigating the Quality of UAV-Based Images for the Thermographic Analysis of Buildings. Remote Sens. 2023, 15, 301.

- Bauer, E.; Castro, E.K.; Silva, M.N.B. Estimate of the facades degradation with ceramic cladding: Study of master buildings. Ceramica 2015, 61, 151–159.

- Ballesteros, R.D.; Lordsleem Junior, A.C. Veículos Aéreos Não Tripulados (VANT) para inspeção de manifestações patológicas em fachadas com revestimento cerâmico. Ambiente Construído 2021, 21, 119–137.

- Almeida, A.S.F.C.E.; Ornelas, A.J.A.; Cordeiro, A.R. Termografia passiva no diagnóstico de patologias e desempenho térmico em fachadas de edifícios através de câmara térmica instalada em drone. Abordagem preliminar em Coimbra (Portugal). Cad. Geogr. 2020, 42, 27–41.

- Borba, P. Extração Automática de Edificações para a Produção Cartográfica Utilizando Inteligência Artificial. Master’s Thesis, University of Brasília, Brasília, Brazil, 2022.

- Jiang, S.; Zhang, J. Real-Time Crack Assessment Using Deep Neural Networks with Wall-Climbing Unmanned Aerial System. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 549–564.

- Calantropio, A.; Chiabrando, F.; Codastefano, M.; Bourke, E. Deep learning for Automatic Building Damage Assessment: Application in Post-Disaster Scenarios Using UAV Data. In Proceedings of the XXIV International Society for Photogrammetry and Remote Sensing Congress, XXIV ISPRS Congress, Nice, France, 5–9 July 2021.

- Chen, K.; Reichard, D.; Xu, X.; Akanmu, A. Automated Crack Segmentation in Close-Range Building Façade Inspection Images Using Deep Learning Techniques. J. Build. Eng. 2021, 43, 16.

- Kumar, P.; Batchu, S.; Swamy, N.S.; Kota, S.R. Real-Time Concrete Damage Detection Using Deep Learning for High Rise Structures. IEEE Access 2021, 9, 112312–112331.

- Sousa, A.D.P.; Sousa, G.C.L.; Maués, L.M.F. Using Digital Image Processing and Unmanned Aerial Vehicle (UAV) for Identifying Ceramic Cladding Detachment in Building Facades. Ambiente Construído 2022, 22, 199–213.

- Santos, L.M.A.; Zanoni, V.A.G.; Bedin, E.; Pistori, H. Deep learning Applied to Equipment Detection on Flat Roofs in Images Captured by UAV. Case Stud. Constr. Mater. 2023, 18, 18.

- Teng, S.; Chen, G. Deep Convolution Neural Network-Based Crack Feature Extraction, Detection and Quantification. J. Fail. Anal. Prev. 2022, 22, 1308–1321.

- Ali, R.; Chuah, J.H.; Talip, M.S.A.; Mokhtar, N.; Shoaib, M.A. Structural crack detection using deep convolutional neural networks. Autom. Constr. 2022, 133, 28.

- Gonthina, M.; Chamata, R.; Duppalapudi, J.; Lute, V. Deep CNN-based concrete cracks identification and quantification using image processing techniques. Asian J. Civ. Eng. 2022, 24, 727–740.

- Fu, R.; Xu, H.; Wang, Z.; Shen, L.; Cao, M.; Liu, T.; Novák, D. Enhanced Intelligent Identification of Concrete Cracks Using Multi-Layered Image Preprocessing-Aided Convolutional Neural Networks. Sensors 2020, 20, 2021.

- Słoński, M.; Tekiele, M. 2D Digital Image Correlation and Region-Based Convolutional Neural Network in Monitoring and Evaluation of Surface Cracks in Concrete Structural Elements. Materials 2020, 13, 3527.

- Sjölander, A.; Belloni, V.; Ansell, A.; Nordström, E. Towards Automated Inspections of Tunnels: A Review of Optical Inspections and Autonomous Assessment of Concrete Tunnel Linings. Sensors 2023, 23, 3189.

- Lee, J.; Kim, H.; Kim, N.; Ryu, E.; Kang, J. Learning to Detect Cracks on Damaged Concrete Surfaces Using Two-Branched Convolutional Neural Network. Sensors 2019, 19, 4796.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaria, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of Deep learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions. J. Big Data 2021, 53, 74.

- Yesilmen, S.; Tatar, B. Efficiency of convolutional neural networks (CNN) based image classification for monitoring construction related activities: A case study on aggregate mining for concrete production. Case Stud. Constr. Mater. 2022, 17, 11.

- Taherkhani, A.; Cosma, G.; McGinnity, T.M. A Deep Convolutional Neural Network for Time Series Classification with Intermediate Targets. SN Comput. Sci. 2023, 4, 24.

- Hussain, M.; Bird, J.J.; Faria, D.R. A Study on CNN Transfer Learning for Image Classification. Adv. Comput. Intell. Syst. 2018, 840, 191–202.

- Bahmei, B.; Birmingham, E.; Arzanpour, S. CNN-RNN and Data Augmentation Using Deep Convolutional Generative Adversarial Network for Environmental Sound Classification. IEEE Signal Process. Lett. 2022, 29, 682–686.

- Sarker, I.H. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2021, 2, 20.

- Tong, Z.; Gao, J.; Yuan, D. Advances of deep learning applications in ground-penetrating radar: A survey. Constr. Build. Mater. 2020, 258, 14.

- Qayyum, R.; Ehtisham, R.; Bahrami, A.; Camp, C.; Mir, J.; Ahmad, A. Assessment of Convolutional Neural Network Pre-Trained Models for Detection and Orientation of Cracks. Materials 2023, 16, 826.

- Watt, D. Building Pathology: Principles and Practice; Wiley-Blackwell: Malden, MA, USA, 2009.

- Bolina, F.L.; Tutikian, B.F.; Helene, P. Patologia de Estruturas; Oficina de Textos: São Paulo, Brazil, 2019.

- International Council for Research and Innovation in Building and Construction (CIB). Building Pathology: A State-of-The-Art Report; Building Pathology Department: Delft, The Netherlands, 1993.

- Caporrino, C.F. Patologias em Alvenarias; Oficina de Textos: São Paulo, Brazil, 2018.

- Sena, G.O. Conceitos Iniciais. In Automating Cities. Patologia das Construções; Sena, G.O., Nascimento, M.L.M., Nabut Neto, A.C., Eds.; Editora 2B: Salvador, Bahia, 2020.

- Instituto Brasileiro de Avaliações e Perícias de Engenharia (IBAPE). Norma de Inspeção Predial–2021; IBAPE: São Paulo, Brazil, 2021; 27p.

- ABNT NBR 16747; Inspeção Predial–Diretrizes, Conceitos, Terminologia e Procedimento. Associação Brasileira de Normas Técnicas (ABNT): Rio de Janeiro, Brazil, 2021; 14p.

- ABNT NBR 14653-5; Avaliação de Bens—Parte 5: Máquinas, Equipamentos, Instalações e Bens Industriais em Geral. Associação Brasileira de Normas Técnicas (ABNT): Rio de Janeiro, Brazil, 2006; 19p.

- Petersen, A.B.B. Vida Útil, Manutenção e Inspeção: Foco em Fachadas Revestidas com Reboco e Pintura; Blucher: São Paulo, Brazil, 2022.

- Melo Júnior, C.M. Metodologia para Geração de Mapas de Danos de Fachadas a Partir de Fotografias Obtidas por Veículo Aéreo Não Tripulado e Processamento Digital de Imagens. Ph.D. Thesis, University of Brasília, Brasília, Brazil, 2016.

- Thomaz, E. Trincas em Edificações: Causas, Prevenção e Recuperação; Oficina de Textos: São Paulo, Brazil, 2020.

- Silvestre, J.D.; Brito, J. Ceramic Tiling in Building Facades: Inspection and Pathological Characterization Using an Expert System. Constr. Build. Mater. 2011, 25, 1560–1571.

- Harper, R.F. The Code of Hammurabi, King of Babylon, about 2250 B.C.; The University of Chicago Press Callaghan & Company: Chicago, IL, USA, 1904.

- Macdonald, S. Concrete; Blackwell Science: Washington, DC, USA, 2003.

- Ferraz, G.; Brito, J.; Freitas, V.P.; Silvestre, J.D. State-of-the-Art Review of Building Inspection Systems. J. Perform. Constr. Facil. 2016, 30, 04016018-1–04016018-8.

- Ruiz, R.D.B.; Lordsleem Júnior, A.C.; Rocha, J.H.A. Inspection of Facades with Unmanned Aerial Vehicles (UAV): An Exploratory Study. Rev. ALCONPAT 2022, 11, 88–104.

- Liang, H.; Lee, S.; Bae, W.; Kim, J.; Seo, S. Towards UAVs in Construction: Advancements, Challenges, and Future Directions for Monitoring and Inspection. Drones 2023, 7, 202.

- Chaudhari, S.R.; Bhavsar, A.S.; Ranjwan, H.P.; Yadav, P.S.; Shaikh, S.S. Utilizing Drone Technology in Civil Engineering. Int. J. Adv. Res. Sci. Commun. Technol. 2022, 2, 765–780.

- Pruthviraj, U.; Kashyap, Y.; Baxevanaki, E.; Kosmopoulos, P. Solar Photovoltaic Hotspot Inspection Using Unmanned Aerial Vehicle Thermal Images at a Solar Field in South India. Remote Sens. 2023, 15, 1914.

- Ramírez, I.S.; Chaparro, J.R.P.; Márquez, F.P.G. Unmanned Aerial Vehicle Integrated Real Time Kinematic in Infrared Inspection of Photovoltaic Panels. Remote Sens. 2023, 15, 20.

- Park, J.; Lee, D. Precise Inspection Method of Solar Photovoltaic Panel Using Optical and Thermal Infrared Sensor Image Taken by Drones. Mater. Sci. Eng. 2019, 6115, 7.

- Morando, L.; Recchiuto, C.T.; Calla, J.; Scuteri, P.; Sgorbissa, A. Thermal and Visual Tracking of Photovoltaic Plants for Autonomous UAV Inspection. Drones 2022, 6, 347.

- Aghaei, M.; Madukanya, U.E.; de Oliveira, A.K.V.; Ruther, R. Fault Inspection by Aerial Infrared Thermography in A PV Plant after a Meteorological Tsunami. In Proceedings of the VII Congresso Brasileiro de Energia Solar, VII CBENS, Gramado, Brazil, 17–20 April 2018.

- Beniaich, A.; Silva, M.L.N.; Guimaraes, D.V.; Avalos, F.A.P.; Terra, F.S.; Menezes, M.D.; Avanzi, J.C.; Candido, B.M. UAV-Based Vegetation Monitoring for Assessing the Impact of Soil Loss in Olive Orchards in Brazil. Geoderma Reg. 2022, 30, 15.

- Lane, S.N.; Gentile, A.; Goldenschue, L. Combining UAV-Based SfM-MVS Photogrammetry with Conventional Monitoring to Set Environmental Flows: Modifying Dam Flushing Flows to Improve Alpine Stream Habitat. Remote Sens. 2020, 12, 3868.

- Ioli, F.; Bianchi, A.; Cina, A.; de Michele, C.; Maschio, P.; Passoni, D.; Pinto, L. Mid-Term Monitoring of Glacier’s Variations with UAVs: The Example of the Belvedere Glacier. Remote Sens. 2022, 14, 28.

- Yuan, S.; Li, Y.; Bao, F.; Xu, H.; Yang, Y.; Yan, Q.; Zhong, S.; Yin, H.; Xu, J.; Huang, Z.; et al. Marine Environmental Monitoring with Unmanned Vehicle Platforms: Present Applications and Future Prospects. Sci. Total Environ. 2023, 858, 15.

- Næsset, E.; Gobakken, T.; Jutras-Perreault, M.; Ramtvedt, E.N. Comparing 3D Point Cloud Data from Laser Scanning and Digital Aerial Photogrammetry for Height Estimation of Small Trees and Other Vegetation in a Boreal–Alpine Ecotone. Remote Sens. 2021, 13, 2469.

- Congress, S.; Puppala, A. Eye in The Sky: Condition Monitoring of Transportation Infrastructure Using Drones. Civ. Eng. 2022, 176, 40–48.

- Chai, Y.; Choi, W. Deep Learning-Based Crack Damage Detection Using Convolutional Neural Networks. J. Build. Eng. 2023, 64, 21.

- Astor, Y.; Nabesima, Y.; Utami, R.; Sihombing, A.V.R.; Adli, M.; Firdaus, M.R. Unmanned Aerial Vehicle Implementation for Pavement Condition Survey. Transp. Eng. 2023, 12, 11.

- Roberts, R.; Inzerillo, L.; Mino, G.D. Using UAV Based 3D Modelling to Provide Smart Monitoring of Road Pavement Conditions. Information 2020, 11, 568.

- Pan, Y.; Chen, X.; Sun, Q.; Zhang, X. Monitoring Asphalt Pavement Aging and Damage Conditions from Low-Altitude UAV Imagery Based on a CNN Approach. Can. J. Remote Sens. 2021, 47, 432–449.

- Rauhala, A.; Tuomela, A.; Davids, C.; Rossi, P.M. UAV Remote Sensing Surveillance of a Mine Tailings Impoundment in Sub-Arctic Conditions. Remote Sens. 2017, 9, 1318.

- Nappo, N.; Mavrouli, O.; Nex, F.; Westen, C.V.; Gambillara, R.; Michetti, A.M. Use of UAV-Based photogrammetry products for semi-automatic detection and classification of asphalt road damage in landslide-affected areas. Eng. Geol. 2021, 294, 29.

- Zhang, H.; Li, Q.; Wang, J.; Fu, B.; Duan, Z.; Zhao, Z. Application of Space–Sky–Earth Integration Technology with UAVs in Risk Identification of Tailings Ponds. Drones 2023, 7, 222.

- Nasategay, F.F.U. UAV Applications in Road Monitoring for Maintenance Purposes. Master’s Thesis, University of Nevada, Reno, NV, USA, 2020.

- Khaloo, A.; Lattanzi, D.; Jachimowicz, A.; Devaney, C. Utilizing UAV and 3D Computer Vision for Visual Inspection of a Large Gravity Dam. Front. Built Environ. 2018, 4, 16.

- Martinez, J.; Gheisari, M.; Alarcon, L.F. UAV Integration in Current Construction Safety Planning and Monitoring Processes: Case Study of a High-Rise Building Construction Project in Chile. J. Manag. Eng. 2020, 36, 15.

- Jiang, Y.; Bai, Y. Estimation of Construction Site Elevations Using Drone-Based Orthoimagery and Deep learning. J. Comput. Civ. Eng. 2020, 146, 04020086-1–04020086-18.

- Jacob-Loyola, N.; Rivera, F.M.; Herrera, R.F.; Atencio, E. Unmanned Aerial Vehicles (UAVs) for Physical Progress Monitoring of Construction. Sensors 2021, 21, 4227.

- Li, Y.; Liu, C. Applications of Multirotor Drone Technologies in Construction Management. Int. J. Constr. Manag. 2018, 19, 12.

- Gopalakrishnan, K.; Gholami, H.; Vidyadharan, A.; Choudhary, A.; Agrawal, A. Crack Damage Detection in Unmanned Aerial Vehicle Images of Civil Infrastructure Using Pre-Trained Deep Learning Model. Int. J. Traffic Transp. Eng. 2018, 8, 14.

- Ellenberg, A.; Kontsos, A.; Moon, F.; Bartoli, I. Bridge Deck Delamination Identification from Unmanned Aerial Vehicle Infrared Imagery. Autom. Constr. 2016, 72, 155–165.

- Sankarasrinivasan, S.; Balasubramanian, E.; Karthik, K.; Chandrasekar, U.; Gupta, R. Health Monitoring of Civil Structures with Integrated UAV and Image Processing System. Procedia Comput. Sci. 2015, 54, 508–515.

- Rossi, G.; Tanteri, L.; Tofani, V.; Vannocci, P.; Moretti, S.; Casagli, N. Multitemporal UAV Surveys for Landslide Mapping and Characterization. Landslides 2018, 15, 1045–1052.

- Maza, I.; Caballero, F.; Capitan, J.; Martínez-de-Dios, J.R.; Ollero, A. Experimental Results in Multi-UAV Coordination for Disaster Management and Civil Security Applications. J. Intell. Robot. Syst. 2011, 61, 563–585.

- Greenwood, F.; Nelson, E.L.; Greenough, P.G. Flying into The Hurricane: A Case Study of UAV Use in Damage Assessment During the 2017 Hurricanes in Texas and Florida. PLoS ONE 2020, 15, 30.

- Amaral, A.K.N.; de Souza, C.A.; Momoli, R.S.; Cherem, L.F.S. Use of Unmanned Aerial Vehicle to Calculate Soil Loss. Pesquisa. Agropecuária Trop. 2021, 51, 9.

- Xiao, W.; Ren, H.; Sui, T.; Zhang, H.; Zhao, Y.; Hu, Z. A Drone and Field-Based Investigation of The Land Degradation and Soil Erosion at An Opencast Coal Mine Dump after 5 Years Evolution. Int. J. Coal Sci. Technol. 2022, 9, 17.

- Novo, E.M.L. Sensoriamento Remoto: Princípios e Aplicações; Blucher: São Paulo, Brazil, 2010.

- Zhang, Z.; Zhu, L. A Review on Unmanned Aerial Vehicle Remote Sensing: Platforms, Sensors, Data Processing Methods, and Applications. Drones 2023, 7, 398.

- Types of Loads. DJI. Available online: https://www.dji.com/br/products/enterprise?site=enterprise&from=nav#payloads (accessed on 28 May 2024).

- Sentera 6X DJI Skyport. Flying Eye. Available online: https://www.flyingeye.fr/product/sentera-6x-pour-matrice-200-210-300/ (accessed on 28 May 2024).

- Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

- Wang, D.; Shu, H. Accuracy Analysis of Three-Dimensional Modeling of a Multi-Level UAV without Control Points. Buildings 2022, 13, 592.

- Kaartinen, E.; Dunphy, K.; Sadhu, A. LiDAR-Based Structural Health Monitoring: Applications in Civil Infrastructure Systems. Sensors 2022, 22, 4610.

- Peng, K.C.; Feng, L.; Hsieh, Y.C.; Yang, T.H.; Hsiung, S.H.; Tsai, Y.D.; Kuo, C. Unmanned Aerial Vehicle for Infrastructure Inspection with Image Processing for Quantification of Measurement and Formation of Facade Map. In Proceedings of the International Conference on Applied System Innovation, ICASI, Sapporo, Japan, 13–17 May 2017.

- Jarzabek-Rychard, M.; Lin, D.; Maas, H. Supervised Detection of Façade Openings in 3D Point Clouds with Thermal Attributes. Remote Sens. 2020, 12, 543.

- Bolourian, N.; Hammad, A. LiDAR-equipped UAV path Planning Considering Potential Locations of Defects for Bridge Inspection. Autom. Constr. 2020, 117, 16.

- Liu, Y.; Lin, Y.; Yeoh, J.K.W.; Chua, D.K.H.; Wong, L.W.C.; Ang Júnior, M.H.; Lee, W.L.; Chew, M.Y.L. Framework for Automated UAV-Based Inspection of External Building Façades. In Automating Cities. Advances in 21st Century Human Settlements; Wang, B.T., Wang, C.M., Eds.; Springer: Singapore, 2021.

- Meola, C.; Carlomagno, G.M.; Giorleo, L. The Use of Infrared Thermography for Materials Characterization. J. Mater. Process. Technol. 2004, 155–156, 1132–1137.

- Silva, F.A.M. Diagnóstico da Envolvente de um Edifício Escolar com Recurso a Análise Termográfica. Master’s Thesis, Instituto Politécnico de Viana do Castelo, Viana do Castelo, Portugal, 2016.

- Resende, M.M.; Gambare, E.B.; Silva, L.A.; Cordeiro, Y.S.; Almeida, E.; Salvador, R.P. Infrared Thermal Imaging to Inspect Pathologies on Façades of Historical Buildings: A Case Study on the Municipal Market of São Paulo, Brazil. Case Stud. Constr. Mater. 2022, 16, 12.

- Carrio, A.; Pestana, J.; Sanchez-Lopez, J.; Suarez-Fernandez, R.; Campoy, P.; Tendero, R.; García-de-Viedma, M.; González-Rodrigo, B.; Bonatti, J.; Rejas-Ayuga, J.G.; et al. UBRISTES: UAV-Based Building Rehabilitation with Visible and Thermal Infrared Remote Sensing. In Robot 2015: Second Iberian Robotics Conference, Advances in Intelligent Systems and Computing; Reis, L., Moreira, A., Lima, P., Montano, L., Muñoz-Martinez, V., Eds.; Springer: Cham, Switzerland, 2016; Volume 417.

- Lin, D.; Jarzabek-Rychard, M.; Schneider, D.; Maas, H.G. Thermal Texture Selection and Correction for Building Façade Inspection Based on Thermal Radial Characteristics. Remote Sens. Spat. Inf. Sci. 2018, XLII, 585–591.

- Ortiz-Sanz, J.; Gil-Docampo, M.; Arza-Garcia, M.; Cañas-Guerrero, I. IR Thermography from UAVs to Monitor Thermal Anomalies in the Envelopes of Traditional Wine Cellars: Field Test. Remote Sens. 2019, 11, 1424.

- Luis-Ruiz, J.M.; Sedano-Cibrián, J.; Pérez-Álvarez, R.; Pereda-Garcia, R.; Malagón-Picón, B. Metric contrast of thermal 3D models of large industrial facilities obtained by means of low-cost infrared sensors in UAV platforms. Int. J. Remote Sens. 2022, 43, 457–483.

- Wolff, F.; Kolari, T.H.M.; Villoslada, M.; Tahvanainen, T.; Korpelainen, P.; Zamboni, P.A.P.; Kumpula, T. RGB vs Multispectral Imagery: Mapping Aapa Mire Plant Communities with UAVs. Ecol. Indic. 2023, 148, 14.

- Rolling Shutter vs Obturador Global Modo de Câmera CMOS. Oxford Instruments. Available online: https://andor.oxinst.com/learning/view/article/rolling-and-global-shutter (accessed on 15 January 2023).

- Lisboa, D.W.B.; da Silva, A.B.S.; de Souza, A.B.A.; da Silva, M.P. Utilização do Vant na Inspeção de Manifestações Patológicas em Fachadas de Edificações. In Proceedings of the Congresso Técnico Científico da Engenharia e da Agronomia, CONTECC 2018, Maceió, Brazil, 21–24 August 2018.

- Jordan, S.; Moore, J.; Hovet, S.; Box, J.; Perry, J.; Kirsche, K.; Lewis, D.; Tse, Z.T.H. State-of-the-art technologies for UAV Inspections. IET Radar Sonar Navig. 2018, 12, 151–164.

- Chen, L.; Li, S.; Bai, Q.; Yang, J.; Jiang, S.; Miao, Y. Review of Image Classification Algorithms Based on Convolutional Neural Networks. Remote Sens. 2021, 13, 4712.

- Adão, T.; Hruska, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110.

- Pandey, M.; Fernandez, M.; Gentile, F.; Isayev, A.; Tropsha, A.; Stern, A.C.; Cherkasov, A. The transformational role of GPU computing and deep learning in drug discovery. Nat. Mach. Intell. 2022, 4, 211–221.

- Jia, J.; Wang, Y.; Chen, J.; Guo, R.; Shu, R.; Wang, J. Status and Application of Advanced Airborne Hyperspectral Imaging Technology: A Review. Infrared Phys. Technol. 2020, 104, 16.

- Zhang, H.; Zhang, B.; Wei, Z.; Wang, C.; Huang, Q. Lightweight Integrated Solution for a UAV-Borne Hyperspectral Imaging System. Remote Sens. 2020, 12, 657.

- Proctor, C.; He, Y. Workflow for Building a Hyperspectral UAV: Challenges and Opportunities. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-1/W4, 415–419.

- Marcial-Pablo, M.J.; Gonzalez-Sanchez, A.; Jimenez-Jimenez, S.I.; Ontiveros-Capurata, R.E.; Ojeda-Bustamante, W. Estimation of vegetation fraction using RGB and multispectral images from UAV. Int. J. Remote Sens. 2019, 40, 420–438.

- Habili, N.; Kwan, E.; Webers, C.; Oorloff, J.; Armin, M.A.; Petersson, L. A Hyperspectral and RGB Dataset for Building Façade Segmentation. In Computer Vision–ECCV 2022 Workshops. ECCV 2022; Karlinsky, L., Michaeli, T., Nishino, K., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13807.

- Olivetti, D.; Cicerelli, R.; Martinez, J.; Almeida, T.; Casari, R.; Borges, H.; Roig, H. Comparing Unmanned Aerial Multispectral and Hyperspectral Imagery for Harmful Algal Bloom Monitoring in Artificial Ponds Used for Fish Farming. Drones 2023, 7, 410.

- Rocha, M.S.; Bezerra, M.R.C.S.; da Silva, G.M.; Brito, D.R.N. Inspeção de fachadas utilizando tecnologias da Indústria 4.0: Uma comparação com métodos tradicionais. Braz. J. Dev. 2023, 9, 23835–23848.

- Falorca, J.F.; Miraldes, J.P.N.D.; Lanzinha, J.C.G. New trends in visual inspection of buildings and structures: Study for the use of drones. Open Eng. 2021, 11, 734–743.

- Oliveira, A.A.; Graças, G.M.; Lopes, L.K.; Rezende, P.H.F. Inspeção de Manifestações Patológicas em Fachadas Utilizando Aeronaves Remotamente Pilotadas. Monography; Mackenzie Presbyterian University: São Paulo, Brazil, 2020.

- Ciampa, E.; de Vito, L.; Pecce, M.R. Practical issues on the use of drones for construction inspections. J. Phys. Conf. Ser. 2019, 1249, 12.

- Wang, J.; Wang, P.; Qu, L.; Pei, Z.; Ueda, T. Automatic detection of building surface cracks using UAV and deep learning-combined approach. Struct. Concr. 2024; early view.

- El-Mir, A.; El-Zahab, S.; Sbartai, Z.M.; Homsi, F.; Saliba, J.; El-Hassan, H. Machine Learning Prediction of Concrete Compressive Strength Using Rebound Hammer Test. J. Build. Eng. 2023, 64, 21.

- Huang, B.; Zhao, B.; Song, Y. Urban Land-use Mapping Using a Deep Convolutional Neural Network with High Spatial Resolution Multispectral Remote Sensing Imagery. Remote Sens. Environ. 2018, 214, 73–86.

- Thangavel, K.; Spiller, D.; Sabatini, R.; Amici, S.; Sasidharan, S.T.; Fayek, H.; Marzocca, P. Autonomous Satellite Wildfire Detection Using Hyperspectral Imagery and Neural Networks: A Case Study on Australian Wildfire. Remote Sens. 2023, 15, 720.

- Farizawani, A.G.; Puteh, M.; Marina, Y.; Rivaie, A. A Review of Artificial Neural Network Learning Rule Based on Multiple Variant of Conjugate Gradient Approaches. J. Phys. Conf. Ser. 2020, 1529, 13.

- Sichman, J.S. Inteligência Artificial e Sociedade: Avanços e Riscos. Estud. Av. 2021, 35, 37–49.

- Modelos de Neurônios em Redes Neurais Artificiais. Available online: https://www.researchgate.net/publication/340166849_Modelos_de_neuronios_em_redes_neurais_artificiais (accessed on 15 January 2023).

- Kaur, R.; Singh, S. A Comprehensive Review of Object Detection with Deep learning. Digit. Signal Process. 2023, 132, 17.

- Emmert-Streib, F.; Yang, Z.; Feng, H.; Tripathi, S.; Dehmer, M. An Introductory Review of Deep learning for Prediction Models with Big Data. Front. Artif. Intell. 2020, 3, 23.

- Deep Learning Made Easy with Deep Cognition. Available online: https://becominghuman.ai/deep-learning-made-easy-with-deep-cognition-403fbe445351 (accessed on 15 January 2023).

- Kumar, V.; Azamathulla, H.M.; Sharma, K.V.; Mehta, D.J.; Maharaj, K.T. The State of the Art in Deep Learning Applications, Challenges, and Future Prospects: A Comprehensive Review of Flood Forecasting and Management. Sustainability 2023, 15, 10543.

- Zhang, G.; Wang, B.; Li, J.; Xu, Y. The application of deep learning in bridge health monitoring: A literature review. Adv. Bridge Eng. 2022, 3, 27.

- Jia, J.; Li, Y. Deep Learning for Structural Health Monitoring: Data, Algorithms, Applications, Challenges, and Trends. Sensors 2023, 23, 8824.

- Cha, Y.; Ali, R.; Lewis, J.; Büyüköztürk, O. Deep learning-based structural health monitoring. Autom. Constr. 2024, 161, 38.

- Xu, D.; Xu, X.; Forde, M.C.; Caballero, A. Concrete and steel bridge Structural Health Monitoring—Insight into choices for machine learning applications. Constr. Build. Mater. 2023, 402, 16.

- Bai, Y.; Demir, A.; Yilmaz, A.; Sezen, H. Assessment and monitoring of bridges using various camera placements and structural analysis. J. Civ. Struct. Health Monit. 2023, 14, 321–337.

- Nash, W.; Drummond, T.; Birbilis, N. A review of deep learning in the study of materials degradation. NPJ Mater. Degrad. 2018, 37, 12.

- Nash, W.; Zheng, L.; Birbilis, N. Deep learning corrosion detection with confidence. NPJ Mater. Degrad. 2022, 26, 13.

- Wang, Y.; Oyen, D.; Guo, W.; Mehta, A.; Scott, C.B.; Panda, N.; Fernández-Godino, M.G.; Srinivasan, G.; Yue, X. StressNet—Deep learning to predict stress with fracture propagation in brittle materials. NPJ Mater. Degrad. 2021, 6, 10.

- Gao, Y.; Yu, Z.; Yu, S.; Sui, H.; Feng, T.; Liu, Y. Metal intrusion enhanced deep learning-based high temperature deterioration analysis of rock materials. Eng. Geol. 2024, 335, 12.

- Gao, Y.; Yu, Z.; Chen, W.; Yin, Q.; Wu, J.; Wang, W. Recognition of rock materials after high-temperature deterioration based on SEM images via deep learning. J. Mater. Res. Technol. 2023, 25, 273–284.

- Zhu, J.; Wang, Y. Feature Selection and Deep Learning for Deterioration Prediction of the Bridges. J. Perform. Constr. Facil. 2021, 35, 04021078-1–04021078-13.

- Wu, P.; Zhang, Z.; Peng, X.; Wang, R. Deep learning solutions for smart city challenges in urban development. Sci. Rep. 2024, 14, 19.

- Zheng, Y.; Lin, Y.; Zhao, L.; Wu, T.; Jin, D.; Li, Y. Spatial planning of urban communities via deep reinforcement learning. Nat. Comput. Sci. 2023, 3, 748–762.

- Alghamdi, M. Smart city urban planning using an evolutionary deep learning model. Soft Comput. 2024, 28, 447–459. [Google Scholar] [CrossRef]

- Li, Y.; Yabuki, N.; Fukuda, T. Integrating GIS, deep learning, and environmental sensors for multicriteria evaluation of urban street walkability. Landsc. Urban Plan. 2023, 28, 17. [Google Scholar] [CrossRef]

- Pan, Z.; Xu, J.; Guo, Y.; Hu, Y.; Wang, G. Deep Learning Segmentation and Classification for Urban Village Using a Worldview Satellite Image Based on U-Net. Remote Sens. 2020, 12, 1574. [Google Scholar] [CrossRef]

- Ren, Y.; Yang, J.; Zhang, Q.; Guo, Z. Multi-Feature Fusion with Convolutional Neural Network for Ship Classification in Optical Images. Appl. Sci. 2019, 9, 4209. [Google Scholar] [CrossRef]

- Wang, T.; Wen, C.; Wang, H.; Gao, F.; Jiang, T.; Jin, S. Deep learning for wireless physical layer: Opportunities and challenges. IEEE 2017, 14, 92–111. [Google Scholar] [CrossRef]

- Lagaros, N.D. Artificial Neural Networks Applied in Civil Engineering. Appl. Sci. 2023, 13, 1131. [Google Scholar] [CrossRef]

- Ye, X.W.; Jin, T.; Yun, C.B. A Review on Deep Learning-Based Structural Health Monitoring of Civil Infrastructures. Smart Struct. Syst. 2019, 24, 567–586. [Google Scholar]

- Wei, G.; Wan, F.; Zhou, W.; Xu, C.; Ye, Z.; Liu, W.; Lei, G.; Xu, L. BFD-YOLO: A YOLOv7-Based Detection Method for Building Façade Defects. Electronics 2023, 12, 3612. [Google Scholar] [CrossRef]

- Moreh, F.; Lyu, H.; Rizvi, Z.H.; Wuttke, F. Deep neural networks for crack detection inside structures. Sci. Rep. 2024, 4439, 15. [Google Scholar] [CrossRef]

- Su, P.; Han, H.; Liu, M.; Yang, T.; Liu, S. MOD-YOLO: Rethinking the YOLO architecture at the level of feature information and applying it to crack detection. Expert Syst. Appl. 2024, 237, 16. [Google Scholar] [CrossRef]

- Yuan, J.; Ren, Q.; Jia, C.; Zhang, J.; Fu, J.; Li, M. Automated pixel-level crack detection and quantification using deep convolutional neural networks for structural condition assessment. Structures 2024, 59, 15. [Google Scholar] [CrossRef]

- Zhu, W.; Zhang, H.; Eastwood, J.; Qi, X.; Jia, J.; Cao, Y. Concrete crack detection using lightweight attention feature fusion single shot multibox detector. Knowl.-Based Syst. 2023, 261, 12. [Google Scholar] [CrossRef]

- Tang, W.; Mondal, T.G.; Wu, R.; Subedi, A.; Jahanshahi, M.R. Deep learning-based post-disaster building inspection with channel-wise attention and semi-supervised learning. Smart Struct. Syst. 2023, 31, 365–381. [Google Scholar]

- Mohammed, M.A.; Han, Z.; Li, Y.; Al-Huda, Z.; Li, C.; Wang, W. End-to-end semi-supervised deep learning model for surface crack detection of infrastructures. Front. Mater. 2022, 9, 19. [Google Scholar] [CrossRef]

- Cumbajin, E.; Rodrigues, N.; Costa, P.; Miragaia, R.; Frazão, L.; Costa, N.; Fernández-Caballero, A.; Carneiro, J.; Buruberri, L.H.; Pereira, A. A Real-Time Automated Defect Detection System for Ceramic Pieces Manufacturing Process Based on Computer Vision with Deep Learning. Sensors 2023, 24, 232. [Google Scholar] [CrossRef] [PubMed]

- Wan, G.; Fang, H.; Wang, D.; Yan, J.; Xie, B. Ceramic tile surface defect detection based on deep learning. Ceram. Int. 2022, 48, 11085–11093. [Google Scholar] [CrossRef]

- Cao, M. Drone-assisted segmentation of tile peeling on building façades using a deep learning model. J. Build. Eng. 2023, 80, 15. [Google Scholar] [CrossRef]

- Nguyen, H.; Hoang, N. Computer vision-based classification of concrete spall severity using metaheuristic-optimized Extreme Gradient Boosting Machine and Deep Convolutional Neural Network. Autom. Constr. 2022, 140, 14. [Google Scholar] [CrossRef]

- Arafin, P.; Billah, A.H.M.M.; Issa, A. Deep learning-based concrete defects classification and detection using semantic segmentation. Struct. Health Monit. 2023, 23, 383–409. [Google Scholar] [CrossRef] [PubMed]

- Forkan, A.R.M.; Kang, Y.; Jayaraman, P.P.; Liao, K.; Kaul, R.; Morgan, G.; Ranjan, R.; Sinha, S. CorrDetector: A framework for structural corrosion detection from drone images using ensemble deep learning. Expert Syst. Appl. 2022, 193, 116461. [Google Scholar] [CrossRef]

- Ha, M.; Kim, Y.; Park, T. Stain Defect Classification by Gabor Filter and Dual-Stream Convolutional Neural Network. Appl. Sci. 2023, 13, 4540. [Google Scholar] [CrossRef]

- Goetzke-Pala, A.; Hola, A.; Sadowski, L. A non-destructive method of the evaluation of the moisture in saline brick walls using artificial neural networks. Arch. Civ. Mech. Eng. 2018, 18, 1729–1742. [Google Scholar] [CrossRef]

- Hola, A. Methodology of the quantitative assessment of the moisture content of saline brick walls in historic buildings using machine learning. Arch. Civ. Mech. Eng. 2023, 23, 15. [Google Scholar] [CrossRef]

- Hola, A.; Sadowski, L. A method of the neural identification of the moisture content in brick walls of historic buildings on the basis of non-destructive tests. Autom. Constr. 2019, 106, 15. [Google Scholar] [CrossRef]

- Zhao, F.; Chao, Y.; Li, L. A Crack Segmentation Model Combining Morphological Network and Multiple Loss Mechanism. Sensor 2023, 23, 1127. [Google Scholar] [CrossRef] [PubMed]

- Ameli, Z.; Nesheli, S.J.; Landis, E.N. Deep Learning-Based Steel Bridge Corrosion Segmentation and Condition Rating Using Mask RCNN and YOLOv8. Infrastructures 2024, 9, 3. [Google Scholar] [CrossRef]

- Garrido, I.; Barreira, E.; Almeida, R.M.S.F.; Laguela, S. Introduction of active thermography and automatic defect segmentation in the thermographic inspection of specimens of ceramic tiling for building façades. Infrared Phys. Technol. 2022, 121, 20. [Google Scholar] [CrossRef]

| Term | Definition | Reference |
|---|---|---|
| Anomaly | Phenomenon that hampers the utilization of a system or constructive elements, prematurely resulting in the downgrade of performance due to constructive irregularities or degradation processes. | [37] |
| Degradation | Deterioration of construction systems, components, and building equipment due to the action of damaging agents throughout time, considering given periodic maintenance activities. | [37] |
| Building Performance | The behavior of a building and its systems when subjected to exposure and usage, which normally occurs throughout its service life, considering its maintenance operations as anticipated during its project and construction. | [37] |
| Deterioration | Decomposition or early loss of performance in constructive systems, components, and building equipment due to anomalies or usage, operation, and/or maintenance inaccuracy. | [37] |
| Durability | The capacity of a building (or its systems) to perform functions throughout time and under conditions of exposure, usage, and maintenance as anticipated during its project and construction, according to its use and maintenance manual. | [38] |
| Pathologic Manifestation | Signs or symptoms occurring due to the existence of mechanisms or processes of degradation for materials, components, or systems that contribute to or influence the loss of performance. | [38] |
| Maintenance | Preventive or corrective actions necessary to preserve the normal conditions of a property’s use. | [39] |
| Prophylaxis | Actions and procedures necessary for the prevention, diminution, or correction of pathologic manifestations based on diagnostics. | [37] |
| Service Life of a Project | The period of time in which a building and its systems can be used as projected and built, fulfilling its performance requirements as anticipated previously, considering the correct execution of its maintenance programs. | [37] |

| Year | Method | Author |
|---|---|---|
| 1877 | The SPAB Manifesto | W. Morris and P. Webb |
| 1964 | The Venice Charter | ICOMOS |
| 1982 | Defect Action Sheets | BRE |
| 1985 | Anomalies Repair Forms (in Portuguese) | LNEC |
| 1993 | Cases of Failure Information Sheet CIB | CIB |
| 1995 | Building Pathology Sheets (in French) | AQC |
| 2003 | Construdoctor | OZ—Diagnosis |
| 2004 | Learning from Mistakes (in Italian) | BEGroup |
| 2008 | Severity of Degradation | Gaspar and Brito |
| 2009 | Web-Based Prototype System | P. Fong and K. Wong |
| 2010 | Maintainability Website | Y. L. Chew |
| 2013 | Building Medical Record | C. Chang and M. Tsai |
| 2016 | Methodologies for Service Life Prediction | Silva, Brito and Gaspar |
| 2020 | Expert Knowledge-Based Inspections Systems | Brito, Pereira, Silvestre and Flores-Colen |

| Application | References |
|---|---|
| Photovoltaic power plant | [49,50,51,52,53,54] |
| Environmental | [55,56,57,58,59] |
| Roads and Highways | [60,61,62,63,64] |
| Dams and Mining | [65,66,67,68,69] |
| Civil Construction | [48,70,71,72,73] |
| Pathologic Manifestations | [1,4,74,75,76] |
| Geological, Hydrological, and Environmental Risks | [77,78,79,80,81] |

| Advantages | Limitations |
|---|---|
| Easy access to difficult areas | Dependency on meteorological conditions |
| Tree canopies are easily overcome | High cost of acquisition |
| Generation of products with centimeter-level resolution | Long processing time, given the volume of data generated |
| Shorter execution time and higher productivity during inspection | Loss of performance at higher flight altitudes |
| Ability to identify pathologic manifestations of smaller dimensions | Requires specialized personnel for data acquisition and interpretation |

| Advantages | Limitations |
|---|---|
| Easy access to harder-to-reach areas | Limited flight autonomy |
| Real-time data access | Dependency on ideal conditions of surface heat emission |
| Reduction in risk to technician’s life | Impossibility of measuring depth and thickness of pathologies |
| Faster inspection time | Necessity of specific software for thermal imaging processing |
| Detection of non-apparent pathologies such as infiltration, loose ceramic, and so on | Low accuracy when utilized to evaluate mirrored surfaces |
| Identification of mortar render with adherence issues | Necessity of qualified personnel for interpreting thermal data |

| Advantages | Limitations |
|---|---|
| Wider range of spectral information compared to RGB-type sensors | High complexity in the process of data acquisition and analysis |
| Ability to identify elements invisible to the human eye | Low availability of studies focused on building pathology |
| Enables capture of large amounts of data | High acquisition cost |

| Application | Reference | Authors | Technology |
|---|---|---|---|
| Crack Detection | [148] | Wei et al. (2023) | YOLOv7; BFD-YOLO |
| Crack Detection | [149] | Moreh et al. (2024) | DenseNet; CNN |
| Crack Detection | [17] | Teng and Chen (2022) | DeepLab_v3+; MATLAB; CNN |
| Crack Detection | [117] | Wang et al. (2024) | ResNet50; YOLOv8 |
| Crack Detection | [18] | Ali et al. (2022) | AlexNet; ZFNet; GoogLeNet; YOLO; Faster R-CNN; and Others |
| Crack Detection | [150] | Su et al. (2024) | MOD-YOLO; MODSConv; YOLOX |
| Crack Detection | [151] | Yuan et al. (2024) | ResNet-50; FPN-DB |
| Crack Detection | [152] | Zhu et al. (2023) | SSD; Faster-RCNN; EfficientDet; YOLOv3; YOLOv4; CenterNet |
| Crack Detection | [153] | Tang et al. (2023) | SSL; U-Net++; DeepLab-AASPP |
| Crack Detection | [154] | Mohammed et al. (2022) | U-Net; CNN |
| Detachment of Ceramic Pieces | [15] | Sousa et al. (2022) | YOLOv2 |
| Detachment of Ceramic Pieces | [155] | Cumbajin et al. (2023) | ResNet; VGG; AlexNet |
| Detachment of Ceramic Pieces | [156] | Wan et al. (2022) | YOLOv5s |
| Detachment of Ceramic Pieces | [157] | Cao (2023) | YOLOv7; R-CNN; and Others |
| Spalling Detection | [158] | Nguyen and Hoang (2022) | XGBoost; DCNN |
| Spalling Detection | [159] | Arafin et al. (2023) | InceptionV3; ResNet50; VGG19 |
| Corrosion Detection | [160] | Forkan et al. (2022) | CNN; R-CNN |
| Stain Defect | [161] | Ha et al. (2023) | GLCM; CNN |
| Stain Defect | [162] | Goetzke-Pala et al. (2018) | ANN |
| Stain Defect | [163] | Hola (2023) | ANN; RF; SVM |
| Stain Defect | [164] | Hola and Sadowski (2019) | ANN |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Meira, G.d.S.; Guedes, J.V.F.; Bias, E.d.S. UAV-Embedded Sensors and Deep Learning for Pathology Identification in Building Façades: A Review. Drones 2024, 8, 341. https://doi.org/10.3390/drones8070341
